Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37243

*** Why ***

As it stands, we have two thread pool solutions concurrently in use in PyTorch mobile: (1) the open source pthreadpool library under third_party, and (2) Caffe2's implementation of pthreadpool under caffe2/utils/threadpool. Since the primary use-case of the latter has been to act as a drop-in replacement for the third party version so as to enable integration and usage from within NNPACK and QNNPACK, Caffe2's implementation is intentionally written to the exact same interface as the third party version.

The original argument in favor of C2's implementation has been improved performance as a result of using spin locks, as opposed to relinquishing the thread's time slot and putting it to sleep - a less expensive operation up to a point. That seems to have given C2's implementation the upper hand in performance, hence justifying the added maintenance complexity, until the third party version improved in parallel surpassing the efficiency of C2's implementation as I have verified in benchmarks. With that advantage gone, there is no reason to continue using C2's implementation in PyTorch mobile either from the perspective of performance or code hygiene. As a matter of fact, there is considerable performance benefit to be had as a result of using the third party version as it currently stands.

This is a tricky change though, mainly because in order to avoid potential performance regressions, of which I have witnessed none but just in abundance of caution, we have decided to continue using the internal C2's implementation whenever building for Caffe2. Again, this is mainly to avoid potential performance regressions in production C2 use cases even if doing so results in reduced performance as far as I can tell.

So to summarize, today, and as it currently stands, we are using C2's implementation for (1) NNPACK, (2) PyTorch QNNPACK, and (3) ATen parallel_for on mobile builds, while using the third party version of pthreadpool for XNNPACK as XNNPACK does not provide any build options to link against an external implementation unlike NNPACK and QNNPACK do.

The goal of this PR then, is to unify all usage on mobile to the third party implementation both for improved performance and better code hygiene. This applies to PyTorch's use of NNPACK, QNNPACK, XNNPACK, and mobile's implementation of ATen parallel_for, all getting routed to the

exact same third party implementation in this PR.

Considering that NNPACK, QNNPACK, and XNNPACK are not mobile specific, these benefits carry over to non-mobile builds of PyTorch (but not Caffe2) as well. The implementation of ATen parallel_for on non-mobile builds remains unchanged.

*** How ***

This is where things get tricky.

A good deal of the build system complexity in this PR arises from our desire to maintain C2's implementation intact for C2's use.

pthreadpool is a C library with no concept of namespaces, which means two copies of the library cannot exist in the same binary or symbol collision will occur violating ODR. This means that somehow, and based on some condition, we must decide on the choice of a pthreadpool implementation. In practice, this has become more complicated as a result of all the possible combinations that USE_NNPACK, USE_QNNPACK, USE_PYTORCH_QNNPACK, USE_XNNPACK, USE_SYSTEM_XNNPACK, USE_SYSTEM_PTHREADPOOL and other variables can result in. Having said that, I have done my best in this PR to surgically cut through this complexity in a way that minimizes the side effects, considering the significance of the performance we are leaving on the table, yet, as a result of this combinatorial explosion explained above I cannot guarantee that every single combination will work as expected on the first try. I am heavily relying on CI to find any issues as local testing can only go that far.

Having said that, this PR provides a simple non mobile-specific C++ thread pool implementation on top of pthreadpool, namely caffe2::PThreadPool that automatically routes to C2's implementation or the third party version depending on the build configuration. This simplifies the logic at the cost of pushing the complexity to the build scripts. From there on, this thread pool is used in aten parallel_for, and NNPACK and family, again, routing all usage of threading to C2 or third party pthreadpool depending on the build configuration.

When it is all said or done, the layering will look like this:

a) aten::parallel_for, uses

b) caffe2::PThreadPool, which uses

c) pthreadpool C API, which delegates to

c-1) third_party implementation of pthreadpool if that's what the build has requested, and the rabbit hole ends here.

c-2) C2's implementation of pthreadpool if that's what the build has requested, which itself delegates to

c-2-1) caffe2::ThreadPool, and the rabbit hole ends here.

NNPACK, and (PyTorch) QNNPACK directly hook into (c). They never go through (b).

Differential Revision: D21232894

Test Plan: Imported from OSS

Reviewed By: dreiss

Pulled By: AshkanAliabadi

fbshipit-source-id: 8b3de86247fbc3a327e811983e082f9d40081354

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39587

Example of using direct linking to pytorch_jni library from aar and updating android/README.md with the tutorial how to do it.

Adding `nativeBuild` dimension to `test_app`, using direct aar dependencies, as headers packaging is not landed yet, excluding `nativeBuild` from building by default for CI.

Additional change to `scripts/build_pytorch_android.sh`:

Skipping clean task here as android gradle plugin 3.3.2 exteralNativeBuild has problems with it when abiFilters are specified.

Will be returned back in the following diffs with upgrading of gradle and android gradle plugin versions.

Test Plan: Imported from OSS

Differential Revision: D22118945

Pulled By: IvanKobzarev

fbshipit-source-id: 31c54b49b1f262cbe5f540461d3406f74851db6c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39588

Before this diff we used c++_static linking.

Users will dynamically link to libpytorch_jni.so and have at least one more their own shared library that probably uses stl library.

We must have not more than one stl per app. ( https://developer.android.com/ndk/guides/cpp-support#one_stl_per_app )

To have only one stl per app changing ANDROID_STL way to c++_shared, that will add libc++_shared.so to packaging.

Test Plan: Imported from OSS

Differential Revision: D22118031

Pulled By: IvanKobzarev

fbshipit-source-id: ea1e5085ae207a2f42d1fa9f6ab8ed0a21768e96

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39868

### Summary

why disable NNPACK on iOS

- To stay consistency with our internal version

- It's currently blocking some external users due to its lack support of x86 architecture

- https://github.com/pytorch/pytorch/issues/32040

- https://discuss.pytorch.org/t/undefined-symbols-for-architecture-x86-64-for-libtorch-in-swift-unit-test/84552/6

- NNPACK uses fast convolution algorithms (FFT, winograd) to reduce the computational complexity of convolutions with large kernel size. The algorithmic speedup is limited to specific conv params which are unlikely to appear in mobile networks.

- Since XNNPACK has been enabled, it performs much better than NNPACK on depthwise-separable convolutions which is the algorithm being used by most of mobile computer vision networks.

### Test Plan

- CI Checks

Test Plan: Imported from OSS

Differential Revision: D22087365

Pulled By: xta0

fbshipit-source-id: 89a959b0736c1f8703eff10723a8fbd02357fd4a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39999

Cleaned up the android build scripts. Consolidated common functions into

common.sh. Also made a few minor fixes:

- We should trust build_android.sh doing right about reusing existing

`build_android_$abi` directory;

- We should clean up `pytorch_android/src/main/jniLibs/` to remove

broken symbolic links in case custom abi list changes since last build;

Test Plan: Imported from OSS

Differential Revision: D22036926

Pulled By: ljk53

fbshipit-source-id: e93915ee4f195111b6171cdabc667fa0135d5195

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39188

Extracting Vulkan_LIBS and Vulkan_INCLUDES setup from `cmake/Dependencies.cmake` to `cmake/VulkanDependencies.cmake` and reuse it in android/pytorch_android/CMakeLists.txt

Adding control to build with Vulkan setting env variable `USE_VULKAN` for `scripts/build_android.sh` `scripts/build_pytorch_android.sh`

We do not use Vulkan backend in pytorch_android, but with this build option we can track android aar change with `USE_VULKAN` added.

Currently it is 88Kb.

Test Plan: Imported from OSS

Differential Revision: D21770892

Pulled By: IvanKobzarev

fbshipit-source-id: a39433505fdcf43d3b524e0fe08062d5ebe0d872

Summary:

* Does a basic upload of release candidates to an extra folder within our

S3 bucket.

* Refactors AWS promotion to allow for easier development of restoration

of backups

Backup restoration usage:

```

RESTORE_FROM=v1.6.0-rc3 restore-backup.sh

```

Requires:

* AWS credentials to upload / download stuff

* Anaconda credentials to upload

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38690

Differential Revision: D21691033

Pulled By: seemethere

fbshipit-source-id: 31118814db1ca701c55a3cb0bc32caa1e77a833d

Summary:

Files that were named the same within the anaconda repository, i.e.

pytorch_1.5.0-cpu.bz2, were found to be clobbering each other,

especially amongst different platforms.

This lead to similarly named packages for different platforms to not get

promoted.

This also adds "--skip" to our anaconda upload so that we don't end up

overwriting our releases just in case this script gets run twice.

Also, conda search ends up erroring out if it doesn't find anything for

the current platform being searched for so we should just continue

forward if we don't find anything since we want to be able to use this

script for all of the packages we support which also do not release

packages for all of the same platforms. (torchtext for example only has

"noarch")

This should also probably be back-ported to the `release/1.5` branch since this changeset was used to release `v1.5.0`

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37089

Differential Revision: D21184768

Pulled By: seemethere

fbshipit-source-id: dbe12d74df593b57405b178ddb2375691e128a49

Summary:

Adds a new promotion pipeline for both our wheel packages hosted on S3

as well as our conda packages hosted on anaconda.

Promotion is only run on tags that that match the following regex:

/v[0-9]+(\.[0-9]+)*/

Example:

v1.5.0

The promotion pipeline is also only run after a manual approval from

someone within the CircleCI security context "org-member"

> NOTE: This promotion pipeline does not cover promotion of packages that

> are published to PyPI, this is an intentional choice as those

> packages cannot be reverted after they have been published.

TODO: Write a proper testing pipeline for this

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34993

Differential Revision: D20539497

Pulled By: seemethere

fbshipit-source-id: 104772d3c3898d77a24ef9bf25f7dbd2496613df

Summary:

How this actually works:

1. Get's a list of URLs from anaconda for pkgs to download, most

likely from pytorch-test

2. Download all of those packages locally in a temp directory

3. Upload all of those packages, with a dry run upload by default

This, along with https://github.com/pytorch/pytorch/issues/34500 basically completes the scripting work for the eventual promotion pipeline.

Currently testing with:

```

TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 PYTORCH_CONDA_FROM=pytorch scripts/release/promote/conda_to_conda.sh

```

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34659

Differential Revision: D20432687

Pulled By: seemethere

fbshipit-source-id: c2a99f6cbc6a7448e83e666cde11d6875aeb878e

Summary:

Is reliant on scripts for promotion from s3 to s3 to have already run.

A continuation of the work done in https://github.com/pytorch/pytorch/issues/34274

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34500

Test Plan: yeah_sandcastle

Differential Revision: D20389101

Pulled By: seemethere

fbshipit-source-id: 5e5b554cff964630c5414d48be35f14ba6894021

Summary:

**Summary**

This commit adds `tools/clang_format_new.py`, which downloads a platform-appropriate

clang-format binary to a `.gitignored` location, verifies the binary by comparing its

SHA1 hash to a reference hash (also included in this commit), and runs it on all files

matched a specific regex in a list of whitelisted subdirectories of pytorch.

This script will eventually replace `tools/clang_format.py`.

**Testing**

Ran the script.

*No Args*

```

pytorch > ./tools/clang_format.py

Downloading clang-format to /Users/<user>/Desktop/pytorch/.clang-format-bin

0% |################################################################| 100%

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

> echo $?

0

> git status

<bunch of files>

```

`--diff` *mode*

```

> ./tools/clang_format.py --diff

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

Some files are not formatted correctly

> echo $?

1

<format files using the script>

> ./tools/clang_format.py --diff

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

All files are formatted correctly

> echo $?

0

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34566

Differential Revision: D20431290

Pulled By: SplitInfinity

fbshipit-source-id: 3966f769cfb923e58ead9376d85e97127415bdc6

Summary:

If SELECTED_OP_LIST is specified as a relative path in command line, CMake build will fail.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33942

Differential Revision: D20392797

Pulled By: ljk53

fbshipit-source-id: dffeebc48050970e286cf263bdde8b26d8fe4bce

Summary:

When a system has ROCm dev tools installed, `scripts/build_mobile.sh` tried to use it.

This PR fixes looking up unused ROCm library when building libtorch mobile.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34478

Differential Revision: D20388147

Pulled By: ljk53

fbshipit-source-id: b512c38fa2d3cda9ac20fe47bcd67ad87c848857

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34410

### Summary

Currently, the iOS jobs are not being run on PRs anymore. This is because all iOS jobs have specified the `org-member` as a context which used to include all pytorch members. But seems like recently this rule has changed. It turns out that only users from the admin group or builder group can have access right to the context values. https://circleci.com/gh/organizations/pytorch/settings#contexts/2b885fc9-ef3a-4b86-8f5a-2e6e22bd0cfe

This PR will remove `org-member` from the iOS simulator build which doesn't require code signing. For the arm64 builds, they'll only be run on master, not on PRs anymore.

### Test plan

- The iOS simulator job should be able to appear in the PR workflow

Test Plan: Imported from OSS

Differential Revision: D20347270

Pulled By: xta0

fbshipit-source-id: 23f37d40160c237dc280e0e82f879c1d601f72ac

Summary:

Currently testing against the older release `1.4.0` with:

```

PYTORCH_S3_FROM=nightly TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 scripts/release/promote/libtorch_to_s3.sh

PYTORCH_S3_FROM=nightly TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 scripts/release/promote/wheel_to_s3.sh

```

These scripts can also be used for `torchvision` as well which may make the release process better there as well.

Later on this should be made into a re-usable module that can be downloaded from anywhere and used amongst all pytorch repositories.

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34274

Test Plan: sandcastle_will_deliver

Differential Revision: D20294419

Pulled By: seemethere

fbshipit-source-id: c8c31b5c42af5096f09275166ac43d45a459d25c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34203

Currently cmake and mobile build scripts still build libcaffe2 by

default. To build pytorch mobile users have to set environment variable

BUILD_PYTORCH_MOBILE=1 or set cmake option BUILD_CAFFE2_MOBILE=OFF.

PyTorch mobile has been released for a while. It's about time to change

CMake and build scripts to build libtorch by default.

Changed caffe2 CI job to build libcaffe2 by setting BUILD_CAFFE2_MOBILE=1

environment variable. Only found android CI for libcaffe2 - do we ever

have iOS CI for libcaffe2?

Test Plan: Imported from OSS

Differential Revision: D20267274

Pulled By: ljk53

fbshipit-source-id: 9d997032a599c874d62fbcfc4f5d4fbf8323a12e

Summary:

Among all ONNX tests, ONNXRuntime tests are taking the most time on CI (almost 60%).

This is because we are testing larger models (mainly torchvision RCNNs) for multiple onnx opsets.

I decided to divide tests between two jobs for older/newer opsets. This is now reducing the test time from 2h to around 1h10mins.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33242

Reviewed By: hl475

Differential Revision: D19866498

Pulled By: houseroad

fbshipit-source-id: 446c1fe659e85f5aef30efc5c4549144fcb5778c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34187

Noticed that a recent PR broke Android/iOS CI but didn't break mobile

build with host toolchain. Turns out one mobile related flag was not

set on PYTORCH_BUILD_MOBILE code path:

```

"set(INTERN_DISABLE_MOBILE_INTERP ON)"

```

First, move the INTERN_DISABLE_MOBILE_INTERP macro below, to stay with

other "mobile + pytorch" options - it's not relevant to "mobile + caffe2"

so doesn't need to be set as common "mobile" option;

Second, rename PYTORCH_BUILD_MOBILE env-variable to

BUILD_PYTORCH_MOBILE_WITH_HOST_TOOLCHAIN - it's a bit verbose but

becomes more clear what it does - there is another env-variable

"BUILD_PYTORCH_MOBILE" used in scripts/build_android.sh, build_ios.sh,

which toggles between "mobile + pytorch" v.s. "mobile + caffe2";

Third, combine BUILD_PYTORCH_MOBILE_WITH_HOST_TOOLCHAIN with ANDROID/IOS

to avoid missing common mobile options again in future.

Test Plan: Imported from OSS

Differential Revision: D20251864

Pulled By: ljk53

fbshipit-source-id: dc90cc87ffd4d0bf8a78ae960c4ce33a8bb9e912

Summary:

**Summary**

This commit adds a script that fetches a platform-appropriate `clang-format` binary

from S3 for use during PyTorch development. The goal is for everyone to use the exact

same `clang-format` binary so that there are no formatting conflicts.

**Testing**

Ran the script.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33644

Differential Revision: D20076598

Pulled By: SplitInfinity

fbshipit-source-id: cd837076fd30e9c7a8280665c0d652a33b559047

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33722

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding native implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would be leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor out one-time operations out of the inner most forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Test Plan:

Build: CI

Functionality: Not exposed

Reviewed By: dreiss

Differential Revision: D20069796

Pulled By: AshkanAliabadi

fbshipit-source-id: d46c1c91d4bea91979ea5bd46971ced5417d309c

Summary:

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding **native** implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would be leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor out one-time operations out of the inner most forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Reviewed By: dreiss

Differential Revision: D19521853

Pulled By: AshkanAliabadi

fbshipit-source-id: 99a1fab31d0ece64961df074003bb852c36acaaa

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33318

### Summary

Recently, we have a [discussion](https://discuss.pytorch.org/t/libtorch-on-watchos/69073/14) in the forum about watchOS. This PR adds the support for building watchOS libraries.

### Test Plan

- `BUILD_PYTORCH_MOBILE=1 IOS_PLATFORM=WATCHOS ./scripts/build_ios.sh`

Test Plan: Imported from OSS

Differential Revision: D19896534

Pulled By: xta0

fbshipit-source-id: 7b9286475e895d9fefd998246e7090ac92c4c9b6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30315

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

This is a reland of https://github.com/pytorch/pytorch/pull/29731 but

I've extracted all of the prep work into separate PRs which can be

landed before this one.

Some things of note:

* torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

* The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This lead to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/libprotobuf.a(arena.cc.o) is referenced by DSO"

* A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly

* I had to torch_cpu/torch_cuda caffe2_interface_library so that they get whole-archived linked into torch when you statically link. And I had to do this in an *exported* fashion because torch needs to depend on torch_cpu_library. In the end I exported everything and removed the redefinition in the Caffe2Config.cmake. However, I am not too sure why the old code did it in this way in the first place; however, it doesn't seem to have broken anything to switch it this way.

* There's some uses of `__HIP_PLATFORM_HCC__` still in `torch_cpu` code, so I had to apply it to that library too (UGH). This manifests as a failer when trying to run the CUDA fuser. This doesn't really matter substantively right now because we still in-place HIPify, but it would be good to fix eventually. This was a bit difficult to debug because of an unrelated HIP bug, see https://github.com/ROCm-Developer-Tools/HIP/issues/1706Fixes#27215 (as our libraries are smaller), and executes on

part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18790941

Pulled By: ezyang

fbshipit-source-id: 01296f6089d3de5e8365251b490c51e694f2d6c7

Summary:

Move the shell script into this separate PR to make the original PR

smaller and less scary.

Test Plan:

- With stacked PRs:

1. analyze test project and compare with expected results:

```

ANALYZE_TEST=1 CHECK_RESULT=1 tools/code_analyzer/build.sh

```

2. analyze LibTorch:

```

ANALYZE_TORCH=1 tools/code_analyzer/build.sh

```

Differential Revision: D18474749

Pulled By: ljk53

fbshipit-source-id: 55c5cae3636cf2b1c4928fd2dc615d01f287076a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29731

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

Some subtleties about the patch:

- There were a few functions that crossed CPU-CUDA boundary without API macros. I just added them, easy enough. An inverse situation was aten/src/THC/THCTensorRandom.cu where we weren't supposed to put API macros directly in a cpp file.

- DispatchStub wasn't getting all of its symbols related to static members on DispatchStub exported properly. I tried a few fixes but in the end I just moved everyone off using DispatchStub to dispatch CUDA/HIP (so they just use normal dispatch for those cases.) Additionally, there were some mistakes where people incorrectly were failing to actually import the declaration of the dispatch stub, so added includes for those cases.

- torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

- The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

- In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This lead to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/l

ibprotobuf.a(arena.cc.o) is referenced by DSO"

- A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly. This situation also happens with custom C++ extensions.

- There's a ROCm compiler bug where extern "C" on functions is not respected. There's a little workaround to handle this.

- Because I was too lazy to check if HIPify was converting TORCH_CUDA_API into TORCH_HIP_API, I just made it so HIP build also triggers the TORCH_CUDA_API macro. Eventually, we should translate and keep the nature of TORCH_CUDA_API constant in all cases.

Fixes#27215 (as our libraries are smaller), and executes on

part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18632773

Pulled By: ezyang

fbshipit-source-id: ea717c81e0d7554ede1dc404108603455a81da82

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30144

Create script to produce libtorch that only contains ops needed by specific

models. Developers can use this workflow to further optimize mobile build size.

Need keep a dummy stub for unused (stripped) ops because some JIT side

logic requires certain function schemas to be existed in the JIT op

registry.

Test Steps:

1. Build "dump_operator_names" binary and use it to dump root ops needed

by a specific model:

```

build/bin/dump_operator_names --model=mobilenetv2.pk --output=mobilenetv2.yaml

```

2. The MobileNetV2 model should use the following ops:

```

- aten::t

- aten::dropout

- aten::mean.dim

- aten::add.Tensor

- prim::ListConstruct

- aten::addmm

- aten::_convolution

- aten::batch_norm

- aten::hardtanh_

- aten::mm

```

NOTE that for some reason it outputs "aten::addmm" but actually uses "aten::mm".

You need fix it manually for now.

3. Run custom build script locally (use Android as an example):

```

SELECTED_OP_LIST=mobilenetv2.yaml scripts/build_pytorch_android.sh armeabi-v7a

```

4. Checkout demo app that uses locally built library instead of

downloading from jcenter repo:

```

git clone --single-branch --branch custom_build git@github.com:ljk53/android-demo-app.git

```

5. Copy locally built libraries to demo app folder:

```

find ${HOME}/src/pytorch/android -name '*.aar' -exec cp {} ${HOME}/src/android-demo-app/HelloWorldApp/app/libs/ \;

```

6. Build demo app with locally built libtorch:

```

cd ${HOME}/src/android-demo-app/HelloWorldApp

./gradlew clean && ./gradlew assembleDebug

```

7. Install and run the demo app.

In-APK arm-v7 libpytorch_jni.so build size reduced from 5.5M to 2.9M.

Test Plan: Imported from OSS

Differential Revision: D18612127

Pulled By: ljk53

fbshipit-source-id: fa8d5e1d3259143c7346abd1c862773be8c7e29a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29715

Previous we hard code it to enable static dispatch when building mobile

library. Since we are exploring approaches to deprecate static dispatch

we should make it optional. This PR moved the setting from cmake to bash

build scripts which can be overridden.

Test Plan: - verified it's still using static dispatch when building with these scripts.

Differential Revision: D18474640

Pulled By: ljk53

fbshipit-source-id: 7591acc22009bfba36302e3b2a330b1428d8e3f1

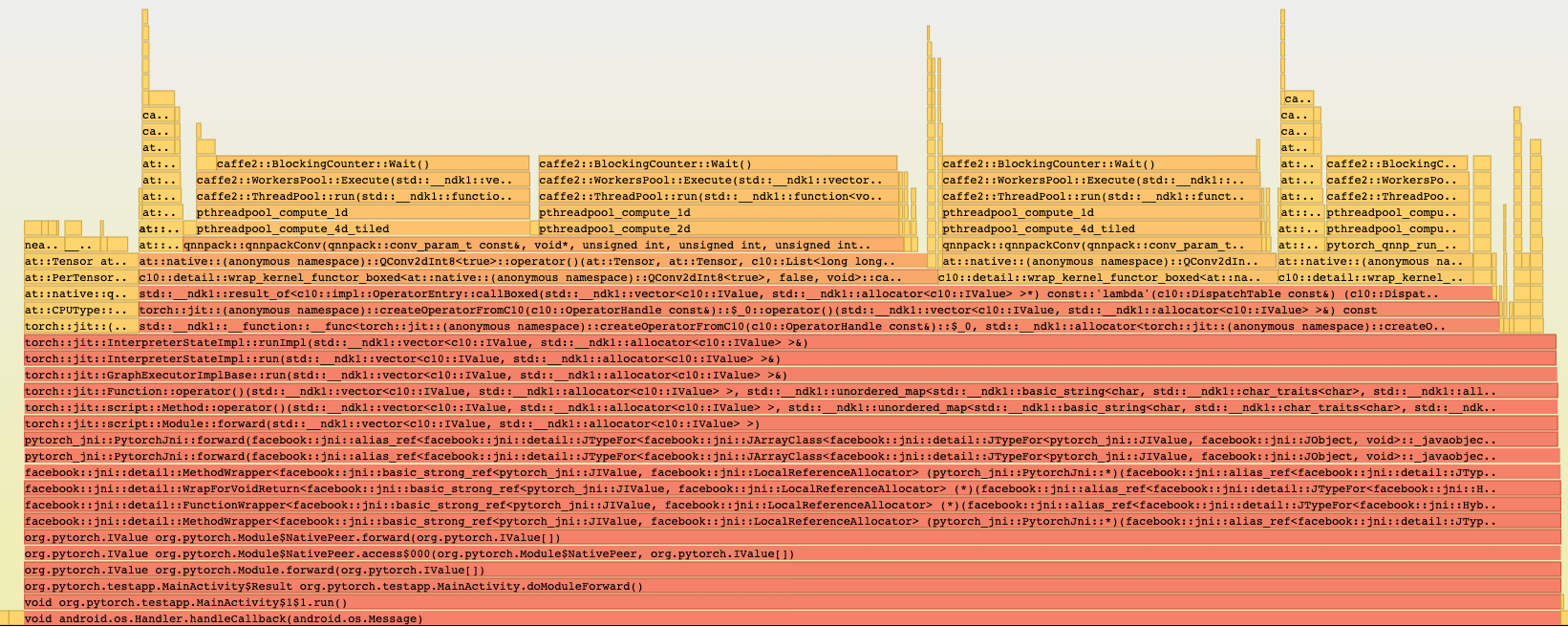

Summary:

Reason:

To have one-step build for test android application based on the current code state that is ready for profiling with simpleperf, systrace etc. to profile performance inside the application.

## Parameters to control debug symbols stripping

Introducing /CMakeLists parameter `ANDROID_DEBUG_SYMBOLS` to be able not to strip symbols for pytorch (not add linker flag `-s`)

which is checked in `scripts/build_android.sh`

On gradle side stripping happens by default, and to prevent it we have to specify

```

android {

packagingOptions {

doNotStrip "**/*.so"

}

}

```

which is now controlled by new gradle property `nativeLibsDoNotStrip `

## Test_App

`android/test_app` - android app with one MainActivity that does inference in cycle

`android/build_test_app.sh` - script to build libtorch with debug symbols for specified android abis and adds `NDK_DEBUG=1` and `-PnativeLibsDoNotStrip=true` to keep all debug symbols for profiling.

Script assembles all debug flavors:

```

└─ $ find . -type f -name *apk

./test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

./test_app/app/build/outputs/apk/resnet/debug/test_app-resnet-debug.apk

```

## Different build configurations

Module for inference can be set in `android/test_app/app/build.gradle` as a BuildConfig parameters:

```

productFlavors {

mobilenetQuant {

dimension "model"

applicationIdSuffix ".mobilenetQuant"

buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_MOBILENET_QUANT'))

addManifestPlaceholders([APP_NAME: "PyMobileNetQuant"])

buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-mobilenet\"")

}

resnet {

dimension "model"

applicationIdSuffix ".resnet"

buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_RESNET18'))

addManifestPlaceholders([APP_NAME: "PyResnet"])

buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-resnet\"")

}

```

In that case we can setup several apps on the same device for comparison, to separate packages `applicationIdSuffix`: 'org.pytorch.testapp.mobilenetQuant' and different application names and logcat tags as `manifestPlaceholder` and another BuildConfig parameter:

```

─ $ adb shell pm list packages | grep pytorch

package:org.pytorch.testapp.mobilenetQuant

package:org.pytorch.testapp.resnet

```

In future we can add another BuildConfig params e.g. single/multi threads and other configuration for profiling.

At the moment 2 flavors - for resnet18 and for mobilenetQuantized

which can be installed on connected device:

```

cd android

```

```

gradle test_app:installMobilenetQuantDebug

```

```

gradle test_app:installResnetDebug

```

## Testing:

```

cd android

sh build_test_app.sh

adb install -r test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

```

```

cd $ANDROID_NDK

python simpleperf/run_simpleperf_on_device.py record --app org.pytorch.testapp.mobilenetQuant -g --duration 10 -o /data/local/tmp/perf.data

adb pull /data/local/tmp/perf.data

python simpleperf/report_html.py

```

Simpleperf report has all symbols:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28406

Differential Revision: D18386622

Pulled By: IvanKobzarev

fbshipit-source-id: 3a751192bbc4bc3c6d7f126b0b55086b4d586e7a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28405

### Summary

As discussed with AshkanAliabadi and ljk53, the iOS TestApp will share the same benchmark code with Android's speed_benchmark_torch.cpp. This PR is the first part which contains the Objective-C++ code.

The second PR will include the scripts to setup and run the benchmark project. The third PR will include scripts that can automate the whole "build - test - install" process.

There are many ways to run the benchmark project. The easiest way is to use cocoapods. Simply run `pod install`. However, that will pull the 1.3 binary which is not what we want, but we can still use this approach to test the benchmark code. The second PR will contain scripts to run custom builds that we can tweak.

### Test Plan

- Don't break any existing CI jobs (except for those flaky ones)

Test Plan: Imported from OSS

Differential Revision: D18064187

Pulled By: xta0

fbshipit-source-id: 4cfbb83c045803d8b24bf6d2c110a55871d22962

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27593

## Summary

Since the nightly jobs are lack of testing phases, we don't really have a way to test the binary before uploading it to AWS. To make the work more solid, we need to figure out a way to verify the binary.

Fortunately, the XCode tool chain offers a way to build your app without XCode app, which is the [xcodebuild](https://developer.apple.com/library/archive/technotes/tn2339/_index.html) command. Now we can link our binary to a testing app and run `xcodebuild` to to see if there is any linking error. The PRs below have already done some of the preparation jobs

- [#26261](https://github.com/pytorch/pytorch/pull/26261)

- [#26632](https://github.com/pytorch/pytorch/pull/26632)

The challenge comes when testing the arm64 build as we don't have a way to code-sign our TestApp. Circle CI has a [tutorial](https://circleci.com/docs/2.0/ios-codesigning/) but is too complicated to implement. Anyway, I figured out an easier way to do it

1. Disable automatically code sign in XCode

2. Export the encoded developer certificate and provisioning profile to org-context in Circle CI (done)

3. Install the developer certificate to the key chain store on CI machines via Fastlane.

4. Add the testing code to PR jobs and verify the result.

5. Add the testing code to nightly jobs and verify the result.

## Test Plan

- Both PR jobs and nightly jobs can finish successfully.

- `xcodebuild` can finish successfully

Test Plan: Imported from OSS

Differential Revision: D17848814

Pulled By: xta0

fbshipit-source-id: 48353f001c38e61eed13a43943253cae30d8831a

Summary:

1. scripts/build_android_libtorch_and_pytorch_android.sh

- Builds libtorch for android_abis (by default for all 4: x86, x86_64, armeabi-v7a, arm-v8a) but cab be specified only custom list as a first parameter e.g. "x86"

- Creates symbolic links inside android/pytorch_android to results of the previous builds:

`pytorch_android/src/main/jniLibs/${abi}` -> `build_android/install/lib`

`pytorch_android/src/main/cpp/libtorch_include/${abi}` -> `build_android/install/include`

- Runs gradle assembleRelease to build aar files

proxy can be specified inside (for devservers)

2. android/run_tests.sh

Running pytorch_android tests, contains instruction how to setup and run android emulator in headless and noaudio mode to run it on devserver

proxy can be specified inside (for devservers)

#Test plan

Scenario to build x86 libtorch and android aars with it and run tests:

```

cd pytorch

sh scripts/build_android_libtorch_and_pytorch_android.sh x86

sh android/run_tests.sh

```

Tested on my devserver - build works, tests passed

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26833

Differential Revision: D17673972

Pulled By: IvanKobzarev

fbshipit-source-id: 8cb7c3d131781854589de6428a7557c1ba7471e9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26632

### Summary

This script builds the TestApp (located in ios folder) to generate an iOS x86 executable via the `xcodebuild` toolchain on macOS. The goal is to provide a quick way to test the generated static libraries to see if there are any linking errors. The script can also be used by the iOS CI jobs. To run the script, simply see description below:

```shell

$ruby scripts/xcode_ios_x86_build.rb --help

-i, --install path to the cmake install folder

-x, --xcodeproj path to the XCode project file

```

### Note

The script mainly deals with the iOS simulator build. For the arm64 build, I haven't found a way to disable the Code Sign using the `xcodebuiild` tool chain (XCode 10). If anyone knows how to do that, please feel free to leave a comment below.

### Test Plan

- The script can build the TestApp and link the generated static libraries successfully

- Don't break any CI job

Test Plan: Imported from OSS

Differential Revision: D17530990

Pulled By: xta0

fbshipit-source-id: f50bef7127ff8c11e884c99889cecff82617212b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26478

### Summary

Since QNNPACK [doesn't support bitcode](7d2a4e9931/scripts/build-ios-arm64.sh (L40)), I'm going to disable it in our CMake scripts. This won't hurt any existing functionalities, and will only affect the build size. Any application that wants to integrate our framework should turn off bitcode as well.

### Test plan

- CI job works

- LibTorch.a can be compiled and run on iOS devices

Test Plan: Imported from OSS

Differential Revision: D17489020

Pulled By: xta0

fbshipit-source-id: 950619b9317036cad0505d8a531fb8f5331dc81f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26440

As we are optimizing build size for Android/iOS, it starts diverging

from default build on several build options, e.g.:

- USE_STATIC_DISPATCH=ON;

- disable autograd;

- disable protobuf;

- no caffe2 ops;

- no torch/csrc/api;

...

Create this build_mobile.sh script to 'simulate' mobile build mode

with host toolchain so that people who don't work on mobile regularly

can debug Android/iOS CI error more easily. It might also be used to

build libtorch on devices like raspberry pi natively.

Test Plan:

- run scripts/build_mobile.sh -DBUILD_BINARY=ON

- run build_mobile/bin/speed_benchmark_torch on host machine

Differential Revision: D17466580

Pulled By: ljk53

fbshipit-source-id: 7abb6b50335af5b71e58fb6d6f9c38eb74bd5781

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26113

After https://github.com/pytorch/pytorch/pull/16914, passing in an

argument such as "build_deps" (i.e. python setup.py build_deps develop) is

invalid since it gets picked up as an invalid argument.

ghstack-source-id: 90003508

Test Plan:

Before, this script would execute "python setup.py build_deps

develop", which errored. Now it executes "python setup.py develop" without an

error. Verified by successfully running the script on devgpu. In setup.py,

there is already a `RUN_BUILD_DEPS = True` flag.

Differential Revision: D17350359

fbshipit-source-id: 91278c3e9d9f7c7ed8dea62380f18ba5887ab081

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25896

Similar change as PR #25822.

Test Plan:

- Updated CI to use the new script.

- Will check pytorch android CI output to make sure it builds libtorch

instead of libcaffe2.

Reviewed By: dreiss

Differential Revision: D17279722

Pulled By: ljk53

fbshipit-source-id: 93abcef0dfb93df197fabff29e53d71db5674255

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25822

### Summary

Since protobuf has been removed from mobile, the `build_host_protoc.sh` can be removed from `build_ios.sh` as well. However, the old caffe2 mobile build still depend on it, therefore, I introduced this `BUILD_PYTORCH_MOBILE` flag to gate the build.

- iOS device build

```

BUILD_PYTORCH_MOBILE=1 IOS_ARCH=arm64 ./scripts/build_ios.sh

BUILD_PYTORCH_MOBILE=1 IOS_ARCH=armv7s ./scripts/build_ios.sh

```

- iOS simulator build

```

BUILD_PYTORCH_MOBILE=1 IOS_PLATFORM=SIMULATOR ./scripts/build_ios.sh

```

### Test Plan

All device and simulator builds run successfully

Test Plan: Imported from OSS

Differential Revision: D17264469

Pulled By: xta0

fbshipit-source-id: f8994bbefec31b74044eaf01214ae6df797816c3

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24331

Currently our logs are something like 40M a pop. Turning off warnings and turning on verbose makefiles (to see the compile commands) reduces this to more like 8M. We could probably reduce log size more but verbose makefile is really useful and we'll keep it turned on for Windows.

Some findings:

1. Setting `CMAKE_VERBOSE_MAKEFILE` inside CMakelists.txt itself as suggested in https://github.com/ninja-build/ninja/issues/900#issuecomment-417917630 does not work on Windows. Setting `-DCMAKE_VERBOSE_MAKEFILE=1` does work (and we respect this environment variable.)

2. The high (`/W3`) warning level is by default on MSVC is due to cmake inserting this in the default flags. On recent versions of cmake, CMP0092 can be used to disable this flag in the default set. The string replace trick sort of works, but the standard snippet you'll find on the internet won't disable the flag from nvcc. I inspected the CUDA cmake code and verified it does respect CMP0092

3. `EHsc` is also in the default flags; this one cannot be suppressed via a policy. The string replace trick seems to work...

4. ... however, it seems nvcc implicitly inserts an `/EHs` after `-Xcompiler` specified flags, which means that if we add `/EHa` to our set of flags, you'll get a warning from nvcc. So we probably have to figure out how to exclude EHa from the nvcc flags set (EHs does seem to work fine.)

5. To suppress warnings in nvcc, you must BOTH pass `-w` and `-Xcompiler /w`. Individually these are not enough.

The patch applies these things; it also fixes a bug where nvcc verbose command printing doesn't work with `-GNinja`.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D17131746

Pulled By: ezyang

fbshipit-source-id: fb142f8677072a5430664b28155373088f074c4b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24330

In principle, we should be able to use the MSVC generator

to do a Windows build, but with the latest build of our

Windows AMI, this is no longer possible. An in-depth

investigation about why this is no longer working should

occur in https://github.com/pytorch/pytorch/issues/24386

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24330

Test Plan: Imported from OSS

Differential Revision: D16828794

Pulled By: ezyang

fbshipit-source-id: fa826a8a6692d3b8d5252fce52fe823eb58169bf

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24030

The cmake arg - `USE_QNNPACK` was disabled for iOS build due to its lack of support for building multiple archs(armv7;armv7s;arm64) simultaneously.To enable it, we need to specify the value of IOS_ARCH explicitly in the build command:

```

./scripts/build_ios.sh \

-DIOS_ARCH=arm64 \

-DBUILD_CAFFE2_MOBILE=OFF \

```

However,the iOS.cmake will overwirte this value according to the value of `IOS_PLATFORM`. This PR is a fix to this problem.

Test Plan:

- `USE_QNNPACK` should be turned on by cmake.

- `libqnnpack.a` can be generated successfully.

- `libortch.a` can be compiled and run successfully on iOS devices.

<img src="https://github.com/xta0/AICamera-ObjC/blob/master/aicamera.gif?raw=true" width="400">

Differential Revision: D16771014

Pulled By: xta0

fbshipit-source-id: 4cdfd502cb2bcd29611e4c22e2efdcdfe9c920d3

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24029

The cmake toolchain file for building iOS is currently in `/third-pary/ios-cmake`. Since the upstream is not active anymore, It's better to maintain this file ourselves moving forward.This PR is also the prerequisite for enabling QNNPACK for iOS.

Test Plan:

- The `libtorch.a` can be generated successfully

- The `libtorch.a` can be compiled and run on iOS devices

<img src="https://github.com/xta0/AICamera-ObjC/blob/master/aicamera.gif?raw=true" width="400">

Differential Revision: D16770980

Pulled By: xta0

fbshipit-source-id: 1ed7b12b3699bac52b74183fa7583180bb17567e

Summary:

ONNX uses virtualenv, and PyTorch doesn't. So --user flag is causing problems in ONNX ci...

Fixing it by moving it to pytorch only scripts. And will install ninja in onnx ci separately.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22946

Reviewed By: bddppq

Differential Revision: D16297781

Pulled By: houseroad

fbshipit-source-id: 52991abac61beaf3cfbcc99af5bb1cd27b790485

Summary:

This is an extension to the original PR https://github.com/pytorch/pytorch/pull/21765

1. Increase the coverage of different opsets support, comments, and blacklisting.

2. Adding backend tests for both caffe2 and onnxruntime on opset 7 and opset 8.

3. Reusing onnx model tests in caffe2 for onnxruntime.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22421

Reviewed By: zrphercule

Differential Revision: D16225518

Pulled By: houseroad

fbshipit-source-id: 01ae3eed85111a83a0124e9e95512b80109d6aee

Summary:

- Fix typo in ```torch/onnx/utils.py``` when looking up registered custom ops.

- Add a simple test case

1. Register custom op with ```TorchScript``` using ```cpp_extension.load_inline```.

2. Register custom op with ```torch.onnx.symbolic``` using ```register_custom_op_symbolic```.

3. Export model with custom op, and verify with Caffe2 backend.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21321

Differential Revision: D16101097

Pulled By: houseroad

fbshipit-source-id: 084f8b55e230e1cb6e9bd7bd52d7946cefda8e33

Summary:

So far, we only have py2 ci for onnx. I think py3 support is important. And we have the plan to add onnxruntime backend tests, which only supports py3.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21715

Reviewed By: bddppq

Differential Revision: D15796885

Pulled By: houseroad

fbshipit-source-id: 8554dbb75d13c57b67ca054446a13a016983326c

Summary:

Fixes#21026.

1. Improve build docs for Windows

2. Change `BUILD_SHARED_LIBS=ON` for Caffe2 local builds

3. Change to out-source builds for LibTorch and Caffe2 (transferred to #21452)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21190

Differential Revision: D15695223

Pulled By: ezyang

fbshipit-source-id: 0ad69d7553a40fe627582c8e0dcf655f6f63bfdf

Summary:

This PR is an intermediate step toward the ultimate goal of eliminating "caffe2" in favor of "torch". This PR moves all of the files that had constituted "libtorch.so" into the "libcaffe2.so" library, and wraps "libcaffe2.so" with a shell library named "libtorch.so". This means that, for now, `caffe2/CMakeLists.txt` becomes a lot bigger, and `torch/CMakeLists.txt` becomes smaller.

The torch Python bindings (`torch_python.so`) still remain in `torch/CMakeLists.txt`.

The follow-up to this PR will rename references to `caffe2` to `torch`, and flatten the shell into one library.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17783

Differential Revision: D15284178

Pulled By: kostmo

fbshipit-source-id: a08387d735ae20652527ced4e69fd75b8ff88b05

Summary:

We now can build libtorch for Android.

This patch aims to provide two improvements to the build

- Make the architecture overridable by providing an environment variable `ANDROID_ABI`.

- Use `--target install` when calling cmake to actually get the header files nicely in one place.

I ran the script without options to see if the caffe2 builds are affected (in particularly by the install), but they seem to run OK and probably only produce a few files in build_android/install.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20152

Differential Revision: D15249020

Pulled By: pjh5

fbshipit-source-id: bc89f1dcadce36f63dc93f9249cba90a7fc9e93d

Summary:

* adds TORCH_API and AT_CUDA_API in places

* refactor code generation Python logic to separate

caffe2/torch outputs

* fix hip and asan

* remove profiler_cuda from hip

* fix gcc warnings for enums

* Fix PythonOp::Kind

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19554

Differential Revision: D15082727

Pulled By: kostmo

fbshipit-source-id: 83a8a99717f025ab44b29608848928d76b3147a4

Summary:

New pip package becomes more restricted. We need to add extra flag to make the installation work.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19725

Differential Revision: D15078698

Pulled By: houseroad

fbshipit-source-id: bbd782a0c913b5a1db3e9333de1ca7d88dc312f1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19218

Sync some contents between fbcode/caffe2 and xplat/caffe2 to move closer towards a world where they are identical.

Reviewed By: dzhulgakov

Differential Revision: D14919916

fbshipit-source-id: 29c6b6d89ac556d58ae3cd02619aca88c79591c1

Summary:

`scripts/build_windows.bat` is the original way to build caffe2 on Windows, but since it is merged into libtorch, the build scripts should be unified because they actually do the same thing except there are some different flags.

The follow-up is to add the tests. Looks like the CI job for caffe2 windows is defined [here](https://github.com/pytorch/ossci-job-dsl/blob/master/src/jobs/caffe2.groovy#L906). Could we make them a separate file, just like what we've done in `.jenkins/pytorch/win-build.sh`? There's a bunch of things we can do there, like using ninja and sccache to accelerate build.

cc orionr yf225

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18683

Differential Revision: D14730188

Pulled By: ezyang

fbshipit-source-id: ea287d7f213d66c49faac307250c31f9abeb0ebe

Summary:

This commit did below enhancements:

1, add doc for build_android.sh;

2, add install step for build_android.sh, thus the headers and libraries can be collected together for further usage conveniently;

3, change the default INSTALL_PREFIX from $PYTORCH_ROOT/install to $PYTORCH_ROOT/build_android/install to make the project directory clean.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17298

Differential Revision: D14149709

Pulled By: soumith

fbshipit-source-id: a3a38cb41f26377e21aa89e49e57e8f21c9c1a39

Summary:

bypass-lint

- Change all Caffe2 builds to use setup.py instead of cmake

- Add a -cmake- Caffe2 build configuration that uses cmake and only builds cpp

- Move skipIfCI logic from onnx test scripts to the rest of CI logic

- Removal of old PYTHONPATH/LD_LIBRARY_PATH/etc. env management

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15917

Reviewed By: orionr

Differential Revision: D13637583

Pulled By: pjh5

fbshipit-source-id: c5c5639db0251ba12b6e4b51b2ac3b26a8953153

Summary:

Hello,

This is a little patch to fix `DeprecationWarning: invalid escape sequence`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15733

Differential Revision: D13587291

Pulled By: soumith

fbshipit-source-id: ce68db2de92ca7eaa42f78ca5ae6fbc1d4d90e05

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15322

caffe2 mobile opengl code is not used, deleting it to reduce complications when we perform other changes

Reviewed By: Maratyszcza

Differential Revision: D13499943

fbshipit-source-id: 6479f6b9f50f08b5ae28f8f0bc4a1c4fc3f3c3c2

Summary:

QNNPACK contains assembly files, and CMake tries to build them for wrong architectures in multi-arch builds. This patch has two effects:

- Disables QNNPACK in multi-arch iOS builds

- Specifies a single `IOS_ARCH=arm64` by default (covers most iPhones/iPads on the market)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14125

Differential Revision: D13112366

Pulled By: Maratyszcza

fbshipit-source-id: b369083045b440e41d506667a92e41139c11a971

Summary:

The experimental ops for the c10 dispatcher have accidentally been disabled in the oss build when the directory changed from `c10` to `experimental/c10`. This PR re-enables them.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/12821

Differential Revision: D10446779

Pulled By: smessmer

fbshipit-source-id: ac58cd1ba1281370e62169ec26052d0962225375

Summary:

Need to link CUDA statically for benchmarking purpose.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/10596

Reviewed By: llyfacebook

Differential Revision: D9370738

Pulled By: sf-wind

fbshipit-source-id: 4464d62473e95fe8db65b0bd3b301f262bf269bf

Summary:

Add flags for LMDB and LevelDB, default `OFF`. These can be enabled with

```

USE_LMDB=1 USE_LEVELDB=1 python setup.py build_deps

```

Also add a flag to build Caffe2 ops, which is default `ON`. Disable with

```

NO_CAFFE2_OPS=1 python setup.py build_deps

```

cc Yangqing soumith pjh5 mingzhe09088

Pull Request resolved: https://github.com/pytorch/pytorch/pull/11462

Reviewed By: soumith

Differential Revision: D9758156

Pulled By: orionr

fbshipit-source-id: 95fd206d72fdf44df54fc5d0aeab598bff900c63

Summary:

Continuing pjh5's work to remove FULL_CAFFE2 flag completely.

With these changes you'll be able to also do something like

```

NO_TEST=1 python setup.py build_deps

```

and this will skip building tests in caffe2, aten, and c10d. By default the tests are built.

cc mingzhe09088 Yangqing

Pull Request resolved: https://github.com/pytorch/pytorch/pull/11321

Reviewed By: mingzhe09088

Differential Revision: D9694950

Pulled By: orionr

fbshipit-source-id: ff5c4937a23d1a263378a196a5eda0cba98af0a8

Summary:

This PR adds all PyTorch and Caffe2 job configs to CircleCI.

Steps for the CircleCI mini-trial:

- [ ] Make sure this PR passes Jenkins CI and fbcode internal tests

- [x] Approve this PR

- [ ] Ask CircleCI to turn up the number of build machines

- [ ] Land this PR so that the new `.circleci/config.yml` will take effect

Several Caffe2 tests are flaky on CircleCI machines and hence skipped when running on CircleCI. A proper fix for them will be worked on after a successful mini-trial.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/11264

Differential Revision: D9656793

Pulled By: yf225

fbshipit-source-id: 7832e90018f3dff7651489c04a179d6742168fe1

Summary:

This completely removes BUILD_CAFFE2 from CMake. There is still a little bit of "full build" stuff in setup.py that enables USE_CUDNN and BUILD_PYTHON, but otherwise everything should be enabled for PyTorch as well as Caffe2. This gets us a lot closer to full unification.

cc mingzhe09088, pjh5, ezyang, smessmer, Yangqing

Pull Request resolved: https://github.com/pytorch/pytorch/pull/8338

Reviewed By: mingzhe09088

Differential Revision: D9600513

Pulled By: orionr

fbshipit-source-id: 9f6ca49df35b920d3439dcec56e7b26ad4768b7d

Summary:

Building caffe2 and pytorch separately will end up duplicated symbols as they now share some basic libs. And it's especially bad for registry. This PR fixes our CI and build them in one shot with shared symbols.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/10427

Reviewed By: bddppq

Differential Revision: D9282372

Pulled By: yinghai

fbshipit-source-id: 0514931ea88277029a68fa5368ff4336472f132e

Summary:

Breaking this out of #8338

When BUILD_CAFFE2 and BUILD_ATEN are removed, we need to install typing on Mac.

cc orionr

Pull Request resolved: https://github.com/pytorch/pytorch/pull/9271

Reviewed By: orionr

Differential Revision: D8768701

Pulled By: mingzhe09088

fbshipit-source-id: 052b96e90e64b01e6b5dd48b91c0fb12fb96b54a

Summary:

Will bump up to opset 8 in another PR to match the current opset version.

Already tested through generating the models in current model zoo.

Closes https://github.com/pytorch/pytorch/pull/8854

Reviewed By: ezyang

Differential Revision: D8666437

Pulled By: houseroad

fbshipit-source-id: feffdf704dd3136aa59c0f1ff1830c14d1bd20aa

* Run onnx integration tests in caffe2 CI

* verbose log

* turn off onnx verbose installation log

* can not install ninja

* Do not use all cores to build pytorch

* install tests require

* pip install to user dir

* use determined path to improve (s)ccache hit

* Do not change path in test.sh

* Add the compile cache hit trick to conda install as well

* cover jenkins in CI environment detection

* Move ONNX integration tests from onnx-fb-universe to PyTorch repo

* Switch to use torchvision

* Delete single rnn operator tests, they have been covered in e2e tests in test_caffe2.py

* Mirror the fix in onnx-fb-universe to bypass cuda check

667326d84b

* Statically linking CUDA for Anaconda builds

* typo

* Adding a summary line

* Comments

* Typo fix

* Fix faulty parameter passing

* Removing problem CUDA modules for now

* Fixing unused debugging function

* Turning off static cuda linking until script changes are in

* Disabling mkl

* Adding integrated pytorch-caffe2 package

* Updates

* Fixing more substitution

* Fix to pytorch build location

* Bugfixes, progress towards including CUDA libs in package

* Fix to sed call

* Putting off packaing CUDA libs for Caffe2

* Progress towards packaging CUDA libs

* Progress towards packaging CUDA libs

* Changes to CUDA copying

* Turning on CUDA lib packaging

* Correction to env variables passed into meta.yaml

* typo

* Adding more needed variables in build.sh

* Adding some debugging info

* Changing versioning to have dates and be in build string

* Removing version from build string

* Removing packaging CUDA logic for static linking (later)

* Changing version to mirror pytorch

* Removing env variable req in build.sh

* Change to sed to port to mac

* Changes without centos changes

* Changes for protobuf 3.5 and gcc 4.8

* Changing 3.4.1 back to 3.5.1

* Preventing installing two versions of setuptools

* Fixing setuptools bug

* Fixing conda

* Adding hypothesis and onnx to conda builds

* Updates but still not working

* Adding required changes to conda_full

* Updates

* Moving to more general build_anaconda script

* Adding check for gcc version

* Adding general ways to add/remove packages from meta.yaml?

* Changes for specific packages to build on gcc 5.4

* Fix with glog spec

* Requiring >numpy 1.12 for python 3 to satisfy opencv dependency

* Adding pydot to required testing packages

* Adding script to read conda versions for gcc ABI

* Trying to fix segfault by installing in env instead

* conda activate -> source activate

* Trying adding back leveldb

* Setting locale for ONNX + conda-search changed its format

* read_conda_versions handles libprotobuf

* Conda script updates

* Adding a protobuf-working test

* Removing changes to proto defs b/c they will require internal changes in a separate diff

- Remove USE_ARM64 option because it doesn't do what is expected

- Disable ARM ComputeLibrary for non-ARM/ARM64 builds

- Remove analysis of CMake options from scripts/build_android.sh

- Add user-specified CMake options at the end of command line to allow overriding defaults

- Update README for ARM ComputeLibrary integration and do not require to disable NNPACK for ARM64 build with ARM ComputeLibrary

* [WIP] moving conda scripts to separate build+test

* [WIP] Splitting conda-builds into build and test phases

* Migrating build_local to call build_anaconda

* Tidying up a regex

Adds another package to Anaconda.org with a "-full" suffix which includes more libraries by default. This also installs NCCL 2.1 onto the CI Ubuntu docker images to accomplish this.

Summary:

Addresses issue #1676

Now when `make install` is run, the `caffe2` (and `caffe`) python modules will be installed into the correct site-packages directory (relative to the prefix) instead of directly in the prefix.

Closes https://github.com/caffe2/caffe2/pull/1677

Reviewed By: pietern

Differential Revision: D6710247

Pulled By: bddppq

fbshipit-source-id: b49167d48fd94d87f7b7c1ebf0f187ec6a203470

Summary:

iOS is also depend on USE_MOBILE_OPENGL, so I think we should only disable it for Android.

Closes https://github.com/caffe2/caffe2/pull/1835

Differential Revision: D6880522

Pulled By: Maratyszcza

fbshipit-source-id: b2c2fa052ad5948bc52fa49eb22c86eb08f59a39

Summary:

Last fix was uncommitted due to a bug in internal build (CAFFE2_API causing error). This one re-applies it as well as a few more, especially enabling gtest.

Earlier commit message: Basically, this should make windows {static_lib, shared_lib} * {static_runtime, shared_runtime} * {cpu, gpu} work other than gpu shared_lib, which willyd kindly pointed out a symbol limit problem. A few highlights:

(1) Updated newest protobuf.

(2) use protoc dllexport command to ensure proper symbol export for windows.

(3) various code updates to make sure that C2 symbols are properly shown

(4) cmake file changes to make build proper

(5) option to choose static runtime and shared runtime similar to protobuf

(6) revert to visual studio 2015 as current cuda and msvc 2017 do not play well together.

(7) enabled gtest and fixed testing bugs.

Earlier PR is #1793

Closes https://github.com/caffe2/caffe2/pull/1827

Differential Revision: D6832086

Pulled By: Yangqing

fbshipit-source-id: 85f86e9a992ee5c53c70b484b761c9d6aed721df

Summary:

Now we use **clang** to build Caffe2 for Android with arm64-v8a ABI, but clang doesn't support "-s" compilation flag. If we append this flag to clang, it will report a warning:

> clang++: warning: argument unused during compilation: '-s' [-Wunused-command-line-argument]

This submit will check we use gcc or clang to build Caffe2 for Android.

Closes https://github.com/caffe2/caffe2/pull/1834

Differential Revision: D6833011

Pulled By: Yangqing

fbshipit-source-id: e4655d126fb3586e7af605a31a6b1c1ed66b9bcb

Summary:

Historically, for interface dependent libraries (glog, gflags and protobuf), exposing them in Caffe2Config.cmake is usually difficult.

New versions of glog and gflags ship with new-style cmake targets, so one does not need to use variables. New-style targets also make it easier for people to depend on them in installed config files.

This diff modernizes the gflags library, and still provides a fallback path if the installed gflags does not have cmake config files coming with it.

It does change one behavior of the build process though - when one specifies -DUSE_GFLAGS=ON but gflags cannot be found, the old script automatically turns it off but the new script crashes, forcing the user to specify USE_GFLAGS=OFF.

Closes https://github.com/caffe2/caffe2/pull/1819

Differential Revision: D6826604

Pulled By: Yangqing

fbshipit-source-id: 210f3926f291c8bfeb24eb9671e5adfcbf8cf7fe

Summary:

More changes to be added later. I need to make a PR so that I can point jenkins to this

Closes https://github.com/caffe2/caffe2/pull/1767

Reviewed By: orionr

Differential Revision: D6817174

Pulled By: pjh5

fbshipit-source-id: 0fc73ed7d781b5972e0234f8c9864c5e57180591

Summary:

This reverts commit d286264fccc72bf90a2fcd7da533ecca23ce557e

bypass-lint

An infra SEV is better than not reverting this diff.

If you copy this password, see you in SEV Review!

cause_a_sev_many_files

Differential Revision: D6817719

fbshipit-source-id: 8fe0ad7aba75caaa4c3cac5e0a804ab957a1b836

Summary:

Basically, this should make windows {static_lib, shared_lib} * {static_runtime, shared_runtime} * {cpu, gpu} work. A few highlights:

(1) Updated newest protobuf.

(2) use protoc dllexport command to ensure proper symbol export.

(3) various code updates to make sure that C2 symbols are properly shown

(4) cmake file changes to make build proper

(5) option to choose static runtime and shared runtime similar to protobuf

(6) revert to visual studio 2015 as current cuda and msvc 2017 do not play well together.

Closes https://github.com/caffe2/caffe2/pull/1793

Reviewed By: dzhulgakov

Differential Revision: D6817719

Pulled By: Yangqing

fbshipit-source-id: d286264fccc72bf90a2fcd7da533ecca23ce557e

Summary:

The android.cmake.toolchain file we use from a submodule is unmaintained and not updated since 2015.

It causes numerous problems in Caffe2 build:

- Caffe2 can't be built for Android ARM64, because gcc toolchain for ARM64 doesn't support NEON-FP16 intrinsics, and the android.cmake.toolchain we use doesn't allow us specify clang-5.0 from NDK r15c

- Caffe2 can't be built with Android NDK r16 (the most recent NDK version)

- Caffe2 can't be built for Android with Ninja generator

This change updates the build script to use $ANDROID/build/cmake/android.cmake.toolchain instead, which is maintained by Android team, and synchronized with Android NDK version.

As this toolchain file doesn't support "armeabi-v7a with NEON FP16" ABI, I had to disable mobile OpenGL backend, which requires NEON-FP16 extension to build. With some work, it can be re-enabled in the future.

Closes https://github.com/caffe2/caffe2/pull/1740

Differential Revision: D6707099

Pulled By: Maratyszcza

fbshipit-source-id: 8488594c4225deed0323c1e54c8d71c804b328df

Summary:

Lots of unwanted stuff here that shouldn't be in this branch. I just need to make a PR so I can test it

Closes https://github.com/caffe2/caffe2/pull/1765

Reviewed By: orionr

Differential Revision: D6752610

Pulled By: pjh5

fbshipit-source-id: cc93290773640a9eb029f350b17f520ac5f2504e

Summary:

Change the directory name for ipython notebook.

Change the executable name fro ipython to jupyter

Pass arguments to the script to the notebook, instead of fixing --ip='*'. In some setup, --ip='*' cause jupyter notebook not displayed.

Closes https://github.com/caffe2/caffe2/pull/1546

Reviewed By: pietern

Differential Revision: D6460324

Pulled By: sf-wind

fbshipit-source-id: f73d7be96525e2ab97f3d0e7fcb4b1557934f873

Summary:

The scripts/build_local.sh script would always build protoc from the

third_party protobuf tree and override the PROTOBUF_PROTOC_EXECUTABLE

CMake variable. This variable is used by the protobuf CMake files, so

it doesn't let us detect whether the protoc was specified by the user

or by the protobuf CMake files (e.g. an existing installation). This

in turn led to a problem where system installed headers would be

picked up while using protoc built from third_party. This only works

if the system installed version matches the version included in the

Caffe2 tree. Therefore, this commit changes the variable to specify a

custom protoc executable to CAFFE2_CUSTOM_PROTOC_EXECUTABLE, and

forces the use of the bundled libprotobuf when it is specified.

The result is that we now EITHER specify a custom protoc (as required

for cross-compilation where protoc must be compiled for the host and

libprotobuf for the target architecture) and use libprotobuf from the

Caffe2 tree, OR use system protobuf.

If system protobuf cannot be found, we fall back to building protoc

and libprotobuf in tree and packaging it as part of the Caffe2 build

artifacts.

Closes https://github.com/caffe2/caffe2/pull/1328

Differential Revision: D6032836

Pulled By: pietern

fbshipit-source-id: b75f8dd88412f02c947dc81ca43f7b2788da51e5

Summary:

The build_local.sh script current is single thread, which is really slow. Use the same mechanism in build_android.sh to parallelize the build.

Closes https://github.com/caffe2/caffe2/pull/1282

Differential Revision: D5992231

Pulled By: sf-wind

fbshipit-source-id: 01ba06b6efcb0f535f974a2dfffbae9ba385d27d

Summary:

After this, windows should be all green.

Closes https://github.com/caffe2/caffe2/pull/1228

Reviewed By: bwasti

Differential Revision: D5888328

Pulled By: Yangqing

fbshipit-source-id: 98fd39a4424237f2910df69c8609455d7af3ca34

Summary:

There does not exist appropriate build script for Tizen software platform.

This commit is to fix#847.

Signed-off-by: Geunsik Lim <geunsik.lim@samsung.com>

Closes https://github.com/caffe2/caffe2/pull/877

Differential Revision: D5571335

Pulled By: Yangqing

fbshipit-source-id: 12759a3c0cb274ef93d7127b8185341e087f2bfa

Summary:

1. switch the protoc building system from msbuild to cmake

2. set default CMAKE_GENERATE to VS2015

3. set default CMAKE_BUILD_TYPE to Release

4. improve error handling

5. add the generated protobuf include path

6. exclude many optional dependencies from build_windows.bat

Closes https://github.com/caffe2/caffe2/pull/1014

Differential Revision: D5559402

Pulled By: Yangqing

fbshipit-source-id: 019e3a6c3c909154027fa932ce1d6549476b23bb

Summary:

use user defined android ndk path instead of hard code.

Closes https://github.com/caffe2/caffe2/pull/506

Differential Revision: D5162646

Pulled By: Yangqing

fbshipit-source-id: 5093888e15607b3bf6682e05eb91aa94c6206b01

Summary:

Added python-pip and python-numpy into build_raspbian.sh script

because they are not installed in ubuntu/debian minimal image.

Closes https://github.com/caffe2/caffe2/pull/609

Differential Revision: D5121550

Pulled By: Yangqing

fbshipit-source-id: 14dd1450275fcc2aa9d2a06f0982f460528a1930

Summary: