Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65861

First in a series. This PR changes the code in deploy.h/cpp and

interpreter_impl.h/cpp to be camel case instead of snake case. Starting

with this as it has the most impact on downstream users.

Test Plan: Imported from OSS

Reviewed By: shannonzhu

Differential Revision: D31291183

Pulled By: suo

fbshipit-source-id: ba6f74042947c9a08fb9cb3ad7276d8dbb5b2934

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63612

This makes Tensor inherit from a new class TensorBase, that provides a subset of Tensor that doesn't

directly depend on native_functions.yaml. Code that only includes TensorBase.h with thus not need to

be rebuilt every time someone changes an operator signature.

Making `Tensor` inherit from this class means that `const TensorBase&` parameters will be callable

with an ordinary `Tensor`. I've also made `Tensor` constructible and assignable from `TensorBase` to

minimize friction in code mixing the two types.

To help enforce that `Tensor.h` and `Functions.h` aren't accidentally included, I've added an error

into `Operators.h` if `TORCH_ASSERT_NO_OPERATORS` is defined. We can either set this in the build

system for certain folders, or just define it at the top of any file.

I've also included an example of manually special-casing the commonly used `contiguous` operator.

The inline function's slow path defers to `TensorBase::__dispatch_contiguous` which is defined in

`Tensor.cpp`. I've made it so `OptionalTensorRef` is constructible from `TensorBase`, so I can

materialize a `Tensor` for use in dispatch without actually increasing its refcount.

Test Plan: Imported from OSS

Reviewed By: gchanan

Differential Revision: D30728580

Pulled By: ezyang

fbshipit-source-id: 2cbc8eee08043382ee6904ea8e743b1286921c03

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61801

resubmitting because the last one was unrecoverable due to making changes incorrectly in the stack

Test Plan: Imported from OSS

Reviewed By: desertfire

Differential Revision: D29812510

Pulled By: makslevental

fbshipit-source-id: ba9685dc81b6699724104d5ff3211db5852370a6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63995

JIT methods already have name() in their interface, and Py methods have names in their implementation. I'm adding this for a particular case where someone tried to use name() on a JIT method that we're replacing with an IMethod.

Test Plan: add case to imethod API test

Reviewed By: suo

Differential Revision: D30559401

fbshipit-source-id: 76236721f5cd9a9d9d488ddba12bfdd01d679a2c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63345

This diff did the following few things to enable the tests:

1. Exposed IMethod as TORCH_API.

2. Linked torch_deploy to test_api if USE_DEPLOY == 1.

3. Generated torch::deploy examples when building torch_deploy library.

Test Plan: ./build/bin/test_api --gtest_filter=IMethodTest.*

Reviewed By: ngimel

Differential Revision: D30346257

Pulled By: alanwaketan

fbshipit-source-id: 932ae7d45790dfb6e00c51893933a054a0fad86d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62521

This diff did the following few things to enable the tests:

1. Exposed IMethod as TORCH_API.

2. Linked torch_deploy to test_api if USE_DEPLOY == 1.

Test Plan:

./build/bin/test_api --gtest_filter=IMethodTest.*

To be noted, one needs to run `python torch/csrc/deploy/example/generate_examples.py` before the above command.

Reviewed By: ezyang

Differential Revision: D30055372

Pulled By: alanwaketan

fbshipit-source-id: 50eb3689cf84ed0f48be58cd109afcf61ecca508

Summary:

Enable Gelu bf16/fp32 in CPU path using Mkldnn implementation. User doesn't need to_mkldnn() explicitly. New Gelu fp32 performs better than original one.

Add Gelu backward for https://github.com/pytorch/pytorch/pull/53615.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58525

Reviewed By: ejguan

Differential Revision: D29940369

Pulled By: ezyang

fbshipit-source-id: df9598262ec50e5d7f6e96490562aa1b116948bf

Summary:

Fixes https://github.com/pytorch/pytorch/issues/60747

Enhances the C++ versions of `Transformer`, `TransformerEncoderLayer`, and `TransformerDecoderLayer` to support callables as their activation functions. The old way of specifying activation function still works as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62342

Reviewed By: malfet

Differential Revision: D30022592

Pulled By: jbschlosser

fbshipit-source-id: d3c62410b84b1bd8c5ed3a1b3a3cce55608390c4

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62442

For PythonMethodWrapper::setArgumentNames, make sure to use the correct method

specified by method_name_ rather than using the parent model_ obj which itself

_is_ callable, but that callable is not the right signature to extract.

For Python vs Script, unify the behavior to avoid the 'self' parameter, so we only

list the argument names to the unbound arguments which is what we need in practice.

Test Plan: update unit test and it passes

Reviewed By: alanwaketan

Differential Revision: D29965283

fbshipit-source-id: a4e6a1d0f393f2a41c3afac32285548832da3fb4

Summary:

As GoogleTest `TEST` macro is non-compliant with it as well as `DEFINE_DISPATCH`

All changes but the ones to `.clang-tidy` are generated using following script:

```

for i in `find . -type f -iname "*.c*" -or -iname "*.h"|xargs grep cppcoreguidelines-avoid-non-const-global-variables|cut -f1 -d:|sort|uniq`; do sed -i "/\/\/ NOLINTNEXTLINE(cppcoreguidelines-avoid-non-const-global-variables)/d" $i; done

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62008

Reviewed By: driazati, r-barnes

Differential Revision: D29838584

Pulled By: malfet

fbshipit-source-id: 1b2f8602c945bd4ce50a9bfdd204755556e31d13

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, though, but with Intel OpenMP).

### Summary

1. This draft PR produces binaries with with 3 types of ATen kernels - default, AVX2, AVX512 . Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using AVX512 kernel right now, as it has poorer accuracy for Float16, than does AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows , ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped -

[test_lstm` in `quantization.bc](https://github.com/pytorch/pytorch/issues/59098) - It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. The value of `reduce_range` should be used as `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the double vector-size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

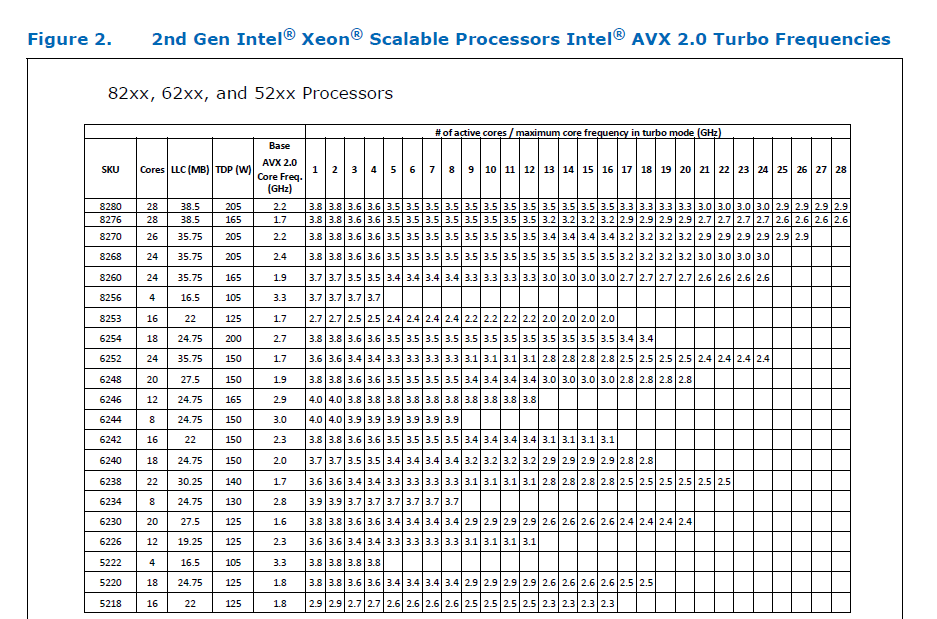

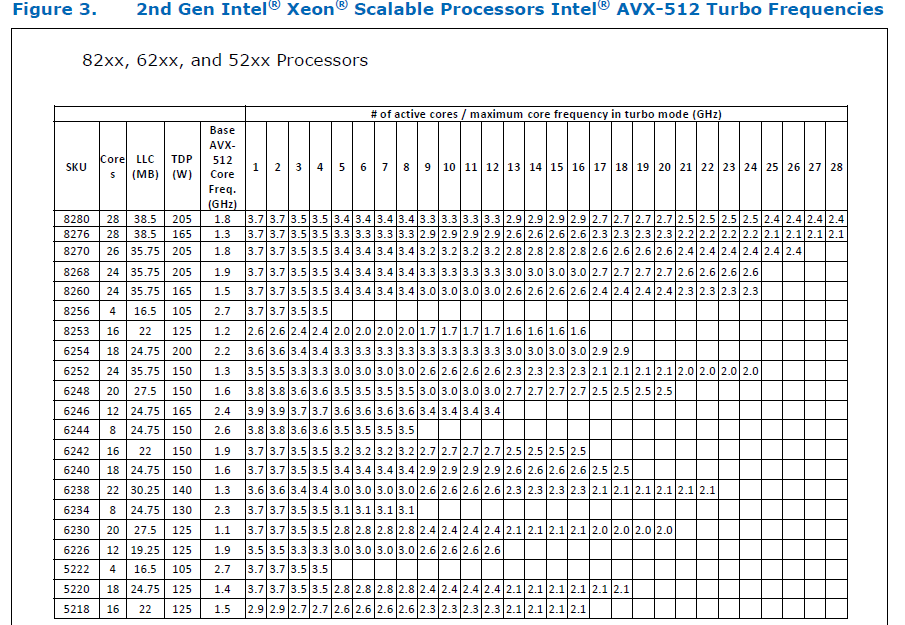

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with with small tensors that fit in caches or in kernels that are more compute heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

Expose IMethod interface, which provides a unified interface to either script or python methods backed by torchscript or torchdeploy.

IMethod provides a way to depend on a torch method without depending on a particular runtime implementation such as torchscript or python/deploy.

Test Plan: add unit tests.

Reviewed By: suo

Differential Revision: D29463455

fbshipit-source-id: 903391d9af9fbdd8fcdb096c1a136ec6ac153b7c

Summary:

The grad() function needs to return the updated values, and hence

needs a non-empty inputs to populate.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52016

Test Plan:

Passes Python and C++ unit tests, and added new tests to catch this behavior.

Fixes https://github.com/pytorch/pytorch/issues/47061

Reviewed By: albanD

Differential Revision: D26406444

Pulled By: dagitses

fbshipit-source-id: 023aeca9a40cd765c5bad6a1a2f8767a33b75a1a

Summary:

Fixes https://github.com/pytorch/pytorch/issues/27655

This PR adds a C++ and Python version of ReflectionPad3d with structured kernels. The implementation uses lambdas extensively to better share code from the backward and forward pass.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59791

Reviewed By: gchanan

Differential Revision: D29242015

Pulled By: jbschlosser

fbshipit-source-id: 18e692d3b49b74082be09f373fc95fb7891e1b56

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58570

**What the PR does**

Generate a fast-path `at::meta::{op}` API for calling meta functions without having to go through the dispatcher. This will be important for perf for external backends that want to use meta functions for shape checking (which seems likely to be what we end up doing for LazyTensorCore).

**Details**

In order to avoid naming collisions I had to make two small changes:

- rename `MetaFunctions.h` template -> `NativeMetaFunctions.h` (this is the file that declares the impl() function for every structured operator).

- rename the meta class: `at::meta::{op}::meta()` -> `at::meta::structured_{op}::meta()`

I also deleted a few unnecessary includes, since any file that includes NativeFunctions.h will automatically include NativeMetaFunctions.h.

**Why I made the change**

This change isn't actually immediately used anywhere; I already started writing it because I thought it would be useful for structured composite ops, but that isn't actually true (see [comment](https://github.com/pytorch/pytorch/pull/58266#issuecomment-843213147)). The change feels useful and unambiguous though so I think it's safe to add. I added explicit tests for C++ meta function calls just to ensure that I wrote it correctly - which is actually how I hit the internal linkage issue in the PR below this in the stack.

Test Plan: Imported from OSS

Reviewed By: pbelevich

Differential Revision: D28711299

Pulled By: bdhirsh

fbshipit-source-id: d410d17358c2b406f0191398093f17308b3c6b9e

Summary:

Fixes https://github.com/pytorch/pytorch/issues/35379

- Adds `retains_grad` attribute backed by cpp as a native function. The python bindings for the function are skipped to be consistent with `is_leaf`.

- Tried writing it without native function, but the jit test `test_tensor_properties` seems to require that it be a native function (or alternatively maybe it could also work if we manually add a prim implementation?).

- Python API now uses `retain_grad` implementation from cpp

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59362

Reviewed By: jbschlosser

Differential Revision: D28969298

Pulled By: soulitzer

fbshipit-source-id: 335f2be50b9fb870cd35dc72f7dadd6c8666cc02

Summary:

Fixes https://github.com/pytorch/pytorch/issues/4661

- Add warnings in engine's `execute` function so it can be triggered through both cpp and python codepaths

- Adds an RAII guard version of `c10::Warning::set_warnAlways` and replaces all prior usages of the set_warnAlways with the new one

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59412

Reviewed By: jbschlosser

Differential Revision: D28969294

Pulled By: soulitzer

fbshipit-source-id: b03369c926a3be18ce1cf363b39edd82a14245f0

Summary:

Adds `is_inference` as a native function w/ manual cpp bindings.

Also changes instances of `is_inference_tensor` to `is_inference` to be consistent with other properties such as `is_complex`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58729

Reviewed By: mruberry

Differential Revision: D28874507

Pulled By: soulitzer

fbshipit-source-id: 0fa6bcdc72a4ae444705e2e0f3c416c1b28dadc7

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56608

- Adds binding to the `c10::InferenceMode` RAII class in `torch._C._autograd.InferenceMode` through pybind. Also binds the `torch.is_inference_mode` function.

- Adds context manager `torch.inference_mode` to manage an instance of `c10::InferenceMode` (global). Implemented in `torch.autograd.grad_mode.py` to reuse the `_DecoratorContextManager` class.

- Adds some tests based on those linked in the issue + several more for just the context manager

Issues/todos (not necessarily for this PR):

- Improve short inference mode description

- Small example

- Improved testing since there is no direct way of checking TLS/dispatch keys

-

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58045

Reviewed By: agolynski

Differential Revision: D28390595

Pulled By: soulitzer

fbshipit-source-id: ae98fa036c6a2cf7f56e0fd4c352ff804904752c

Summary:

Fixes a bug introduced by https://github.com/pytorch/pytorch/issues/57057

cc ailzhang while writing the tests, I realized that for these functions, we don't properly set the CreationMeta in no grad mode and Inference mode. Added a todo there.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57669

Reviewed By: soulitzer

Differential Revision: D28231005

Pulled By: albanD

fbshipit-source-id: 08a68d23ded87027476914bc87f3a0537f01fc33

Summary:

This is an automatic change generated by the following script:

```

#!/usr/bin/env python3

from subprocess import check_output, check_call

import os

def get_compiled_files_list():

import json

with open("build/compile_commands.json") as f:

data = json.load(f)

files = [os.path.relpath(node['file']) for node in data]

for idx, fname in enumerate(files):

if fname.startswith('build/') and fname.endswith('.DEFAULT.cpp'):

files[idx] = fname[len('build/'):-len('.DEFAULT.cpp')]

return files

def run_clang_tidy(fname):

check_call(["python3", "tools/clang_tidy.py", "-c", "build", "-x", fname,"-s"])

changes = check_output(["git", "ls-files", "-m"])

if len(changes) == 0:

return

check_call(["git", "commit","--all", "-m", f"NOLINT stubs for {fname}"])

def main():

git_files = check_output(["git", "ls-files"]).decode("ascii").split("\n")

compiled_files = get_compiled_files_list()

for idx, fname in enumerate(git_files):

if fname not in compiled_files:

continue

if fname.startswith("caffe2/contrib/aten/"):

continue

print(f"[{idx}/{len(git_files)}] Processing {fname}")

run_clang_tidy(fname)

if __name__ == "__main__":

main()

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56892

Reviewed By: H-Huang

Differential Revision: D27991944

Pulled By: malfet

fbshipit-source-id: 5415e1eb2c1b34319a4f03024bfaa087007d7179

Summary:

`is_variable` spits out a deprecation warning during the build (if it's

still something that needs to be tested we can ignore deprecated

warnings for the whole test instead of this change).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56305

Pulled By: driazati

Reviewed By: ezyang

Differential Revision: D27834218

fbshipit-source-id: c7bbea7e9d8099bac232a3a732a27e4cd7c7b950

Summary:

Temporary fix to give people extra time to finish the deprecation.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56401

Reviewed By: xw285cornell, drdarshan

Differential Revision: D27862196

Pulled By: albanD

fbshipit-source-id: ed460267f314a136941ba550b904dee0321eb0c6

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54403

A few important points about InferenceMode behavior:

1. All tensors created in InferenceMode are inference tensors except for view ops.

- view ops produce output has the same is_inference_tensor property as their input.

Namely view of normal tensor inside InferenceMode produce a normal tensor, which is

exactly the same as creating a view inside NoGradMode. And view of

inference tensor outside InferenceMode produce inference tensor as output.

2. All ops are allowed inside InferenceMode, faster than normal mode.

3. Inference tensor cannot be saved for backward.

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D27316483

Pulled By: ailzhang

fbshipit-source-id: e03248a66d42e2d43cfe7ccb61e49cc4afb2923b

Summary:

Non-backwards-compatible change introduced in https://github.com/pytorch/pytorch/pull/53843 is tripping up a lot of code. Better to set it to False initially and then potentially flip to True in the later version to give people time to adapt.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55169

Reviewed By: mruberry

Differential Revision: D27511150

Pulled By: jbschlosser

fbshipit-source-id: 1ac018557c0900b31995c29f04aea060a27bc525