* Re-apply PyTorch pthreadpool changes

Summary:

This re-applies D21232894 (b9d3869df3) and D22162524, plus updates jni_deps in a few places to avoid breaking host JNI tests.

Test Plan: `buck test @//fbandroid/mode/server //fbandroid/instrumentation_tests/com/facebook/caffe2:host-test`

Reviewed By: xcheng16

Differential Revision: D22199952

fbshipit-source-id: df13eef39c01738637ae8cf7f581d6ccc88d37d5

* Enable XNNPACK ops on iOS and macOS.

Test Plan: buck run aibench:run_bench -- -b aibench/specifications/models/pytorch/pytext/pytext_mobile_inference.json --platform ios --framework pytorch --remote --devices D221AP-12.0.1

Reviewed By: xta0

Differential Revision: D21886736

fbshipit-source-id: ac482619dc1b41a110a3c4c79cc0339e5555edeb

* Respect user set thread count. (#40707)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/40707

Test Plan: Imported from OSS

Differential Revision: D22318197

Pulled By: AshkanAliabadi

fbshipit-source-id: f11b7302a6e91d11d750df100d2a3d8d96b5d1db

* Fix and reenable threaded QNNPACK linear (#40587)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40587

Previously, this was causing a divide-by-zero only in the multithreaded empty-batch case, while calculating tiling parameters for the threads. In my opinion, the bug here is using a value that is allowed to be zero (batch size) for an argument that should not be zero (tile size), so I fixed the bug by bailing out right before the call to pthreadpool_compute_4d_tiled.
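
The guard described above can be illustrated with a minimal Python sketch. It is not the QNNPACK code: the real fix is in C++, and `tile_count`, `run_linear` and the default tile width `nr` are hypothetical names chosen for illustration. The sketch only shows why a zero batch size used as a tile size divides by zero, and how an early return avoids it.

```python
def tile_count(range_size: int, tile_size: int) -> int:
    """Number of tiles needed to cover range_size (ceiling division)."""
    # Raises ZeroDivisionError when tile_size == 0, which mirrors the bug.
    return (range_size + tile_size - 1) // tile_size


def run_linear(batch_size: int, output_channels: int, nr: int = 8) -> int:
    """Return how many tiles of work would be scheduled (hypothetical helper)."""
    # Bail out before any tiling parameters are computed: an empty batch
    # has no work, and its size must not be used as a tile size.
    if batch_size == 0:
        return 0
    # Illustrative assumption: the batch dimension is tiled by its own size.
    batch_tiles = tile_count(batch_size, batch_size)
    channel_tiles = tile_count(output_channels, nr)
    return batch_tiles * channel_tiles
```

Placing the check before the tiling call keeps the non-empty path unchanged, which is presumably why the fix bails out there rather than changing the tiling helper.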

Test Plan: TestQuantizedOps.test_empty_batch

Differential Revision: D22264414

Pulled By: dreiss

fbshipit-source-id: 9446d5231ff65ef19003686f3989e62f04cf18c9

* Fix batch size zero for QNNPACK linear_dynamic (#40588)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40588

Two bugs were preventing this from working. One was a divide-by-zero when multithreading was enabled, fixed similarly to the fix for static quantized linear in the previous commit. The other was the computation of min and max to determine qparams. FBGEMM uses [0,0] for [min,max] of empty input, so this change does the same.
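
As a rough illustration of the second fix, the Python sketch below falls back to a [0, 0] range for empty input before deriving quantization parameters. The function names, the 8-bit range and the handling of a zero-width range are assumptions made for this example; they are not taken from the QNNPACK or FBGEMM sources.

```python
from typing import Sequence, Tuple


def observed_range(values: Sequence[float]) -> Tuple[float, float]:
    """Return (min, max) of values, using (0.0, 0.0) for empty input."""
    if len(values) == 0:
        # min()/max() of an empty sequence raise ValueError in Python;
        # falling back to [0, 0] follows the convention described above.
        return 0.0, 0.0
    return min(values), max(values)


def affine_qparams(lo: float, hi: float, qmin: int = 0, qmax: int = 255) -> Tuple[float, int]:
    """Derive (scale, zero_point) from a range; a simplified, assumed formula."""
    # Extend the range to include zero so that zero is exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / (qmax - qmin)
    if scale == 0.0:
        # Degenerate range (for example the empty-input case): pick a
        # harmless scale instead of dividing by zero below.
        return 1.0, qmin
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))
```

With this shape, `affine_qparams(*observed_range([]))` returns usable parameters instead of raising on an empty batch.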

Test Plan: Added a unit test.

Differential Revision: D22264415

Pulled By: dreiss

fbshipit-source-id: 6ca9cf48107dd998ef4834e5540279a8826bc754

Co-authored-by: David Reiss <dreiss@fb.com>

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39587

Adds an example of linking directly to the pytorch_jni library from the aar, and updates android/README.md with a tutorial on how to do it.

Adds a `nativeBuild` dimension to `test_app` that uses direct aar dependencies, because headers packaging has not landed yet. `nativeBuild` is excluded from the default build for CI.

Additional change to `scripts/build_pytorch_android.sh`:

The clean task is skipped because externalNativeBuild in Android Gradle plugin 3.3.2 has problems with it when abiFilters are specified.

It will be restored in follow-up diffs that upgrade Gradle and the Android Gradle plugin.

Test Plan: Imported from OSS

Differential Revision: D22118945

Pulled By: IvanKobzarev

fbshipit-source-id: 31c54b49b1f262cbe5f540461d3406f74851db6c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39588

Before this diff we linked with c++_static.

Users will dynamically link to libpytorch_jni.so and will have at least one more shared library of their own, which probably uses the STL.

An app must not contain more than one STL (https://developer.android.com/ndk/guides/cpp-support#one_stl_per_app).

To keep a single STL per app, this changes ANDROID_STL to c++_shared, which adds libc++_shared.so to the packaging.

Test Plan: Imported from OSS

Differential Revision: D22118031

Pulled By: IvanKobzarev

fbshipit-source-id: ea1e5085ae207a2f42d1fa9f6ab8ed0a21768e96

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39868

### Summary

Why disable NNPACK on iOS:

- To stay consistent with our internal version.

- It is currently blocking some external users because it lacks support for the x86 architecture:

    - https://github.com/pytorch/pytorch/issues/32040

    - https://discuss.pytorch.org/t/undefined-symbols-for-architecture-x86-64-for-libtorch-in-swift-unit-test/84552/6

- NNPACK uses fast convolution algorithms (FFT, Winograd) to reduce the computational complexity of convolutions with large kernel sizes. The algorithmic speedup is limited to specific conv params that are unlikely to appear in mobile networks.

- XNNPACK, now enabled, performs much better than NNPACK on depthwise-separable convolutions, which most mobile computer vision networks use.

### Test Plan

- CI Checks

Test Plan: Imported from OSS

Differential Revision: D22087365

Pulled By: xta0

fbshipit-source-id: 89a959b0736c1f8703eff10723a8fbd02357fd4a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39999

Cleaned up the Android build scripts and consolidated common functions into common.sh. Also made a few minor fixes:

- Trust build_android.sh to correctly reuse an existing `build_android_$abi` directory;

- Clean up `pytorch_android/src/main/jniLibs/` to remove broken symbolic links in case the custom abi list has changed since the last build.

Test Plan: Imported from OSS

Differential Revision: D22036926

Pulled By: ljk53

fbshipit-source-id: e93915ee4f195111b6171cdabc667fa0135d5195

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39188

Extracts the Vulkan_LIBS and Vulkan_INCLUDES setup from `cmake/Dependencies.cmake` into `cmake/VulkanDependencies.cmake` and reuses it in android/pytorch_android/CMakeLists.txt.

Adds an env variable, `USE_VULKAN`, that controls building with Vulkan for `scripts/build_android.sh` and `scripts/build_pytorch_android.sh`.

We do not use the Vulkan backend in pytorch_android, but with this build option we can track how the android aar changes when `USE_VULKAN` is added.

Currently the change is 88Kb.

Test Plan: Imported from OSS

Differential Revision: D21770892

Pulled By: IvanKobzarev

fbshipit-source-id: a39433505fdcf43d3b524e0fe08062d5ebe0d872

Summary:

* Does a basic upload of release candidates to an extra folder within our S3 bucket.

* Refactors AWS promotion to allow for easier development of restoration of backups

Backup restoration usage:

```

RESTORE_FROM=v1.6.0-rc3 restore-backup.sh

```

Requires:

* AWS credentials to upload / download stuff

* Anaconda credentials to upload

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38690

Differential Revision: D21691033

Pulled By: seemethere

fbshipit-source-id: 31118814db1ca701c55a3cb0bc32caa1e77a833d

Summary:

Files that were named the same within the anaconda repository, e.g. pytorch_1.5.0-cpu.bz2, were found to be clobbering each other, especially amongst different platforms.

This led to similarly named packages for different platforms not getting promoted.

This also adds "--skip" to our anaconda upload so that we don't end up overwriting our releases in case this script gets run twice.

Also, conda search errors out if it doesn't find anything for the platform being searched, so we should just continue if nothing is found: we want to use this script for all of the packages we support, and they do not all release packages for the same platforms (torchtext, for example, only has "noarch").

This should also probably be back-ported to the `release/1.5` branch since this changeset was used to release `v1.5.0`

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37089

Differential Revision: D21184768

Pulled By: seemethere

fbshipit-source-id: dbe12d74df593b57405b178ddb2375691e128a49

Summary:

Adds a new promotion pipeline for both our wheel packages hosted on S3 and our conda packages hosted on anaconda.

Promotion is only run on tags that match the following regex:

/v[0-9]+(\.[0-9]+)*/

Example:

v1.5.0
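
To see which tags the pattern accepts, here is a small Python sketch. The helper name `is_release_tag` is hypothetical, and the sketch assumes the filter has to match the whole tag, which is why it uses `fullmatch`; the CI configuration itself is not reproduced here.

```python
import re

# Pattern copied from the text above, without the surrounding slashes.
RELEASE_TAG = re.compile(r"v[0-9]+(\.[0-9]+)*")


def is_release_tag(tag: str) -> bool:
    """True when the whole tag matches the release pattern."""
    # Assumption: the filter must match the entire tag, so fullmatch is used.
    return RELEASE_TAG.fullmatch(tag) is not None
```

Under that assumption, `v1.5.0` is accepted while a release-candidate tag such as `v1.6.0-rc3` is not.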

The promotion pipeline also only runs after a manual approval from someone within the CircleCI security context "org-member".

> NOTE: This promotion pipeline does not cover promotion of packages that are published to PyPI. This is an intentional choice, as those packages cannot be reverted after they have been published.

TODO: Write a proper testing pipeline for this

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34993

Differential Revision: D20539497

Pulled By: seemethere

fbshipit-source-id: 104772d3c3898d77a24ef9bf25f7dbd2496613df

Summary:

How this actually works:

1. Gets a list of URLs from anaconda for pkgs to download, most likely from pytorch-test

2. Download all of those packages locally in a temp directory

3. Upload all of those packages, with a dry run upload by default
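
The three steps above can be sketched in Python as follows. The actual script is a shell script, so this is only an outline of the control flow: `promote`, `download` and `upload` are hypothetical names, and the download and upload steps are passed in as callables so that the sketch does not pretend to know the real anaconda commands.

```python
import tempfile
from pathlib import Path
from typing import Callable, Iterable, List


def promote(
    urls: Iterable[str],
    download: Callable[[str, Path], Path],
    upload: Callable[[Path], None],
    dry_run: bool = True,
) -> List[str]:
    """Download every package, then upload it unless dry_run is set."""
    promoted: List[str] = []
    # Step 2: everything is downloaded into a temporary directory first.
    with tempfile.TemporaryDirectory() as tmp:
        local_files = [download(url, Path(tmp)) for url in urls]
        # Step 3: upload, defaulting to a dry run that only reports.
        for pkg in local_files:
            if dry_run:
                print(f"DRY RUN: would upload {pkg.name}")
            else:
                upload(pkg)
            promoted.append(pkg.name)
    return promoted
```

Defaulting `dry_run` to `True` means a real upload has to be requested explicitly, matching the "dry run upload by default" behavior in step 3.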

This, along with https://github.com/pytorch/pytorch/issues/34500 basically completes the scripting work for the eventual promotion pipeline.

Currently testing with:

```

TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 PYTORCH_CONDA_FROM=pytorch scripts/release/promote/conda_to_conda.sh

```

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34659

Differential Revision: D20432687

Pulled By: seemethere

fbshipit-source-id: c2a99f6cbc6a7448e83e666cde11d6875aeb878e

Summary:

Relies on the scripts for promotion from s3 to s3 having already run.

A continuation of the work done in https://github.com/pytorch/pytorch/issues/34274

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34500

Test Plan: yeah_sandcastle

Differential Revision: D20389101

Pulled By: seemethere

fbshipit-source-id: 5e5b554cff964630c5414d48be35f14ba6894021

Summary:

**Summary**

This commit adds `tools/clang_format_new.py`, which downloads a platform-appropriate clang-format binary to a `.gitignored` location, verifies the binary by comparing its SHA1 hash to a reference hash (also included in this commit), and runs it on all files matching a specific regex in a list of whitelisted subdirectories of pytorch.

This script will eventually replace `tools/clang_format.py`.
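
The hash verification step can be sketched with the standard library alone. This is a minimal illustration, not the code from the script: `sha1_of_file` and `verify_binary` are hypothetical names, and how the reference hash is stored in the repository is not shown.

```python
import hashlib
from pathlib import Path


def sha1_of_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the hex SHA1 digest of a file, read in chunks."""
    digest = hashlib.sha1()
    with path.open("rb") as f:
        # Read fixed-size chunks until read() returns an empty bytes object.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_binary(path: Path, reference_hash: str) -> bool:
    """Compare the file's SHA1 with a reference hash (case-insensitive)."""
    return sha1_of_file(path) == reference_hash.strip().lower()
```

Reading in chunks keeps memory use flat for a large binary; a caller would refuse to run the downloaded binary when `verify_binary` returns `False`.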

**Testing**

Ran the script.

*No Args*

```

pytorch > ./tools/clang_format.py

Downloading clang-format to /Users/<user>/Desktop/pytorch/.clang-format-bin

0% |################################################################| 100%

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

> echo $?

0

> git status

<bunch of files>

```

`--diff` *mode*

```

> ./tools/clang_format.py --diff

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

Some files are not formatted correctly

> echo $?

1

<format files using the script>

> ./tools/clang_format.py --diff

Using clang-format located at /Users/<user>/Desktop/pytorch/.clang-format-bin/clang-format

All files are formatted correctly

> echo $?

0

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34566

Differential Revision: D20431290

Pulled By: SplitInfinity

fbshipit-source-id: 3966f769cfb923e58ead9376d85e97127415bdc6

Summary:

If SELECTED_OP_LIST is specified as a relative path in command line, CMake build will fail.
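
One common way to make such a path robust is to resolve it against the directory the command was invoked from before handing it to the build. The Python sketch below illustrates that idea only; it assumes the failure comes from the relative path being interpreted from a different working directory, and `resolve_op_list` is a hypothetical helper, not part of the build scripts.

```python
import os


def resolve_op_list(path: str, invocation_dir: str) -> str:
    """Return an absolute path for an op list given relative to invocation_dir."""
    if os.path.isabs(path):
        # Already absolute: only normalize it.
        return os.path.normpath(path)
    # Relative: anchor it at the directory the user ran the command from.
    return os.path.normpath(os.path.join(invocation_dir, path))
```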

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33942

Differential Revision: D20392797

Pulled By: ljk53

fbshipit-source-id: dffeebc48050970e286cf263bdde8b26d8fe4bce

Summary:

When a system has the ROCm dev tools installed, `scripts/build_mobile.sh` tried to use them.

This PR stops the libtorch mobile build from looking up the unused ROCm library.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34478

Differential Revision: D20388147

Pulled By: ljk53

fbshipit-source-id: b512c38fa2d3cda9ac20fe47bcd67ad87c848857

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34410

### Summary

Currently, the iOS jobs are no longer being run on PRs. This is because all iOS jobs specify `org-member` as a context, which used to include all pytorch members. It seems this rule has changed recently: it turns out that only users from the admin group or builder group have access to the context values. https://circleci.com/gh/organizations/pytorch/settings#contexts/2b885fc9-ef3a-4b86-8f5a-2e6e22bd0cfe

This PR removes `org-member` from the iOS simulator build, which doesn't require code signing. The arm64 builds will only be run on master, no longer on PRs.

### Test plan

- The iOS simulator job should be able to appear in the PR workflow

Test Plan: Imported from OSS

Differential Revision: D20347270

Pulled By: xta0

fbshipit-source-id: 23f37d40160c237dc280e0e82f879c1d601f72ac

Summary:

Currently testing against the older release `1.4.0` with:

```

PYTORCH_S3_FROM=nightly TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 scripts/release/promote/libtorch_to_s3.sh

PYTORCH_S3_FROM=nightly TEST_WITHOUT_GIT_TAG=1 TEST_PYTORCH_PROMOTE_VERSION=1.4.0 scripts/release/promote/wheel_to_s3.sh

```

These scripts can also be used for `torchvision`, which may make the release process better there as well.

Later on this should be made into a reusable module that can be downloaded from anywhere and used amongst all pytorch repositories.

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34274

Test Plan: sandcastle_will_deliver

Differential Revision: D20294419

Pulled By: seemethere

fbshipit-source-id: c8c31b5c42af5096f09275166ac43d45a459d25c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34203

Currently cmake and mobile build scripts still build libcaffe2 by

default. To build pytorch mobile users have to set environment variable

BUILD_PYTORCH_MOBILE=1 or set cmake option BUILD_CAFFE2_MOBILE=OFF.

PyTorch mobile has been released for a while. It's about time to change

CMake and build scripts to build libtorch by default.

Changed caffe2 CI job to build libcaffe2 by setting BUILD_CAFFE2_MOBILE=1

environment variable. Only found android CI for libcaffe2 - do we ever

have iOS CI for libcaffe2?

Test Plan: Imported from OSS

Differential Revision: D20267274

Pulled By: ljk53

fbshipit-source-id: 9d997032a599c874d62fbcfc4f5d4fbf8323a12e

Summary:

Among all ONNX tests, ONNXRuntime tests are taking the most time on CI (almost 60%).

This is because we are testing larger models (mainly torchvision RCNNs) for multiple onnx opsets.

I decided to divide tests between two jobs for older/newer opsets. This is now reducing the test time from 2h to around 1h10mins.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33242

Reviewed By: hl475

Differential Revision: D19866498

Pulled By: houseroad

fbshipit-source-id: 446c1fe659e85f5aef30efc5c4549144fcb5778c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34187

Noticed that a recent PR broke Android/iOS CI but didn't break mobile

build with host toolchain. Turns out one mobile related flag was not

set on PYTORCH_BUILD_MOBILE code path:

```

"set(INTERN_DISABLE_MOBILE_INTERP ON)"

```

First, move the INTERN_DISABLE_MOBILE_INTERP macro below, to stay with

other "mobile + pytorch" options - it's not relevant to "mobile + caffe2"

so doesn't need to be set as common "mobile" option;

Second, rename the PYTORCH_BUILD_MOBILE env-variable to BUILD_PYTORCH_MOBILE_WITH_HOST_TOOLCHAIN. It's a bit verbose but makes clearer what it does: there is another env-variable, "BUILD_PYTORCH_MOBILE", used in scripts/build_android.sh and build_ios.sh, which toggles between "mobile + pytorch" and "mobile + caffe2";

Third, combine BUILD_PYTORCH_MOBILE_WITH_HOST_TOOLCHAIN with ANDROID/IOS

to avoid missing common mobile options again in future.

Test Plan: Imported from OSS

Differential Revision: D20251864

Pulled By: ljk53

fbshipit-source-id: dc90cc87ffd4d0bf8a78ae960c4ce33a8bb9e912

Summary:

**Summary**

This commit adds a script that fetches a platform-appropriate `clang-format` binary

from S3 for use during PyTorch development. The goal is for everyone to use the exact

same `clang-format` binary so that there are no formatting conflicts.

**Testing**

Ran the script.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33644

Differential Revision: D20076598

Pulled By: SplitInfinity

fbshipit-source-id: cd837076fd30e9c7a8280665c0d652a33b559047

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33722

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding native implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor one-time operations out of the innermost forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Test Plan:

Build: CI

Functionality: Not exposed

Reviewed By: dreiss

Differential Revision: D20069796

Pulled By: AshkanAliabadi

fbshipit-source-id: d46c1c91d4bea91979ea5bd46971ced5417d309c

Summary:

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding **native** implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor one-time operations out of the innermost forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Reviewed By: dreiss

Differential Revision: D19521853

Pulled By: AshkanAliabadi

fbshipit-source-id: 99a1fab31d0ece64961df074003bb852c36acaaa

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33318

### Summary

Recently, we had a [discussion](https://discuss.pytorch.org/t/libtorch-on-watchos/69073/14) in the forum about watchOS. This PR adds support for building watchOS libraries.

### Test Plan

- `BUILD_PYTORCH_MOBILE=1 IOS_PLATFORM=WATCHOS ./scripts/build_ios.sh`

Test Plan: Imported from OSS

Differential Revision: D19896534

Pulled By: xta0

fbshipit-source-id: 7b9286475e895d9fefd998246e7090ac92c4c9b6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30315

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

This is a reland of https://github.com/pytorch/pytorch/pull/29731 but

I've extracted all of the prep work into separate PRs which can be

landed before this one.

Some things of note:

* torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

* The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

* In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This led to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/libprotobuf.a(arena.cc.o) is referenced by DSO"

* A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly

* I had to apply caffe2_interface_library to torch_cpu/torch_cuda so that they get whole-archive linked into torch when you statically link. And I had to do this in an *exported* fashion because torch needs to depend on torch_cpu_library. In the end I exported everything and removed the redefinition in the Caffe2Config.cmake. I am not too sure why the old code did it this way in the first place, but switching does not seem to have broken anything.

* There are still some uses of `__HIP_PLATFORM_HCC__` in `torch_cpu` code, so I had to apply it to that library too (UGH). This manifests as a failure when trying to run the CUDA fuser. This doesn't really matter substantively right now because we still in-place HIPify, but it would be good to fix eventually. This was a bit difficult to debug because of an unrelated HIP bug, see https://github.com/ROCm-Developer-Tools/HIP/issues/1706

Fixes #27215 (as our libraries are smaller), and executes on part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18790941

Pulled By: ezyang

fbshipit-source-id: 01296f6089d3de5e8365251b490c51e694f2d6c7

Summary:

Move the shell script into this separate PR to make the original PR

smaller and less scary.

Test Plan:

- With stacked PRs:

1. analyze test project and compare with expected results:

```

ANALYZE_TEST=1 CHECK_RESULT=1 tools/code_analyzer/build.sh

```

2. analyze LibTorch:

```

ANALYZE_TORCH=1 tools/code_analyzer/build.sh

```

Differential Revision: D18474749

Pulled By: ljk53

fbshipit-source-id: 55c5cae3636cf2b1c4928fd2dc615d01f287076a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29731

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

Some subtleties about the patch:

- There were a few functions that crossed CPU-CUDA boundary without API macros. I just added them, easy enough. An inverse situation was aten/src/THC/THCTensorRandom.cu where we weren't supposed to put API macros directly in a cpp file.

- DispatchStub wasn't getting all of its symbols related to static members on DispatchStub exported properly. I tried a few fixes but in the end I just moved everyone off using DispatchStub to dispatch CUDA/HIP (so they just use normal dispatch for those cases.) Additionally, there were some mistakes where people incorrectly were failing to actually import the declaration of the dispatch stub, so added includes for those cases.

- torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

- The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

- In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This led to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/libprotobuf.a(arena.cc.o) is referenced by DSO"

- A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly. This situation also happens with custom C++ extensions.

- There's a ROCm compiler bug where extern "C" on functions is not respected. There's a little workaround to handle this.

- Because I was too lazy to check if HIPify was converting TORCH_CUDA_API into TORCH_HIP_API, I just made it so HIP build also triggers the TORCH_CUDA_API macro. Eventually, we should translate and keep the nature of TORCH_CUDA_API constant in all cases.

Fixes #27215 (as our libraries are smaller), and executes on part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18632773

Pulled By: ezyang

fbshipit-source-id: ea717c81e0d7554ede1dc404108603455a81da82

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30144

Creates a script to produce a libtorch that only contains the ops needed by specific models. Developers can use this workflow to further optimize mobile build size.

We need to keep a dummy stub for unused (stripped) ops because some JIT-side logic requires certain function schemas to exist in the JIT op registry.

Test Steps:

1. Build the "dump_operator_names" binary and use it to dump the root ops needed by a specific model:

```

build/bin/dump_operator_names --model=mobilenetv2.pk --output=mobilenetv2.yaml

```

2. The MobileNetV2 model should use the following ops:

```

- aten::t

- aten::dropout

- aten::mean.dim

- aten::add.Tensor

- prim::ListConstruct

- aten::addmm

- aten::_convolution

- aten::batch_norm

- aten::hardtanh_

- aten::mm

```

NOTE that for some reason it outputs "aten::addmm" but actually uses "aten::mm". You need to fix it manually for now.

3. Run custom build script locally (use Android as an example):

```

SELECTED_OP_LIST=mobilenetv2.yaml scripts/build_pytorch_android.sh armeabi-v7a

```

4. Check out the demo app that uses the locally built library instead of downloading from the jcenter repo:

```

git clone --single-branch --branch custom_build git@github.com:ljk53/android-demo-app.git

```

5. Copy locally built libraries to demo app folder:

```

find ${HOME}/src/pytorch/android -name '*.aar' -exec cp {} ${HOME}/src/android-demo-app/HelloWorldApp/app/libs/ \;

```

6. Build demo app with locally built libtorch:

```

cd ${HOME}/src/android-demo-app/HelloWorldApp

./gradlew clean && ./gradlew assembleDebug

```

7. Install and run the demo app.

In-APK arm-v7 libpytorch_jni.so build size reduced from 5.5M to 2.9M.

Test Plan: Imported from OSS

Differential Revision: D18612127

Pulled By: ljk53

fbshipit-source-id: fa8d5e1d3259143c7346abd1c862773be8c7e29a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29715

Previously, static dispatch was hard-coded to be enabled when building the mobile library. Since we are exploring approaches to deprecate static dispatch, we should make it optional. This PR moves the setting from cmake to the bash build scripts, where it can be overridden.

Test Plan: - verified it's still using static dispatch when building with these scripts.

Differential Revision: D18474640

Pulled By: ljk53

fbshipit-source-id: 7591acc22009bfba36302e3b2a330b1428d8e3f1

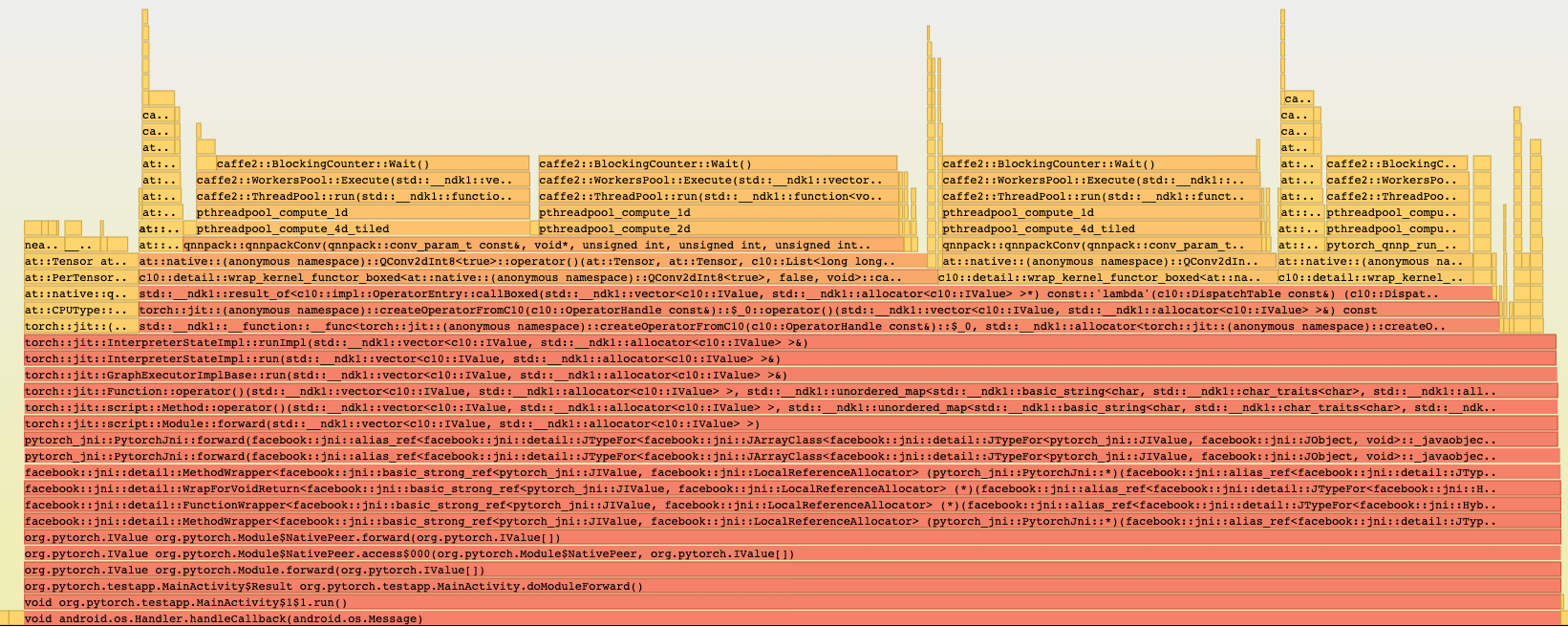

Summary:

Reason:

To have a one-step build for a test Android application, based on the current code state, that is ready for profiling with simpleperf, systrace, etc., so that performance can be profiled inside the application.

## Parameters to control debug symbols stripping

Introduces a parameter in the root CMakeLists, `ANDROID_DEBUG_SYMBOLS`, that makes it possible not to strip symbols for pytorch (the linker flag `-s` is not added). It is checked in `scripts/build_android.sh`.

On the gradle side stripping happens by default, and to prevent it we have to specify

```

android {
    packagingOptions {
        doNotStrip "**/*.so"
    }
}

```

which is now controlled by the new gradle property `nativeLibsDoNotStrip`

## Test_App

`android/test_app` - android app with one MainActivity that runs inference in a loop

`android/build_test_app.sh` - script that builds libtorch with debug symbols for the specified android abis and adds `NDK_DEBUG=1` and `-PnativeLibsDoNotStrip=true` to keep all debug symbols for profiling.

The script assembles all debug flavors:

```

└─ $ find . -type f -name *apk

./test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

./test_app/app/build/outputs/apk/resnet/debug/test_app-resnet-debug.apk

```

## Different build configurations

The module used for inference can be set in `android/test_app/app/build.gradle` as a BuildConfig parameter:

```

productFlavors {
    mobilenetQuant {
        dimension "model"
        applicationIdSuffix ".mobilenetQuant"
        buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_MOBILENET_QUANT'))
        addManifestPlaceholders([APP_NAME: "PyMobileNetQuant"])
        buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-mobilenet\"")
    }
    resnet {
        dimension "model"
        applicationIdSuffix ".resnet"
        buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_RESNET18'))
        addManifestPlaceholders([APP_NAME: "PyResnet"])
        buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-resnet\"")
    }
}

```

This way we can install several apps on the same device for comparison. `applicationIdSuffix` gives each flavor a separate package (e.g. `org.pytorch.testapp.mobilenetQuant`), while the application name and logcat tag differ through a manifest placeholder and another BuildConfig parameter:

```
$ adb shell pm list packages | grep pytorch
package:org.pytorch.testapp.mobilenetQuant
package:org.pytorch.testapp.resnet
```

In the future we can add further BuildConfig parameters, e.g. single/multi-threaded execution and other configuration for profiling.

At the moment there are two flavors, one for resnet18 and one for quantized MobileNet, which can be installed on a connected device:

```

cd android

```

```

gradle test_app:installMobilenetQuantDebug

```

```

gradle test_app:installResnetDebug

```
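The flavor names map onto both the APK output paths and the Gradle install tasks shown above. A small sketch of that naming convention, inferred only from the two flavors listed here; `apk_path` and `install_task` are hypothetical helper names:

```shell
#!/bin/sh
# Sketch only: apk_path and install_task are hypothetical helper names.

# Path (relative to android/) of the debug APK for a flavor.
apk_path() {
  printf 'test_app/app/build/outputs/apk/%s/debug/test_app-%s-debug.apk\n' "$1" "$1"
}

# Gradle install task for a flavor: install<Flavor>Debug, with the first
# letter of the flavor name upper-cased.
install_task() {
  first=$(printf '%s' "$1" | cut -c1 | tr '[:lower:]' '[:upper:]')
  rest=$(printf '%s' "$1" | cut -c2-)
  printf 'test_app:install%s%sDebug\n' "$first" "$rest"
}

# List the task and APK for each flavor currently defined.
for flavor in mobilenetQuant resnet; do
  echo "$(install_task "$flavor") -> $(apk_path "$flavor")"
done
```

A new flavor added to `productFlavors` would presumably follow the same pattern, which keeps scripted installs simple.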

## Testing:

```
cd android
sh build_test_app.sh
adb install -r test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk
```

```
cd $ANDROID_NDK
python simpleperf/run_simpleperf_on_device.py record --app org.pytorch.testapp.mobilenetQuant -g --duration 10 -o /data/local/tmp/perf.data
adb pull /data/local/tmp/perf.data
python simpleperf/report_html.py
```

The simpleperf report has all symbols.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28406

Differential Revision: D18386622

Pulled By: IvanKobzarev

fbshipit-source-id: 3a751192bbc4bc3c6d7f126b0b55086b4d586e7a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28405

### Summary

As discussed with AshkanAliabadi and ljk53, the iOS TestApp will share the same benchmark code with Android's speed_benchmark_torch.cpp. This PR is the first part which contains the Objective-C++ code.

The second PR will include the scripts to setup and run the benchmark project. The third PR will include scripts that can automate the whole "build - test - install" process.

There are many ways to run the benchmark project. The easiest is to use CocoaPods: simply run `pod install`. However, that pulls the 1.3 binary, which is not what we want, although we can still use this approach to test the benchmark code. The second PR will contain scripts to run custom builds that we can tweak.

### Test Plan

- Don't break any existing CI jobs (except for those flaky ones)

Test Plan: Imported from OSS

Differential Revision: D18064187

Pulled By: xta0

fbshipit-source-id: 4cfbb83c045803d8b24bf6d2c110a55871d22962

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27593

## Summary

Since the nightly jobs lack a testing phase, we don't really have a way to test the binary before uploading it to AWS. To make the process more robust, we need a way to verify the binary.

Fortunately, the Xcode toolchain offers a way to build an app without the Xcode app: the [xcodebuild](https://developer.apple.com/library/archive/technotes/tn2339/_index.html) command. Now we can link our binary to a testing app and run `xcodebuild` to see if there are any linking errors. The PRs below have already done some of the preparation work:

- [#26261](https://github.com/pytorch/pytorch/pull/26261)

- [#26632](https://github.com/pytorch/pytorch/pull/26632)

The challenge comes when testing the arm64 build, as we don't have a way to code-sign our TestApp. Circle CI has a [tutorial](https://circleci.com/docs/2.0/ios-codesigning/), but it is too complicated to implement. Instead, I figured out an easier way to do it:

1. Disable automatic code signing in Xcode

2. Export the encoded developer certificate and provisioning profile to org-context in Circle CI (done)

3. Install the developer certificate into the keychain on CI machines via Fastlane.

4. Add the testing code to PR jobs and verify the result.

5. Add the testing code to nightly jobs and verify the result.
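Steps 2 and 3 rely on an encoded certificate stored in the CI context. A minimal sketch of the decoding half, assuming the value is base64-encoded (the description only says "encoded") and exposed as an environment variable. `decode_secret_to_file` and `IOS_CERT_B64` are hypothetical names, and the Fastlane installation step is not shown:

```shell
#!/bin/sh
# Sketch only: decode_secret_to_file and IOS_CERT_B64 are hypothetical names,
# and base64 encoding is an assumption.
# $1: base64-encoded text, $2: output file path
decode_secret_to_file() {
  printf '%s' "$1" | base64 --decode > "$2"
}

# Usage (commented out because IOS_CERT_B64 would only exist on CI):
# decode_secret_to_file "$IOS_CERT_B64" "$HOME/cert.p12"
```

Keeping the decoded file outside the repository checkout avoids accidentally committing or archiving it.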

## Test Plan

- Both PR jobs and nightly jobs can finish successfully.

- `xcodebuild` can finish successfully

Test Plan: Imported from OSS

Differential Revision: D17848814

Pulled By: xta0

fbshipit-source-id: 48353f001c38e61eed13a43943253cae30d8831a

Summary:

1. scripts/build_android_libtorch_and_pytorch_android.sh

- Builds libtorch for android_abis (by default for all 4: x86, x86_64, armeabi-v7a, arm64-v8a), but a custom list can be specified as the first parameter, e.g. "x86"

- Creates symbolic links inside android/pytorch_android to results of the previous builds:

`pytorch_android/src/main/jniLibs/${abi}` -> `build_android/install/lib`

`pytorch_android/src/main/cpp/libtorch_include/${abi}` -> `build_android/install/include`

- Runs `gradle assembleRelease` to build the aar files

A proxy can be specified inside the script (for devservers)

2. android/run_tests.sh

Runs the pytorch_android tests. The script contains instructions on how to set up and run an Android emulator in headless, no-audio mode so that it can run on a devserver

A proxy can be specified inside the script (for devservers)
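The ABI selection and symlink steps of the first script can be sketched as follows. This is an illustration under assumptions, not the script itself: `resolve_abis` and `link_abi_outputs` are hypothetical names, and the comma-separated format for a custom ABI list is assumed.

```shell
#!/bin/sh
# Sketch only: resolve_abis and link_abi_outputs are hypothetical names, and
# the comma-separated list format is an assumption.

# Prints the ABIs to build, one per line: the custom list when one is given,
# otherwise all four default ABIs.
resolve_abis() {
  printf '%s\n' "${1:-x86,x86_64,armeabi-v7a,arm64-v8a}" | tr ',' '\n'
}

# Links one ABI's install output into the pytorch_android source tree.
# $1: pytorch_android directory
# $2: install directory (absolute path, so the links resolve from anywhere)
# $3: ABI name
link_abi_outputs() {
  mkdir -p "$1/src/main/jniLibs" "$1/src/main/cpp/libtorch_include"
  ln -sfn "$2/lib" "$1/src/main/jniLibs/$3"
  ln -sfn "$2/include" "$1/src/main/cpp/libtorch_include/$3"
}

# Usage (commented out because it modifies the working tree):
# for abi in $(resolve_abis "$1"); do
#   link_abi_outputs "$PWD/android/pytorch_android" "$PWD/build_android/install" "$abi"
# done
```

Using `ln -sfn` replaces a stale link from a previous build instead of creating a nested link inside it.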

# Test plan

Scenario to build x86 libtorch and android aars with it and run tests:

```
cd pytorch
sh scripts/build_android_libtorch_and_pytorch_android.sh x86
sh android/run_tests.sh
```

Tested on my devserver - build works, tests passed

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26833

Differential Revision: D17673972

Pulled By: IvanKobzarev

fbshipit-source-id: 8cb7c3d131781854589de6428a7557c1ba7471e9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26632

### Summary

This script builds the TestApp (located in the `ios` folder) to generate an iOS x86 executable via the `xcodebuild` toolchain on macOS. The goal is to provide a quick way to test the generated static libraries for linking errors. The script can also be used by the iOS CI jobs. To run the script, see the usage below:

```shell
$ ruby scripts/xcode_ios_x86_build.rb --help
    -i, --install      path to the cmake install folder
    -x, --xcodeproj    path to the XCode project file
```

### Note

The script mainly deals with the iOS simulator build. For the arm64 build, I haven't found a way to disable code signing using the `xcodebuild` toolchain (Xcode 10). If anyone knows how to do that, please feel free to leave a comment below.

### Test Plan

- The script can build the TestApp and link the generated static libraries successfully

- Don't break any CI job

Test Plan: Imported from OSS

Differential Revision: D17530990

Pulled By: xta0

fbshipit-source-id: f50bef7127ff8c11e884c99889cecff82617212b