Mirror of https://github.com/zebrajr/pytorch.git, synced 2025-12-06 12:20:52 +01:00

Add AVX512 support in ATen & remove AVX support (#61903)

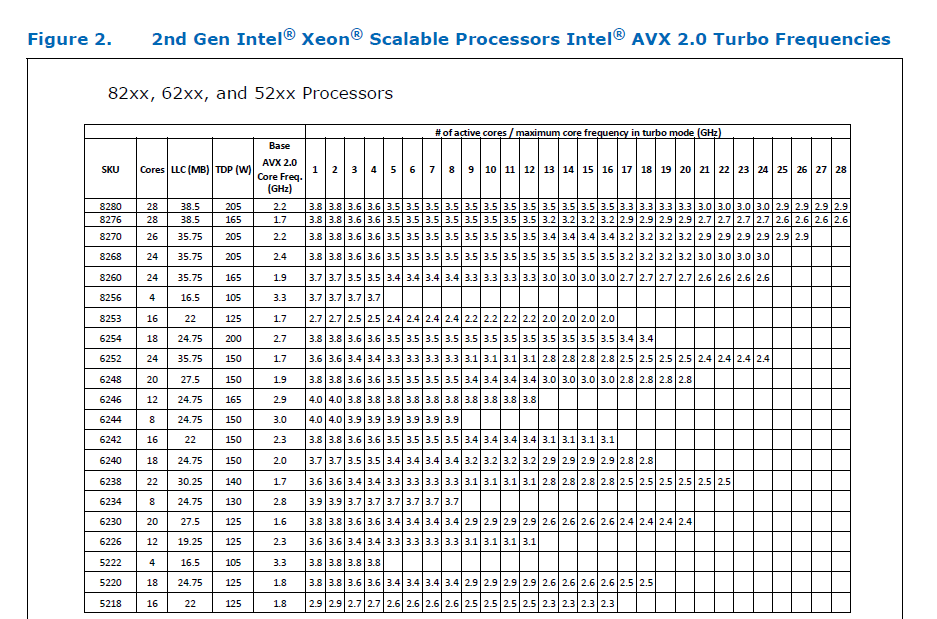

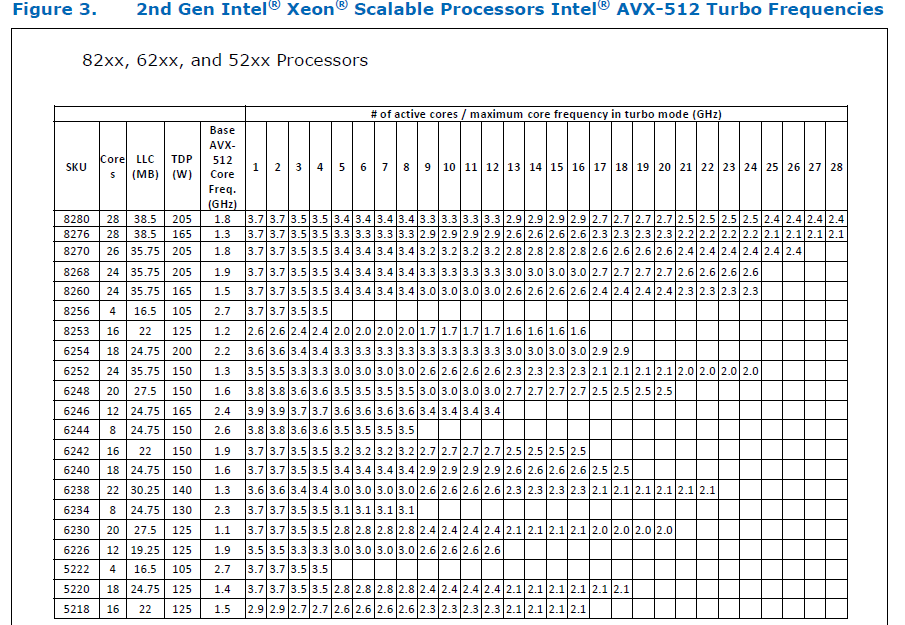

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with and without CUDA, to check whether CPU throttling causes issues with GPUs), and make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, only with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels: DEFAULT, AVX2 and AVX512. Building with the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can then use 32 ymm registers for AVX2 instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.
2. `nansum` is not using the AVX512 kernel right now, as it has poorer accuracy for Float16 than AVX2 or DEFAULT, whose accuracies aren't very good either (#59415). It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.
3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, `test_compare_model_outputs_functional_static` fails. If that test is skipped, `test_compare_model_outputs_conv_static` fails, and if both are skipped, a third one fails. These are hard to debug without access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.
4. One test is currently being skipped: [`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098). It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. `reduce_range` should be set to `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.
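The three kernel flavours in item 1 are chosen at run time. The sketch below pictures that choice; every name in it (`CpuCapability`, `CpuFeatures`, `choose_capability`) is hypothetical and it is not ATen's dispatch code. It assumes the override is read from `ATEN_CPU_CAPABILITY` using the lowercase values `default` and `avx2` that the CI script in this commit exports, plus `avx512` by analogy. Clamping an override down to what the hardware supports is a design choice of the sketch, not something the PR describes.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

// Hypothetical mirror of the three kernel flavours built by this PR.
enum class CpuCapability { Default = 0, AVX2 = 1, AVX512 = 2 };

// Hypothetical result of a hardware probe (a real build would ask cpuinfo).
struct CpuFeatures {
  bool avx2_and_fma;
  bool avx512;
};

// Highest kernel flavour the hardware can execute.
inline CpuCapability max_supported(const CpuFeatures& f) {
  if (f.avx512) return CpuCapability::AVX512;
  if (f.avx2_and_fma) return CpuCapability::AVX2;
  return CpuCapability::Default;
}

// Pick a flavour from an optional override string (e.g. the value of
// ATEN_CPU_CAPABILITY). Unknown values are ignored, and this sketch never
// returns a flavour above what the hardware supports.
inline CpuCapability choose_capability(const char* requested, const CpuFeatures& f) {
  const CpuCapability hw = max_supported(f);
  CpuCapability want = hw;
  if (requested != nullptr) {
    if (std::strcmp(requested, "default") == 0) want = CpuCapability::Default;
    else if (std::strcmp(requested, "avx2") == 0) want = CpuCapability::AVX2;
    else if (std::strcmp(requested, "avx512") == 0) want = CpuCapability::AVX512;
  }
  return static_cast<int>(want) <= static_cast<int>(hw) ? want : hw;
}

// Reads the override from the environment.
inline CpuCapability choose_capability_from_env(const CpuFeatures& f) {
  return choose_capability(std::getenv("ATEN_CPU_CAPABILITY"), f);
}
```

A value such as `avx`, whose kernels this PR removes, is simply ignored by the sketch and the hardware maximum is used instead.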
Credits to ezyang for proposing `AVX512_256`: these kernels use AVX2 intrinsics but benefit from 32 registers instead of the 16 ymm registers that AVX2 uses. Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` and `hadd_pd`, which have no direct AVX512 equivalents and are used in some kernels. He also refactored `vec/functional.h` to remove duplicated code. Credits to quickwritereader for helping fix 4 failing complex multiplication and division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2. Only one test had to be modified, as it was hardcoded for AVX2.
2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` and `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` now use `linux.2xlarge` instances, as they support AVX512. These two CI checks were used for testing AVX512 kernels, since AVX512 kernels are used by default in both of them. Windows CI checks were already using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines and beyond, because of the doubled vector size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, PyTorch can be built with `ATEN_AVX512_256=TRUE`, so that AVX2 kernels can use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.
This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake and Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance. Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848). The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, which is another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches, or in kernels that are more compute-heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512. It seems that memory-bound computations, such as adding two 64 MB tensors, can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: https://github.com/pytorch/pytorch/pull/56992
Reviewed By: soulitzer
Differential Revision: D29266289
Pulled By: ezyang
fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184
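A back-of-the-envelope roofline calculation illustrates why tensor size matters more than vector width for element-wise addition. This is illustrative arithmetic only: the helper names are hypothetical, and no machine-specific cache sizes or memory bandwidths are modelled.

```cpp
#include <cassert>
#include <cstddef>

// Roofline-style bookkeeping for c = a + b over elements of type T:
// one FLOP per element, and per element two loads plus one store.
// (Write-allocate caches can add a further read of c, which this ignores.)
template <typename T>
constexpr double add_flops_per_byte() {
  return 1.0 / (3.0 * static_cast<double>(sizeof(T)));
}

// Bytes touched by the two inputs and the output, each `bytes_per_tensor` long.
constexpr std::size_t add_working_set_bytes(std::size_t bytes_per_tensor) {
  return 3 * bytes_per_tensor;
}

// Full-width vector adds needed for n_elems elements, given the register width
// in bits. Remainder elements (a scalar tail loop) are not counted.
constexpr std::size_t vector_adds(std::size_t n_elems, std::size_t elem_bytes,
                                  std::size_t vector_bits) {
  return n_elems / (vector_bits / 8 / elem_bytes);
}
```

At roughly 0.083 FLOP per byte for float32, addition is limited by memory bandwidth once the working set leaves the caches. AVX512 halves the number of vector adds (16,384 instead of 32,768 for 1 MB of float32), but the number of bytes that must be moved stays the same.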

Commit 9e53c823b8 (parent 59d6e07ada)

```diff
@@ -132,7 +132,9 @@ fi
 if [[ "${BUILD_ENVIRONMENT}" == *-NO_AVX-* || $TEST_CONFIG == 'nogpu_NO_AVX' ]]; then
   export ATEN_CPU_CAPABILITY=default
 elif [[ "${BUILD_ENVIRONMENT}" == *-NO_AVX2-* || $TEST_CONFIG == 'nogpu_NO_AVX2' ]]; then
-  export ATEN_CPU_CAPABILITY=avx
+  export ATEN_CPU_CAPABILITY=default
+elif [[ "${BUILD_ENVIRONMENT}" == *-NO_AVX512-* || $TEST_CONFIG == 'nogpu_NO_AVX512' ]]; then
+  export ATEN_CPU_CAPABILITY=avx2
 fi
 
 if [ -n "$IN_PULL_REQUEST" ] && [[ "$BUILD_ENVIRONMENT" != *coverage* ]]; then
```

`aten.bzl` (3 changed lines)

```diff
@@ -1,9 +1,8 @@
 load("@rules_cc//cc:defs.bzl", "cc_library")
 
-CPU_CAPABILITY_NAMES = ["DEFAULT", "AVX", "AVX2"]
+CPU_CAPABILITY_NAMES = ["DEFAULT", "AVX2"]
 CAPABILITY_COMPILER_FLAGS = {
     "AVX2": ["-mavx2", "-mfma"],
-    "AVX": ["-mavx"],
     "DEFAULT": [],
 }
 
```

```diff
@@ -50,7 +50,7 @@ if(NOT BUILD_LITE_INTERPRETER)
 endif()
 EXCLUDE(ATen_CORE_SRCS "${ATen_CORE_SRCS}" ${ATen_CORE_TEST_SRCS})
 
-file(GLOB base_h "*.h" "detail/*.h" "cpu/*.h" "cpu/vec/vec256/*.h" "cpu/vec/*.h" "quantized/*.h")
+file(GLOB base_h "*.h" "detail/*.h" "cpu/*.h" "cpu/vec/vec512/*.h" "cpu/vec/vec256/*.h" "cpu/vec/*.h" "quantized/*.h")
 file(GLOB base_cpp "*.cpp" "detail/*.cpp" "cpu/*.cpp")
 file(GLOB cuda_h "cuda/*.h" "cuda/detail/*.h" "cuda/*.cuh" "cuda/detail/*.cuh")
 file(GLOB cuda_cpp "cuda/*.cpp" "cuda/detail/*.cpp")
```

```diff
@@ -108,12 +108,12 @@ std::string used_cpu_capability() {
     case native::CPUCapability::DEFAULT:
       ss << "NO AVX";
       break;
-    case native::CPUCapability::AVX:
-      ss << "AVX";
-      break;
     case native::CPUCapability::AVX2:
       ss << "AVX2";
       break;
+    case native::CPUCapability::AVX512:
+      ss << "AVX512";
+      break;
 #endif
     default:
       break;
```

```diff
@@ -1,6 +1,5 @@
 #include <ATen/cpu/FlushDenormal.h>
-
-#include <ATen/cpu/vec/vec256/intrinsics.h>
+#include <ATen/cpu/vec/intrinsics.h>
 #include <cpuinfo.h>
 
 namespace at { namespace cpu {
```

```diff
@@ -1 +1,6 @@
-#include <ATen/cpu/vec/vec256/functional.h>
+#pragma once
+
+#include <ATen/cpu/vec/functional_base.h>
+#if !defined(__VSX__) || !defined(CPU_CAPABILITY_VSX)
+#include <ATen/cpu/vec/functional_bfloat16.h>
+#endif
```

```diff
@@ -3,7 +3,7 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/vec256.h>
+#include <ATen/cpu/vec/vec.h>
 
 namespace at { namespace vec {
 
```

```diff
@@ -3,7 +3,7 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/functional_base.h>
+#include <ATen/cpu/vec/vec.h>
 
 namespace at { namespace vec {
 
```

```diff
@@ -15,26 +15,26 @@ template <> struct VecScalarType<BFloat16> { using type = float; };
 template <typename scalar_t>
 using vec_scalar_t = typename VecScalarType<scalar_t>::type;
 
-// Note that we already have specializes member of Vectorized<scalar_t> for BFloat16
-// so the following function would run smoothly:
+// Note that we already have specialized member of Vectorized<scalar_t> for BFloat16
+// so the following functions would run smoothly:
 // using Vec = Vectorized<BFloat16>;
 // Vec one = Vec(BFloat16(1));
 // vec::map([](Vec x) { return one / (one + x.exp()); }, y_ptr, x_ptr, N);
 //
-// Why we still need to specializes "funtional"?
+// Then why we still need to specialize "funtional"?
 // If we do specialization at Vectorized<> level, the above example would need 3 pairs of
 // conversion of bf16->fp32/fp32->bf16, each for ".exp()", "+" and "/".
 // If we do specialization at vec::map<>() level, we have only 1 pair of conversion
 // of bf16->fp32/fp32->bf16, for the input and output BFloat16 vector only.
 //
-// The following BFloat16 functionalities will only do data type conversion for input
-// and output vector (reduce functionalities will only convert the final scalar back to bf16).
+// The following BFloat16 functionality will only do data type conversion for input
+// and output vector (reduce functionality will only convert the final scalar back to bf16).
 // Compared to Vectorized<> specialization,
 // 1. better performance since we have less data type conversion;
 // 2. less rounding error since immediate results are kept in fp32;
 // 3. accumulation done on data type of fp32.
 //
-// If you plan to extend this file, make sure add unit test at
+// If you plan to extend this file, please ensure adding unit tests at
 // aten/src/ATen/test/vec_test_all_types.cpp
 //
 template <typename scalar_t = BFloat16, typename Op>
```
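The comment's argument, converting once at the `vec::map` level instead of around every operator, can be sketched in scalar code. This is an illustration under assumptions: bfloat16 values are held as raw `uint16_t` bits instead of `c10::BFloat16`, the helper names are hypothetical, and elements are processed one at a time rather than a vector at a time.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstring>

// bfloat16 stored as raw bits: the upper 16 bits of an IEEE-754 float32.
using bf16_bits = std::uint16_t;

inline float bf16_to_float(bf16_bits b) {
  const std::uint32_t u = static_cast<std::uint32_t>(b) << 16;
  float f;
  std::memcpy(&f, &u, sizeof(f));
  return f;
}

// Round-to-nearest-even; any NaN is mapped to a canonical quiet NaN.
inline bf16_bits float_to_bf16(float f) {
  if (std::isnan(f)) return 0x7FC0;
  std::uint32_t u;
  std::memcpy(&u, &f, sizeof(u));
  const std::uint32_t rounding_bias = 0x7FFFu + ((u >> 16) & 1u);
  return static_cast<bf16_bits>((u + rounding_bias) >> 16);
}

// "Specialise at map level": one bf16 -> fp32 conversion per input element,
// the whole op evaluated in fp32, one fp32 -> bf16 conversion per output.
template <typename Op>
void map_bf16(Op op, bf16_bits* out, const bf16_bits* in, std::size_t n) {
  for (std::size_t i = 0; i < n; ++i) {
    const float x = bf16_to_float(in[i]);
    out[i] = float_to_bf16(op(x));  // intermediates of op stay in fp32
  }
}
```

With the comment's example `one / (one + x.exp())`, the exponential, the addition and the division all run in fp32 and only the final result is rounded to bfloat16, which is where the "less rounding error" point comes from.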

```diff
@@ -1,6 +1,6 @@
 #pragma once
-#if defined(__clang__) && (defined(__x86_64__) || defined(__i386__))
-/* Clang-compatible compiler, targeting x86/x86-64 */
+#if defined(__GNUC__) && (defined(__x86_64__) || defined(__i386__))
+/* GCC or clang-compatible compiler, targeting x86/x86-64 */
 #include <x86intrin.h>
 #elif defined(__clang__) && (defined(__ARM_NEON__) || defined(__aarch64__))
 /* Clang-compatible compiler, targeting arm neon */
```

```diff
@@ -14,9 +14,6 @@
 #define _mm256_extract_epi16(X, Y) (_mm_extract_epi16(_mm256_extractf128_si256(X, Y >> 3), Y % 8))
 #define _mm256_extract_epi8(X, Y) (_mm_extract_epi8(_mm256_extractf128_si256(X, Y >> 4), Y % 16))
 #endif
-#elif defined(__GNUC__) && (defined(__x86_64__) || defined(__i386__))
-/* GCC-compatible compiler, targeting x86/x86-64 */
-#include <x86intrin.h>
 #elif defined(__GNUC__) && (defined(__ARM_NEON__) || defined(__aarch64__))
 /* GCC-compatible compiler, targeting ARM with NEON */
 #include <arm_neon.h>
```
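The context lines of this hunk keep fallback definitions of `_mm256_extract_epi16` and `_mm256_extract_epi8` built from 128-bit extracts. Their index arithmetic can be modelled in scalar code; `Reg256` and the helper names below are hypothetical stand-ins for `__m256i` and the intrinsics. One difference from the real intrinsic: the model returns the lane as `int16_t`, whereas `_mm_extract_epi16` returns it zero-extended in an `int`.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// A 256-bit register modelled as two 128-bit halves of eight int16 lanes each.
using Half128 = std::array<std::int16_t, 8>;
using Reg256 = std::array<Half128, 2>;

// Scalar model of the _mm256_extract_epi16(X, Y) fallback macro:
// Y >> 3 selects the 128-bit half (the _mm256_extractf128_si256 step),
// Y % 8 selects the int16 lane inside that half (the _mm_extract_epi16 step).
inline std::int16_t extract_epi16_model(const Reg256& x, int y) {
  assert(y >= 0 && y < 16);
  return x[y >> 3][y % 8];
}

// Same decomposition for bytes: 16 int8 lanes per half, so Y >> 4 and Y % 16.
inline int half_index_epi8(int y) { return y >> 4; }
inline int lane_index_epi8(int y) { return y % 16; }
```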

```diff
@@ -1 +1,5 @@
+#if defined(CPU_CAPABILITY_AVX512)
+#include <ATen/cpu/vec/vec512/vec512.h>
+#else
 #include <ATen/cpu/vec/vec256/vec256.h>
+#endif
```
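With this change, `vec.h` chooses the 512-bit or the 256-bit implementation purely at compile time, once per translation unit. The sketch below shows how a lane count follows from that choice. `kVectorBytes`, `lanes` and `full_vectors` are hypothetical names, and treating every non-AVX512 build as 32 bytes wide is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstddef>

// Each translation unit is compiled for one capability; the register width
// follows from the macro it defines. kVectorBytes is not an ATen symbol.
#if defined(CPU_CAPABILITY_AVX512)
constexpr std::size_t kVectorBytes = 64;  // 512-bit zmm registers
#else
constexpr std::size_t kVectorBytes = 32;  // 256-bit path (vec256)
#endif

// Lanes per register for element type T: 16 floats with AVX512, 8 otherwise.
template <typename T>
constexpr std::size_t lanes() {
  return kVectorBytes / sizeof(T);
}

// Iterations of a main vector loop over n elements (the tail is handled separately).
template <typename T>
constexpr std::size_t full_vectors(std::size_t n) {
  return n / lanes<T>();
}
```

Because the width is a compile-time constant, the same kernel source can be compiled once per capability and each copy gets its own fixed vector length.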

```diff
@@ -1,6 +0,0 @@
-#pragma once
-
-#include <ATen/cpu/vec/vec256/functional_base.h>
-#if !defined(__VSX__) || !defined(CPU_CAPABILITY_VSX)
-#include <ATen/cpu/vec/vec256/functional_bfloat16.h>
-#endif
```

```diff
@@ -3,9 +3,9 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
+#include <ATen/cpu/vec/intrinsics.h>
 
-#include <ATen/cpu/vec/vec256/vec256_base.h>
+#include <ATen/cpu/vec/vec_base.h>
 #if !defined(__VSX__) || !defined(CPU_CAPABILITY_VSX)
 #include <ATen/cpu/vec/vec256/vec256_float.h>
 #include <ATen/cpu/vec/vec256/vec256_float_neon.h>
```

```diff
@@ -68,9 +68,9 @@ std::ostream& operator<<(std::ostream& stream, const Vectorized<T>& vec) {
 }
 
 
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
-// ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ CAST (AVX) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+// ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ CAST (AVX2) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
 
 template<>
 inline Vectorized<float> cast<float, double>(const Vectorized<double>& src) {
```

```diff
@@ -82,29 +82,6 @@ inline Vectorized<double> cast<double, float>(const Vectorized<float>& src) {
   return _mm256_castps_pd(src);
 }
 
-#if defined(CPU_CAPABILITY_AVX2)
-
-// ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ CAST (AVX2) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
-
-#define DEFINE_FLOAT_INT_CAST(int_t, float_t, float_ch) \
-template<> \
-inline Vectorized<int_t> cast<int_t, float_t>(const Vectorized<float_t>& src) { \
-  return _mm256_castp ## float_ch ## _si256(src); \
-} \
-template<> \
-inline Vectorized<float_t> cast<float_t, int_t>(const Vectorized<int_t>& src) { \
-  return _mm256_castsi256_p ## float_ch (src); \
-}
-
-DEFINE_FLOAT_INT_CAST(int64_t, double, d)
-DEFINE_FLOAT_INT_CAST(int32_t, double, d)
-DEFINE_FLOAT_INT_CAST(int16_t, double, d)
-DEFINE_FLOAT_INT_CAST(int64_t, float, s)
-DEFINE_FLOAT_INT_CAST(int32_t, float, s)
-DEFINE_FLOAT_INT_CAST(int16_t, float, s)
-
-#undef DEFINE_FLOAT_INT_CAST
-
 // ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ GATHER ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
 
 template<int64_t scale = 1>
```
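The removed `DEFINE_FLOAT_INT_CAST` macro relies on token pasting: with `float_ch` set to `d`, `_mm256_castp ## float_ch ## _si256` becomes `_mm256_castpd_si256`. These cast intrinsics reinterpret the register bits and generate no conversion instructions, so `cast<int64_t, double>` keeps the bit pattern rather than the numeric value. A scalar analogue, with hypothetical helper names:

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Reinterpret the bits of a double as an int64_t (no numeric conversion),
// the scalar counterpart of _mm256_castpd_si256 on one lane.
inline std::int64_t bits_of(double d) {
  static_assert(sizeof(std::int64_t) == sizeof(double), "size mismatch");
  std::int64_t i;
  std::memcpy(&i, &d, sizeof(i));
  return i;
}

// The reverse direction, the counterpart of _mm256_castsi256_pd.
inline double double_from_bits(std::int64_t i) {
  double d;
  std::memcpy(&d, &i, sizeof(d));
  return d;
}
```

For example, the bits of `1.0` are `0x3FF0000000000000`, which is very different from the value-converted integer `1`.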

```diff
@@ -243,8 +220,6 @@ inline deinterleave2<float>(const Vectorized<float>& a, const Vectorized<float>&
     _mm256_permute2f128_ps(a_grouped, b_grouped, 0b0110001)); // 1, 3. 4 bits apart
 }
 
-#endif // defined(CPU_CAPABILITY_AVX2)
+#endif // (defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
-#endif // (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
-
 }}}
```
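The context line of `deinterleave2<float>` ends with `_mm256_permute2f128_ps(..., 0b0110001)`. That immediate (0x31) takes the upper 128-bit half of each operand. What the whole function computes can be modelled in scalar code, assuming the layout in which `a` and `b` together hold interleaved pairs {x0, y0, x1, y1, ...}. The model below is hypothetical and skips the lane-crossing shuffles that the intrinsic version needs.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>

// Scalar model of deinterleave2: a and b together hold N interleaved pairs
// {x0, y0, x1, y1, ...}; the result separates them into all x's and all y's.
template <typename T, std::size_t N>
std::pair<std::array<T, N>, std::array<T, N>> deinterleave2_model(
    const std::array<T, N>& a, const std::array<T, N>& b) {
  static_assert(N % 2 == 0, "lane count must be even");
  std::array<T, N> xs{};
  std::array<T, N> ys{};
  for (std::size_t i = 0; i < N / 2; ++i) {
    xs[i] = a[2 * i];          // pairs held in the first register
    ys[i] = a[2 * i + 1];
    xs[N / 2 + i] = b[2 * i];  // pairs held in the second register
    ys[N / 2 + i] = b[2 * i + 1];
  }
  return std::make_pair(xs, ys);
}
```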

```diff
@@ -3,8 +3,8 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
 #if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 #include <sleef.h>
 #endif
```

```diff
@@ -100,7 +100,7 @@ public:
     return _mm256_loadu_si256(reinterpret_cast<const __m256i*>(ptr));
   }
   static Vectorized<BFloat16> loadu(const void* ptr, int16_t count) {
-    __at_align32__ int16_t tmp_values[size()];
+    __at_align__ int16_t tmp_values[size()];
     std::memcpy(tmp_values, ptr, count * sizeof(int16_t));
     return loadu(tmp_values);
   }
```

```diff
@@ -108,14 +108,14 @@ public:
     if (count == size()) {
       _mm256_storeu_si256(reinterpret_cast<__m256i*>(ptr), values);
     } else if (count > 0) {
-      __at_align32__ int16_t tmp_values[size()];
+      __at_align__ int16_t tmp_values[size()];
       _mm256_storeu_si256(reinterpret_cast<__m256i*>(tmp_values), values);
       std::memcpy(ptr, tmp_values, count * sizeof(int16_t));
     }
   }
   template <int64_t mask>
   static Vectorized<BFloat16> blend(const Vectorized<BFloat16>& a, const Vectorized<BFloat16>& b) {
-    __at_align32__ int16_t tmp_values[size()];
+    __at_align__ int16_t tmp_values[size()];
     a.store(tmp_values);
     if (mask & 0x01)
       tmp_values[0] = _mm256_extract_epi16(b.values, 0);
```
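`blend<mask>` starts from `a` (`a.store(tmp_values)`) and overwrites each lane whose mask bit is set with the corresponding lane of `b`, one `if (mask & ...)` test per lane. The scalar model below mirrors that; the name `blend_model` and the use of `std::array` in place of the vector type are assumptions of the sketch.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Scalar model of blend<mask>(a, b): start from a and overwrite lane i with
// b[i] whenever bit i of the compile-time mask is set.
template <std::int64_t mask, typename T, std::size_t N>
std::array<T, N> blend_model(const std::array<T, N>& a, const std::array<T, N>& b) {
  static_assert(N <= 64, "an int64_t mask covers at most 64 lanes");
  std::array<T, N> out = a;
  const std::uint64_t bits = static_cast<std::uint64_t>(mask);
  for (std::size_t i = 0; i < N; ++i) {
    if (bits & (std::uint64_t{1} << i)) out[i] = b[i];
  }
  return out;
}
```

For instance, `blend_model<0x5>(a, b)` takes lanes 0 and 2 from `b` and keeps lanes 1 and 3 from `a`.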

```diff
@@ -280,7 +280,7 @@ public:
   Vectorized<BFloat16> erfinv() const {
     __m256 lo, hi;
     cvtbf16_fp32(values, lo, hi);
-    __at_align32__ float tmp1[size() / 2], tmp2[size() / 2];
+    __at_align__ float tmp1[size() / 2], tmp2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp1), lo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp2), hi);
     for (int64_t i = 0; i < size() / 2; i++) {
```

```diff
@@ -318,7 +318,7 @@ public:
   Vectorized<BFloat16> i0() const {
     __m256 lo, hi;
     cvtbf16_fp32(values, lo, hi);
-    __at_align32__ float tmp1[size() / 2], tmp2[size() / 2];
+    __at_align__ float tmp1[size() / 2], tmp2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp1), lo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp2), hi);
     for (int64_t i = 0; i < size() / 2; i++) {
```

```diff
@@ -333,7 +333,7 @@ public:
     __m256 lo, hi;
     cvtbf16_fp32(values, lo, hi);
     constexpr auto sz = size();
-    __at_align32__ float tmp1[sz / 2], tmp2[sz / 2];
+    __at_align__ float tmp1[sz / 2], tmp2[sz / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp1), lo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp2), hi);
 
```

```diff
@@ -350,10 +350,10 @@ public:
     __m256 xlo, xhi;
     cvtbf16_fp32(values, lo, hi);
     cvtbf16_fp32(x.values, xlo, xhi);
-    __at_align32__ float tmp1[size() / 2], tmp2[size() / 2];
+    __at_align__ float tmp1[size() / 2], tmp2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp1), lo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp2), hi);
-    __at_align32__ float tmpx1[size() / 2], tmpx2[size() / 2];
+    __at_align__ float tmpx1[size() / 2], tmpx2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmpx1), xlo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmpx2), xhi);
     for (int64_t i = 0; i < size() / 2; ++i) {
```

```diff
@@ -370,10 +370,10 @@ public:
     __m256 xlo, xhi;
     cvtbf16_fp32(values, lo, hi);
     cvtbf16_fp32(x.values, xlo, xhi);
-    __at_align32__ float tmp1[size() / 2], tmp2[size() / 2];
+    __at_align__ float tmp1[size() / 2], tmp2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp1), lo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmp2), hi);
-    __at_align32__ float tmpx1[size() / 2], tmpx2[size() / 2];
+    __at_align__ float tmpx1[size() / 2], tmpx2[size() / 2];
     _mm256_storeu_ps(reinterpret_cast<float*>(tmpx1), xlo);
     _mm256_storeu_ps(reinterpret_cast<float*>(tmpx2), xhi);
     for (int64_t i = 0; i < size() / 2; ++i) {
```

```diff
@@ -717,12 +717,13 @@ inline Vectorized<BFloat16> convert_float_bfloat16(const Vectorized<float>& a, c
   return cvtfp32_bf16(__m256(a), __m256(b));
 }
 
-#else //defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
+
+#else // defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 inline std::tuple<Vectorized<float>, Vectorized<float>> convert_bfloat16_float(const Vectorized<BFloat16>& a) {
   constexpr int64_t K = Vectorized<BFloat16>::size();
-  __at_align32__ float arr[K];
-  __at_align32__ BFloat16 arr2[K];
+  __at_align__ float arr[K];
+  __at_align__ BFloat16 arr2[K];
   a.store(arr2);
   convert(arr2, arr, K);
   return std::make_tuple(
```

```diff
@@ -732,15 +733,15 @@ inline std::tuple<Vectorized<float>, Vectorized<float>> convert_bfloat16_float(c
 
 inline Vectorized<BFloat16> convert_float_bfloat16(const Vectorized<float>& a, const Vectorized<float>& b) {
   constexpr int64_t K = Vectorized<BFloat16>::size();
-  __at_align32__ float arr[K];
-  __at_align32__ BFloat16 arr2[K];
+  __at_align__ float arr[K];
+  __at_align__ BFloat16 arr2[K];
   a.store(arr);
   b.store(arr + Vectorized<float>::size());
   convert(arr, arr2, K);
   return Vectorized<BFloat16>::loadu(arr2);
 }
 
-#endif
+#endif // defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 #if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 void load_fp32_from_bf16(const c10::BFloat16 *data, Vectorized<float>& out) {
```

```diff
@@ -759,7 +760,7 @@ void load_fp32_from_bf16(const c10::BFloat16 *data, Vectorized<float>& out1, Vec
 }
 #else // defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 void load_fp32_from_bf16(const c10::BFloat16 *data, Vectorized<float>& out) {
-  __at_align32__ float values[Vectorized<float>::size()];
+  __at_align__ float values[Vectorized<float>::size()];
   for (int k = 0; k < Vectorized<float>::size(); ++k) {
     values[k] = data[k];
   }
```

```diff
@@ -4,9 +4,10 @@
 // See Note [Do not compile initializers with AVX]
 
 #include <c10/util/complex.h>
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
+
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 #include <sleef.h>
 #endif
 
```

```diff
@@ -15,7 +16,7 @@ namespace vec {
 // See Note [Acceptable use of anonymous namespace in header]
 namespace {
 
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 template <> class Vectorized<c10::complex<double>> {
 private:
```

```diff
@@ -81,7 +82,7 @@ public:
     if (count == size())
       return _mm256_loadu_pd(reinterpret_cast<const double*>(ptr));
 
-    __at_align32__ double tmp_values[2*size()];
+    __at_align__ double tmp_values[2*size()];
     // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
     // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
     // instructions while a loop would be compiled to one instruction.
```

|

|

@ -106,7 +107,7 @@ public:

|

||||||

const c10::complex<double>& operator[](int idx) const = delete;

|

const c10::complex<double>& operator[](int idx) const = delete;

|

||||||

c10::complex<double>& operator[](int idx) = delete;

|

c10::complex<double>& operator[](int idx) = delete;

|

||||||

Vectorized<c10::complex<double>> map(c10::complex<double> (*const f)(const c10::complex<double> &)) const {

|

Vectorized<c10::complex<double>> map(c10::complex<double> (*const f)(const c10::complex<double> &)) const {

|

||||||

__at_align32__ c10::complex<double> tmp[size()];

|

__at_align__ c10::complex<double> tmp[size()];

|

||||||

store(tmp);

|

store(tmp);

|

||||||

for (int i = 0; i < size(); i++) {

|

for (int i = 0; i < size(); i++) {

|

||||||

tmp[i] = f(tmp[i]);

|

tmp[i] = f(tmp[i]);

|

||||||

|

|

@ -288,8 +289,8 @@ public:

|

||||||

return sqrt().reciprocal();

|

return sqrt().reciprocal();

|

||||||

}

|

}

|

||||||

Vectorized<c10::complex<double>> pow(const Vectorized<c10::complex<double>> &exp) const {

|

Vectorized<c10::complex<double>> pow(const Vectorized<c10::complex<double>> &exp) const {

|

||||||

__at_align32__ c10::complex<double> x_tmp[size()];

|

__at_align__ c10::complex<double> x_tmp[size()];

|

||||||

__at_align32__ c10::complex<double> y_tmp[size()];

|

__at_align__ c10::complex<double> y_tmp[size()];

|

||||||

store(x_tmp);

|

store(x_tmp);

|

||||||

exp.store(y_tmp);

|

exp.store(y_tmp);

|

||||||

for (int i = 0; i < size(); i++) {

|

for (int i = 0; i < size(); i++) {

|

||||||

|

|

|

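The `map` fallback in the complex-double header spills the vector to an aligned scratch array, applies a scalar function to each lane, and reloads the result. Below is a minimal scalar model of that pattern. It assumes 2 lanes of `std::complex<double>` per 256-bit vector, since each element is 128 bits. `ComplexDoubleVec` and `square` are hypothetical names, and `std::complex` stands in for `c10::complex`.

```cpp
#include <array>
#include <complex>
#include <cstddef>

// Hypothetical scalar model of a 256-bit vector of complex<double>:
// each element is 128 bits, so the vector holds 2 lanes.
struct ComplexDoubleVec {
  std::array<std::complex<double>, 2> lanes;

  static constexpr std::size_t size() { return 2; }

  void store(std::complex<double>* ptr) const {
    for (std::size_t i = 0; i < size(); ++i) ptr[i] = lanes[i];
  }

  static ComplexDoubleVec loadu(const std::complex<double>* ptr) {
    ComplexDoubleVec v{};
    for (std::size_t i = 0; i < size(); ++i) v.lanes[i] = ptr[i];
    return v;
  }

  // Same shape as map() in the diff: spill to an aligned buffer, apply the
  // scalar function lane by lane, then reload.
  ComplexDoubleVec map(
      std::complex<double> (*const f)(const std::complex<double>&)) const {
    alignas(64) std::complex<double> tmp[size()];
    store(tmp);
    for (std::size_t i = 0; i < size(); ++i) {
      tmp[i] = f(tmp[i]);
    }
    return loadu(tmp);
  }
};

// Example scalar function (hypothetical): z -> z * z.
std::complex<double> square(const std::complex<double>& z) { return z * z; }
```

This per-lane loop is the generic fallback. It trades SIMD throughput for the ability to reuse any scalar routine unchanged.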
```diff
@@ -4,9 +4,9 @@
 // See Note [Do not compile initializers with AVX]
 
 #include <c10/util/complex.h>
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 #include <sleef.h>
 #endif
 
@@ -15,7 +15,7 @@ namespace vec {
 // See Note [Acceptable use of anonymous namespace in header]
 namespace {
 
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 template <> class Vectorized<c10::complex<float>> {
 private:
@@ -117,7 +117,7 @@ public:
 if (count == size())
 return _mm256_loadu_ps(reinterpret_cast<const float*>(ptr));
 
-__at_align32__ float tmp_values[2*size()];
+__at_align__ float tmp_values[2*size()];
 // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
 // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
 // instructions while a loop would be compiled to one instruction.
@@ -142,7 +142,7 @@ public:
 const c10::complex<float>& operator[](int idx) const = delete;
 c10::complex<float>& operator[](int idx) = delete;
 Vectorized<c10::complex<float>> map(c10::complex<float> (*const f)(const c10::complex<float> &)) const {
-__at_align32__ c10::complex<float> tmp[size()];
+__at_align__ c10::complex<float> tmp[size()];
 store(tmp);
 for (int i = 0; i < size(); i++) {
 tmp[i] = f(tmp[i]);
@@ -323,8 +323,8 @@ public:
 return sqrt().reciprocal();
 }
 Vectorized<c10::complex<float>> pow(const Vectorized<c10::complex<float>> &exp) const {
-__at_align32__ c10::complex<float> x_tmp[size()];
-__at_align32__ c10::complex<float> y_tmp[size()];
+__at_align__ c10::complex<float> x_tmp[size()];
+__at_align__ c10::complex<float> y_tmp[size()];
 store(x_tmp);
 exp.store(y_tmp);
 for (int i = 0; i < size(); i++) {
```

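The partial `loadu(ptr, count)` path in the complex-float header sizes its scratch buffer as `2*size()` floats, because each complex value is stored as an interleaved (real, imaginary) pair. The accompanying comment explains that the buffer is zeroed with a loop so that unused lanes never carry uninitialized memory into the result. The sketch below models that path with 4 `std::complex<float>` lanes (8 floats, one 256-bit register). `kComplexLanes` and `loadu_partial` are hypothetical names, and the sketch relies on `std::complex<float>` being layout-compatible with `float[2]`.

```cpp
#include <complex>
#include <cstddef>
#include <cstring>

// Hypothetical lane count: a 256-bit register holds 8 floats, i.e. 4
// complex<float> values stored as interleaved (real, imaginary) pairs.
constexpr std::size_t kComplexLanes = 4;

// Loads `count` (<= kComplexLanes) complex values from `ptr` into `out`,
// leaving the remaining lanes equal to zero.
void loadu_partial(const std::complex<float>* ptr, std::size_t count,
                   std::complex<float>* out) {
  // Two floats per complex value, hence 2 * lanes.
  alignas(64) float tmp_values[2 * kComplexLanes];
  // Zero every slot first so lanes past `count` never carry
  // uninitialized stack memory into the result.
  for (std::size_t i = 0; i < 2 * kComplexLanes; ++i) {
    tmp_values[i] = 0.0f;
  }
  std::memcpy(tmp_values, ptr, count * sizeof(std::complex<float>));
  std::memcpy(out, tmp_values, sizeof(tmp_values));
}
```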
```diff
@@ -3,9 +3,9 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 #include <sleef.h>
 #endif
 
@@ -14,7 +14,8 @@ namespace vec {
 // See Note [Acceptable use of anonymous namespace in header]
 namespace {
 
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 template <> class Vectorized<double> {
 private:
@@ -67,7 +68,7 @@ public:
 return _mm256_loadu_pd(reinterpret_cast<const double*>(ptr));
 
 
-__at_align32__ double tmp_values[size()];
+__at_align__ double tmp_values[size()];
 // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
 // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
 // instructions while a loop would be compiled to one instruction.
@@ -100,7 +101,7 @@ public:
 return _mm256_cmp_pd(values, _mm256_set1_pd(0.0), _CMP_UNORD_Q);
 }
 Vectorized<double> map(double (*const f)(double)) const {
-__at_align32__ double tmp[size()];
+__at_align__ double tmp[size()];
 store(tmp);
 for (int64_t i = 0; i < size(); i++) {
 tmp[i] = f(tmp[i]);
@@ -175,8 +176,8 @@ public:
 return map(calc_i0e);
 }
 Vectorized<double> igamma(const Vectorized<double> &x) const {
-__at_align32__ double tmp[size()];
-__at_align32__ double tmp_x[size()];
+__at_align__ double tmp[size()];
+__at_align__ double tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
@@ -185,8 +186,8 @@ public:
 return loadu(tmp);
 }
 Vectorized<double> igammac(const Vectorized<double> &x) const {
-__at_align32__ double tmp[size()];
-__at_align32__ double tmp_x[size()];
+__at_align__ double tmp[size()];
+__at_align__ double tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
```

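The `Vectorized<double>` diff keeps the context line `return _mm256_cmp_pd(values, _mm256_set1_pd(0.0), _CMP_UNORD_Q);`, which, judging by the comparison predicate, is a NaN test. An unordered comparison is true only when at least one operand is NaN. Because the constant `0.0` is never NaN, a lane is true exactly when the input lane is NaN, and true lanes come back as an all-ones bit pattern. The portable sketch below reproduces a single lane with `std::isunordered`. `isnan_lane` is a hypothetical helper, not part of ATen.

```cpp
#include <cmath>
#include <cstdint>
#include <cstring>

// Scalar model of one lane of
//   _mm256_cmp_pd(values, _mm256_set1_pd(0.0), _CMP_UNORD_Q)
// An unordered comparison is true only if an operand is NaN; 0.0 never is,
// so the lane is true exactly when x is NaN. The intrinsic encodes "true"
// as all 64 bits set and "false" as all bits clear; this sketch does too.
double isnan_lane(double x) {
  const std::uint64_t bits = std::isunordered(x, 0.0) ? ~std::uint64_t{0} : 0;
  double out;
  std::memcpy(&out, &bits, sizeof(out));
  return out;
}
```

The all-ones result is itself a NaN bit pattern. The mask is therefore meant to be consumed bitwise (blends, `and`/`andnot`), not compared arithmetically.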
```diff
@@ -3,9 +3,9 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 #include <sleef.h>
 #endif
 
@@ -14,7 +14,7 @@ namespace vec {
 // See Note [Acceptable use of anonymous namespace in header]
 namespace {
 
-#if (defined(CPU_CAPABILITY_AVX) || defined(CPU_CAPABILITY_AVX2)) && !defined(_MSC_VER)
+#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)
 
 template <> class Vectorized<float> {
 private:
@@ -76,7 +76,7 @@ public:
 static Vectorized<float> loadu(const void* ptr, int64_t count = size()) {
 if (count == size())
 return _mm256_loadu_ps(reinterpret_cast<const float*>(ptr));
-__at_align32__ float tmp_values[size()];
+__at_align__ float tmp_values[size()];
 // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
 // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
 // instructions while a loop would be compiled to one instruction.
@@ -107,7 +107,7 @@ public:
 return _mm256_cmp_ps(values, _mm256_set1_ps(0.0f), _CMP_UNORD_Q);
 }
 Vectorized<float> map(float (*const f)(float)) const {
-__at_align32__ float tmp[size()];
+__at_align__ float tmp[size()];
 store(tmp);
 for (int64_t i = 0; i < size(); i++) {
 tmp[i] = f(tmp[i]);
@@ -213,8 +213,8 @@ public:
 return map(calc_i0e);
 }
 Vectorized<float> igamma(const Vectorized<float> &x) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_x[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
@@ -223,8 +223,8 @@ public:
 return loadu(tmp);
 }
 Vectorized<float> igammac(const Vectorized<float> &x) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_x[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
@@ -412,12 +412,11 @@ inline void convert(const float* src, float* dst, int64_t n) {
 }
 }
 
-#ifdef CPU_CAPABILITY_AVX2
 template <>
 Vectorized<float> inline fmadd(const Vectorized<float>& a, const Vectorized<float>& b, const Vectorized<float>& c) {
 return _mm256_fmadd_ps(a, b, c);
 }
-#endif
+
 
 #endif
 
```

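The last hunk of the `Vectorized<float>` header removes the `#ifdef CPU_CAPABILITY_AVX2` / `#endif` pair around the `fmadd` specialization. That leaves the specialization under the file's enclosing `#if defined(CPU_CAPABILITY_AVX2) && !defined(_MSC_VER)` guard, which, presumably, is the trailing `#endif` in the hunk. `_mm256_fmadd_ps(a, b, c)` computes `a * b + c` lane by lane with a single rounding. The scalar sketch below expresses that semantics with `std::fma` on a hypothetical 8-lane `Vec8f`.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical scalar model of an 8-lane float vector (256 bits / 32 bits).
using Vec8f = std::array<float, 8>;

// Lane-wise fused multiply-add, the operation _mm256_fmadd_ps performs:
// out[i] = a[i] * b[i] + c[i], rounded once (std::fma) rather than after
// the multiply and again after the add.
Vec8f fmadd(const Vec8f& a, const Vec8f& b, const Vec8f& c) {
  Vec8f out{};
  for (std::size_t i = 0; i < out.size(); ++i) {
    out[i] = std::fma(a[i], b[i], c[i]);
  }
  return out;
}
```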
```diff
@@ -3,8 +3,8 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
 // Sleef offers vectorized versions of some transcedentals
 // such as sin, cos, tan etc..
 // However for now opting for STL, since we are not building
@@ -220,7 +220,7 @@ public:
 return res;
 }
 else {
-__at_align32__ float tmp_values[size()];
+__at_align__ float tmp_values[size()];
 for (auto i = 0; i < size(); ++i) {
 tmp_values[i] = 0.0;
 }
@@ -261,19 +261,19 @@ public:
 // Once we specialize that implementation for ARM
 // this should be removed. TODO (kimishpatel)
 float operator[](int idx) const {
-__at_align32__ float tmp[size()];
+__at_align__ float tmp[size()];
 store(tmp);
 return tmp[idx];
 }
 float operator[](int idx) {
-__at_align32__ float tmp[size()];
+__at_align__ float tmp[size()];
 store(tmp);
 return tmp[idx];
 }
 // For boolean version where we want to if any 1/all zero
 // etc. can be done faster in a different way.
 int zero_mask() const {
-__at_align32__ float tmp[size()];
+__at_align__ float tmp[size()];
 store(tmp);
 int mask = 0;
 for (int i = 0; i < size(); ++ i) {
@@ -284,8 +284,8 @@ public:
 return mask;
 }
 Vectorized<float> isnan() const {
-__at_align32__ float tmp[size()];
-__at_align32__ float res[size()];
+__at_align__ float tmp[size()];
+__at_align__ float res[size()];
 store(tmp);
 for (int i = 0; i < size(); i++) {
 if (_isnan(tmp[i])) {
@@ -297,7 +297,7 @@ public:
 return loadu(res);
 };
 Vectorized<float> map(float (*const f)(float)) const {
-__at_align32__ float tmp[size()];
+__at_align__ float tmp[size()];
 store(tmp);
 for (int64_t i = 0; i < size(); i++) {
 tmp[i] = f(tmp[i]);
```

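The NEON fallbacks in this file answer lane queries by spilling the vector to an aligned buffer and looping over it. For `zero_mask`, the diff is cut off after the loop header. The sketch below assumes the loop sets bit `i` of the result when lane `i` equals zero, which is consistent with the "any 1/all zero" comment. Here `zero_mask` is a free function over a hypothetical 8-lane `std::array`, not the member shown in the diff.

```cpp
#include <array>
#include <cstddef>

// Scalar model of zero_mask(): bit i of the result is set when lane i
// compares equal to zero (-0.0f also compares equal). The 8-lane width is
// a hypothetical choice for this sketch.
int zero_mask(const std::array<float, 8>& lanes) {
  // Spill to an aligned buffer, mirroring `store(tmp)` in the diff.
  alignas(64) float tmp[8];
  for (std::size_t i = 0; i < 8; ++i) {
    tmp[i] = lanes[i];
  }
  int mask = 0;
  for (int i = 0; i < 8; ++i) {
    if (tmp[i] == 0.0f) {
      mask |= (1 << i);
    }
  }
  return mask;
}
```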
```diff
@@ -332,8 +332,8 @@ public:
 return map(std::atan);
 }
 Vectorized<float> atan2(const Vectorized<float> &exp) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_exp[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_exp[size()];
 store(tmp);
 exp.store(tmp_exp);
 for (int64_t i = 0; i < size(); i++) {
@@ -342,8 +342,8 @@ public:
 return loadu(tmp);
 }
 Vectorized<float> copysign(const Vectorized<float> &sign) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_sign[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_sign[size()];
 store(tmp);
 sign.store(tmp_sign);
 for (size_type i = 0; i < size(); i++) {
@@ -367,8 +367,8 @@ public:
 return map(std::expm1);
 }
 Vectorized<float> fmod(const Vectorized<float>& q) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_q[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_q[size()];
 store(tmp);
 q.store(tmp_q);
 for (int64_t i = 0; i < size(); i++) {
@@ -377,8 +377,8 @@ public:
 return loadu(tmp);
 }
 Vectorized<float> hypot(const Vectorized<float> &b) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_b[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_b[size()];
 store(tmp);
 b.store(tmp_b);
 for (int64_t i = 0; i < size(); i++) {
@@ -393,8 +393,8 @@ public:
 return map(calc_i0e);
 }
 Vectorized<float> igamma(const Vectorized<float> &x) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_x[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
@@ -403,8 +403,8 @@ public:
 return loadu(tmp);
 }
 Vectorized<float> igammac(const Vectorized<float> &x) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_x[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_x[size()];
 store(tmp);
 x.store(tmp_x);
 for (int64_t i = 0; i < size(); i++) {
@@ -425,8 +425,8 @@ public:
 return map(std::log2);
 }
 Vectorized<float> nextafter(const Vectorized<float> &b) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_b[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_b[size()];
 store(tmp);
 b.store(tmp_b);
 for (int64_t i = 0; i < size(); i++) {
@@ -490,8 +490,8 @@ public:
 return this->sqrt().reciprocal();
 }
 Vectorized<float> pow(const Vectorized<float> &exp) const {
-__at_align32__ float tmp[size()];
-__at_align32__ float tmp_exp[size()];
+__at_align__ float tmp[size()];
+__at_align__ float tmp_exp[size()];
 store(tmp);
 exp.store(tmp_exp);
 for (int64_t i = 0; i < size(); i++) {
```

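The NEON hunks for `atan2`, `copysign`, `fmod`, `hypot`, `igamma`, `igammac`, `nextafter`, and `pow` all follow one binary fallback shape: store both operands into aligned arrays, call the scalar function per lane, and reload. Below is a generic sketch of that shape, using a hypothetical `binary_map` helper and an 8-lane `Vec8f` type. Captureless lambdas supply the scalar functions, which avoids taking the address of a standard-library function.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical scalar model of an 8-lane float vector.
using Vec8f = std::array<float, 8>;

// Binary fallback shape: spill both operands to aligned buffers, apply the
// scalar function lane by lane, and reload the result.
Vec8f binary_map(const Vec8f& x, const Vec8f& y, float (*f)(float, float)) {
  alignas(64) float tmp[8];
  alignas(64) float tmp_y[8];
  for (std::size_t i = 0; i < 8; ++i) {
    tmp[i] = x[i];
    tmp_y[i] = y[i];
  }
  for (std::size_t i = 0; i < 8; ++i) {
    tmp[i] = f(tmp[i], tmp_y[i]);
  }
  Vec8f out{};
  for (std::size_t i = 0; i < 8; ++i) {
    out[i] = tmp[i];
  }
  return out;
}

// Example scalar operations as captureless lambdas, which convert to plain
// function pointers.
auto fmod_op = [](float a, float b) { return std::fmod(a, b); };
auto copysign_op = [](float a, float b) { return std::copysign(a, b); };
```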
```diff
@@ -3,8 +3,8 @@
 // DO NOT DEFINE STATIC DATA IN THIS HEADER!
 // See Note [Do not compile initializers with AVX]
 
-#include <ATen/cpu/vec/vec256/intrinsics.h>
-#include <ATen/cpu/vec/vec256/vec256_base.h>
+#include <ATen/cpu/vec/intrinsics.h>
+#include <ATen/cpu/vec/vec_base.h>
 #include <c10/macros/Macros.h>
 
 namespace at {
@@ -55,7 +55,7 @@ public:
 }
 template <int64_t mask>
 static Vectorized<int64_t> blend(Vectorized<int64_t> a, Vectorized<int64_t> b) {
-__at_align32__ int64_t tmp_values[size()];
+__at_align__ int64_t tmp_values[size()];
 a.store(tmp_values);
 if (mask & 0x01)
 tmp_values[0] = _mm256_extract_epi64(b.values, 0);
@@ -93,7 +93,7 @@ public:
 return _mm256_loadu_si256(reinterpret_cast<const __m256i*>(ptr));
 }
 static Vectorized<int64_t> loadu(const void* ptr, int64_t count) {
-__at_align32__ int64_t tmp_values[size()];
+__at_align__ int64_t tmp_values[size()];
 // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
 // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
 // instructions while a loop would be compiled to one instruction.
@@ -109,7 +109,7 @@ public:
 // https://software.intel.com/content/www/us/en/develop/documentation/cpp-compiler-developer-guide-and-reference/top/compiler-reference/intrinsics/intrinsics-for-intel-advanced-vector-extensions/intrinsics-for-load-and-store-operations-1/mm256-storeu-si256.html
 _mm256_storeu_si256(reinterpret_cast<__m256i*>(ptr), values);
 } else if (count > 0) {
-__at_align32__ int64_t tmp_values[size()];
+__at_align__ int64_t tmp_values[size()];
 _mm256_storeu_si256(reinterpret_cast<__m256i*>(tmp_values), values);
 std::memcpy(ptr, tmp_values, count * sizeof(int64_t));
 }
@@ -216,7 +216,7 @@ public:
 return _mm256_loadu_si256(reinterpret_cast<const __m256i*>(ptr));
 }
 static Vectorized<int32_t> loadu(const void* ptr, int32_t count) {
-__at_align32__ int32_t tmp_values[size()];
+__at_align__ int32_t tmp_values[size()];
 // Ensure uninitialized memory does not change the output value See https://github.com/pytorch/pytorch/issues/32502
 // for more details. We do not initialize arrays to zero using "={0}" because gcc would compile it to two
 // instructions while a loop would be compiled to one instruction.
@@ -232,7 +232,7 @@ public:
 // https://software.intel.com/content/www/us/en/develop/documentation/cpp-compiler-developer-guide-and-reference/top/compiler-reference/intrinsics/intrinsics-for-intel-advanced-vector-extensions/intrinsics-for-load-and-store-operations-1/mm256-storeu-si256.html
 _mm256_storeu_si256(reinterpret_cast<__m256i*>(ptr), values);
 } else if (count > 0) {
-__at_align32__ int32_t tmp_values[size()];
+__at_align__ int32_t tmp_values[size()];
 _mm256_storeu_si256(reinterpret_cast<__m256i*>(tmp_values), values);
```

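`blend<mask>` for `Vectorized<int64_t>` starts from a copy of `a`. For each set bit `i` of the compile-time `mask`, it replaces lane `i` with the corresponding lane of `b`; the visible hunk shows bit `0x01` pulling lane 0 through `_mm256_extract_epi64`. Below is a portable sketch of those semantics on a hypothetical 4-lane `Vec4q` (256 bits at 64 bits per lane). It is not the ATen implementation.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical scalar model of a 4-lane int64 vector (256 bits / 64 bits).
using Vec4q = std::array<std::int64_t, 4>;

// Compile-time blend: start from a copy of `a`, then for every set bit i of
// `mask` take lane i from `b` instead.
template <std::int64_t mask>
Vec4q blend(const Vec4q& a, const Vec4q& b) {
  alignas(64) std::int64_t tmp_values[4];
  for (std::size_t i = 0; i < 4; ++i) {
    tmp_values[i] = a[i];
  }
  for (std::size_t i = 0; i < 4; ++i) {
    if (mask & (std::int64_t{1} << i)) {
      tmp_values[i] = b[i];
    }
  }
  return Vec4q{tmp_values[0], tmp_values[1], tmp_values[2], tmp_values[3]};
}
```

Because `mask` is a template parameter, a compiler can typically resolve each `if` at compile time. The loop then reduces to a fixed set of lane copies.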