Mirror of https://github.com/zebrajr/pytorch.git, synced 2025-12-07 12:21:27 +01:00

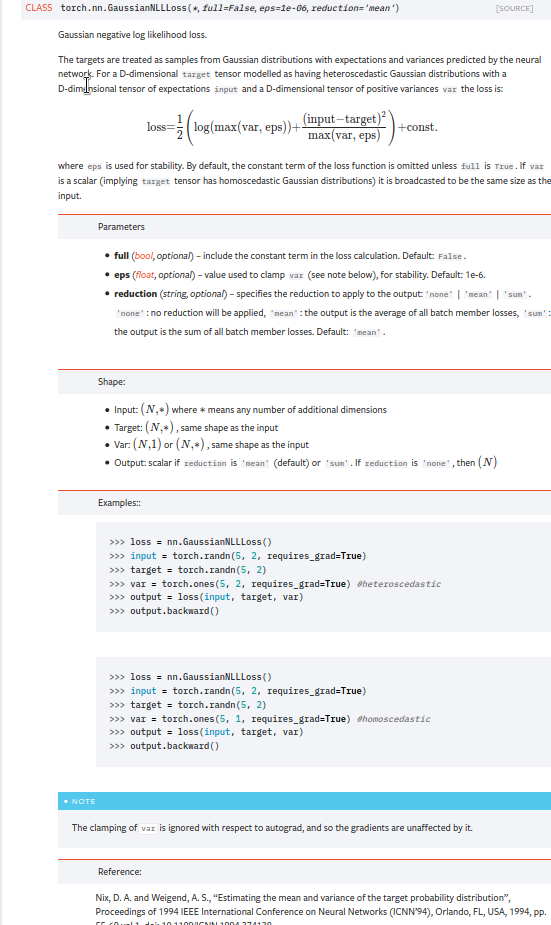

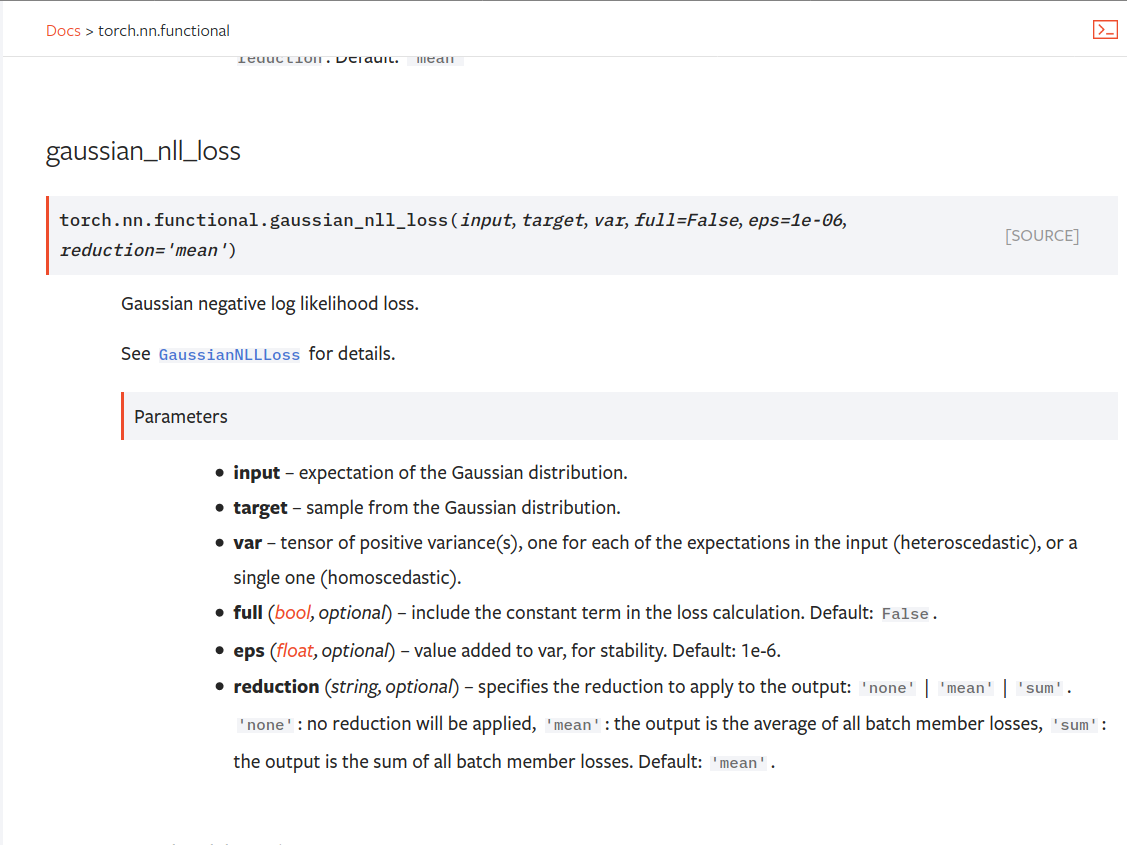

Summary: Fixes https://github.com/pytorch/pytorch/issues/53964.

cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)
- Added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.
- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached. Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>)`

`# This has size (2).`

New behaviour:

`print(loss(input, target, var))`

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>)`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f
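The figures quoted in the example can be checked by hand without PyTorch. The sketch below is not the code from functional.py; it assumes the per-element loss is `0.5 * (log(var) + (input - target)**2 / var)`, that is, the Gaussian negative log-likelihood with the constant term omitted and no clamping of the variance. The helper name `gaussian_nll` is hypothetical and exists only for this illustration.

```python
import math


def gaussian_nll(x, target, var):
    """Per-element Gaussian NLL (assumed form: no constant term, no variance clamping)."""
    return 0.5 * (math.log(var) + (x - target) ** 2 / var)


# Same values as the tensors in the example, written as nested lists of size (2, 3).
inputs = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
targets = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
variances = [[2.0] * 3 for _ in range(2)]

# Element-wise loss with size (2, 3), matching the new reduction='none' output.
per_element = [
    [gaussian_nll(x, t, v) for x, t, v in zip(x_row, t_row, v_row)]
    for x_row, t_row, v_row in zip(inputs, targets, variances)
]

# Summing over every dimension except the 0th recovers the old size-(2) output.
per_row = [sum(row) for row in per_element]

print([[round(v, 4) for v in row] for row in per_element])
print([round(v, 4) for v in per_row])
```

Under these assumptions the element-wise values round to the (2, 3) result quoted for the new behaviour, and the row sums round to the size-(2) result quoted for the old behaviour.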

| Name |
|---|
| `_static` |
| `_templates` |
| `community` |
| `notes` |
| `rpc` |
| `scripts` |
| `__config__.rst` |
| `amp.rst` |
| `autograd.rst` |
| `backends.rst` |
| `benchmark_utils.rst` |
| `bottleneck.rst` |
| `checkpoint.rst` |
| `complex_numbers.rst` |
| `conf.py` |
| `cpp_extension.rst` |
| `cpp_index.rst` |
| `cuda.rst` |
| `cudnn_persistent_rnn.rst` |
| `cudnn_rnn_determinism.rst` |
| `data.rst` |
| `ddp_comm_hooks.rst` |
| `distributed.optim.rst` |
| `distributed.rst` |
| `distributions.rst` |
| `dlpack.rst` |
| `docutils.conf` |
| `fft.rst` |
| `futures.rst` |
| `fx.rst` |
| `hub.rst` |
| `index.rst` |
| `jit_builtin_functions.rst` |
| `jit_language_reference_v2.rst` |
| `jit_language_reference.rst` |
| `jit_python_reference.rst` |
| `jit_unsupported.rst` |
| `jit.rst` |
| `linalg.rst` |
| `math-quantizer-equation.png` |
| `mobile_optimizer.rst` |
| `model_zoo.rst` |
| `multiprocessing.rst` |
| `name_inference.rst` |
| `named_tensor.rst` |
| `nn.functional.rst` |
| `nn.init.rst` |
| `nn.rst` |
| `onnx.rst` |
| `optim.rst` |
| `package.rst` |
| `pipeline.rst` |
| `profiler.rst` |
| `quantization-support.rst` |
| `quantization.rst` |
| `random.rst` |
| `rpc.rst` |
| `sparse.rst` |
| `special.rst` |
| `storage.rst` |
| `tensor_attributes.rst` |
| `tensor_view.rst` |
| `tensorboard.rst` |
| `tensors.rst` |
| `torch.nn.intrinsic.qat.rst` |
| `torch.nn.intrinsic.quantized.rst` |
| `torch.nn.intrinsic.rst` |
| `torch.nn.qat.rst` |
| `torch.nn.quantized.dynamic.rst` |
| `torch.nn.quantized.rst` |
| `torch.overrides.rst` |
| `torch.quantization.rst` |
| `torch.rst` |
| `type_info.rst` |