Continuation after https://github.com/pytorch/pytorch/pull/90163.

Here is a script I used to find all the non-existent arguments in the docstrings (the script can give false positives in the presence of `*args`/`**kwargs` or decorators):

_Edit:_

I've realized that the indentation is wrong for the last `break` in the script, so the script only gives output for a function if the first docstring argument is wrong. I'll create a separate PR if I find more issues with the corrected script.

``` python
import ast
import os
import docstring_parser

for root, dirs, files in os.walk('.'):
    for name in files:
        if root.startswith("./.git/") or root.startswith("./third_party/"):
            continue
        if name.endswith(".py"):
            full_name = os.path.join(root, name)
            with open(full_name, "r") as source:
                tree = ast.parse(source.read())
                for node in ast.walk(tree):
                    if isinstance(node, ast.FunctionDef):
                        all_node_args = node.args.args
                        if node.args.vararg is not None:
                            all_node_args.append(node.args.vararg)
                        if node.args.kwarg is not None:
                            all_node_args.append(node.args.kwarg)
                        if node.args.posonlyargs is not None:
                            all_node_args.extend(node.args.posonlyargs)
                        if node.args.kwonlyargs is not None:
                            all_node_args.extend(node.args.kwonlyargs)
                        args = [a.arg for a in all_node_args]
                        docstring = docstring_parser.parse(ast.get_docstring(node))
                        doc_args = [a.arg_name for a in docstring.params]
                        clean_doc_args = []
                        for a in doc_args:
                            clean_a = ""
                            for c in a.split()[0]:
                                if c.isalnum() or c == '_':
                                    clean_a += c
                            if clean_a:
                                clean_doc_args.append(clean_a)
                        doc_args = clean_doc_args
                        for a in doc_args:
                            if a not in args:
                                print(full_name, node.lineno, args, doc_args)
                            # Mis-indented `break` (see the edit note): only the first docstring argument is checked.
                            break
```
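One way to avoid the `break` problem described in the edit note is to collect every mismatching name instead of stopping at the first docstring argument. The sketch below isolates only that comparison step; the helper name `unknown_doc_args` is hypothetical and not part of the original script.

```python
from typing import List


def unknown_doc_args(args: List[str], doc_args: List[str]) -> List[str]:
    """Return documented argument names that are absent from the signature."""
    return [a for a in doc_args if a not in args]


# The second documented name is wrong here. A check that stops after the
# first docstring argument ("x") would report nothing for this function.
print(unknown_doc_args(["x", "y"], ["x", "z"]))
```

In the script, the `print(full_name, ...)` call would then run whenever the returned list is non-empty.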

Pull Request resolved: https://github.com/pytorch/pytorch/pull/90505

Approved by: https://github.com/malfet, https://github.com/ZainRizvi

Prior to this change, the symbolic_fn `layer_norm` (before ONNX opset version 17) always lost precision when eps was too small to be represented as Float, while PyTorch always takes eps as Double. This PR adds `onnx::Cast` to the eps-related operations to prevent losing precision during the calculation.
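The effect can be illustrated without ONNX or PyTorch. The sketch below assumes that "smaller than Float type" refers to an eps below the smallest positive single-precision value, and uses the standard library to round a double to float32; the helper `to_float32` is introduced only for this illustration.

```python
import struct


def to_float32(value: float) -> float:
    """Round a Python float (double precision) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", value))[0]


eps = 1e-50  # representable as a double, far below the float32 range

print(to_float32(eps))    # the value underflows to zero in single precision
print(to_float32(1e-5))   # a typical eps survives, only slightly rounded
```

With eps collapsed to zero, an expression such as `1 / sqrt(var + eps)` is no longer protected against a zero variance, which is why keeping eps in double precision matters.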

Pull Request resolved: https://github.com/pytorch/pytorch/pull/89869

Approved by: https://github.com/BowenBao

Preparation for the next PR in this stack: #89559.

I replaced

- `self.assertTrue(torch.equal(...))` with `self.assertEqual(..., rtol=0, atol=0, exact_device=True)`,

- the same for `self.assertFalse(...)` with `self.assertNotEqual(...)`, and

- `assert torch.equal(...)` with `torch.testing.assert_close(..., rtol=0, atol=0)` (note that we don't need to set `check_device=True` here since that is the default).

There were a few instances where the result of `torch.equal` is used directly. In those cases I replaced it with `(... == ...).all().item()`, sometimes also dropping the `.item()` depending on the context.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/89527

Approved by: https://github.com/mruberry

Extend `register_custom_op` to support onnx-script local functions. The FunctionProto from onnx-script is represented by a custom op and inserted into the ModelProto for op execution.

NOTE: I experimented with the >2GB case using a simple model with large initializers:

```python
import torch

class Net(torch.nn.Module):
    def __init__(self, B, C):
        super().__init__()
        self.layer_norm = torch.nn.LayerNorm((B, C), eps=1e-3)

    def forward(self, x):
        return self.layer_norm(x)

N, B, C = 3, 25000, 25000
model = Net(B, C)
x = torch.randn(N, B, C)

torch.onnx.export(model, x, "large_model.onnx", opset_version=12)
```

It turns out that `model_bytes` never exceeds 2GB after the `_export_onnx` pybind C++ function returns: that function splits the initializers into external files and serializes the model before returning the bytes, and a serialized protobuf is never allowed to be larger than 2GB.
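For a sense of scale, the arithmetic below estimates the size of a single `LayerNorm((B, C))` parameter from the experiment. It assumes float32 parameters (4 bytes per element) and takes the protobuf cap to be `2**31 - 1` bytes; both are assumptions of this sketch rather than values stated above.

```python
import math

# Assumption: a serialized protobuf message is capped just below 2 GiB.
PROTOBUF_LIMIT_BYTES = 2**31 - 1


def tensor_nbytes(shape, bytes_per_element=4):
    """Size in bytes of a dense tensor with the given shape (float32 by default)."""
    return math.prod(shape) * bytes_per_element


B, C = 25000, 25000
weight_bytes = tensor_nbytes((B, C))  # LayerNorm weight; the bias has the same shape

print(weight_bytes)
print(weight_bytes > PROTOBUF_LIMIT_BYTES)
```

Under these assumptions a single parameter already exceeds the cap, so the initializers have to be stored outside the serialized model.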

The test cases can be found in the next PR, #86907.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/86906

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

Follow-up for #87735

Once again, because BUILD_CAFFE2=0 is not tested for the ONNX exporter, one scenario slipped through: a use case where the model can be exported without aten fallback when operator_export_type=ONNX_ATEN_FALLBACK and BUILD_CAFFE2=0.

A new unit test has been added, but it won't prevent regressions unless BUILD_CAFFE2=0 is run on CI again.

Fixes #87313

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88504

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

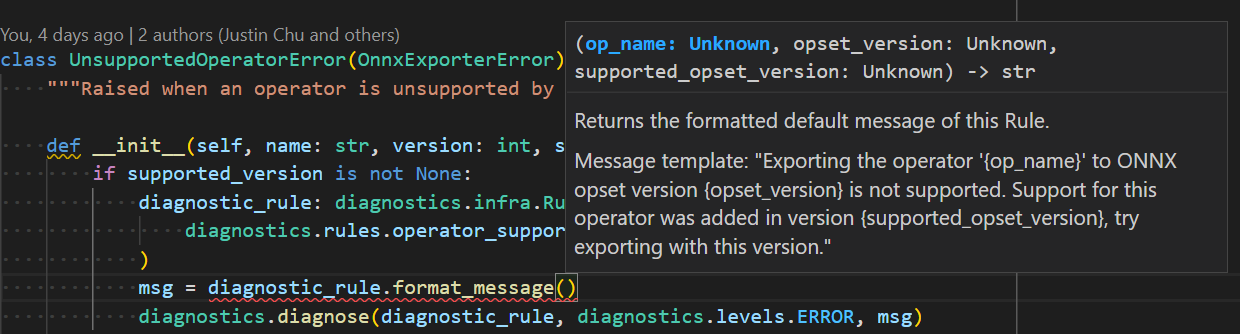

* Reflect required arguments in the method signature for each diagnostic rule. The previous design accepted an arbitrarily sized tuple, which was hard to use and error-prone.

* Removed `DiagnosticTool` to keep things compact.

* Removed the supported rule set for tool(context), and the check that the rule of a reported diagnostic falls inside that set, to keep things compact.

* Initial overview markdown file.

* Changed the `full_description` definition: the `text` field must not be empty, and its markdown should be stored in the `markdown` field.

* Changed `message_default_template` to allow only named fields (excluding numeric fields). `field_name` provides clarity on what argument is expected.

* Added `diagnose` api to `torch.onnx._internal.diagnostics`.
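The "named fields only" rule for `message_default_template` can be checked with the standard library's format-string parser. The following is a minimal sketch of such a check, not the validation used in the PR; the helper name `uses_only_named_fields` and the sample templates are hypothetical.

```python
import string


def uses_only_named_fields(template: str) -> bool:
    """Return True if every replacement field is named, e.g. `{op_name}`."""
    for _, field_name, _, _ in string.Formatter().parse(template):
        if field_name is None:
            continue  # literal text with no replacement field
        # Strip attribute or index access such as `{node.kind}` or `{args[0]}`.
        first = field_name.split(".")[0].split("[")[0]
        if first == "" or first.isdigit():
            return False  # `{}` and `{0}` are positional, not named
    return True


print(uses_only_named_fields("Operator {op_name} is not supported."))
print(uses_only_named_fields("Operator {0} is not supported."))
```

Named fields make the expected keyword arguments visible in the template itself, which matches the goal of reflecting required arguments in each rule's method signature.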

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87830

Approved by: https://github.com/abock

Fixes https://github.com/pytorch/pytorch/issues/84365 and more

This PR addresses not only the issue above, but the entire family of issues related to `torch._C.Value.type()` parsing when `scalarType()` or `dtype()` is not available.

This issue existed before `JitScalarType` was introduced, but the new implementation carried the bug over, because the new APIs `from_name` and `from_dtype` require parsing `torch._C.Value.type()` to get proper inputs, which is exactly the root cause of this family of bugs.

Therefore `from_name` and `from_dtype` must only be called when the implementor knows the `name` and `dtype` without parsing a `torch._C.Value`. To handle the corner cases hidden within `torch._C.Value`, a new `from_value` API was introduced, and it should be used in favor of the former ones in most cases. The new API is safer and does not require the user to parse the type, which is what triggers JIT asserts in the core of PyTorch.

Although CI is passing for all tests, please carefully review all symbolics/helpers refactoring to make sure the meaning/intention of the old calls is not changed in the new calls.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87245

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

Fixes #83038

Currently `_compare_ort_pytorch_outputs` does not produce clear error messages for differences in the zero point or scale of the two outputs, nor for whether both outputs are quantized.

This pull request adds assertions that report whether the scales and zero points differ, and whether each individual output is quantized.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87242

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

ONNX and PyTorch use different equations for pooling and different strategies for ceil_mode, which leads to discrepancies in corner cases (#71549).

Specifically, PyTorch average pooling does not follow [the equation in the documentation](https://pytorch.org/docs/stable/generated/torch.nn.AvgPool2d.html). Instead, it allows sliding windows to go off-bound if they start within the left padding or the input (see the NOTE section of that page). More details can be found in #57178.

This PR changes avgpool in opsets 10 and 11 back to the opset 9 behavior: it stops using ceil_mode and count_include_pad in onnx::AveragePool.
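The corner case can be reproduced with plain integer arithmetic. The sketch below compares the output length given by a straightforward ceil-mode formula with a version that additionally drops the last window when it would start beyond the input plus left padding, which is my reading of the rule described above. Both function names are hypothetical, and the code covers one spatial dimension only.

```python
def documented_output_size(length, kernel, stride, pad, ceil_mode):
    """Output length from the plain formula, rounding up when ceil_mode is set."""
    numerator = length + 2 * pad - kernel
    if ceil_mode:
        return -(-numerator // stride) + 1  # ceiling division
    return numerator // stride + 1


def pytorch_like_output_size(length, kernel, stride, pad, ceil_mode):
    """Same formula, but the last window must start in the input or left padding."""
    out = documented_output_size(length, kernel, stride, pad, ceil_mode)
    if ceil_mode and (out - 1) * stride >= length + pad:
        out -= 1  # the last window would start inside the right padding
    return out


# length=3, kernel=2, stride=2, pad=1: the two rules disagree.
print(documented_output_size(3, 2, 2, 1, ceil_mode=True))
print(pytorch_like_output_size(3, 2, 2, 1, ceil_mode=True))

# length=5, kernel=2, stride=2, pad=0: the last window runs off-bound but is kept.
print(pytorch_like_output_size(5, 2, 2, 0, ceil_mode=True))
```

A difference of one output element in such cases is enough to make exported models disagree with PyTorch in shape, not only in values.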

A comprehensive test for all combinations of parameters can be found in the next PR, #87893.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87892

Approved by: https://github.com/BowenBao

The `_cast_` family of symbolic functions has been created from a template function. Even though it saved some lines, it very much obscured the intention of the code. Since the list doesn't really change and the `_cast_` family are IIRC deprecated, it is safe for us to expand the templates and make the code more readable.

This PR also removes any direct calls to `_cast_` functions to maintain a consistent pattern of directly creating `Cast` nodes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87666

Approved by: https://github.com/BowenBao

According to #38248, quantized::conv1d_relu shares packing parameters with Conv2D (kspatialDim is also 2) and needs to be unpacked differently. Therefore, a new `QuantizedParamsType=Conv1D` is used to differentiate the two, and the 1D information has to be extracted from the 2D packed parameters.
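As a rough illustration of extracting 1D information from 2D packed parameters, assume the 1D convolution was packed as a 2D one by inserting a dummy spatial dimension of size 1 in front of the real one, so that a stride `[s]` is stored as `[1, s]` and a weight of shape `[out, in, k]` as `[out, in, 1, k]`. That layout is an assumption made for this sketch, and the helper name is hypothetical; the unpacking code in the PR may differ.

```python
from typing import Dict, List


def conv2d_packed_to_conv1d(params: Dict[str, List[int]]) -> Dict[str, List[int]]:
    """Drop the assumed dummy spatial dimension from 2D-packed conv parameters."""
    weight_shape = params["weight_shape"]
    return {
        # [out, in, 1, k] -> [out, in, k]
        "weight_shape": weight_shape[:2] + weight_shape[3:],
        # [1, s] -> [s], and likewise for padding and dilation
        "stride": params["stride"][1:],
        "padding": params["padding"][1:],
        "dilation": params["dilation"][1:],
    }


packed = {
    "weight_shape": [16, 8, 1, 3],
    "stride": [1, 2],
    "padding": [0, 1],
    "dilation": [1, 1],
}
print(conv2d_packed_to_conv1d(packed))
```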

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85997

Approved by: https://github.com/BowenBao

The deprecation messages in SymbolicContext will be emitted every time it is initialized. Since we already emit deprecation messages at registration time, the deprecation decorator can be removed in `__init__` to reduce noise.
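The difference in noise can be sketched with the standard `warnings` module. Both `NoisyContext` and `register` below are hypothetical stand-ins, not the actual `SymbolicContext` or registration code: one warns on every construction, the other warns once when a function is registered.

```python
import warnings


class NoisyContext:
    """Hypothetical class that emits a deprecation warning on every construction."""

    def __init__(self):
        warnings.warn("NoisyContext is deprecated", DeprecationWarning, stacklevel=2)


def register(fn):
    """Hypothetical registration hook that warns once, when `fn` is registered."""
    warnings.warn(f"{fn.__name__} uses a deprecated API", DeprecationWarning, stacklevel=2)
    return fn


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")  # record every warning, without de-duplication
    for _ in range(3):
        NoisyContext()
    per_init = len(caught)

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")

    @register
    def symbolic_fn():
        pass

    for _ in range(3):
        symbolic_fn()  # calling the registered function does not warn again
    per_registration = len(caught)

print(per_init, per_registration)
```

Warning at registration time keeps the message count independent of how often the object is created during export.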

Pull Request resolved: https://github.com/pytorch/pytorch/pull/86065

Approved by: https://github.com/BowenBao

When aten fallback is true, `_layer_norm_returns_normalized_input_mean_rstd` can return a single value.

- Removed `_layer_norm_returns_normalized_input_mean_rstd` and made `layer_norm` call `native_layer_norm`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85979

Approved by: https://github.com/BowenBao

Separated from #85651 to highlight the type annotation changes. It should support all type annotations needed by SARIF, except for dictionary types that are only described verbally, like the following example. For now such a field is annotated as `Any`. To enable it, we will need to extend the `jschema_to_python` tool to allow passing in type hints.

```json
"messageStrings": {
  "description": "A set of name/value pairs with arbitrary names. Each value is a multiformatMessageString object, which holds message strings in plain text and (optionally) Markdown format. The strings can include placeholders, which can be used to construct a message in combination with an arbitrary number of additional string arguments.",
  "type": "object",
  "additionalProperties": {
    "$ref": "#/definitions/multiformatMessageString"
  }
},
```
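To make the gap concrete, the sketch below contrasts the `Any` annotation with the dictionary annotation that the schema describes in words. The class names `DescriptorLoose` and `DescriptorTyped` are hypothetical, and the code is hand-written for illustration rather than output of `jschema_to_python`.

```python
import dataclasses
from typing import Any, Dict, Optional


@dataclasses.dataclass
class MultiformatMessageString:
    """Message in plain text and, optionally, Markdown."""

    text: str
    markdown: Optional[str] = None


@dataclasses.dataclass
class DescriptorLoose:
    # Current state: the dictionary type is only described verbally, so `Any` is used.
    message_strings: Any = None


@dataclasses.dataclass
class DescriptorTyped:
    # What passing type hints to the generator could produce instead.
    message_strings: Optional[Dict[str, MultiformatMessageString]] = None


typed = DescriptorTyped(
    message_strings={"default": MultiformatMessageString(text="Something went wrong.")}
)
print(typed.message_strings["default"].text)
```

With the typed variant, a static type checker can flag a value that is not a `MultiformatMessageString`, which the `Any` annotation cannot do.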

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85898

Approved by: https://github.com/justinchuby, https://github.com/abock, https://github.com/thiagocrepaldi