# Summary

This PR introduces a new Tensor subclass that is designed to be used with torch.nn.functional.scaled_dot_product_attention. Currently we have a boolean `is_causal` flag that allows users to do do causal masking without the need to actually create the "realized" attention bias and pass into sdpa. We originally added this flag since there is native support in both fused kernels we support. This provides a big performance gain ( the kernels only need to iterate over ~0.5x the sequence, and for very large sequence lengths this can provide vary large memory improvements.

The flag was introduced when the early on in the kernel development and at the time it was implicitly meant to "upper_left" causal attention. This distinction only matters when the attention_bias is not square. For a more detailed break down see: https://github.com/pytorch/pytorch/issues/108108. The kernels default behavior has since changed, largely due to the rise of autogressive text generation. And unfortunately this would lead to a BC break. In the long term it may actually be beneficial to change the default meaning of `is_causal` to represent lower_right causal masking.

The larger theme though is laid here: https://github.com/pytorch/pytorch/issues/110681. The thesis being that there is alot of innovation in SDPA revolving around the attention_bias being used. This is the first in hopefully a few more attention_biases that we would like to add. The next interesting one would be `sliding_window` which is used by the popular mistral model family.

Results from benchmarking, I improved the meff_attention perf hence the slightly decreased max perf.

```Shell

+---------+--------------------+------------+-----------+-----------+-----------+-----------+----------------+----------+

| Type | Speedup | batch_size | num_heads | q_seq_len | k_seq_len | embed_dim | dtype | head_dim |

+---------+--------------------+------------+-----------+-----------+-----------+-----------+----------------+----------+

| Average | 1.2388050062214226 | | | | | | | |

| Max | 1.831672915579016 | 128 | 32 | 1024 | 2048 | 2048 | torch.bfloat16 | 64 |

| Min | 0.9430534166730135 | 1 | 16 | 256 | 416 | 2048 | torch.bfloat16 | 128 |

+---------+--------------------+------------+-----------+-----------+-----------+-----------+----------------+----------+

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/114823

Approved by: https://github.com/cpuhrsch

# Summary

- Adds type hinting support for SDPA

- Updates the documentation adding warnings and notes on the context manager

- Adds scaled_dot_product_attention to the non-linear activation function section of nn.functional docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94008

Approved by: https://github.com/cpuhrsch

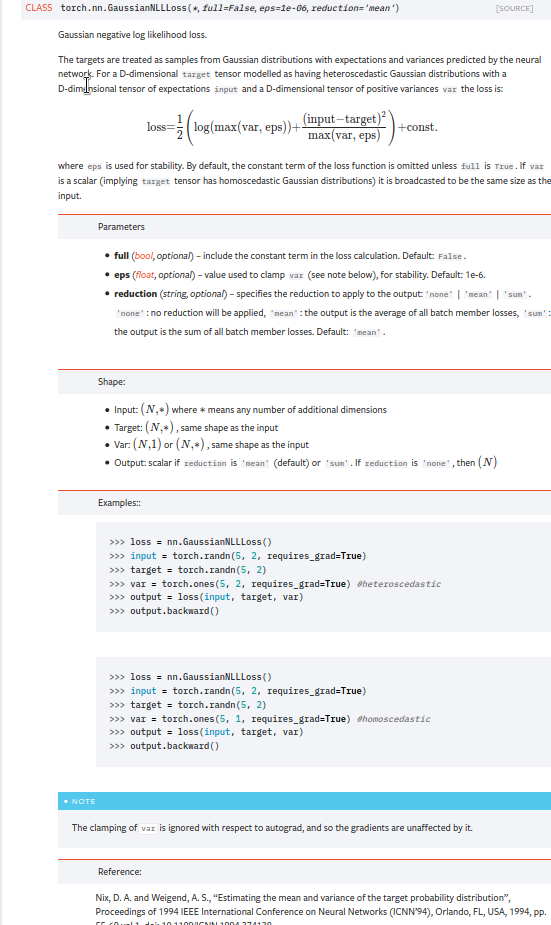

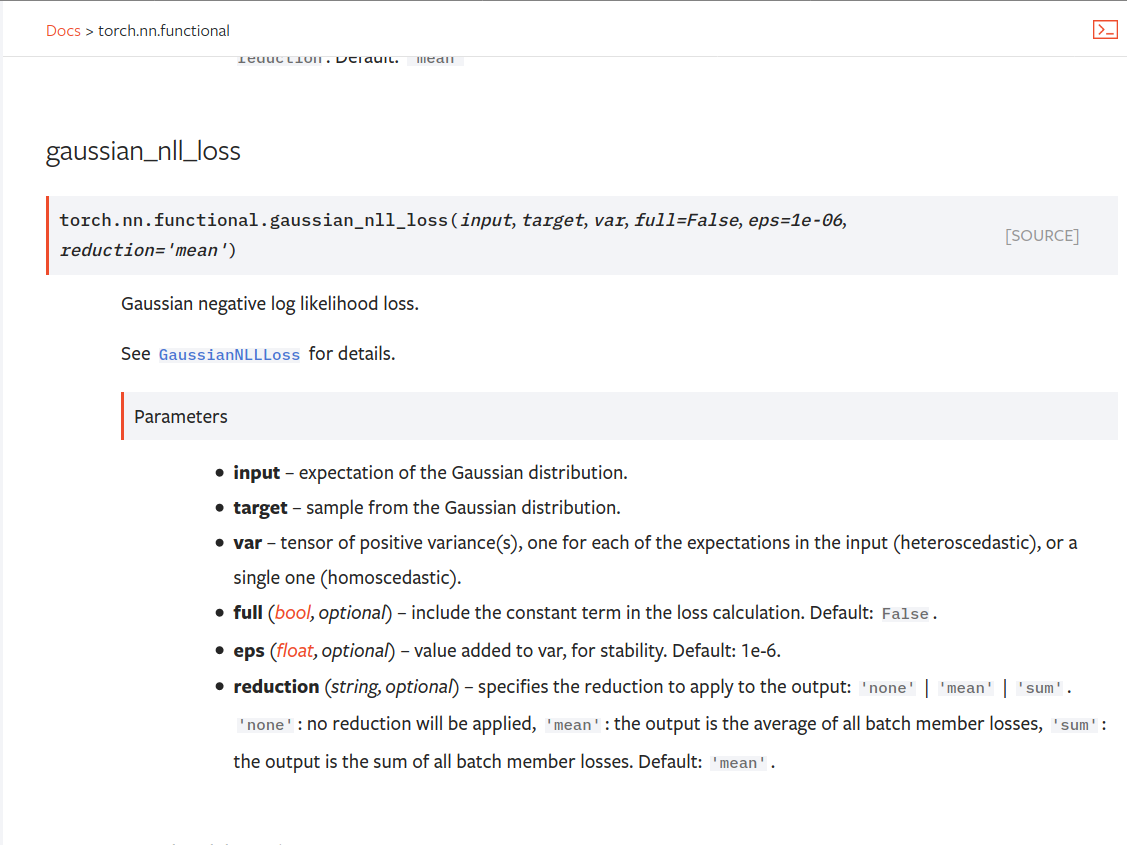

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>. This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>.`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Splits torch.nn.functional into a table-of-contents page and many sub-pages, one for each function

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55038

Reviewed By: gchanan

Differential Revision: D27502677

Pulled By: zou3519

fbshipit-source-id: 38e450a0fee41c901eb56f94aee8a32f4eefc807

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43680

As discussed [here](https://github.com/pytorch/pytorch/issues/43342),

adding in a Python-only implementation of the triplet-margin loss that takes a

custom distance function. Still discussing whether this is necessary to add to

PyTorch Core.

Test Plan:

python test/run_tests.py

Imported from OSS

Reviewed By: albanD

Differential Revision: D23363898

fbshipit-source-id: 1cafc05abecdbe7812b41deaa1e50ea11239d0cb

Summary:

solves most of gh-38011 in the framework of solving gh-32703.

These should only be formatting fixes, I did not try to fix grammer and syntax.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41068

Differential Revision: D22411919

Pulled By: zou3519

fbshipit-source-id: 25780316b6da2cfb4028ea8a6f649bb18b746440

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/38120

Test Plan: build docs locally and attach a screenshot to this PR.

Differential Revision: D21477815

Pulled By: zou3519

fbshipit-source-id: 420bbcfcbd191d1a8e33cdf4a90c95bf00a5d226