Ryan Spring

23d03025dc

Implement Tanh Gelu Approximation ( #61439 )

...

Summary:

1. Implements https://github.com/pytorch/pytorch/issues/39853

2. Adds approximate boolean flag to Gelu

3. Enables Tanh Gelu approximation

4. Adds double backward support for Gelu

5. Enable Tanh Gelu in NvFuser

```

def gelu(x, approximate : str = 'none'):

if approximate == 'tanh':

# sqrt(2/pi) = 0.7978845608028654

return 0.5 * x * (1.0 + torch.tanh(0.7978845608028654 * (x + 0.044715 * torch.pow(x, 3.0))))

else:

return x * normcdf(x)

```

Linking XLA PR - https://github.com/pytorch/xla/pull/3039

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61439

Reviewed By: cpuhrsch

Differential Revision: D33850228

Pulled By: jbschlosser

fbshipit-source-id: 3cc33fb298e480d7ecc5c67716da019d60c6ab33

(cherry picked from commit 3a53b3e94f

2022-01-31 17:07:45 +00:00

vfdev

63429bf4b3

Removed JIT FC tweaks for interpolation options ( #71937 )

...

Summary:

Description:

- Removed JIT FC tweaks for interpolation options : nearest-exact and antialiasing

They were added in

- https://github.com/pytorch/pytorch/pull/64501 (Sept 04 2021)

- https://github.com/pytorch/pytorch/pull/65142 (Sept 16 2021)

cc jbschlosser

Pull Request resolved: https://github.com/pytorch/pytorch/pull/71937

Reviewed By: mrshenli

Differential Revision: D33845502

Pulled By: jbschlosser

fbshipit-source-id: 8a94454fd643cd2aef21b06689f72a0f16620d30

(cherry picked from commit b21173d64c

2022-01-28 19:56:59 +00:00

Joel Schlosser

cb823d9f07

Revert D33744717: [pytorch][PR] Implement Tanh Gelu Approximation

...

Test Plan: revert-hammer

Differential Revision:

D33744717 (f499ab9ceff499ab9cefe9fb2d1db1

2022-01-28 18:35:01 +00:00

Ryan Spring

f499ab9cef

Implement Tanh Gelu Approximation ( #61439 )

...

Summary:

1. Implements https://github.com/pytorch/pytorch/issues/39853

2. Adds approximate boolean flag to Gelu

3. Enables Tanh Gelu approximation

4. Adds double backward support for Gelu

5. Enable Tanh Gelu in NvFuser

```

def gelu(x, approximate : str = 'none'):

if approximate == 'tanh':

# sqrt(2/pi) = 0.7978845608028654

return 0.5 * x * (1.0 + torch.tanh(0.7978845608028654 * (x + 0.044715 * torch.pow(x, 3.0))))

else:

return x * normcdf(x)

```

Linking XLA PR - https://github.com/pytorch/xla/pull/3039

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61439

Reviewed By: mikaylagawarecki

Differential Revision: D33744717

Pulled By: jbschlosser

fbshipit-source-id: d64532a562ed53247bb4fa52bb16722634d5c187

(cherry picked from commit 4713dd9cca

2022-01-28 16:59:09 +00:00

kshitij12345

2981534f54

[nn] cross_entropy: no batch dim support ( #71055 )

...

Summary:

Reference: https://github.com/pytorch/pytorch/issues/60585

cc albanD mruberry jbschlosser walterddr kshitij12345

Pull Request resolved: https://github.com/pytorch/pytorch/pull/71055

Reviewed By: anjali411

Differential Revision: D33567403

Pulled By: jbschlosser

fbshipit-source-id: 4d0a311ad7419387c4547e43e533840c8b6d09d8

2022-01-13 14:48:51 -08:00

George Qi

d7db5fb462

ctc loss no batch dim support ( #70092 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/70092

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D33280068

Pulled By: george-qi

fbshipit-source-id: 3278fb2d745a396fe27c00fb5f40df0e7f584f81

2022-01-07 14:33:22 -08:00

Joel Schlosser

e6befbe85c

Add flag to optionally average output attention weights across heads ( #70055 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/47583

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70055

Reviewed By: bhosmer

Differential Revision: D33457866

Pulled By: jbschlosser

fbshipit-source-id: 17746b3668b0148c1e1ed8333227b7c42f1e3bf5

2022-01-06 17:32:37 -08:00

kshitij12345

1aa98c7540

[docs] multi_head_attention_forward no-batch dim support ( #70590 )

...

Summary:

no batch dim support added in https://github.com/pytorch/pytorch/issues/67176

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70590

Reviewed By: VitalyFedyunin

Differential Revision: D33405283

Pulled By: jbschlosser

fbshipit-source-id: 86217d7d540184fd12f3a9096605d2b1e9aa313e

2022-01-05 08:26:25 -08:00

vfdev

d2abf3f981

Added antialias flag to interpolate (CPU only, bicubic) ( #68819 )

...

Summary:

Description:

- Added antialias flag to interpolate (CPU only)

- forward and backward for bicubic mode

- added tests

Previous PR for bilinear, https://github.com/pytorch/pytorch/pull/65142

### Benchmarks

<details>

<summary>

Forward pass, CPU. PTH interpolation vs PIL

</summary>

Cases:

- PTH RGB 3 Channels, float32 vs PIL RGB uint8 (apples vs pears)

- PTH 1 Channel, float32 vs PIL 1 Channel Float

Code: https://gist.github.com/vfdev-5/b173761a567f2283b3c649c3c0574112

```

Torch config: PyTorch built with:

- GCC 9.3

- C++ Version: 201402

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- CPU capability usage: AVX2

- CUDA Runtime 11.1

- NVCC architecture flags: -gencode;arch=compute_61,code=sm_61

- CuDNN 8.0.5

- Build settings: BUILD_TYPE=Release, CUDA_VERSION=11.1, CUDNN_VERSION=8.0.5, CXX_COMPILER=/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_PYTORCH_QNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=1, USE_CUDNN=1, USE_EIGEN_FOR_BLAS=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=OFF, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=0, USE_OPENMP=ON, USE_ROCM=OFF,

Num threads: 1

[------------------- Downsampling (bicubic): torch.Size([1, 3, 906, 438]) -> (320, 196) -------------------]

| Reference, PIL 8.4.0, mode: RGB | 1.11.0a0+gitb0bdf58

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 4.5 | 5.2

channels_last non-contiguous torch.float32 | 4.5 | 5.3

Times are in milliseconds (ms).

[------------------- Downsampling (bicubic): torch.Size([1, 3, 906, 438]) -> (460, 220) -------------------]

| Reference, PIL 8.4.0, mode: RGB | 1.11.0a0+gitb0bdf58

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 5.7 | 6.4

channels_last non-contiguous torch.float32 | 5.7 | 6.4

Times are in milliseconds (ms).

[------------------- Downsampling (bicubic): torch.Size([1, 3, 906, 438]) -> (120, 96) --------------------]

| Reference, PIL 8.4.0, mode: RGB | 1.11.0a0+gitb0bdf58

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 3.0 | 4.0

channels_last non-contiguous torch.float32 | 2.9 | 4.1

Times are in milliseconds (ms).

[------------------ Downsampling (bicubic): torch.Size([1, 3, 906, 438]) -> (1200, 196) -------------------]

| Reference, PIL 8.4.0, mode: RGB | 1.11.0a0+gitb0bdf58

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 14.7 | 17.1

channels_last non-contiguous torch.float32 | 14.8 | 17.2

Times are in milliseconds (ms).

[------------------ Downsampling (bicubic): torch.Size([1, 3, 906, 438]) -> (120, 1200) -------------------]

| Reference, PIL 8.4.0, mode: RGB | 1.11.0a0+gitb0bdf58

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 3.5 | 3.9

channels_last non-contiguous torch.float32 | 3.5 | 3.9

Times are in milliseconds (ms).

[---------- Downsampling (bicubic): torch.Size([1, 1, 906, 438]) -> (320, 196) ---------]

| Reference, PIL 8.4.0, mode: F | 1.11.0a0+gitb0bdf58

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 2.4 | 1.8

Times are in milliseconds (ms).

[---------- Downsampling (bicubic): torch.Size([1, 1, 906, 438]) -> (460, 220) ---------]

| Reference, PIL 8.4.0, mode: F | 1.11.0a0+gitb0bdf58

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 3.1 | 2.2

Times are in milliseconds (ms).

[---------- Downsampling (bicubic): torch.Size([1, 1, 906, 438]) -> (120, 96) ----------]

| Reference, PIL 8.4.0, mode: F | 1.11.0a0+gitb0bdf58

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 1.6 | 1.4

Times are in milliseconds (ms).

[--------- Downsampling (bicubic): torch.Size([1, 1, 906, 438]) -> (1200, 196) ---------]

| Reference, PIL 8.4.0, mode: F | 1.11.0a0+gitb0bdf58

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 7.9 | 5.7

Times are in milliseconds (ms).

[--------- Downsampling (bicubic): torch.Size([1, 1, 906, 438]) -> (120, 1200) ---------]

| Reference, PIL 8.4.0, mode: F | 1.11.0a0+gitb0bdf58

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 1.7 | 1.3

Times are in milliseconds (ms).

```

</details>

Code is moved from torchvision: https://github.com/pytorch/vision/pull/3810 and https://github.com/pytorch/vision/pull/4208

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68819

Reviewed By: mikaylagawarecki

Differential Revision: D33339117

Pulled By: jbschlosser

fbshipit-source-id: 6a0443bbba5439f52c7dbc1be819b75634cf67c4

2021-12-29 14:04:43 -08:00

srijan789

73b5b6792f

Adds reduction args to signature of F.multilabel_soft_margin_loss docs ( #70420 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/70301

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70420

Reviewed By: gchanan

Differential Revision: D33336924

Pulled By: jbschlosser

fbshipit-source-id: 18189611b3fc1738900312efe521884bced42666

2021-12-28 09:48:05 -08:00

George Qi

7c690ef1c2

FractionalMaxPool3d with no_batch_dim support ( #69732 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/69732

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D33280090

Pulled By: george-qi

fbshipit-source-id: aaf90a372b6d80da0554bad28d56436676f9cb89

2021-12-22 14:30:32 -08:00

rohitgr7

78bea1bb66

update example in classification losses ( #69816 )

...

Summary:

Just updated a few examples that were either failing or raising deprecated warnings.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69816

Reviewed By: bdhirsh

Differential Revision: D33217585

Pulled By: albanD

fbshipit-source-id: c6804909be74585c8471b8166b69e6693ad62ca7

2021-12-21 02:46:48 -08:00

kshitij12345

e8d5c7cf7f

[nn] mha : no-batch-dim support (python) ( #67176 )

...

Summary:

Reference: https://github.com/pytorch/pytorch/issues/60585

* [x] Update docs

* [x] Tests for shape checking

Tests take roughly 20s on system that I use. Below is the timings for slowest 20 tests.

```

pytest test/test_modules.py -k _multih --durations=20

============================================================================================== test session starts ===============================================================================================

platform linux -- Python 3.10.0, pytest-6.2.5, py-1.10.0, pluggy-1.0.0

rootdir: /home/kshiteej/Pytorch/pytorch_no_batch_mha, configfile: pytest.ini

plugins: hypothesis-6.23.2, repeat-0.9.1

collected 372 items / 336 deselected / 36 selected

test/test_modules.py ..............ssssssss.............. [100%]

================================================================================================ warnings summary ================================================================================================

../../.conda/envs/pytorch-cuda-dev/lib/python3.10/site-packages/torch/backends/cudnn/__init__.py:73

test/test_modules.py::TestModuleCUDA::test_factory_kwargs_nn_MultiheadAttention_cuda_float32

/home/kshiteej/.conda/envs/pytorch-cuda-dev/lib/python3.10/site-packages/torch/backends/cudnn/__init__.py:73: UserWarning: PyTorch was compiled without cuDNN/MIOpen support. To use cuDNN/MIOpen, rebuild PyTorch making sure the library is visible to the build system.

warnings.warn(

-- Docs: https://docs.pytest.org/en/stable/warnings.html

============================================================================================== slowest 20 durations ==============================================================================================

8.66s call test/test_modules.py::TestModuleCUDA::test_gradgrad_nn_MultiheadAttention_cuda_float64

2.02s call test/test_modules.py::TestModuleCPU::test_gradgrad_nn_MultiheadAttention_cpu_float64

1.89s call test/test_modules.py::TestModuleCUDA::test_grad_nn_MultiheadAttention_cuda_float64

1.01s call test/test_modules.py::TestModuleCUDA::test_factory_kwargs_nn_MultiheadAttention_cuda_float32

0.51s call test/test_modules.py::TestModuleCPU::test_grad_nn_MultiheadAttention_cpu_float64

0.46s call test/test_modules.py::TestModuleCUDA::test_forward_nn_MultiheadAttention_cuda_float32

0.45s call test/test_modules.py::TestModuleCUDA::test_non_contiguous_tensors_nn_MultiheadAttention_cuda_float64

0.44s call test/test_modules.py::TestModuleCUDA::test_non_contiguous_tensors_nn_MultiheadAttention_cuda_float32

0.21s call test/test_modules.py::TestModuleCUDA::test_pickle_nn_MultiheadAttention_cuda_float64

0.21s call test/test_modules.py::TestModuleCUDA::test_pickle_nn_MultiheadAttention_cuda_float32

0.18s call test/test_modules.py::TestModuleCUDA::test_forward_nn_MultiheadAttention_cuda_float64

0.17s call test/test_modules.py::TestModuleCPU::test_non_contiguous_tensors_nn_MultiheadAttention_cpu_float32

0.16s call test/test_modules.py::TestModuleCPU::test_non_contiguous_tensors_nn_MultiheadAttention_cpu_float64

0.11s call test/test_modules.py::TestModuleCUDA::test_factory_kwargs_nn_MultiheadAttention_cuda_float64

0.08s call test/test_modules.py::TestModuleCPU::test_pickle_nn_MultiheadAttention_cpu_float32

0.08s call test/test_modules.py::TestModuleCPU::test_pickle_nn_MultiheadAttention_cpu_float64

0.06s call test/test_modules.py::TestModuleCUDA::test_repr_nn_MultiheadAttention_cuda_float64

0.06s call test/test_modules.py::TestModuleCUDA::test_repr_nn_MultiheadAttention_cuda_float32

0.06s call test/test_modules.py::TestModuleCPU::test_forward_nn_MultiheadAttention_cpu_float32

0.06s call test/test_modules.py::TestModuleCPU::test_forward_nn_MultiheadAttention_cpu_float64

============================================================================================ short test summary info =============================================================================================

=========================================================================== 28 passed, 8 skipped, 336 deselected, 2 warnings in 19.71s ===========================================================================

```

cc albanD mruberry jbschlosser walterddr

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67176

Reviewed By: dagitses

Differential Revision: D33094285

Pulled By: jbschlosser

fbshipit-source-id: 0dd08261b8a457bf8bad5c7f3f6ded14b0beaf0d

2021-12-14 13:21:21 -08:00

Pearu Peterson

48771d1c7f

[BC-breaking] Change dtype of softmax to support TorchScript and MyPy ( #68336 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/68336

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D32470965

Pulled By: cpuhrsch

fbshipit-source-id: 254b62db155321e6a139bda9600722c948f946d3

2021-11-18 11:26:14 -08:00

Richard Zou

f9ef807f4d

Replace empty with new_empty in nn.functional.pad ( #68565 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68565

This makes it so that we can now vmap over nn.functional.pad (circular

variant). Previously we could not because we were effectively doing

`out.copy_(input)` where the out was created with empty.

This also has the added side effect of cleaning up the code.

Test Plan:

- I tested this using functorch.vmap and can confirm that vmap now

works.

- Unfortunately this doesn't work with the vmap in core so I cannot add

a test for this here.

Reviewed By: albanD

Differential Revision: D32520188

Pulled By: zou3519

fbshipit-source-id: 780a7e8207d7c45fcba645730a5803733ebfd7be

2021-11-18 06:06:50 -08:00

vfdev-5

3da2e09c9b

Added antialias flag to interpolate (CPU only, bilinear) ( #65142 )

...

Summary:

Description:

- Added antialias flag to interpolate (CPU only)

- forward and backward for bilinear mode

- added tests

### Benchmarks

<details>

<summary>

Forward pass, CPU. PTH interpolation vs PIL

</summary>

Cases:

- PTH RGB 3 Channels, float32 vs PIL RGB uint8 (apply vs pears)

- PTH 1 Channel, float32 vs PIL 1 Channel Float

Code: https://gist.github.com/vfdev-5/b173761a567f2283b3c649c3c0574112

```

# OMP_NUM_THREADS=1 python bench_interp_aa_vs_pillow.py

Torch config: PyTorch built with:

- GCC 9.3

- C++ Version: 201402

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- CPU capability usage: AVX2

- CUDA Runtime 11.1

- NVCC architecture flags: -gencode;arch=compute_75,code=sm_75

- CuDNN 8.0.5

- Build settings: BUILD_TYPE=Release, CUDA_VERSION=11.1, CUDNN_VERSION=8.0.5, CXX_COMPILER=/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_PYTORCH_QNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-variable -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.10.0, USE_CUDA=1, USE_CUDNN=1, USE_EIGEN_FOR_BLAS=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=OFF, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=0, USE_OPENMP=ON,

Num threads: 1

[------------------------ Downsampling: torch.Size([1, 3, 906, 438]) -> (320, 196) ------------------------]

| Reference, PIL 8.3.2, mode: RGB | 1.10.0a0+git1e87d91

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 2.9 | 3.1

channels_last non-contiguous torch.float32 | 2.6 | 3.6

Times are in milliseconds (ms).

[------------------------ Downsampling: torch.Size([1, 3, 906, 438]) -> (460, 220) ------------------------]

| Reference, PIL 8.3.2, mode: RGB | 1.10.0a0+git1e87d91

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 3.4 | 4.0

channels_last non-contiguous torch.float32 | 3.4 | 4.8

Times are in milliseconds (ms).

[------------------------ Downsampling: torch.Size([1, 3, 906, 438]) -> (120, 96) -------------------------]

| Reference, PIL 8.3.2, mode: RGB | 1.10.0a0+git1e87d91

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 1.6 | 1.8

channels_last non-contiguous torch.float32 | 1.6 | 1.9

Times are in milliseconds (ms).

[----------------------- Downsampling: torch.Size([1, 3, 906, 438]) -> (1200, 196) ------------------------]

| Reference, PIL 8.3.2, mode: RGB | 1.10.0a0+git1e87d91

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 9.0 | 11.3

channels_last non-contiguous torch.float32 | 8.9 | 12.5

Times are in milliseconds (ms).

[----------------------- Downsampling: torch.Size([1, 3, 906, 438]) -> (120, 1200) ------------------------]

| Reference, PIL 8.3.2, mode: RGB | 1.10.0a0+git1e87d91

1 threads: -------------------------------------------------------------------------------------------------

channels_first contiguous torch.float32 | 2.1 | 1.8

channels_last non-contiguous torch.float32 | 2.1 | 3.4

Times are in milliseconds (ms).

[--------------- Downsampling: torch.Size([1, 1, 906, 438]) -> (320, 196) --------------]

| Reference, PIL 8.3.2, mode: F | 1.10.0a0+git1e87d91

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 1.2 | 1.0

Times are in milliseconds (ms).

[--------------- Downsampling: torch.Size([1, 1, 906, 438]) -> (460, 220) --------------]

| Reference, PIL 8.3.2, mode: F | 1.10.0a0+git1e87d91

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 1.4 | 1.3

Times are in milliseconds (ms).

[--------------- Downsampling: torch.Size([1, 1, 906, 438]) -> (120, 96) ---------------]

| Reference, PIL 8.3.2, mode: F | 1.10.0a0+git1e87d91

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 719.9 | 599.9

Times are in microseconds (us).

[-------------- Downsampling: torch.Size([1, 1, 906, 438]) -> (1200, 196) --------------]

| Reference, PIL 8.3.2, mode: F | 1.10.0a0+git1e87d91

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 3.7 | 3.5

Times are in milliseconds (ms).

[-------------- Downsampling: torch.Size([1, 1, 906, 438]) -> (120, 1200) --------------]

| Reference, PIL 8.3.2, mode: F | 1.10.0a0+git1e87d91

1 threads: ------------------------------------------------------------------------------

contiguous torch.float32 | 834.4 | 605.7

Times are in microseconds (us).

```

</details>

Code is moved from torchvision: https://github.com/pytorch/vision/pull/4208

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65142

Reviewed By: mrshenli

Differential Revision: D32432405

Pulled By: jbschlosser

fbshipit-source-id: b66c548347f257c522c36105868532e8bc1d4c6d

2021-11-17 09:10:15 -08:00

vfdev-5

6adbe044e3

Added nearest-exact interpolation mode ( #64501 )

...

Summary:

Added "nearest-exact" interpolation mode to fix the issues: https://github.com/pytorch/pytorch/issues/34808 and https://github.com/pytorch/pytorch/issues/62237 .

Description:

As we can not fix "nearest" mode without large impact on already trained model [it was suggested](https://github.com/pytorch/pytorch/pull/64501#pullrequestreview-749771815 ) to introduce new mode instead of fixing exising "nearest" mode.

- New mode "nearest-exact" performs index computation for nearest interpolation to match scikit-image, pillow, TF2 and while "nearest" mode still match opencv INTER_NEAREST, which appears to be buggy, see https://ppwwyyxx.com/blog/2021/Where-are-Pixels/#Libraries .

"nearest":

```

input_index_f32 = output_index * scale

input_index = floor(input_index_f32)

```

"nearest-exact"

```

input_index_f32 = (output_index + 0.5) * scale - 0.5

input_index = round(input_index_f32)

```

Comparisions with other libs: https://gist.github.com/vfdev-5/a5bd5b1477b1c82a87a0f9e25c727664

PyTorch version | 1.9.0 "nearest" | this PR "nearest" | this PR "nearest-exact"

---|---|---|---

Resize option: | |

OpenCV INTER_NEAREST result mismatches | 0 | 0 | 10

OpenCV INTER_NEAREST_EXACT result mismatches | 9 | 9 | 9

Scikit-Image result mismatches | 10 | 10 | 0

Pillow result mismatches | 10 | 10 | 7

TensorFlow result mismatches | 10 | 10 | 0

Rescale option: | | |

size mismatches (https://github.com/pytorch/pytorch/issues/62396 ) | 10 | 10 | 10

OpenCV INTER_NEAREST result mismatches | 3 | 3| 5

OpenCV INTER_NEAREST_EXACT result mismatches | 3 | 3| 4

Scikit-Image result mismatches | 4 | 4 | 0

Scipy result mismatches | 4 | 4 | 0

TensorFlow: no such option | - | -

Versions:

```

skimage: 0.19.0.dev0

opencv: 4.5.4-dev

scipy: 1.7.2

Pillow: 8.4.0

TensorFlow: 2.7.0

```

Implementations in other libs:

- Pillow:

- ee079ae67e/src/libImaging/Geometry.c (L889-L899)ee079ae67e/src/libImaging/Geometry.c (L11)38fae50c3f/skimage/transform/_warps.py (L180-L188)47bb6febaa/scipy/ndimage/src/ni_interpolation.c (L775-L779)47bb6febaa/scipy/ndimage/src/ni_interpolation.c (L479)https://github.com/pytorch/pytorch/pull/64501

Reviewed By: anjali411

Differential Revision: D32361901

Pulled By: jbschlosser

fbshipit-source-id: df906f4d25a2b2180e1942ffbab2cc14600aeed2

2021-11-15 14:28:19 -08:00

Junjie Wang

301369a774

[PyTorch][Fix] Pass the arguments of embedding as named arguments ( #67574 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67574

When adding the optional params for sharded embedding op. Found that we cannot get these params from `__torch_function__` override. The reason is that we don't pass them via keyword arguments. So maybe we want to change them to kwargs?

ghstack-source-id: 143029375

Test Plan: CI

Reviewed By: albanD

Differential Revision: D32039152

fbshipit-source-id: c7e598e49eddbabff6e11e3f8cb0818f57c839f6

2021-11-11 15:22:10 -08:00

Kushashwa Ravi Shrimali

9e7b314318

OpInfo for nn.functional.conv1d ( #67747 )

...

Summary:

This PR adds OpInfo for `nn.functional.conv1d`. There is a minor typo fix in the documentation as well.

Issue tracker: https://github.com/pytorch/pytorch/issues/54261

cc: mruberry

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67747

Reviewed By: malfet

Differential Revision: D32309258

Pulled By: mruberry

fbshipit-source-id: add21911b8ae44413e033e19398f398210737c6c

2021-11-11 09:23:04 -08:00

Natalia Gimelshein

8dfbc620d4

don't hardcode mask type in mha ( #68077 )

...

Summary:

Fixes #{issue number}

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68077

Reviewed By: zou3519

Differential Revision: D32292410

Pulled By: ngimel

fbshipit-source-id: 67213cf5474dc3f83e90e28cf5a823abb683a6f9

2021-11-10 09:41:21 -08:00

vfdev-5

49bf24fc83

Updated error message for nn.functional.interpolate ( #66417 )

...

Summary:

Description:

- Updated error message for nn.functional.interpolate

Fixes https://github.com/pytorch/pytorch/issues/63845

cc vadimkantorov

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66417

Reviewed By: albanD

Differential Revision: D31924761

Pulled By: jbschlosser

fbshipit-source-id: ca74c77ac34b4f644aa10440b77b3fcbe4e770ac

2021-10-26 10:33:24 -07:00

kshitij12345

828a9dcc04

[nn] MarginRankingLoss : no batch dim ( #64975 )

...

Summary:

Reference: https://github.com/pytorch/pytorch/issues/60585

cc albanD mruberry jbschlosser walterddr

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64975

Reviewed By: albanD

Differential Revision: D31906528

Pulled By: jbschlosser

fbshipit-source-id: 1127242a859085b1e06a4b71be19ad55049b38ba

2021-10-26 09:03:31 -07:00

Mikayla Gawarecki

5569d5824c

Fix documentation of arguments for torch.nn.functional.Linear ( #66884 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66884

Addressing docs fix mentioned in issue 64978 on Github

ghstack-source-id: 141093449

Test Plan: https://pxl.cl/1Rxkz

Reviewed By: anjali411

Differential Revision: D31767303

fbshipit-source-id: f1ca10fed5bb768749bce3ddc240bbce1dfb3f84

2021-10-20 12:02:58 -07:00

vfdev

62ca5a81c0

Exposed recompute_scale_factor into nn.Upsample ( #66419 )

...

Summary:

Description:

- Exposed recompute_scale_factor into nn.Upsample such that recompute_scale_factor=True option could be used

Context: https://github.com/pytorch/pytorch/pull/64501#discussion_r710205190

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66419

Reviewed By: gchanan

Differential Revision: D31731276

Pulled By: jbschlosser

fbshipit-source-id: 2118489e6f5bc1142f2a64323f4cfd095a9f3c42

2021-10-20 07:59:25 -07:00

kshitij12345

1db50505d5

[nn] MultiLabelSoftMarginLoss : no batch dim support ( #65690 )

...

Summary:

Reference: https://github.com/pytorch/pytorch/issues/60585

cc albanD mruberry jbschlosser walterddr

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65690

Reviewed By: zou3519

Differential Revision: D31731162

Pulled By: jbschlosser

fbshipit-source-id: d26f27555f78afdadd49126e0548a8bfda50cc5a

2021-10-18 15:30:01 -07:00

Kushashwa Ravi Shrimali

909694fd88

Fix nn.functional.max_poolNd dispatch (for arg: return_indices) ( #62544 )

...

Summary:

Please see https://github.com/pytorch/pytorch/issues/62545 for context.

The order of `return_indices, ceil_mode` is different for `nn.functional.max_poolNd` functions to what seen with `torch.nn.MaxPoolNd` (modular form). While this should be resolved in the future, it was decided to first raise a warning that the behavior will be changed in the future. (please see https://github.com/pytorch/pytorch/pull/62544#issuecomment-893770955 for more context)

This PR thus raises appropriate warnings and updates the documentation to show the full signature (along with a note) for `torch.nn.functional.max_poolNd` functions.

**Quick links:**

(_upstream_)

* Documentation of [`nn.functional.max_pool1d`](https://pytorch.org/docs/1.9.0/generated/torch.nn.functional.max_pool1d.html ), [`nn.functional.max_pool2d`](https://pytorch.org/docs/stable/generated/torch.nn.functional.max_pool2d.html ), and [`nn.functional.max_pool3d`](https://pytorch.org/docs/stable/generated/torch.nn.functional.max_pool3d.html ).

(_this branch_)

* Documentation of [`nn.functional.max_pool1d`](https://docs-preview.pytorch.org/62544/generated/torch.nn.functional.max_pool1d.html?highlight=max_pool1d ), [`nn.functional.max_pool2d`](https://docs-preview.pytorch.org/62544/generated/torch.nn.functional.max_pool2d.html?highlight=max_pool2d#torch.nn.functional.max_pool2d ), and [`nn.functional.max_pool3d`](https://docs-preview.pytorch.org/62544/generated/torch.nn.functional.max_pool3d.html?highlight=max_pool3d#torch.nn.functional.max_pool3d ).

cc mruberry jbschlosser

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62544

Reviewed By: gchanan

Differential Revision: D31179038

Pulled By: jbschlosser

fbshipit-source-id: 0a2c7215df9e132ce9ec51448c5b3c90bbc69030

2021-10-18 08:34:38 -07:00

Natalia Gimelshein

4a50b6c490

fix cosine similarity dimensionality check ( #66191 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/66086

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66191

Reviewed By: dagitses, malfet

Differential Revision: D31436997

Pulled By: ngimel

fbshipit-source-id: 363556eea4e1696d928ae08320d298451c286b10

2021-10-06 15:44:51 -07:00

John Clow

36485d36b6

Docathon: Add docs for nn.functional.*d_max_pool ( #63264 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63264

Adding docs to max_pool to resolve docathon issue #60904

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision: D31071491

Pulled By: Gamrix

fbshipit-source-id: f4f6ec319c62ff1dfaeed8bb6bb0464b9514a7e9

2021-09-23 11:59:50 -07:00

kshitij12345

a012216b96

[nn] Fold : no batch dim ( #64909 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/64907

Reference: https://github.com/pytorch/pytorch/issues/60585

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64909

Reviewed By: cpuhrsch, heitorschueroff

Differential Revision: D30991087

Pulled By: jbschlosser

fbshipit-source-id: 91a37e0b1d51472935ff2308719dfaca931513f3

2021-09-23 08:37:32 -07:00

Samantha Andow

c7c711bfb8

Add optional tensor arguments to ( #63967 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63435

Adds optional tensor arguments to check handling torch function checks. The only one I didn't do this for in the functional file was `multi_head_attention_forward` since that already took care of some optional tensor arguments but not others so it seemed like arguments were specifically chosen

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63967

Reviewed By: albanD

Differential Revision: D30640441

Pulled By: ezyang

fbshipit-source-id: 5ef9554d2fb6c14779f8f45542ab435fb49e5d0f

2021-08-30 19:21:26 -07:00

Thomas J. Fan

d3bcba5f85

ENH Adds label_smoothing to cross entropy loss ( #63122 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/7455

Partially resolves pytorch/vision#4281

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63122

Reviewed By: iramazanli

Differential Revision: D30586076

Pulled By: jbschlosser

fbshipit-source-id: 06afc3aa1f8b9edb07fe9ed68c58968ad1926924

2021-08-29 23:33:04 -07:00

Sameer Deshmukh

809e1e7457

Allow TransformerEncoder and TransformerDecoder to accept 0-dim batch sized tensors. ( #62800 )

...

Summary:

This issue fixes a part of https://github.com/pytorch/pytorch/issues/12013 , which is summarized concretely in https://github.com/pytorch/pytorch/issues/38115 .

This PR allows TransformerEncoder and Decoder (alongwith the inner `Layer` classes) to accept inputs with 0-dimensional batch sizes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62800

Reviewed By: VitalyFedyunin

Differential Revision: D30303240

Pulled By: jbschlosser

fbshipit-source-id: 8f8082a6f2a9f9d7ce0b22a942d286d5db62bd12

2021-08-13 16:11:57 -07:00

Thomas J. Fan

c5f3ab6982

ENH Adds no_batch_dim to FractionalMaxPool2d ( #62490 )

...

Summary:

Towards https://github.com/pytorch/pytorch/issues/60585

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62490

Reviewed By: bdhirsh

Differential Revision: D30287143

Pulled By: jbschlosser

fbshipit-source-id: 1b9dd932157f571adf3aa2c98c3c6b56ece8fa6e

2021-08-13 08:48:40 -07:00

Sameer Deshmukh

9e7b6bb69f

Allow LocalResponseNorm to accept 0 dim batch sizes ( #62801 )

...

Summary:

This issue fixes a part of https://github.com/pytorch/pytorch/issues/12013 , which is summarized concretely in https://github.com/pytorch/pytorch/issues/38115 .

This PR allows `LocalResponseNorm` to accept tensors with 0 dimensional batch size.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62801

Reviewed By: zou3519

Differential Revision: D30165282

Pulled By: jbschlosser

fbshipit-source-id: cce0b2d12dbf47dc8ed6247c267bf2f2305f858a

2021-08-10 06:54:52 -07:00

Natalia Gimelshein

e6a3154519

Allow broadcasting along non-reduction dimension for cosine similarity ( #62912 )

...

Summary:

Checks introduced by https://github.com/pytorch/pytorch/issues/58559 are too strict and disable correctly working cases that people were relying on.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62912

Reviewed By: jbschlosser

Differential Revision: D30165827

Pulled By: ngimel

fbshipit-source-id: f9229a9fc70142fe08a42fbf2d18dae12f679646

2021-08-06 19:17:04 -07:00

James Reed

5542d590d4

[EZ] Fix type of functional.pad default value ( #62095 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/62095

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D29879898

Pulled By: jamesr66a

fbshipit-source-id: 903d32eca0040f176c60ace17cadd36cd710345b

2021-08-03 17:47:20 -07:00

Joel Schlosser

a42345adee

Support for target with class probs in CrossEntropyLoss ( #61044 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/11959

Alternative approach to creating a new `CrossEntropyLossWithSoftLabels` class. This PR simply adds support for "soft targets" AKA class probabilities to the existing `CrossEntropyLoss` and `NLLLoss` classes.

Implementation is dumb and simple right now, but future work can add higher performance kernels for this case.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61044

Reviewed By: zou3519

Differential Revision: D29876894

Pulled By: jbschlosser

fbshipit-source-id: 75629abd432284e10d4640173bc1b9be3c52af00

2021-07-29 10:04:41 -07:00

Thomas J. Fan

7c588d5d00

ENH Adds no_batch_dim support for pad 2d and 3d ( #62183 )

...

Summary:

Towards https://github.com/pytorch/pytorch/issues/60585

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62183

Reviewed By: ejguan

Differential Revision: D29942250

Pulled By: jbschlosser

fbshipit-source-id: d1df4ddcb90969332dc1a2a7937e66ecf46f0443

2021-07-28 11:10:44 -07:00

Thomas J. Fan

71a6ef17a5

ENH Adds no_batch_dim tests/docs for Maxpool1d & MaxUnpool1d ( #62206 )

...

Summary:

Towards https://github.com/pytorch/pytorch/issues/60585

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62206

Reviewed By: ejguan

Differential Revision: D29942341

Pulled By: jbschlosser

fbshipit-source-id: a3fad774cee30478f7d6cdd49d2eec31be3fc518

2021-07-28 10:15:32 -07:00

Thomas J. Fan

1ec6205bd0

ENH Adds no_batch_dim support for maxpool and unpool for 2d and 3d ( #61984 )

...

Summary:

Towards https://github.com/pytorch/pytorch/issues/60585

(Interesting how the maxpool tests are currently in `test/test_nn.py`)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61984

Reviewed By: suo

Differential Revision: D29883846

Pulled By: jbschlosser

fbshipit-source-id: 1e0637c96f8fa442b4784a9865310c164cbf61c8

2021-07-23 16:14:10 -07:00

Joel Schlosser

f4ffaf0cde

Fix type promotion for cosine_similarity() ( #62054 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/61454

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62054

Reviewed By: suo

Differential Revision: D29881755

Pulled By: jbschlosser

fbshipit-source-id: 10499766ac07b0ae3c0d2f4c426ea818d1e77db6

2021-07-23 15:20:48 -07:00

Thomas J. Fan

48af9de92f

ENH Enables No-batch for *Pad1d Modules ( #61060 )

...

Summary:

Toward https://github.com/pytorch/pytorch/issues/60585

This PR adds a `single_batch_reference_fn` that uses the single batch implementation to check no-batch.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61060

Reviewed By: mrshenli

Differential Revision: D29739823

Pulled By: jbschlosser

fbshipit-source-id: d90d88a3671177a647171801cc6ec7aa3df35482

2021-07-21 07:12:41 -07:00

Joel Schlosser

4d842d909b

Revert FC workaround for ReflectionPad3d ( #61308 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/61248

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61308

Reviewed By: iramazanli

Differential Revision: D29566849

Pulled By: jbschlosser

fbshipit-source-id: 8ab443ffef7fd9840d64d71afc2f2d2b8a410ddb

2021-07-12 14:19:07 -07:00

vfdev

68f9819df4

Typo fix ( #41121 )

...

Summary:

Description:

- Typo fix in the docstring

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41121

Reviewed By: heitorschueroff

Differential Revision: D29660228

Pulled By: ezyang

fbshipit-source-id: fc2b55683ec5263ff55c3b6652df3e6313e02be2

2021-07-12 12:43:47 -07:00

kshitij12345

3faf6a715d

[special] migrate log_softmax ( #60512 )

...

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Rendered Docs: https://14335157-65600975-gh.circle-artifacts.com/0/docs/special.html#torch.special.log_softmax

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60512

Reviewed By: iramazanli

Differential Revision: D29626262

Pulled By: mruberry

fbshipit-source-id: c42d4105531ffb004f11f1ba6ae50be19bc02c91

2021-07-12 11:01:25 -07:00

Natalia Gimelshein

5b118a7f23

Don't reference reflection_pad3d in functional.py ( #60837 )

...

Summary:

To work around FC issue

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60837

Reviewed By: jbschlosser

Differential Revision: D29421142

Pulled By: ngimel

fbshipit-source-id: f5c1d9c324173b628e286f9005edf7109162066f

2021-06-27 20:54:32 -07:00

lezcano

4e347f1242

[docs] Fix backticks in docs ( #60474 )

...

Summary:

There is a very common error when writing docs: One forgets to write a matching `` ` ``, and something like ``:attr:`x`` is rendered in the docs. This PR fixes most (all?) of these errors (and a few others).

I found these running ``grep -r ">[^#<][^<]*\`"`` on the `docs/build/html/generated` folder. The regex finds an HTML tag that does not start with `#` (as python comments in example code may contain backticks) and that contains a backtick in the rendered HTML.

This regex has not given any false positive in the current codebase, so I am inclined to suggest that we should add this check to the CI. Would this be possible / reasonable / easy to do malfet ?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60474

Reviewed By: mrshenli

Differential Revision: D29309633

Pulled By: albanD

fbshipit-source-id: 9621e0e9f87590cea060dd084fa367442b6bd046

2021-06-24 06:27:41 -07:00

Thomas J. Fan

4e51503b1f

DOC Improves input and target docstring for loss functions ( #60553 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56581

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60553

Reviewed By: VitalyFedyunin

Differential Revision: D29343797

Pulled By: jbschlosser

fbshipit-source-id: cafc29d60a204a21deff56dd4900157d2adbd91e

2021-06-23 20:20:29 -07:00

Thomas J. Fan

c16f87949f

ENH Adds nn.ReflectionPad3d ( #59791 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/27655

This PR adds a C++ and Python version of ReflectionPad3d with structured kernels. The implementation uses lambdas extensively to better share code from the backward and forward pass.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59791

Reviewed By: gchanan

Differential Revision: D29242015

Pulled By: jbschlosser

fbshipit-source-id: 18e692d3b49b74082be09f373fc95fb7891e1b56

2021-06-21 10:53:14 -07:00

Saketh Are

bbd58d5c32

fix :attr: rendering in F.kl_div ( #59636 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59636

Fixes #57538

Test Plan:

Rebuilt docs to verify the fix:

{F623235643}

Reviewed By: zou3519

Differential Revision: D28964825

fbshipit-source-id: 275c7f70e69eda15a807e1774fd852d94bf02864

2021-06-09 12:20:14 -07:00

Thomas J. Fan

8693e288d7

DOC Small rewrite of interpolate recompute_scale_factor docstring ( #58989 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/55909

This PR looks to improve the documentation to describe the following behavior:

8130f2f67a/torch/nn/functional.py (L3673-L3685)https://github.com/pytorch/pytorch/pull/58989

Reviewed By: ejguan

Differential Revision: D28931879

Pulled By: jbschlosser

fbshipit-source-id: d1140ebe1631c5ec75f135c2907daea19499f21a

2021-06-07 12:40:05 -07:00

Joel Schlosser

ef32a29c97

Back out "[pytorch][PR] ENH Adds dtype to nn.functional.one_hot" ( #59080 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59080

Original commit changeset: 3686579517cc

Test Plan: None; reverting diff

Reviewed By: albanD

Differential Revision: D28746799

fbshipit-source-id: 75a7885ab0bf3abadde9a42b56d479f71f57c89c

2021-05-27 15:40:52 -07:00

Adnios

09a8f22bf9

Add mish activation function ( #58648 )

...

Summary:

See issus: https://github.com/pytorch/pytorch/issues/58375

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58648

Reviewed By: gchanan

Differential Revision: D28625390

Pulled By: jbschlosser

fbshipit-source-id: 23ea2eb7d5b3dc89c6809ff6581b90ee742149f4

2021-05-25 10:36:21 -07:00

Thomas J. Fan

a7f4f80903

ENH Adds dtype to nn.functional.one_hot ( #58090 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/33046

Related to https://github.com/pytorch/pytorch/issues/53785

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58090

Reviewed By: zou3519

Differential Revision: D28640893

Pulled By: jbschlosser

fbshipit-source-id: 3686579517ccc75beaa74f0f6d167f5e40a83fd2

2021-05-24 13:48:25 -07:00

Basil Hosmer

90f05c005d

refactor multi_head_attention_forward ( #56674 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56674

`torch.nn.functional.multi_head_attention_forward` supports a long tail of options and variations of the multihead attention computation. Its complexity is mostly due to arbitrating among options, preparing values in multiple ways, and so on - the attention computation itself is a small fraction of the implementation logic, which is relatively simple but can be hard to pick out.

The goal of this PR is to

- make the internal logic of `multi_head_attention_forward` less entangled and more readable, with the attention computation steps easily discernible from their surroundings.

- factor out simple helpers to perform the actual attention steps, with the aim of making them available to other attention-computing contexts.

Note that these changes should leave the signature and output of `multi_head_attention_forward` completely unchanged, so not BC-breaking. Later PRs should present new multihead attention entry points, but deprecating this one is out of scope for now.

Changes are in two parts:

- the implementation of `multi_head_attention_forward` has been extensively resequenced, which makes the rewrite look more total than it actually is. Changes to argument-processing logic are largely confined to a) minor perf tweaks/control flow tightening, b) error message improvements, and c) argument prep changes due to helper function factoring (e.g. merging `key_padding_mask` with `attn_mask` rather than applying them separately)

- factored helper functions are defined just above `multi_head_attention_forward`, with names prefixed with `_`. (A future PR may pair them with corresponding modules, but for now they're private.)

Test Plan: Imported from OSS

Reviewed By: gmagogsfm

Differential Revision: D28344707

Pulled By: bhosmer

fbshipit-source-id: 3bd8beec515182c3c4c339efc3bec79c0865cb9a

2021-05-11 10:09:56 -07:00

Harish Shankam

ad31aa652c

Fixed the error in conv1d example ( #57356 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/51225

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57356

Reviewed By: albanD

Differential Revision: D28173174

Pulled By: malfet

fbshipit-source-id: 5e813306f2e2f7e0412ffaa5d147441134739e00

2021-05-06 07:02:37 -07:00

Joel Schlosser

7d2a9f2dc9

Fix instance norm input size validation + test ( #56659 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/45687

Fix changes the input size check for `InstanceNorm*d` to be more restrictive and correctly reject sizes with only a single spatial element, regardless of batch size, to avoid infinite variance.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56659

Reviewed By: pbelevich

Differential Revision: D27948060

Pulled By: jbschlosser

fbshipit-source-id: 21cfea391a609c0774568b89fd241efea72516bb

2021-04-23 10:53:39 -07:00

M.L. Croci

1f0223d6bb

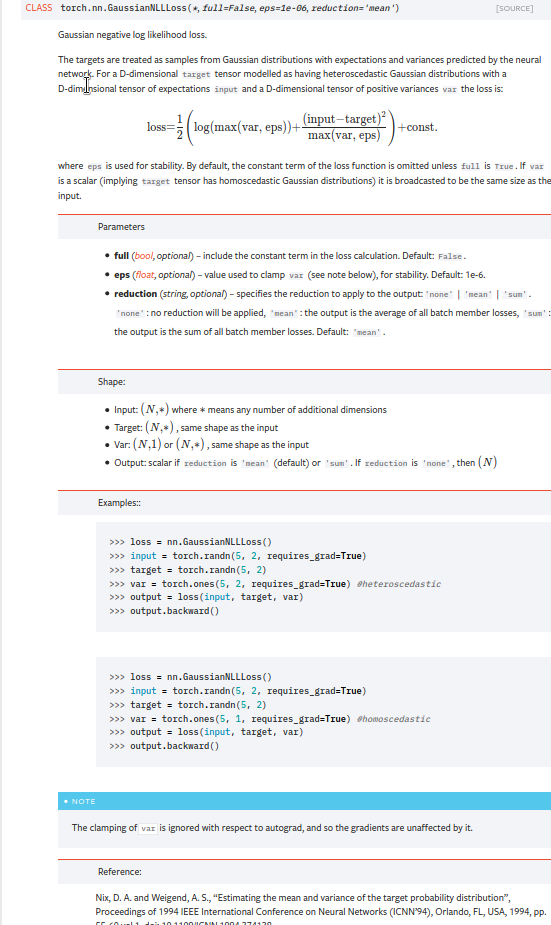

Fix bug in gaussian_nll_loss ( #56469 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964 . cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>. This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>.`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

2021-04-22 07:43:48 -07:00

Nikita Shulga

6d7d36d255

s/“pad”/"pad"/ in files introduced by #56065 ( #56618 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/56618

Reviewed By: albanD

Differential Revision: D27919343

Pulled By: malfet

fbshipit-source-id: 2fac8ba5f399e050463141eba225da935c97a5ce

2021-04-21 17:40:29 -07:00

Joel Schlosser

8a81c4dc27

Update padding_idx docs for EmbeddingBag to better match Embedding's ( #56065 )

...

Summary:

Match updated `Embedding` docs from https://github.com/pytorch/pytorch/pull/54026 as closely as possible. Additionally, update the C++ side `Embedding` docs, since those were missed in the previous PR.

There are 6 (!) places for docs:

1. Python module form in `sparse.py` - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

2. Python `from_pretrained()` in `sparse.py` (refers back to module docs)

3. Python functional form in `functional.py`

4. C++ module options - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

5. C++ `from_pretrained()` options

6. C++ functional options

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56065

Reviewed By: malfet

Differential Revision: D27908383

Pulled By: jbschlosser

fbshipit-source-id: c5891fed1c9d33b4b8cd63500a14c1a77d92cc78

2021-04-21 12:10:37 -07:00

Sam Estep

e3900d2ba5

Add lint for unqualified noqa ( #56272 )

...

Summary:

As this diff shows, currently there are a couple hundred instances of raw `noqa` in the codebase, which just ignore all errors on a given line. That isn't great, so this PR changes all existing instances of that antipattern to qualify the `noqa` with respect to a specific error code, and adds a lint to prevent more of this from happening in the future.

Interestingly, some of the examples the `noqa` lint catches are genuine attempts to qualify the `noqa` with a specific error code, such as these two:

```

test/jit/test_misc.py:27: print(f"{hello + ' ' + test}, I'm a {test}") # noqa E999

test/jit/test_misc.py:28: print(f"format blank") # noqa F541

```

However, those are still wrong because they are [missing a colon](https://flake8.pycqa.org/en/3.9.1/user/violations.html#in-line-ignoring-errors ), which actually causes the error code to be completely ignored:

- If you change them to anything else, the warnings will still be suppressed.

- If you add the necessary colons then it is revealed that `E261` was also being suppressed, unintentionally:

```

test/jit/test_misc.py:27:57: E261 at least two spaces before inline comment

test/jit/test_misc.py:28:35: E261 at least two spaces before inline comment

```

I did try using [flake8-noqa](https://pypi.org/project/flake8-noqa/ ) instead of a custom `git grep` lint, but it didn't seem to work. This PR is definitely missing some of the functionality that flake8-noqa is supposed to provide, though, so if someone can figure out how to use it, we should do that instead.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56272

Test Plan:

CI should pass on the tip of this PR, and we know that the lint works because the following CI run (before this PR was finished) failed:

- https://github.com/pytorch/pytorch/runs/2365189927

Reviewed By: janeyx99

Differential Revision: D27830127

Pulled By: samestep

fbshipit-source-id: d6dcf4f945ebd18cd76c46a07f3b408296864fcb

2021-04-19 13:16:18 -07:00

Kurt Mohler

3fe4718d16

Add padding_idx argument to EmbeddingBag ( #49237 )

...

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

2021-04-14 09:38:01 -07:00

Jeff Yang

263d8ef4ef

docs: fix formatting for embedding_bag ( #54666 )

...

Summary:

fixes https://github.com/pytorch/pytorch/issues/43499

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54666

Reviewed By: H-Huang

Differential Revision: D27411027

Pulled By: jbschlosser

fbshipit-source-id: a84cc174155bd725e108d8f953a21bb8de8d9d23

2021-04-07 06:32:16 -07:00

Hameer Abbasi

db3a9d7f8a

Fix __torch_function__ tests. ( #54492 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/54492

Test Plan: Imported from OSS

Reviewed By: ailzhang

Differential Revision: D27292567

Pulled By: ezyang

fbshipit-source-id: dc29daea967c6d8aaf63bdbcb4aff0bb13d7a5f7

2021-03-26 10:59:15 -07:00

Bel H

645119eaef

Lowering NLLLoss/CrossEntropyLoss to ATen code ( #53789 )

...

Summary:

* Lowering NLLLoss/CrossEntropyLoss to ATen dispatch

* This allows the MLC device to override these ops

* Reduce code duplication between the Python and C++ APIs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53789

Reviewed By: ailzhang

Differential Revision: D27345793

Pulled By: albanD

fbshipit-source-id: 99c0d617ed5e7ee8f27f7a495a25ab4158d9aad6

2021-03-26 07:31:08 -07:00

Edward Yang

33b95c6bac

Add __torch_function__ support for torch.nn.functional.embedding ( #54478 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54478

Fixes #54292

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D27264179

Pulled By: ezyang

fbshipit-source-id: cd267e2e668fdd8d7f958bf70a0b93e058ec7c23

2021-03-23 17:22:39 -07:00

Peter Bell

04e0cbf5a9

Add padding='same' mode to conv{1,2,3}d ( #45667 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45667

First part of #3867 (Pooling operators still to do)

This adds a `padding='same'` mode to the interface of `conv{n}d`and `nn.Conv{n}d`. This should match the behaviour of `tensorflow`. I couldn't find it explicitly documented but through experimentation I found `tensorflow` returns the shape `ceil(len/stride)` and always adds any extra asymmetric padding onto the right side of the input.

Since the `native_functions.yaml` schema doesn't seem to support strings or enums, I've moved the function interface into python and it now dispatches between the numerically padded `conv{n}d` and the `_conv{n}d_same` variant. Underscores because I couldn't see any way to avoid exporting a function into the `torch` namespace.

A note on asymmetric padding. The total padding required can be odd if both the kernel-length is even and the dilation is odd. mkldnn has native support for asymmetric padding, so there is no overhead there, but for other backends I resort to padding the input tensor by 1 on the right hand side to make the remaining padding symmetrical. In these cases, I use `TORCH_WARN_ONCE` to notify the user of the performance implications.

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision: D27170744

Pulled By: jbschlosser

fbshipit-source-id: b3d8a0380e0787ae781f2e5d8ee365a7bfd49f22

2021-03-18 16:22:03 -07:00

kshitij12345

c1a39620b8

[nn] nn.Embedding : padding_idx doc update ( #53809 )

...

Summary:

Follow-up of https://github.com/pytorch/pytorch/pull/53447

Reference: https://github.com/pytorch/pytorch/pull/53447#discussion_r590521051

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53809

Reviewed By: bdhirsh

Differential Revision: D27049643

Pulled By: jbschlosser

fbshipit-source-id: 623a2a254783b86391dc2b0777b688506adb4c0e

2021-03-15 11:54:51 -07:00

kshitij12345

45ddf113c9

[fix] nn.Embedding: allow changing the padding vector ( #53447 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53368

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53447

Reviewed By: albanD

Differential Revision: D26946284

Pulled By: jbschlosser

fbshipit-source-id: 54e5eec7da86fa02b1b6e4a235d66976a80764fc

2021-03-10 09:53:27 -08:00

James Reed

215950e2be

Convert type annotations in nn/functional.py to py3 syntax ( #53656 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/53656

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision: D26926018

Pulled By: jamesr66a

fbshipit-source-id: 2381583cf93c9c9d0c9eeaa6e41eddce3729942d

2021-03-09 22:26:22 -08:00

Evelyn Fitzgerald

b4395b046a

Edit SiLU documentation ( #53239 )

...

Summary:

I edited the documentation for `nn.SiLU` and `F.silu` to:

- Explain that SiLU is also known as swish and that it stands for "Sigmoid Linear Unit."

- Ensure that "SiLU" is correctly capitalized.

I believe these changes will help users find the function they're looking for by adding relevant keywords to the docs.

Fixes: N/A

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53239

Reviewed By: jbschlosser

Differential Revision: D26816998

Pulled By: albanD

fbshipit-source-id: b4e9976e6b7e88686e3fa7061c0e9b693bd6d198

2021-03-04 12:51:25 -08:00

Joel Schlosser

e86476f736

Huber loss ( #50553 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/48595 .

## Background

This PR implements HuberLoss, which differs from SmoothL1Loss by a factor of beta. The current implementation does not share logic between the two. Feedback is welcome for the optimal way to minimize code duplication while remaining performant.

I've done some early [benchmarking](https://pytorch.org/tutorials/recipes/recipes/benchmark.html#collecting-instruction-counts-with-callgrind ) with Huber calling in to the Smooth L1 kernel and scaling afterwards; for the simple test case I used, instruction counts are as follows:

```

Huber loss calls dedicated Huber kernel: 2,795,300

Huber loss calls Smooth L1 kernel and scales afterwards: 4,523,612

```

With these numbers, instruction counts are ~62% higher when using the pre-existing Smooth L1 kernel.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50553

Test Plan:

```

python test/test_nn.py TestNN.test_HuberLoss

python test/test_nn.py TestNN.test_HuberLoss_delta

python test/test_nn.py TestNN.test_huber_loss_invalid_delta

python test/test_nn.py TestNNDeviceTypeCPU.test_smooth_l1_loss_vs_huber_loss_cpu

python test/test_nn.py TestNNDeviceTypeCUDA.test_smooth_l1_loss_vs_huber_loss_cuda

python test/test_nn.py TestNNDeviceTypeCPU.test_invalid_reduction_strings_cpu

python test/test_nn.py TestNNDeviceTypeCUDA.test_invalid_reduction_strings_cuda

python test/test_nn.py TestNN.test_loss_equal_input_target_shape

python test/test_nn.py TestNN.test_pointwise_loss_broadcast

python test/test_overrides.py

python test/test_jit.py TestJitGeneratedFunctional.test_nn_huber_loss

python test/test_type_hints.py

python test/test_cpp_api_parity.py

build/bin/test_api

```

## Documentation

<img width="677" alt="Screen Shot 2021-01-14 at 4 25 08 PM" src="https://user-images.githubusercontent.com/75754324/104651224-5a445980-5685-11eb-884b-14ea517958c2.png ">

<img width="677" alt="Screen Shot 2021-01-14 at 4 24 35 PM" src="https://user-images.githubusercontent.com/75754324/104651190-4e589780-5685-11eb-974d-8c63a89c050e.png ">

<img width="661" alt="Screen Shot 2021-01-14 at 4 24 45 PM" src="https://user-images.githubusercontent.com/75754324/104651198-50225b00-5685-11eb-958e-136b36f6f8a8.png ">

<img width="869" alt="Screen Shot 2021-01-14 at 4 25 27 PM" src="https://user-images.githubusercontent.com/75754324/104651208-53b5e200-5685-11eb-9fe4-5ff433aa13c5.png ">

<img width="862" alt="Screen Shot 2021-01-14 at 4 25 48 PM" src="https://user-images.githubusercontent.com/75754324/104651209-53b5e200-5685-11eb-8051-b0cfddcb07d3.png ">

Reviewed By: H-Huang

Differential Revision: D26734071

Pulled By: jbschlosser

fbshipit-source-id: c98c1b5f32a16f7a2a4e04bdce678080eceed5d5

2021-03-02 17:30:45 -08:00

Jeff Yang

316eabe9ba

fix(docs): remove redundant hardsigmoid() in docstring to show up inplace parameter ( #52559 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/50016

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52559

Reviewed By: ailzhang

Differential Revision: D26636347

Pulled By: vkuzo

fbshipit-source-id: da615d0eb6372637a6441e53698e86252591f6d8

2021-02-25 09:09:32 -08:00

Bel H

99a428ab22

Lower ReLu6 to aten ( #52723 )

...

Summary:

-Lower Relu6 to ATen

-Change Python and C++ to reflect change

-adds an entry in native_functions.yaml for that new function

-this is needed as we would like to intercept ReLU6 at a higher level with an XLA-approach codegen.

-Should pass functional C++ tests pass. But please let me know if more tests are required.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52723

Reviewed By: ailzhang

Differential Revision: D26641414

Pulled By: albanD

fbshipit-source-id: dacfc70a236c4313f95901524f5f021503f6a60f

2021-02-25 08:38:11 -08:00

Jeff Yang

f111ec48c1

docs: add fractional_max_pool in nn.functional ( #52557 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/51708

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52557

Reviewed By: bdhirsh

Differential Revision: D26591388

Pulled By: jbschlosser

fbshipit-source-id: 42643864df92ea014e69a8ec5c29333735e98898

2021-02-22 20:45:07 -08:00

Joel Schlosser

a39b1c42c1

MHA: Fix regression and apply bias flag to both in/out proj ( #52537 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/52257

## Background

Reverts MHA behavior for `bias` flag to that of v1.5: flag enables or disables both in and out projection biases.

Updates type annotations for both in and out projections biases from `Tensor` to `Optional[Tensor]` for `torch.jit.script` usage.

Note: With this change, `_LinearWithBias` defined in `torch/nn/modules/linear.py` is no longer utilized. Completely removing it would require updates to quantization logic in the following files:

```

test/quantization/test_quantized_module.py

torch/nn/quantizable/modules/activation.py

torch/nn/quantized/dynamic/modules/linear.py

torch/nn/quantized/modules/linear.py

torch/quantization/quantization_mappings.py

```

This PR takes a conservative initial approach and leaves these files unchanged.

**Is it safe to fully remove `_LinearWithBias`?**

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52537

Test Plan:

```

python test/test_nn.py TestNN.test_multihead_attn_no_bias

```

## BC-Breaking Note

In v1.6, the behavior of `MultiheadAttention`'s `bias` flag was incorrectly changed to affect only the in projection layer. That is, setting `bias=False` would fail to disable the bias for the out projection layer. This regression has been fixed, and the `bias` flag now correctly applies to both the in and out projection layers.

Reviewed By: bdhirsh

Differential Revision: D26583639

Pulled By: jbschlosser

fbshipit-source-id: b805f3a052628efb28b89377a41e06f71747ac5b

2021-02-22 14:47:12 -08:00

Mike Ruberry

594a66d778

Warn about floor_divide performing incorrect rounding ( #50281 ) ( #50281 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50281

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51745

Test Plan: Imported from OSS

Reviewed By: ngimel

Pulled By: mruberry

Differential Revision: D26257855

fbshipit-source-id: e5d497cf07b0c746838ed081c5d0e82fb4cb701b

2021-02-10 03:13:34 -08:00

Brandon Lin

35b3e16091

[pytorch] Fix torch.nn.functional.normalize to be properly scriptable ( #51909 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51909

Several scenarios don't work when trying to script `F.normalize`, notably when you try to symbolically trace through it with using the default argument:

```

import torch.nn.functional as F

import torch

from torch.fx import symbolic_trace

def f(x):

return F.normalize(x)

gm = symbolic_trace(f)

torch.jit.script(gm)

```

which leads to the error

```

RuntimeError:

normalize(Tensor input, float p=2., int dim=1, float eps=9.9999999999999998e-13, Tensor? out=None) -> (Tensor):

Expected a value of type 'float' for argument 'p' but instead found type 'int'.

:

def forward(self, x):

normalize_1 = torch.nn.functional.normalize(x, p = 2, dim = 1, eps = 1e-12, out = None); x = None

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

return normalize_1

Reviewed By: jamesr66a

Differential Revision: D26324308

fbshipit-source-id: 30dd944a6011795d17164f2c746068daac570cea

2021-02-09 07:26:57 -08:00

jiej

4d703d040b

Linear autodiff revert revert ( #51613 )

...

Summary:

patch PR https://github.com/pytorch/pytorch/issues/50856 and rollbak the revert D26105797 (e488e3c443https://github.com/pytorch/pytorch/pull/51613

Reviewed By: mruberry

Differential Revision: D26253999

Pulled By: ngimel

fbshipit-source-id: a20b1591de06dd277e4cd95542e3291a2f5a252c

2021-02-04 16:32:05 -08:00

Natalia Gimelshein

26f9ac98e5

Revert D26105797: [pytorch][PR] Exposing linear layer to fuser

...

Test Plan: revert-hammer

Differential Revision:

D26105797 (e488e3c443

2021-02-02 17:39:17 -08:00

jiej

e488e3c443

Exposing linear layer to fuser ( #50856 )

...

Summary:

1. enabling linear in autodiff;

2. remove control flow in python for linear;

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50856

Reviewed By: pbelevich

Differential Revision: D26105797

Pulled By: eellison

fbshipit-source-id: 6f7cedb9f6e3e46daa24223d2a6080880498deb4

2021-02-02 15:39:01 -08:00

M.L. Croci

8eb90d4865

Add Gaussian NLL Loss ( #50886 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/48520 .

cc albanD (This is a clean retry PR https://github.com/pytorch/pytorch/issues/49807 )

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50886

Reviewed By: ejguan

Differential Revision: D26007435

Pulled By: albanD

fbshipit-source-id: 88fe91b40dea6f72e093e6301f0f04fcc842d2f0

2021-01-22 06:56:49 -08:00

Taylor Robie

6a3fc0c21c

Treat has_torch_function and object_has_torch_function as static False when scripting ( #48966 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48966

This PR lets us skip the `if not torch.jit.is_scripting():` guards on `functional` and `nn.functional` by directly registering `has_torch_function` and `object_has_torch_function` to the JIT as statically False.

**Benchmarks**

The benchmark script is kind of long. The reason is that it's testing all four PRs in the stack, plus threading and subprocessing so that the benchmark can utilize multiple cores while still collecting good numbers. Both wall times and instruction counts were collected. This stack changes dozens of operators / functions, but very mechanically such that there are only a handful of codepath changes. Each row is a slightly different code path (e.g. testing in Python, testing in the arg parser, different input types, etc.)

<details>

<summary> Test script </summary>

```

import argparse

import multiprocessing

import multiprocessing.dummy

import os

import pickle

import queue

import random

import sys

import subprocess

import tempfile

import time

import torch

from torch.utils.benchmark import Timer, Compare, Measurement

NUM_CORES = multiprocessing.cpu_count()

ENVS = {

"ref": "HEAD (current)",

"torch_fn_overhead_stack_0": "#48963 ",

"torch_fn_overhead_stack_1": "#48964 ",

"torch_fn_overhead_stack_2": "#48965 ",

"torch_fn_overhead_stack_3": "#48966 ",

}

CALLGRIND_ENVS = tuple(ENVS.keys())

MIN_RUN_TIME = 3

REPLICATES = {

"longer": 1_000,

"long": 300,

"short": 50,

}

CALLGRIND_NUMBER = {

"overnight": 500_000,

"long": 250_000,

"short": 10_000,

}

CALLGRIND_TIMEOUT = {

"overnight": 800,

"long": 400,

"short": 100,

}

SETUP = """

x = torch.ones((1, 1))

y = torch.ones((1, 1))

w_tensor = torch.ones((1, 1), requires_grad=True)

linear = torch.nn.Linear(1, 1, bias=False)

linear_w = linear.weight

"""

TASKS = {

"C++: unary `.t()`": "w_tensor.t()",

"C++: unary (Parameter) `.t()`": "linear_w.t()",

"C++: binary (Parameter) `mul` ": "x + linear_w",

"tensor.py: _wrap_type_error_to_not_implemented `__floordiv__`": "x // y",

"tensor.py: method `__hash__`": "hash(x)",

"Python scalar `__rsub__`": "1 - x",

"functional.py: (unary) `unique`": "torch.functional.unique(x)",

"functional.py: (args) `atleast_1d`": "torch.functional.atleast_1d((x, y))",

"nn/functional.py: (unary) `relu`": "torch.nn.functional.relu(x)",

"nn/functional.py: (args) `linear`": "torch.nn.functional.linear(x, w_tensor)",

"nn/functional.py: (args) `linear (Parameter)`": "torch.nn.functional.linear(x, linear_w)",

"Linear(..., bias=False)": "linear(x)",

}

def _worker_main(argv, fn):

parser = argparse.ArgumentParser()

parser.add_argument("--output_file", type=str)

parser.add_argument("--single_task", type=int, default=None)

parser.add_argument("--length", type=str)

args = parser.parse_args(argv)

single_task = args.single_task

conda_prefix = os.getenv("CONDA_PREFIX")

assert torch.__file__.startswith(conda_prefix)

env = os.path.split(conda_prefix)[1]

assert env in ENVS

results = []

for i, (k, stmt) in enumerate(TASKS.items()):

if single_task is not None and single_task != i:

continue

timer = Timer(

stmt=stmt,

setup=SETUP,

sub_label=k,

description=ENVS[env],

)

results.append(fn(timer, args.length))

with open(args.output_file, "wb") as f: