Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

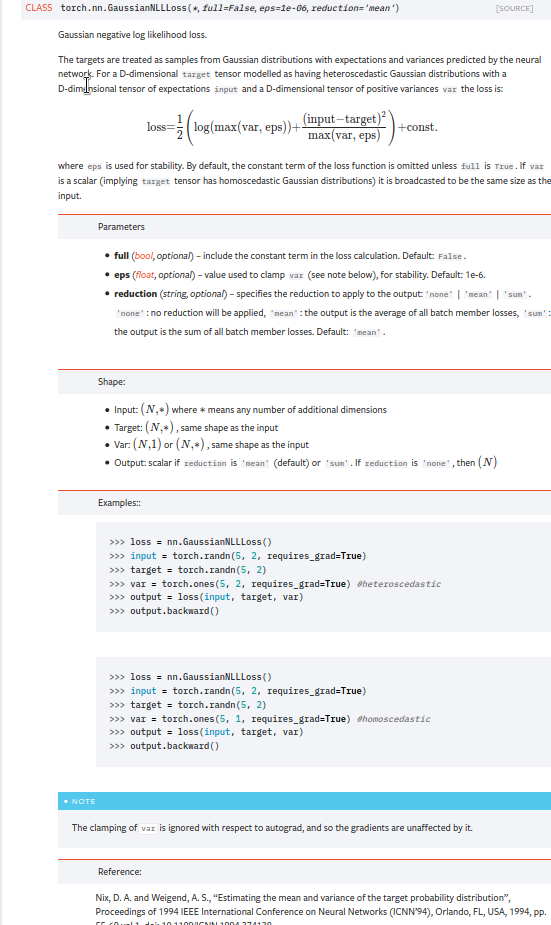

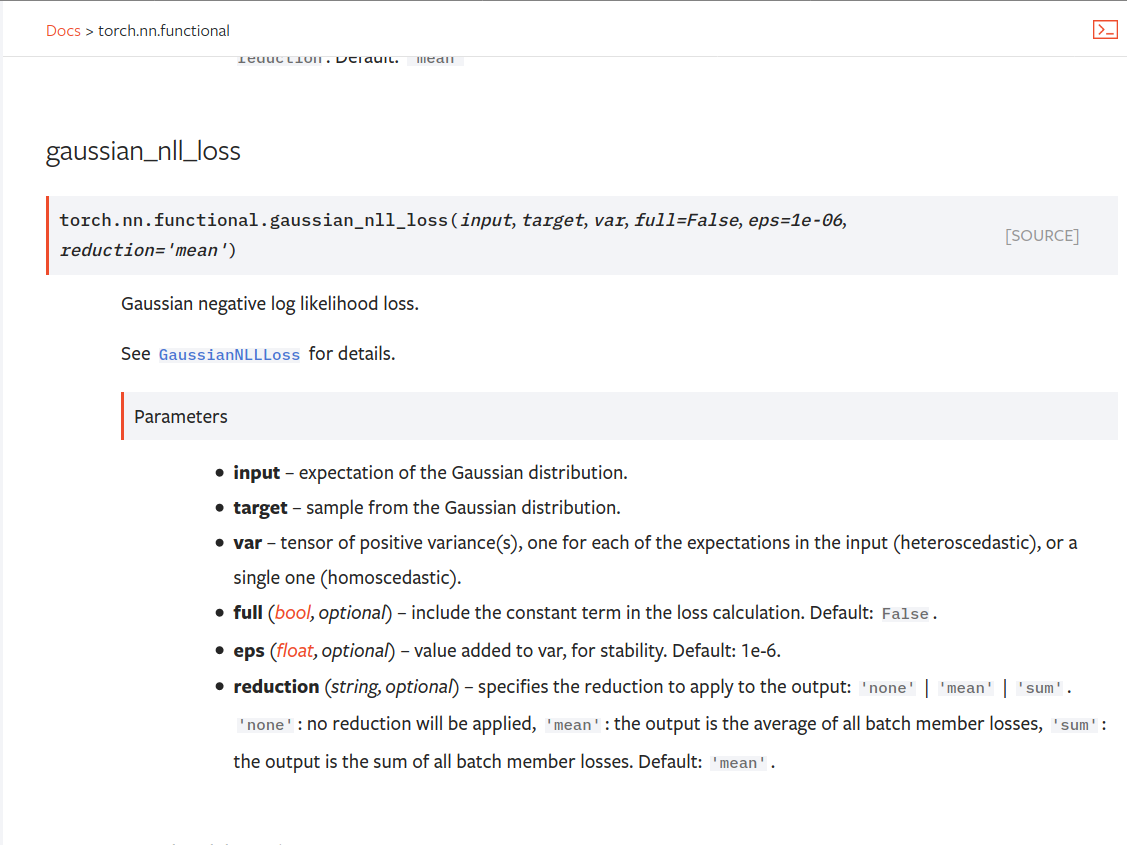

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>). This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>).`
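The numbers in this example can be checked by hand. The sketch below assumes the per-element loss is `0.5 * (log(var) + (input - target)**2 / var)` with the constant term omitted, which reproduces the values above. `gaussian_nll` is a hypothetical plain-Python helper written for illustration, not the code in `functional.py`.

```python
import math

def gaussian_nll(x, t, var):
    """Per-element Gaussian NLL, constant term omitted (assumed form)."""
    return 0.5 * (math.log(var) + (x - t) ** 2 / var)

inputs = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
targets = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
var = 2.0

# New behaviour: one loss value per element, shape (2, 3).
per_element = [[gaussian_nll(x, t, var) for x, t in zip(xs, ts)]
               for xs, ts in zip(inputs, targets)]

# Old behaviour: each row summed, shape (2,).
per_row = [sum(row) for row in per_element]

print([[round(v, 4) for v in row] for row in per_element])
print([round(v, 4) for v in per_row])
```

Summing each row of the per-element result gives the old size-(2) output, which is why `.sum(dim=1)` recovers the previous behaviour.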

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Match updated `Embedding` docs from https://github.com/pytorch/pytorch/pull/54026 as closely as possible. Additionally, update the C++ side `Embedding` docs, since those were missed in the previous PR.

There are 6 (!) places for docs:

1. Python module form in `sparse.py` - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

2. Python `from_pretrained()` in `sparse.py` (refers back to module docs)

3. Python functional form in `functional.py`

4. C++ module options - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

5. C++ `from_pretrained()` options

6. C++ functional options

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56065

Reviewed By: malfet

Differential Revision: D27908383

Pulled By: jbschlosser

fbshipit-source-id: c5891fed1c9d33b4b8cd63500a14c1a77d92cc78

Summary:

As this diff shows, currently there are a couple hundred instances of raw `noqa` in the codebase, which just ignore all errors on a given line. That isn't great, so this PR changes all existing instances of that antipattern to qualify the `noqa` with respect to a specific error code, and adds a lint to prevent more of this from happening in the future.

Interestingly, some of the examples the `noqa` lint catches are genuine attempts to qualify the `noqa` with a specific error code, such as these two:

```

test/jit/test_misc.py:27: print(f"{hello + ' ' + test}, I'm a {test}") # noqa E999

test/jit/test_misc.py:28: print(f"format blank") # noqa F541

```

However, those are still wrong because they are [missing a colon](https://flake8.pycqa.org/en/3.9.1/user/violations.html#in-line-ignoring-errors), which actually causes the error code to be completely ignored:

- If you change them to anything else, the warnings will still be suppressed.

- If you add the necessary colons then it is revealed that `E261` was also being suppressed, unintentionally:

```

test/jit/test_misc.py:27:57: E261 at least two spaces before inline comment

test/jit/test_misc.py:28:35: E261 at least two spaces before inline comment

```
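To illustrate the kind of check involved, here is a minimal sketch of a detector for unqualified `noqa` comments. The regular expression and the helper name `has_unqualified_noqa` are assumptions made for this example; the actual lint in this PR is a `git grep` invocation and may use a different pattern.

```python
import re

# Matches "# noqa" unless it is immediately followed by ": <CODE>".
# Assumed pattern for illustration only; not the PR's real lint.
UNQUALIFIED_NOQA = re.compile(r"#\s*noqa(?!: [A-Z]+\d+)")

def has_unqualified_noqa(line: str) -> bool:
    """Return True if the line has a noqa comment without ': CODE'."""
    return UNQUALIFIED_NOQA.search(line) is not None

lines = [
    'print(f"format blank") # noqa F541',    # colon missing
    'print(f"format blank")  # noqa',        # bare noqa
    'print(f"format blank")  # noqa: F541',  # correctly qualified
]
for line in lines:
    print(has_unqualified_noqa(line), line)
```

Only the last line passes; the first is flagged even though it names an error code, because the colon is missing.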

I did try using [flake8-noqa](https://pypi.org/project/flake8-noqa/) instead of a custom `git grep` lint, but it didn't seem to work. This PR is definitely missing some of the functionality that flake8-noqa is supposed to provide, though, so if someone can figure out how to use it, we should do that instead.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56272

Test Plan:

CI should pass on the tip of this PR, and we know that the lint works because the following CI run (before this PR was finished) failed:

- https://github.com/pytorch/pytorch/runs/2365189927

Reviewed By: janeyx99

Differential Revision: D27830127

Pulled By: samestep

fbshipit-source-id: d6dcf4f945ebd18cd76c46a07f3b408296864fcb

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.
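The effect on the reduction can be sketched in plain Python. This is only an illustration of the `sum` mode on nested lists; `embedding_bag_sum` is a hypothetical helper, not the ATen implementation.

```python
def embedding_bag_sum(weight, indices, offsets, padding_idx=None):
    """Sum-reduce rows of `weight` per bag, skipping `padding_idx`."""
    bags = []
    for b, start in enumerate(offsets):
        # A bag runs from its offset to the next offset (or the end).
        end = offsets[b + 1] if b + 1 < len(offsets) else len(indices)
        total = [0.0] * len(weight[0])
        for idx in indices[start:end]:
            if idx == padding_idx:
                continue  # padding entries do not contribute
            total = [acc + w for acc, w in zip(total, weight[idx])]
        bags.append(total)
    return bags

weight = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
indices = [0, 2, 1, 2]
offsets = [0, 2]  # bag 0 = indices[0:2], bag 1 = indices[2:4]

print(embedding_bag_sum(weight, indices, offsets))
print(embedding_bag_sum(weight, indices, offsets, padding_idx=2))
```

With `padding_idx=2`, row 2 is dropped from both bags, so each bag reduces to the single remaining row.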

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

Summary:

* Lowering NLLLoss/CrossEntropyLoss to ATen dispatch

* This allows the MLC device to override these ops

* Reduce code duplication between the Python and C++ APIs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53789

Reviewed By: ailzhang

Differential Revision: D27345793

Pulled By: albanD

fbshipit-source-id: 99c0d617ed5e7ee8f27f7a495a25ab4158d9aad6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45667

First part of #3867 (Pooling operators still to do)

This adds a `padding='same'` mode to the interface of `conv{n}d` and `nn.Conv{n}d`. This should match the behaviour of `tensorflow`. I couldn't find it explicitly documented, but through experimentation I found that `tensorflow` returns the shape `ceil(len/stride)` and always adds any extra asymmetric padding onto the right side of the input.

Since the `native_functions.yaml` schema doesn't seem to support strings or enums, I've moved the function interface into python and it now dispatches between the numerically padded `conv{n}d` and the `_conv{n}d_same` variant. Underscores because I couldn't see any way to avoid exporting a function into the `torch` namespace.

A note on asymmetric padding. The total padding required can be odd if the kernel length is even and the dilation is odd. mkldnn has native support for asymmetric padding, so there is no overhead there, but for other backends I resort to padding the input tensor by 1 on the right-hand side to make the remaining padding symmetrical. In these cases, I use `TORCH_WARN_ONCE` to notify the user of the performance implications.
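The padding split can be sketched as follows, assuming stride 1, where the total padding needed to keep the length unchanged is `dilation * (kernel_size - 1)`. `same_padding` is a hypothetical helper for illustration, not a function added by this PR.

```python
def same_padding(kernel_size: int, dilation: int = 1):
    """Return (left, right) padding for a stride-1 'same' convolution."""
    total = dilation * (kernel_size - 1)
    left = total // 2
    right = total - left  # any extra element goes on the right
    return left, right

print(same_padding(3))              # odd kernel: symmetric
print(same_padding(4))              # even kernel, odd dilation: asymmetric
print(same_padding(4, dilation=2))  # even kernel, even dilation: symmetric
```

Only the even-kernel, odd-dilation case yields an odd total, which is the case that needs the extra right-hand padding.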

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision: D27170744

Pulled By: jbschlosser

fbshipit-source-id: b3d8a0380e0787ae781f2e5d8ee365a7bfd49f22

Summary:

I edited the documentation for `nn.SiLU` and `F.silu` to:

- Explain that SiLU is also known as swish and that it stands for "Sigmoid Linear Unit."

- Ensure that "SiLU" is correctly capitalized.

I believe these changes will help users find the function they're looking for by adding relevant keywords to the docs.

Fixes: N/A

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53239

Reviewed By: jbschlosser

Differential Revision: D26816998

Pulled By: albanD

fbshipit-source-id: b4e9976e6b7e88686e3fa7061c0e9b693bd6d198

Summary:

- Lower ReLU6 to ATen

- Change the Python and C++ APIs to reflect the change

- Add an entry in native_functions.yaml for the new function

- This is needed because we would like to intercept ReLU6 at a higher level with an XLA-approach codegen.

- The functional C++ tests should pass. Please let me know if more tests are required.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52723

Reviewed By: ailzhang

Differential Revision: D26641414

Pulled By: albanD

fbshipit-source-id: dacfc70a236c4313f95901524f5f021503f6a60f

Summary:

Fixes https://github.com/pytorch/pytorch/issues/52257

## Background

Reverts MHA behavior for `bias` flag to that of v1.5: flag enables or disables both in and out projection biases.

Updates type annotations for both in and out projections biases from `Tensor` to `Optional[Tensor]` for `torch.jit.script` usage.

Note: With this change, `_LinearWithBias` defined in `torch/nn/modules/linear.py` is no longer utilized. Completely removing it would require updates to quantization logic in the following files:

```

test/quantization/test_quantized_module.py

torch/nn/quantizable/modules/activation.py

torch/nn/quantized/dynamic/modules/linear.py

torch/nn/quantized/modules/linear.py

torch/quantization/quantization_mappings.py

```

This PR takes a conservative initial approach and leaves these files unchanged.

**Is it safe to fully remove `_LinearWithBias`?**

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52537

Test Plan:

```

python test/test_nn.py TestNN.test_multihead_attn_no_bias

```

## BC-Breaking Note

In v1.6, the behavior of `MultiheadAttention`'s `bias` flag was incorrectly changed to affect only the in projection layer. That is, setting `bias=False` would fail to disable the bias for the out projection layer. This regression has been fixed, and the `bias` flag now correctly applies to both the in and out projection layers.

Reviewed By: bdhirsh

Differential Revision: D26583639

Pulled By: jbschlosser

fbshipit-source-id: b805f3a052628efb28b89377a41e06f71747ac5b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51909

Several scenarios don't work when trying to script `F.normalize`, notably when you try to symbolically trace through it using the default argument:

```

import torch.nn.functional as F

import torch

from torch.fx import symbolic_trace

def f(x):
    return F.normalize(x)

gm = symbolic_trace(f)

torch.jit.script(gm)

```

which leads to the error

```
RuntimeError:
normalize(Tensor input, float p=2., int dim=1, float eps=9.9999999999999998e-13, Tensor? out=None) -> (Tensor):
Expected a value of type 'float' for argument 'p' but instead found type 'int'.
:
def forward(self, x):
    normalize_1 = torch.nn.functional.normalize(x, p = 2, dim = 1, eps = 1e-12, out = None); x = None
                  ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE
    return normalize_1
```

Reviewed By: jamesr66a

Differential Revision: D26324308

fbshipit-source-id: 30dd944a6011795d17164f2c746068daac570cea

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48965

This PR pulls `__torch_function__` checking entirely into C++, and adds a special `object_has_torch_function` method for ops which only have one arg as this lets us skip tuple construction and unpacking. We can now also do away with the Python side fast bailout for `Tensor` (e.g. `if any(type(t) is not Tensor for t in tensors) and has_torch_function(tensors)`) because they're actually slower than checking with the Python C API.

Test Plan: Existing unit tests. Benchmarks are in #48966

Reviewed By: ezyang

Differential Revision: D25590732

Pulled By: robieta

fbshipit-source-id: 6bd74788f06cdd673f3a2db898143d18c577eb42

Summary:

Fixes https://github.com/pytorch/pytorch/issues/47979

For the MHA module, it is preferred to use the combined weight branch as much as possible when query/key/value are the same (either equal in value according to `torch.equal`, or exactly the same object according to `is`). This PR enables the faster branch when a single object containing `nan` is passed to MHA.

For background:

```

import torch

a = torch.tensor([float('NaN'), 1, float('NaN'), 2, 3])

print(a is a) # True

print(torch.equal(a, a)) # False

```
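A plain-Python sketch of the resulting branch selection, with lists standing in for tensors. `elementwise_equal` mimics a value comparison in which NaN never equals itself, and `use_combined_weights` is a hypothetical name for the condition; neither is the actual code in `functional.py`.

```python
def elementwise_equal(a, b):
    """Value comparison; NaN never compares equal to itself."""
    return len(a) == len(b) and all(p == q for p, q in zip(a, b))

def use_combined_weights(query, key, value):
    # Identity is checked first, so a single object containing NaN
    # still takes the combined-weight branch.
    return ((query is key or elementwise_equal(query, key))
            and (key is value or elementwise_equal(key, value)))

x = [float("nan"), 1.0, 2.0]
print(elementwise_equal(x, x))              # value check alone fails
print(use_combined_weights(x, x, x))        # identity check succeeds
print(use_combined_weights(x, list(x), x))  # a copy with NaN does not
```

Checking identity before equality also avoids an elementwise comparison in the common case where the same tensor is passed three times.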

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48126

Reviewed By: gchanan

Differential Revision: D25042082

Pulled By: zhangguanheng66

fbshipit-source-id: 6bb17a520e176ddbb326ddf30ee091a84fcbbf27

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46758

It's in general helpful to support int32 indices and offsets, especially when such tensors are large and need to be transferred to accelerator backends. Since it may not be very useful to support the combination of int32 indices and int64 offsets, here we enforce that these two must have the same type.

Test Plan: unit tests

Reviewed By: ngimel

Differential Revision: D24470808

fbshipit-source-id: 94b8a1d0b7fc9fe3d128247aa042c04d7c227f0b

Summary:

Fix https://github.com/pytorch/pytorch/issues/44601

I added a bicubic grid sampler on both the CPU and CUDA sides, but not yet for AVX2.

There is a [colab notebook](https://colab.research.google.com/drive/1mIh6TLLj5WWM_NcmKDRvY5Gltbb781oU?usp=sharing) showing some test results. The notebook uses bilinear for the test, since I could only use the distributed version of PyTorch in it. You can download it and set `mode_torch=bicubic` to show the results.

There is some duplicated code for getting and setting values, because the helper function used in bilinear first clips coordinates beyond the boundary and then gets or sets the value. In bicubic, however, more points need to be considered. I can refactor that part after making sure the overall calculation is correct.
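To show why bicubic touches more points, here is a one-dimensional sketch of cubic-convolution weights: each output sample blends four neighbours per axis (16 in 2-D), versus two per axis for bilinear. The coefficient `A = -0.75` and the helper `cubic_weights` are assumptions for illustration and are not taken from this PR.

```python
A = -0.75  # assumed cubic-convolution coefficient

def cubic_weights(t: float):
    """Weights for the 4 neighbours at offsets -1, 0, 1, 2 (0 <= t < 1)."""
    def near(x):  # kernel for |x| <= 1
        return ((A + 2) * x - (A + 3)) * x * x + 1

    def far(x):   # kernel for 1 < |x| < 2
        return ((A * x - 5 * A) * x + 8 * A) * x - 4 * A

    return [far(t + 1), near(t), near(1 - t), far(2 - t)]

print(cubic_weights(0.0))
print(cubic_weights(0.5))
print(sum(cubic_weights(0.3)))
```

The weights sum to 1 for any `t`, and at `t = 0` all weight falls on the sample itself.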

Thanks

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44780

Reviewed By: mrshenli

Differential Revision: D24681114

Pulled By: mruberry

fbshipit-source-id: d39c8715e2093a5a5906cb0ef040d62bde578567

Summary:

Many of our functions contain the same warnings about the reproducibility of results. Make them use a common template.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45748

Reviewed By: colesbury

Differential Revision: D24089114

Pulled By: ngimel

fbshipit-source-id: e6aa4ce6082f6e0f4ce2713c2bf1864ee1c3712a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44433

Not entirely sure why, but changing the type of beta from `float` to `double` in autocast_mode.cpp and FunctionsManual.h fixes my compiler errors, failing instead at link time

fixing some type errors, updated fn signature in a few more files

removing my usage of Scalar, making beta a double everywhere instead

Test Plan: Imported from OSS

Reviewed By: mrshenli

Differential Revision: D23636720

Pulled By: bdhirsh

fbshipit-source-id: caea2a1f8dd72b3b5fd1d72dd886b2fcd690af6d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43680

As discussed [here](https://github.com/pytorch/pytorch/issues/43342),

adding in a Python-only implementation of the triplet-margin loss that takes a

custom distance function. Still discussing whether this is necessary to add to

PyTorch Core.

Test Plan:

python test/run_tests.py

Imported from OSS

Reviewed By: albanD

Differential Revision: D23363898

fbshipit-source-id: 1cafc05abecdbe7812b41deaa1e50ea11239d0cb

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44486

SmoothL1Loss had a completely different (and incorrect, see #43228) path when target.requires_grad was True.

This PR does the following:

1) adds derivative support for target via the normal derivatives.yaml route

2) kills the different (and incorrect) path for when target.requires_grad was True

3) modifies the SmoothL1Loss CriterionTests to verify that the target derivative is checked.
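Since the loss depends only on `input - target`, the gradient with respect to the target is the negation of the gradient with respect to the input. The sketch below checks this numerically for a scalar smooth L1 loss with a threshold of 1; `smooth_l1` and `numeric_grad` are hypothetical helpers, not the formulas in derivatives.yaml.

```python
def smooth_l1(x: float, t: float) -> float:
    """Scalar smooth L1 loss with the threshold fixed at 1."""
    d = abs(x - t)
    return 0.5 * d * d if d < 1.0 else d - 0.5

def numeric_grad(f, v: float, eps: float = 1e-6) -> float:
    """Central finite-difference derivative of f at v."""
    return (f(v + eps) - f(v - eps)) / (2 * eps)

x, t = 0.3, 1.0
grad_input = numeric_grad(lambda v: smooth_l1(v, t), x)
grad_target = numeric_grad(lambda v: smooth_l1(x, v), t)
print(grad_input, grad_target)
```

The two gradients have equal magnitude and opposite sign, which is what a correct target derivative should produce.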

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D23630699

Pulled By: gchanan

fbshipit-source-id: 0f94d1a928002122d6b6875182867618e713a917

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43025

- Use new overloads that better reflect the arguments to interpolate.

- More uniform interface for upsample ops allows simplifying the Python code.

- Also reorder overloads in native_functions.yaml to give them priority.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37177

ghstack-source-id: 106938111

Test Plan:

test_nn has pretty good coverage.

Relying on CI for ONNX, etc.

Didn't test FC because this change is *not* forward compatible.

To ensure backwards compatibility, I ran this code before this change

```python

import torch

def test_func(arg):
    interp = torch.nn.functional.interpolate
    with_size = interp(arg, size=(16,16))
    with_scale = interp(arg, scale_factor=[2.1, 2.2], recompute_scale_factor=False)
    with_compute = interp(arg, scale_factor=[2.1, 2.2])
    return (with_size, with_scale, with_compute)

traced_func = torch.jit.trace(test_func, torch.randn(1,1,1,1))

sample = torch.randn(1, 3, 7, 7)

output = traced_func(sample)

assert not torch.allclose(output[1], output[2])

torch.jit.save(traced_func, "model.pt")

torch.save((sample, output), "data.pt")

```

then this code after this change

```python

import torch

model = torch.jit.load("model.pt")
sample, golden = torch.load("data.pt")
result = model(sample)
for r, g in zip(result, golden):
    assert torch.allclose(r, g)

```

Reviewed By: AshkanAliabadi

Differential Revision: D21209991

fbshipit-source-id: 5b2ebb7c3ed76947361fe532d1dbdd6faa3544c8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44471

L1Loss had a completely different (and incorrect, see #43228) path when target.requires_grad was True.

This PR does the following:

1) adds derivative support for target via the normal derivatives.yaml route

2) kills the different (and incorrect) path for when target.requires_grad was True

3) modifies the L1Loss CriterionTests to verify that the target derivative is checked.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D23626008

Pulled By: gchanan

fbshipit-source-id: 2828be16b56b8dabe114962223d71b0e9a85f0f5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44437

MSELoss had a completely different (and incorrect, see https://github.com/pytorch/pytorch/issues/43228) path when target.requires_grad was True.

This PR does the following:

1) adds derivative support for target via the normal derivatives.yaml route

2) kills the different (and incorrect) path for when target.requires_grad was True

3) modifies the MSELoss CriterionTests to verify that the target derivative is checked.

TODO:

1) do we still need check_criterion_jacobian when we run grad/gradgrad checks?

2) ensure the Module tests check when target.requires_grad

3) do we actually test when reduction='none' and reduction='mean'?

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D23612166

Pulled By: gchanan

fbshipit-source-id: 4f74d38d8a81063c74e002e07fbb7837b2172a10

Summary:

According to pytorch/rfcs#3

From the goals in the RFC:

1. Support subclassing `torch.Tensor` in Python (done here)

2. Preserve `torch.Tensor` subclasses when calling `torch` functions on them (done here)

3. Use the PyTorch API with `torch.Tensor`-like objects that are _not_ `torch.Tensor`

subclasses (done in https://github.com/pytorch/pytorch/issues/30730)

4. Preserve `torch.Tensor` subclasses when calling `torch.Tensor` methods. (done here)

5. Propagating subclass instances correctly also with operators, using

views/slices/indexing/etc. (done here)

6. Preserve subclass attributes when using methods or views/slices/indexing. (done here)

7. A way to insert code that operates on both functions and methods uniformly

(so we can write a single function that overrides all operators). (done here)

8. The ability to give external libraries a way to also define

functions/methods that follow the `__torch_function__` protocol. (will be addressed in a separate PR)

This PR makes the following changes:

1. Adds the `self` argument to the arg parser.

2. Dispatches on `self` as well if `self` is not `nullptr`.

3. Adds a `torch._C.DisableTorchFunction` context manager to disable `__torch_function__`.

4. Adds a `torch::torch_function_enabled()` and `torch._C._torch_function_enabled()` to check the state of `__torch_function__`.

5. Dispatches all `torch._C.TensorBase` and `torch.Tensor` methods via `__torch_function__`.

TODO:

- [x] Sequence Methods

- [x] Docs

- [x] Tests

Closes https://github.com/pytorch/pytorch/issues/28361

Benchmarks in https://github.com/pytorch/pytorch/pull/37091#issuecomment-633657778

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37091

Reviewed By: ngimel

Differential Revision: D22765678

Pulled By: ezyang

fbshipit-source-id: 53f8aa17ddb8b1108c0997f6a7aa13cb5be73de0

Summary:

Raise and assert used to have a hard-coded error message, "Exception". The user-provided error message was ignored. This PR adds support for representing the user's error message in TorchScript.

This breaks backward compatibility because now we actually need to script the user's error message, which can potentially contain unscriptable expressions. Such programs can break when scripting, but saved models can still continue to work.

Increased an op count in test_mobile_optimizer.py because now we need aten::format to form the actual exception message.

This is built upon a WIP PR: https://github.com/pytorch/pytorch/pull/34112 by driazati

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41907

Reviewed By: ngimel

Differential Revision: D22778301

Pulled By: gmagogsfm

fbshipit-source-id: 2b94f0db4ae9fe70c4cd03f4048e519ea96323ad

Summary:

Current losses in PyTorch only include a (partial) implementation of Huber loss through `smooth l1` based on Fast RCNN, which essentially uses a delta value of 1. Renaming [`_smooth_l1_loss()`](3e1859959a/torch/nn/functional.py (L2487)) and refactoring it to include delta makes it possible to use the actual Huber function.

In addition, I have added functional and criterion versions for anyone who wants to set the delta explicitly, based on the functional `smooth_l1_loss()` and the criterion `Smooth_L1_Loss()`.
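For reference, a scalar sketch of the relationship described here, using the textbook Huber definition. Both helpers are hypothetical plain-Python functions for illustration; the names and signatures of the functions added by this PR may differ.

```python
def huber(x: float, t: float, delta: float = 1.0) -> float:
    """Textbook Huber loss: quadratic near zero, linear beyond delta."""
    d = abs(x - t)
    if d <= delta:
        return 0.5 * d * d
    return delta * (d - 0.5 * delta)

def smooth_l1(x: float, t: float) -> float:
    """Smooth L1 as in Fast R-CNN, i.e. a fixed threshold of 1."""
    d = abs(x - t)
    return 0.5 * d * d if d < 1.0 else d - 0.5

for diff in (0.2, 1.0, 3.0):
    print(diff, huber(diff, 0.0), smooth_l1(diff, 0.0))

print(huber(3.0, 0.0, delta=2.0))  # a different delta changes the linear part
```

With `delta=1` the two functions agree everywhere, so exposing `delta` generalises the existing loss rather than replacing it.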

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37599

Differential Revision: D21559311

Pulled By: vincentqb

fbshipit-source-id: 34b2a5a237462e119920d6f55ba5ab9b8e086a8c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41575

Fixes https://github.com/pytorch/pytorch/issues/34294

This updates the C++ argument parser to correctly handle `TensorList` operands. I've also included a number of updates to the testing infrastructure, this is because we're now doing a much more careful job of testing the signatures of aten kernels, using the type information about the arguments as read in from `Declarations.yaml`. The changes to the tests are required because we're now only checking for `__torch_function__` attributes on `Tensor`, `Optional[Tensor]` and elements of `TensorList` operands, whereas before we were checking for `__torch_function__` on all operands, so the relatively simplistic approach the tests were using before -- assuming all positional arguments might be tensors -- doesn't work anymore. I now think that checking for `__torch_function__` on all operands was a mistake in the original design.

The updates to the signatures of the `lambda` functions are to handle this new, more stringent checking of signatures.

I also added override support for `torch.nn.functional.threshold` and `torch.nn.functional.layer_norm`, which did not yet have python-level support.

Benchmarks are still WIP.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34725

Reviewed By: mruberry

Differential Revision: D22357738

Pulled By: ezyang

fbshipit-source-id: 0e7f4a58517867b2e3f193a0a8390e2ed294e1f3

Summary:

BCELoss currently uses different broadcasting semantics than numpy. Since previous versions of PyTorch have thrown a warning in these cases telling the user that input sizes should match, and since the CUDA and CPU results differ when sizes do not match, it makes sense to upgrade the size mismatch warning to an error.
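The stricter behaviour can be sketched in plain Python for one-dimensional inputs. `bce_loss` is a hypothetical helper with a mean reduction, and the error type and message are assumptions for illustration, not the exact ones raised by PyTorch.

```python
import math

def bce_loss(inputs, targets):
    """Mean binary cross-entropy over two equally sized 1-D sequences."""
    if len(inputs) != len(targets):
        # Mismatched sizes are an error rather than a warning
        # followed by broadcasting.
        raise ValueError(
            f"target size ({len(targets)}) must match input size ({len(inputs)})"
        )
    losses = [-(t * math.log(p) + (1 - t) * math.log(1 - p))
              for p, t in zip(inputs, targets)]
    return sum(losses) / len(losses)

print(bce_loss([0.5, 0.5], [1.0, 0.0]))
try:
    bce_loss([0.5, 0.5], [1.0])
except ValueError as err:
    print("error:", err)
```

Failing early keeps results identical across devices, since no backend gets to apply its own interpretation of a size mismatch.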

We can consider supporting numpy broadcasting semantics in BCELoss in the future if needed.

Closes https://github.com/pytorch/pytorch/issues/40023

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41426

Reviewed By: zou3519

Differential Revision: D22540841

Pulled By: ezyang

fbshipit-source-id: 6c6d94c78fa0ae30ebe385d05a9e3501a42b3652

Summary:

This is a duplicate of https://github.com/pytorch/pytorch/pull/38362

"This PR completes Interpolate's deprecation process for recomputing the scales values, by updating the default value of the parameter recompute_scale_factor as planned for pytorch 1.6.0.

The warning message is also updated accordingly."

I'm recreating this PR as previous one is not being updated.

cc gchanan

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39453

Reviewed By: hl475

Differential Revision: D21955284

Pulled By: houseroad

fbshipit-source-id: 911585d39273a9f8de30d47e88f57562216968d8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37173

This function is only called in one place, so inline it. This eliminates

boilerplate related to overloads and allows for further simplification

of shared logic in later diffs.

All shared local variables have the same names (from closed_over_args),

and no local variables accidentally collide.

ghstack-source-id: 106938108

Test Plan: Existing tests for interpolate.

Differential Revision: D21209995

fbshipit-source-id: acfadf31936296b2aac0833f704764669194b06f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37172

This improves readability by keeping cases with similar behavior close

together. It should also have a very tiny positive impact on perf.

ghstack-source-id: 106938109

Test Plan: Existing tests for interpolate.

Differential Revision: D21209996

fbshipit-source-id: c813e56aa6ba7370b89a2784fcb62cc146005258

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37171

Every one of these branches returns or raises, so there's no need for elif.

This makes it a little easier to reorder and move conditions.

ghstack-source-id: 106938110

Test Plan: Existing test for interpolate.

Differential Revision: D21209992

fbshipit-source-id: 5c517e61ced91464b713f7ccf53349b05e27461c

Summary:

## Description

* Updated assert statement to remove check on 3rd dimension (features) for keys and values in MultiheadAttention / Transform

* The feature dimension for keys and values can now be of different sizes

* Refer to https://github.com/pytorch/pytorch/issues/27623

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39402

Reviewed By: zhangguanheng66

Differential Revision: D21841678

Pulled By: Nayef211

fbshipit-source-id: f0c9e5e0f33259ae2abb6bf9e7fb14e3aa9008eb

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38478

Before this PR, the QAT ConvBN module inlined the batch normalization code

in order to reproduce Conv+BN folding.

This PR updates the module to use BN directly. This is mathematically

equivalent to previous behavior as long as we properly scale

and fake quant the conv weights, but allows us to reuse the BN code

instead of reimplementing it.

In particular, this should help with speed since we can use dedicated

BN kernels, and also with DDP since we can hook up SyncBatchNorm.

Test Plan:

```

python test/test_quantization.py TestQATModule

```

Imported from OSS

Differential Revision: D21603230

fbshipit-source-id: ecf8afdd833b67c2fbd21a8fd14366079fa55e64

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/38120

Test Plan: build docs locally and attach a screenshot to this PR.

Differential Revision: D21477815

Pulled By: zou3519

fbshipit-source-id: 420bbcfcbd191d1a8e33cdf4a90c95bf00a5d226

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37169

This allows some cleanup of the code below by making lines shorter.

ghstack-source-id: 102773593

Test Plan: Existing tests for interpolate.

Reviewed By: kimishpatel

Differential Revision: D21209988

fbshipit-source-id: cffcdf9a580b15c4f1fa83e3f27b5a69f66bf6f7

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37168

It looks like this was made a separate function because of the `dim` argument,

but that argument is always equal to `input.dim() - 2`. Remove the argument

and consolidate all call sites into one. This also means that this will be

called on paths that previously didn't call it, but all those cases throw

exceptions anyway.

ghstack-source-id: 102773596

Test Plan: Existing tests for interpolate.

Reviewed By: kimishpatel

Differential Revision: D21209993

fbshipit-source-id: 2c274a3a6900ebfdb8d60b311a4c3bd956fa7c37

Summary:

xref gh-32838, gh-34032

This is a major refactor of parts of the documentation to split it up using sphinx's `autosummary` feature, which will build out `autofunction` and `autoclass` stub files and link to them. The end result is that the top module pages like torch.nn.rst and torch.rst are now more like tables of contents for the actual single-class or single-function documentation pages.

Along the way, I modified many of the docstrings to eliminate sphinx warnings when building. I think the only thing I changed from a non-documentation perspective is to add names to `__all__` when adding them to `globals()` in `torch.__init__.py`

I do not know the CI system: are the documentation build artifacts available after the build, so reviewers can preview before merging?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37419

Differential Revision: D21337640

Pulled By: ezyang

fbshipit-source-id: d4ad198780c3ae7a96a9f22651e00ff2d31a0c0f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/36815

PyTorch does not have a native channel shuffle op.

This diff adds one for both fp and quantized tensors.

The FP implementation is an inefficient one. For quantized tensors there is a native

QNNPACK op for this.
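For readers unfamiliar with the op, channel shuffle can be sketched on a flat list of channels: view the channels as `(groups, channels_per_group)`, transpose, and flatten. `channel_shuffle` below is a hypothetical plain-Python helper, not the implementation added in this diff.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups (reshape, transpose, flatten)."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # View as (groups, per_group), transpose to (per_group, groups), flatten.
    return [channels[g * per_group + k]
            for k in range(per_group)
            for g in range(groups)]

print(channel_shuffle([0, 1, 2, 3, 4, 5], groups=2))
```

With 6 channels and 2 groups, the groups `[0, 1, 2]` and `[3, 4, 5]` are interleaved element by element.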

ghstack-source-id: 103267234

Test Plan:

buck run caffe2/test:quantization --

quantization.test_quantized.TestQuantizedOps.test_channel_shuffle

X86 implementation for QNNPACK is sse2 so this may not be the most efficient

for x86.

Reviewed By: dreiss

Differential Revision: D21093841

fbshipit-source-id: 5282945f352df43fdffaa8544fe34dba99a5b97e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37539

Bug fix

Test Plan:

This passed fbtranslate local integration test when I toggle fp16 to true on GPU.

Also it passed in with D21312488

Reviewed By: zhangguanheng66

Differential Revision: D21311505

fbshipit-source-id: 7ebd7375ef2c1b2ba4ac6fe7be5e7be1a490a319

Summary:

This PR fixes a couple of syntax errors in `torch/` that prevent MyPy from running, fixes simple type annotation errors (e.g. missing `from typing import List, Tuple, Optional`), and adds granular ignores for errors in particular modules as well as for missing typing in third party packages.

As a result, running `mypy` in the root dir of the repo now runs on:

- `torch/`

- `aten/src/ATen/function_wrapper.py` (the only file already covered in CI)

In CI this runs on GitHub Actions, job Lint, sub-job "quick-checks", task "MyPy typecheck". It should give (right now): `Success: no issues found in 329 source files`.

Here are the details of the original 855 errors when running `mypy torch` on current master (after fixing the couple of syntax errors that prevent `mypy` from running through):

<details>

```

torch/utils/tensorboard/_proto_graph.py:1: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.node_def_pb2'

torch/utils/tensorboard/_proto_graph.py:2: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.attr_value_pb2'

torch/utils/tensorboard/_proto_graph.py:3: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.tensor_shape_pb2'

torch/utils/backcompat/__init__.py:1: error: Cannot find implementation or library stub for module named 'torch._C'

torch/for_onnx/__init__.py:1: error: Cannot find implementation or library stub for module named 'torch.for_onnx.onnx'

torch/cuda/nvtx.py:2: error: Cannot find implementation or library stub for module named 'torch._C'

torch/utils/show_pickle.py:59: error: Name 'pickle._Unpickler' is not defined

torch/utils/show_pickle.py:113: error: "Type[PrettyPrinter]" has no attribute "_dispatch"

torch/utils/tensorboard/_onnx_graph.py:1: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.graph_pb2'

torch/utils/tensorboard/_onnx_graph.py:2: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.node_def_pb2'

torch/utils/tensorboard/_onnx_graph.py:3: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.versions_pb2'

torch/utils/tensorboard/_onnx_graph.py:4: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.attr_value_pb2'

torch/utils/tensorboard/_onnx_graph.py:5: error: Cannot find implementation or library stub for module named 'tensorboard.compat.proto.tensor_shape_pb2'

torch/utils/tensorboard/_onnx_graph.py:9: error: Cannot find implementation or library stub for module named 'onnx'

torch/contrib/_tensorboard_vis.py:10: error: Cannot find implementation or library stub for module named 'tensorflow.core.util'

torch/contrib/_tensorboard_vis.py:11: error: Cannot find implementation or library stub for module named 'tensorflow.core.framework'

torch/contrib/_tensorboard_vis.py:12: error: Cannot find implementation or library stub for module named 'tensorflow.python.summary.writer.writer'

torch/utils/hipify/hipify_python.py:43: error: Need type annotation for 'CAFFE2_TEMPLATE_MAP' (hint: "CAFFE2_TEMPLATE_MAP: Dict[<type>, <type>] = ...")

torch/utils/hipify/hipify_python.py:636: error: "object" has no attribute "items"

torch/nn/_reduction.py:27: error: Name 'Optional' is not defined

torch/nn/_reduction.py:27: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/nn/_reduction.py:47: error: Name 'Optional' is not defined

torch/nn/_reduction.py:47: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/utils/tensorboard/_utils.py:17: error: Skipping analyzing 'matplotlib.pyplot': found module but no type hints or library stubs

torch/utils/tensorboard/_utils.py:17: error: Skipping analyzing 'matplotlib': found module but no type hints or library stubs

torch/utils/tensorboard/_utils.py:18: error: Skipping analyzing 'matplotlib.backends.backend_agg': found module but no type hints or library stubs

torch/utils/tensorboard/_utils.py:18: error: Skipping analyzing 'matplotlib.backends': found module but no type hints or library stubs

torch/nn/modules/utils.py:27: error: Name 'List' is not defined

torch/nn/modules/utils.py:27: note: Did you forget to import it from "typing"? (Suggestion: "from typing import List")

caffe2/proto/caffe2_pb2.py:17: error: Unexpected keyword argument "serialized_options" for "FileDescriptor"; did you mean "serialized_pb"?

caffe2/proto/caffe2_pb2.py:25: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/caffe2_pb2.py:31: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:35: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:39: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:43: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:47: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:51: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:55: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:59: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:63: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:67: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:71: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:75: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:102: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/caffe2_pb2.py:108: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:112: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:124: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/caffe2_pb2.py:130: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:134: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:138: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:142: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:146: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:150: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:154: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:158: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:162: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:166: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:170: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:174: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:178: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:182: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:194: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/caffe2_pb2.py:200: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:204: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:208: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:212: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:224: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/caffe2_pb2.py:230: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:234: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:238: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:242: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:246: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:250: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:254: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/caffe2_pb2.py:267: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:274: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:281: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:288: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:295: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:302: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:327: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:334: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:341: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:364: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:371: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:378: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:385: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:392: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:399: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:406: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:413: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:420: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:427: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:434: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:441: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:448: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:455: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:462: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:488: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:495: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:502: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:509: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:516: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:523: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:530: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:537: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:544: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:551: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:558: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:565: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:572: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:596: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:603: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:627: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:634: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:641: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:648: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:655: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:662: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:686: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:693: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:717: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:724: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:731: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:738: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:763: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:770: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:777: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:784: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:808: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:815: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:822: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:829: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:836: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:843: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:850: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:857: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:864: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:871: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:878: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:885: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:892: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:916: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:923: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:930: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:937: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:944: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:951: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:958: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:982: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:989: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:996: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1003: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1010: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1017: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1024: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1031: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1038: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1045: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1052: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1059: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1066: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1090: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1097: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1104: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1128: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1135: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1142: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1166: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1173: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1180: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1187: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1194: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1218: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1225: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1232: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1239: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1246: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1253: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1260: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1267: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1274: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1281: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1305: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1312: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1319: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1326: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1333: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1340: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1347: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1354: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1361: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1368: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1375: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1382: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1389: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1396: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1420: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1427: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1434: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1441: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1465: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1472: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1479: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1486: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1493: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1500: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1507: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1514: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1538: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/caffe2_pb2.py:1545: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1552: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1559: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1566: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/caffe2_pb2.py:1667: error: "GeneratedProtocolMessageType" has no attribute "Segment"

torch/multiprocessing/queue.py:4: error: No library stub file for standard library module 'multiprocessing.reduction'

caffe2/proto/torch_pb2.py:18: error: Unexpected keyword argument "serialized_options" for "FileDescriptor"; did you mean "serialized_pb"?

caffe2/proto/torch_pb2.py:27: error: Unexpected keyword argument "serialized_options" for "EnumDescriptor"

caffe2/proto/torch_pb2.py:33: error: Unexpected keyword argument "serialized_options" for "EnumValueDescriptor"

caffe2/proto/torch_pb2.py:50: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:57: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:81: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:88: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:95: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:102: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:109: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:116: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:123: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:130: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:137: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:144: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:151: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:175: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:182: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:189: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:196: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:220: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:227: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:234: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:241: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:265: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:272: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:279: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:286: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:293: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:300: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:307: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:314: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:321: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:328: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:335: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:342: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:366: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:373: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:397: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/torch_pb2.py:404: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:411: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:418: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:425: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/torch_pb2.py:432: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:17: error: Unexpected keyword argument "serialized_options" for "FileDescriptor"; did you mean "serialized_pb"?

caffe2/proto/metanet_pb2.py:29: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:36: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:43: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:50: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:57: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:64: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:88: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:95: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:102: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:126: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:133: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:140: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:164: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:171: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:178: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:202: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:209: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:216: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:240: error: Unexpected keyword argument "serialized_options" for "Descriptor"

caffe2/proto/metanet_pb2.py:247: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:254: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:261: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:268: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:275: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:282: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:289: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/metanet_pb2.py:296: error: Unexpected keyword argument "serialized_options" for "FieldDescriptor"

caffe2/proto/__init__.py:13: error: Skipping analyzing 'caffe2.caffe2.fb.session.proto': found module but no type hints or library stubs

torch/multiprocessing/pool.py:3: error: No library stub file for standard library module 'multiprocessing.util'

torch/multiprocessing/pool.py:3: note: (Stub files are from https://github.com/python/typeshed)

caffe2/python/scope.py:10: error: Skipping analyzing 'past.builtins': found module but no type hints or library stubs

caffe2/python/__init__.py:7: error: Module has no attribute "CPU"

caffe2/python/__init__.py:8: error: Module has no attribute "CUDA"

caffe2/python/__init__.py:9: error: Module has no attribute "MKLDNN"

caffe2/python/__init__.py:10: error: Module has no attribute "OPENGL"

caffe2/python/__init__.py:11: error: Module has no attribute "OPENCL"

caffe2/python/__init__.py:12: error: Module has no attribute "IDEEP"

caffe2/python/__init__.py:13: error: Module has no attribute "HIP"

caffe2/python/__init__.py:14: error: Module has no attribute "COMPILE_TIME_MAX_DEVICE_TYPES"; maybe "PROTO_COMPILE_TIME_MAX_DEVICE_TYPES"?

caffe2/python/__init__.py:15: error: Module has no attribute "ONLY_FOR_TEST"; maybe "PROTO_ONLY_FOR_TEST"?

caffe2/python/__init__.py:34: error: Item "_Loader" of "Optional[_Loader]" has no attribute "exec_module"

caffe2/python/__init__.py:34: error: Item "None" of "Optional[_Loader]" has no attribute "exec_module"

caffe2/python/__init__.py:35: error: Module has no attribute "cuda"

caffe2/python/__init__.py:37: error: Module has no attribute "cuda"

caffe2/python/__init__.py:49: error: Module has no attribute "add_dll_directory"

torch/random.py:4: error: Cannot find implementation or library stub for module named 'torch._C'

torch/_classes.py:2: error: Cannot find implementation or library stub for module named 'torch._C'

torch/onnx/__init__.py:1: error: Cannot find implementation or library stub for module named 'torch._C'

torch/hub.py:21: error: Skipping analyzing 'tqdm.auto': found module but no type hints or library stubs

torch/hub.py:24: error: Skipping analyzing 'tqdm': found module but no type hints or library stubs

torch/hub.py:27: error: Name 'tqdm' already defined (possibly by an import)

torch/_tensor_str.py:164: error: Not all arguments converted during string formatting

torch/_ops.py:1: error: Cannot find implementation or library stub for module named 'torch._C'

torch/_linalg_utils.py:26: error: Name 'Optional' is not defined

torch/_linalg_utils.py:26: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_linalg_utils.py:26: error: Name 'Tensor' is not defined

torch/_linalg_utils.py:63: error: Name 'Tensor' is not defined

torch/_linalg_utils.py:63: error: Name 'Optional' is not defined

torch/_linalg_utils.py:63: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_linalg_utils.py:70: error: Name 'Optional' is not defined

torch/_linalg_utils.py:70: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_linalg_utils.py:70: error: Name 'Tensor' is not defined

torch/_linalg_utils.py:88: error: Name 'Tensor' is not defined

torch/_linalg_utils.py:88: error: Name 'Optional' is not defined

torch/_linalg_utils.py:88: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_linalg_utils.py:88: error: Name 'Tuple' is not defined

torch/_linalg_utils.py:88: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/_jit_internal.py:17: error: Need type annotation for 'boolean_dispatched'

torch/_jit_internal.py:474: error: Need type annotation for '_overloaded_fns' (hint: "_overloaded_fns: Dict[<type>, <type>] = ...")

torch/_jit_internal.py:512: error: Need type annotation for '_overloaded_methods' (hint: "_overloaded_methods: Dict[<type>, <type>] = ...")

torch/_jit_internal.py:648: error: Incompatible types in assignment (expression has type "FinalCls", variable has type "_SpecialForm")

torch/sparse/__init__.py:11: error: Name 'Tensor' is not defined

torch/sparse/__init__.py:71: error: Name 'Tensor' is not defined

torch/sparse/__init__.py:71: error: Name 'Optional' is not defined

torch/sparse/__init__.py:71: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/sparse/__init__.py:71: error: Name 'Tuple' is not defined

torch/sparse/__init__.py:71: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/nn/init.py:109: error: Name 'Tensor' is not defined

torch/nn/init.py:126: error: Name 'Tensor' is not defined

torch/nn/init.py:142: error: Name 'Tensor' is not defined

torch/nn/init.py:165: error: Name 'Tensor' is not defined

torch/nn/init.py:180: error: Name 'Tensor' is not defined

torch/nn/init.py:194: error: Name 'Tensor' is not defined

torch/nn/init.py:287: error: Name 'Tensor' is not defined

torch/nn/init.py:315: error: Name 'Tensor' is not defined

torch/multiprocessing/reductions.py:8: error: No library stub file for standard library module 'multiprocessing.util'

torch/multiprocessing/reductions.py:9: error: No library stub file for standard library module 'multiprocessing.reduction'

torch/multiprocessing/reductions.py:17: error: No library stub file for standard library module 'multiprocessing.resource_sharer'

torch/jit/_builtins.py:72: error: Module has no attribute "_no_grad_embedding_renorm_"

torch/jit/_builtins.py:80: error: Module has no attribute "stft"

torch/jit/_builtins.py:81: error: Module has no attribute "cdist"

torch/jit/_builtins.py:82: error: Module has no attribute "norm"

torch/jit/_builtins.py:83: error: Module has no attribute "nuclear_norm"

torch/jit/_builtins.py:84: error: Module has no attribute "frobenius_norm"

torch/backends/cudnn/__init__.py:8: error: Cannot find implementation or library stub for module named 'torch._C'

torch/backends/cudnn/__init__.py:86: error: Need type annotation for '_handles' (hint: "_handles: Dict[<type>, <type>] = ...")

torch/autograd/profiler.py:13: error: Name 'ContextDecorator' already defined (possibly by an import)

torch/autograd/function.py:2: error: Cannot find implementation or library stub for module named 'torch._C'

torch/autograd/function.py:2: note: See https://mypy.readthedocs.io/en/latest/running_mypy.html#missing-imports

torch/autograd/function.py:109: error: Unsupported dynamic base class "with_metaclass"

torch/serialization.py:609: error: "Callable[[Any], Any]" has no attribute "cache"

torch/_lowrank.py:11: error: Name 'Tensor' is not defined

torch/_lowrank.py:13: error: Name 'Optional' is not defined

torch/_lowrank.py:13: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_lowrank.py:14: error: Name 'Optional' is not defined

torch/_lowrank.py:14: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_lowrank.py:14: error: Name 'Tensor' is not defined

torch/_lowrank.py:82: error: Name 'Tensor' is not defined

torch/_lowrank.py:82: error: Name 'Optional' is not defined

torch/_lowrank.py:82: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_lowrank.py:82: error: Name 'Tuple' is not defined

torch/_lowrank.py:82: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/_lowrank.py:130: error: Name 'Tensor' is not defined

torch/_lowrank.py:130: error: Name 'Optional' is not defined

torch/_lowrank.py:130: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_lowrank.py:130: error: Name 'Tuple' is not defined

torch/_lowrank.py:130: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/_lowrank.py:167: error: Name 'Tensor' is not defined

torch/_lowrank.py:167: error: Name 'Optional' is not defined

torch/_lowrank.py:167: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Optional")

torch/_lowrank.py:167: error: Name 'Tuple' is not defined

torch/_lowrank.py:167: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/quantization/observer.py:45: error: Variable "torch.quantization.observer.ABC" is not valid as a type

torch/quantization/observer.py:45: note: See https://mypy.readthedocs.io/en/latest/common_issues.html#variables-vs-type-aliases

torch/quantization/observer.py:45: error: Invalid base class "ABC"

torch/quantization/observer.py:127: error: Name 'Tensor' is not defined

torch/quantization/observer.py:127: error: Name 'Tuple' is not defined

torch/quantization/observer.py:127: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/quantization/observer.py:172: error: Module has no attribute "per_tensor_symmetric"

torch/quantization/observer.py:172: error: Module has no attribute "per_channel_symmetric"

torch/quantization/observer.py:192: error: Name 'Tensor' is not defined

torch/quantization/observer.py:192: error: Name 'Tuple' is not defined

torch/quantization/observer.py:192: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/quantization/observer.py:233: error: Module has no attribute "per_tensor_symmetric"

torch/quantization/observer.py:233: error: Module has no attribute "per_channel_symmetric"

torch/quantization/observer.py:534: error: Name 'Tensor' is not defined

torch/quantization/observer.py:885: error: Name 'Tensor' is not defined

torch/quantization/observer.py:885: error: Name 'Tuple' is not defined

torch/quantization/observer.py:885: note: Did you forget to import it from "typing"? (Suggestion: "from typing import Tuple")

torch/quantization/observer.py:894: error: Cannot determine type of 'max_val'

torch/quantization/observer.py:894: error: Cannot determine type of 'min_val'

torch/quantization/observer.py:899: error: Cannot determine type of 'min_val'

torch/quantization/observer.py:902: error: Name 'Tensor' is not defined

torch/quantization/observer.py:925: error: Name 'Tensor' is not defined

torch/quantization/observer.py:928: error: Cannot determine type of 'min_val'

torch/quantization/observer.py:929: error: Cannot determine type of 'max_val'

torch/quantization/observer.py:946: error: Argument "min" to "histc" has incompatible type "Tuple[Tensor, Tensor]"; expected "Union[int, float, bool]"

torch/quantization/observer.py:946: error: Argument "max" to "histc" has incompatible type "Tuple[Tensor, Tensor]"; expected "Union[int, float, bool]"

torch/quantization/observer.py:1056: error: Module has no attribute "per_tensor_symmetric"

torch/quantization/observer.py:1058: error: Module has no attribute "per_channel_symmetric"

torch/nn/quantized/functional.py:76: error: Name 'Tensor' is not defined

torch/nn/quantized/functional.py:76: error: Name 'BroadcastingList2' is not defined

torch/nn/quantized/functional.py:259: error: Name 'Tensor' is not defined