Rohit Singh Rathaur

c4565c3b94

[distributed] Replace 164 assert statements in fsdp directory (#165235)

Replace assert statements with explicit if/raise patterns across 20 files:

- _optim_utils.py (38 asserts)

- _flat_param.py (25 asserts)

- _fully_shard/_fsdp_param.py (23 asserts)

- sharded_grad_scaler.py (12 asserts)

- fully_sharded_data_parallel.py (11 asserts)

- wrap.py (10 asserts)

- _state_dict_utils.py (9 asserts)

- _fully_shard/_fsdp_param_group.py (8 asserts)

- _runtime_utils.py (6 asserts)

- _init_utils.py (6 asserts)

- 10 additional files (16 asserts)

This keeps the checks active when Python runs with the `-O` flag, which strips `assert` statements.
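A minimal sketch of why the rewrite matters. It assumes the replacement raises `AssertionError` (the exact exception type each call site uses is not shown here), and the check functions are hypothetical. The same source is compiled twice with the built-in `compile()`: `optimize=1` behaves like `python -O`, which drops `assert` statements but leaves an explicit `if`/`raise` in place.

```python
# Hypothetical checks; the names are illustrative, not taken from FSDP.
SOURCE = '''
def check_with_assert(numel):
    assert numel > 0, f"expected a non-empty flat parameter, got numel={numel}"
    return numel

def check_with_raise(numel):
    if numel <= 0:
        raise AssertionError(f"expected a non-empty flat parameter, got numel={numel}")
    return numel
'''


def load_checks(optimize: int) -> dict:
    """Compile SOURCE at the given optimization level and return its namespace.

    optimize=0 behaves like a normal run; optimize=1 behaves like `python -O`,
    which removes assert statements from the compiled bytecode.
    """
    namespace: dict = {}
    exec(compile(SOURCE, "<sketch>", "exec", optimize=optimize), namespace)
    return namespace


def raises_assertion(fn, arg) -> bool:
    """Return True if calling fn(arg) raises AssertionError."""
    try:
        fn(arg)
    except AssertionError:
        return True
    return False


if __name__ == "__main__":
    for level in (0, 1):
        checks = load_checks(level)
        print(
            f"optimize={level}:",
            "assert fires:", raises_assertion(checks["check_with_assert"], 0),
            "| if/raise fires:", raises_assertion(checks["check_with_raise"], 0),
        )
```

At `optimize=1` the assert-based check silently accepts invalid input, while the if/raise version still raises.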

Partially fixes #164878

Pull Request resolved: https://github.com/pytorch/pytorch/pull/165235

Approved by: https://github.com/albanD

2025-10-14 18:04:57 +00:00

Xuehai Pan

4ccc0381de

[BE][5/16] fix typos in torch/ (torch/distributed/) (#156315)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/156315

Approved by: https://github.com/Skylion007, https://github.com/albanD

ghstack dependencies: #156313, #156314

2025-06-23 02:57:28 +00:00

PyTorch MergeBot

145d4cdc11

Revert "[BE][5/16] fix typos in torch/ (torch/distributed/) (#156315)"

This reverts commit c2f0292bd5.

Reverted https://github.com/pytorch/pytorch/pull/156315 on behalf of https://github.com/atalman due to export/test_torchbind.py::TestCompileTorchbind::test_compile_error_on_input_aliasing_contents_backend_aot_eager ([GH job link](https://github.com/pytorch/pytorch/actions/runs/15804799771/job/44548489912), HUD commit link: c95f7fa874, [comment](https://github.com/pytorch/pytorch/pull/156313#issuecomment-2994171213))

2025-06-22 12:31:57 +00:00

Xuehai Pan

c2f0292bd5

[BE][5/16] fix typos in torch/ (torch/distributed/) (#156315)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/156315

Approved by: https://github.com/Skylion007, https://github.com/albanD

ghstack dependencies: #156313, #156314

2025-06-22 08:43:26 +00:00

Aaron Orenstein

c64e657632

PEP585 update - torch/distributed/fsdp (#145162)

See #145101 for details.
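PEP 585 (Python 3.9+) allows the built-in collection types to be subscripted directly in annotations, so `typing.Tuple`, `typing.Dict`, and `typing.List` give way to `tuple`, `dict`, and `list`. A small before/after sketch; `shard_ranges` is a hypothetical helper, not code from the FSDP tree.

```python
from typing import get_type_hints

# Before (typing aliases, deprecated since Python 3.9):
#   from typing import Dict, List, Tuple
#   def shard_ranges(numels: List[int], world_size: int) -> Dict[int, Tuple[int, int]]: ...


# After PEP 585: subscript the built-in types directly.
def shard_ranges(numels: list[int], world_size: int) -> dict[int, tuple[int, int]]:
    """Hypothetical helper: give each rank a [start, end) slice of the concatenated numels."""
    total = sum(numels)
    chunk = -(-total // world_size)  # ceiling division so every element is covered
    return {
        rank: (min(rank * chunk, total), min((rank + 1) * chunk, total))
        for rank in range(world_size)
    }


if __name__ == "__main__":
    print(shard_ranges([3, 4], world_size=2))
    print(get_type_hints(shard_ranges)["return"])
```

The annotations resolve to `types.GenericAlias` objects at runtime, so no `typing` import is needed for them.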

Pull Request resolved: https://github.com/pytorch/pytorch/pull/145162

Approved by: https://github.com/bobrenjc93

2025-01-19 20:04:05 +00:00

bobrenjc93

08be9ec312

Migrate from Tuple -> tuple in torch/distributed (#144258)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/144258

Approved by: https://github.com/aorenste

2025-01-10 08:34:54 +00:00

Xuehai Pan

3b798df853

[BE][Easy] enable UFMT for torch/distributed/{fsdp,optim,rpc}/ (#128869)

Part of #123062

- #123062

Pull Request resolved: https://github.com/pytorch/pytorch/pull/128869

Approved by: https://github.com/fegin

ghstack dependencies: #128868

2024-06-18 21:49:08 +00:00

Aaron Orenstein

7c12cc7ce4

Flip default value for mypy disallow_untyped_defs [6/11] (#127843)

See #127836 for details.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/127843

Approved by: https://github.com/oulgen

ghstack dependencies: #127842

2024-06-08 18:49:29 +00:00

Chien-Chin Huang

0dd53650dd

[BE][FSDP] Change the logging level to info (#126362)

As title

Differential Revision: [D57419445](https://our.internmc.facebook.com/intern/diff/D57419445/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/126362

Approved by: https://github.com/awgu, https://github.com/Skylion007

2024-05-16 17:31:06 +00:00

Chien-Chin Huang

2ea38498b0

[FSDP][BE] Only show state_dict log when the debug level is detail (#118196)

As title
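A minimal sketch of gating verbose state_dict logs on the debug level. It assumes the level comes from the `TORCH_DISTRIBUTED_DEBUG` environment variable (values `OFF`, `INFO`, `DETAIL`). The helper and logger names are hypothetical and this is not the FSDP implementation; it only shows the pattern of logging at INFO while emitting state_dict timing only under DETAIL.

```python
import logging
import os

logger = logging.getLogger("fsdp_sketch")  # hypothetical logger name


def debug_level() -> str:
    """Read the distributed debug level; defaults to OFF when unset."""
    return os.environ.get("TORCH_DISTRIBUTED_DEBUG", "OFF").upper()


def log_state_dict_timing(fqn: str, seconds: float) -> bool:
    """Log a state_dict timing line only at DETAIL; return whether it was logged."""
    if debug_level() != "DETAIL":
        return False
    logger.info("state_dict for %s took %.3f s", fqn, seconds)
    return True


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    os.environ["TORCH_DISTRIBUTED_DEBUG"] = "DETAIL"
    log_state_dict_timing("layer1.weight", 0.012)
```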

Differential Revision: [D53038704](https://our.internmc.facebook.com/intern/diff/D53038704/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/118196

Approved by: https://github.com/rohan-varma, https://github.com/wz337

ghstack dependencies: #118197, #118195

2024-01-26 09:52:36 +00:00

Matthew Hoffman

68b0db1274

Define the public API for torch.distributed.fsdp (#109922)

Related: https://github.com/pytorch/pytorch/wiki/Public-API-definition-and-documentation

Related: https://github.com/microsoft/pylance-release/issues/2953

This fixes Pylance errors like the following for the classes listed below:

```

"FullyShardedDataParallel" is not exported from module "torch.distributed.fsdp"

```

These classes all have public docs:

* [`BackwardPrefetch`](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.BackwardPrefetch)

* [`CPUOffload`](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.CPUOffload)

* [`FullyShardedDataParallel`](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.FullyShardedDataParallel)

* [`MixedPrecision`](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.MixedPrecision)

* [`ShardingStrategy`](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.ShardingStrategy)

The newly added classes also appear set to get docs once they are released.
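A small sketch of the mechanism, assuming the public API is declared through `__all__` (the standard way to list a module's public names). In typed libraries, Pylance (built on Pyright) treats names imported into a module as private unless they are re-exported, for example by listing them in `__all__`. The throwaway module and every name except the class name are hypothetical.

```python
import sys
import types

# Build a throwaway module that stands in for a package __init__.py.
pkg = types.ModuleType("fsdp_sketch")


class FullyShardedDataParallel:
    """Placeholder class; the real one lives in torch.distributed.fsdp."""


def _internal_helper():
    return "not public"


pkg.FullyShardedDataParallel = FullyShardedDataParallel
pkg._internal_helper = _internal_helper
pkg.logging_util = object()  # e.g. an imported helper that should not be public
# __all__ is the explicit public API: star-imports (and static checkers)
# use it to decide which names the module re-exports.
pkg.__all__ = ["FullyShardedDataParallel"]
sys.modules["fsdp_sketch"] = pkg

star_namespace: dict = {}
exec("from fsdp_sketch import *", star_namespace)
```

Without `__all__`, the star-import would also pick up `logging_util`; with it, only the declared class is exported.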

Pull Request resolved: https://github.com/pytorch/pytorch/pull/109922

Approved by: https://github.com/wanchaol

2023-09-28 02:15:58 +00:00

Chien-Chin Huang

591cb776af

[FSDP][state_dict][optim_state_dict] Log slow optim and model state_dict paths (#108290)

This PR adds SimpleProfiler for logging FSDP state_dict/load_state_dict performance. SimpleProfiler uses class variables to record profiling results and does all of its work in Python, which can be slow, so it is only suitable for logging slow operations such as initialization and state_dict/load_state_dict.

This PR uses SimpleProfiler to log some critical/slow paths of the model and optimizer state_dict/load_state_dict.
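A hypothetical sketch of a class-variable profiler in the spirit described above; `TinyProfiler` and its methods are illustrative and are not the SimpleProfiler code. Results live on the class, so every call site shares one table without passing a profiler object around.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Iterator


class TinyProfiler:
    """Hypothetical class-variable profiler (not FSDP's SimpleProfiler).

    Everything runs in Python, so the overhead is only acceptable around
    slow operations such as state_dict/load_state_dict.
    """

    results: dict[str, float] = defaultdict(float)  # name -> accumulated seconds
    active: set[str] = set()  # names currently being timed

    @classmethod
    @contextmanager
    def profile(cls, name: str) -> Iterator[None]:
        if name in cls.active:
            raise RuntimeError(f"{name} is already being profiled")
        cls.active.add(name)
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate even if the profiled block raised.
            cls.results[name] += time.perf_counter() - start
            cls.active.remove(name)

    @classmethod
    def dump_and_reset(cls) -> dict[str, float]:
        """Return a snapshot of the accumulated timings and clear them."""
        snapshot = dict(cls.results)
        cls.results.clear()
        return snapshot


if __name__ == "__main__":
    with TinyProfiler.profile("state_dict"):
        time.sleep(0.01)
    print(TinyProfiler.dump_and_reset())
```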

Differential Revision: [D48774406](https://our.internmc.facebook.com/intern/diff/D48774406/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/108290

Approved by: https://github.com/wz337

2023-09-01 06:57:59 +00:00

Michael Voznesensky

a832967627

Migrate tuple(handle) -> handle (#104488)

We strengthen the invariant that one FSDP-managed module has one FlatParameter, and remove unused code that would have supported a one-to-many module-to-FlatParameter mapping.
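A hypothetical before/after sketch of the data-structure change: per-module state goes from a tuple of handles to a single optional handle. All class and field names here are illustrative stand-ins; the actual FSDP attribute names are not shown in this log.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlatParamHandleStub:
    """Hypothetical stand-in for the per-FlatParameter handle."""
    fqns: tuple[str, ...]


@dataclass
class ManagedModuleStateBefore:
    # Old shape: a tuple, leaving room for 1:many module -> FlatParameter mappings.
    handles: tuple[FlatParamHandleStub, ...] = ()


@dataclass
class ManagedModuleStateAfter:
    # New shape: a single handle, or None if the module manages no parameters.
    handle: Optional[FlatParamHandleStub] = None


def migrate(old: ManagedModuleStateBefore) -> ManagedModuleStateAfter:
    """Convert the old shape, enforcing the one-module/one-FlatParameter invariant."""
    if len(old.handles) > 1:
        raise ValueError(f"expected at most one handle, got {len(old.handles)}")
    return ManagedModuleStateAfter(handle=old.handles[0] if old.handles else None)
```

Callers then test `state.handle is None` instead of iterating over a tuple that, under the invariant, never holds more than one element.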

Pull Request resolved: https://github.com/pytorch/pytorch/pull/104488

Approved by: https://github.com/awgu

2023-07-19 22:33:35 +00:00

Edward Z. Yang

f65732552e

Support FakeTensor with FlatParameter (#101987)

In this PR we turn FlatParameter into a virtual tensor subclass that never actually gets instantiated: `__new__` creates a Parameter instead (or a FakeTensor, if necessary).
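A pure-Python sketch of the "virtual subclass" trick. It assumes `isinstance` keeps working through a metaclass `__instancecheck__` keyed on a marker attribute; `Param` stands in for `nn.Parameter`, and all names are hypothetical rather than PyTorch's code.

```python
class Param:
    """Stand-in for nn.Parameter in this sketch."""

    def __init__(self, data):
        self.data = data


class _VirtualMeta(type):
    def __instancecheck__(cls, instance):
        # Recognize plain Param objects that carry the marker attribute.
        return isinstance(instance, Param) and getattr(instance, "_is_flat", False)


class FlatParam(Param, metaclass=_VirtualMeta):
    def __new__(cls, data):
        obj = Param(data)    # build a plain Param, never a real FlatParam
        obj._is_flat = True  # marker consulted by __instancecheck__
        return obj


if __name__ == "__main__":
    p = FlatParam([1.0, 2.0])
    print(type(p).__name__, isinstance(p, FlatParam))
```

Because `__new__` returns an object that is not a real `FlatParam` instance, Python skips `FlatParam.__init__`, and `type(p)` reports the base class; this is what lets a factory return a different tensor type (such as a fake tensor) without breaking `isinstance` checks.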

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/101987

Approved by: https://github.com/awgu, https://github.com/eellison

2023-05-23 23:12:08 +00:00

Yanli Zhao

6ca991cacf

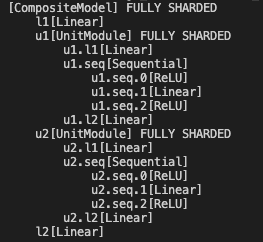

[Composable API] Add fully_shard debug function to print sharded tree structure, module names and managed param fqns (#99133)

Adds a fully_shard debug function that prints the sharded tree structure and also returns module names along with their managed parameter FQNs.
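A pure-Python sketch of the idea, assuming each sharded module manages the parameters in its subtree that are not claimed by a nested sharded module. `ModuleNode` and `sharded_tree` are hypothetical stand-ins, not the fully_shard debug API, whose name and output format are not shown in this log.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModuleNode:
    """Hypothetical stand-in for an nn.Module: direct params plus named children."""
    param_names: list[str] = field(default_factory=list)
    children: dict[str, "ModuleNode"] = field(default_factory=dict)
    sharded: bool = False  # True if fully_shard was applied to this module


def sharded_tree(root: ModuleNode) -> tuple[list[str], dict[str, list[str]]]:
    """Return (indented tree lines, {sharded module name: managed param FQNs})."""
    lines: list[str] = []
    managed: dict[str, list[str]] = {}

    def visit(node: ModuleNode, prefix: str, owner: Optional[str], depth: int) -> None:
        if node.sharded:
            # A sharded module takes ownership of its subtree's params.
            owner = prefix or "<root>"
            lines.append("  " * depth + owner)
            managed[owner] = []
            depth += 1
        for name in node.param_names:
            if owner is not None:  # params above every sharded module stay unmanaged
                managed[owner].append(f"{prefix}.{name}" if prefix else name)
        for child_name, child in node.children.items():
            child_prefix = f"{prefix}.{child_name}" if prefix else child_name
            visit(child, child_prefix, owner, depth)

    visit(root, "", None, 0)
    return lines, managed


if __name__ == "__main__":
    model = ModuleNode(
        sharded=True,
        children={
            "embed": ModuleNode(param_names=["weight"]),
            "layers": ModuleNode(children={
                "0": ModuleNode(param_names=["weight", "bias"], sharded=True),
                "1": ModuleNode(param_names=["weight", "bias"], sharded=True),
            }),
            "head": ModuleNode(param_names=["weight"]),
        },
    )
    tree_lines, fqns = sharded_tree(model)
    print("\n".join(tree_lines))
    print(fqns)
```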

Pull Request resolved: https://github.com/pytorch/pytorch/pull/99133

Approved by: https://github.com/rohan-varma

2023-04-19 19:27:43 +00:00