Summary:

Fixes https://github.com/pytorch/pytorch/issues/61924

The fused backward kernel used the weight dtype to detect mixed-precision usage, but the weight can be `None` while `running_mean` and `running_var` are still mixed precision. The check now looks at those tensors as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61962

Reviewed By: albanD

Differential Revision: D29825516

Pulled By: ngimel

fbshipit-source-id: d087fbf3bed1762770cac46c0dcec30c03a86fda

Summary:

Fixes https://github.com/pytorch/pytorch/issues/58816

- enhance the backward of `nn.SmoothL1Loss` to allow integral `target`

- add test cases in `test_nn.py` to check the `input.grad` between the integral input and its floating counterpart.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61112

Reviewed By: mrshenli

Differential Revision: D29775660

Pulled By: albanD

fbshipit-source-id: 544eabb6ce1ea13e1e79f8f18c70f148e92be508

Summary:

Fixes https://github.com/pytorch/pytorch/issues/61242

Previous code was wrongly checking if a tensor is a buffer in a module by comparing values; fix compares names instead.

Docs need some updating as well. The current plan is to bump that to a separate PR, but I'm happy to do it here if preferred.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61429

Reviewed By: gchanan

Differential Revision: D29712341

Pulled By: jbschlosser

fbshipit-source-id: 41f29ab746505e60f13de42a9053a6770a3aac22

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61584

add_relu is not working with broadcasting. This registers a scalar version of add_relu in native_functions that casts to tensor before calling the regular function. TensorIterator handles broadcasting analogously to existing add.

ghstack-source-id: 133480068

Test Plan: python3 test/test_nn.py TestAddRelu

Reviewed By: kimishpatel

Differential Revision: D29641768

fbshipit-source-id: 1b0ecfdb7eaf44afed83c9e9e74160493c048cbc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60517

This fixes module support in `LazyModuleMixin` for the bug reported in issue #60132.

Check the link: https://github.com/pytorch/pytorch/issues/60132

We will have to update lazy_extension given the dependency on module.py and update the unit test as well.

Test Plan:

Unit test passes

torchrec test passes

Reviewed By: albanD

Differential Revision: D29274068

fbshipit-source-id: 1c20f7f0556e08dc1941457ed20c290868346980

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59987

Similar to GroupNorm, improve the numerical stability of LayerNorm by using the Welford algorithm and pairwise summation.
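
For readers unfamiliar with the two techniques named above, here is a minimal pure-Python sketch. It is not the LayerNorm kernel; the helper names (`welford_mean_var`, `pairwise_sum`, `naive_var`) and the block size are assumptions made for illustration only.

```python
def welford_mean_var(values):
    """Single-pass mean and biased variance using Welford's update."""
    count = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the current mean
    for x in values:
        count += 1
        delta = x - mean
        mean += delta / count
        m2 += delta * (x - mean)
    if count == 0:
        raise ValueError("need at least one value")
    return mean, m2 / count


def pairwise_sum(values, block=8):
    """Sum by recursively halving the input; small blocks are summed directly."""
    n = len(values)
    if n <= block:
        total = 0.0
        for x in values:
            total += x
        return total
    mid = n // 2
    return pairwise_sum(values[:mid], block) + pairwise_sum(values[mid:], block)


def naive_var(values):
    """Textbook E[x^2] - E[x]^2, which cancels badly when the mean is large."""
    n = len(values)
    mean = sum(values) / n
    return sum(x * x for x in values) / n - mean * mean


# Small deviations around a large offset: the true variance is 22.5.
data = [1e9 + d for d in (4.0, 7.0, 13.0, 16.0)]
mean, var = welford_mean_var(data)
```

The naive formula loses the small deviations to rounding once the squares reach about 1e18, while the Welford update only ever works with differences from the running mean.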

Test Plan: buck test mode/dev-nosan //caffe2/test:nn -- "LayerNorm"

Reviewed By: ngimel

Differential Revision: D29115235

fbshipit-source-id: 5183346c3c535f809ec7d98b8bdf6d8914bfe790

Summary:

Fixes https://github.com/pytorch/pytorch/issues/24610

Aten Umbrella issue https://github.com/pytorch/pytorch/issues/24507

Related to https://github.com/pytorch/pytorch/issues/59765

The performance does not change between this PR and master with the following benchmark script:

<details>

<summary>Benchmark script</summary>

```python
import torch
import torch.nn as nn
import time

torch.manual_seed(0)


def _time():
    torch.cuda.synchronize()
    MS_PER_SECOND = 1000
    return time.perf_counter() * MS_PER_SECOND


device = "cuda"
C = 30
softmax = nn.LogSoftmax(dim=1)
n_runs = 250

for reduction in ["none", "mean", "sum"]:
    for N in [100_000, 500_000, 1_000_000]:
        fwd_t = 0
        bwd_t = 0
        data = torch.randn(N, C, device=device)
        target = torch.empty(N, dtype=torch.long, device=device).random_(0, C)
        loss = nn.NLLLoss(reduction=reduction)
        input = softmax(data)

        for i in range(n_runs):
            t1 = _time()
            result = loss(input, target)
            t2 = _time()
            fwd_t = fwd_t + (t2 - t1)
        fwd_avg = fwd_t / n_runs
        print(
            f"input size({N}, {C}), reduction: {reduction} "
            f"forward time is {fwd_avg:.2f} (ms)"
        )
    print()
```

</details>

## master

```

input size(100000, 30), reduction: none forward time is 0.02 (ms)

input size(500000, 30), reduction: none forward time is 0.08 (ms)

input size(1000000, 30), reduction: none forward time is 0.15 (ms)

input size(100000, 30), reduction: mean forward time is 1.81 (ms)

input size(500000, 30), reduction: mean forward time is 8.24 (ms)

input size(1000000, 30), reduction: mean forward time is 16.46 (ms)

input size(100000, 30), reduction: sum forward time is 1.66 (ms)

input size(500000, 30), reduction: sum forward time is 8.24 (ms)

input size(1000000, 30), reduction: sum forward time is 16.46 (ms)

```

## this PR

```

input size(100000, 30), reduction: none forward time is 0.02 (ms)

input size(500000, 30), reduction: none forward time is 0.08 (ms)

input size(1000000, 30), reduction: none forward time is 0.15 (ms)

input size(100000, 30), reduction: mean forward time is 1.80 (ms)

input size(500000, 30), reduction: mean forward time is 8.24 (ms)

input size(1000000, 30), reduction: mean forward time is 16.46 (ms)

input size(100000, 30), reduction: sum forward time is 1.66 (ms)

input size(500000, 30), reduction: sum forward time is 8.24 (ms)

input size(1000000, 30), reduction: sum forward time is 16.46 (ms)

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60097

Reviewed By: mrshenli

Differential Revision: D29303099

Pulled By: ngimel

fbshipit-source-id: fc0d636543a79ea81158d286dcfb84043bec079a

Summary:

Before this change, the implementation assumed that the number of groups, input channels and output channels are all the same, which is not always the case.

Extend the implementation to support any number of output channels, as long as the number of groups equals the number of input channels (i.e. kernel.size(1) == 1).

Fixes https://github.com/pytorch/pytorch/issues/60176

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60460

Reviewed By: albanD

Differential Revision: D29299693

Pulled By: malfet

fbshipit-source-id: 31130c71ce86535ccfba2f4929eee3e2e287b2f0

Summary:

Fixes https://github.com/pytorch/pytorch/issues/50192

As discussed in the issue, the RNN APIs currently do not support inputs with `seq_len=0`, and the error message does not make this clear. This PR adds a clearer error message stating that none of the RNN APIs (nn.RNN, nn.GRU and nn.LSTM) support `seq_len=0`, for either one-directional or bi-directional layers.

```
import torch

input_size = 5
hidden_size = 6
rnn = torch.nn.GRU(input_size, hidden_size)

for seq_len in reversed(range(4)):
    output, h_n = rnn(torch.zeros(seq_len, 10, input_size))
    print('{}, {}'.format(output.shape, h_n.shape))
```

Previously, this produced the following output:

```
torch.Size([3, 10, 6]), torch.Size([1, 10, 6])
torch.Size([2, 10, 6]), torch.Size([1, 10, 6])
torch.Size([1, 10, 6]), torch.Size([1, 10, 6])
Traceback (most recent call last):
  File "test.py", line 8, in <module>
    output, h_n = rnn(torch.zeros(seq_len, 10, input_size))
  File "/opt/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/opt/miniconda3/lib/python3.8/site-packages/torch/nn/modules/rnn.py", line 739, in forward
    result = _VF.gru(input, hx, self._flat_weights, self.bias, self.num_layers,
RuntimeError: stack expects a non-empty TensorList
```

However, with this PR, the error message changes for any combination of [RNN, GRU and LSTM] x [one-directional, bi-directional].

Let's illustrate the change with the following code snippet:

```

import torch

input_size = 5

hidden_size = 6

rnn = torch.nn.LSTM(input_size, hidden_size, bidirectional=True)

output, h_n = rnn(torch.zeros(0, 10, input_size))

```

produces the following output:

```
Traceback (most recent call last):
  File "<stdin>", line 2, in <module>
  File "/fsx/users/iramazanli/pytorch/torch/nn/modules/module.py", line 1054, in _call_impl
    return forward_call(*input, **kwargs)
  File "/fsx/users/iramazanli/pytorch/torch/nn/modules/rnn.py", line 837, in forward
    result = _VF.gru(input, hx, self._flat_weights, self.bias, self.num_layers,
RuntimeError: Expected sequence length to be larger than 0 in RNN
```

***********************************

A change for packed sequences didn't seem necessary, because the error message produced by the following code snippet is already clear about the issue:

```

import torch

import torch.nn.utils.rnn as rnn_utils

import torch.nn as nn

packed = rnn_utils.pack_sequence([])

```

returns:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/fsx/users/iramazanli/pytorch/torch/nn/utils/rnn.py", line 398, in pack_sequence
    return pack_padded_sequence(pad_sequence(sequences), lengths, enforce_sorted=enforce_sorted)
  File "/fsx/users/iramazanli/pytorch/torch/nn/utils/rnn.py", line 363, in pad_sequence
    return torch._C._nn.pad_sequence(sequences, batch_first, padding_value)
RuntimeError: received an empty list of sequences
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60269

Reviewed By: mrshenli

Differential Revision: D29299914

Pulled By: iramazanli

fbshipit-source-id: 5ca98faa28d4e6a5a2f7600a30049de384a3b132

Summary:

Partially addresses https://github.com/pytorch/pytorch/issues/49825 by improving the testing

- Rename some of the old tests that had "inplace_view" in their names, but actually mean "inplace_[update_]on_view" so there is no confusion with the naming

- Adds some tests in test_view_ops that verify basic behavior

- Add tests that creation meta is properly handled for no-grad, multi-output, and custom function cases

- Add test that verifies that in the cross dtype view case, the inplace views won't be accounted in the backward graph on rebase as mentioned in the issue.

- Update inference mode tests to also check in-place

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59891

Reviewed By: albanD

Differential Revision: D29272546

Pulled By: soulitzer

fbshipit-source-id: b12acf5f0e3f788167ebe268423cdb58481b56f6

Summary:

Fixes https://github.com/pytorch/pytorch/issues/27655

This PR adds a C++ and Python version of ReflectionPad3d with structured kernels. The implementation uses lambdas extensively to better share code from the backward and forward pass.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59791

Reviewed By: gchanan

Differential Revision: D29242015

Pulled By: jbschlosser

fbshipit-source-id: 18e692d3b49b74082be09f373fc95fb7891e1b56

Summary:

Following https://github.com/pytorch/pytorch/issues/59624 I observed some straggling failing tests on Ampere due to TF32 thresholds. This PR just twiddles some more thresholds to fix the (6) failing tests I saw on A100.

CC Flamefire ptrblck ngimel

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60209

Reviewed By: gchanan

Differential Revision: D29220508

Pulled By: ngimel

fbshipit-source-id: 7c83187a246e1b3a24b181334117c0ccf2baf311

Summary:

Makes it possible for the first registered parametrization to depend on several parameters rather than just one. Examples of such parametrizations are `torch.nn.utils.weight_norm` and low-rank parametrizations given by the product of an `n x k` tensor and a `k x m` tensor with `k <= m, n`.

Follows the plan outlined in https://github.com/pytorch/pytorch/pull/33344#issuecomment-768574924. A short summary of the idea is: we call `right_inverse` when registering a parametrization to generate the tensors that we are going to save. If `right_inverse` returns a sequence of tensors, then we save them as `original0`, `original1`... If it returns a `Tensor` or a sequence of length 1, we save it as `original`.

Many-to-one parametrizations are only allowed as the first parametrization registered. Any parametrizations registered after it must be one-to-one.
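
The naming rule for the saved tensors can be sketched as a small helper. This is only an illustration of the rule as described above, not the code in `torch/nn/utils/parametrize.py`; `originals_to_save` is a hypothetical name, and plain floats stand in for tensors.

```python
from collections.abc import Sequence


def originals_to_save(value):
    """Map the result of right_inverse to the attribute names described above."""
    if isinstance(value, Sequence) and not isinstance(value, (str, bytes)):
        if len(value) == 1:
            # A sequence of length 1 is treated like a single tensor.
            return {"original": value[0]}
        return {f"original{i}": item for i, item in enumerate(value)}
    return {"original": value}


single = originals_to_save(3.0)
pair = originals_to_save((1.0, 2.0))
wrapped = originals_to_save([5.0])
```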

There were a number of choices in the implementation:

If `right_inverse` returns a sequence of parameters, then we unpack it in the forward. This allows writing code such as:

```python
import torch
import torch.nn as nn

class Sum(nn.Module):
    def forward(self, X, Y):
        return X + Y

    def right_inverse(self, Z):
        # Split Z into two tensors whose sum is Z again.
        return Z, torch.zeros_like(Z)
```

rather than having to unpack manually a list or a tuple within the `forward` function.

At the moment the errors are a bit all over the place. This is to avoid having to check some properties of `forward` and `right_inverse` when they are registered. I left this like this for now, but I believe it'd be better to call these functions when they are registered to make sure the invariants hold and throw errors as soon as possible.

The invariants are the following:

1. The following code should be well-formed

```python
X = module.weight
Y = param.right_inverse(X)
assert isinstance(Y, Tensor) or isinstance(Y, collections.abc.Sequence)
Z = param(Y) if isinstance(Y, Tensor) else param(*Y)
```

in other words, if `Y` is a `Sequence` of `Tensor`s (we also check that the elements of the sequence are Tensors), then it has the same length as the number of parameters that `param.forward` accepts.

2. Always: `X.dtype == Z.dtype and X.shape == Z.shape`. This is to protect the user from shooting themselves in the foot, as it's too odd for a parametrization to change the metadata of a tensor.

3. If it's one-to-one: `X.dtype == Y.dtype`. This is to be able to do `X.set_(Y)` so that if a user first instantiates the optimiser and then puts the parametrisation, then we reuse `X` and the user does not need to add a new parameter to the optimiser. Alas, this is not possible when the parametrisation is many-to-one. The current implementation of `spectral_norm` and `weight_norm` does not seem to care about this, so this would not be a regression. I left a warning in the documentation though, as this case is a bit tricky.

I still need to go over the formatting of the documentation; I'll do that tomorrow.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58488

Reviewed By: soulitzer

Differential Revision: D29100708

Pulled By: albanD

fbshipit-source-id: b9e91f439cf6b5b54d5fa210ec97c889efb9da38

Summary:

Implements a number of changes discussed with soulitzer offline.

In particular:

- Initialise `u`, `v` in `__init__` rather than in `_update_vectors`

- Initialise `u`, `v` to some reasonable vectors by doing 15 power iterations at the start

- Simplify the code of `_reshape_weight_to_matrix` (and make it faster) by using `flatten`
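
As background for the power-iteration bullet above, here is a rough pure-Python sketch of how repeated power iterations estimate the largest singular value. It is not the `SpectralNorm` implementation: the helper names are hypothetical, nested lists stand in for tensors, and the deterministic starting vector is an assumption (a random start is the usual choice).

```python
import math


def matvec(matrix, vec):
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def normalize(vec, eps=1e-12):
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / max(norm, eps) for x in vec]


def power_iteration(weight, n_iters=15):
    """Estimate the largest singular value of `weight` and its singular vectors."""
    weight_t = transpose(weight)
    v = normalize([1.0] * len(weight[0]))
    u = normalize(matvec(weight, v))
    for _ in range(n_iters):
        v = normalize(matvec(weight_t, u))
        u = normalize(matvec(weight, v))
    # sigma = u^T W v approximates the spectral norm of W.
    sigma = sum(a * b for a, b in zip(u, matvec(weight, v)))
    return sigma, u, v


weight = [[3.0, 0.0], [0.0, 1.0]]
sigma, u, v = power_iteration(weight)
```

Dividing the weight by `sigma` gives a matrix whose largest singular value is approximately 1, which is the purpose of running the iterations up front.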

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59564

Reviewed By: ailzhang

Differential Revision: D29066238

Pulled By: soulitzer

fbshipit-source-id: 6a58e39ddc7f2bf989ff44fb387ab408d4a1ce3d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58950

Use tensor iterator's API to set grain size in order to parallelize gelu op.

ghstack-source-id: 130947174

Test Plan: test_gelu

Reviewed By: ezyang

Differential Revision: D28689819

fbshipit-source-id: 0a02066d47a4d9648323c5ec27d7e0e91f4c303a

Summary:

Make sure tests that explicitly run without TF32 don't use TF32 operations

Fixes https://github.com/pytorch/pytorch/issues/52278

After the tf32 accuracy tolerance was increased to 0.05 this is the only remaining change required to fix the above issue (for TestNN.test_Conv3d_1x1x1_no_bias_cuda)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59624

Reviewed By: heitorschueroff

Differential Revision: D28996279

Pulled By: ngimel

fbshipit-source-id: 7f1b165fd52cfa0898a89190055b7a4b0985573a

Summary:

As per title. Resolves https://github.com/pytorch/pytorch/issues/56683.

`gradgradcheck` will fail once `target.requires_grad() == True` because of the limitations of the current double backward implementation.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59447

Reviewed By: agolynski

Differential Revision: D28910140

Pulled By: albanD

fbshipit-source-id: 20934880eb4d22bec34446a6d1be0a38ef95edc7

Summary:

This PR introduces a helper function named `torch.nn.utils.skip_init()` that accepts a module class object + `args` / `kwargs` and instantiates the module while skipping initialization of parameter / buffer values. See discussion at https://github.com/pytorch/pytorch/issues/29523 for more context. Example usage:

```python

import torch

m = torch.nn.utils.skip_init(torch.nn.Linear, 5, 1)

print(m.weight)

m2 = torch.nn.utils.skip_init(torch.nn.Linear, 5, 1, device='cuda')

print(m2.weight)

m3 = torch.nn.utils.skip_init(torch.nn.Linear, in_features=5, out_features=1)

print(m3.weight)

```

```

Parameter containing:

tensor([[-3.3011e+28, 4.5915e-41, -3.3009e+28, 4.5915e-41, 0.0000e+00]],

requires_grad=True)

Parameter containing:

tensor([[-2.5339e+27, 4.5915e-41, -2.5367e+27, 4.5915e-41, 0.0000e+00]],

device='cuda:0', requires_grad=True)

Parameter containing:

tensor([[1.4013e-45, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00]],

requires_grad=True)

```

Bikeshedding on the name / namespace is welcome, as well as comments on the design itself - just wanted to get something out there for discussion.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57555

Reviewed By: zou3519

Differential Revision: D28640613

Pulled By: jbschlosser

fbshipit-source-id: 5654f2e5af5530425ab7a9e357b6ba0d807e967f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48919

move data indexing utils

parallel inference contiguous path

parallel inference channels last path

add dim apply

optimize update stats

add channels last support for backward

Revert "add channels last support for backward"

This reverts commit cc5e29dce44395250f8e2abf9772f0b99f4bcf3a.

Revert "optimize update stats"

This reverts commit 7cc6540701448b9cfd5833e36c745b5015ae7643.

Revert "add dim apply"

This reverts commit b043786d8ef72dee5cf85b5818fcb25028896ecd.

bug fix

add batchnorm nhwc test for cpu, including C=1 and HW=1

Test Plan: Imported from OSS

Reviewed By: glaringlee

Differential Revision: D25399468

Pulled By: VitalyFedyunin

fbshipit-source-id: a4cd7a09cd4e1a8f5cdd79c7c32c696d0db386bd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48918

enable test case on AvgPool2d channels last for CPU

Test Plan: Imported from OSS

Reviewed By: glaringlee

Differential Revision: D25399466

Pulled By: VitalyFedyunin

fbshipit-source-id: 9477b0c281c0de5ed981a97e2dcbe6072d7f0aef

Summary:

Adds a new file under `torch/nn/utils/parametrizations.py` which should contain all the parametrization implementations

For spectral_norm we add the `SpectralNorm` module which can be registered using `torch.nn.utils.parametrize.register_parametrization` or using a wrapper: `spectral_norm`, the same API the old implementation provided.

Most of the logic is borrowed from the old implementation:

- Just like the old implementation, there are cases where retrieving the weight should perform another power iteration (thus updating the weight) and cases where it shouldn't. For example, in eval mode (`self.training=False`) we do not perform power iteration.

There are also some differences/difficulties with the new implementation:

- Using the new parametrization functionality as-is, there doesn't seem to be a good way to tell whether a `forward` call was triggered by the parametrizations being unregistered (with `leave_parametrized=True`) or by the injected property's getter being invoked. The issue is that we want to perform power iteration in the latter case but not the former, and we don't have this control as-is. So, in this PR I modified the parametrization functionality to change the module to eval mode before triggering their forward call

- Updates the vectors based on the weight on initialization to fix https://github.com/pytorch/pytorch/issues/51800 (this avoids silently updating weights in eval mode). This also means that we perform twice as many power iterations by the first forward.

- right_inverse is just the identity for now, but maybe it should assert that the passed value already satisfies the constraints

- So far, all the old spectral_norm tests have been cloned, but maybe we don't need so much testing now that the core functionality is already well tested

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57784

Reviewed By: ejguan

Differential Revision: D28413201

Pulled By: soulitzer

fbshipit-source-id: e8f1140f7924ca43ae4244c98b152c3c554668f2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55189

Currently EmbeddingBag and its variants support either int32 or int64 indices/offsets. We have use cases with a mix of int32 and int64 indices, which is not supported yet. To avoid introducing too many branches, we can simply cast the offsets to the indices type when they are not the same.

Test Plan: unit tests

Reviewed By: allwu

Differential Revision: D27482738

fbshipit-source-id: deeadd391d49ff65d17d016092df1839b82806cc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57558

Fixes #53359

If someone directly saves an nn.LSTM in PyTorch 1.7 and then loads it in PyTorch 1.8, it errors out with the following:

```

(In PyTorch 1.7)

import torch

model = torch.nn.LSTM(2, 3)

torch.save(model, 'lstm17.pt')

(In PyTorch 1.8)

model = torch.load('lstm17.pt')

AttributeError: 'LSTM' object has no attribute 'proj_size'

```

Although we do not officially support this (directly saving modules via torch.save), it used to work and the fix is very simple. This PR adds an extra line to `__setstate__`: if the state we are passed does not have a `proj_size` attribute, we assume it was saved from PyTorch 1.7 or older and set `proj_size` equal to 0.
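
The `__setstate__` pattern described above can be sketched without PyTorch. `TinyLSTM` is a hypothetical stand-in class, not `nn.LSTM`, and the old state is simulated by dropping the attribute by hand.

```python
class TinyLSTM:
    """Hypothetical stand-in for a module whose newer version gained `proj_size`."""

    def __init__(self, input_size, hidden_size, proj_size=0):
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.proj_size = proj_size

    def __setstate__(self, state):
        self.__dict__.update(state)
        # State saved before `proj_size` existed lacks the attribute,
        # so fall back to 0, which means "no projection".
        if "proj_size" not in state:
            self.proj_size = 0


# Simulate a state saved by an older version: drop the newer attribute.
model = TinyLSTM(2, 3)
old_state = dict(model.__dict__)
del old_state["proj_size"]

restored = TinyLSTM.__new__(TinyLSTM)
restored.__setstate__(old_state)
```

Without the fallback, any later access to `restored.proj_size` would raise the same kind of `AttributeError` shown in the traceback above.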

Test Plan:

I wrote a test that tests `__setstate__`. But also, run the following:

```

(In PyTorch 1.7)

import torch

x = torch.ones(32, 5, 2)

model = torch.nn.LSTM(2, 3)

torch.save(model, 'lstm17.pt')

y17 = model(x)

(Using this PR)

model = torch.load('lstm17.pt')

x = torch.ones(32, 5, 2)

y18 = model(x)

```

and finally compare y17 and y18.

Reviewed By: mrshenli

Differential Revision: D28198477

Pulled By: zou3519

fbshipit-source-id: e107d1ebdda23a195a1c3574de32a444eeb16191

Summary:

Fix a numerical issue of CUDA channels-last SyncBatchNorm

The added test is a repro for the numerical issue. Thanks for the help from jjsjann123 who identified the root cause. Since pytorch SBN channels-last code was migrated from [nvidia/apex](https://github.com/nvidia/apex), apex SBN channels-last also has this issue. We will submit a fix there soon.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57077

Reviewed By: mruberry

Differential Revision: D28107672

Pulled By: ngimel

fbshipit-source-id: 0c80e79ddb48891058414ad8a9bedd80f0f7f8df

Summary:

Fixes https://github.com/pytorch/pytorch/issues/45687

Fix changes the input size check for `InstanceNorm*d` to be more restrictive and correctly reject sizes with only a single spatial element, regardless of batch size, to avoid infinite variance.
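
A rough sketch of the stricter check, assuming the input size is laid out as `(N, C, *spatial)`. The function names and the error text are illustrative assumptions, not necessarily what `torch.nn.functional` uses.

```python
def verify_spatial_size(size):
    """Reject inputs whose spatial dimensions hold a single element in total."""
    # size is (N, C, *spatial); the batch dimension is deliberately ignored.
    spatial_elements = 1
    for dim in size[2:]:
        spatial_elements *= dim
    if spatial_elements == 1:
        raise ValueError(
            f"Expected more than 1 spatial element, got input size {tuple(size)}"
        )


def is_rejected(size):
    try:
        verify_spatial_size(size)
    except ValueError:
        return True
    return False


large_batch_single_pixel = is_rejected((8, 3, 1, 1))
single_sample_two_pixels = is_rejected((1, 3, 2, 1))
```

The point of ignoring `N` is that instance norm computes statistics per sample and per channel, so a larger batch does not add elements to any of those reductions.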

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56659

Reviewed By: pbelevich

Differential Revision: D27948060

Pulled By: jbschlosser

fbshipit-source-id: 21cfea391a609c0774568b89fd241efea72516bb

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56380

BC-breaking note:

This changes the behavior of full backward hooks, as they will now fire properly even if no input to the Module requires gradients.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56693

Reviewed By: ezyang

Differential Revision: D27947030

Pulled By: albanD

fbshipit-source-id: e8353d769ba5a2c1b6bdf3b64e2d61308cf624a2

Summary:

Fixes https://github.com/pytorch/pytorch/issues/55587

The fix converts the binary `TensorIterator` used by softplus backwards to a ternary one, adding in the original input for comparison against `beta * threshold`.
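
To see why the original input is needed, here is a scalar sketch in plain Python. It assumes the documented Softplus rule that the function reverts to the identity when `input * beta > threshold`; the function names are hypothetical and this is not the `TensorIterator` kernel.

```python
import math


def softplus(x, beta=1.0, threshold=20.0):
    # Above the threshold the function is treated as the identity.
    if x * beta > threshold:
        return x
    return math.log1p(math.exp(x * beta)) / beta


def softplus_backward(grad_output, x, beta=1.0, threshold=20.0):
    # The branch is chosen from the original input x, not from the output.
    if x * beta > threshold:
        return grad_output
    z = math.exp(x * beta)
    return grad_output * z / (z + 1.0)  # grad_output * sigmoid(beta * x)


# Compare against a central finite difference at an ordinary point.
x0, beta0, h = 0.5, 2.0, 1e-6
numeric = (softplus(x0 + h, beta0) - softplus(x0 - h, beta0)) / (2 * h)
analytic = softplus_backward(1.0, x0, beta0)
```

Because `softplus(x)` is always larger than `x`, a threshold test on the output can select the identity branch in cases where the forward pass did not, which is why the backward needs the input as a third operand.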

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56484

Reviewed By: malfet

Differential Revision: D27908372

Pulled By: jbschlosser

fbshipit-source-id: 73323880a5672e0242879690514a17886cbc29cd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55237

In this PR, we reenable fast-gradcheck and resolve misc issues that arise:

Before landing this PR, land #55182 so that slow tests are still being run periodically.

Bold indicates that the issue is handled in this PR; otherwise it is handled in a previous PR.

**Non-determinism issues**:

- ops that do not have deterministic implementation (as documented https://pytorch.org/docs/stable/generated/torch.use_deterministic_algorithms.html#torch.use_deterministic_algorithms)

- test_pad_cuda (replication_pad2d) (test_nn)

- interpolate (test_nn)

- cummin, cummax (scatter_add_cuda_kernel) (test_ops)

- test_fn_gradgrad_prod_cpu_float64 (test_ops)

Randomness:

- RRelu (new module tests) - we fix by using our own generator as to avoid messing with user RNG state (handled in #54480)

Numerical precision issues:

- jacobian mismatch: test_gelu (test_nn, float32, not able to replicate locally) - we fixed this by disabling for float32 (handled in previous PR)

- cholesky_solve (test_linalg): #56235 handled in previous PR

- **cumprod** (test_ops) - #56275 disabled fast gradcheck

Not yet replicated:

- test_relaxed_one_hot_categorical_2d (test_distributions)

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D27920906

fbshipit-source-id: 894dd7bf20b74f1a91a5bc24fe56794b4ee24656

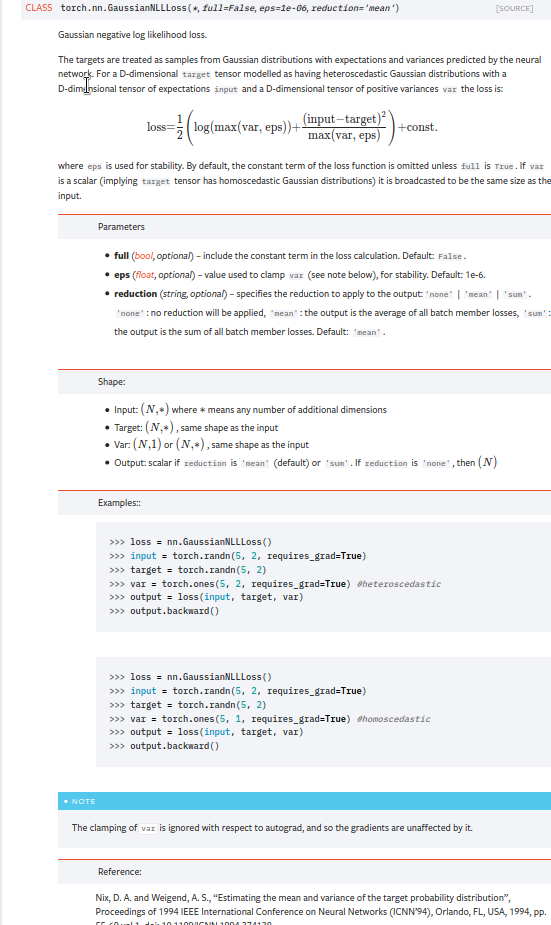

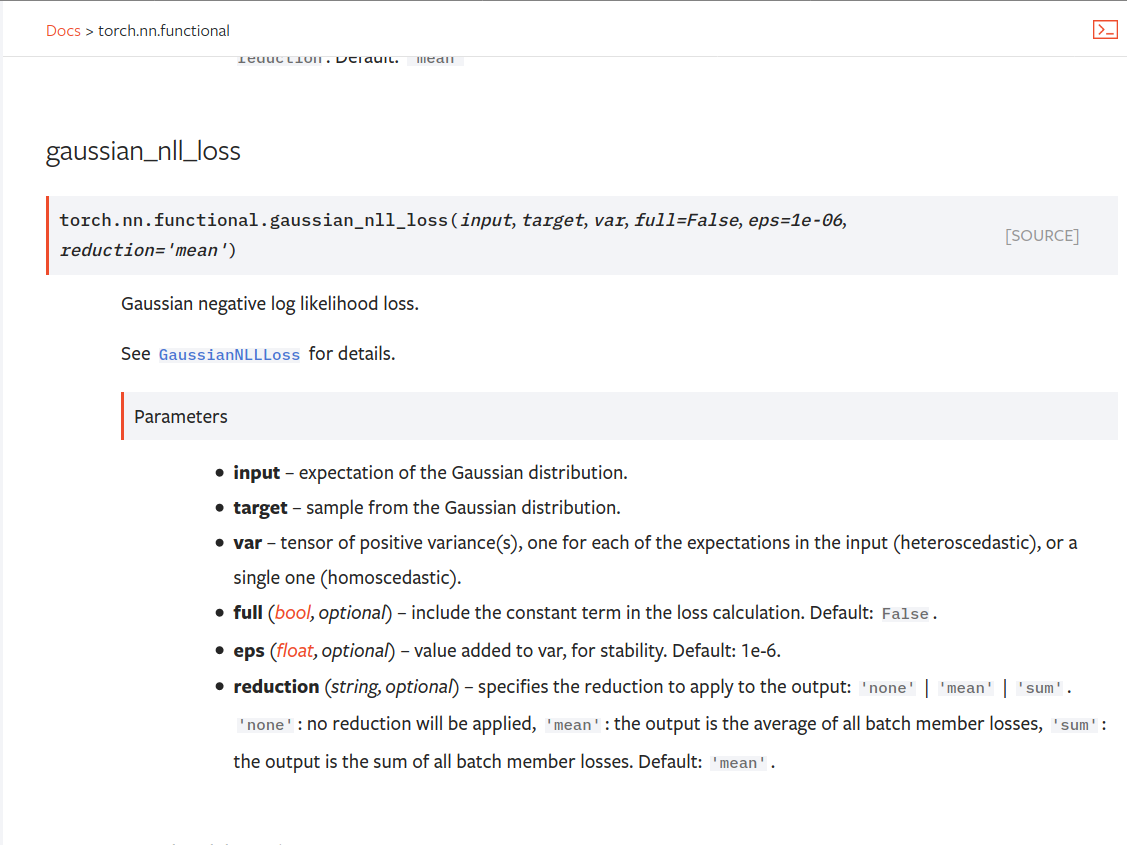

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>). This has size (2).`

New behaviour:

`print(loss(input, target, var))`

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>).`
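
The numbers in this example can be checked by hand with a plain-Python version of the elementwise loss. The sketch assumes the loss is `0.5 * (log(var) + (input - target)^2 / var)` with the constant term omitted and `var` clamped to a small `eps`; `gaussian_nll_none` is a hypothetical helper, not the code in functional.py.

```python
import math


def gaussian_nll_none(inputs, targets, variances, eps=1e-6):
    """Elementwise 0.5 * (log(var) + (input - target)^2 / var), no reduction."""
    loss = []
    for in_row, tgt_row, var_row in zip(inputs, targets, variances):
        row = []
        for x, t, var in zip(in_row, tgt_row, var_row):
            var = max(var, eps)  # clamp for numerical stability
            row.append(0.5 * (math.log(var) + (x - t) ** 2 / var))
        loss.append(row)
    return loss


inputs = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
targets = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
variances = [[2.0] * 3, [2.0] * 3]

loss = gaussian_nll_none(inputs, targets, variances)  # shape (2, 3), new behaviour
per_sample = [sum(row) for row in loss]               # shape (2,), old behaviour
```

Here `loss` reproduces the (2, 3) values shown for the new behaviour, and summing each row gives the two values the old code returned.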

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54812

Needed for quantization, since different attributes might refer to the same module instance.

Test Plan: Imported from OSS

Reviewed By: vkuzo

Differential Revision: D27408376

fbshipit-source-id: cada85c4a1772d3dd9502c3f6f9a56d690d527e7

Summary:

Fixes https://github.com/pytorch/pytorch/issues/25100 and #43112

EDIT: Pardon my inexperience, as this is my first PR here. I did not realize that the docs should not have any trailing whitespace, and I had missed the lint error `[E712] comparison to False should be 'if cond is False:' or 'if not cond:'`. Both are now fixed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55285

Reviewed By: mruberry

Differential Revision: D27765694

Pulled By: jbschlosser

fbshipit-source-id: c34774fa065d67c0ac130de20a54e66e608bdbf4

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.
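
The effect of `padding_idx` on the reduction can be sketched in plain Python for the sum mode. This is an illustration only: `embedding_bag_sum` is a hypothetical helper, nested lists stand in for the weight tensor, and the bag layout follows the usual indices/offsets convention.

```python
def embedding_bag_sum(weight, indices, offsets, padding_idx=None):
    """Sum-reduce rows of `weight` per bag, skipping entries equal to padding_idx."""
    dim = len(weight[0])
    bags = []
    for bag_no, start in enumerate(offsets):
        end = offsets[bag_no + 1] if bag_no + 1 < len(offsets) else len(indices)
        total = [0.0] * dim
        for idx in indices[start:end]:
            if padding_idx is not None and idx == padding_idx:
                continue  # padding entries contribute nothing to the reduction
            total = [acc + w for acc, w in zip(total, weight[idx])]
        bags.append(total)
    return bags


weight = [[1.0, 1.0], [2.0, 2.0], [10.0, 10.0]]
indices = [0, 2, 1, 2]
offsets = [0, 2]  # bag 0 = indices[0:2], bag 1 = indices[2:4]

with_padding = embedding_bag_sum(weight, indices, offsets, padding_idx=2)
without_padding = embedding_bag_sum(weight, indices, offsets)
```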

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

Summary:

Fixes https://github.com/pytorch/pytorch/issues/25100 and #43112

EDIT: Pardon my inexperience, as this is my first PR here. I did not realize that the docs should not have any trailing whitespace, and I had missed the lint error `[E712] comparison to False should be 'if cond is False:' or 'if not cond:'`. Both are now fixed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55285

Reviewed By: ngimel

Differential Revision: D27710107

Pulled By: jbschlosser

fbshipit-source-id: c4363a4604548c0d84628c4997dd23d6b3afb4d9

Summary:

This PR adds support for channels_last_3d, a.k.a. NDHWC, in Conv3d. It is only enabled when the cuDNN version is greater than or equal to 8.0.5.

Todo:

- [x] add memory_format test

- [x] add random shapes functionality test

Close https://github.com/pytorch/pytorch/pull/52547

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48430

Reviewed By: mrshenli

Differential Revision: D27641452

Pulled By: ezyang

fbshipit-source-id: 0e98957cf30c50c3390903d307dd43bdafd28880

Summary:

There was an error when removing a parametrization with `leave_parametrized=True`. It had escaped the previous tests. This PR should fix that.

**Edit.**

I also took this chance to fix a few mistakes that the documentation had, and to also write the `set_original_` in a more compact way.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55456

Reviewed By: mrshenli

Differential Revision: D27620481

Pulled By: albanD

fbshipit-source-id: f1298ddbcf24566ef48850c62a1eb4d8a3576152

Summary:

Non-backwards-compatible change introduced in https://github.com/pytorch/pytorch/pull/53843 is tripping up a lot of code. Better to set it to False initially and then potentially flip to True in the later version to give people time to adapt.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55169

Reviewed By: mruberry

Differential Revision: D27511150

Pulled By: jbschlosser

fbshipit-source-id: 1ac018557c0900b31995c29f04aea060a27bc525

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48917

max_pool2d channels last support forward path

max_pool2d channels last support backward path

vectorize channels last forward path

rename the header file

fix windows build

combine PoolingKernel.h into Pool.h

add data type check

loosen test_max_pool2d_nhwc to cover device CPU

Test Plan: Imported from OSS

Reviewed By: glaringlee

Differential Revision: D25399470

Pulled By: VitalyFedyunin

fbshipit-source-id: b49b9581f1329a8c2b9c75bb10f12e2650e4c65a

Summary:

This PR enables using MIOpen for RNN FP16 on ROCm.

It does this by altering use_miopen to allow fp16. In the special case where LSTMs use projections we use the default implementation, as it is not implemented in MIOpen at this time. We do send out a warning once to let the user know.

We then remove the various asserts that are no longer necessary since we handle the case.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52475

Reviewed By: H-Huang

Differential Revision: D27449150

Pulled By: malfet

fbshipit-source-id: 06499adb94f28d4aad73fa52890d6ba361937ea6