The dispatcher didn't check attribute dtypes, because AttributeProto is a completely different system from InputProto in ONNX. This PR introduces a mapping table from AttributeProto types to Python types and uses it in OpSchema matching.
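A minimal sketch of what such a mapping table and attribute check could look like. `AttrType`, `ATTR_TYPE_TO_PYTHON`, and `attribute_matches` are hypothetical names for illustration only; the enum is a stand-in for ONNX's attribute type enum, and the subset of types is an assumption, not the table added by the PR.

```python
from enum import Enum
from typing import Any


class AttrType(Enum):
    """Hypothetical stand-in for ONNX's attribute type enum (subset)."""

    FLOAT = "FLOAT"
    INT = "INT"
    STRING = "STRING"
    FLOATS = "FLOATS"
    INTS = "INTS"
    STRINGS = "STRINGS"


# Python element type expected for each attribute type (illustrative subset).
ATTR_TYPE_TO_PYTHON = {
    AttrType.FLOAT: float,
    AttrType.INT: int,
    AttrType.STRING: str,
    AttrType.FLOATS: float,
    AttrType.INTS: int,
    AttrType.STRINGS: str,
}

# Attribute types whose value is a sequence of the element type.
SEQUENCE_ATTR_TYPES = {AttrType.FLOATS, AttrType.INTS, AttrType.STRINGS}


def attribute_matches(attr_type: AttrType, value: Any) -> bool:
    """Return True if `value` has the Python type expected for `attr_type`."""
    expected = ATTR_TYPE_TO_PYTHON[attr_type]
    if attr_type in SEQUENCE_ATTR_TYPES:
        return isinstance(value, (list, tuple)) and all(
            isinstance(item, expected) for item in value
        )
    return isinstance(value, expected)
```

Note that `bool` is a subclass of `int` in Python, so `True` would match `INT` in this sketch.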

Pull Request resolved: https://github.com/pytorch/pytorch/pull/105104

Approved by: https://github.com/thiagocrepaldi

## ONNXRegistry

### Motivation

In #100660, we reused the TorchScript registry to enable the dispatcher. However, it doesn't meet the needs of the FX exporter. The TorchScript exporter is built on three ideas:

(1) Use `_SymbolicFunctionGroup` to dispatch on opset version, since ops need a fallback when the current exporter opset version doesn't provide them.

(2) One aten op maps to multiple supported opset versions, and each version maps to one symbolic function.

(3) A custom symbolic function takes priority over the default symbolic function.

Now that TorchLib will support all aten ops across all opset versions, we don't need the opset version dispatch layer. And with the ONNX overloads created by TorchLib, we need a way to support custom operators and prioritize them among all overloads.

### Feature

Introduce a public OnnxRegistry API, initialized with a fixed opset version, that supports user-registered operators. **Opset version dispatching is no longer needed, as TorchLib is expected to provide full aten support across all opset versions. The dispatcher is expected to prioritize custom operators over the defaults.**

### API:

(1) `register_custom_op(self, function: OnnxFunction, domain: str, op_name: str, overload: Optional[str] = None)`: Register a custom operator into the current OnnxRegistry. This is expected to be used when the default operators don't meet the user's needs. **For example, when a different opset version from the registry's, or a different calculation, is needed.**

(2) `is_registered_op(self, domain: str, op_name: str, overload: Optional[str] = None)`: Whether the aten op is registered.

(3) `get_functions(domain: str, op_name: str, overload: Optional[str] = None)`: Return the set of SymbolicFunctions registered for the aten op.
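The three methods listed above can be sketched with a toy registry. This is an illustration under stated assumptions, not the PyTorch implementation: `ToyOnnxRegistry`, `RegisteredFunction`, `register_default_op`, and the `domain::op_name.overload` key format are all hypothetical, plain callables stand in for `OnnxFunction`, and a list is returned instead of a set to keep registration order visible.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class RegisteredFunction:
    """A registered function plus a flag marking user-registered overrides."""

    onnx_function: Callable
    is_custom: bool


class ToyOnnxRegistry:
    """Toy registry bound to one fixed opset version (no per-opset dispatch)."""

    def __init__(self, opset_version: int) -> None:
        self.opset_version = opset_version
        self._registry: Dict[str, List[RegisteredFunction]] = {}

    @staticmethod
    def _key(domain: str, op_name: str, overload: Optional[str] = None) -> str:
        name = f"{domain}::{op_name}"
        return f"{name}.{overload}" if overload else name

    def _register(self, key: str, function: Callable, is_custom: bool) -> None:
        entry = RegisteredFunction(function, is_custom)
        self._registry.setdefault(key, []).append(entry)

    def register_default_op(self, function, domain, op_name, overload=None):
        # Hypothetical helper standing in for the library-provided defaults.
        self._register(self._key(domain, op_name, overload), function, False)

    def register_custom_op(self, function, domain, op_name, overload=None):
        self._register(self._key(domain, op_name, overload), function, True)

    def is_registered_op(self, domain, op_name, overload=None) -> bool:
        return self._key(domain, op_name, overload) in self._registry

    def get_functions(self, domain, op_name, overload=None):
        return list(self._registry.get(self._key(domain, op_name, overload), []))
```

Keeping the `is_custom` flag on each entry is one way to let a dispatcher prefer user-registered functions later without a separate lookup table.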

### TODO:

(1) `remove_op(op_name: str)`: Removing all support for a given op allows the graph to be decomposed to prims.

(2) Expose OnnxRegistry to users, and disable the `opset_version` option in the export API. The export API should use only the ops in the registry.

---

## OnnxDispatcher

The changes to `dispatch` and `_find_the_perfect_or_nearest_match_onnxfunction` are meant to support complex types and custom operators.

### Respect Custom Ops

(1) Override: Check whether a perfect match exists among the custom operator overloads before checking the defaults.

(2) Tie breaker: If a default and a custom overload are equally near matches, choose the custom one.
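The override and tie-breaker rules can be sketched as follows. Everything here is a simplified assumption: `Candidate`, `match_score`, and `dispatch` are hypothetical names, and "nearness" is approximated by counting arguments whose Python type matches, which is much cruder than real OpSchema matching.

```python
from typing import Any, NamedTuple, Sequence, Tuple


class Candidate(NamedTuple):
    """Hypothetical overload: a name, expected argument types, custom flag."""

    name: str
    param_types: Tuple[type, ...]
    is_custom: bool


def match_score(candidate: Candidate, args: Sequence[Any]) -> int:
    """Number of arguments whose type matches; -1 if the arity differs."""
    if len(candidate.param_types) != len(args):
        return -1
    return sum(isinstance(a, t) for a, t in zip(args, candidate.param_types))


def dispatch(candidates: Sequence[Candidate], args: Sequence[Any]) -> Candidate:
    if not candidates:
        raise LookupError("no overloads registered")
    customs = [c for c in candidates if c.is_custom]
    defaults = [c for c in candidates if not c.is_custom]
    # Rule 1 (override): a perfect match among custom overloads wins first.
    for group in (customs, defaults):
        for candidate in group:
            if match_score(candidate, args) == len(args):
                return candidate
    # Rule 2 (tie breaker): take the nearest match; on equal scores the
    # custom overload wins because True sorts above False in the key.
    best = max(candidates, key=lambda c: (match_score(c, args), c.is_custom))
    if match_score(best, args) < 0:
        raise LookupError("no overload accepts this number of arguments")
    return best
```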

### Supplementary

[Design discussion doc](https://microsoft-my.sharepoint.com/:w:/p/thiagofc/EW-5Q3jWhFNMtQHHtPpJiAQB-P2qAcVRkYjfbmeSddnjWA?e=QUX9zG&wdOrigin=TEAMS-ELECTRON.p2p.bim&wdExp=TEAMS-TREATMENT&wdhostclicktime=1687554493295&web=1)

Please check the Registry and Dispatcher sections.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/103943

Approved by: https://github.com/BowenBao, https://github.com/justinchuby

Introduce `Analysis` to analyze fx graphmodule and emit diagnostics. This class can be extended to interact with `Transform` (passes) to decide if a pass should trigger based on graph analysis result. E.g., if decomp needs to run by checking operator namespace in nodes. For now leaving it as out of scope but can revisit if maintaining multi fx extractor becomes reality.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/100451

Approved by: https://github.com/titaiwangms

* CI Test environment to install onnx and onnx-script.

* Add symbolic function for `bitwise_or`, `convert_element_type` and `masked_fill_`.

* Update symbolic function for `slice` and `arange`.

* Update .pyi signature for `_jit_pass_onnx_graph_shape_type_inference`.

Co-authored-by: Wei-Sheng Chin <wschin@outlook.com>

Co-authored-by: Ti-Tai Wang <titaiwang@microsoft.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94564

Approved by: https://github.com/abock

Until now, PyTorch and ONNX have used different strategies for None in outputs. In internal tests, we flatten the torch outputs to see whether the remaining values match. However, this no longer works in scripting now that the Optional node has been introduced, since some of the Nones are kept.

#83184 forces the script module to keep all Nones from PyTorch, but in ONNX the model keeps only those generated with an Optional node and deletes the meaningless Nones.

This PR uses the Optional node to keep those meaningless Nones in the output as well, so that when script module results are compared, PyTorch and ONNX have the same number of Nones.
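To illustrate why the number of Nones matters for comparison, here is a small sketch of output flattening with and without None values. `flatten_outputs` is a hypothetical helper, not the test utility used in PyTorch.

```python
from typing import Any, List


def flatten_outputs(outputs: Any, keep_none: bool) -> List[Any]:
    """Flatten nested lists/tuples of outputs, optionally dropping None."""
    flat: List[Any] = []

    def visit(item: Any) -> None:
        if isinstance(item, (list, tuple)):
            for sub_item in item:
                visit(sub_item)
        elif item is None and not keep_none:
            return
        else:
            flat.append(item)

    visit(outputs)
    return flat
```

If one side drops every None and the other keeps some of them, the flattened sequences differ in length and a position-by-position comparison fails even when all real values agree.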

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84789

Approved by: https://github.com/BowenBao

Fixes #82589

Why:

1. **full_check** takes effect in the `onnx::checker::check_model` function, where it turns on **strict_mode** in `onnx::shape_inference::InferShapes()`. I believe that was the intention of this part of the code.

2. **strict_mode** catches failed shape and type inference (an invalid ONNX model from ONNX's perspective). ONNX Runtime can't run such invalid models, because it relies on ONNX shape and type inference to optimize the ONNX graph. Why not set it to True by default? Some existing users run ONNX models on other platforms, such as Caffe2, which don't require a valid ONNX model.

3. This PR doesn't change the original behavior of `check_onnx_proto`, but adds a warning for models that can't pass strict shape and type inference, saying that they would fail on ONNX Runtime.
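The behavior described in point 3 can be sketched as "basic check raises, strict check only warns". `check_proto`, `basic_check`, and `strict_check` are hypothetical names; in practice the two callables might wrap `onnx.checker.check_model(model)` and `onnx.checker.check_model(model, full_check=True)`, but plain callables are used here so the sketch has no dependencies.

```python
import warnings
from typing import Any, Callable


def check_proto(
    model: Any,
    basic_check: Callable[[Any], None],
    strict_check: Callable[[Any], None],
) -> bool:
    """Run the basic check as before; downgrade strict failures to a warning.

    Returns True if the model also passes the strict check.
    """
    # Errors from the basic check propagate unchanged (original behavior).
    basic_check(model)
    try:
        strict_check(model)
    except Exception as exc:
        warnings.warn(
            "The model failed strict shape and type inference and is likely "
            f"to fail on ONNX Runtime: {exc}",
            UserWarning,
        )
        return False
    return True
```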

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83186

Approved by: https://github.com/justinchuby, https://github.com/thiagocrepaldi, https://github.com/jcwchen, https://github.com/BowenBao

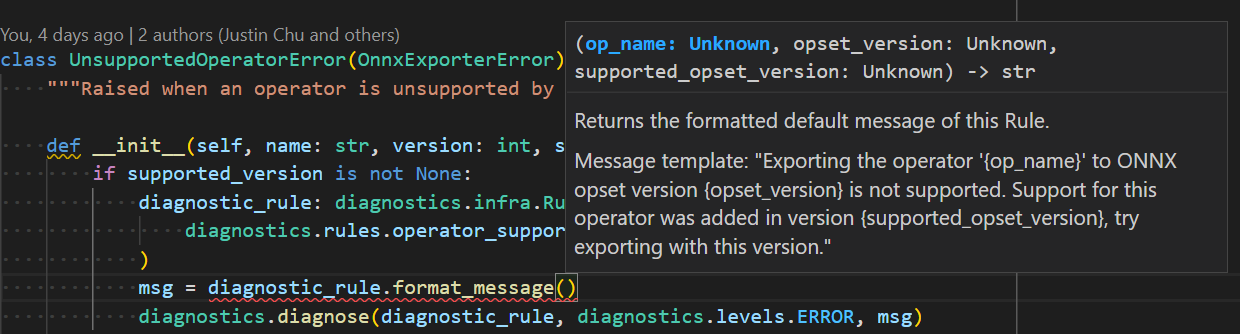

* Reflect required arguments in method signature for each diagnostic rule. Previous design accepts arbitrary sized tuple which is hard to use and prone to error.

* Removed `DiagnosticTool` to keep things compact.

* Removed specifying supported rule set for tool(context) and checking if rule of reported diagnostic falls inside the set, to keep things compact.

* Initial overview markdown file.

* Change `full_description` definition. Now `text` field should not be empty. And its markdown should be stored in `markdown` field.

* Change `message_default_template` to allow only named fields (excluding numeric fields). `field_name` provides clarity on what argument is expected.

* Added `diagnose` api to `torch.onnx._internal.diagnostics`.
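The "named fields only" rule for `message_default_template` can be checked with the standard library. This is a sketch of one possible validation, not the code in `torch.onnx._internal.diagnostics`; `positional_fields` is a hypothetical helper name.

```python
from string import Formatter
from typing import List


def positional_fields(template: str) -> List[str]:
    """Return the fields in `template` that are numeric or auto-numbered."""
    offending = []
    for _, field_name, _, _ in Formatter().parse(template):
        if field_name is None:
            # Literal text with no replacement field.
            continue
        # Strip attribute/index access such as "0.name" or "0[1]".
        root = field_name.split(".")[0].split("[")[0]
        if root == "" or root.isdigit():
            offending.append(field_name)
    return offending
```

A template such as `"Op {op_name} failed at opset {opset}"` passes, while `"Op {0} failed"` or `"Op {} failed"` would be flagged.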

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87830

Approved by: https://github.com/abock

Introduce `_jit_pass_onnx_assign_node_and_value_names` to parse and assign scoped names for nodes and values in the exported ONNX graph.

Module layer information is obtained from the `ONNXScopeName` captured in the `scope` attribute of nodes. For nodes, the processed ONNX node names are stored in the `onnx_name` attribute. For values, the processed ONNX output names are stored as `debugName`.
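A rough sketch of scoped name assignment, in Python rather than the C++ of the actual pass. The naming scheme (`scope/OpType`, a numeric suffix for repeats, and `_output_i` for values) is an assumption for illustration, and `assign_scoped_names` is a hypothetical function.

```python
from collections import Counter
from typing import List, Tuple


def assign_scoped_names(
    nodes: List[Tuple[str, str, int]],
) -> List[Tuple[str, List[str]]]:
    """Assign unique names from (scope, op_type, num_outputs) triples.

    Returns one (node_name, output_names) pair per input node.
    """
    seen: Counter = Counter()
    named = []
    for scope, op_type, num_outputs in nodes:
        base = f"{scope}/{op_type}"
        count = seen[base]
        seen[base] += 1
        # Repeated (scope, op_type) pairs get a numeric suffix.
        node_name = base if count == 0 else f"{base}_{count}"
        output_names = [f"{node_name}_output_{i}" for i in range(num_outputs)]
        named.append((node_name, output_names))
    return named
```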

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82040

Approved by: https://github.com/AllenTiTaiWang, https://github.com/justinchuby, https://github.com/abock

Add flag (inline_autograd) to enable inline export of model consisting of autograd functions. Currently, this flag should only be used in TrainingMode.EVAL and not for training.

An example:

If a model containing ``autograd.Function`` is as follows

```
import torch

class AutogradFunc(torch.autograd.Function):
    @staticmethod
    def forward(ctx, i):
        result = i.exp()
        result = result.log()
        ctx.save_for_backward(result)
        return result
```

Then the model is exported as

```
graph(%0 : Float):
  %1 : Float = ^AutogradFunc(%0)
  return (%1)
```

If inline_autograd is set to True, this will be exported as

```
graph(%0 : Float):
  %1 : Float = onnx::Exp(%0)
  %2 : Float = onnx::Log(%1)
  return (%2)
```

If one of the ops within the autograd module is not supported, that particular node is exported as is, mirroring ONNX_FALLTHROUGH mode.

Fixes: #61813

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74765

Approved by: https://github.com/BowenBao, https://github.com/malfet

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73284

Some important ops won't support optional type until opset 16, so we can't fully test things end-to-end, but I believe this should be all that's needed. Once ONNX Runtime supports opset 16, we can do more testing and fix any remaining bugs.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D34625646

Pulled By: malfet

fbshipit-source-id: 537fcbc1e9d87686cc61f5bd66a997e99cec287b

Co-authored-by: BowenBao <bowbao@microsoft.com>

Co-authored-by: neginraoof <neginmr@utexas.edu>

Co-authored-by: Nikita Shulga <nshulga@fb.com>

(cherry picked from commit 822e79f31ae54d73407f34f166b654f4ba115ea5)

Summary:

Add an ONNX exporter logging facility that supports both the C++ and Python logging APIs. Logging can be turned on or off, and the output stream can be set to either `stdout` or `stderr`.

A few other changes:

* When exception is raised in passes, the current IR graph being processed will be logged.

* When exception is raised from `_jit_pass_onnx` (the pass that converts nodes from namespace `ATen` to `ONNX`), both ATen IR graph and ONNX IR graph under construction will be logged.

* Exception message for ConstantFolding is truncated to avoid being too verbose.

* Update the final printed IR graph with node name in ONNX ModelProto as node attribute. Torch IR Node does not have name. Adding this to printed IR graph helps debugging.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/71342

Reviewed By: msaroufim

Differential Revision: D34433473

Pulled By: malfet

fbshipit-source-id: 4b137dfd6a33eb681a5f2612f19aadf5dfe3d84a

(cherry picked from commit 67a8ebed5192c266f604bdcca931df6fe589699f)

Enables local function export to capture annotated attributes.

For example:

```python
import torch


class M(torch.nn.Module):
    num_layers: int

    def __init__(self, num_layers):
        super().__init__()
        self.num_layers = num_layers

    def forward(self, args):
        ...
```

`num_layers` will now be captured as attribute of local function `M`.
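As a rough illustration of how class-level annotations make such attributes discoverable, the sketch below collects annotated attributes from an instance. It uses a plain class as a stand-in for `torch.nn.Module`, and `annotated_attributes` is a hypothetical helper; the exporter's actual mechanism may differ.

```python
from typing import Any, Dict


class M:
    """Plain-class stand-in for a module with one annotated attribute."""

    num_layers: int

    def __init__(self, num_layers: int) -> None:
        self.num_layers = num_layers
        self.hidden_size = 16  # not annotated at class level


def annotated_attributes(obj: Any) -> Dict[str, Any]:
    """Return {name: value} for attributes annotated on the object's class."""
    annotations = getattr(type(obj), "__annotations__", {})
    return {
        name: getattr(obj, name) for name in annotations if hasattr(obj, name)
    }
```

Here only `num_layers` is returned for `M(3)`, since `hidden_size` has no class-level annotation.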

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72883

Summary:

Based on past PRs, here is a non-exhaustive list of files to consider for extension. The PR is not meant to be final. Based on feedback and discussion, files could be dropped from the list, or the PR could be updated to move code around so that the extension is no longer needed.

List of files below and description:

* These files are for converting from IR to ONNX proto. These should be used only for ONNX.

```
"torch/csrc/jit/serialization/export.*",
"torch/csrc/jit/serialization/onnx.*",
```

* This file is touched whenever pass signature is updated.

```
"torch/_C/__init__.pyi.in",
```

* These files are touched whenever pass signature is updated. Somehow it's been convention that onnx passes are also added here, but it could be possible to move them. Let me know what you think.

~~"torch/csrc/jit/python/init.cpp",~~

~~"torch/csrc/jit/python/script_init.cpp",~~

Update: Bowen will move onnx passes to files under onnx folder.

* ~~Touched when need new attr::xxx, or onnx::xxx.~~

~~"aten/src/ATen/core/interned_strings.h"~~

Update: Nikita will help separate this file.

malfet

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72297

Reviewed By: H-Huang

Differential Revision: D34254666

Pulled By: malfet

fbshipit-source-id: 032cfa590cbedf4648b7335fe8f09a2380ab14cb

(cherry picked from commit 88653eadbf)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68490

Using ATen as a fallback operator during ONNX conversion is important for increasing operator coverage, and in some cases for providing more efficient implementations than the corresponding ONNX ops.

Currently this feature is available through `OperatorExportTypes.ONNX_ATEN_FALLBACK`, but that export type also applies changes to the graph that only Caffe2 can run.

This PR restricts the Caffe2-specific graph transformations for the `ONNX_ATEN_FALLBACK` operator export type to builds where PyTorch is built with Caffe2 support (i.e. `BUILD_CAFFE2=1` during the build).

The first version of this PR introduced a new operator export type, `ONNX_ATEN__STRICT_FALLBACK`, which is essentially the same as `ONNX_ATEN_FALLBACK` but without the Caffe2 transformations. It was preferred to refine the existing ATen fallback type rather than introduce a new operator export type.

## BC-breaking note

### The global constant `torch.onnx.PYTORCH_ONNX_CAFFE2_BUNDLE` is removed in favor of a less visible `torch.onnx._CAFFE2_ATEN_FALLBACK`.

`PYTORCH_ONNX_CAFFE2_BUNDLE` is really a dead code flag always set to False.

One alternative would be fixing it, but #66658 disables Caffe2 build by default.

Making a Caffe2 feature a private one seems to make more sense for future deprecation.

### The method `torch.onnx.export` now defaults to ONNX when `operator_export_type` is not specified.

Previously, `torch.onnx.export`'s `operator_export_type` was intended to default to `ONNX_ATEN_FALLBACK` when `PYTORCH_ONNX_CAFFE2_BUNDLE` was set, but that never happened because `PYTORCH_ONNX_CAFFE2_BUNDLE` was always undefined.

Co-authored-by: Nikita Shulga <nshulga@fb.com>

Test Plan: Imported from OSS

Reviewed By: jansel

Differential Revision: D32483781

Pulled By: malfet

fbshipit-source-id: e9b447db9466b369e77d747188685495aec3f124

(cherry picked from commit 5fb1eb1b19)

Summary:

- PyTorch and ONNX both support BFloat16; add this support to unblock some mixed-precision training models.

- Support using BFloat16 tensors for the inputs/outputs of the PyTorch TNLG model layers that run on the NPU.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66788

Reviewed By: jansel

Differential Revision: D32283510

Pulled By: malfet

fbshipit-source-id: 150d69b1465b2b917dd6554505eca58042c1262a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67803

* Addresses comments from #63589

[ONNX] remove torch::onnx::PRODUCER_VERSION (#67107)

Use constants from version.h instead. This simplifies things since we no longer have to update PRODUCER_VERSION for each release.

Also add TORCH_VERSION to version.h so that a string is available for this purpose.

[ONNX] Set `ir_version` based on opset_version. (#67128)

This increases the odds that the exported ONNX model will be usable. Before this change, we were setting the IR version to a value which may be higher than what the model consumer supports.
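A sketch of choosing the IR version from the opset version. The table below is an assumption based on the ONNX versioning documentation as best recalled, covers only a subset of opsets, and should be verified against that documentation before use; `ir_version_for_opset` is a hypothetical function, not the exporter's code.

```python
# Opset version -> ONNX IR version. ASSUMPTION: values recalled from the
# ONNX versioning table; verify against the ONNX documentation.
OPSET_TO_IR_VERSION = {
    9: 4,
    10: 5,
    11: 6,
    12: 7,
    13: 7,
    14: 7,
    15: 8,
}


def ir_version_for_opset(opset_version: int) -> int:
    """Return the IR version paired with `opset_version` in the table."""
    try:
        return OPSET_TO_IR_VERSION[opset_version]
    except KeyError:
        raise ValueError(
            f"no IR version known for opset {opset_version}"
        ) from None
```

Picking the lowest IR version that accompanies the requested opset keeps the model loadable by consumers that only understand that opset's era of ONNX.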

Also some minor clean-up in the test code:

* Fix string replacement.

* Use a temporary file so as to not leave files around in the test current working directory.

Test Plan: Imported from OSS

Reviewed By: msaroufim

Differential Revision: D32181306

Pulled By: malfet

fbshipit-source-id: 02f136d34ef8f664ade0bc1985a584f0e8c2b663

Co-authored-by: BowenBao <bowbao@microsoft.com>

Co-authored-by: Gary Miguel <garymiguel@microsoft.com>

Co-authored-by: Nikita Shulga <nshulga@fb.com>

Summary:

Delete `-Wno-unused-variable` from top level `CMakeLists.txt`

Still suppress those warnings for tests and `torch_python`

Delete a number of unused variables from caffe2 code

Use `(void)var;` to suppress unused variable in range loops

Use `C10_UNUSED` for global constructors and use `constexpr` instead of `static` for global constants

Do not delete `caffe2::OperatorBase::Output` calls as they have side effects

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66041

Reviewed By: ngimel

Differential Revision: D31360142

Pulled By: malfet

fbshipit-source-id: 6fdfb9f91efdc49ca984a2f2a17ee377d28210c8

Summary:

Delete `-Wno-unused-variable` from top level `CMakeLists.txt`

Still suppress those warnings for tests and `torch_python`

Delete a number of unused variables from caffe2 code

Use `(void)var;` to suppress unused variable in range loops

Use `C10_UNUSED` for global constructors and use `constexpr` instead of `static` for global constants

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65954

Reviewed By: ngimel

Differential Revision: D31326599

Pulled By: malfet

fbshipit-source-id: 924155f1257a2ba1896c50512f615e45ca1f61f3