Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69789

Add details on how to save and load quantized models without hitting errors

Test Plan:

CI autogenerated docs

Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D33030991

fbshipit-source-id: 8ec4610ae6d5bcbdd3c5e3bb725f2b06af960d52

Summary:

Also fixes the documentation failing to appear and adds a test to validate that the op works properly with multiple devices.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69640

Reviewed By: ngimel

Differential Revision: D32965391

Pulled By: mruberry

fbshipit-source-id: 4fe502809b353464da8edf62d92ca9863804f08e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69104

Add nvidia-smi memory and utilization as native Python API

Test Plan:

testing the function returns the appropriate value.

Unit tests to come.

Reviewed By: malfet

Differential Revision: D32711562

fbshipit-source-id: 01e676203299f8fde4f3ed4065f68b497e62a789

Summary:

Per title.

This PR introduces a global flag that lets PyTorch prefer one of the available backend implementations when calling linear algebra functions on GPU.

Usage:

```python

torch.backends.cuda.preferred_linalg_library('cusolver')

```

Available options (str): `'default'`, `'cusolver'`, `'magma'`.

Issue https://github.com/pytorch/pytorch/issues/63992 inspired me to write this PR. No heuristic is perfect on all devices, library versions, matrix shapes, workloads, etc. We can obtain better performance if we can conveniently switch linear algebra backends at runtime.

Performance of linear algebra operators after this PR should be no worse than before. The flag is set to **`'default'`** by default, which makes everything the same as before this PR.

The implementation of this PR is basically following that of https://github.com/pytorch/pytorch/pull/67790.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67980

Reviewed By: mruberry

Differential Revision: D32849457

Pulled By: ngimel

fbshipit-source-id: 679fee7744a03af057995aef06316306073010a6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69251

This adds some actual documentation for deploy, which is probably useful

since we told everyone it was experimentally available so they will

probably be looking at what the heck it is.

It also wires up various components of the OSS build to actually work

when used from an external project.

Differential Revision: D32783312

Test Plan: Imported from OSS

Reviewed By: wconstab

Pulled By: suo

fbshipit-source-id: c5c0a1e3f80fa273b5a70c13ba81733cb8d2c8f8

Summary:

These APIs are not yet officially released and are still under discussion. Hence, this commit removes those APIs from docs and will add them back when ready.

cc pietern mrshenli pritamdamania87 zhaojuanmao satgera rohan-varma gqchen aazzolini osalpekar jiayisuse SciPioneer H-Huang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69011

Reviewed By: fduwjj

Differential Revision: D32703124

Pulled By: mrshenli

fbshipit-source-id: ea049fc7ab6b0015d38cc40c5b5daf47803b7ea0

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66933

This PR exposes `torch.lu` as `torch.linalg.lu_factor` and

`torch.linalg.lu_factor_ex`.

This PR also adds support for matrices with zero elements both in

the size of the matrix and the batch. Note that this function simply

returns empty tensors of the correct size in this case.

We add a test and an OpInfo for the new function.

This PR also adds documentation for this new function in line with

the documentation in the rest of `torch.linalg`.
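
As a rough illustration of what a compact LU factorization with partial pivoting returns, including the empty-matrix case mentioned above, here is a pure-Python sketch. It is not the PyTorch implementation: the name `lu_factor_sketch`, the list-of-lists representation, and the restriction to a single square matrix are assumptions made for illustration. Pivots are 1-based, following the LAPACK convention.

```python
def lu_factor_sketch(a):
    """Compact LU factorization with partial pivoting of a square matrix.

    Returns (lu, pivots): `lu` holds U on and above the diagonal and the
    multipliers of L (unit diagonal implied) below it; `pivots[k]` is the
    1-based index of the row swapped with row k + 1 at step k.
    """
    n = len(a)
    lu = [list(map(float, row)) for row in a]  # work on a copy
    pivots = []
    for k in range(n):
        # Pick the row with the largest magnitude in column k.
        p = max(range(k, n), key=lambda i: abs(lu[i][k]))
        pivots.append(p + 1)
        lu[k], lu[p] = lu[p], lu[k]
        if lu[k][k] == 0.0:
            continue  # singular column: nothing to eliminate
        for i in range(k + 1, n):
            lu[i][k] /= lu[k][k]
            for j in range(k + 1, n):
                lu[i][j] -= lu[i][k] * lu[k][j]
    return lu, pivots


lu, pivots = lu_factor_sketch([[1.0, 2.0], [3.0, 4.0]])
# An empty matrix simply yields empty outputs.
empty_lu, empty_pivots = lu_factor_sketch([])
```

For the 2x2 input, the rows are swapped once, so `pivots` is `[2, 2]` and the first row of `lu` is `[3.0, 4.0]`.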

Fixes https://github.com/pytorch/pytorch/issues/56590

Fixes https://github.com/pytorch/pytorch/issues/64014

cc jianyuh nikitaved pearu mruberry walterddr IvanYashchuk xwang233 Lezcano

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D32521980

Pulled By: mruberry

fbshipit-source-id: 26a49ebd87f8a41472f8cd4e9de4ddfb7f5581fb

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63568

This PR adds the first solver with structure to `linalg`. This solver

has an API compatible with that of `linalg.solve` preparing these for a

possible future merge of the APIs. The new API:

- Just returns the solution, rather than the solution and a copy of `A`

- Removes the confusing `transpose` argument and replaces it by a

correct handling of conj and strides within the call

- Adds a `left=True` kwarg. This can be achieved via transposes of the

inputs and the result, but it's exposed for convenience.
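
The remark that `left` can be emulated with transposes can be sketched in pure Python as follows. This is only an illustration under stated assumptions: `solve_triangular_sketch` and `transpose` are hypothetical helpers operating on lists of lists, real-valued and unbatched, and say nothing about the copy-minimising dataflow of the actual implementation.

```python
def transpose(m):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def solve_triangular_sketch(a, b, upper, left=True):
    """Solve A X = B (left=True) or X A = B (left=False), A triangular."""
    if not left:
        # X A = B  <=>  A^T X^T = B^T, and A^T has the opposite triangle.
        xt = solve_triangular_sketch(transpose(a), transpose(b), upper=not upper)
        return transpose(xt)
    n, k = len(a), len(b[0])
    x = [[0.0] * k for _ in range(n)]
    # Back substitution for upper triangular A, forward substitution otherwise.
    rows = reversed(range(n)) if upper else range(n)
    for i in rows:
        # Columns of row i that multiply already-solved unknowns.
        known = range(i + 1, n) if upper else range(i)
        for c in range(k):
            s = sum(a[i][j] * x[j][c] for j in known)
            x[i][c] = (b[i][c] - s) / a[i][i]
    return x


A = [[2.0, 1.0], [0.0, 4.0]]       # upper triangular
X = [[1.0, 2.0], [3.0, 4.0]]
B_left = [[5.0, 8.0], [12.0, 16.0]]   # A @ X
B_right = [[2.0, 9.0], [6.0, 19.0]]   # X @ A
x_left = solve_triangular_sketch(A, B_left, upper=True)
x_right = solve_triangular_sketch(A, B_right, upper=True, left=False)
```

Both calls recover `X`; the `left=False` call only adds two transposes around the same substitution loop.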

This PR also implements a dataflow that minimises the number of copies

needed before calling LAPACK / MAGMA / cuBLAS and takes advantage of the

conjugate and neg bits.

This algorithm is implemented for `solve_triangular` (which, for this, is

the most complex of all the solvers due to the `upper` parameters).

Once more solvers are added, we will factor out this calling algorithm,

so that all of them can take advantage of it.

Given the complexity of this algorithm, we implement some thorough

testing. We also added tests for all the backends, which was not done

before.

We also add forward AD support for `linalg.solve_triangular` and improve the

docs of `linalg.solve_triangular`. We also fix a few issues with those of

`torch.triangular_solve`.

Resolves https://github.com/pytorch/pytorch/issues/54258

Resolves https://github.com/pytorch/pytorch/issues/56327

Resolves https://github.com/pytorch/pytorch/issues/45734

cc jianyuh nikitaved pearu mruberry walterddr IvanYashchuk xwang233 Lezcano

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D32588230

Pulled By: mruberry

fbshipit-source-id: 69e484849deb9ad7bb992cc97905df29c8915910

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63568

This PR adds the first solver with structure to `linalg`. This solver

has an API compatible with that of `linalg.solve` preparing these for a

possible future merge of the APIs. The new API:

- Just returns the solution, rather than the solution and a copy of `A`

- Removes the confusing `transpose` argument and replaces it by a

correct handling of conj and strides within the call

- Adds a `left=True` kwarg. This can be achieved via transposes of the

inputs and the result, but it's exposed for convenience.

This PR also implements a dataflow that minimises the number of copies

needed before calling LAPACK / MAGMA / cuBLAS and takes advantage of the

conjugate and neg bits.

This algorithm is implemented for `solve_triangular` (which, for this, is

the most complex of all the solvers due to the `upper` parameters).

Once more solvers are added, we will factor out this calling algorithm,

so that all of them can take advantage of it.

Given the complexity of this algorithm, we implement some thorough

testing. We also added tests for all the backends, which was not done

before.

We also add forward AD support for `linalg.solve_triangular` and improve the

docs of `linalg.solve_triangular`. We also fix a few issues with those of

`torch.triangular_solve`.

Resolves https://github.com/pytorch/pytorch/issues/54258

Resolves https://github.com/pytorch/pytorch/issues/56327

Resolves https://github.com/pytorch/pytorch/issues/45734

cc jianyuh nikitaved pearu mruberry walterddr IvanYashchuk xwang233 Lezcano

Test Plan: Imported from OSS

Reviewed By: zou3519, JacobSzwejbka

Differential Revision: D32283178

Pulled By: mruberry

fbshipit-source-id: deb672e6e52f58b76536ab4158073927a35e43a8

Summary:

This PR simply updates the documentation following up on https://github.com/pytorch/pytorch/pull/64234, by adding `Union` as a supported type.

Any feedback is welcome!

cc ansley albanD gmagogsfm

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68435

Reviewed By: davidberard98

Differential Revision: D32494271

Pulled By: ansley

fbshipit-source-id: c3e4806d8632e1513257f0295568a20f92dea297

Summary:

The `torch.histogramdd` operator is documented in `torch/functional.py` but does not appear in the generated docs because it is missing from `docs/source/torch.rst`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68273

Reviewed By: cpuhrsch

Differential Revision: D32470522

Pulled By: saketh-are

fbshipit-source-id: a23e73ba336415457a30bae568bda80afa4ae3ed

Summary:

### Create `linalg.cross`

Fixes https://github.com/pytorch/pytorch/issues/62810

As discussed in the corresponding issue, this PR adds `cross` to the `linalg` namespace (**Note**: There is no method variant) which is slightly different in behaviour compared to `torch.cross`.

**Note**: this is NOT an alias as suggested in mruberry's [https://github.com/pytorch/pytorch/issues/62810 comment](https://github.com/pytorch/pytorch/issues/62810#issuecomment-897504372) below

> linalg.cross being consistent with the Python Array API (over NumPy) makes sense because NumPy has no linalg.cross. I also think we can implement linalg.cross without immediately deprecating torch.cross, although we should definitely refer users to linalg.cross. Deprecating torch.cross will require additional review. While it's not used often it is used, and it's unclear if users are relying on its unique behavior or not.

The current default implementation of `torch.cross` is extremely weird and confusing. This has also been reported multiple times previously. (See https://github.com/pytorch/pytorch/issues/17229, https://github.com/pytorch/pytorch/issues/39310, https://github.com/pytorch/pytorch/issues/41850, https://github.com/pytorch/pytorch/issues/50273)

- [x] Add `torch.linalg.cross` with default `dim=-1`

- [x] Add OpInfo and other tests for `torch.linalg.cross`

- [x] Add broadcasting support to `torch.cross` and `torch.linalg.cross`

- [x] Remove out skip from `torch.cross` OpInfo

- [x] Add docs for `torch.linalg.cross`. Improve docs for `torch.cross` mentioning `linalg.cross` and the difference between the two. Also adds a warning to `torch.cross`, that it may change in the future (we might want to deprecate it later)

---

### Additional Fixes to `torch.cross`

- [x] Fix Doc for Tensor.cross

- [x] Fix torch.cross in `torch/overrides.py`

While working on `linalg.cross` I noticed these small issues with `torch.cross` itself.

[Tensor.cross docs](https://pytorch.org/docs/stable/generated/torch.Tensor.cross.html) still mention a `dim=-1` default, which is actually wrong. It should be `dim=None` after the behaviour was updated in PR https://github.com/pytorch/pytorch/issues/17582, but the documentation for the `method` or `function` variant wasn’t updated. Later, PR https://github.com/pytorch/pytorch/issues/41850 updated the documentation for the `function` variant, i.e. `torch.cross`, and also added the following warning about the weird behaviour.

> If `dim` is not given, it defaults to the first dimension found with the size 3. Note that this might be unexpected.

But still, the `Tensor.cross` docs were missed and remained outdated. I’m finally fixing that here. Also fixing `torch/overrides.py` for `torch.cross` as well now, with `dim=None`.

To verify that the documented default of `dim=-1` does not match the actual behaviour, try the following. If the default really were `dim=-1`, the first call would raise.

```python

import torch

a = torch.randn(3, 4)

b = torch.randn(3, 4)

# Works: the implementation finds size 3 in the first dimension,
# so the default shown in the documentation is not what actually happens.
b.cross(a)

# tensor([[ 0.7171, -1.1059,  0.4162,  1.3026],
#         [ 0.4320, -2.1591, -1.1423,  1.2314],
#         [-0.6034, -1.6592, -0.8016,  1.6467]])

# Raises as expected, since the last dimension does not have size 3.
b.cross(a, dim=-1)

# RuntimeError: dimension -1 does not have size 3

```
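
The "first dimension found with the size 3" rule quoted above can be sketched in pure Python to show why it may surprise. This is a minimal illustration, not the PyTorch code: `legacy_default_cross_dim` and `check_cross_dim` are hypothetical helpers that only look at shapes.

```python
def legacy_default_cross_dim(shape):
    """Index of the first dimension of size 3 (the `dim=None` rule)."""
    for i, size in enumerate(shape):
        if size == 3:
            return i
    raise ValueError("no dimension of size 3")


def check_cross_dim(shape, dim):
    """Validate an explicit `dim`; negative values count from the end."""
    if shape[dim] != 3:
        raise RuntimeError(f"dimension {dim} does not have size 3")
    return dim % len(shape)


# For a (3, 3) input the legacy rule picks dimension 0,
# whereas an explicit dim=-1 refers to dimension 1.
legacy_dim = legacy_default_cross_dim((3, 3))
explicit_dim = check_cross_dim((3, 3), -1)
```

With a `(3, 3)` input both choices are valid but give different results, which is the ambiguity a fixed `dim=-1` default in `linalg.cross` avoids.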

Please take a closer look (particularly the autograd part, this is the first time I'm dealing with `derivatives.yaml`). If there is something missing, wrong or needs more explanation, please let me know. Looking forward to the feedback.

cc mruberry Lezcano IvanYashchuk rgommers

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63285

Reviewed By: gchanan

Differential Revision: D32313346

Pulled By: mruberry

fbshipit-source-id: e68c2687c57367274e8ddb7ef28ee92dcd4c9f2c

Summary:

https://github.com/pytorch/pytorch/issues/67578 disabled reduced precision reductions for FP16 GEMMs. After benchmarking, we've found that this has substantial performance impacts for common GEMM shapes (e.g., those found in popular instantiations of multiheaded-attention) on architectures such as Volta. As these performance regressions may come as a surprise to current users, this PR adds a toggle to disable reduced precision reductions

`torch.backends.cuda.matmul.allow_fp16_reduced_precision_reduction = `

rather than making it the default behavior.

CC ngimel ptrblck

stas00 Note that the behavior after the previous PR can be replicated with

`torch.backends.cuda.matmul.allow_fp16_reduced_precision_reduction = False`
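
To give a feel for the accuracy side of this trade-off, the following pure-Python sketch compares a dot product whose running sum is rounded to half precision after every step with one that accumulates in a wider type. It is only an illustration: `to_fp16` and `dot_fp16_inputs` are hypothetical helpers, and the loop is not a model of how cuBLAS actually performs its reductions.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot_fp16_inputs(xs, ys, fp16_accumulate):
    """Dot product of fp16 inputs; optionally round the running sum to fp16."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += to_fp16(x) * to_fp16(y)
        if fp16_accumulate:
            total = to_fp16(total)
    return total


xs = [0.1] * 4096
ys = [1.0] * 4096
wide = dot_fp16_inputs(xs, ys, fp16_accumulate=False)
narrow = dot_fp16_inputs(xs, ys, fp16_accumulate=True)
```

Here `wide` reaches 409.5, while `narrow` stalls at 256.0 because each addend falls below half the spacing between adjacent half-precision values at that magnitude.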

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67946

Reviewed By: zou3519

Differential Revision: D32289896

Pulled By: ngimel

fbshipit-source-id: a1ea2918b77e27a7d9b391e030417802a0174abe

Summary:

When I ran this code from the documentation with PyTorch 1.10.0, the output differed from what the documentation shows:

```python
import torch
import torch.fx as fx


class M(torch.nn.Module):
    def forward(self, x, y):
        return x + y


# Create an instance of `M`
m = M()
traced = fx.symbolic_trace(m)
print(traced)
print(traced.graph)
traced.graph.print_tabular()
```

I get the result:

```shell
def forward(self, x, y):
    add = x + y; x = y = None
    return add

graph():
    %x : [#users=1] = placeholder[target=x]
    %y : [#users=1] = placeholder[target=y]
    %add : [#users=1] = call_function[target=operator.add](args = (%x, %y), kwargs = {})
    return add
opcode         name    target                   args    kwargs
-------------  ------  -----------------------  ------  --------
placeholder    x       x                        ()      {}
placeholder    y       y                        ()      {}
call_function  add     <built-in function add>  (x, y)  {}
output         output  output                   (add,)  {}
```

This PR updates the documentation accordingly.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68043

Reviewed By: driazati

Differential Revision: D32287178

Pulled By: jamesr66a

fbshipit-source-id: 48ebd0e6c09940be9950cd57ba0c03274a849be5

Summary:

**Summary:** This commit adds the `torch.nn.qat.dynamic.modules.Linear`

module, the dynamic counterpart to `torch.nn.qat.modules.Linear`.

Functionally these are very similar, except the dynamic version

expects a memoryless observer and is converted into a dynamically

quantized module before inference.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67325

Test Plan:

`python3 test/test_quantization.py TestQuantizationAwareTraining.test_dynamic_qat_linear`

**Reviewers:** Charles David Hernandez, Jerry Zhang

**Subscribers:** Charles David Hernandez, Supriya Rao, Yining Lu

**Tasks:** 99696812

**Tags:** pytorch

Reviewed By: malfet, jerryzh168

Differential Revision: D32178739

Pulled By: andrewor14

fbshipit-source-id: 5051bdd7e06071a011e4e7d9cc7769db8d38fd73

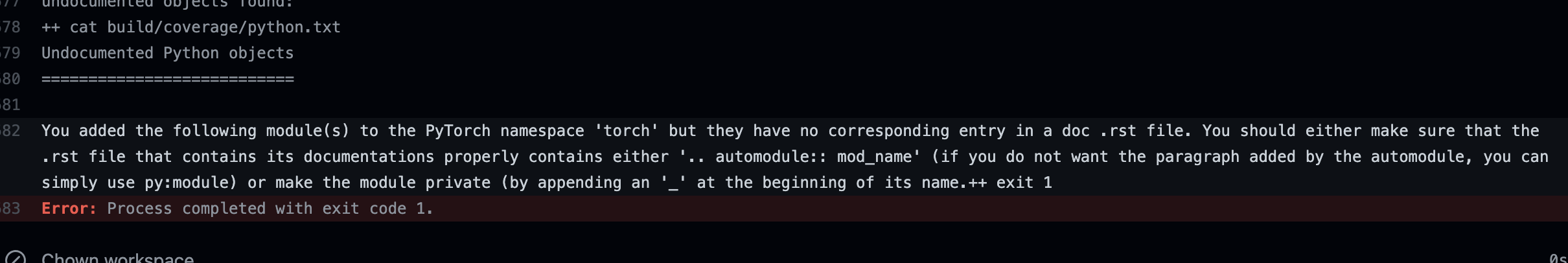

Summary:

Add check to make sure we do not add new submodules without documenting them in an rst file.

This is especially important because our doc coverage only runs for modules that are properly listed.

temporarily removed "torch" from the list to make sure the failure in CI looks as expected. EDIT: fixed now

This is what a CI failure looks like for the top level torch module as an example:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67440

Reviewed By: jbschlosser

Differential Revision: D32005310

Pulled By: albanD

fbshipit-source-id: 05cb2abc2472ea4f71f7dc5c55d021db32146928

Summary:

Adds `torch.argwhere` as an alias to `torch.nonzero`

Currently, `torch.nonzero` actually provides functionality equivalent to `np.argwhere`.

From NumPy docs,

> np.argwhere(a) is almost the same as np.transpose(np.nonzero(a)), but produces a result of the correct shape for a 0D array.
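
The difference between the two NumPy layouts mentioned in the quote can be sketched in pure Python for the 2-D case. The helpers `nonzero_numpy_style` and `argwhere_style` are hypothetical and work on nested lists; they only illustrate the output shapes, not any library's implementation.

```python
def nonzero_numpy_style(matrix):
    """Like np.nonzero for a 2-D list: one tuple of indices per dimension."""
    hits = [(i, j) for i, row in enumerate(matrix) for j, v in enumerate(row) if v]
    rows = tuple(i for i, _ in hits)
    cols = tuple(j for _, j in hits)
    return rows, cols


def argwhere_style(matrix):
    """Like np.argwhere for a 2-D list: one [row, col] pair per nonzero element."""
    return [list(pair) for pair in zip(*nonzero_numpy_style(matrix))]


m = [[0, 5], [7, 0]]
per_dimension = nonzero_numpy_style(m)   # ((0, 1), (1, 0))
per_element = argwhere_style(m)          # [[0, 1], [1, 0]]
```

The per-element layout is the one the commit describes `torch.nonzero` as already returning, which is why `torch.argwhere` can be a plain alias.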

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64257

Reviewed By: qihqi

Differential Revision: D32049884

Pulled By: saketh-are

fbshipit-source-id: 016e49884698daa53b83e384435c3f8f6b5bf6bb

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67449

Adds a description of what the current custom module API does

and API examples for Eager mode and FX graph mode to the main

PyTorch quantization documentation page.

Test Plan:

```

cd docs

make html

python -m http.server

// check the docs page, it renders correctly

```

Reviewed By: jbschlosser

Differential Revision: D31994641

Pulled By: vkuzo

fbshipit-source-id: d35a62947dd06e71276eb6a0e37950d3cc5abfc1

Summary:

This reduces the chance of newly added functions being ignored by mistake.

The only test that this impacts is the coverage test that runs as part of the python doc build. So if that one works, it means that the update to the list here is correct.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67395

Reviewed By: jbschlosser

Differential Revision: D31991936

Pulled By: albanD

fbshipit-source-id: 5b4ce7764336720827501641311cc36f52d2e516

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66143

Delete test_list_remove. There's no point in testing conversion of

this model since TorchScript doesn't support it.

Add a link to an issue tracking test_embedding_bag_dynamic_input.

[ONNX] fix docs (#65379)

Mainly fix the sphinx build by inserting empty lines before

bulleted lists.

Also some minor improvements:

Remove superfluous descriptions of deprecated and ignored args.

The user doesn't need to know anything other than that they are

deprecated and ignored.

Fix custom_opsets description.

Make indentation of Raises section consistent with Args section.

[ONNX] publicize func for discovering unconvertible ops (#65285)

* [ONNX] Provide public function to discover all unconvertible ATen ops

This can be more productive than finding and fixing a single issue at a

time.

* [ONNX] Reorganize test_utility_funs

Move common functionality into a base class that doesn't define any

tests.

Add a new test for opset-independent tests. This lets us avoid running

the tests repeatedly for each opset.

Use simple inheritance rather than the `type()` built-in. It's more

readable.

* [ONNX] Use TestCase assertions rather than `assert`

This provides better error messages.

* [ONNX] Use double quotes consistently.

[ONNX] Fix code block formatting in doc (#65421)

Test Plan: Imported from OSS

Reviewed By: jansel

Differential Revision: D31424093

fbshipit-source-id: 4ced841cc546db8548dede60b54b07df9bb4e36e

Summary:

Adds `torch.argwhere` as an alias to `torch.nonzero`

Currently, `torch.nonzero` actually provides functionality equivalent to `np.argwhere`.

From NumPy docs,

> np.argwhere(a) is almost the same as np.transpose(np.nonzero(a)), but produces a result of the correct shape for a 0D array.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64257

Reviewed By: dagitses

Differential Revision: D31474901

Pulled By: saketh-are

fbshipit-source-id: 335327a4986fa327da74e1fb8624cc1e56959c70

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66577

A rebase artifact erroneously landed in the quantization docs;

this PR removes it.

Test Plan:

CI

Imported from OSS

Reviewed By: soulitzer

Differential Revision: D31651350

fbshipit-source-id: bc254cbb20724e49e1a0ec6eb6d89b28491f9f78