Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, though, but with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels - default, AVX2, AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using the AVX512 kernel right now, as it has poorer accuracy for Float16 than AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped -

[`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098) - It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. The value of `reduce_range` should be used as `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the double vector-size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

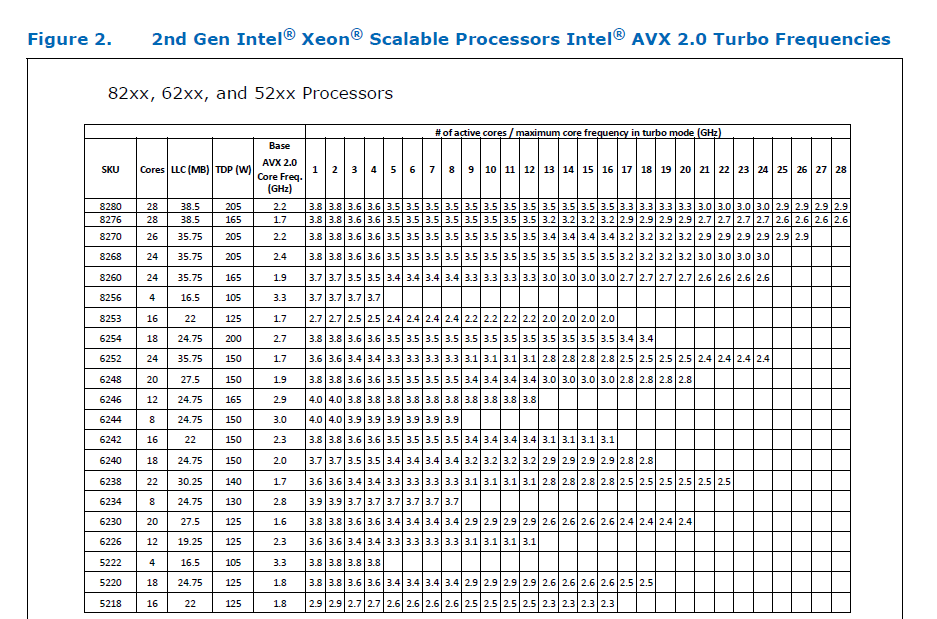

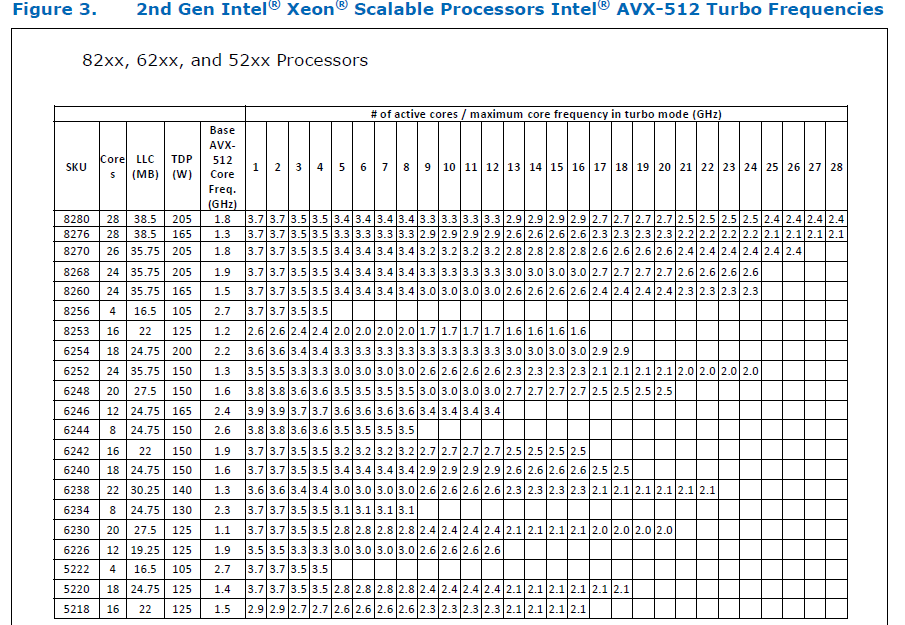

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches or in kernels that are more compute heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.
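The memory-bound case can be illustrated with a roofline-style estimate. The sketch below is not a measurement: the peak-throughput and bandwidth figures are hypothetical placeholders, and the function names are introduced only for illustration.

```python
def arithmetic_intensity_add(bytes_per_element: int = 4) -> float:
    """FLOPs per byte for out = a + b: one add per element, two loads and one store."""
    return 1.0 / (3 * bytes_per_element)


def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gb_s * intensity)


# Hypothetical machine numbers, chosen only to show the shape of the model.
BANDWIDTH_GB_S = 100.0
PEAK_AVX2_GFLOPS = 1000.0
PEAK_AVX512_GFLOPS = 2000.0

ai = arithmetic_intensity_add()
avx2 = attainable_gflops(PEAK_AVX2_GFLOPS, BANDWIDTH_GB_S, ai)
avx512 = attainable_gflops(PEAK_AVX512_GFLOPS, BANDWIDTH_GB_S, ai)
print(avx2, avx512)  # both are limited by bandwidth, so the two values are equal
```

Under this model, doubling the vector width only raises the compute ceiling. A large elementwise add stays pinned to the bandwidth ceiling, whereas operands that fit in cache see a much higher effective bandwidth and can benefit from the wider vectors.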

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

This PR changes the build system to support cross compiling on Jetson platforms.

The major change is:

1. Disable try runs for cross compiling in `COMPILER_WORKS`, `BLAS`, and `CUDA`. Try runs cannot be performed in a cross-compile setup.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59764

Reviewed By: soulitzer

Differential Revision: D29524363

Pulled By: malfet

fbshipit-source-id: f06d1ad30b704c9a17d77db686c65c0754db07b8

Summary:

Before that, only dynamically linked OpenBLAS compiled with OpenMP could be found.

Also get rid of hardcoded codepath for libgfortran.a in FindLAPACK.cmake

Only affects aarch64 linux builds

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59428

Reviewed By: agolynski

Differential Revision: D28891314

Pulled By: malfet

fbshipit-source-id: 5af55a14c85ac66551ad2805c5716bbefe8d55b2

Summary:

While trying to build PyTorch with BLIS as the backend library, we found a build issue due to some missing include files. This was caused by a missing directory in the search path. This patch adds that path in FindBLIS.cmake.


Pull Request resolved: https://github.com/pytorch/pytorch/pull/58166

Reviewed By: zou3519

Differential Revision: D28640460

Pulled By: malfet

fbshipit-source-id: d0cd3a680718a0a45788c46a502871b88fbadd52

Summary:

These changes provide the user with an additional option to choose the DNNL+BLIS path for PyTorch.

This assumes BLIS is already downloaded or built from source and the necessary library file is available at the location: $BLIS_HOME/lib/libblis.so and include files are available at: $BLIS_HOME/include/blis/blis.h and $BLIS_HOME/include/blis/cblas.h

Export the below variables to build PyTorch with MKLDNN+BLIS and proceed with the regular installation procedure as below:

$ export BLIS_HOME=path-to-BLIS

$ export PATH=$BLIS_HOME/include/blis:$PATH LD_LIBRARY_PATH=$BLIS_HOME/lib:$LD_LIBRARY_PATH

$ export BLAS=BLIS USE_MKLDNN_CBLAS=ON WITH_BLAS=blis

$ python setup.py install
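Before starting a build, the layout assumed above can be checked with a few lines of Python. This is only a sketch: the helper name `missing_blis_files` is hypothetical and is not part of the PyTorch build, and the paths are the three listed in this description.

```python
import os
import tempfile

# Paths relative to BLIS_HOME, as listed in the description above.
EXPECTED_FILES = (
    os.path.join("lib", "libblis.so"),
    os.path.join("include", "blis", "blis.h"),
    os.path.join("include", "blis", "cblas.h"),
)


def missing_blis_files(blis_home: str) -> list:
    """Return the expected BLIS files that are absent under blis_home."""
    return [rel for rel in EXPECTED_FILES
            if not os.path.isfile(os.path.join(blis_home, rel))]


# Demonstration on a throwaway directory that contains only blis.h.
with tempfile.TemporaryDirectory() as home:
    os.makedirs(os.path.join(home, "include", "blis"))
    open(os.path.join(home, "include", "blis", "blis.h"), "w").close()
    demo_missing = missing_blis_files(home)
    print(demo_missing)
```

In practice one would call `missing_blis_files(os.environ["BLIS_HOME"])` and stop if the returned list is not empty.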

A CPU-only Dockerfile to build PyTorch with AMD BLIS is available at: docker/cpu-blis/Dockerfile

Example command line to build using the Dockerfile:

sudo DOCKER_BUILDKIT=1 docker build . -t docker-image-repo-name

Example command line to run the built docker container:

sudo docker run --name container-name -it docker-image-repo-name


Pull Request resolved: https://github.com/pytorch/pytorch/pull/54953

Reviewed By: glaringlee

Differential Revision: D27466799

Pulled By: malfet

fbshipit-source-id: e03bae9561be3a67429df3b1be95a79005c63050

Summary:

*Context:* https://github.com/pytorch/pytorch/issues/53406 added a lint for trailing whitespace at the ends of lines. However, in order to pass FB-internal lints, that PR also had to normalize the trailing newlines in four of the files it touched. This PR adds an OSS lint to normalize trailing newlines.

The changes to the following files (made in 54847d0adb9be71be4979cead3d9d4c02160e4cd) are the only manually-written parts of this PR:

- `.github/workflows/lint.yml`

- `mypy-strict.ini`

- `tools/README.md`

- `tools/test/test_trailing_newlines.py`

- `tools/trailing_newlines.py`

I would have liked to make this just a shell one-liner like the other three similar lints, but nothing I could find quite fit the bill. Specifically, all the answers I tried from the following Stack Overflow questions were far too slow (at least a minute and a half to run on this entire repository):

- [How to detect file ends in newline?](https://stackoverflow.com/q/38746)

- [How do I find files that do not end with a newline/linefeed?](https://stackoverflow.com/q/4631068)

- [How to list all files in the Git index without newline at end of file](https://stackoverflow.com/q/27624800)

- [Linux - check if there is an empty line at the end of a file [duplicate]](https://stackoverflow.com/q/34943632)

- [git ensure newline at end of each file](https://stackoverflow.com/q/57770972)

To avoid giving false positives during the few days after this PR is merged, we should probably only merge it after https://github.com/pytorch/pytorch/issues/54967.
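For illustration, a fast check of this kind only needs the last two bytes of each file. The following is a minimal sketch and not the contents of `tools/trailing_newlines.py`; the function names are hypothetical, and treating an empty file or a file holding a single newline as acceptable is an assumption.

```python
import os
import tempfile


def ends_with_single_newline(path: str) -> bool:
    """True if the file is empty or ends with exactly one newline byte."""
    with open(path, "rb") as f:
        f.seek(0, os.SEEK_END)
        size = f.tell()
        if size == 0:
            return True
        # Only the last two bytes matter, so large files stay cheap to check.
        f.seek(max(size - 2, 0))
        tail = f.read()
    return tail.endswith(b"\n") and tail != b"\n\n"


def check(content: bytes) -> bool:
    """Write content to a temporary file and run the check on it."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(content)
    try:
        return ends_with_single_newline(tmp.name)
    finally:
        os.unlink(tmp.name)


results = [check(b"ok\n"), check(b"missing"), check(b"extra\n\n"), check(b"")]
print(results)
```

Reading a fixed-size tail instead of whole files is what keeps a check like this fast on a large repository.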

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54737

Test Plan:

Running the shell script from the "Ensure correct trailing newlines" step in the `quick-checks` job of `.github/workflows/lint.yml` should print no output and exit in a fraction of a second with a status of 0. That was not the case prior to this PR, as shown by this failing GHA workflow run on an earlier draft of this PR:

- https://github.com/pytorch/pytorch/runs/2197446987?check_suite_focus=true

In contrast, this run (after correcting the trailing newlines in this PR) succeeded:

- https://github.com/pytorch/pytorch/pull/54737/checks?check_run_id=2197553241

To unit-test `tools/trailing_newlines.py` itself (this is run as part of our "Test tools" GitHub Actions workflow):

```

python tools/test/test_trailing_newlines.py

```

Reviewed By: malfet

Differential Revision: D27409736

Pulled By: samestep

fbshipit-source-id: 46f565227046b39f68349bbd5633105b2d2e9b19

Summary:

Context: https://github.com/pytorch/pytorch/pull/53299#discussion_r587882857

These are the only hand-written parts of this diff:

- the addition to `.github/workflows/lint.yml`

- the file endings changed in these four files (to appease FB-internal land-blocking lints):

- `GLOSSARY.md`

- `aten/src/ATen/core/op_registration/README.md`

- `scripts/README.md`

- `torch/csrc/jit/codegen/fuser/README.md`

The rest was generated by running this command (on macOS):

```

git grep -I -l ' $' -- . ':(exclude)**/contrib/**' ':(exclude)third_party' | xargs gsed -i 's/ *$//'

```

I looked over the auto-generated changes and didn't see anything that looked problematic.
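For readers without `gsed`, the effect of the substitution on a single file's text can be sketched in Python. This is an illustrative equivalent under the assumption that only trailing spaces (not tabs) should be removed, as in the `sed` expression; it is not what was run for this PR.

```python
import re

# Counterpart of the sed expression 's/ *$//': spaces directly before the end
# of a line. " +" is used instead of " *" to skip no-op matches; tabs are kept.
TRAILING_SPACES = re.compile(r" +$", flags=re.MULTILINE)


def strip_trailing_spaces(text: str) -> str:
    """Remove trailing spaces from every line of text."""
    return TRAILING_SPACES.sub("", text)


sample = "def f():  \n    return 1 \n\t\n"
cleaned = strip_trailing_spaces(sample)
print(repr(cleaned))
```

Leading indentation is untouched because the pattern only matches spaces that run up to the end of a line.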

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53406

Test Plan:

This run (after adding the lint but before removing existing trailing spaces) failed:

- https://github.com/pytorch/pytorch/runs/2043032377

This run (on the tip of this PR) succeeded:

- https://github.com/pytorch/pytorch/runs/2043296348

Reviewed By: walterddr, seemethere

Differential Revision: D26856620

Pulled By: samestep

fbshipit-source-id: 3f0de7f7c2e4b0f1c089eac9b5085a58dd7e0d97

Summary:

Fix accidental regression introduced by https://github.com/pytorch/pytorch/issues/47940

`FIND_PACKAGE(OpenBLAS)` does not validate that the discovered library can actually be used, while `check_fortran_libraries` does.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53168

Test Plan: Build PyTorch with static OpenBLAS and check that `torch.svd(torch.ones(3, 3)).S` does not raise an exception

Reviewed By: walterddr

Differential Revision: D26772345

Pulled By: malfet

fbshipit-source-id: 3e4675c176b30dfe4f0490d7d3dfe4f9a4037134

Summary:

When compiling libtorch on macOS there is the option to use the `vecLib` BLAS library from Apple's [Accelerate](https://developer.apple.com/documentation/accelerate) framework. Recent versions of macOS have changed the location of veclib.h; this change adds the new locations to `FindvecLib.cmake`.

To test, run the following command:

```

BLAS=vecLib python setup.py install --cmake --cmake-only

```

The choice of BLAS library is confirmed in the output:

```

-- Trying to find preferred BLAS backend of choice: vecLib

-- Found vecLib: /Library/Developer/CommandLineTools/SDKs/MacOSX10.15.sdk/System/Library/Frameworks/Accelerate.framework/Versions/Current/Frameworks/vecLib.framework/Versions/Current/Headers

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51288

Reviewed By: jbschlosser

Differential Revision: D26531136

Pulled By: malfet

fbshipit-source-id: ce86807ccbf66973f33b3acb99b7f40cfd182b9b

Summary:

Since version 1.6, oneDNN has provided limited support for AArch64 builds.

This minor change is to detect an AArch64 CPU and permit the use of `USE_MKLDNN` in that case.

Build flags for oneDNN are also modified accordingly.

Note: oneDNN on AArch64, by default, will use oneDNN's reference C++ kernels. These are not optimised for AArch64, but oneDNN v1.7 onwards provides support for a limited set of primitives based on Arm Compute Library.

See https://github.com/oneapi-src/oneDNN/pull/795 and https://github.com/oneapi-src/oneDNN/pull/820 for more details. Support for ACL-based oneDNN primitives in PyTorch will require some further modification.


Pull Request resolved: https://github.com/pytorch/pytorch/pull/50400

Reviewed By: izdeby

Differential Revision: D25886589

Pulled By: malfet

fbshipit-source-id: 2c81277a28ad4528c2d2211381e7c6692d952bc1

Summary:

### PyTorch Vec256 ppc64le support

implemented types:

- double

- float

- int16

- int32

- int64

- qint32

- qint8

- quint8

- complex_float

- complex_double

Notes:

All basic vector operations are implemented:

There are a few problems:

- NaN propagation for minimum and maximum is missing on ppc64le and was not checked

- complex multiplication, division, sqrt and abs are implemented the same way as in the PyTorch x86 code. They can overflow and are less precise than the std ones, which is why they were either excluded or tested over a smaller domain range

- the precision of the implemented float math functions
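The overflow problem with complex division can be reproduced outside of PyTorch. The sketch below uses the textbook component-wise formula; whether the vectorized kernels use exactly this formula is an assumption, and `naive_complex_div` is a hypothetical helper written for this example.

```python
import math


def naive_complex_div(a: complex, b: complex) -> complex:
    """Textbook formula: (a * conj(b)) / |b|^2, computed component-wise."""
    denom = b.real * b.real + b.imag * b.imag
    real = (a.real * b.real + a.imag * b.imag) / denom
    imag = (a.imag * b.real - a.real * b.imag) / denom
    return complex(real, imag)


big = complex(1e200, 1e200)
naive = naive_complex_div(big, big)   # intermediate products overflow to inf
builtin = big / big                   # Python's complex division rescales internally
print(naive, builtin)
```

The squared terms exceed the double range even though the true quotient is 1, which is why comparisons against a std-style implementation only make sense over a restricted domain.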

~~Besides, I added CPU_CAPABILITY for power. but as because of quantization errors for DEFAULT I had to undef and use vsx for DEFAULT too~~

#### Details

##### Supported math functions

Legend:

- `+` (plus sign) means vectorized and `-` (minus sign) means missing; implementation notes are added inside parentheses. Example: `-(both)` means it was also missing on the x86 side

- `f(func_name)` means the vectorization is using `func_name`

- `sleef` means redirected to Sleef

- `unsupported`

| function_name | float | double | complex float | complex double |
| -- | -- | -- | -- | -- |
| acos | sleef | sleef | f(asin) | f(asin) |
| asin | sleef | sleef | +(pytorch impl) | +(pytorch impl) |
| atan | sleef | sleef | f(log) | f(log) |
| atan2 | sleef | sleef | unsupported | unsupported |
| cos | +((ppc64le:avx_mathfun)) | sleef | -(both) | -(both) |
| cosh | f(exp) | -(both) | -(both) | |
| erf | sleef | sleef | unsupported | unsupported |
| erfc | sleef | sleef | unsupported | unsupported |
| erfinv | -(both) | -(both) | unsupported | unsupported |
| exp | + | sleef | -(x86:f()) | -(x86:f()) |
| expm1 | f(exp) | sleef | unsupported | unsupported |
| lgamma | sleef | sleef | | |
| log | + | sleef | -(both) | -(both) |
| log10 | f(log) | sleef | f(log) | f(log) |
| log1p | f(log) | sleef | unsupported | unsupported |
| log2 | f(log) | sleef | f(log) | f(log) |
| pow | + f(exp) | sleef | -(both) | -(both) |
| sin | +((ppc64le:avx_mathfun)) | sleef | -(both) | -(both) |
| sinh | f(exp) | sleef | -(both) | -(both) |
| tan | sleef | sleef | -(both) | -(both) |
| tanh | f(exp) | sleef | -(both) | -(both) |
| hypot | sleef | sleef | -(both) | -(both) |
| nextafter | sleef | sleef | -(both) | -(both) |
| fmod | sleef | sleef | -(both) | -(both) |

[Vec256 Test cases Pr https://github.com/pytorch/pytorch/issues/42685](https://github.com/pytorch/pytorch/pull/42685)

Current list:

- [x] Blends

- [x] Memory: UnAlignedLoadStore

- [x] Arithmetics: Plus, Minus, Multiplication, Division

- [x] Bitwise: BitAnd, BitOr, BitXor

- [x] Comparison: Equal, NotEqual, Greater, Less, GreaterEqual, LessEqual

- [x] MinMax: Minimum, Maximum, ClampMin, ClampMax, Clamp

- [x] SignManipulation: Absolute, Negate

- [x] Interleave: Interleave, DeInterleave

- [x] Rounding: Round, Ceil, Floor, Trunc

- [x] Mask: ZeroMask

- [x] SqrtAndReciprocal: Sqrt, RSqrt, Reciprocal

- [x] Trigonometric: Sin, Cos, Tan

- [x] Hyperbolic: Tanh, Sinh, Cosh

- [x] InverseTrigonometric: Asin, ACos, ATan, ATan2

- [x] Logarithm: Log, Log2, Log10, Log1p

- [x] Exponents: Exp, Expm1

- [x] ErrorFunctions: Erf, Erfc, Erfinv

- [x] Pow: Pow

- [x] LGamma: LGamma

- [x] Quantization: quantize, dequantize, requantize_from_int

- [x] Quantization: widening_subtract, relu, relu6

Missing:

- [ ] Constructors, initializations

- [ ] Conversion, Cast

- [ ] Additional: imag, conj, angle (note: imag and conj only checked for float complex)

#### Notes on tests and testing framework

- some math functions are tested within domain range

- for the most part, the testing framework tests random inputs against the std implementation, within the function's domain or, for some math functions, within the implementation's domain

- some functions are tested against the local version. ~~For example, std::round and vector version of round differs. so it was tested against the local version~~

- round was tested against pytorch at::native::round_impl. ~~for double type on **Vsx vec_round failed for (even)+0 .5 values**~~ . it was solved by using vec_rint

- ~~**complex types are not tested**~~ **After enabling complex testing due to precision and domain some of the complex functions failed for vsx and x86 avx as well. I will either test it against local implementation or check within the accepted domain**

- ~~quantizations are not tested~~ Added tests for quantizing, dequantize, requantize_from_int, relu, relu6, widening_subtract functions

- the testing framework should be improved further

- ~~For now `-DBUILD_MOBILE_TEST=ON `will be used for Vec256Test too~~

Vec256 Test cases will be built for each CPU_CAPABILITY
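The rounding note above concerns values that lie exactly halfway between two integers. Assuming the discrepancy is the usual difference between rounding ties away from zero and rounding ties to even, it can be illustrated in plain Python; the two helper names are hypothetical.

```python
import math


def round_half_away_from_zero(x: float) -> float:
    """Ties go away from zero, as C's std::round does."""
    return math.copysign(math.floor(abs(x) + 0.5), x)


def round_half_to_even(x: float) -> float:
    """Ties go to the nearest even integer; Python's built-in round() does this."""
    return float(round(x))


ties = [0.5, 1.5, 2.5, -0.5, -2.5]
away = [round_half_away_from_zero(x) for x in ties]
even = [round_half_to_even(x) for x in ties]
print(away)
print(even)
```

The two rules agree everywhere except on ties, so a test that samples random inputs can pass for a long time before hitting the disagreement. The helpers are meant only to show the tie behaviour, not to be robust rounding routines.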

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41541

Reviewed By: zhangguanheng66

Differential Revision: D23922049

Pulled By: VitalyFedyunin

fbshipit-source-id: bca25110afccecbb362cea57c705f3ce02f26098

Summary:

Fixes https://github.com/pytorch/pytorch/issues/45838

`ARCH_OPT_FLAGS` was the old name of `MKLDNN_ARCH_OPT_FLAGS`, which has been renamed in [this commit](2a011ff02e (diff-a0abcbf647ed740b80615fb5b1614a44L97)), but not updated in pytorch.

Because its default value is then set to sse4.1, some kernels fail on legacy architectures that do not support SSE4.1. This patch makes the flag take effect.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46082

Reviewed By: glaringlee

Differential Revision: D24252149

Pulled By: agolynski

fbshipit-source-id: 7079deed373d664763c5888feb28795e5235caa8

Summary:

## Motivation

This PR upgrades MKL-DNN from v0.20 to DNNL v1.2 and resolves https://github.com/pytorch/pytorch/issues/30300.

DNNL (Deep Neural Network Library) is the new brand of MKL-DNN, which improves performance, quality, and usability over the old version.

This PR focuses on the migration of all existing functionalities, including minor fixes, performance improvement and code clean up. It serves as the cornerstone of our future efforts to accommodate new features like OpenCL support, BF16 training, INT8 inference, etc. and to let the PyTorch community derive more benefits from the Intel Architecture.

<br>

## What's included?

Even though DNNL has many breaking changes to the API, we managed to absorb most of them in ideep. This PR contains minimal changes to the integration code in PyTorch. Below is a summary of the changes:

<br>

**General:**

1. Replace op-level allocator with global-registered allocator

```
// before
ideep::sum::compute<AllocForMKLDNN>(scales, {x, y}, z);

// after
ideep::sum::compute(scales, {x, y}, z);
```

The allocator is now being registered at `aten/src/ATen/native/mkldnn/IDeepRegistration.cpp`. Thereafter all tensors derived from the `cpu_engine` (by default) will use the c10 allocator.

```
RegisterEngineAllocator cpu_alloc(
  ideep::engine::cpu_engine(),
  [](size_t size) {
    return c10::GetAllocator(c10::DeviceType::CPU)->raw_allocate(size);
  },
  [](void* p) {
    c10::GetAllocator(c10::DeviceType::CPU)->raw_deallocate(p);
  }
);
```

------

2. Simplify group convolution

In convolution there was a case where the ideep tensor shape did not match the aten tensor shape: when `groups > 1`, DNNL expects weight tensors to be 5-d with an extra group dimension, e.g. `goihw` instead of `oihw` in the 2d conv case.

As shown below, a lot of extra checks came with this difference in shape before. Now we've completely hidden this difference in ideep and all tensors are going to align with pytorch's definition. So we could safely remove these checks from both aten and c2 integration code.

```
// aten/src/ATen/native/mkldnn/Conv.cpp
if (w.ndims() == x.ndims() + 1) {
  AT_ASSERTM(
      groups > 1,
      "Only group _mkldnn_conv2d weights could have been reordered to 5d");
  kernel_size[0] = w.get_dim(0) * w.get_dim(1);
  std::copy_n(
      w.get_dims().cbegin() + 2, x.ndims() - 1, kernel_size.begin() + 1);
} else {
  std::copy_n(w.get_dims().cbegin(), x.ndims(), kernel_size.begin());
}
```
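The shape relationship behind the removed check can be written down in a few lines of Python. This is only a sketch of the dimension arithmetic; `to_grouped_dims` and `to_aten_dims` are hypothetical names and are not ideep or ATen APIs.

```python
def to_grouped_dims(aten_dims, groups):
    """(O, I/g, *kernel) -> (g, O/g, I/g, *kernel), e.g. oihw -> goihw."""
    out_channels, *rest = aten_dims
    if out_channels % groups != 0:
        raise ValueError("out_channels must be divisible by groups")
    return (groups, out_channels // groups, *rest)


def to_aten_dims(weight_dims, input_ndims):
    """Fold a leading group dimension back into out_channels if one is present."""
    if len(weight_dims) == input_ndims + 1:
        g, o_per_group, *rest = weight_dims
        return (g * o_per_group, *rest)
    return tuple(weight_dims)


oihw = (64, 8, 3, 3)                      # 2d conv weights with groups=4
goihw = to_grouped_dims(oihw, groups=4)
restored = to_aten_dims(goihw, input_ndims=4)
print(goihw, restored)
```

`to_aten_dims` mirrors the branch in the C++ snippet: when the weights have one more dimension than the input, the first two dimensions are multiplied to recover the output-channel count.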

------

3. Enable DNNL built-in cache

Previously, we stored DNNL jitted kernels along with intermediate buffers inside ideep using an LRU cache. Now we are switching to the newly added DNNL built-in cache, and **no longer** caching buffers in order to reduce memory footprint.

This change will mainly be reflected in lower memory usage in memory profiling results. On the code side, we removed a couple of lines of `op_key_` that previously depended on the ideep cache.

------

4. Use 64-bit integer to denote dimensions

We changed the type of `ideep::dims` from `vector<int32_t>` to `vector<int64_t>`. This renders ideep dims no longer compatible with the 32-bit dims used by caffe2. So we use something like `{stride_.begin(), stride_.end()}` to cast parameter `stride_` into an int64 vector.

<br>

**Misc changes in each commit:**

**Commit:** change build options

Some build options were slightly changed, mainly to avoid name collisions with other projects that include DNNL as a subproject. In addition, DNNL built-in cache is enabled by option `DNNL_ENABLE_PRIMITIVE_CACHE`.

Old | New
-- | --
WITH_EXAMPLE | MKLDNN_BUILD_EXAMPLES
WITH_TEST | MKLDNN_BUILD_TESTS
MKLDNN_THREADING | MKLDNN_CPU_RUNTIME
MKLDNN_USE_MKL | N/A (MKL is no longer used)

------

**Commit:** aten reintegration

- aten/src/ATen/native/mkldnn/BinaryOps.cpp

Implement binary ops using new operation `binary` provided by DNNL

- aten/src/ATen/native/mkldnn/Conv.cpp

Clean up group convolution checks

Simplify conv backward integration

- aten/src/ATen/native/mkldnn/MKLDNNConversions.cpp

Simplify prepacking convolution weights

- test/test_mkldnn.py

Fixed an issue in the conv2d unit test: previously it did not compare conv results between the mkldnn and aten implementations. Instead, it compared mkldnn with mkldnn, because the default cpu path also goes into mkldnn. Now we use `torch.backends.mkldnn.flags` to fix this issue

- torch/utils/mkldnn.py

Prepack weight tensor on module `__init__` to achieve significantly better performance

------

**Commit:** caffe2 reintegration

- caffe2/ideep/ideep_utils.h

Clean up unused type definitions

- caffe2/ideep/operators/adam_op.cc & caffe2/ideep/operators/momentum_sgd_op.cc

Unify tensor initialization with `ideep::tensor::init`. Obsolete `ideep::tensor::reinit`

- caffe2/ideep/operators/conv_op.cc & caffe2/ideep/operators/quantization/int8_conv_op.cc

Clean up group convolution checks

Revamp convolution API

- caffe2/ideep/operators/conv_transpose_op.cc

Clean up group convolution checks

Clean up deconv workaround code

------

**Commit:** custom allocator

- Register c10 allocator as mentioned above

<br><br>

## Performance

We tested inference on some common models based on user scenarios, and most performance numbers are either better than or on par with DNNL 0.20.

ratio: new / old | Latency (batch=1 4T) | Throughput (batch=64 56T)
-- | -- | --
pytorch resnet18 | 121.4% | 99.7%
pytorch resnet50 | 123.1% | 106.9%
pytorch resnext101_32x8d | 116.3% | 100.1%
pytorch resnext50_32x4d | 141.9% | 104.4%
pytorch mobilenet_v2 | 163.0% | 105.8%
caffe2 alexnet | 303.0% | 99.2%
caffe2 googlenet-v3 | 101.1% | 99.2%
caffe2 inception-v1 | 102.2% | 101.7%
caffe2 mobilenet-v1 | 356.1% | 253.7%
caffe2 resnet101 | 100.4% | 99.8%
caffe2 resnet152 | 99.8% | 99.8%
caffe2 shufflenet | 141.1% | 69.0% †
caffe2 squeezenet | 98.5% | 99.2%
caffe2 vgg16 | 136.8% | 100.6%
caffe2 googlenet-v3 int8 | 100.0% | 100.7%
caffe2 mobilenet-v1 int8 | 779.2% | 943.0%
caffe2 resnet50 int8 | 99.5% | 95.5%

_Configuration:_

_Platform: Skylake 8180_

_Latency Test: 4 threads, warmup 30, iteration 500, batch size 1_

_Throughput Test: 56 threads, warmup 30, iteration 200, batch size 64_

† Shufflenet is one of the few models that require temp buffers during inference. The performance degradation is an expected issue since we no longer cache any buffer in ideep. As for the solution, we suggest users opt for a caching allocator such as **jemalloc** as a drop-in replacement for the system allocator in such heavy workloads.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32422

Test Plan:

Perf results: https://our.intern.facebook.com/intern/fblearner/details/177790608?tab=Experiment%20Results

10% improvement for ResNext with avx512, neutral on avx2

More results: https://fb.quip.com/ob10AL0bCDXW#NNNACAUoHJP

Reviewed By: yinghai

Differential Revision: D20381325

Pulled By: dzhulgakov

fbshipit-source-id: 803b906fd89ed8b723c5fcab55039efe3e4bcb77

Summary:

When building with FFMPEG, I encountered a compilation error due to a missing include/library.

I also found that the change in video_input_op.h improves the build on Windows.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27589

Differential Revision: D19700351

Pulled By: ezyang

fbshipit-source-id: feff25daa43bd2234d5e75c66b9865b672a8fb51

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/31152

Per apaszke: I can't find any reasonable references to libIRC online, so

I decided to remove this.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D19262582

Pulled By: ezyang

fbshipit-source-id: a1d47462427a3e0ca469062321d608e0badf8548

Summary:

Fixes https://github.com/pytorch/pytorch/issues/24334.

I'm still kind of confused why `FindMKL.cmake` was unable to locate my MKL libraries. They are in the standard `/opt/intel/mkl` installation prefix on macOS. But at least with this more detailed error message, it will be easier for people to figure out how to fix the problem.

zhangguanheng66 xkszltl soumith

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28779

Differential Revision: D18170998

Pulled By: soumith

fbshipit-source-id: 47e61baadd84c758267dca566eb1fb8a081de92f

Summary:

1. upgrade MKL-DNN to v0.20.3

2. allow users to change the capacity of the primitive cache in mkldnn-bridge via the environment variable LRU_CACHE_CAPACITY

3. support filling all tensor elements with one scalar

4. fix the link issue when building with a private MKLML rather than a pre-installed MKL

5. add rnn support in mkldnn-bridge
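To make item 2 concrete, here is a minimal sketch of an LRU cache whose capacity is read from the `LRU_CACHE_CAPACITY` environment variable. It is not the mkldnn-bridge implementation: the class, the default capacity and the cache keys are hypothetical.

```python
import os
from collections import OrderedDict

DEFAULT_CAPACITY = 1024  # hypothetical default, not taken from mkldnn-bridge


class LRUCache:
    def __init__(self, capacity=None):
        if capacity is None:
            capacity = int(os.environ.get("LRU_CACHE_CAPACITY", DEFAULT_CAPACITY))
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


os.environ["LRU_CACHE_CAPACITY"] = "2"
cache = LRUCache()
cache.put("conv_a", "primitive A")
cache.put("conv_b", "primitive B")
cache.get("conv_a")                 # touch A so that B becomes the oldest
cache.put("conv_c", "primitive C")  # exceeds capacity 2, evicts B
print(cache.get("conv_a"), cache.get("conv_b"), cache.get("conv_c"))
```

A smaller capacity trades repeated primitive creation for lower memory use, which is presumably why the value was made configurable.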

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22910

Differential Revision: D16365998

Pulled By: VitalyFedyunin

fbshipit-source-id: b8d2bb454cbfbcd4b8983b1a8fa3b83e55ad01c3

Summary:

Currently, once a user has set `USE_NATIVE_ARCH` to OFF, they can never turn it on for MKLDNN again by simply changing `USE_NATIVE_ARCH`. This commit fixes this issue.

Following up 09ba4df031

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23608

Differential Revision: D16599600

Pulled By: ezyang

fbshipit-source-id: 88bbec1b1504b5deba63e56f78632937d003a1f6

Summary:

Currently there is no way to build MKLDNN with optimizations beyond sse4. This commit lets the MKLDNN build respect USE_NATIVE_ARCH.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23445

Differential Revision: D16542275

Pulled By: ezyang

fbshipit-source-id: 550976531d6a52db9128c0e3d4589a33715feee2

Summary:

An illegal instruction is encountered in MKL-DNN in the pre-built package. https://github.com/pytorch/pytorch/issues/23231

To avoid such binary compatibility issues, the HostOpts option in MKL-DNN is disabled in order to build MKL-DNN for a generic arch.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23292

Differential Revision: D16488773

Pulled By: soumith

fbshipit-source-id: 9e13c76fb9cb9338103cb767d7463c10891d294a

Summary:

---

How does the current code subsume all detections in the deleted `nccl.py`?

- The dependency of `USE_NCCL` on the OS and `USE_CUDA` is handled as dependency options in `CMakeLists.txt`.

- The main NCCL detection happens in [FindNCCL.cmake](8377d4b32c/cmake/Modules/FindNCCL.cmake), which is called by [nccl.cmake](8377d4b32c/cmake/External/nccl.cmake). When `USE_SYSTEM_NCCL` is false, the previous Python code deferred the detection to `find_package(NCCL)`. The change in `nccl.cmake` retains this.

- `USE_STATIC_NCCL` in the previous Python code simply changes the name of the detected library. This is done in `IF (USE_STATIC_NCCL)`.

- Now we only need to look at how the lines below line 20 in `nccl.cmake` are subsumed. These lines list paths to header and library directories that NCCL headers and libraries may reside in and try to search these directories for the key header and library files in turn. These are done by `find_path` for headers and `find_library` for the library files in `FindNCCL.cmake`.

* The call of [find_path](https://cmake.org/cmake/help/v3.8/command/find_path.html) (Search for `NO_DEFAULT_PATH` in the link) by default searches for headers in `<prefix>/include` for each `<prefix>` in `CMAKE_PREFIX_PATH` and `CMAKE_SYSTEM_PREFIX_PATH`. Like the Python code, this commit sets `CMAKE_PREFIX_PATH` to search for `<prefix>` in `NCCL_ROOT_DIR` and home to CUDA. `CMAKE_SYSTEM_PREFIX_PATH` includes the standard directories such as `/usr/local` and `/usr`. `NCCL_INCLUDE_DIR` is also specifically handled.

* Similarly, the call of [find_library](https://cmake.org/cmake/help/v3.8/command/find_library.html) (Search for `NO_DEFAULT_PATH` in the link) by default searches for libraries in directories including `<prefix>/lib` for each `<prefix>` in `CMAKE_PREFIX_PATH` and `CMAKE_SYSTEM_PREFIX_PATH`. But it also handles the edge cases intended to be solved in the Python code more properly:

- It only searches for `<prefix>/lib64` (and `<prefix>/lib32`) if it is appropriate on the system.

- It only searches for `<prefix>/lib/<arch>` for the right `<arch>`, unlike the Python code, which searched for `lib/<arch>` in a generic way (e.g., the Python code searched for `/usr/lib/x86_64-linux-gnu`, but in reality some systems have `/usr/lib/x86_64-some-customized-name-linux-gnu`; see https://unix.stackexchange.com/a/226180/38242).

---

Regarding relevant issues:

- https://github.com/pytorch/pytorch/issues/12063 and https://github.com/pytorch/pytorch/issues/2877: These are properly handled, as explained in the updated comment.

- https://github.com/pytorch/pytorch/issues/2941 does not change NCCL detection specifically for Windows (it changed CUDA detection).

- b7e258f81e A versioned library detection is added, but the order is reversed: The unversioned library becomes preferred. This is because unversioned libraries are normally linked to versioned libraries and are preferred by users, and local installations by users are often unversioned. As the documentation of [find_library](https://cmake.org/cmake/help/v3.8/command/find_library.html) suggests:

> When using this to specify names with and without a version suffix, we recommend specifying the unversioned name first so that locally-built packages can be found before those provided by distributions.
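The effect of listing the unversioned name first can be illustrated with a small Python model. It assumes the default behavior of trying one name at a time across all directories; the function `first_match`, the file names, and the fake file set are all hypothetical and only serve the illustration.

```python
import posixpath


def first_match(names, dirs, existing_files):
    """Return the first existing library, trying each name across all dirs.

    Names are the outer loop, so an earlier name wins even if a later name
    exists in an earlier directory (assumed default, name-major order).
    """
    for name in names:
        for d in dirs:
            candidate = posixpath.join(d, name)
            if candidate in existing_files:
                return candidate
    return None


if __name__ == "__main__":
    # A distribution package ships only the versioned file; a local build
    # in the user's home directory ships the unversioned one.
    files = {"/usr/lib/libnccl.so.2", "/home/user/nccl/lib/libnccl.so"}
    dirs = ["/usr/lib", "/home/user/nccl/lib"]
    print(first_match(["libnccl.so", "libnccl.so.2"], dirs, files))
    print(first_match(["libnccl.so.2", "libnccl.so"], dirs, files))
```

With the unversioned name first, the locally built library is picked up even though the distribution directory is searched earlier.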

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22930

Differential Revision: D16440275

Pulled By: ezyang

fbshipit-source-id: 11fe80743d4fe89b1ed6f96d5d996496e8ec01aa

Summary:

MKL-DNN is the main library for computation when we use ideep device. It can use kernels implemented by different algorithms (including JIT, CBLAS, etc.) for computation. We add the "USE_MKLDNN_CBLAS" (default OFF) build option so that users can decide whether to use CBLAS computation methods or not.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19014

Differential Revision: D16094090

Pulled By: ezyang

fbshipit-source-id: 3f0b1d1a59a327ea0d1456e2752f2edd78d96ccc

Summary:

`cmake/public/LoadHIP.cmake` calls `find_package(miopen)`, which uses the CMake module in MIOpen installation (It includes the line `set(miopen_DIR ${MIOPEN_PATH}/lib/cmake/miopen)`). `cmake/Modules/FindMIOpen.cmake` is not used.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22244

Differential Revision: D16000771

Pulled By: bddppq

fbshipit-source-id: 07bb40fdf033521e8427fc351715d47e6e30ed34

Summary:

Dear All,

The proposed patch fixes the test code snippets used in the cmake infrastructure and the resulting implicit failure to properly set the ```CAFFE2_COMPILER_SUPPORTS_AVX2_EXTENSIONS``` flag. Without the fix, libcaffe2.so will have some ```UND``` AVX2-related references, rendering it unusable.

* With GCC 9, the test code from the cmake build infra always fails:

```

$ gcc -O2 -g -pipe -Wall -m64 -mtune=generic -fopenmp -DCXX_HAS_AVX_1 -fPIE -o test.o -c test.c -mavx2

test.c: In function ‘main’:

test.c:11:26: error: incompatible type for argument 1 of ‘_mm256_extract_epi64’

11 | _mm256_extract_epi64(x, 0); // we rely on this in our AVX2 code

| ^

| |

| __m256 {aka __vector(8) float}

In file included from /usr/lib/gcc/x86_64-redhat-linux/9/include/immintrin.h:51,

from test.c:4:

/usr/lib/gcc/x86_64-redhat-linux/9/include/avxintrin.h:550:31: note: expected ‘__m256i’ {aka ‘__vector(4) long long int’} but argument is of type ‘__m256’ {aka ‘__vector(8) float’}

550 | _mm256_extract_epi64 (__m256i __X, const int __N)

|

$ gcc -v

Using built-in specs.

COLLECT_GCC=gcc

COLLECT_LTO_WRAPPER=/usr/libexec/gcc/x86_64-redhat-linux/9/lto-wrapper

OFFLOAD_TARGET_NAMES=nvptx-none

OFFLOAD_TARGET_DEFAULT=1

Target: x86_64-redhat-linux

Configured with: ../configure --enable-bootstrap --enable-languages=c,c++,fortran,objc,obj-c++,ada,go,d,lto --prefix=/usr --mandir=/usr/share/man --infodir=/usr/share/info --with-bugurl=http://bugzilla.redhat.com/bugzilla --enable-shared --enable-threads=posix --enable-checking=release --enable-multilib --with-system-zlib --enable-__cxa_atexit --disable-libunwind-exceptions --enable-gnu-unique-object --enable-linker-build-id --with-gcc-major-version-only --with-linker-hash-style=gnu --enable-plugin --enable-initfini-array --with-isl --enable-offload-targets=nvptx-none --without-cuda-driver --enable-gnu-indirect-function --enable-cet --with-tune=generic --with-arch_32=i686 --build=x86_64-redhat-linux

Thread model: posix

gcc version 9.0.1 20190328 (Red Hat 9.0.1-0.12) (GCC)

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18991

Differential Revision: D14821838

Pulled By: ezyang

fbshipit-source-id: 7eb3a854a1a831f6fda8ed7ad089746230b529d7

Summary:

Our AVX2 routines use functions such as _mm256_extract_epi64

that do not exist on 32 bit systems even when they have AVX2.

This disables AVX2 when _mm256_extract_epi64 does not exist.

This fixes the "local" part of #17901 (except disabling FBGEMM),

but there also is sleef to be updated and NNPACK to be fixed,

see the bug report for further discussion.
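The kind of check described here can be sketched as a try-compile probe. The snippet below is a hedged Python stand-in for the cmake-time check, not the code in this commit: the function name `compiler_supports_avx2_extract`, the default compiler name `cc`, and the embedded C source are assumptions made for illustration. Note that the test source passes an `__m256i` to `_mm256_extract_epi64`.

```python
import os
import shutil
import subprocess
import tempfile

# Hypothetical probe source: uses an integer vector (__m256i), which is the
# argument type _mm256_extract_epi64 expects.
TEST_SOURCE = """
#include <immintrin.h>
int main(void) {
  __m256i x = _mm256_set1_epi64x(1);
  return (int)_mm256_extract_epi64(x, 0);
}
"""


def compiler_supports_avx2_extract(cc="cc"):
    """Return True if `cc -mavx2` can compile the probe source."""
    if shutil.which(cc) is None:
        return False  # no such compiler on PATH
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "test.c")
        obj = os.path.join(tmp, "test.o")
        with open(src, "w") as f:
            f.write(TEST_SOURCE)
        try:
            result = subprocess.run(
                [cc, "-mavx2", "-c", src, "-o", obj],
                stdout=subprocess.DEVNULL,
                stderr=subprocess.DEVNULL,
                timeout=60,
            )
        except (OSError, subprocess.TimeoutExpired):
            return False
        return result.returncode == 0


if __name__ == "__main__":
    print("AVX2 extract usable:", compiler_supports_avx2_extract())
```

A build script could use the result to decide whether to enable the AVX2 code paths at all.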

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17915

Differential Revision: D14437338

Pulled By: soumith

fbshipit-source-id: d4ef7e0801b5d1222a855a38ec207dd88b4680da

Summary:

The requested changes support building PyTorch 1.0 on the Jetson Xavier with OpenBLAS. Jetson Xavier with Jetpack 3.3 has generic LAPACK installed. To pick up the CUDA accelerated BLAS/LAPACK, I had to build OpenBLAS and build/link PyTorch from source. Otherwise, I got a runtime error indicating that the LAPACK routines were not CUDA enabled.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15660

Differential Revision: D13571324

Pulled By: soumith

fbshipit-source-id: 9b148d081d6e7fa7e1824dfdd93283c67f69e683

Summary:

Upgrade MKL-DNN to 0.17 and build MKL-DNN statically to fix the potential build error caused by an old mkldnn version on the host system.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15504

Differential Revision: D13547885

Pulled By: soumith

fbshipit-source-id: 46f790a3d9289c1e153e51c62be17c5206ea8f9a

Summary:

The motivation of this PR is to force mkldnn to use the same omp version as the caffe2 framework.

Meanwhile, do not change other assumptions within mkldnn.

Previously, MKL_cmake_included was set in caffe2 in order to disable omp seeking in mkldnn.

But with that change, mkldnn has no chance to adapt to the mkl found by caffe2.

As a result, some mkl build flags will not be set in mkldnn.

For example, USE_MKL, USE_CBLAS, etc.

In this PR, we force-set MKLIOMP5LIB for mkldnn according to caffe2, and pass the mkl root path to mkldnn in MKLROOT. Then, mkldnn is built as expected.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13449

Differential Revision: D12899504

Pulled By: yinghai

fbshipit-source-id: 22a196bd00b4ef0a11d350a32c049304613edf52

Summary:

Performance oriented code will use AVX/AVX2, so we don't need SSE specific code anymore. This will also reduce the probability of running into an error on legacy CPUs.

On top of this convolve is covered by modern libraries such as MKLDNN, which are much more performant and which we now build against by default (even for builds from source).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/12109

Differential Revision: D10055134

Pulled By: colesbury

fbshipit-source-id: 789b8a34d5936d9c144bcde410c30f7eb1c776fa

Summary:

This fixes a cmake-time compilation error.

When we changed the script to build Caffe2 with mkldnn, some cmake-time compilation support checks (such as those in libsleef) failed because CMAKE_REQUIRED_LIBRARIES was set incorrectly. It is a global setting that can interfere with cmake-time compilation checks if it is not cleaned up properly. FindBLAS.cmake and FindLAPACK.cmake did not clear this variable, which caused libsleef.so to be built incorrectly.
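The underlying pattern, a global setting that must be restored after a check, can be sketched in Python as an analogy. This is not the CMake code from the PR; the `settings` dictionary and the `required_libraries` helper are hypothetical stand-ins for the global variable and for the save/restore discipline a find module should follow.

```python
from contextlib import contextmanager

# Stand-in for CMake's global variable state.
settings = {"CMAKE_REQUIRED_LIBRARIES": []}


@contextmanager
def required_libraries(libs):
    """Temporarily extend the global list, restoring it afterwards."""
    saved = list(settings["CMAKE_REQUIRED_LIBRARIES"])
    settings["CMAKE_REQUIRED_LIBRARIES"] = saved + list(libs)
    try:
        yield
    finally:
        # Always restore, even if the check inside raised an error.
        settings["CMAKE_REQUIRED_LIBRARIES"] = saved


if __name__ == "__main__":
    with required_libraries(["blas"]):
        print("during check:", settings["CMAKE_REQUIRED_LIBRARIES"])
    print("after check:", settings["CMAKE_REQUIRED_LIBRARIES"])
```

Without the restore step, later checks in unrelated modules would silently link against the leftover libraries, which is the failure mode described above.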

yinghai gujinghui

Pull Request resolved: https://github.com/pytorch/pytorch/pull/12195

Differential Revision: D10159314

Pulled By: yinghai

fbshipit-source-id: 04908738f7d005579605b9c2a58d54f035d3baf4

Summary:

This PR establishes a baseline so that we can build IDEEP ops in the new workflow. From this baseline, we need to

- Merge the CMakefile of MKLDNN from caffe2 and Pytorch

- Get rid of `USE_MKL=ON`.

Build command from now on:

```

EXTRA_CAFFE2_CMAKE_FLAGS="-DUSE_MKL=ON -DINTEL_COMPILER_DIR=/opt/IntelComposerXE/2017.0.098" python setup.py build_deps

```

gujinghui

Pull Request resolved: https://github.com/pytorch/pytorch/pull/12026

Differential Revision: D10041199

Pulled By: yinghai

fbshipit-source-id: b7310bd84a494ac899d8e25da368b63feed4eeaf

Summary:

this is a fix that's needed for building extensions with a

pre-packaged pytorch. Consider the scenario where

(1) pytorch is compiled and packaged on machine A

(2) the package is downloaded and installed on machine B

(3) an extension is compiled on machine B, using the downloaded package

Before this patch, stage (1) would embed absolute paths to the system

installation of mkl into the generated Caffe2Config.cmake, leading to

failures in stage (3) if mkl was not at the same location on B as on

A. After this patch, only a reference to the wrapper library is

embedded, which is re-resolved on machine B.

We are already using a similar approach for cuda.

Testing: built a package on jenkins, downloaded locally and compiled an extension. Works with this patch, fails without.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/11298

Differential Revision: D9683150

Pulled By: anderspapitto

fbshipit-source-id: 06a80c3cd2966860ce04f76143b358de15f94aa4

Summary:

* first integration of MIOpen for batch norm and conv on ROCm

* workaround a ROCm compiler bug exposed by elementwise_kernel through explicit capture of variables in the densest packing

* workaround a ROCm compiler bug exposed by having `extern "C" __host__` as a definition and just `__host__` in the implementation through the hipify script

* use fabs() in accordance with C++11 for double absolute, not ::abs() which is integer-only on ROCm

* enable test_sparse set on CI, skip tests that don't work currently on ROCm

* enable more tests in test_optim after the elementwise_bug got fixed

* enable more tests in test_dataloader

* improvements to hipification and ROCm build

With this, resnet18 on CIFAR data trains without hang or crash in our tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/10612

Reviewed By: bddppq

Differential Revision: D9423872

Pulled By: ezyang

fbshipit-source-id: 22c0c985217d65c593f35762b3eb16969ad96bdd

Summary:

I am using this to test a CI job to upload pip packages, and so am using the Caffe2 namespace to avoid affecting the existing pytorch packages.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/9544

Reviewed By: orionr

Differential Revision: D9267111

Pulled By: pjh5

fbshipit-source-id: a68162ed29d2eb9ce353d8435ccb5f16c3b0b894

Summary:

This PR does 3 things

- Reorder the search order of `intel_lp64` and `gf_lp64` as the first one is more essential and should have high priority.

- Avoid repetitive searching of MKL libraries in `ideep` and `mkldnn` submodule if we already found those in `FindMKL`

- Avoid adding more MKL dependencies to IDEEP if MKL is also found.

TODO: provide an option for the user to choose iomp or gomp.

Closes https://github.com/pytorch/pytorch/pull/8955

Reviewed By: bddppq

Differential Revision: D8666960

Pulled By: yinghai

fbshipit-source-id: 669d3142204a8b47c19a900444246fc44a139012

If CUDNN_INCLUDE_DIR, CUDNN_LIB_DIR, and/or CUDNN_ROOT_DIR were set,

but USE_CUDNN was not explicitly set, the code in

cmake/Dependencies.cmake would set USE_CUDNN=OFF even though it could

be found. This caused an issue in ATen, where it includes its CuDNN

bindings if the variable CUDNN_FOUND is set. This was the case,

because the find_package call in cmake/public/cuda.cmake searches for

CuDNN and ends up finding it. The net result is that ATen tried to

compile CuDNN bits, but the caffe2::cudnn target is never defined let

alone added as dependency, and the build fails on not being able to

find the header cudnn.h.

This change does two things:

1) Restore CuDNN autodetection by setting USE_CUDNN=ON if it is found.

2) Remove obsolete FindCuDNN.cmake module. This functionality now

lives in cmake/public/cuda.cmake.

* [c10d] Process Group NCCL implementation

* Addressed comments

* Added one missing return and clang format again

* Use cmake/Modules for everything and fix gloo build

* Fixed compiler warnings

* Deleted duplicated FindNCCL

* Have PyTorch depend on minimal libcaffe2.so instead of libATen.so

* Build ATen tests as a part of Caffe2 build

* Hopefully cufft and nvcc fPIC fixes

* Make ATen install components optional

* Add tests back for ATen and fix TH build

* Fixes for test_install.sh script

* Fixes for cpp_build/build_all.sh

* Fixes for aten/tools/run_tests.sh

* Switch ATen cmake calls to USE_CUDA instead of NO_CUDA

* Attempt at fix for aten/tools/run_tests.sh

* Fix typo in last commit

* Fix valgrind call after pushd

* Be forgiving about USE_CUDA disable like PyTorch

* More fixes on the install side

* Link all libcaffe2 during test run

* Make cuDNN optional for ATen right now

* Potential fix for non-CUDA builds

* Use NCCL_ROOT_DIR environment variable

* Pass -fPIC through nvcc to base compiler/linker

* Remove THCUNN.h requirement for libtorch gen

* Add Mac test for -Wmaybe-uninitialized

* Potential Windows and Mac fixes

* Move MSVC target props to shared function

* Disable cpp_build/libtorch tests on Mac

* Disable sleef for Windows builds

* Move protos under BUILD_CAFFE2

* Remove space from linker flags passed with -Wl

* Remove ATen from Caffe2 dep libs since directly included

* Potential Windows fixes

* Preserve options while sleef builds

* Force BUILD_SHARED_LIBS flag for Caffe2 builds

* Set DYLD_LIBRARY_PATH and LD_LIBRARY_PATH for Mac testing

* Pass TORCH_CUDA_ARCH_LIST directly in cuda.cmake

* Fixes for the last two changes

* Potential fix for Mac build failure

* Switch Caffe2 to build_caffe2 dir to not conflict

* Cleanup FindMKL.cmake

* Another attempt at Mac cpp_build fix

* Clear cpp-build directory for Mac builds

* Disable test in Mac build/test to match cmake

* [bootcamp] Improve "Shape" operator to support axes specification

To improve .shape operator of Caffe2 to support x.shape(tensor, axes), which takes an optional int array "axes" as input. For example, x.shape(tensor, [1, 0]) will return the dimension for axis 1 and 0 following the specified order. For current version, "axes" input allows duplications and can have arbitrary length.
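The described semantics can be sketched in plain Python. This is only an illustration of the behavior stated above, not the Caffe2 operator; the function name `shape_with_axes` is hypothetical, and the handling of negative or out-of-range axes is left unspecified here.

```python
def shape_with_axes(dims, axes=None):
    """Return the full shape, or only the dimensions selected by `axes`.

    `axes` may contain duplicates and may have any length; the result
    follows the order in which the axes are given.
    """
    if axes is None:
        return list(dims)
    return [dims[axis] for axis in axes]


if __name__ == "__main__":
    dims = (2, 3, 4)  # shape of some 3-D tensor
    print(shape_with_axes(dims))
    print(shape_with_axes(dims, [1, 0]))
    print(shape_with_axes(dims, [0, 0, 2]))
```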

* Back out "Add barrier net that runs before training nets"

Original commit changeset: b373fdc9c30f. Need additional changes to some callers to support barrier failures.

* Change warning to verbose log to reduce log spam

The `LOG(WARNING)` was a bit spammy for regular use so lets just make it a `VLOG`.

* Extract the shared code from different caffe2_benchmark binaries

The OSS benchmark and Internal benchmark will share most functions in the benchmark.

* Support MFR in sequence training

As titled.

* Make knowledge distillation work with using logged prediction feature as teacher label.

1) Add loading raw dense feature as teacher label.

2) Optional calibration function for teacher label

3) Add teacher label into generic unit test

4) Deprecated TTSN workflow version using feature_options to config teacher label

* [C2/CUDA]: unjoined cross entropy sigmoid

as desc

* Add async_scheduling executor into deferrable_net_exec_test

Add async_scheduling into tests and fix some exception cases

* Fix Event disabled error

When disabling event in RNN ops make sure we don't call Finish on disabled

event from op's RunAsync

* cuda ensure cpu output op can handle both TensorCPU and TensorCUDA

as desc.

* [C2 Core] Infer input device option in C2 hypothesis_test checkers

Improve how we default input blob device options.

Previously it defaulted to the device where the op lives, but that is not necessarily correct.

For example:

CopyCPUToGPU

* [C2 Op]SplitByLengthsOp CPU/GPU implementation

[C2 Op]SplitByLengthsOp CPU/GPU implementation

* fix undefined symbol error

not sure why we're getting undefined symbol even with link_whole = True

Need to figure out why but need this workaround for now

* Add tools in DAIPlayground platform to help debugging models

Add additional tools to allow Playground to override individual methods defined in AnyExp. This will allow users to create modules that specifically change certain default method behavior. An example included in this diff is deactivating the test model and checkpointing. When debugging any model problems, switching off components helps me quickly narrow down the location of the bug. The technique is extensively used in task T27038712 (Steady memory increase in EDPM, eventually resulting in gloo/cuda.cu:34: out of memory)

* add shape and type inference for int8 conversion operator

* Fix flaky test for group_norm

Fix flaky test for group_norm

* Fix group_norm_op_test flaky

Fix group_norm_op_test flaky

* Implementation of composite learning rate policy

In many state-of-the-art deep learning works, people use a simple trick to

schedule the learning rate: use a fixed learning rate until error plateaus

and then switch to a different fixed learning rate, and so on. In this diff,

we implemented a simple version of the composite learning rate. The user gives

a set of learning rate policies and corresponding iteration nums, and the

optimizer will change the learning rate policy based on the number of iterations so far.

For example, the user gives two learning rate policies, FixedLearningRate

and PolyLearningRate, with an iteration number of 1k. Then for the first 1k iterations,

we use FixedLearningRate. For the following iterations, we use PolyLearningRate.
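The switching logic can be sketched as follows. This is a minimal Python illustration, not the Caffe2 implementation: the helper names, the polynomial decay formula `base * (1 - it / max_iter) ** power`, the use of `None` for an open-ended last policy, and the choice to pass the global iteration count to each sub-policy are all assumptions.

```python
def fixed_lr(base):
    """Policy that always returns the same learning rate."""
    return lambda it: base


def poly_lr(base, power, max_iter):
    """Assumed polynomial decay: base * (1 - it / max_iter) ** power."""
    return lambda it: base * (1.0 - it / max_iter) ** power


def composite_lr(schedule):
    """schedule: list of (num_iter, policy); num_iter=None means 'forever'."""
    def lr(it):
        start = 0
        for num_iter, policy in schedule:
            if num_iter is None or it < start + num_iter:
                return policy(it)
            start += num_iter
        # Past the end of every bounded segment: keep using the last policy.
        return schedule[-1][1](it)
    return lr


if __name__ == "__main__":
    lr = composite_lr([
        (1000, fixed_lr(0.1)),                              # first 1k iterations
        (None, poly_lr(0.1, power=1.0, max_iter=10000)),    # afterwards
    ])
    for it in (0, 999, 1000, 5000):
        print(it, lr(it))
```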

* Split two use cases of CachedReader into two classes, DBFileReader and CachedReader

# Use Cases:

1). input: DB file -> output: DatasetReader.

Use DBFileReader.

2). input: Reader -> build cache DB file -> output: DatasetReader.

Use CachedReader.

# Changes to CachedReader:

1). Move db_path to the constructor.

Because in the mock reader, the cache will always be built ahead.

# Changes to tests:

1). Make a separate TestCase class for CachedReader and DBFileReader.

2). Make it possible to add more test functions by adding setUp, tearDown and _make_temp_path.

3). Make delete db_path more general. `db_path` could be a file for `log_file_db`, but could also be a directory for `leveldb`.

* Back out "On Mobile phones, call GlobalInit with no arguments in predictor in case we need to perform initialization"

Original commit changeset: 4489c6133f11

* Fix LARS bug

Fixed a bug in the LARS implementation which caused all subsequent blobs not using LARS to have the LARS learning rate multiplier applied to them.

* [tum] support sparse init & add uniformFill option

as title

* Propagate exception for async nets

Capture the exception when an exception is thrown in async nets and re-throw it after wait(). This allows exceptions to be propagated up to the caller.

This diff was a part of D7752068. We split the diff so that C2 core files changes are in a separate diff.
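The capture-and-rethrow pattern can be sketched with Python threads. This is an analogy for the behavior described above, not the C2 core code; the class `AsyncNet` and its methods `run_async` and `wait` are hypothetical names.

```python
import threading


class AsyncNet:
    """Runs a callable on a worker thread and re-raises its exception in wait()."""

    def __init__(self, fn):
        self._fn = fn
        self._exc = None
        self._thread = None

    def run_async(self):
        def target():
            try:
                self._fn()
            except Exception as exc:  # capture instead of losing it in the worker
                self._exc = exc

        self._thread = threading.Thread(target=target)
        self._thread.start()

    def wait(self):
        self._thread.join()
        if self._exc is not None:
            exc, self._exc = self._exc, None
            raise exc  # propagate to the caller of wait()


if __name__ == "__main__":
    def failing_op():
        raise ValueError("op failed")

    net = AsyncNet(failing_op)
    net.run_async()
    try:
        net.wait()
    except ValueError as err:
        print("caller saw:", err)
```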

* Automatic update of fbcode/onnx to 69894f207dfcd72d1e70497d387201cec327efbc

Previous import was 403ccfbd0161c38f0834413d790bad0874afbf9a

Included changes:

- **[69894f2](https://github.com/onnx/onnx/commit/69894f2)**: Use op schema.all tensor types in random like definitions (#865) <Scott McKay>

- **[b9d6b90](https://github.com/onnx/onnx/commit/b9d6b90)**: Clarify random like operators (#846) <Scott McKay>

- **[fc6b5fb](https://github.com/onnx/onnx/commit/fc6b5fb)**: Refactor shape inference implementation (#855) <anderspapitto>

- **[b7d8dc8](https://github.com/onnx/onnx/commit/b7d8dc8)**: fix cmake warning message (#863) <Eric S. Yu>

- **[f585c5d](https://github.com/onnx/onnx/commit/f585c5d)**: add pytorch-operator test for tile (#831) <Wenhao Hu>

- **[993fe70](https://github.com/onnx/onnx/commit/993fe70)**: add install step (#832) <Eric S. Yu>

- **[68bc26c](https://github.com/onnx/onnx/commit/68bc26c)**: add type inference for traditional ml ops except classifier ops. (#857) <Ke Zhang>

- **[9cc0cda](https://github.com/onnx/onnx/commit/9cc0cda)**: fix string representation of scalar types (#858) <G. Ramalingam>

- **[1078925](https://github.com/onnx/onnx/commit/1078925)**: fix y in pow test case to scalar (#852) <Wenhao Hu>

- **[c66fb6f](https://github.com/onnx/onnx/commit/c66fb6f)**: Add some math function shape inference (#845) <anderspapitto>

- **[ff667d1](https://github.com/onnx/onnx/commit/ff667d1)**: Refactor return type and docs for ONNXIFI_BACKEND_DIRECTX_ID (#853) <Marat Dukhan>

- **[11c6876](https://github.com/onnx/onnx/commit/11c6876)**: clear initializer names when clear initializer (#849) <Wenhao Hu>

- **[73c34ae](https://github.com/onnx/onnx/commit/73c34ae)**: Clarify FeatureVectorizer description. (#843) <Scott McKay>

- **[1befb9b](https://github.com/onnx/onnx/commit/1befb9b)**: Remove useless text in docs (#850) <Lu Fang>

- **[e84788f](https://github.com/onnx/onnx/commit/e84788f)**: Fix SELU attributes' default values (#839) <Lu Fang>

- **[ebac046](https://github.com/onnx/onnx/commit/ebac046)**: Add tile test case (#823) <Wenhao Hu>

- **[8b7a925](https://github.com/onnx/onnx/commit/8b7a925)**: a few more shape inference functions (#772) <anderspapitto>

- **[9718f42](https://github.com/onnx/onnx/commit/9718f42)**: Make the coefficient non optional for LinearClassifier (#836) <Jaliya Ekanayake>

- **[ef083d0](https://github.com/onnx/onnx/commit/ef083d0)**: Add save_tensor and load_tensor functions for Protos (#770) <Lu Fang>

- **[45ceb55](https://github.com/onnx/onnx/commit/45ceb55)**: Check if CMAKE_BUILD_TYPE set before project(). (#812) <Sergii Dymchenko>

- **[4b3d2b0](https://github.com/onnx/onnx/commit/4b3d2b0)**: [WIP] reenable shape inference tests (#834) <anderspapitto>

- **[22d17ee](https://github.com/onnx/onnx/commit/22d17ee)**: RNN tests: LSTM, GRU, SimpleRNN (#739) <Peyman Manikashani>

- **[de65b95](https://github.com/onnx/onnx/commit/de65b95)**: dimension denotation (#443) <Tian Jin>

- **[eccc76e](https://github.com/onnx/onnx/commit/eccc76e)**: fix field number issue in onnx operator proto and enable its build (#829) <Ke Zhang>

- **[d582beb](https://github.com/onnx/onnx/commit/d582beb)**: disable shape inference test to unbreak ci (#830) <Lu Fang>

- **[485b787](https://github.com/onnx/onnx/commit/485b787)**: function proto for composite op. (#802) <Ke Zhang>

- **[cd58928](https://github.com/onnx/onnx/commit/cd58928)**: specify defaults for attributes of Affine op (#820) <G. Ramalingam>

- **[7ee2cf9](https://github.com/onnx/onnx/commit/7ee2cf9)**: merge the dummy backend back into the main one (#743) <anderspapitto>

- **[1c03a5a](https://github.com/onnx/onnx/commit/1c03a5a)**: [Proposal] ONNX Interface for Framework Integration (previously ONNX Backend API) header and docs (#551) <Marat Dukhan>

- **[3769a98](https://github.com/onnx/onnx/commit/3769a98)**: Rename real model test case from VGG-16 to ZFNet (#821) <Lu Fang>

* [C2]ReluN Op

relu n op.

tf reference: https://www.tensorflow.org/api_docs/python/tf/nn/relu6

* Call destructor when assigning a blob value

* Add executor overrides

Add executor overrides flag to enable migration to async_scheduling executor

* Add barrier net that runs before training nets - attempt #2

Add a synchronization barrier net that is run before training nets. With this net, shards that are faster will wait for other shards before starting training. This reduces the chance of the faster shards timing out during GLOO AllReduce.

Removed explicit data_parallel_model.py.synchronize call in holmes workflow.

This change was landed previously but caused errors for some EDPM workflows - See https://fb.facebook.com/groups/1426530000692545/permalink/1906766366002237/ - because EDPM assumes any call to CreateOrCloneCommonWorld and Gloo ops are wrapped in exception handlers but in this case exception thrown in the barrier init net is not handled.

To address this issue, we add _CreateOrCloneCommonWorld to the param_init_net instead of a new barrier init net. Since errors in the param_init_net run are handled gracefully with a re-rendezvous, it should fix the problem.

* Handle empty nets in async_scheduling

Make sure we don't get stuck on empty nets

* use CUDA_ARCH for conditional compile

* [C2 fix] infer function for ensure_cpu_output_op

* Update group_norm test to reduce flaky test

* Fix lr_multiplier for GPU

* Remove OpenGL code from benchmark

* Make it possible to print plot in the ipython notebook

* Create the blob if the blob is not specified in the init net

* Do not use gf library for MKL. Even after I install the entire MKL library it is still not found. After removing it, the MKL code can still run

Summary:

Historically, for interface dependent libraries (glog, gflags and protobuf), exposing them in Caffe2Config.cmake is usually difficult.

New versions of glog and gflags ship with new-style cmake targets, so one does not need to use variables. New-style targets also make it easier for people to depend on them in installed config files.

This diff modernizes the gflags library, and still provides a fallback path if the installed gflags does not have cmake config files coming with it.

It does change one behavior of the build process though - when one specifies -DUSE_GFLAGS=ON but gflags cannot be found, the old script automatically turns it off but the new script crashes, forcing the user to specify USE_GFLAGS=OFF.

Closes https://github.com/caffe2/caffe2/pull/1819

Differential Revision: D6826604

Pulled By: Yangqing

fbshipit-source-id: 210f3926f291c8bfeb24eb9671e5adfcbf8cf7fe

Summary:

Latest version of Gloo takes care of MPI_Init/MPI_Finalize for us, so

this commit removes handling that from caffe2/contrib/gloo. It also

imports CMake NCCL module changes from Gloo to stay consistent and

allow setting NCCL_INCLUDE_DIR and NCCL_LIB_DIR separately.

Closes https://github.com/caffe2/caffe2/pull/1295

Reviewed By: dzhulgakov

Differential Revision: D5979364

Pulled By: pietern

fbshipit-source-id: 794b00b0a445317c30a13cc8f0f4dc38e590cc77

Summary:

Here is the buggy behavior which this change fixes:

* On the first configure with CMake, a system-wide benchmark installation is not found, so we use the version in `third_party/` ([see here](https://github.com/caffe2/caffe2/blob/v0.8.1/cmake/Dependencies.cmake#L98-L100))

* On installation, the benchmark sub-project installs its headers to `CMAKE_INSTALL_PREFIX` ([see here](https://github.com/google/benchmark/blob/4bf28e611b/src/CMakeLists.txt#L41-L44))

* On a rebuild, CMake searches the system again for a benchmark installation (see https://github.com/caffe2/caffe2/issues/916 for details on why the first search is not cached)

* CMake includes `CMAKE_INSTALL_PREFIX` when searching the system ([docs](https://cmake.org/cmake/help/v3.0/variable/CMAKE_SYSTEM_PREFIX_PATH.html))

* Voila, a "system" installation of benchmark is found at `CMAKE_INSTALL_PREFIX`

* On a rebuild, `-isystem $CMAKE_INSTALL_PREFIX/include` is added to every build target ([see here](https://github.com/caffe2/caffe2/blob/v0.8.1/cmake/Dependencies.cmake#L97)). e.g:

cd /caffe2/build/caffe2/binaries && ccache /usr/bin/c++ -I/caffe2/build -isystem /caffe2/third_party/googletest/googletest/include -isystem /caffe2/install/include -isystem /usr/include/opencv -isystem /caffe2/third_party/eigen -isystem /usr/include/python2.7 -isystem /usr/lib/python2.7/dist-packages/numpy/core/include -isystem /caffe2/third_party/pybind11/include -isystem /usr/local/cuda/include -isystem /caffe2/third_party/cub -I/caffe2 -I/caffe2/build_host_protoc/include -fopenmp -std=c++11 -O2 -fPIC -Wno-narrowing -O3 -DNDEBUG -o CMakeFiles/split_db.dir/split_db.cc.o -c /caffe2/caffe2/binaries/split_db.cc

This causes two issues:

1. Since the headers and libraries at `CMAKE_INSTALL_PREFIX` have a later timestamp than the built files, an unnecessary rebuild is triggered

2. Out-dated headers from the install directory are used during compilation, which can lead to strange build errors (which can usually be fixed by `rm -rf`'ing the install directory)

Possible solutions:

* Stop searching the system for an install of benchmark, and always use the version in `third_party/`

* Cache the initial result of the system-wide search for benchmark, so we don't accidentally pick up the installed version later

* Hack CMake to stop looking for headers and libraries in the installation directory

This PR is an implementation of the first solution. Feel free to close this and fix the issue in another way if you like.

Closes https://github.com/caffe2/caffe2/pull/1112

Differential Revision: D5761750

Pulled By: Yangqing

fbshipit-source-id: 2240088994ffafdb6eedb3626d898b505a4ba564

Summary:

Use HINTS instead of PATHS for find_library so that you can specify

-DNCCL_ROOT_DIR and it will use this NCCL installation regardless of

what else is installed on your system. Also add a path hint to include

the default base path for NCCL 2 libraries.

Closes https://github.com/caffe2/caffe2/pull/1152

Reviewed By: Yangqing

Differential Revision: D5740053

Pulled By: pietern

fbshipit-source-id: 43f0908a63e8a9b90320dece0bbb558827433b48

Summary:

The PATHS suggestion to find_library is searched after everything

else. By using HINTS, it searches CUDNN_ROOT_DIR much earlier, avoiding

potential conflicts with other paths that have the CuDNN header.
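The difference in search order can be modeled with a short Python sketch. It is a deliberately simplified model, not CMake's implementation: the function `find_library_model` and its three directory groups are hypothetical, and the real command consults more locations than shown here.

```python
import os
import tempfile


def find_library_model(name, hints=(), system_dirs=(), paths=()):
    """Search hints first, then system directories, then paths (last)."""
    for directory in [*hints, *system_dirs, *paths]:
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as system, \
            tempfile.TemporaryDirectory() as custom:
        # The same library exists system-wide and in a user-chosen root.
        for d in (system, custom):
            open(os.path.join(d, "libcudnn.so"), "w").close()

        as_hint = find_library_model("libcudnn.so",
                                     hints=[custom], system_dirs=[system])
        as_path = find_library_model("libcudnn.so",
                                     system_dirs=[system], paths=[custom])
        print("HINTS picks custom root:", as_hint.startswith(custom))
        print("PATHS picks system copy:", as_path.startswith(system))
```

In this model the user-specified root only wins when it is passed as a hint, which matches the motivation given above.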

Closes https://github.com/caffe2/caffe2/pull/1122

Reviewed By: Yangqing

Differential Revision: D5701822

Pulled By: pietern

fbshipit-source-id: 3f15757701aff167e7ae2a3e8a4ccf5d96763a0c

Summary:

I successfully built caffe2 using MSVC 2015 and the Ninja Generator. I use vcpkg to build gflags, glog, lmdb and protobuf. Here is my build procedure:

1. Install vcpkg and set it up according to vcpkg docs

2. Install dependencies

```

$> vcpkg install gflags glog lmdb protobuf eigen3 --triplet x64-windows-static

```

3. Run CMake with this batch file

```Batch

setlocal

if NOT DEFINED VCPKG_DIR ( echo "Please define VCPKG_DIR" && exit /b 1 )

if NOT DEFINED CMAKE_BUILD_TYPE set CMAKE_BUILD_TYPE=Release

if NOT DEFINED BUILD_DIR set BUILD_DIR=build_%CMAKE_BUILD_TYPE%

if NOT DEFINED USE_CUDA set USE_CUDA=OFF

call "%VS140COMNTOOLS%\..\..\VC\vcvarsall.bat" amd64

if NOT EXIST %BUILD_DIR% (mkdir %BUILD_DIR%)

pushd %BUILD_DIR%

set CMAKE_GENERATOR=Ninja

set ZLIB_LIBRARY=%VCPKG_DIR%\installed\x64-windows-static\lib\zlib.lib

cmake -G"%CMAKE_GENERATOR%" ^

-DBUILD_SHARED_LIBS=OFF ^

-DCMAKE_VERBOSE_MAKEFILE=1 ^

-DBUILD_TEST=OFF ^

-DBUILD_SHARED_LIBS=OFF ^

-DCMAKE_BUILD_TYPE=%CMAKE_BUILD_TYPE% ^

-DUSE_CUDA=%USE_CUDA% ^

-DZLIB_LIBRARY:FILEPATH="%ZLIB_LIBRARY%" ^

-DVCPKG_TARGET_TRIPLET=x64-windows-static ^

-DVCPKG_APPLOCAL_DEPS:BOOL=OFF ^

-DCMAKE_TOOLCHAIN_FILE:FILEPATH=%VCPKG_DIR%\scripts\buildsystems\vcpkg.cmake ^

-DPROTOBUF_PROTOC_EXECUTABLE:FILEPATH=%VCPKG_DIR%\installed\x64-windows-static\tools\protoc.exe ^

..\

ninja

popd

endlocal

```

Closes https://github.com/caffe2/caffe2/pull/880

Differential Revision: D5497384

Pulled By: Yangqing

fbshipit-source-id: e0d81d3dbd3286ab925eddef0e6fbf99eb6375a5

Summary:

libpthreadpool is needed during the linking stage and is missing when user chooses to use an external nnpack installation (from system libraries).

Fixes GitHub issue #459.

Detailed discussion on [this comment](https://github.com/caffe2/caffe2/issues/459#issuecomment-308831547).

Closes https://github.com/caffe2/caffe2/pull/808

Differential Revision: D5430318

Pulled By: Yangqing

fbshipit-source-id: 5e10332fb01e54d8360bb929c1a82b0eef580bbb

Summary:

MKL on windows works with this change. Tested with MKL 2017 Update 3 (https://software.intel.com/en-us/articles/intel-math-kernel-library-intel-mkl-2017-release-notes).

Should fix #544.

With MKL 2017 Update 3, #514 should not happen either.

Note: I used Anaconda, which ships with its own MKL, so I had to make sure that the MKL 2017 Update 3 version was loaded by replacing the .dll in the `%AnacondaPrefix%\Library\bin` folder. Otherwise, numpy would load its own version and I would get all sorts of missing-procedure errors. Now that the same version is available through `conda`, this is easily fixed with `conda install mkl==2017.0.3`.

Closes https://github.com/caffe2/caffe2/pull/929

Differential Revision: D5429664

Pulled By: Yangqing

fbshipit-source-id: eaa150bab563ee4ce8348faee1624ac4af477513

Summary:

This PR changes the cmake of Caffe2 to look for system dependencies before resorting to the submodules in `third-party`. Only googletest should logically be in third-party; the other libraries should ideally be installed as system dependencies by the user. This PR adds system dependency checks for Gloo, CUB, pybind11, Eigen and benchmark, as these were missing from the cmake files.

In addition, it removes the execution of `git submodule update --init` in cmake. This seems like bad behavior to me: it should be up to the user to download submodules and manage the git repository.

Closes https://github.com/caffe2/caffe2/pull/382

Differential Revision: D5124123

Pulled By: Yangqing

fbshipit-source-id: cc34dda58ffec447874a89d01058721c02a52476

Summary: Add a simple video data layer that reads video data from frames or videos and outputs a 5D tensor. It also supports multiple labels. The current implementation is based on ffmpeg.
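
To make the "5D tensor" concrete, here is a minimal indexing sketch. The summary does not state the dimension order, so the N x C x T x H x W layout (batch, channel, frame, height, width) is an assumption, and `VideoShape` and `VideoOffset` are hypothetical helpers rather than names from the layer itself.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper, not part of Caffe2: the shape of a 5D video blob.
// ASSUMPTION: dimensions are ordered N x C x T x H x W
// (batch, channel, frame, height, width) and stored contiguously.
struct VideoShape {
  std::size_t n, c, t, h, w;

  // Total number of elements in the blob.
  std::size_t size() const { return n * c * t * h * w; }
};

// Flat offset of element (n, c, t, h, w) in a contiguous row-major buffer.
inline std::size_t VideoOffset(const VideoShape& s, std::size_t n,
                               std::size_t c, std::size_t t, std::size_t h,
                               std::size_t w) {
  assert(n < s.n && c < s.c && t < s.t && h < s.h && w < s.w);
  return (((n * s.c + c) * s.t + t) * s.h + h) * s.w + w;
}
```

Under this assumed layout, stepping one frame forward in time moves `h * w` elements through the buffer, so each clip's frames for a given channel are stored back to back.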

Differential Revision: D4801798

fbshipit-source-id: 46448e9c65fb055c2d71855447383a33ade0e444

Summary:

(Note: the previous revert was due to a race condition between D4657831 and D4659953 that I failed to catch.)

After this, we should have contbuild guarding the Windows build both with and without CUDA.

This includes a series of changes that are needed to make the Windows build work, specifically:

(1) Various flags that are needed in the cmake system, especially those dealing with /MD, /MT, CUDA, cuDNN, whole static linking, etc.

(2) Contbuild scripts based on AppVeyor.

(3) For the Windows build, note that one will need to use "cmake --build" to build so that the build type is consistent between configuration and the actual build. See scripts\build_windows.bat for details.

(4) In logging.h, ERROR is already defined by Windows. I don't have a good solution now, and as a result, LOG(ERROR) on Windows is going to be LOG(INFO).

(5) Variable length arrays are not supported by MSVC (and they are not part of the C++ standard). As a result, I replaced them with vectors.

(6) sched.h is not available on Windows, so akyrola's awesome simple async net might encounter some slowdown due to no affinity setting on Windows.

(7) MSVC has a bug where template calls inside a templated function call do not work well; this is a known issue that should be fixed in MSVC 2017. For now, this means changes to conv_op_impl.h and recurrent_net_op.h. No actual functionality is changed.

(8) std host function calls are not supported in CUDA8+MSVC, so I changed lp_pool (and maybe a few others) to use CUDA device functions.

(9) The current Scale and Axpy have heavy templating that does not work well with MSVC. As a result, I reverted azzolini's changes to the Scale and Axpy interface and moved the fixed-length versions to ScaleFixedSize and AxpyFixedSize.

(10) CUDA + MSVC does not deal with Eigen well, so I guarded all Eigen parts to only the non-CUDA part.

(11) In conclusion, it is fun but painful to deal with Visual C++.
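
As an illustration of item (5), the sketch below shows the general shape of such a replacement. `SumOfSquares` is a hypothetical function written for this note, not code from the Caffe2 sources; it only shows how a run-time-sized stack array (a GCC/Clang extension) can become a `std::vector` that MSVC accepts.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical example, not taken from Caffe2.
// With GCC or Clang one could declare the scratch buffer as
//   float scratch[n];   // variable length array: a compiler extension
// which MSVC rejects because n is not a compile-time constant.
inline float SumOfSquares(const float* data, std::size_t n) {
  assert(data != nullptr || n == 0);

  // Portable replacement: a vector whose size is chosen at run time.
  std::vector<float> scratch(n);
  for (std::size_t i = 0; i < n; ++i) {
    scratch[i] = data[i] * data[i];
  }
  return std::accumulate(scratch.begin(), scratch.end(), 0.0f);
}
```

The vector allocates on the heap, so in a hot loop it may be worth hoisting the buffer out of the function and reusing it; whether that matters for the operators touched here is not something this commit message states.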

Differential Revision: D4666745

fbshipit-source-id: 3c9035083067bdb19a16d9c345c1ce66b6a86600

Summary:

After this, we should have contbuild guarding the Windows build both with and without CUDA.

This includes a series of changes that are needed to make the Windows build work, specifically:

(1) Various flags that are needed in the cmake system, especially those dealing with /MD, /MT, CUDA, cuDNN, whole static linking, etc.

(2) Contbuild scripts based on AppVeyor.

(3) For the Windows build, note that one will need to use "cmake --build" to build so that the build type is consistent between configuration and the actual build. See scripts\build_windows.bat for details.

(4) In logging.h, ERROR is already defined by Windows. I don't have a good solution now, and as a result, LOG(ERROR) on Windows is going to be LOG(INFO).

(5) Variable length arrays are not supported by MSVC (and they are not part of the C++ standard). As a result, I replaced them with vectors.

(6) sched.h is not available on Windows, so akyrola's awesome simple async net might encounter some slowdown due to no affinity setting on Windows.

(7) MSVC has a

Closes https://github.com/caffe2/caffe2/pull/183

Reviewed By: ajtulloch

Differential Revision: D4657831

Pulled By: Yangqing

fbshipit-source-id: 070ded372ed78a7e3e3919fdffa1d337640f146e

Summary: The GitHub import didn't work, and the manual import lost some files.

Reviewed By: Yangqing

Differential Revision: D4408509

fbshipit-source-id: ec8edb8c02876410f0ef212bde6847a7ba327fe4