Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29762

Rename this API as discussed, since its use cases extend beyond only

model parallelism.

ghstack-source-id: 94020627

Test Plan: Unit tests pass

Differential Revision: D18491743

fbshipit-source-id: d07676bb14f072c64da0ce99ee818bcc582efc57

Summary:

Small fixes to rpc docs:

- mark as experimental and subject to change

- reference the distributed autograd design document in the PyTorch notes page.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29857

Differential Revision: D18526252

Pulled By: rohan-varma

fbshipit-source-id: e09757fa60a9f8fe9c76a868a418a1cd1c300eae

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29927

With the docs page now up, we can update the links in the design doc

to point to the docs page.

ghstack-source-id: 94055423

Test Plan: waitforbuildbot

Differential Revision: D18541878

fbshipit-source-id: f44702d9a8296ccc0a5d58d56c3b6dc8a822b520

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29175

Updates our docs to include a design doc for distributed autograd.

Currently, this doc only covers the FAST mode algorithm. The Smart mode

algorithm section just refers to the original RFC.

There is a section for Distributed Optimizer that we can complete once we've

finalized the API for the same.

ghstack-source-id: 93701129

Test Plan: look at docs.

Differential Revision: D18318949

fbshipit-source-id: 670ea1b6bb84692f07facee26946bbc6ce8c650c

Summary:

cumsum/cumprod perform their own respective operations over a desired dimension, but there is no reduction in dimensions in the process, i.e. they are not reduction operations and hence just keep the input names of the tensor on which the operation is performed
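
To illustrate the naming rule described above, here is a minimal plain-Python sketch. It does not use torch; the helper names `names_after_reduction` and `names_after_cumulative` are hypothetical and only model how dimension names would propagate.

```python
from typing import Optional, Tuple

Names = Tuple[Optional[str], ...]


def names_after_reduction(names: Names, dim: str) -> Names:
    """A reduction (e.g. sum over `dim`) drops the reduced dimension's name."""
    if dim not in names:
        raise ValueError(f"unknown dimension {dim!r}")
    return tuple(n for n in names if n != dim)


def names_after_cumulative(names: Names, dim: str) -> Names:
    """cumsum/cumprod keep every dimension, so the names pass through unchanged."""
    if dim not in names:
        raise ValueError(f"unknown dimension {dim!r}")
    return names


names = ("N", "C", "H", "W")
print(names_after_reduction(names, "C"))   # the "C" name is removed
print(names_after_cumulative(names, "C"))  # all four names are kept
```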

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29453

Differential Revision: D18455683

Pulled By: anjali411

fbshipit-source-id: 9e250d3077ff3d8f3405d20331f4b6ff05151a28

Summary:

Fixes https://github.com/pytorch/pytorch/issues/28658

I have added the link to the docs for `flatten_parameters`.

RNNBase is a superclass of the RNN, LSTM and GRU classes. Should I add a link to `flatten_parameters()` in those sections as well?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29196

Differential Revision: D18326815

Pulled By: ezyang

fbshipit-source-id: 4239019112e77753a0820aea95c981a2c868f5b0

Summary:

At the encouragement of Pyro developers and https://github.com/pytorch/pytorch/issues/13811, I have opened this PR to move the (2D) von Mises distribution upstream.

CC: fritzo neerajprad

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17168

Differential Revision: D18249048

Pulled By: ezyang

fbshipit-source-id: 3e6df9006c7b85da7c4f55307c5bfd54c2e254e6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27254

`MultiplicativeLR` consumes a function providing the multiplicative factor at each epoch. It mimics `LambdaLR` in its syntax.
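
As a rough sketch of the difference, the following plain-Python model contrasts the two update rules. It assumes the multiplicative factor is applied to the previous learning rate from the second epoch onward, whereas the `LambdaLR`-style rule scales the base learning rate directly. The function names are hypothetical and nothing here calls the real schedulers.

```python
from typing import Callable, List


def multiplicative_schedule(base_lr: float,
                            lr_lambda: Callable[[int], float],
                            epochs: int) -> List[float]:
    """lr[e] = lr[e - 1] * lr_lambda(e), starting from base_lr (assumed rule)."""
    lrs = [base_lr]
    for epoch in range(1, epochs):
        lrs.append(lrs[-1] * lr_lambda(epoch))
    return lrs


def lambda_schedule(base_lr: float,
                    lr_lambda: Callable[[int], float],
                    epochs: int) -> List[float]:
    """lr[e] = base_lr * lr_lambda(e): the factor never compounds."""
    return [base_lr * lr_lambda(epoch) for epoch in range(epochs)]


halve = lambda epoch: 0.5
print(multiplicative_schedule(1.0, halve, 4))  # factor compounds every epoch
print(lambda_schedule(1.0, halve, 4))          # factor applied to base_lr only
```

With a constant factor, the multiplicative rule decays geometrically while the lambda rule stays flat, which is the main reason to have both.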

Test Plan: Imported from OSS

Differential Revision: D17728088

Pulled By: vincentqb

fbshipit-source-id: 1c4a8e19a4f24c87b5efccda01630c8a970dc5c9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27927

This fixes

`WARNING: html_static_path entry '_images' does not exist`

by removing '_images' from conf.py. As far as I can tell, '_images' in

`html_static_path` is only necessary if images already exist in the

`_images` folder; otherwise, sphinx is able to auto-generate _images

into the build directory and populate it correctly.

Test Plan: - build and view the docs locally.

Differential Revision: D17915109

Pulled By: zou3519

fbshipit-source-id: ebcc1f331475f52c0ceadd3e97c3a4a0d606e14b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27850

Many of these are real problems in the documentation (i.e., link or

bullet point doesn't display correctly).

Test Plan: - built and viewed the documentation for each change locally.

Differential Revision: D17908123

Pulled By: zou3519

fbshipit-source-id: 65c92a352c89b90fb6b508c388b0874233a3817a

Summary:

People get confused with partial support otherwise: https://github.com/pytorch/pytorch/issues/27811#27729

Suggestions on where else to put warnings are welcome (probably in tutorials - cc SethHWeidman)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27829

Differential Revision: D17910931

Pulled By: dzhulgakov

fbshipit-source-id: 37a169a4bef01b94be59fe62a8f641c3ec5e9b7c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27782

Warnings show up when running `make html` to build documentation. All of

the warnings are very reasonable and point to bugs in our docs. This PR

attempts to fix most of those warnings.

In the future we will add something to the CI that asserts that there

are no warnings in our docs.

Test Plan: - build and view changes locally

Differential Revision: D17887067

Pulled By: zou3519

fbshipit-source-id: 6bf4d08764759133b20983d6cd7f5d27e5ee3166

Summary:

resolves issues:

https://github.com/pytorch/pytorch/issues/27703

Updates to index for v1.3.0

* add javasphinx to the required sphinx plugins

* Update "Package Reference" to "Python API"

* Add in torchaudio and torchtext reference links so they show up across all docs not just the main page

* Add "Other Languages" section, add in C++ docs, add in Javadocs

* Add link to XLA docs under Notes: http://pytorch.org/xla/

this includes changes to:

docs/source/conf.py

docs/source/index.rst

docs/source/nn.rst

docs/requirements.txt

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27721

Differential Revision: D17881973

Pulled By: jlin27

fbshipit-source-id: ccc1e9e4da17837ad99d25df997772613f76aea8

Summary:

- Update torch.rst to remove certain autofunction calls

- Add reference to Quantization Functions section in nn.rst

- Update javadocs for v1.3.0

- Update index.rst:

- Update "Package Reference" to "Python API"

- Add in torchaudio and torchtext reference links so they show up across all docs not just the main page

- Add "Other Languages" section, add in C++ docs, add in Javadocs

- Add link to XLA docs under Notes: http://pytorch.org/xla/

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27676

Differential Revision: D17850696

Pulled By: brianjo

fbshipit-source-id: 3de146f065222d1acd9a33aae3b543927a63532a

Summary:

This was written by Raghu, Jessica, Dmytro and myself.

This PR will accumulate additional changes (there are a few more things we need to add to this actual rst file). I'll probably add the related image files to this PR as well.

I'm breaking draft PR https://github.com/pytorch/pytorch/pull/27553 into more easily digestible pieces.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27559

Differential Revision: D17843414

Pulled By: gottbrath

fbshipit-source-id: 434689f255ac1449884acf81f10e0148d0d8d302

Summary:

Added Complex support with AVX to unary ops and binary ops.

I need to add nan propagation to minimum() and maximum() in the future.

In-tree changes to pytorch to support complex numbers are being submitted here.

Out-of-tree support for complex numbers is here: pytorch-cpu-strided-complex extension

Preliminary Benchmarks are here.

I tried rrii and riri and found that riri is better in most situations.

Divide is very slow because you can't reduce 1/(x+y)

Sqrt is also very slow.

Reciprocal could be sped up after I add conj()

Everything else is typically within 20% of the real number performance.

Questions:

Why does macOS not support MKL? #if AT_MKL_ENABLED() && !defined(__APPLE__) in vml.h. MKL does support some complex operations like Abs, so I was curious about trying it.

Is MKL just calling AVX?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26500

Differential Revision: D17835431

Pulled By: ezyang

fbshipit-source-id: 6746209168fbeb567af340c22bf34af28286bd54

Summary:

According to https://github.com/pytorch/pytorch/issues/27285, it seems we do not intend to use the shebang as an indication of Python version, so

we enable the EXE001 flake8 check.

For violations, we either remove shebang from non-executable Python scripts or grant them executable permission.
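
For reference, the condition being flagged can be sketched roughly as follows, assuming the check means "shebang present but file not executable" on a POSIX filesystem. The helper names are hypothetical; this is not the flake8 plugin's code.

```python
import os
import stat
import tempfile


def has_shebang(path: str) -> bool:
    """True if the file starts with the two bytes '#!'."""
    with open(path, "rb") as f:
        return f.read(2) == b"#!"


def is_executable(path: str) -> bool:
    """True if any of the user/group/other execute bits is set."""
    mode = os.stat(path).st_mode
    return bool(mode & (stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH))


def shebang_without_exec_bit(path: str) -> bool:
    """The situation this PR cleans up: shebang present, file not executable."""
    return has_shebang(path) and not is_executable(path)


with tempfile.TemporaryDirectory() as tmp:
    script = os.path.join(tmp, "tool.py")
    with open(script, "w") as f:
        f.write("#!/usr/bin/env python3\nprint('hi')\n")
    os.chmod(script, 0o644)
    flagged_before = shebang_without_exec_bit(script)
    os.chmod(script, 0o755)  # one of the two fixes: grant executable permission
    flagged_after = shebang_without_exec_bit(script)

print(flagged_before, flagged_after)
```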

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27560

Differential Revision: D17831782

Pulled By: ezyang

fbshipit-source-id: 6282fd3617b25676a6d959af0d318faf05c09b26

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27173

`docs/source/named_tensor.rst` is the entry point; most users will land

either here or the named tensor tutorial when looking to use named

tensors. We should strive to make this as readable, concise, and understandable

as possible.

`docs/source/name_inference.rst` lists all of the name inference rules.

It should be clear but it's hard to make it concise.

Please let me know if anything doesn't make sense and please propose

alternative wordings and/or restructuring to improve the documentation.

This should ultimately get cherry-picked into the 1.3 branch as one

monolithic commit so it would be good to get all necessary changes made

in this PR and not have any follow ups.

Test Plan: - built and reviewed locally with `cd docs/ && make html`.

Differential Revision: D17763046

Pulled By: zou3519

fbshipit-source-id: c7872184fc4b189d405b18dad77cad6899ae1522

Summary:

Adds comprehensive memory instrumentation to the CUDA caching memory allocator.

# Counters

Added comprehensive instrumentation for the following stats:

- Allocation requests (`allocation`)

- Allocated memory (`allocated_bytes`)

- Reserved segments from cudaMalloc (`segment`)

- Reserved memory (`reserved_bytes`)

- Active memory blocks (`active`)

- Active memory (`active_bytes`)

- Inactive, non-releasable blocks (`inactive_split`)

- Inactive, non-releasable memory (`inactive_split_bytes`)

- Number of failed cudaMalloc calls that result in a cache flush and retry (`cuda_malloc_retries`)

- Number of OOMs (`num_ooms`)

Except for the last two, these stats are segmented between all memory, large blocks, and small blocks. Along with the current value of each stat, historical counts of allocs/frees as well as peak usage are tracked by the allocator.
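
The bookkeeping described above can be pictured with a small plain-Python sketch. The `Stat` class, the pool keys, and the 1 MiB small/large cutoff are assumptions made for illustration; the real counters live in the C++ allocator.

```python
from dataclasses import dataclass

SMALL_LIMIT = 1 << 20  # assumed small/large cutoff, for illustration only


@dataclass
class Stat:
    current: int = 0    # value right now
    peak: int = 0       # highest value seen so far
    allocated: int = 0  # historical total of increases
    freed: int = 0      # historical total of decreases

    def increase(self, amount: int) -> None:
        self.current += amount
        self.allocated += amount
        self.peak = max(self.peak, self.current)

    def decrease(self, amount: int) -> None:
        self.current -= amount
        self.freed += amount


# One Stat per segment: all memory, large blocks, small blocks.
stats = {pool: Stat() for pool in ("all", "large", "small")}


def pool_of(nbytes: int) -> str:
    return "small" if nbytes <= SMALL_LIMIT else "large"


def record_alloc(nbytes: int) -> None:
    for key in ("all", pool_of(nbytes)):
        stats[key].increase(nbytes)


def record_free(nbytes: int) -> None:
    for key in ("all", pool_of(nbytes)):
        stats[key].decrease(nbytes)


record_alloc(512)       # counted under "all" and "small"
record_alloc(2 << 20)   # counted under "all" and "large"
record_free(512)
print(stats["all"])
```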

# Snapshots

Added the capability to get a "memory snapshot" – that is, to generate a complete dump of the allocator block/segment state.

# Implementation: major changes

- Added `torch.cuda.memory_stats()` (and associated C++ changes) which returns all instrumented stats as a dictionary.

- Added `torch.cuda.snapshot()` (and associated C++ changes) which returns a complete dump of the allocator block/segment state as a list of segments.

- Added memory summary generator in `torch.cuda.memory_summary()` for ease of client access to the instrumentation stats. Potentially useful to dump when catching OOMs. Sample output here: https://pastebin.com/uKZjtupq

# Implementation: minor changes

- Add error-checking helper functions for Python dicts and lists in `torch/csrc/utils/`.

- Existing memory management functions in `torch.cuda` moved from `__init__.py` to `memory.py` and star-imported to the main CUDA module.

- Add various helper functions to `torch.cuda` to return individual items from `torch.cuda.memory_stats()`.

- `torch.cuda.reset_max_memory_cached()` and `torch.cuda.reset_max_memory_allocated()` are deprecated in favor of `reset_peak_stats`. It's a bit difficult to think of a case where only one of those stats should be reset, and IMO this makes the peak stats collectively more consistent.

- `torch.cuda.memory_cached()` and `torch.cuda.max_memory_cached()` are deprecated in favor of `*memory_reserved()`.

- Style (add access modifiers in the allocator class, random nit fixes, etc.)

# Testing

- Added consistency check for stats in `test_cuda.py`. This verifies that the data from `memory_stats()` is faithful to the data from `snapshot()`.

- Ran on various basic workflows (toy example, CIFAR)

# Performance

Running the following speed benchmark: https://pastebin.com/UNndQg50

- Before this PR: 45.98 microseconds per tensor creation

- After this PR: 46.65 microseconds per tensor creation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27361

Differential Revision: D17758747

Pulled By: jma127

fbshipit-source-id: 5a84e82d696c40c505646b9a1b4e0c3bba38aeb6

Summary:

10 lines of error context (on both sides) is overkill, especially now

that we have line numbers. With a compilation stack of a couple

functions, it becomes a pain to scroll to the top of the stack to see

the real error every time.

This also fixes class names in the compilation stack to a format of

`ClassName.method_name` instead of the fully qualified name

Old output

```

clip_boxes_to_image(Tensor boxes, (int, int) size) -> (Tensor):

Expected a value of type 'Tuple[int, int]' for argument 'size' but instead found type 'Tuple[int, int, int]'.

:

at /home/davidriazati/dev/vision/torchvision/models/detection/rpn.py:365:20

top_n_idx = self._get_top_n_idx(objectness, num_anchors_per_level)

batch_idx = torch.arange(num_images, device=device)[:, None]

objectness = objectness[batch_idx, top_n_idx]

levels = levels[batch_idx, top_n_idx]

proposals = proposals[batch_idx, top_n_idx]

final_boxes = []

final_scores = []

for boxes, scores, lvl, img_shape in zip(proposals, objectness, levels, image_shapes):

boxes = box_ops.clip_boxes_to_image(boxes, img_shape)

~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

keep = box_ops.remove_small_boxes(boxes, self.min_size)

boxes, scores, lvl = boxes[keep], scores[keep], lvl[keep]

# non-maximum suppression, independently done per level

keep = box_ops.batched_nms(boxes, scores, lvl, self.nms_thresh)

# keep only topk scoring predictions

keep = keep[:self.post_nms_top_n]

boxes, scores = boxes[keep], scores[keep]

final_boxes.append(boxes)

final_scores.append(scores)

'RegionProposalNetwork.filter_proposals' is being compiled since it was called from 'RegionProposalNetwork.forward'

at /home/davidriazati/dev/vision/torchvision/models/detection/rpn.py:446:8

num_images = len(anchors)

num_anchors_per_level = [o[0].numel() for o in objectness]

objectness, pred_bbox_deltas = \

concat_box_prediction_layers(objectness, pred_bbox_deltas)

# apply pred_bbox_deltas to anchors to obtain the decoded proposals

# note that we detach the deltas because Faster R-CNN do not backprop through

# the proposals

proposals = self.box_coder.decode(pred_bbox_deltas.detach(), anchors)

proposals = proposals.view(num_images, -1, 4)

boxes, scores = self.filter_proposals(proposals, objectness, images.image_sizes, num_anchors_per_level)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

losses = {}

if self.training:

assert targets is not None

labels, matched_gt_boxes = self.assign_targets_to_anchors(anchors, targets)

regression_targets = self.box_coder.encode(matched_gt_boxes, anchors)

loss_objectness, loss_rpn_box_reg = self.compute_loss(

objectness, pred_bbox_deltas, labels, regression_targets)

losses = {

'RegionProposalNetwork.forward' is being compiled since it was called from 'MaskRCNN.forward'

at /home/davidriazati/dev/vision/torchvision/models/detection/generalized_rcnn.py:53:8

"""

if self.training and targets is None:

raise ValueError("In training mode, targets should be passed")

original_image_sizes = [(img.shape[-2], img.shape[-3]) for img in images]

images, targets = self.transform(images, targets)

features = self.backbone(images.tensors)

if isinstance(features, torch.Tensor):

features = OrderedDict([(0, features)])

proposals, proposal_losses = self.rpn(images, features, targets)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

detections = self.transform.postprocess(detections, images.image_sizes, original_image_sizes)

losses = {}

losses.update(detector_losses)

losses.update(proposal_losses)

# TODO: multiple return types??

# if self.training:

```

New output

```

RuntimeError:

clip_boxes_to_image(Tensor boxes, (int, int) size) -> (Tensor):

Expected a value of type 'Tuple[int, int]' for argument 'size' but instead found type 'Tuple[int, int, int]'.

:

at /home/davidriazati/dev/vision/torchvision/models/detection/rpn.py:365:20

final_scores = []

for boxes, scores, lvl, img_shape in zip(proposals, objectness, levels, image_shapes):

boxes = box_ops.clip_boxes_to_image(boxes, img_shape)

~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

keep = box_ops.remove_small_boxes(boxes, self.min_size)

boxes, scores, lvl = boxes[keep], scores[keep], lvl[keep]

'RegionProposalNetwork.filter_proposals' is being compiled since it was called from 'RegionProposalNetwork.forward'

at /home/davidriazati/dev/vision/torchvision/models/detection/rpn.py:446:8

proposals = self.box_coder.decode(pred_bbox_deltas.detach(), anchors)

proposals = proposals.view(num_images, -1, 4)

boxes, scores = self.filter_proposals(proposals, objectness, images.image_sizes, num_anchors_per_level)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

losses = {}

'RegionProposalNetwork.forward' is being compiled since it was called from 'MaskRCNN.forward'

at /home/davidriazati/dev/vision/torchvision/models/detection/generalized_rcnn.py:53:8

if isinstance(features, torch.Tensor):

features = OrderedDict([(0, features)])

proposals, proposal_losses = self.rpn(images, features, targets)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

detections = self.transform.postprocess

```
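
The trimming itself amounts to something like the sketch below. `format_context` is a hypothetical helper, and the default of two context lines on each side is an assumption read off the sample output above, not a value taken from the implementation.

```python
from typing import List


def format_context(lines: List[str], line_no: int,
                   col_start: int, col_end: int, context: int = 2) -> str:
    """Show `context` lines around line_no (1-indexed) and underline a span."""
    first = max(1, line_no - context)
    last = min(len(lines), line_no + context)
    out = []
    for n in range(first, last + 1):
        out.append(lines[n - 1])
        if n == line_no:
            # Marker line placed directly under the offending expression.
            out.append(" " * col_start + "~" * (col_end - col_start) + " <--- HERE")
    return "\n".join(out)


source = ["a = 1", "b = 2", "c = 3", "d = f(a)", "e = 5", "g = 6", "h = 7"]
print(format_context(source, line_no=4, col_start=4, col_end=8))
```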

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26765

Pulled By: driazati

Differential Revision: D17560963

fbshipit-source-id: e463548744b505ca17f0158079b80e08fda47d49

Summary:

Adds the method `add_hparams` to `torch.utils.tensorboard` API docs. Will want to have this in PyTorch 1.3 release.

cc sanekmelnikov lanpa natalialunova

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27344

Differential Revision: D17753689

Pulled By: orionr

fbshipit-source-id: cc8636e0bdcf3f434444cd29471c62105491039d

Summary:

Resubmit of https://github.com/pytorch/pytorch/pull/25980.

Our old serialization was in tar (for example, `resnet18-5c106cde.pth` was in this format), so let's only support automatic unzipping when checkpoints are zipfiles.

We could still get it to work with tarfiles, but let's defer that until there's an ask.
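
A minimal sketch of the zip-only behaviour, using only the standard library. `maybe_unzip` is a hypothetical helper, and the single-member restriction is an assumption made to keep the example simple.

```python
import os
import tempfile
import zipfile


def maybe_unzip(path: str, dest_dir: str) -> str:
    """Extract a single-member zip checkpoint; leave anything else untouched."""
    if not zipfile.is_zipfile(path):
        return path  # e.g. a legacy tar-based checkpoint is passed through
    with zipfile.ZipFile(path) as zf:
        members = zf.namelist()
        if len(members) != 1:
            raise RuntimeError("expected exactly one file in the zip archive")
        zf.extract(members[0], dest_dir)
        return os.path.join(dest_dir, members[0])


with tempfile.TemporaryDirectory() as tmp:
    plain = os.path.join(tmp, "legacy.pth")
    with open(plain, "wb") as f:
        f.write(b"not a zip")
    zipped = os.path.join(tmp, "weights.zip")
    with zipfile.ZipFile(zipped, "w") as zf:
        zf.writestr("weights.pth", b"payload")

    plain_result = maybe_unzip(plain, tmp)
    zip_result = maybe_unzip(zipped, tmp)
    extracted_ok = os.path.isfile(zip_result)

print(os.path.basename(plain_result), os.path.basename(zip_result))
```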

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26723

Differential Revision: D17551795

Pulled By: ailzhang

fbshipit-source-id: 00b4e7621f1e753ca9aa07b1fe356278c6693a1e

Summary:

This PR does a few small improvements to hub:

- add support for the `verbose` option in `torch.load`. Note that this mutes the cache-hit message but keeps the message for the first download, as suggested. fixes https://github.com/pytorch/pytorch/issues/24791

- add support loading state dict from tar file or zip file in `torch.hub.load_state_dict_from_url`.

- add `torch.hub.download_url_to_file` as public API, and add BC bit for `_download_url_to_file`.

- makes the hash check in the filename optional through `check_hash`. Many users don't have control over the naming, so relaxing this constraint could avoid duplicated download code on the user end.

- move pytorch CI off `pytorch/vision` and use `ailzhang/torchhub_example` as a dedicated test repo. fixes https://github.com/pytorch/pytorch/issues/25865
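
To make the `check_hash` item concrete, here is a hedged sketch of an optional filename-hash check. It assumes the convention `name-<sha256 prefix>.ext`, as in `resnet18-5c106cde.pth`; the regex and the `verify` helper are illustrative and not the hub implementation.

```python
import hashlib
import re

# Assumed convention: "<name>-<hex prefix of the SHA-256 digest>.<ext>"
HASH_REGEX = re.compile(r"-([a-f0-9]+)\.")


def verify(data: bytes, filename: str, check_hash: bool = False) -> None:
    """Raise if check_hash is set and the digest does not match the filename."""
    if not check_hash:
        return  # users who cannot control file naming simply skip the check
    match = HASH_REGEX.search(filename)
    if match is None:
        return  # no hash embedded in the name, nothing to compare against
    digest = hashlib.sha256(data).hexdigest()
    if not digest.startswith(match.group(1)):
        raise RuntimeError(f"invalid hash for {filename}: got {digest[:8]}")


payload = b"weights"
good_name = f"model-{hashlib.sha256(payload).hexdigest()[:8]}.pth"
verify(payload, good_name, check_hash=True)              # matching prefix passes
verify(payload, "model-deadbeef.pth", check_hash=False)  # check skipped
```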

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25980

Differential Revision: D17495679

Pulled By: ailzhang

fbshipit-source-id: 695df3e803ad5f9ca33cfbcf62f1a4f8cde0dbbe

Summary:

Changelog:

- Remove `torch.gels` which was deprecated in v1.2.0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26480

Test Plan: - No tests were changed and all callsites for `torch.gels` were modified to `torch.lstsq` when `torch.lstsq` was introduced

Differential Revision: D17527207

Pulled By: zou3519

fbshipit-source-id: 28e2fa3a3bf30eb6b9029bb5aab198c4d570a950

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26240

In particular adds support for empty/empty_like which is needed for memory layouts to work.

Test Plan: Imported from OSS

Differential Revision: D17443220

Pulled By: dzhulgakov

fbshipit-source-id: 9c9e25981999c0edaf40be104a5741e9c62a1333

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25263

This adds an api to return true in script and false in eager, which together with ignore allows guarding of not yet supported JIT features. Bikeshedding requested please.

cc zou3519

```
def foo():
    if not torch.jit.is_scripting():
        return torch.linear(...)
    else:
        return addmm(...)
```

Test Plan: Imported from OSS

Differential Revision: D17272443

Pulled By: eellison

fbshipit-source-id: de0f769c7eaae91de0007b98969183df93a91f42

Summary:

Improve handling of mixed-type tensor operations.

This PR affects the arithmetic (add, sub, mul, and div) operators implemented via TensorIterator (so dense but not sparse tensor ops).

For these operators, we will now promote to reasonable types where possible, following the rules defined in https://github.com/pytorch/pytorch/issues/9515, and error in cases where the cast would require floating point -> integral or non-boolean to boolean downcasts.

The details of the promotion rules are described here:

https://github.com/nairbv/pytorch/blob/promote_types_strict/docs/source/tensor_attributes.rst

Some specific backwards incompatible examples:

* Now `int_tensor * float` will result in a float tensor, whereas previously the floating point operand was first cast to an int. Previously `torch.tensor(10) * 1.9` => `tensor(10)` because the 1.9 was downcast to `1`. Now the result will be the more intuitive `tensor(19.)`.

* Now `int_tensor *= float` will error, since the floating point result of this operation can't be cast into the in-place integral type result.
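
The two examples above can be modelled with a deliberately simplified plain-Python sketch. It covers only a tensor combined with a Python scalar and only three dtypes; the tables and function names are assumptions for illustration, not the TensorIterator logic.

```python
# Simplified model: categories ordered bool < integral < floating point.
CATEGORY = {"bool": 0, "int64": 1, "float32": 2}
DEFAULT_FOR_CATEGORY = {0: "bool", 1: "int64", 2: "float32"}


def scalar_category(value) -> int:
    if isinstance(value, bool):  # must come first: bool is a subclass of int
        return 0
    if isinstance(value, int):
        return 1
    if isinstance(value, float):
        return 2
    raise TypeError(f"unsupported scalar {value!r}")


def result_dtype(tensor_dtype: str, scalar) -> str:
    """The tensor dtype wins unless the scalar is in a higher category."""
    cat = scalar_category(scalar)
    if cat > CATEGORY[tensor_dtype]:
        return DEFAULT_FOR_CATEGORY[cat]
    return tensor_dtype


def inplace_dtype(tensor_dtype: str, scalar) -> str:
    """In-place ops must store the result in the tensor's own dtype."""
    out = result_dtype(tensor_dtype, scalar)
    if CATEGORY[out] > CATEGORY[tensor_dtype]:
        raise RuntimeError(f"result type {out} can't be cast to {tensor_dtype}")
    return tensor_dtype


print(result_dtype("int64", 1.9))   # int_tensor * float promotes to floating point
print(result_dtype("float32", 2))   # float_tensor * int keeps the tensor dtype
```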

See more examples/detail in the original issue (https://github.com/pytorch/pytorch/issues/9515), in the above linked tensor_attributes.rst doc, or in the test_type_promotion.py tests added in this PR:

https://github.com/nairbv/pytorch/blob/promote_types_strict/test/test_type_promotion.py

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22273

Reviewed By: gchanan

Differential Revision: D16582230

Pulled By: nairbv

fbshipit-source-id: 4029cca891908cdbf4253e4513c617bba7306cb3

Summary:

All of the code examples should now run as unit tests, save for those

that require interaction (i.e. show `pdb` usage) and those that use

CUDA.

`save` had to be moved before `load` in `jit/__init__.py` so `load`

could use the file generated by `save`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25668

Pulled By: driazati

Differential Revision: D17192417

fbshipit-source-id: 931b310ae0c3d2cc6affeabccae5296f53fe42bc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25262

Preserve the type of ignore'd functions on serialization. Currently we compile an ignore'd function with its annotated type when first compiling, but do not preserve it. This is important for being able to compile models with not-yet-supported features in JIT.

```
@torch.jit.ignore
def unsupported(x):
    return x

def foo():
    if not torch.jit._is_scripting():
        return torch.linear(...)
    else:
        return unsupported(...)
```

Test Plan: Imported from OSS

Reviewed By: driazati

Differential Revision: D17199043

Pulled By: eellison

fbshipit-source-id: 1196fd94c207b9fbee1087e4b2ef7d4656a6647f

Summary:

Adds links to torchaudio and torchtext to docs index. We should eventually evolve this to bring the audio and text docs builds in like torchvision.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24245

Differential Revision: D17163539

Pulled By: soumith

fbshipit-source-id: 5754bdf7579208e291e53970b40f73ef119b758f

Summary:

I think...

I'm having issues building the site, but it appears to get rid of the error.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25544

Differential Revision: D17157327

Pulled By: ezyang

fbshipit-source-id: 170235c52008ca78ff0d8740b2d7f5b67397b614

Summary:

I presume this is what was intended.

cc t-vi

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25011

Differential Revision: D16980939

Pulled By: soumith

fbshipit-source-id: c55b22e119f3894bd124eb1dce4f92a719ac047a

Summary:

Another pass over the docs, this covers most of the remaining stuff

* content updates for new API

* adds links to functions instead of just names

* removes some useless indentations

* some more code examples + `testcode`s

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24445

Pulled By: driazati

Differential Revision: D16847964

fbshipit-source-id: cd0b403fe4a89802ce79289f7cf54ee0cea45073

Summary:

Stacked PRs

* #24445 - [jit] Misc doc updates #2

* **#24435 - [jit] Add docs to CI**

This integrates the [doctest](http://www.sphinx-doc.org/en/master/usage/extensions/doctest.html) module into `jit.rst` so that we can run our code examples as unit tests. They're added to `test_jit.py` under the `TestDocs` class (which takes about 30s to run). This should help prevent things like #24429 from happening in the future. They can be run manually by doing `cd docs && make doctest`.

* The test setup requires a hack since `doctest` defines everything in the `builtins` module which upsets `inspect`

* There are several places where the code wasn't testable (i.e. it threw an exception on purpose). This may be resolvable, but I'd prefer to leave that for a follow up. For now there are `TODO` comments littered around.
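
The general idea of running documentation examples as tests can be sketched with the standard-library `doctest` module. This is an analogy only: the PR uses the Sphinx doctest extension on `jit.rst`, and the `scale` function below is a made-up example.

```python
import doctest


def scale(values, factor):
    """Multiply every element by factor.

    >>> scale([1, 2, 3], 2)
    [2, 4, 6]
    """
    return [v * factor for v in values]


# Parse the examples out of the docstring and execute them as a test.
parser = doctest.DocTestParser()
test = parser.get_doctest(scale.__doc__, {"scale": scale}, "scale", None, 0)
results = doctest.DocTestRunner(verbose=False).run(test)
print(results.failed, results.attempted)
```

If the documented output ever drifts from what the code returns, `results.failed` becomes non-zero, which is the kind of regression this setup is meant to catch.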

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24435

Pulled By: driazati

Differential Revision: D16840882

fbshipit-source-id: c4b26e7c374cd224a5a4a2d523163d7b997280ed

Summary:

This patch writes documentation for `Tensor.record_stream()`, which is not a documented API currently. I've discussed publishing it with colesbury in https://github.com/pytorch/pytorch/issues/23729.

The documentation is based on [the introduction at `CUDACachingAllocator.cpp`](25d1496d58/c10/cuda/CUDACachingAllocator.cpp (L47-L50)). ~~I didn't explain full details of the life cycle of memory blocks or stream awareness of the allocator for the consistent level of details with other documentations.~~ I explained the stream awareness in a note block.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24078

Differential Revision: D16743526

Pulled By: zou3519

fbshipit-source-id: 05819c3cc96733e2ba93c0a7c0ca06933acb22f3

Summary:

This is a bunch of changes to the docs for stylistic changes,

correctness, and updates to the new script API / recent TorchScript

changes (i.e. namedtuple)

For reviewers, ping me to see a link of the rendered output.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24371

Pulled By: driazati

Differential Revision: D16832417

fbshipit-source-id: a28e748cf1b590964ca0ae2dfb5d8259c766a203

Summary:

Stacked PRs

* #24258 - [jit] Add `trace_module` to docs

* **#24208 - [jit] Cleanup documentation around `script` and `trace`**

Examples / info was duplicated between `ScriptModule`, `script`, and

`trace`, so this PR consolidates it and moves some things around to make

the docs more clear.

For reviewers, if you want to see the rendered output, ping me for a

link

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24208

Pulled By: driazati

Differential Revision: D16746236

fbshipit-source-id: fac3c6e762a31c897b132b8421baa8d4d61f694c

Summary:

**Patch Description**:

Update the docs to reflect one no longer needs to install tensorboard nightly, as Tensorboard 1.14.0 was [released last week](https://github.com/tensorflow/tensorboard/releases/tag/1.14.0).

**Testing**:

Haven't actually tested pytorch with tensorboard 1.14 yet. I'll update this PR once I have.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22026

Differential Revision: D16772136

Pulled By: orionr

fbshipit-source-id: 2e1e17300f304f50026837abbbc6ffb25704aac0

Summary:

This was previously buggy and not being displayed on master. This fixes

the issues with the script to generate the builtin function schemas and

moves it to its own page (it's 6000+ lines of schemas)

It looks like Sphinx will just keep going if it hits errors when importing modules; we should find out how to turn that off and put it in the CI.

This also includes some other small fixes:

* removes internal-only args from `script()` and `trace()` docs; this requires manually keeping these argument lists up to date, but I think the cleanliness is worth it

* removes outdated note about early returns

Pull Request resolved: https://github.com/pytorch/pytorch/pull/24056

Pulled By: driazati

Differential Revision: D16742406

fbshipit-source-id: 9102ba14215995ffef5aaafcb66a6441113fad59

Summary:

Adds new people and reorders sections to make more sense

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23693

Differential Revision: D16618230

Pulled By: dzhulgakov

fbshipit-source-id: 74191b50c6603309a9e6d14960b7c666eec6abdd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23376

This uses master version of sphinxcontrib-katex as it only

recently got prerender support.

Fixes #20984

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D16582064

Pulled By: ezyang

fbshipit-source-id: 9ef24c5788c19572515ded2db2e8ebfb7a5ed44d

Summary:

Changelog:

- Rename `gels` to `lstsq`

- Fix all callsites

- Rename all tests

- Create a tentative alias for `lstsq` under the name `gels` and add a deprecation warning to not promote usage.
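
The alias pattern in the last item looks roughly like the sketch below. Both functions are hypothetical stand-ins, and the choice of `DeprecationWarning` is an assumption; the actual warning category and message may differ.

```python
import warnings


def lstsq(b, a):
    """Hypothetical stand-in for the renamed function."""
    return ("solution for", b, a)


def gels(b, a):
    """Deprecated alias kept temporarily so existing callers keep working."""
    warnings.warn(
        "gels is deprecated in favour of lstsq and may be removed later",
        DeprecationWarning,
        stacklevel=2,  # point the warning at the caller, not at this wrapper
    )
    return lstsq(b, a)


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    result = gels("B", "A")

print(result, len(caught))
```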

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23460

Test Plan: - All tests should pass to confirm that the patch is correct

Differential Revision: D16547834

Pulled By: colesbury

fbshipit-source-id: b3bdb8f4c5d14c7716c3d9528e40324cc544e496

Summary:

Use the recursive script API in the existing docs

TODO:

* Migration guide for 1.1 -> 1.2

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21612

Pulled By: driazati

Differential Revision: D16553734

fbshipit-source-id: fb6be81a950224390bd5d19b9b3de2d97b3dc515

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/23417

Test Plan:

cd docs; make html

Imported from OSS

Differential Revision: D16523781

Pulled By: ilia-cher

fbshipit-source-id: d6c09e8a85d39e6185bbdc4b312fea44fcdfff06

Summary:

Thanks adefazio for the feedback, adding a note to the Contribution guide so that folks don't start working on code without checking with the maintainers.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23513

Differential Revision: D16546685

Pulled By: soumith

fbshipit-source-id: 1ee8ade963703c88374aedecb8c9e5ed39d7722d

Summary:

This is still work in progress.

There are several more items to add to complete this doc, including

- [x] LHS indexing, index assignments.

- [x] Tensor List.

- [x] ~Shape/Type propagation.~

- [x] FAQs

Please review and share your thoughts, feel free to add anything that you think should be included as well. houseroad spandantiwari lara-hdr neginraoof

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23185

Differential Revision: D16459647

Pulled By: houseroad

fbshipit-source-id: b401c005f848d957541ba3b00e00c93ac2f4609b

Summary:

With this change you can now list multiple interfaces separated by

comma. ProcessGroupGloo creates a single Gloo context for every device

in the list (a context represents a connection to every other

rank). For every collective that is called, it will select the context

in a round robin fashion. The number of worker threads responsible for

executing the collectives is set to be twice the number of devices.

If you have a single physical interface, and wish to employ increased

parallelism, you can also specify

`GLOO_SOCKET_IFNAME=eth0,eth0,eth0,eth0`. This makes ProcessGroupGloo

use 4 connections per rank, 4 I/O threads, and 8 worker threads

responsible for executing the collectives.
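The arithmetic in this description can be sketched in plain Python. This is only an illustration of the counting and the round-robin selection, not ProcessGroupGloo code; `parse_ifnames`, `plan`, and `round_robin` are hypothetical names, and "one I/O thread per listed device" is an assumption inferred from the four-interface example.

```python
import itertools


def parse_ifnames(value):
    """Split a comma-separated interface list, keeping duplicates."""
    return [name.strip() for name in value.split(",") if name.strip()]


def plan(value):
    """Count the resources implied by a GLOO_SOCKET_IFNAME-style value."""
    devices = parse_ifnames(value)
    return {
        "contexts_per_rank": len(devices),   # one Gloo context per listed device
        "io_threads": len(devices),          # assumption: one I/O thread per device
        "worker_threads": 2 * len(devices),  # "twice the number of devices"
    }


def round_robin(value):
    """Yield the context index used by each successive collective."""
    return itertools.cycle(range(len(parse_ifnames(value))))


summary = plan("eth0,eth0,eth0,eth0")
picker = round_robin("eth0,eth0,eth0,eth0")
first_six = list(itertools.islice(picker, 6))
```

With four entries, `summary` reports 4 contexts, 4 I/O threads, and 8 worker threads, and `first_six` cycles through context indices 0 to 3 before wrapping around.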

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22978

ghstack-source-id: 87006270

Differential Revision: D16339962

fbshipit-source-id: 9aa1dc93d8e131c1714db349b0cbe57e9e7266f1

Summary:

Covering fleet-wide profiling, api logging, etc.

It's my first time writing rst, so suggestions are definitely welcomed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/23010

Differential Revision: D16456721

Pulled By: dzhulgakov

fbshipit-source-id: 3d3018f41499d04db0dca865bb3a9652d8cdf90a

Summary:

I manually went through all functions in `torch.*` and corrected any mismatch between the arguments mentioned in doc and the ones actually taken by the function. This fixes https://github.com/pytorch/pytorch/issues/8698.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/22973

Differential Revision: D16419602

Pulled By: yf225

fbshipit-source-id: 5562c9b0b95a0759abee41f967c45efacf2267c2

Summary:

This cleans up the `torch.utils.tensorboard` API to remove all kwargs usage (which isn't clear to the user) and removes the "experimental" warning in prep for our 1.2 release.

We also don't need the additional PyTorch version checks now that we are in the codebase itself.

cc ezyang lanpa natalialunova

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21786

Reviewed By: natalialunova

Differential Revision: D15854892

Pulled By: orionr

fbshipit-source-id: 06b8498826946e578824d4b15c910edb3c2c20c6

Summary:

# What is this?

This is an implementation of the AdamW optimizer as implemented in [the fastai library](803894051b/fastai/callback.py) and as initially introduced in the paper [Decoupled Weight Decay Regularization](https://arxiv.org/abs/1711.05101). It decouples the weight decay regularization step from the optimization step during training.

There have already been several abortive attempts to push this into pytorch in some form or fashion: https://github.com/pytorch/pytorch/pull/17468, https://github.com/pytorch/pytorch/pull/10866, https://github.com/pytorch/pytorch/pull/3740, https://github.com/pytorch/pytorch/pull/4429. Hopefully this one goes through.

# Why is this important?

Via a simple reparameterization, it can be shown that L2 regularization has a weight decay effect in the case of SGD optimization. Because of this, L2 regularization became synonymous with the concept of weight decay. However, it can be shown that the equivalence of L2 regularization and weight decay breaks down for more complex adaptive optimization schemes. It was shown in the paper [Decoupled Weight Decay Regularization](https://arxiv.org/abs/1711.05101) that this is the reason why models trained with SGD achieve better generalization than those trained with Adam. Weight decay is a very effective regularizer. L2 regularization, in and of itself, is much less effective. By explicitly decaying the weights, we can achieve state-of-the-art results while also taking advantage of the quick convergence properties that adaptive optimization schemes have.
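The difference between the two schemes can be seen on a single scalar weight. The sketch below is a plain-Python illustration under simplifying assumptions (one parameter, textbook bias-corrected Adam, multiplicative decay); it is not the optimizer code from this PR, and all function names are hypothetical.

```python
import math


def adam_step(w, g, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8):
    """One bias-corrected Adam update for a scalar weight."""
    b1, b2 = betas
    state["t"] += 1
    state["m"] = b1 * state["m"] + (1 - b1) * g
    state["v"] = b2 * state["v"] + (1 - b2) * g * g
    m_hat = state["m"] / (1 - b1 ** state["t"])
    v_hat = state["v"] / (1 - b2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


def adam_l2(w, g, state, wd, **kw):
    # L2 regularization: the penalty gradient wd * w passes through Adam's rescaling.
    return adam_step(w, g + wd * w, state, **kw)


def adamw(w, g, state, wd, lr=1e-3, **kw):
    # Decoupled decay: shrink the weight directly, then take a plain Adam step.
    w = w * (1 - lr * wd)
    return adam_step(w, g, state, lr=lr, **kw)


def fresh_state():
    return {"t": 0, "m": 0.0, "v": 0.0}


# With a zero loss gradient, only the regularizer moves the weight.
l2_shrink_big = 10.0 - adam_l2(10.0, 0.0, fresh_state(), wd=0.01)
l2_shrink_small = 1.0 - adam_l2(1.0, 0.0, fresh_state(), wd=0.01)
decoupled_shrink_big = 10.0 - adamw(10.0, 0.0, fresh_state(), wd=0.01)
decoupled_shrink_small = 1.0 - adamw(1.0, 0.0, fresh_state(), wd=0.01)
```

On this first step the L2 variant shrinks both weights by roughly the same amount (about `lr`), because Adam normalizes the penalty gradient, while the decoupled variant shrinks each weight in proportion to its size (`lr * wd * w`). That proportional shrinkage is the weight-decay behavior the paragraph above refers to.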

# How was this tested?

There were test cases added to `test_optim.py` and I also ran a [little experiment](https://gist.github.com/mjacar/0c9809b96513daff84fe3d9938f08638) to validate that this implementation is equivalent to the fastai implementation.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21250

Differential Revision: D16060339

Pulled By: vincentqb

fbshipit-source-id: ded7cc9cfd3fde81f655b9ffb3e3d6b3543a4709

Summary:

This is a modified version of https://github.com/pytorch/pytorch/pull/14705 since commit structure for that PR is quite messy.

1. Add `IterableDataset`.

2. So we have 2 data loader modes: `Iterable` and `Map`.

   1. `Iterable` if the `dataset` is an instance of `IterableDataset`

   2. `Map` otherwise.

3. Add better support for non-batch loading (i.e., `batch_size=None` and `batch_sampler=None`). This is useful in doing things like bulk loading.

4. Refactor `DataLoaderIter` into two classes, `_SingleProcessDataLoaderIter` and `_MultiProcessingDataLoaderIter`. Rename some methods to be more generic, e.g., `get_batch` -> `get_data`.

5. Add `torch.utils.data.get_worker_info` which returns worker information in a worker proc (e.g., worker id, dataset obj copy, etc.) and can be used in `IterableDataset.__iter__` and `worker_init_fn` to do per-worker configuration.

6. Add `ChainDataset`, which is the analog of `ConcatDataset` for `IterableDataset`.

7. Import torch.utils.data in `torch/__init__.py`

8. Data loader examples and documentation

9. Use `get_worker_info` to detect whether we are in a worker process in `default_collate`
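The pieces listed above fit together roughly as follows. This is a minimal sketch, assuming the API names given in the list; `RangeStream` is a hypothetical dataset written for illustration, and it runs in single-process mode so that `get_worker_info()` returns `None`.

```python
import math

from torch.utils.data import ChainDataset, DataLoader, IterableDataset, get_worker_info


class RangeStream(IterableDataset):
    """Hypothetical iterable-style dataset yielding the integers in [start, end)."""

    def __init__(self, start, end):
        super().__init__()
        self.start = start
        self.end = end

    def __iter__(self):
        info = get_worker_info()
        if info is None:
            # Single-process loading: this copy yields the whole range.
            lo, hi = self.start, self.end
        else:
            # Worker process: yield only this worker's contiguous shard.
            per_worker = math.ceil((self.end - self.start) / info.num_workers)
            lo = self.start + info.id * per_worker
            hi = min(lo + per_worker, self.end)
        return iter(range(lo, hi))


# batch_size=None disables automatic batching, so samples come out one by one.
loader = DataLoader(RangeStream(0, 6), batch_size=None)
samples = [int(x) for x in loader]

# ChainDataset concatenates iterable-style datasets lazily.
chained = [int(x) for x in ChainDataset([RangeStream(0, 3), RangeStream(10, 12)])]
```

Without the sharding branch, every worker would iterate over its own full copy of the dataset and each sample would be returned once per worker, which is why per-worker configuration through `get_worker_info` matters for iterable-style datasets.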

Closes https://github.com/pytorch/pytorch/issues/17909, https://github.com/pytorch/pytorch/issues/18096, https://github.com/pytorch/pytorch/issues/19946, and some of https://github.com/pytorch/pytorch/issues/13023

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19228

Reviewed By: bddppq

Differential Revision: D15058152

fbshipit-source-id: 9e081a901a071d7e4502b88054a34b450ab5ddde

Summary:

I accidentally rebased the old PR and made it too messy. Find it here (https://github.com/pytorch/pytorch/pull/19274)

Create a PR for comments. The model is still WIP but I want to have some feedback before moving too far. The transformer model depends on several modules, like MultiheadAttention (landed).

Transformer is implemented based on the paper (https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf). Users have the flexibility to build a transformer with self-defined and/or built-in components (i.e., encoder, decoder, encoder_layer, decoder_layer). Users can use the Transformer class to build a standard transformer model and modify sub-layers as needed.
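A minimal usage sketch follows, assuming the module names as they appear in released PyTorch (`nn.Transformer`, `nn.TransformerEncoder`, `nn.TransformerEncoderLayer`) and the default sequence-first tensor layout. The sizes are arbitrary and chosen only so the example runs quickly.

```python
import torch
from torch import nn

# A standard encoder-decoder transformer with deliberately small dimensions.
model = nn.Transformer(
    d_model=32,
    nhead=4,
    num_encoder_layers=1,
    num_decoder_layers=1,
    dim_feedforward=64,
    dropout=0.0,
)

src = torch.rand(10, 2, 32)  # (source length, batch, d_model)
tgt = torch.rand(7, 2, 32)   # (target length, batch, d_model)
out = model(src, tgt)        # one output vector per target position

# The built-in layers can also be stacked on their own, e.g. an encoder-only model.
encoder_layer = nn.TransformerEncoderLayer(
    d_model=32, nhead=4, dim_feedforward=64, dropout=0.0
)
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)
memory = encoder(src)
```

Here `out` has the same shape as `tgt` and `memory` has the same shape as `src`.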

Add a few unit tests for the transformer module, as follows:

TestNN.test_Transformer_cell

TestNN.test_transformerencoderlayer

TestNN.test_transformerdecoderlayer

TestNN.test_transformer_args_check

TestScript.test_scriptmodule_transformer_cuda

There is another demonstration example for applying transformer module on the word language problem. https://github.com/pytorch/examples/pull/555

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20170

Differential Revision: D15417983

Pulled By: zhangguanheng66

fbshipit-source-id: 7ce771a7e27715acd9a23d60bf44917a90d1d572

Summary:

Something flaky is going on with `test_inplace_view_saved_output` on Windows.

With my PR #20598 applied, the test fails, even though there is no obvious reason it should be related, so the PR was reverted.

Based on commenting out various parts of my change and re-building, I think the problem is with the name -- renaming everything from `T` to `asdf` seems to make the test stop failing. I can't be sure that this is actually the case though, since I could just be seeing patterns in non-deterministic build output...

I spoke with colesbury offline and we agreed that it is okay to just disable this test on Windows for now and not block landing the main change. He will look into why it is failing.

**Test Plan:** I will wait to make sure the Windows CI suite passes before landing this.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21175

Differential Revision: D15566970

Pulled By: umanwizard

fbshipit-source-id: edf223375d41faaab0a3a14dca50841f08030da3

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20665

Add gelu activation forward on CPU in pytorch

For comparison, the current Python implementation of gelu in the BERT model looks like:

    def gelu(self, x):

        return x * 0.5 * (1.0 + torch.erf(x / self.sqrt_two))

The torch.nn.functional.gelu function can reduce the forward time from 333ms to 109ms (with MKL) / 112ms (without MKL) for input size = [64, 128, 56, 56] on a devvm.
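As a quick sanity check, the erf-based formula can be compared against `torch.nn.functional.gelu`. This sketch only checks numerical agreement on a small random input; it does not reproduce the timings quoted above, and `gelu_reference` is a name introduced here for illustration.

```python
import math

import torch
import torch.nn.functional as F


def gelu_reference(x):
    """Erf-based GELU written with elementwise tensor ops."""
    return x * 0.5 * (1.0 + torch.erf(x / math.sqrt(2.0)))


x = torch.randn(4, 8)
max_diff = (F.gelu(x) - gelu_reference(x)).abs().max().item()
```

`max_diff` should be at the level of floating-point rounding error.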

Reviewed By: zheng-xq

Differential Revision: D15400974

fbshipit-source-id: f606b43d1dd64e3c42a12c4991411d47551a8121

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21196

we'll add `quantize(quantizer)` as a tensor method later when we expose `quantizer` in Python frontend

Python

```

torch.quantize_linear(t, ...)

```

C++

```

at::quantize_linear(t, ...)

```

Differential Revision: D15577123

fbshipit-source-id: d0abeea488418fa9ab212f84b0b97ee237124240

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21156

we'll add `quantize(quantizer)` as a tensor method later when we expose `quantizer` in Python frontend

Python

```

torch.quantize_linear(t, ...)

```

C++

```

at::quantize_linear(t, ...)

```

Differential Revision: D15558784

fbshipit-source-id: 0b194750c423f51ad1ad5e9387a12b4d58d969a9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20874

One criterion for what should be a Tensor method is whether numpy has it. For this one it does not,

so we are removing it as a Tensor method; it can still be called as a function.

Python

```

torch.quantize_linear(t, ...), torch.dequantize(t)

```

C++

```

at::quantize_linear(t, ...), at::dequantize(t)

```

Reviewed By: dzhulgakov

Differential Revision: D15477933

fbshipit-source-id: c8aa81f681e02f038d72e44f0c700632f1af8437

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20938

Dequantize_linear need not be exposed to the front end users.

It will only be used for the jit passes for q-dq insertion and op

substitution.

Differential Revision: D15446097

fbshipit-source-id: a5fbcf2bb72115122c9653e5089d014e2a2e891d

Summary:

I started adding support for the new **[mesh/point cloud](https://github.com/tensorflow/graphics/blob/master/tensorflow_graphics/g3doc/tensorboard.md)** data type introduced to TensorBoard recently.

I created the functions to add the data, created the appropriate summaries.

This new data type however requires a **Merged** summary containing the data for the vertices, colors and faces.

I got stuck at this stage. Maybe someone can help. lanpa?

I converted the example code by Google to PyTorch:

```python

import numpy as np

import trimesh

import torch

from torch.utils.tensorboard import SummaryWriter

sample_mesh = 'https://storage.googleapis.com/tensorflow-graphics/tensorboard/test_data/ShortDance07_a175_00001.ply'

log_dir = 'runs/torch'

batch_size = 1

# Camera and scene configuration.

config_dict = {

    'camera': {'cls': 'PerspectiveCamera', 'fov': 75},

    'lights': [

        {

            'cls': 'AmbientLight',

            'color': '#ffffff',

            'intensity': 0.75,

        }, {

            'cls': 'DirectionalLight',

            'color': '#ffffff',

            'intensity': 0.75,

            'position': [0, -1, 2],

        }],

    'material': {

        'cls': 'MeshStandardMaterial',

        'roughness': 1,

        'metalness': 0

    }

}

# Read all sample PLY files.

mesh = trimesh.load_remote(sample_mesh)

vertices = np.array(mesh.vertices)

# Currently only supports RGB colors.

colors = np.array(mesh.visual.vertex_colors[:, :3])

faces = np.array(mesh.faces)

# Add batch dimension, so our data will be of shape BxNxC.

vertices = np.expand_dims(vertices, 0)

colors = np.expand_dims(colors, 0)

faces = np.expand_dims(faces, 0)

# Create data placeholders of the same shape as data itself.

vertices_tensor = torch.as_tensor(vertices)

faces_tensor = torch.as_tensor(faces)

colors_tensor = torch.as_tensor(colors)

writer = SummaryWriter(log_dir)

writer.add_mesh('mesh_color_tensor', vertices=vertices_tensor, faces=faces_tensor,

                colors=colors_tensor, config_dict=config_dict)

writer.close()

```

I tried adding only the vertex summary, since the others are supposed to be optional.

I got the following error from TensorBoard and it also didn't display the points:

```

Traceback (most recent call last):

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/serving.py", line 302, in run_wsgi

execute(self.server.app)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/serving.py", line 290, in execute

application_iter = app(environ, start_response)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/application.py", line 309, in __call__

return self.data_applications[clean_path](environ, start_response)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/wrappers/base_request.py", line 235, in application

resp = f(*args[:-2] + (request,))

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/plugins/mesh/mesh_plugin.py", line 252, in _serve_mesh_metadata

tensor_events = self._collect_tensor_events(request)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/plugins/mesh/mesh_plugin.py", line 188, in _collect_tensor_events

tensors = self._multiplexer.Tensors(run, instance_tag)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/event_processing/plugin_event_multiplexer.py", line 400, in Tensors

return accumulator.Tensors(tag)

File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/event_processing/plugin_event_accumulator.py", line 437, in Tensors

return self.tensors_by_tag[tag].Items(_TENSOR_RESERVOIR_KEY)

KeyError: 'mesh_color_tensor_COLOR'

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20413

Differential Revision: D15500737

Pulled By: orionr

fbshipit-source-id: 426e8b966037d08c065bce5198fd485fd80a2b67

Summary:

To say that we don't do refinement on module attributes

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20912

Differential Revision: D15496453

Pulled By: eellison

fbshipit-source-id: a1ab9fb0157a30fa1bb71d0793fcc9b1670c4926

Summary:

The current variance kernels compute mean at the same time. Many times we want both statistics together, so it seems reasonable to have a kwarg/function that allows us to get both values without launching an extra kernel.
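A short usage sketch, assuming the combined function is exposed as `torch.var_mean` (as it is in released PyTorch):

```python
import torch

x = torch.randn(5, 3)

# One call returns both statistics, reduced over dim 0.
var, mean = torch.var_mean(x, dim=0)

# The same values computed with two separate reductions.
var_separate = x.var(dim=0)
mean_separate = x.mean(dim=0)
```

Both routes give the same numbers; the combined call simply avoids a second reduction over the input.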

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18731

Differential Revision: D14726082

Pulled By: ifedan

fbshipit-source-id: 473cba0227b69eb2240dca5e61a8f4366df0e029

Summary:

As a part of supporting writing data into a TensorBoard-readable format, we show more examples of how to use the function, in addition to the API docs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20008

Reviewed By: natalialunova

Differential Revision: D15261502

Pulled By: orionr

fbshipit-source-id: 16611695a27e74bfcdf311e7cad40196e0947038

Summary:

This adds method details and corrects the example on the page that didn't run properly. I've now confirmed that it runs in colab with nightly.

For those with internal access the rendered result can be seen at https://home.fburl.com/~orionr/pytorch-docs/tensorboard.html

cc lanpa, soumith, ezyang, brianjo

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19915

Differential Revision: D15137430

Pulled By: orionr

fbshipit-source-id: 833368fb90f9d75231b8243b43de594b475b2cb1

Summary:

This PR adds TensorBoard logging support natively within PyTorch. It is based on the tensorboardX code developed by lanpa and relies on changes inside the tensorflow/tensorboard repo landing at https://github.com/tensorflow/tensorboard/pull/2065.

With these changes users can simply `pip install tensorboard; pip install torch` and then log PyTorch data directly to the TensorBoard protobuf format using

```

import torch

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter()

s1 = torch.rand(1)

writer.add_scalar('data/scalar1', s1[0], 0)

writer.close()

```

Design:

- `EventFileWriter` and `RecordWriter` from tensorboardX now live in tensorflow/tensorboard

- `SummaryWriter` and PyTorch-specific conversion from tensors, nn modules, etc. now live in pytorch/pytorch. We also support Caffe2 blobs and nets.

Action items:

- [x] `from torch.utils.tensorboard import SummaryWriter`

- [x] rename functions

- [x] unittests

- [x] move actual writing function to tensorflow/tensorboard in https://github.com/tensorflow/tensorboard/pull/2065

Review:

- Please review for PyTorch standard formatting, code usage, etc.

- Please verify unittest usage is correct and executing in CI

Any significant changes made here will likely be synced back to github.com/lanpa/tensorboardX/ in the future.

cc orionr, ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16196

Differential Revision: D15062901

Pulled By: orionr

fbshipit-source-id: 3812eb6aa07a2811979c5c7b70810261f9ea169e

Summary:

Changelog:

- Rename `potri` to `cholesky_inverse` to remain consistent with names of `cholesky` methods (`cholesky`, `cholesky_solve`)

- Fix all callsites

- Rename all tests

- Create a tentative alias for `cholesky_inverse` under the name `potri` and add a deprecation warning to not promote usage

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19498

Differential Revision: D15029901

Pulled By: ezyang

fbshipit-source-id: 2074286dc93d8744cdc9a45d54644fe57df3a57a

Summary:

This is a simple yet useful addition to the torch.nn modules: an identity module. This is a first draft - please let me know what you think and I will edit my PR.

There is no identity module - nn.Sequential() can be used, however it is argument sensitive so can't be used interchangeably with any other module. This adds nn.Identity(...) which can be swapped with any module because it has dummy arguments. It's also more understandable than seeing an empty Sequential inside a model.

See discussion on #9160. The current solution is to use nn.Sequential(). However this won't work as follows:

```python

batch_norm = nn.BatchNorm2d

if dont_use_batch_norm:

    batch_norm = Identity

```

Then in your network, you have:

```python

nn.Sequential(

    ...

    batch_norm(N, momentum=0.05),

    ...

)

```

If you try to simply set `Identity = nn.Sequential`, this will fail since `nn.Sequential` expects modules as arguments. Of course there are many ways to get around this, including:

- Conditionally adding modules to an existing Sequential module

- Not using Sequential but writing the usual `forward` function with an if statement

- ...

**However, I think that an identity module is the most pythonic strategy,** assuming you want to use nn.Sequential.

Using the very simple class (this isn't the same as the one in my commit):

```python

class Identity(nn.Module):

    def __init__(self, *args, **kwargs):

        super().__init__()

    def forward(self, x):

        return x

```

we can get around using nn.Sequential, and `batch_norm(N, momentum=0.05)` will work. There are of course other situations in which this would be useful.
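Put together, the swap looks like the sketch below. It assumes that `nn.Identity`, as shipped in released PyTorch, accepts and ignores constructor arguments in the way this description proposes; `make_block` is a hypothetical helper and the layer sizes are arbitrary.

```python
import torch
from torch import nn


def make_block(channels, use_batch_norm):
    # Pick the layer class once; both accept the same constructor call.
    norm = nn.BatchNorm2d if use_batch_norm else nn.Identity
    return nn.Sequential(
        nn.Conv2d(3, channels, kernel_size=3, padding=1),
        norm(channels, momentum=0.05),  # nn.Identity ignores these arguments
        nn.ReLU(),
    )


x = torch.rand(2, 3, 8, 8)
with_bn = make_block(4, use_batch_norm=True)
without_bn = make_block(4, use_batch_norm=False)
out_with_bn = with_bn(x)
out_without_bn = without_bn(x)
```

Both blocks keep the same three-layer structure, so code that indexes into the `Sequential` (for example `block[1]`) behaves the same whether or not batch norm is enabled.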

Thank you.

Best,

Miles

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19249

Differential Revision: D15012969

Pulled By: ezyang

fbshipit-source-id: 9f47e252137a1679e306fd4c169dca832eb82c0c

Summary:

A few improvements while doing bert model

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19247

Differential Revision: D14989345

Pulled By: ailzhang

fbshipit-source-id: f4846813f62b6d497fbe74e8552c9714bd8dc3c7

Summary:

* `torch.hub.list('pytorch/vision')` - show all available hub models in `pytorch/vision`

* `torch.hub.show('pytorch/vision', 'resnet18')` - show docstring & example for `resnet18` in `pytorch/vision`

* Moved `torch.utils.model_zoo.load_url` to `torch.hub.load_state_dict_from_url` and deprecated `torch.utils.model_zoo`

* We have too many environment variables controlling where the cache dir is, which isn't really necessary. I actually want to unify `TORCH_HUB_DIR`, `TORCH_HOME` and `TORCH_MODEL_ZOO`, but haven't done it. (more suggestions are welcome!)

* Simplify the `pytorch/vision` example in the docs; it was used to show how a hub entrypoint can be written, so it had some confusing, unnecessary args.

An example of hub usage is shown below

```

In [1]: import torch

In [2]: torch.hub.list('pytorch/vision', force_reload=True)

Downloading: "https://github.com/pytorch/vision/archive/master.zip" to /private/home/ailzhang/.torch/hub/master.zip

Out[2]: ['resnet18', 'resnet50']

In [3]: torch.hub.show('pytorch/vision', 'resnet18')

Using cache found in /private/home/ailzhang/.torch/hub/vision_master

Resnet18 model

pretrained (bool): a recommended kwargs for all entrypoints

args & kwargs are arguments for the function

In [4]: model = torch.hub.load('pytorch/vision', 'resnet18', pretrained=True)

Using cache found in /private/home/ailzhang/.torch/hub/vision_master

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18758

Differential Revision: D14883651

Pulled By: ailzhang

fbshipit-source-id: 6db6ab708a74121782a9154c44b0e190b23e8309

Summary:

Changelog:

- Rename `btrisolve` to `lu_solve` to remain consistent with names of solve methods (`cholesky_solve`, `triangular_solve`, `solve`)

- Fix all callsites

- Rename all tests

- Create a tentative alias for `lu_solve` under the name `btrisolve` and add a deprecation warning to not promote usage

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18726

Differential Revision: D14726237

Pulled By: zou3519

fbshipit-source-id: bf25f6c79062183a4153015e0ec7ebab2c8b986b

Summary:

This is a minimalist PR to add MKL-DNN tensor per discussion from Github issue: https://github.com/pytorch/pytorch/issues/16038

Ops with MKL-DNN tensor will be supported in follow-up PRs to speed up the imperative path.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17748

Reviewed By: dzhulgakov

Differential Revision: D14614640

Pulled By: bddppq

fbshipit-source-id: c58de98e244b0c63ae11e10d752a8e8ed920c533

Summary:

Per our offline discussion, allow Tensors, ints, and floats to be cast to bool when used in a conditional

Fix for https://github.com/pytorch/pytorch/issues/18381

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18755

Reviewed By: driazati

Differential Revision: D14752476

Pulled By: eellison

fbshipit-source-id: 149960c92afcf7e4cc4997bccc57f4e911118ff1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18230

Implementing minimum qtensor API to unblock other workstreams in quantization

Changes:

- Added Quantizer which represents different quantization schemes

- Added qint8 as a data type for QTensor

- Added a new ScalarType QInt8

- Added QTensorImpl for QTensor

- Added following user facing APIs

- quantize_linear(scale, zero_point)

- dequantize()

- q_scale()

- q_zero_point()

Reviewed By: dzhulgakov

Differential Revision: D14524641

fbshipit-source-id: c1c0ae0978fb500d47cdb23fb15b747773429e6c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18628

ghimport-source-id: d94b81a6f303883d97beaae25344fd591e13ce52

Stack from [ghstack](https://github.com/ezyang/ghstack):

* #18629 Provide flake8 install instructions.

* **#18628 Delete duplicated technical content from contribution_guide.rst**

There's a useful guide in contribution_guide.rst, but the

technical bits were straight up copy-pasted from CONTRIBUTING.md,

and I don't think it makes sense to break the CONTRIBUTING.md

link. Instead, I deleted the duplicate bits and added a cross

reference to the rst document.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14701003

fbshipit-source-id: 3bbb102fae225cbda27628a59138bba769bfa288

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18598

ghimport-source-id: c74597e5e7437e94a43c163cee0639b20d0d0c6a

Stack from [ghstack](https://github.com/ezyang/ghstack):

* **#18598 Turn on F401: Unused import warning.**

This was requested by someone at Facebook; this lint is turned

on for Facebook by default. "Sure, why not."

I had to noqa a number of imports in __init__. Hypothetically

we're supposed to use __all__ in this case, but I was too lazy

to fix it. Left for future work.

Be careful! flake8-2 and flake8-3 behave differently with

respect to import resolution for # type: comments. flake8-3 will

report an import unused; flake8-2 will not. For now, I just

noqa'd all these sites.

All the changes were done by hand.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14687478

fbshipit-source-id: 30d532381e914091aadfa0d2a5a89404819663e3

Summary:

Changelog:

- Renames `btriunpack` to `lu_unpack` to remain consistent with the `lu` function interface.

- Rename all relevant tests, fix callsites

- Create a tentative alias for `lu_unpack` under the name `btriunpack` and add a deprecation warning to not promote usage.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18529

Differential Revision: D14683161

Pulled By: soumith

fbshipit-source-id: 994287eaa15c50fd74c2f1c7646edfc61e8099b1

Summary:

Changelog:

- Renames `btrifact` and `btrifact_with_info` to `lu` to remain consistent with other factorization methods (`qr` and `svd`).

- Now, we will only have one function and one method named `lu`, which performs the LU decomposition. This function takes a `get_infos` kwarg, which when set to True includes an infos tensor in the returned tuple.

- Rename all tests, fix callsites

- Create a tentative alias for `lu` under the name `btrifact` and `btrifact_with_info`, and add a deprecation warning to not promote usage.

- Add the single batch version for `lu` so that users don't have to unsqueeze and squeeze for a single square matrix (see changes in determinant computation in `LinearAlgebra.cpp`)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18435

Differential Revision: D14680352

Pulled By: soumith

fbshipit-source-id: af58dfc11fa53d9e8e0318c720beaf5502978cd8

Summary:

This implements a cyclical learning rate (CLR) schedule with an optional inverse cyclical momentum. More info about CLR: https://github.com/bckenstler/CLR
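The basic triangular policy from the CLR reference linked above can be written in a few lines of plain Python. This is a sketch of the schedule's shape, not the scheduler class added in this PR; the function names and the exact form of the inverse momentum cycle are assumptions made for illustration.

```python
import math


def _triangle(iteration, step_size):
    """Position within the cycle: 0.0 at the ends, 1.0 at the peak."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return max(0.0, 1.0 - x)


def triangular_lr(iteration, base_lr, max_lr, step_size):
    """Learning rate rises linearly to max_lr, then falls back to base_lr."""
    return base_lr + (max_lr - base_lr) * _triangle(iteration, step_size)


def inverse_momentum(iteration, base_momentum, max_momentum, step_size):
    """Momentum cycled inversely to the learning rate: high when the lr is low."""
    return max_momentum - (max_momentum - base_momentum) * _triangle(iteration, step_size)


lrs = [triangular_lr(i, 0.001, 0.006, 2000) for i in (0, 1000, 2000, 3000, 4000)]
momenta = [inverse_momentum(i, 0.8, 0.9, 2000) for i in (0, 2000, 4000)]
```

With `step_size=2000`, one full cycle takes 4000 iterations: the learning rate starts at `base_lr`, peaks at `max_lr` at iteration 2000, and returns to `base_lr` at iteration 4000, while the momentum does the opposite.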

This is finishing what #2016 started. Resolves #1909.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18001

Differential Revision: D14451845

Pulled By: sampepose

fbshipit-source-id: 8f682e0c3dee3a73bd2b14cc93fcf5f0e836b8c9

Summary:

There are a number of pages in the docs that serve insecure content. AFAICT this is the sole source of that.

I wasn't sure if docs get regenerated for old versions as part of the automation, or if those would need to be manually done.

cf. https://github.com/pytorch/pytorch.github.io/pull/177

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18508

Differential Revision: D14645665

Pulled By: zpao

fbshipit-source-id: 003563b06048485d4f539feb1675fc80bab47c1b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18507

ghimport-source-id: 1c3642befad2da78a7e5f39d6d58732b85c76267

Stack from [ghstack](https://github.com/ezyang/ghstack):

* **#18507 Upgrade flake8-bugbear to master, fix the new lints.**

It turns out Facebook is internally using the unreleased master

flake8-bugbear, so upgrading it grabs a few more lints that Phabricator

was complaining about but we didn't get in open source.

A few of the getattr sites that I fixed look very suspicious (they're

written as if Python were a lazy language), but I didn't look more

closely into the matter.
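The commit does not show the suspicious call sites, so the following is only one plausible reading of the remark: Python evaluates the default argument of `getattr` eagerly, even when the attribute exists. All names in this sketch are hypothetical.

```python
calls = []


def expensive_default():
    # Records each invocation so the eager evaluation is observable.
    calls.append("called")
    return "fallback"


class Config:
    name = "present"


cfg = Config()

# Eager: the default expression runs even though cfg.name exists.
eager = getattr(cfg, "name", expensive_default())

# Deferred: only compute the fallback when the attribute is really missing.
_MISSING = object()
value = getattr(cfg, "name", _MISSING)
deferred = expensive_default() if value is _MISSING else value
```

After running this, `calls` contains a single entry, produced by the eager form; the deferred form never calls `expensive_default` because the attribute is present.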

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14633682

fbshipit-source-id: fc3f97c87dca40bbda943a1d1061953490dbacf8

Summary:

This depends on https://github.com/pytorch/pytorch/pull/16039

This prevents people (reviewer, PR author) from forgetting to add things to `tensors.rst`.

When something new is added to `_tensor_doc.py` or `tensor.py` but intentionally not in `tensors.rst`, people should manually whitelist it in `test_docs_coverage.py`.
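The idea behind such a coverage check can be sketched as a set difference. This is not the contents of `test_docs_coverage.py`; the directive pattern, the sample text, and both helper names are assumptions made for illustration.

```python
import re

# Assumed directive form; the real rst files may use other directives as well.
_DIRECTIVE = re.compile(r"^\s*\.\. auto(?:method|attribute|function):: (\w+)", re.M)


def documented_names(rst_text):
    """Collect the names listed in autodoc-style directives."""
    return set(_DIRECTIVE.findall(rst_text))


def missing_from_docs(defined, rst_text, whitelist=()):
    """Names that are defined but neither documented nor whitelisted."""
    return sorted(set(defined) - documented_names(rst_text) - set(whitelist))


sample_rst = "   .. automethod:: abs\n   .. automethod:: add\n"
missing = missing_from_docs(
    ["abs", "add", "new_op", "_internal"], sample_rst, whitelist=["_internal"]
)
```

A test built this way fails as soon as `missing` is non-empty, which forces the author either to document the new name or to whitelist it deliberately.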

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16057

Differential Revision: D14619550

Pulled By: ezyang

fbshipit-source-id: e1c6dd6761142e2e48ec499e118df399e3949fcc

Summary:

This PR adds a Global Site Tag to the site.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17690

Differential Revision: D14620816

Pulled By: zou3519

fbshipit-source-id: c02407881ce08340289123f5508f92381744e8e3

Summary:

`SobolEngine` is a quasi-random sampler used to sample points evenly between [0,1]. Here we use direction numbers to generate these samples. The maximum supported dimension for the sampler is 1111.
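A minimal usage sketch, assuming the sampler is exposed as `torch.quasirandom.SobolEngine` with a `draw` method, as in released PyTorch:

```python
from torch.quasirandom import SobolEngine

# Unscrambled 3-dimensional Sobol sequence.
engine = SobolEngine(dimension=3, scramble=False)

points = engine.draw(8)  # tensor of shape (8, 3), values in the unit cube
more = engine.draw(4)    # continues the same sequence rather than restarting
```

Successive `draw` calls advance the same sequence, so a sample can be extended later without repeating earlier points.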

Documentation has been added, and tests have been added based on Balandat's references. The implementation is an optimized / tensor-ized version of Balandat's Cython implementation, as provided in #9332.

This closes #9332.

cc: soumith Balandat

Pull Request resolved: https://github.com/pytorch/pytorch/pull/10505

Reviewed By: zou3519

Differential Revision: D9330179

Pulled By: ezyang

fbshipit-source-id: 01d5588e765b33b06febe99348f14d1e7fe8e55d

Summary:

This is to fix #16141 and similar issues.

The idea is to track a reference to every shared CUDA Storage and deallocate memory only after a consumer process deallocates received Storage.

ezyang Done with cleanup. Same (insignificantly better) performance as in file-per-share solution, but handles millions of shared tensors easily. Note [ ] documentation in progress.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16854

Differential Revision: D13994490

Pulled By: VitalyFedyunin

fbshipit-source-id: 565148ec3ac4fafb32d37fde0486b325bed6fbd1

Summary:

* Adds more headers for easier scanning

* Adds some line breaks so things are displayed correctly

* Minor copy/spelling stuff

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18234

Reviewed By: ezyang

Differential Revision: D14567737

Pulled By: driazati

fbshipit-source-id: 046d991f7aab8e00e9887edb745968cb79a29441

Summary:

Changelog:

- Renames `trtrs` to `triangular_solve` to remain consistent with `cholesky_solve` and `solve`.

- Rename all tests, fix callsites

- Create a tentative alias for `triangular_solve` under the name `trtrs`, and add a deprecation warning to not promote usage.

- Move `isnan` to _torch_docs.py

- Remove unnecessary imports

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18213

Differential Revision: D14566902

Pulled By: ezyang

fbshipit-source-id: 544f57c29477df391bacd5de700bed1add456d3f

Summary:

Fixes a typo and a link in `docs/source/community/contribution_guide.rst`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18237

Differential Revision: D14566907

Pulled By: ezyang

fbshipit-source-id: 3a75797ab6b27d28dd5566d9b189d80395024eaf

Summary:

Changelog:

- Renames `gesv` to `solve` to remain consistent with `cholesky_solve`.

- Rename all tests, fix callsites

- Create a tentative alias for `solve` under the name `gesv`, and add a deprecation warning to not promote usage.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18060

Differential Revision: D14503117

Pulled By: zou3519

fbshipit-source-id: 99c16d94e5970a19d7584b5915f051c030d49ff5

Summary:

Fix a very common typo in my name.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17949

Differential Revision: D14475162

Pulled By: ezyang

fbshipit-source-id: 91c2c364c56ecbbda0bd530e806a821107881480

Summary: Adding new documents to the PyTorch website to describe how PyTorch is governed, how to contribute to the project, and lists persons of interest.

Reviewed By: orionr

Differential Revision: D14394573

fbshipit-source-id: ad98b807850c51de0b741e3acbbc3c699e97b27f

Summary:

as title

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17476

Differential Revision: D14218312

Pulled By: suo

fbshipit-source-id: 64df096a3431a6f25cd2373f0959d415591fed15

Summary:

Based on https://github.com/pytorch/pytorch/pull/12413, with the following additional changes:

- Inside `native_functions.yml`, move the out-of-place operators right next to their corresponding in-place operators, so that reviewers can easily check that they match

- `matches_jit_signature: True` for them

- Add missing `scatter` with Scalar source

- Add missing `masked_fill` and `index_fill` with Tensor source.

- Add missing test for `scatter` with Scalar source

- Add missing test for `masked_fill` and `index_fill` with Tensor source by checking the gradient w.r.t source

- Add missing docs to `tensor.rst`

Differential Revision: D14069925

Pulled By: ezyang

fbshipit-source-id: bb3f0cb51cf6b756788dc4955667fead6e8796e5

Summary:

The `one_hot` docs are missing [here](https://pytorch.org/docs/master/nn.html#one-hot).

I dug around and could not find a way to get this working properly.

Differential Revision: D14104414

Pulled By: zou3519

fbshipit-source-id: 3f45c8a0878409d218da167f13b253772f5cc963

Summary:

This prevents people (reviewers, PR authors) from forgetting to add things to `torch.rst`.

When something new is added to `_torch_docs.py` or `functional.py` but is intentionally left out of `torch.rst`, it should be manually whitelisted in `test_docs_coverage.py`.
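A coverage check of this kind can be sketched as follows. The layout of the real `test_docs_coverage.py` is not shown here, so the helper names, the `autofunction` directive format, and the whitelist shape below are all assumptions made for illustration.

```python
import re


def documented_names(rst_text):
    """Collect names listed in `.. autofunction::` directives (assumed format)."""
    pattern = r"^\.\. autofunction::[ \t]*(\w+)[ \t]*$"
    return set(re.findall(pattern, rst_text, flags=re.MULTILINE))


def undocumented(names_with_docstrings, rst_text, whitelist=()):
    """Names that have docstrings but appear in neither the rst nor the whitelist."""
    missing = set(names_with_docstrings) - documented_names(rst_text) - set(whitelist)
    return sorted(missing)


# Hypothetical inputs: an rst excerpt and the names that carry docstrings.
rst = """
.. autofunction:: solve
.. autofunction:: cholesky_solve
"""
missing = undocumented(
    ["solve", "cholesky_solve", "isnan", "_helper"],
    rst,
    whitelist=["_helper"],
)
```

A test would then assert that `missing` is empty, so a newly documented function fails the build until it is either listed in the rst file or added to the whitelist.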

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16039

Differential Revision: D14070903

Pulled By: ezyang

fbshipit-source-id: 60f2a42eb5efe81be073ed64e54525d143eb643e

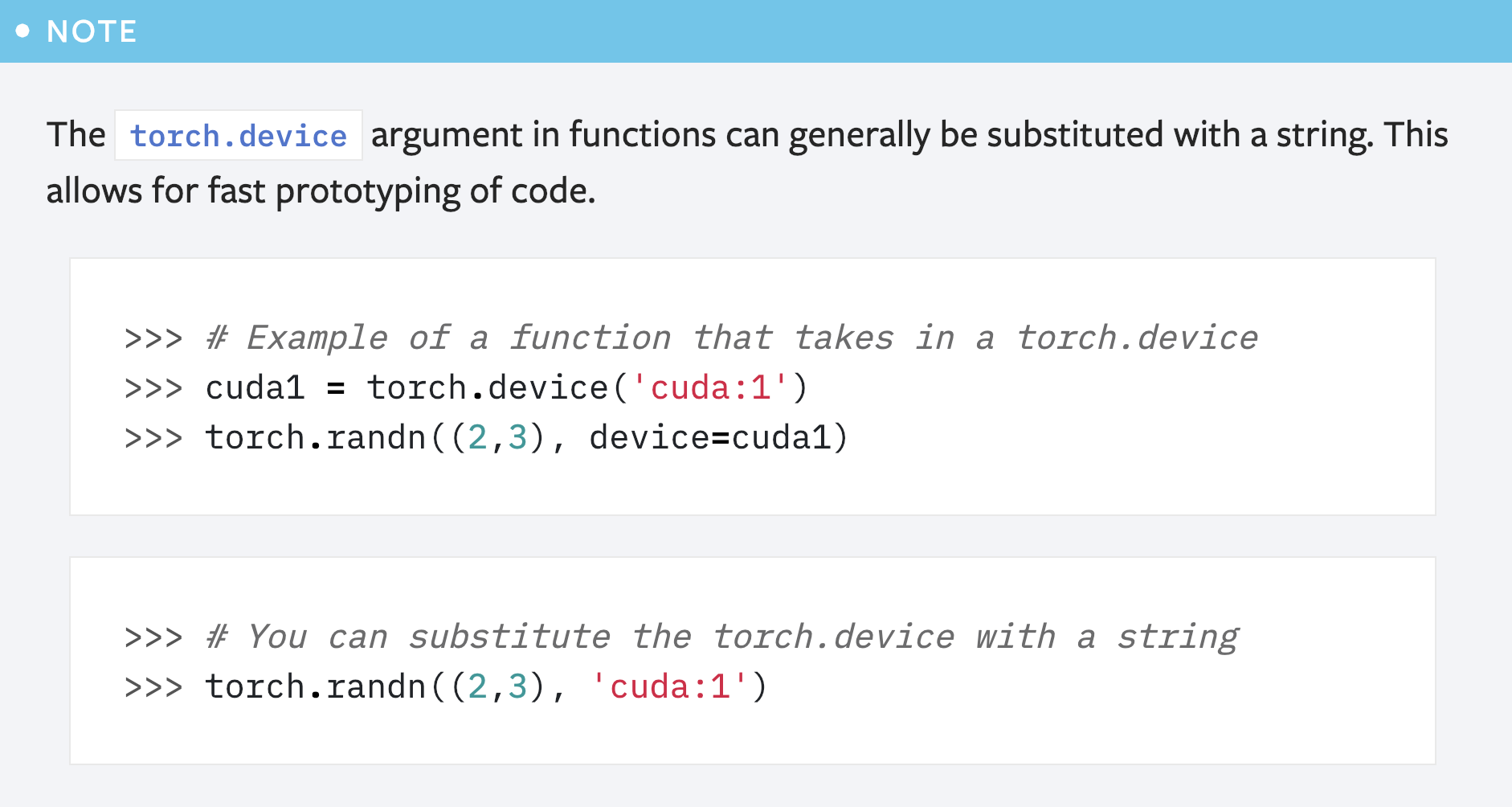

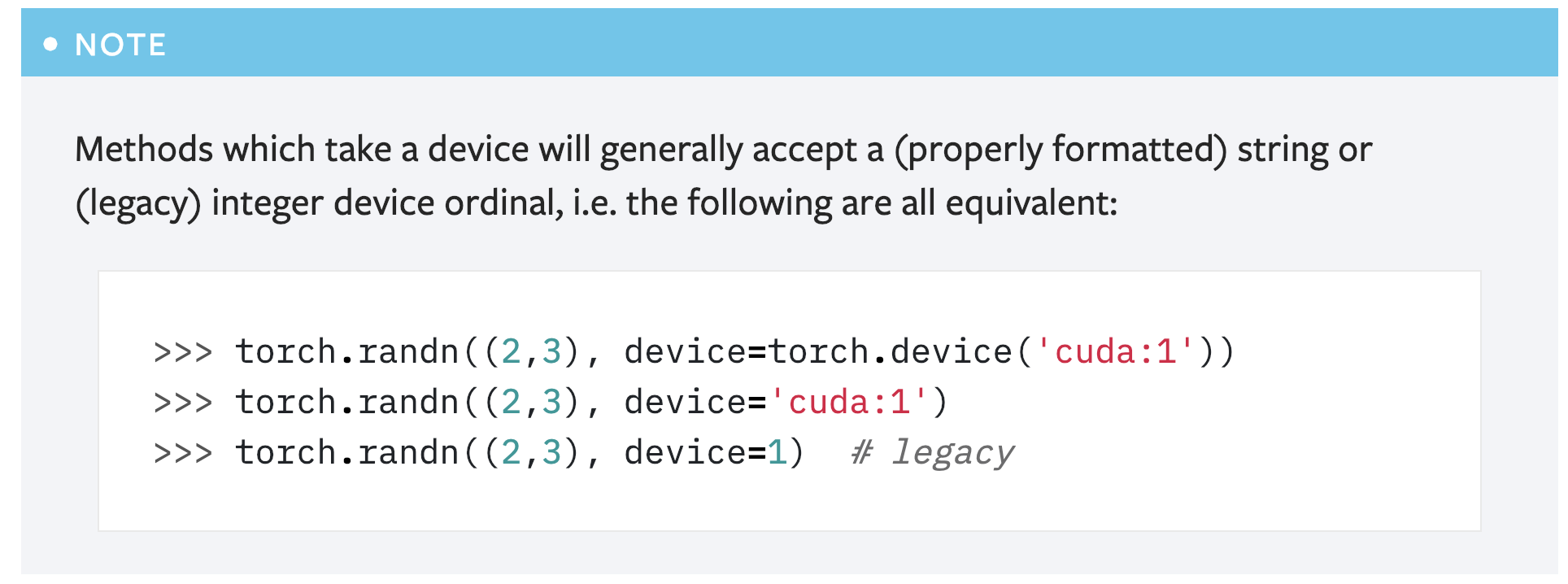

Summary:

This PR is a simple fix for the mistake in the first note for `torch.device` in the "tensor attributes" doc.

```

>>> # You can substitute the torch.device with a string

>>> torch.randn((2,3), 'cuda:1')

```

The code above raises an error like the one below:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-53-abdfafb67ab1> in <module>()

----> 1 torch.randn((2,3), 'cuda:1')

TypeError: randn() received an invalid combination of arguments - got (tuple, str), but expected one of:

* (tuple of ints size, torch.Generator generator, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)

* (tuple of ints size, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)

```

Simply adding the argument name `device` solves the problem: `torch.randn((2,3), device='cuda:1')`.

However, another concern is that this note seems redundant, as **there is already another note covering this usage**.

So maybe it's better to just remove this note?
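The failure and the keyword fix described above can be pictured without PyTorch. The sketch below assumes the options behave like keyword-only parameters; `randn_stub` is a hypothetical stand-in that only records its arguments, and it does not reproduce PyTorch's actual overload resolution.

```python
def randn_stub(size, *, dtype=None, device=None):
    """Hypothetical stand-in: optional arguments are keyword-only."""
    return {"size": tuple(size), "dtype": dtype, "device": device}


# Passing the device string positionally is rejected...
try:
    randn_stub((2, 3), "cuda:1")
    positional_error = None
except TypeError as exc:
    positional_error = str(exc)

# ...while naming the argument is accepted.
ok = randn_stub((2, 3), device="cuda:1")
```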

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16839

Reviewed By: ezyang

Differential Revision: D13989209

Pulled By: gchanan

fbshipit-source-id: ac255d52528da053ebfed18125ee6b857865ccaf

Summary:

Some batched updates:

1. bool is a type now

2. Early returns are allowed now

3. The beginning of an FAQ section with some guidance on the best way to do GPU training + CPU inference

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16866

Differential Revision: D13996729

Pulled By: suo

fbshipit-source-id: 3b884fd3a4c9632c9697d8f1a5a0e768fc918916

Summary:

Now that `cuda.get/set_rng_state` accept `device` objects, the default value should be a device object, and the docs should say so.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14324

Reviewed By: ezyang

Differential Revision: D13528707

Pulled By: soumith

fbshipit-source-id: 32fdac467dfea6d5b96b7e2a42dc8cfd42ba11ee

Summary:

Changelog:

- Renames `potrs` to `cholesky_solve` to remain consistent with TensorFlow and SciPy (not really, they call their function chol_solve)

- The default value of `upper` in `cholesky_solve` is False. This allows a seamless interface between `cholesky` and `cholesky_solve`, since the `upper` argument means the same thing in both functions.

- Rename all tests

- Create a temporary alias for `cholesky_solve` under the name `potrs`, and add a deprecation warning to discourage its use.
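Why a shared `upper` convention matters can be shown with a small plain-Python sketch. The two functions below are hypothetical stand-ins that operate on lists and a single right-hand-side vector; they are not the PyTorch implementations, only an illustration of passing the same flag to both the factorization and the solve.

```python
import math


def cholesky_factor(A, upper=False):
    """Cholesky factor of a symmetric positive-definite matrix (list of lists)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    if upper:
        # Return U = L^T, so that A = U^T U.
        return [[L[j][i] for j in range(n)] for i in range(n)]
    return L


def solve_with_factor(b, factor, upper=False):
    """Solve A x = b from a Cholesky factor, using the same `upper` convention."""
    n = len(factor)
    # Recover the lower factor whichever triangle was passed in.
    L = [[factor[j][i] for j in range(n)] for i in range(n)] if upper else factor
    # Forward substitution: L y = b.
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    # Back substitution: L^T x = y.
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x
```

With matching defaults, `solve_with_factor(b, cholesky_factor(A))` works without any flags, and passing `upper=True` to both calls gives the same solution.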

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15334

Differential Revision: D13507724

Pulled By: soumith

fbshipit-source-id: b826996541e49d2e2bcd061b72a38c39450c76d0

Summary:

Some of the code blocks were showing up as normal text, and the "unsupported modules" table was formatted incorrectly.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15227

Differential Revision: D13468847

Pulled By: driazati

fbshipit-source-id: eb7375710d4f6eca1d0f44dfc43c7c506300cb1e

Summary:

Documents what is supported in the script standard library.

* Adds `my_script_module._get_method('forward').schema()` method to get function schema from a `ScriptModule`

* Removes `torch.nn.functional` from the list of builtins. The only functions not supported are `nn.functional.fold` and `nn.functional.unfold`, but those currently just dispatch to their corresponding aten ops, so from a user's perspective it looks like they work.

* Allow printing of `IValue::Device` by getting its string representation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14912

Differential Revision: D13385928

Pulled By: driazati

fbshipit-source-id: e391691b2f87dba6e13be05d4aa3ed2f004e31da