Summary:

**BC-breaking note**

For ease of exposition let a_min be the value of the "min" argument to clamp, and a_max be the value of the "max" argument to clamp.

This PR changes the behavior of torch.clamp to always compute min(max(a, a_min), a_max). torch.clamp currently computes this in its vectorized CPU specializations:

78b95b6204/aten/src/ATen/cpu/vec256/vec256_double.h (L304)

but in other places it clamps differently:

78b95b6204/aten/src/ATen/cpu/vec256/vec256_base.h (L624)

78b95b6204/aten/src/ATen/native/cuda/UnaryOpsKernel.cu (L160)

These implementations are the same when a_min < a_max, but divergent when a_min > a_max. This divergence is easily triggered:

```python
import torch

# Behavior before this PR
t = torch.arange(200).to(torch.float)

torch.clamp(t, 4, 2)[0]
# tensor(2.)

torch.clamp(t.cuda(), 4, 2)[0]
# tensor(4., device='cuda:0')

torch.clamp(torch.tensor(0), 4, 2)
# tensor(4)
```

This PR makes the behavior consistent with NumPy's `clip`. C++'s `std::clamp` has undefined behavior when a_min > a_max, but Clang's `std::clamp` will return 10 in this case (although such a program, having undefined behavior, is in error). Python has no standard clamp implementation.
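To make the divergence concrete, the two orderings can be compared on plain Python scalars. This is a sketch of the arithmetic only, not of the vectorized or CUDA kernels, and the helper names are hypothetical.

```python
def clamp_min_then_max(x, a_min, a_max):
    """min(max(x, a_min), a_max): the ordering this PR adopts (as numpy.clip does)."""
    return min(max(x, a_min), a_max)


def clamp_max_then_min(x, a_min, a_max):
    """max(min(x, a_max), a_min): the alternative ordering used by some kernels."""
    return max(min(x, a_max), a_min)


# When a_min <= a_max both orderings agree.
for x in range(-3, 10):
    assert clamp_min_then_max(x, 2, 4) == clamp_max_then_min(x, 2, 4)

# When a_min > a_max they diverge: the first always yields a_max, the second a_min.
print(clamp_min_then_max(0, 4, 2))  # 2
print(clamp_max_then_min(0, 4, 2))  # 4
```

With a_min > a_max, `max(x, a_min)` is at least a_min and therefore above a_max, so the adopted ordering always returns a_max, matching the vectorized CPU result of `tensor(2.)` above.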

**PR Summary**

Fixes the discrepancy between the AVX, CUDA, and base vector implementations of clamp, so that all implementations are consistent and use the `min(max_vec, max(min_vec, x))` formula, making them equivalent to `numpy.clip`.

This is the same fix as in https://github.com/pytorch/pytorch/issues/32587, but isolated to the kernel change only so that the internal team can benchmark it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43288

Reviewed By: colesbury

Differential Revision: D24079453

Pulled By: mruberry

fbshipit-source-id: 67f30d2f2c86bbd3e87080b32f00e8fb131a53f7

Summary:

This PR adds support for complex-valued input for `torch.symeig`.

TODO:

- [ ] complex cuda tests raise `RuntimeError: _th_bmm_out not supported on CUDAType for ComplexFloat`

Update: Added xfailing tests for complex dtypes on CUDA. Once support for complex `bmm` is added these tests will work.

Fixes https://github.com/pytorch/pytorch/issues/45061.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45121

Reviewed By: mrshenli

Differential Revision: D24049649

Pulled By: anjali411

fbshipit-source-id: 2cd11f0e47d37c6ad96ec786762f2da57f25dac5

Summary:

Per feedback in the recent design review. Also tweaks the documentation to clarify what "deterministic" means and adds a test for the behavior.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45410

Reviewed By: ngimel

Differential Revision: D23974988

Pulled By: mruberry

fbshipit-source-id: e48307da9c90418fc6834fbd67b963ba2fe0ba9d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45069

`torch.abs` is a `C -> R` function for complex input. Following the general semantics in torch, the in-place version of abs should be disabled for complex input.

Test Plan: Imported from OSS

Reviewed By: glaringlee, malfet

Differential Revision: D23818397

Pulled By: anjali411

fbshipit-source-id: b23b8d0981c53ba0557018824d42ed37ec13d4e2

Summary:

- The thresholds of some tests are bumped up. Depending on the random generator, these tests sometimes fail with errors like `0.0059 is not smaller than 0.005`. I ran `test_nn.py` and `test_torch.py` 10+ times to check that they are no longer flaky.

- Add `tf32_on_and_off` to new `matrix_exp` tests.

- Disable TF32 on test suites other than `test_nn.py` and `test_torch.py`

cc: ptrblck

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44240

Reviewed By: mruberry

Differential Revision: D23882498

Pulled By: ngimel

fbshipit-source-id: 44a9ec08802c93a2efaf4e01d7487222478b6df8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39955

resolves https://github.com/pytorch/pytorch/issues/36323 by adding `torch.sgn` for complex tensors.

`torch.sgn` returns `x/abs(x)` for `x != 0` and returns `0 + 0j` for `x==0`
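A scalar sketch of that definition in plain Python (an illustration of the formula, not the PyTorch kernel). For real inputs it reduces to the ordinary sign function.

```python
def sgn(z):
    """Complex sign: z / |z| for z != 0, and 0 + 0j for z == 0."""
    z = complex(z)
    if z == 0:
        return 0j
    return z / abs(z)  # a unit-modulus complex number with the same phase as z


print(sgn(3 + 4j))  # (0.6+0.8j)
print(sgn(0j))      # 0j
```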

This PR doesn't test the correctness of the gradients. That will be done as part of auditing all the ops in the future, once we decide the autograd behavior (JAX vs TF) and add gradcheck.

Test Plan: Imported from OSS

Reviewed By: mruberry

Differential Revision: D23460526

Pulled By: anjali411

fbshipit-source-id: 70fc4e14e4d66196e27cf188e0422a335fc42f92

Summary:

This PR was originally authored by slayton58. I took his implementation and added some tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44986

Reviewed By: mruberry

Differential Revision: D23806039

Pulled By: ngimel

fbshipit-source-id: 305d66029b426d8039fab3c3e011faf2bf87aead

Summary:

Fixes https://github.com/pytorch/pytorch/issues/43699

- Changed the order of `TORCH_CHECK` and `if (options.layout() == kSparse && self.is_sparse())` inside the `empty_like` method.

- [x] Added tests

EDIT:

More details on the change, and why we cannot take the `zeros_like` approach.

Python code :

```python
res = torch.zeros_like(input_coalesced, memory_format=torch.preserve_format)
```

is routed to

```c++
// TensorFactories.cpp
Tensor zeros_like(
    const Tensor& self,
    const TensorOptions& options,
    c10::optional<c10::MemoryFormat> optional_memory_format) {
  if (options.layout() == kSparse && self.is_sparse()) {
    auto res = at::empty({0}, options); // to be resized
    res.sparse_resize_and_clear_(
        self.sizes(), self.sparse_dim(), self.dense_dim());
    return res;
  }
  auto result = at::empty_like(self, options, optional_memory_format);
  return result.zero_();
}
```

and therefore takes the sparse branch `if (options.layout() == kSparse && self.is_sparse())` without performing any memory-format check.

When we call

```python
res = torch.empty_like(input_coalesced, memory_format=torch.preserve_format)
```

it is routed to

```c++
Tensor empty_like(
    const Tensor& self,
    const TensorOptions& options_,
    c10::optional<c10::MemoryFormat> optional_memory_format) {
  TORCH_CHECK(
      !(options_.has_memory_format() && optional_memory_format.has_value()),
      "Cannot set memory_format both in TensorOptions and explicit argument; please delete "
      "the redundant setter.");
  TensorOptions options =
      self.options()
          .merge_in(options_)
          .merge_in(TensorOptions().memory_format(optional_memory_format));
  TORCH_CHECK(
      !(options.layout() != kStrided &&
        optional_memory_format.has_value()),
      "memory format option is only supported by strided tensors");
  if (options.layout() == kSparse && self.is_sparse()) {
    auto result = at::empty({0}, options); // to be resized
    result.sparse_resize_and_clear_(
        self.sizes(), self.sparse_dim(), self.dense_dim());
    return result;
  }
  // ...
}
```

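Here the `memory format option is only supported by strided tensors` check runs before the sparse branch, so a sparse input with `memory_format=torch.preserve_format` fails it; this PR moves the sparse branch ahead of that check.

cc pearu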

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44058

Reviewed By: albanD

Differential Revision: D23672494

Pulled By: mruberry

fbshipit-source-id: af232274dd2b516dd6e875fc986e3090fa285658

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44393

`torch.quantile` now correctly propagates NaN, and this PR implements `torch.nanquantile`, similar to `numpy.nanquantile`.
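The difference between the two functions can be sketched on plain Python lists. This assumes linear interpolation between neighboring ranks (NumPy's default method); the helper names are hypothetical and this is not the ATen implementation.

```python
import math


def _linear_quantile(sorted_vals, q):
    # Interpolate linearly between the two ranks surrounding position q * (n - 1).
    pos = q * (len(sorted_vals) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * frac


def quantile(values, q):
    """NaN-propagating quantile: any NaN in the input makes the result NaN."""
    if any(math.isnan(v) for v in values):
        return math.nan
    return _linear_quantile(sorted(values), q)


def nanquantile(values, q):
    """Ignores NaNs; the result is NaN only if every value is NaN."""
    kept = [v for v in values if not math.isnan(v)]
    if not kept:
        return math.nan
    return _linear_quantile(sorted(kept), q)


print(quantile([1.0, math.nan, 3.0], 0.5))     # nan
print(nanquantile([1.0, math.nan, 3.0], 0.5))  # 2.0
```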

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D23649613

Pulled By: heitorschueroff

fbshipit-source-id: 5201d076745ae1237cedc7631c28cf446be99936

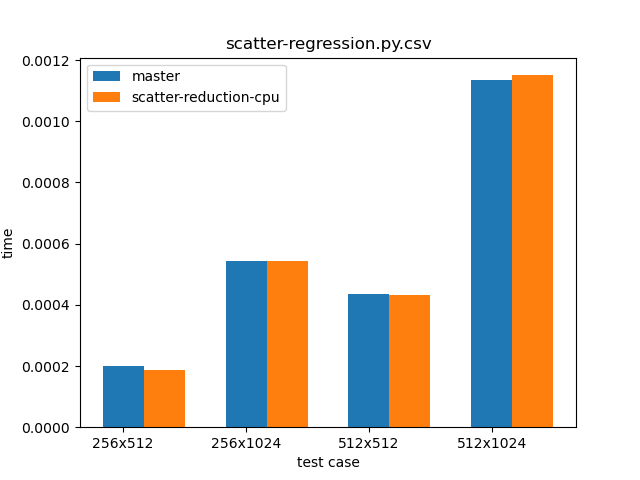

Summary:

Fixes https://github.com/pytorch/pytorch/issues/33394.

This PR does two things:

1. Implement CUDA scatter reductions with revamped GPU atomic operations.

2. Remove support for divide and subtract for CPU reduction, as discussed with ngimel.

I've also updated the docs to reflect that only multiply and add are supported.
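The semantics of the two remaining reductions can be sketched on 1-D Python lists: each source element is combined into the output slot named by its index. The function name is hypothetical, and this sequential loop says nothing about how the CUDA atomics are implemented.

```python
def scatter_reduce_1d(target, index, src, reduce):
    """Hypothetical 1-D sketch: out[index[i]] = out[index[i]] <op> src[i]."""
    ops = {
        "add": lambda a, b: a + b,
        "multiply": lambda a, b: a * b,
    }
    if reduce not in ops:
        raise ValueError(f"reduce must be 'add' or 'multiply', got {reduce!r}")
    op = ops[reduce]
    out = list(target)  # leave the input untouched
    for i, j in enumerate(index):
        out[j] = op(out[j], src[i])
    return out


print(scatter_reduce_1d([1, 1, 1], [0, 2, 0], [2, 3, 4], "add"))       # [7, 1, 4]
print(scatter_reduce_1d([1, 1, 1], [0, 2, 0], [2, 3, 4], "multiply"))  # [8, 1, 3]
```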

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41977

Reviewed By: mruberry

Differential Revision: D23748888

Pulled By: ngimel

fbshipit-source-id: ea643c0da03c9058e433de96db02b503514c4e9c

Summary:

per title. If `beta=0` and slow path was taken, `nan` and `inf` in the result were not masked as is the case with other linear algebra functions. Similarly, since `mv` is implemented as `addmv` with `beta=0`, wrong results were sometimes produced for `mv` slow path.
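The reason masking matters is that `0 * nan` and `0 * inf` are both NaN in IEEE arithmetic, so `beta * self` cannot simply be evaluated when `beta == 0`. A plain-Python reference sketch of `beta * self + alpha * (mat @ vec)` that ignores `self` in that case (the same convention BLAS `gemv` uses for `beta == 0`); this is an illustration, not the ATen slow path:

```python
import math


def addmv(self_vec, mat, vec, beta=1.0, alpha=1.0):
    """Reference sketch of beta * self + alpha * (mat @ vec)."""
    out = []
    for i, row in enumerate(mat):
        prod = sum(m * v for m, v in zip(row, vec))
        if beta == 0:
            # Ignore self entirely so NaN/inf in it cannot leak into the result.
            out.append(alpha * prod)
        else:
            out.append(beta * self_vec[i] + alpha * prod)
    return out


print(0.0 * math.nan, 0.0 * math.inf)  # nan nan  (why naive scaling is wrong)
print(addmv([math.nan, math.inf], [[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0], beta=0.0))  # [3.0, 7.0]
```

Under this convention `mv(mat, vec)` is `addmv(<anything>, mat, vec, beta=0)`, which is why an unmasked slow path also produced wrong `mv` results.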

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44681

Reviewed By: mruberry

Differential Revision: D23708653

Pulled By: ngimel

fbshipit-source-id: e2d5d3e6f69b194eb29b327e1c6f70035f3b231c

Summary:

This PR:

- updates div to perform true division

- makes torch.true_divide an alias of torch.div

This follows on work in previous PyTorch releases that first deprecated div performing "integer" or "floor" division, then prevented it by throwing a runtime error.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42907

Reviewed By: ngimel

Differential Revision: D23622114

Pulled By: mruberry

fbshipit-source-id: 414c7e3c1a662a6c3c731ad99cc942507d843927

Summary:

Noticed this bug in `torch.movedim` (https://github.com/pytorch/pytorch/issues/41480). [`std::unique`](https://en.cppreference.com/w/cpp/algorithm/unique) only guarantees uniqueness for _sorted_ inputs. The current check lets through non-unique values when they aren't adjacent to each other in the list, e.g. `(0, 1, 0)` wouldn't raise an exception and instead the algorithm fails later with an internal assert.
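The pitfall can be reproduced in Python, with `itertools.groupby` standing in for `std::unique` (both collapse only consecutive equal elements). The helper names are hypothetical; either sorting before deduplicating or using a set gives a correct check.

```python
from itertools import groupby


def has_duplicates_adjacent_only(dims):
    # Emulates the buggy check: collapsing adjacent equal elements and
    # comparing lengths misses duplicates that are not next to each other.
    collapsed = [key for key, _ in groupby(dims)]
    return len(collapsed) != len(dims)


def has_duplicates(dims):
    # Correct check: a set removes duplicates regardless of position.
    return len(set(dims)) != len(dims)


print(has_duplicates_adjacent_only((0, 1, 0)))  # False (duplicate missed)
print(has_duplicates((0, 1, 0)))                # True
```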

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44307

Reviewed By: mrshenli

Differential Revision: D23598311

Pulled By: zou3519

fbshipit-source-id: fd6cc43877c42bb243cfa85341c564b6c758a1bf

Summary:

This PR fixes three OpInfo-related bugs and moves some functions from TestTorchMathOps to be tested using the OpInfo pattern. The bugs are:

- A skip test path in test_ops.py incorrectly formatted its string argument

- Decorating the tests in common_device_type.py was incorrectly always applying decorators to the original test, not the op-specific variant of the test. This could cause the same decorator to be applied multiple times, overriding past applications.

- make_tensor was incorrectly constructing tensors in some cases

The functions moved are:

- asin

- asinh

- sinh

- acosh

- tan

- atan

- atanh

- tanh

- log

- log10

- log1p

- log2

In a follow-up PR more or all of the remaining functions in TestTorchMathOps will be refactored as OpInfo-based tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44277

Reviewed By: mrshenli, ngimel

Differential Revision: D23617361

Pulled By: mruberry

fbshipit-source-id: edb292947769967de9383f6a84eb327f027509e0

Summary:

This PR fixes three OpInfo-related bugs and moves some functions from TestTorchMathOps to be tested using the OpInfo pattern. The bugs are:

- A skip test path in test_ops.py incorrectly formatted its string argument

- Decorating the tests in common_device_type.py was incorrectly always applying decorators to the original test, not the op-specific variant of the test. This could cause the same decorator to be applied multiple times, overriding past applications.

- make_tensor was incorrectly constructing tensors in some cases

The functions moved are:

- asin

- asinh

- sinh

- acosh

- tan

- atan

- atanh

- tanh

- log

- log10

- log1p

- log2

In a follow-up PR more or all of the remaining functions in TestTorchMathOps will be refactored as OpInfo-based tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44277

Reviewed By: ngimel

Differential Revision: D23568330

Pulled By: mruberry

fbshipit-source-id: 03e69fccdbfd560217c34ce4e9a5f20e10d05a5e

Summary:

1) Ports nonzero from THC to ATen.

2) Replaces most thrust uses with cub, to avoid synchronization and to improve performance. There is still one necessary synchronization point: communicating the number of nonzero elements from GPU to CPU.

3) Slightly changes the algorithm: we now first compute the number of nonzeros and then allocate a correctly sized output, instead of allocating a full-sized output (as was done before) to account for the possibility that all elements are nonzero.

4) Unfortunately, since the last transforms are still done with thrust, 2) is slightly beside the point; however, it is a step towards a future without thrust.

5) Hard-limits the number of elements in the input tensor to MAX_INT. The previous implementation allocated a Long tensor of size ndim*nelements, which would be at least 16 GB for a tensor with MAX_INT elements, so it is reasonable to say that larger tensors could not be used anyway.
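The count-then-allocate strategy from 3) can be sketched sequentially in plain Python for a row-major (contiguous) input. This ignores the cub/thrust details entirely and the function name is hypothetical.

```python
def nonzero(flat, shape):
    """Two-pass nonzero over a row-major buffer `flat` with the given `shape`."""
    ndim = len(shape)
    # Pass 1: count nonzeros so the output can be allocated at its exact size
    # (count x ndim) rather than the worst case (numel x ndim).
    count = sum(1 for v in flat if v != 0)
    out = [[0] * ndim for _ in range(count)]
    # Pass 2: write the multi-dimensional index of each nonzero element.
    row = 0
    for linear, v in enumerate(flat):
        if v != 0:
            rem = linear
            for d in reversed(range(ndim)):  # unravel the linear index
                out[row][d] = rem % shape[d]
                rem //= shape[d]
            row += 1
    return out


print(nonzero([0, 1, 0, 2, 3, 0], (2, 3)))  # [[0, 1], [1, 0], [1, 1]]
```

For the memory figure in 5): 2**31 elements times 8 bytes per int64 index is 2**34 bytes, i.e. 16 GiB even for a 1-D input.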

Benchmarking is done on tensors in which approximately half of the elements are nonzero.

<details><summary>Benchmarking script</summary>

<p>

```
import torch
from torch.utils._benchmark import Timer
from torch.utils._benchmark import Compare
import sys

device = "cuda"
results = []
for numel in (1024 * 128,):  # results below also use 1024 * 1024 and 1024 * 1024 * 128
    inp = torch.randint(2, (numel,), device=device, dtype=torch.float)
    for ndim in range(2, 3):  # results below use range(1, 4)
        if ndim == 1:
            shape = (numel,)
        elif ndim == 2:
            shape = (1024, numel // 1024)
        else:
            shape = (1024, 128, numel // 1024 // 128)
        inp = inp.reshape(shape)
        repeats = 3
        timer = Timer(stmt="torch.nonzero(inp, as_tuple=False)", label="Nonzero",
                      sub_label=f"number of elts {numel}",
                      description=f"ndim {ndim}", globals=globals())
        for i in range(repeats):
            results.append(timer.blocked_autorange())
        print(f"\rnumel {numel} ndim {ndim}", end="")
        sys.stdout.flush()

comparison = Compare(results)
comparison.print()
```

</p>

</details>

### Results

Before:

```
[--------------------------- Nonzero ---------------------------]
                                |  ndim 1  |   ndim 2  |   ndim 3
1 threads: ------------------------------------------------------
      number of elts 131072     |    55.2  |     71.7  |     90.5
      number of elts 1048576    |   113.2  |    250.7  |    497.0
      number of elts 134217728  |  8353.7  |  23809.2  |  54602.3

Times are in microseconds (us).
```

After:

```
[-------------------------- Nonzero --------------------------]
                                |  ndim 1  |  ndim 2  |  ndim 3
1 threads: ----------------------------------------------------
      number of elts 131072     |    48.6  |    79.1  |    90.2
      number of elts 1048576    |    64.7  |   134.2  |   161.1
      number of elts 134217728  |  3748.8  |  7881.3  |  9953.7

Times are in microseconds (us).
```

There's a real regression for small 2D tensors due to the added work of computing the number of nonzero elements; however, for other sizes there are significant gains, and memory requirements are drastically lower. Perf gains would be even larger for tensors with fewer nonzeros.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44259

Reviewed By: izdeby

Differential Revision: D23581955

Pulled By: ngimel

fbshipit-source-id: 0b99a767fd60d674003d83f0848dc550d7a363dc

Summary:

When var and std are called without args (other than unbiased) they currently call into TH or THC. This PR:

- Removes the THC var_all and std_all functions and updates CUDA var and std to use the ATen reduction

- Fixes var's docs, which listed its arguments in the incorrect order

- Adds new tests comparing var and std with their NumPy counterparts

Performance appears to have improved as a result of this change. I ran experiments on 1D tensors, 1D tensors with every other element viewed ([::2]), 2D tensors and 2D transposed tensors. Some notable datapoints:

- torch.randn((8000, 8000))
  - var measured 0.0022215843200683594s on CUDA before the change
  - var measured 0.0020322799682617188s on CUDA after the change
- torch.randn((8000, 8000)).T
  - var measured 0.015128850936889648s on CUDA before the change
  - var measured 0.001912832260131836s on CUDA after the change
- torch.randn(8000 ** 2)
  - std measured 0.11031460762023926s on CUDA before the change
  - std measured 0.0017833709716796875s on CUDA after the change

Timings for var and std are, as expected, similar.

On the CPU, however, the performance change from making the analogous update was more complicated, and ngimel and I decided not to remove CPU var_all and std_all. ngimel wrote the following script that showcases how single-threaded CPU inference would suffer from this change:

```
import torch
import numpy as np
from torch.utils._benchmark import Timer
from torch.utils._benchmark import Compare
import sys

base = 8
multiplier = 1

def stdfn(a):
    meanv = a.mean()
    ac = a - meanv
    return torch.sqrt(((ac * ac).sum()) / a.numel())

results = []
num_threads = 1
for _ in range(7):
    size = base * multiplier
    input = torch.randn(size)
    tasks = [("torch.var(input)", "torch_var"),
             ("torch.var(input, dim=0)", "torch_var0"),
             ("stdfn(input)", "stdfn"),
             ("torch.sum(input, dim=0)", "torch_sum0")
             ]
    timers = [Timer(stmt=stmt, num_threads=num_threads, label="Index", sub_label=f"{size}",
                    description=label, globals=globals()) for stmt, label in tasks]
    repeats = 3
    for i, timer in enumerate(timers * repeats):
        results.append(
            timer.blocked_autorange()
        )
        print(f"\r{i + 1} / {len(timers) * repeats}", end="")
        sys.stdout.flush()
    multiplier *= 10
print()
comparison = Compare(results)
comparison.print()
```

The TH timings using this script on my devfair are:

```
[------------------------------ Index ------------------------------]
               |  torch_var  |  torch_var0  |   stdfn   |  torch_sum0
1 threads: ----------------------------------------------------------
      8        |      16.0   |       5.6    |     40.9  |       5.0
      80       |      15.9   |       6.1    |     41.6  |       4.9
      800      |      16.7   |      12.0    |     42.3  |       5.0
      8000     |      27.2   |      72.7    |     51.5  |       6.2
      80000    |     129.0   |     715.0    |    133.0  |      18.0
      800000   |    1099.8   |    6961.2    |    842.0  |     112.6
      8000000  |   11879.8   |   68948.5    |  20138.4  |    1750.3
```

and the ATen timings are:

```
[------------------------------ Index ------------------------------]
               |  torch_var  |  torch_var0  |   stdfn   |  torch_sum0
1 threads: ----------------------------------------------------------
      8        |       4.3   |       5.4    |     41.4  |       5.4
      80       |       4.9   |       5.7    |     42.6  |       5.4
      800      |      10.7   |      11.7    |     43.3  |       5.5
      8000     |      69.3   |      72.2    |     52.8  |       6.6
      80000    |     679.1   |     676.3    |    129.5  |      18.1
      800000   |    6770.8   |    6728.8    |    819.8  |     109.7
      8000000  |   65928.2   |   65538.7    |  19408.7  |    1699.4
```

which demonstrates that performance is analogous to calling the existing var and std with `dim=0` on a 1D tensor. This would be a significant performance hit. Another simple script shows the performance is mixed when using multiple threads, too:

```
import torch
import time

# Benchmarking var and std, 1D with varying sizes
base = 8
multiplier = 1
op = torch.var
reps = 1000

for _ in range(7):
    size = base * multiplier
    t = torch.randn(size)
    elapsed = 0
    for _ in range(reps):
        start = time.time()
        op(t)
        end = time.time()
        elapsed += end - start
    multiplier *= 10
    print("Size: ", size)
    print("Avg. elapsed time: ", elapsed / reps)
```

```
var cpu TH vs ATen timings

Size:  8
Avg. elapsed time:  1.7853736877441406e-05 vs 4.9788951873779295e-06 (ATen wins)
Size:  80
Avg. elapsed time:  1.7803430557250977e-05 vs 6.156444549560547e-06 (ATen wins)
Size:  800
Avg. elapsed time:  1.8569469451904296e-05 vs 1.2302875518798827e-05 (ATen wins)
Size:  8000
Avg. elapsed time:  2.8756141662597655e-05 vs 6.97789192199707e-05 (TH wins)
Size:  80000
Avg. elapsed time:  0.00026622867584228516 vs 0.0002447957992553711 (ATen wins)
Size:  800000
Avg. elapsed time:  0.0010556647777557374 vs 0.00030616092681884767 (ATen wins)
Size:  8000000
Avg. elapsed time:  0.009990205764770508 vs 0.002938544034957886 (ATen wins)

std cpu TH vs ATen timings

Size:  8
Avg. elapsed time:  1.6681909561157225e-05 vs 4.659652709960938e-06 (ATen wins)
Size:  80
Avg. elapsed time:  1.699185371398926e-05 vs 5.431413650512695e-06 (ATen wins)
Size:  800
Avg. elapsed time:  1.768803596496582e-05 vs 1.1279821395874023e-05 (ATen wins)
Size:  8000
Avg. elapsed time:  2.7791500091552735e-05 vs 7.031106948852539e-05 (TH wins)
Size:  80000
Avg. elapsed time:  0.00018650460243225096 vs 0.00024368906021118164 (TH wins)
Size:  800000
Avg. elapsed time:  0.0010522041320800782 vs 0.0003039860725402832 (ATen wins)
Size:  8000000
Avg. elapsed time:  0.009976618766784668 vs 0.0029211788177490234 (ATen wins)
```

These results show the TH solution still performs better than the ATen solution with default threading for some sizes.

It seems like removing CPU var_all and std_all will require an improvement in ATen reductions. https://github.com/pytorch/pytorch/issues/40570 has been updated with this information.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43858

Reviewed By: zou3519

Differential Revision: D23498981

Pulled By: mruberry

fbshipit-source-id: 34bee046c4872d11c3f2ffa1b5beee8968b22050

Summary:

- test beta=0, self=nan

- test transposes

- fixes broadcasting of addmv

- not supporting tf32 yet, will do it in future PR together with other testing fixes

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43980

Reviewed By: mruberry

Differential Revision: D23507559

Pulled By: ngimel

fbshipit-source-id: 14ee39d1a0e13b9482932bede3fccb61fe6d086d

Summary:

- This test is very fast and very important, so it makes no sense to mark it as slowTest

- This test should also run on CUDA

- This test should check alpha and beta support

- This test should check `out=` support

- manual computation should use list instead of index_put because list is much faster

- precision for TF32 needs to be fixed. Will do it in future PR.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43831

Reviewed By: ailzhang

Differential Revision: D23435032

Pulled By: ngimel

fbshipit-source-id: d1b8350addf1e2fe180fdf3df243f38d95aa3f5a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44001

This is to align with the naming in numpy and in https://github.com/pytorch/pytorch/pull/43092

Test Plan:

```

python test/test_torch.py TestTorchDeviceTypeCPU.test_aminmax_cpu_float32

python test/test_torch.py TestTorchDeviceTypeCUDA.test_aminmax_cuda_float32

```

Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D23465298

fbshipit-source-id: b599035507156cefa53942db05f93242a21c8d06

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42894

Continuing the min_max kernel implementation, this PR adds the CPU path when a dim is specified. The next PR will replicate this for CUDA.

Note: after a discussion with ngimel, we are taking the fast path of calculating the values only and not the indices, since that is what is needed for quantization, and calculating indices would require support for reductions on 4 outputs, which is additional work. So the API doesn't fully match `min.dim` and `max.dim`.

Flexible on the name, let me know if something else is better.
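The values-only fused reduction can be sketched on a 2-D Python list: both accumulators are updated in a single pass over each reduced lane, and no indices are tracked. This is a hypothetical illustration that ignores NaN handling and is not the ATen kernel.

```python
def min_max_dim(rows, dim):
    """Hypothetical sketch: fused (min, max) values along `dim` of a 2-D list."""
    if dim == 1:
        lanes = rows                  # reduce within each row
    elif dim == 0:
        lanes = list(zip(*rows))      # reduce within each column
    else:
        raise ValueError("dim must be 0 or 1 for a 2-D input")
    mins, maxs = [], []
    for lane in lanes:
        lo = hi = lane[0]
        for v in lane[1:]:            # one pass updates both accumulators
            if v < lo:
                lo = v
            if v > hi:
                hi = v
        mins.append(lo)
        maxs.append(hi)
    return mins, maxs


print(min_max_dim([[3, 1, 2], [0, 5, 4]], dim=1))  # ([1, 0], [3, 5])
```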

Test Plan:

correctness:

```

python test/test_torch.py TestTorchDeviceTypeCPU.test_minmax_cpu_float32

```

performance: seeing a 49% speedup for min+max on tensors with shapes similar to what we care about for quantization observers (bench: https://gist.github.com/vkuzo/b3f24d67060e916128a51777f9b89326). For other shapes (more dims, different dim sizes, etc.), I've noticed a speedup as low as 20%, but we don't have a good use case to optimize that, so perhaps we can save that for a future PR.

Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D23086798

fbshipit-source-id: b24ce827d179191c30eccf31ab0b2b76139b0ad5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42868

Adds a CUDA kernel for the _min_max function.

Note: this is a re-submit of https://github.com/pytorch/pytorch/pull/41805, since it was faster to resubmit than to resurrect that one. Thanks to durumu for writing the original implementation!

Future PRs will add index support, docs, and hook this up to observers.

Test Plan:

```

python test/test_torch.py TestTorchDeviceTypeCUDA.test_minmax_cuda_float32

```

Basic benchmarking shows a 50% reduction in time to calculate min + max:

https://gist.github.com/vkuzo/b7dd91196345ad8bce77f2e700f10cf9

TODO

Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D23057766

fbshipit-source-id: 70644d2471cf5dae0a69343fba614fb486bb0891

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43270

`torch.conj` is a very commonly used operator for complex tensors, but it's mathematically a no-op for real tensors. Switching to TensorFlow-style gradients for complex tensors (as discussed in #41857) would involve adding `torch.conj()` to the backward definitions of many operators. To preserve autograd performance for real tensors and maintain NumPy compatibility for `torch.conj`, this PR updates `torch.conj()` so that it behaves the same as before for complex tensors but returns `self` (a view rather than a copy) for tensors of non-complex dtypes. The documentation states that the returned tensor for a real input shouldn't be mutated. We could perhaps return an immutable tensor in this case in the future, once that functionality is available (zdevito ezyang).
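A toy model of that contract on Python lists (hypothetical, not the tensor implementation): complex data gets a new conjugated container, while real data is returned as-is, which is exactly why mutating the result also mutates the input.

```python
def conj(values):
    """Hypothetical sketch of the conj semantics described above."""
    if any(isinstance(v, complex) for v in values):
        return [v.conjugate() for v in values]  # new container for complex data
    return values  # real data: mathematically a no-op, so no copy is made


real = [1.0, 2.0]
alias = conj(real)
alias[0] = 99.0  # mutating the result also mutates the input
print(real)      # [99.0, 2.0]
```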

Test Plan: Imported from OSS

Reviewed By: mruberry

Differential Revision: D23460493

Pulled By: anjali411

fbshipit-source-id: 3b3bf0af55423b77ff2d0e29f5d2c160291ae3d9

Summary:

Add a max/min operator that only returns values.

## Some important decisions to discuss

| **Question** | **Current State** |
|---------------------------------------|-------------------|
| Expose torch.max_values to python? | No |
| Remove max_values and only keep amax? | Yes |
| Should amax support named tensors? | Not in this PR |

## Numpy compatibility

Reference: https://numpy.org/doc/stable/reference/generated/numpy.amax.html

| Parameter | PyTorch Behavior |
|-----------|------------------|
| `axis`: None or int or tuple of ints, optional. Axis or axes along which to operate. By default, flattened input is used. If this is a tuple of ints, the maximum is selected over multiple axes, instead of a single axis or all the axes as before. | Named `dim`, behavior same as `torch.sum` (https://github.com/pytorch/pytorch/issues/29137) |
| `out`: ndarray, optional. Alternative output array in which to place the result. Must be of the same shape and buffer length as the expected output. | Same |
| `keepdims`: bool, optional. If this is set to True, the axes which are reduced are left in the result as dimensions with size one. With this option, the result will broadcast correctly against the input array. | Implemented as `keepdim` |
| `initial`: scalar, optional. The minimum value of an output element. Must be present to allow computation on empty slice. | Not implemented in this PR. Better to implement for all reductions in the future. |
| `where`: array_like of bool, optional. Elements to compare for the maximum. | Not implemented in this PR. Better to implement for all reductions in the future. |

**Note from numpy:**

> NaN values are propagated, that is if at least one item is NaN, the corresponding max value will be NaN as well. To ignore NaN values (MATLAB behavior), please use nanmax.

PyTorch has the same behavior
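NaN propagation has to be implemented deliberately: every comparison with NaN is false, so a naive running maximum such as Python's built-in `max` keeps or drops a NaN depending on where it appears. A plain-Python sketch of a propagating `amax` next to a NaN-ignoring `nanmax` (helper names modeled on the NumPy functions; not the PyTorch kernel):

```python
import math


def amax(values):
    """NaN-propagating maximum over a non-empty sequence of floats."""
    result = values[0]
    for v in values:
        if math.isnan(v):
            return math.nan  # any NaN makes the result NaN
        if v > result:
            result = v
    return result


def nanmax(values):
    """Ignores NaNs (the MATLAB-style behavior mentioned in the NumPy note)."""
    kept = [v for v in values if not math.isnan(v)]
    return max(kept) if kept else math.nan


data = [1.0, math.nan, 2.0]
print(max(data))     # 2.0 (built-in max silently drops this NaN)
print(amax(data))    # nan
print(nanmax(data))  # 2.0
```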

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43092

Reviewed By: ngimel

Differential Revision: D23360705

Pulled By: mruberry

fbshipit-source-id: 5bdeb08a2465836764a5a6fc1a6cc370ae1ec09d

Summary:

Related to https://github.com/pytorch/pytorch/issues/38349

Implement NumPy-like functions `maximum` and `minimum`.

The `maximum` and `minimum` functions compare input tensors element-wise, returning a new tensor with the element-wise maxima/minima.

If one of the elements being compared is NaN, that element is returned. Neither `maximum` nor `minimum` supports complex inputs.

This PR also re-dispatches the binary overloads of `torch.max` and `torch.min` to `torch.maximum` and `torch.minimum`.
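The element-wise rule can be sketched on equal-length Python lists. Broadcasting is deliberately left out, and the helper names are hypothetical rather than PyTorch internals.

```python
import math


def _elementwise(a, b, pick):
    # Equal-length inputs only; broadcasting is out of scope for this sketch.
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    out = []
    for x, y in zip(a, b):
        if isinstance(x, complex) or isinstance(y, complex):
            raise TypeError("complex inputs are not supported")
        if math.isnan(x):
            out.append(x)  # a NaN operand is returned as-is
        elif math.isnan(y):
            out.append(y)
        else:
            out.append(pick(x, y))
    return out


def maximum(a, b):
    return _elementwise(a, b, max)


def minimum(a, b):
    return _elementwise(a, b, min)


print(maximum([1.0, math.nan, 3.0], [2.0, 0.0, math.nan]))  # [2.0, nan, nan]
print(minimum([1.0, 5.0], [2.0, 4.0]))                      # [1.0, 4.0]
```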

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42579

Reviewed By: mrshenli

Differential Revision: D23153081

Pulled By: mruberry

fbshipit-source-id: 803506c912440326d06faa1b71964ec06775eac1

Summary:

These tests fail on one of my systems, which does not have LAPACK.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43566

Reviewed By: ZolotukhinM

Differential Revision: D23325378

Pulled By: mruberry

fbshipit-source-id: 5d795e460df0a2a06b37182d3d4084d8c5c8e751

Summary:

As part of our continued refactoring of test_torch.py, this takes tests for tensor creation ops like torch.eye, torch.randint, and torch.ones_like and puts them in test_tensor_creation_ops.py. There are three test classes in the new test suite: TestTensorCreation, TestRandomTensorCreation, TestLikeTensorCreation. TestViewOps and tests for construction of tensors from NumPy arrays have been left in test_torch.py. These might be refactored separately into test_view_ops.py and test_numpy_interop.py in the future.

Most of the tests ported from test_torch.py were left as is or received a signature change to make them nominally "device generic." Future work will need to review test coverage and update the tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43104

Reviewed By: ngimel

Differential Revision: D23280358

Pulled By: mruberry

fbshipit-source-id: 469325dd1a734509dd478cc7fe0413e276ffb192

Summary:

This PR:

- ports the tests in TestTorchMathOps to test_unary_ufuncs.py

- removes duplicative tests for the tested unary ufuncs from test_torch.py

- adds a new test, test_reference_numerics, that validates the behavior of our unary ufuncs vs. reference implementations on empty, scalar, 1D, and 2D tensors that are contiguous, discontiguous, and that contain extremal values, for every dtype the unary ufunc supports

- adds support for skipping tests by regex; this is used to make the test suite pass on Windows, macOS, and ROCm builds, which have a variety of issues, and on Linux builds (see https://github.com/pytorch/pytorch/issues/42952)

- adds a new OpInfo helper, `supports_dtype`, to facilitate test writing

- extends unary ufunc op info to include reference, domain, and extremal value handling information

- adds OpInfos for `torch.acos` and `torch.sin`

These improvements reveal that our testing has been incomplete on several systems, especially with larger float values and complex values, and several TODOs have been added for follow-up investigations. Luckily, when writing tests that cover many ops, we can afford to spend additional time crafting the tests and ensuring coverage.

Follow-up PRs will:

- refactor TestTorchMathOps into test_unary_ufuncs.py

- continue porting tests from test_torch.py to test_unary_ufuncs.py (where appropriate)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42965

Reviewed By: pbelevich

Differential Revision: D23238083

Pulled By: mruberry

fbshipit-source-id: c6be317551453aaebae9d144f4ef472f0b3d08eb

Summary:

Add a ComplexHalf case to `toValueType`, which fixes the logic by which `view_as_real` and `view_as_complex` slice a complex tensor into its floating-point counterpart; this logic is used to generate tensors of random complex values, see:

018b4d7abb/aten/src/ATen/native/DistributionTemplates.h (L200)

Also add ability to convert python complex object to `c10::complex<at::Half>`

Add `torch.half` and `torch.complex32` to the list of `test_randn` dtypes

Fixes https://github.com/pytorch/pytorch/issues/43143

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43279

Reviewed By: mrshenli

Differential Revision: D23230296

Pulled By: malfet

fbshipit-source-id: b4bb66c4c81dd867e72ab7c4563d73f6a4d80a44

Summary:

Fixes https://github.com/pytorch/pytorch/issues/41314 among other things.

This PR streamlines layout propagation logic in TensorIterator and removes almost all cases of channels-last hardcoding. The new rules and changes are as follows:

1) behavior of undefined `output` and defined output of the wrong (e.g. 0) size is always the same (before this PR the behavior was divergent)

2) in obvious cases (unary operation on memory-dense tensors, binary operations on memory-dense tensors with the same layout) strides are propagated (before propagation was inconsistent) (see footnote)

3) in other cases the output permutation is obtained as the inverse permutation of sorting inputs by strides. Sorting is done with a comparator obeying the following rules: strides of broadcasted dimensions are set to 0, and 0 compares equal to anything. Strides of non-broadcasted dimensions (including dimensions of size `1`) participate in sorting. Precedence is given to the first input; in case of a tie in the first input, the corresponding dimensions are considered first, and if that does not indicate that a swap is needed, strides of the same dimensions in subsequent inputs are considered. See changes in `reorder_dimensions` and `compute_strides`. Note that inspecting the dimensions of the first input first allows us to better recover its permutation (we select this behavior because it more reliably propagates channels-last strides), but in some rare cases it could result in a worse traversal order for the second tensor.

These rules are enough to recover previously hard-coded behavior related to channels last, so all existing tests are passing.

In general, these rules will produce intuitive results, and in most cases permutation of the full size input (in case of broadcasted operation) will be recovered, or permutation of the first input (in case of same sized inputs) will be recovered, including cases with trivial (1) dimensions. As an example of the latter, the following tensor

```

x=torch.randn(2,1,3).permute(1,0,2)

```

will produce an output with the same strides (3,3,1) in binary operations with a 1d tensor. Another example is a tensor of size N1H1 that has strides `H,H,1,1` when contiguous and `H, 1, 1, 1` when channels-last. The output retains these strides in binary operations when a 1d tensor is broadcast against it.
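For a single, non-broadcast input, rule 3 can be approximated by a small stride-propagation sketch. The function name `propagate_strides` is hypothetical. It ignores broadcasting, multiple inputs, TensorIterator's actual comparator and the contiguous fast path described in the footnote. It breaks stride ties by placing the smaller dimension innermost, which is an assumption that happens to reproduce the examples above and in the table below.

```python
def propagate_strides(sizes, strides):
    """Hypothetical single-input sketch of output stride propagation.

    Orders dimensions from innermost to outermost by (stride, size), then lays
    out a dense output in that order. Broadcast (stride-0) dims are not handled.
    """
    ndim = len(sizes)
    # Python's sort is stable, so exact (stride, size) ties keep dim order.
    order = sorted(range(ndim), key=lambda d: (strides[d], sizes[d]))
    out = [0] * ndim
    running = 1
    for d in order:  # innermost dimension first
        out[d] = running
        running *= sizes[d]
    return tuple(out)


# x = torch.randn(2,1,3).permute(1,0,2): sizes (1, 2, 3), strides (3, 3, 1)
print(propagate_strides((1, 2, 3), (3, 3, 1)))
```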

Footnote: for ambiguous cases where all inputs are memory-dense and have the same physical layout that can nevertheless correspond to different permutations, such as NC11-sized physically contiguous tensors, a regular contiguous tensor is returned, and thus the permutation information of the input is lost (a channels-last NC11 input has strides `C, 1, C, C`, but the output will have strides `C, 1, 1, 1`). This behavior is unchanged from before and consistent with NumPy, but it still makes sense to change it. The blocker for doing so currently is the performance of `empty_strided`. Once it is on par with `empty`, we should be able to propagate layouts in these cases. For now, to avoid slowing down the common contiguous case, we default to contiguous.

The table below shows how in some cases current behavior loses permutation/stride information, whereas new behavior propagates permutation.

| code | old strides | new strides |
|---|---|---|
| #strided tensors<br>a=torch.randn(2,3,8)[:,:,::2].permute(2,0,1)<br>print(a.stride())<br>print(a.exp().stride())<br>print((a+a).stride())<br>out = torch.empty(0)<br>torch.add(a,a,out=out)<br>print(out.stride()) | (2, 24, 8) <br>(6, 3, 1) <br>(1, 12, 4) <br>(6, 3, 1) | (2, 24, 8)<br>(1, 12, 4)<br>(1, 12, 4)<br>(1, 12, 4) |
| #memory dense tensors<br>a=torch.randn(3,1,1).as_strided((3,1,1), (1,3,3))<br>print(a.stride(), (a+torch.randn(1)).stride())<br>a=torch.randn(2,3,4).permute(2,0,1)<br>print(a.stride())<br>print(a.exp().stride())<br>print((a+a).stride())<br>out = torch.empty(0)<br>torch.add(a,a,out=out)<br>print(out.stride()) | (1, 3, 3) (1, 1, 1)<br>(1, 12, 4)<br>(6, 3, 1)<br>(1, 12, 4)<br>(6, 3, 1) | (1, 3, 3) (1, 3, 3)<br>(1, 12, 4)<br>(1, 12, 4)<br>(1, 12, 4)<br>(1, 12, 4) |

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42922

Reviewed By: ezyang

Differential Revision: D23148204

Pulled By: ngimel

fbshipit-source-id: 670fb6188c7288e506e5ee488a0e11efc8442d1f

Summary:

https://github.com/pytorch/pytorch/issues/40980

I have a few questions about implementing the polygamma function, so I opened this PR before completing it.

1. Some existing code blocks were brought over from the Cephes library (and I did the same), with this notice:

```c
/*
 * The following function comes with the following copyright notice.
 * It has been released under the BSD license.
 *
 * Cephes Math Library Release 2.8: June, 2000
 * Copyright 1984, 1987, 1992, 2000 by Stephen L. Moshier
 */
```

Is it okay for me to use Cephes code with this same copyright notice (which already appears in the PyTorch codebase)?

2. As far as I know, there is no linting for the internal ATen library (I read https://github.com/pytorch/pytorch/blob/master/CONTRIBUTING.md).

How can I make sure my code follows the appropriate guidelines for this library?

3. There are already digamma and trigamma functions.

digamma is still needed; however, trigamma becomes redundant once polygamma is added.

Is it okay for trigamma to stay, or should it be removed?

BTW, the CPU version now works fine with 3rd-order polygamma (which is what we need for variational inference with beta/gamma distributions), and I'm going to finish the GPU version soon.
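For reference, here is a minimal stdlib-only sketch of polygamma for n >= 1. It uses the identity psi^(n)(x) = (-1)^(n+1) n! zeta(n+1, x), where zeta is the Hurwitz zeta function. The sketch truncates the zeta series and estimates the remaining tail with the first Euler-Maclaurin correction terms. It is an illustration under those assumptions, not the kernel added in this PR. It also shows why trigamma is redundant once polygamma exists: `polygamma(1, x)` is trigamma.

```python
import math


def polygamma(n: int, x: float, terms: int = 20) -> float:
    """Approximate the n-th derivative of digamma for n >= 1 and x > 0."""
    if n < 1 or x <= 0:
        raise ValueError("this sketch only handles n >= 1 and x > 0")
    s = n + 1  # Hurwitz zeta exponent
    # Direct part of zeta(s, x) = sum_{k>=0} (x + k)^-s
    head = sum((x + k) ** -s for k in range(terms))
    # Euler-Maclaurin estimate of the tail sum_{k>=terms} (x + k)^-s
    a = x + terms
    tail = (
        a ** (1 - s) / (s - 1)                            # integral from `terms` to infinity
        + 0.5 * a ** -s                                   # half of the first tail term
        + s / 12.0 * a ** (-s - 1)                        # B2 correction
        - s * (s + 1) * (s + 2) / 720.0 * a ** (-s - 3)   # B4 correction
    )
    sign = 1.0 if n % 2 == 1 else -1.0                    # (-1)^(n+1)
    return sign * math.factorial(n) * (head + tail)


print(polygamma(1, 1.0), math.pi ** 2 / 6)  # trigamma(1) = pi^2 / 6
```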

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42499

Reviewed By: gchanan

Differential Revision: D23110016

Pulled By: albanD

fbshipit-source-id: 246f4c2b755a99d9e18a15fcd1a24e3df5e0b53e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42563

Moved logic for non-named unflatten from python nn module to aten/native to be reused by the nn module later. Fixed some inconsistencies with doc and code logic.
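The shape logic of a non-named unflatten can be sketched in a few lines. The helper `unflatten_shape` is hypothetical, and it only models shapes: dimension `dim` is replaced by `sizes`, whose product must equal the original size of that dimension. Negative dims are normalized first.

```python
from math import prod


def unflatten_shape(shape, dim, sizes):
    """Hypothetical helper: shape obtained by splitting `shape[dim]` into `sizes`."""
    ndim = len(shape)
    if not -ndim <= dim < ndim:
        raise IndexError(f"dim {dim} out of range for a {ndim}-d shape")
    dim %= ndim  # normalize negative dims
    if prod(sizes) != shape[dim]:
        raise ValueError(f"sizes {tuple(sizes)} do not multiply to {shape[dim]}")
    return tuple(shape[:dim]) + tuple(sizes) + tuple(shape[dim + 1:])


print(unflatten_shape((2, 12), 1, (3, 4)))  # (2, 3, 4)
```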

Test Plan: Imported from OSS

Reviewed By: zou3519

Differential Revision: D23030301

Pulled By: heitorschueroff

fbshipit-source-id: 7c804ed0baa5fca960a990211b8994b3efa7c415

Summary:

Addresses some comments that were left unaddressed after PR https://github.com/pytorch/pytorch/issues/41377 was merged:

* Use `check_output` instead of `Popen` to run each subprocess sequentially

* Use f-strings rather than old python format string style

* Provide environment variables to subprocess through the `env` kwarg

* Check for correct error behavior inside the subprocess, and raise another error if incorrect. Then the main process fails the test if any error is raised
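The testing pattern in these bullets can be sketched with the standard library alone. The sketch covers sequential `check_output` calls, an `env` kwarg, and an error check inside the child that raises a different error on mismatch. The child's validity rule is a placeholder standing in for the real torch call, and `CHILD` and `run_case` are hypothetical names.

```python
import os
import subprocess
import sys

# Child program: stands in for "call the API, then check that it errored
# exactly when it should". The validity rule is a placeholder, not torch's.
CHILD = r"""
import os, sys
expect_error = sys.argv[1] == "1"
value = os.environ.get("CUBLAS_WORKSPACE_CONFIG")
got_error = value not in (":4096:8", ":16:8")
if got_error != expect_error:
    # Wrong behavior: raise a different error so the child exits non-zero.
    raise AssertionError(f"expected error={expect_error}, got error={got_error} for {value!r}")
print("ok")
"""


def run_case(config, expect_error):
    """Run one case in a fresh interpreter and return its stripped output."""
    env = dict(os.environ)  # start from the parent's environment
    env.pop("CUBLAS_WORKSPACE_CONFIG", None)
    if config is not None:
        env["CUBLAS_WORKSPACE_CONFIG"] = config
    # check_output blocks until the child exits, so cases run one at a time,
    # and raises CalledProcessError on a non-zero exit, failing the caller.
    return subprocess.check_output(
        [sys.executable, "-c", CHILD, "1" if expect_error else "0"],
        env=env,
        stderr=subprocess.STDOUT,
        text=True,
    ).strip()


for config, expect_error in [(":4096:8", False), (":16:8", False), (None, True)]:
    print(config, run_case(config, expect_error))
```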

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42627

Reviewed By: malfet

Differential Revision: D22969231

Pulled By: ezyang

fbshipit-source-id: 38d5f3f0d641c1590a93541a5e14d90c2e20acec

Summary:

Previously, `at::native::embedding` implicitly assumed that the `weight` argument would be 1-D or greater. Given a 0-D tensor, it would segfault. This change makes it throw a RuntimeError instead.

Fixes https://github.com/pytorch/pytorch/issues/41780

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42550

Reviewed By: smessmer

Differential Revision: D23040744

Pulled By: albanD

fbshipit-source-id: d3d315850a5ee2d2b6fcc0bdb30db2b76ffffb01

Summary:

Per title. Also updates our guidance for adding aliases to clarify interned_string and method_test requirements. The alias is tested by extending test_clamp to also test clip.
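An alias is simply a second name bound to the same callable, so one set of checks can exercise both names. The scalar `clamp` below is a sketch that assumes `min_value <= max_value`; the loop mirrors the idea of extending a clamp test to cover clip as well.

```python
def clamp(x, min_value, max_value):
    """Scalar sketch of clamp, assuming min_value <= max_value."""
    return min(max(x, min_value), max_value)


clip = clamp  # alias: same function object under a NumPy-compatible name

# Run identical checks through both names.
for fn in (clamp, clip):
    assert fn(5, 0, 3) == 3
    assert fn(-1, 0, 3) == 0
    assert fn(2, 0, 3) == 2
```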

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42770

Reviewed By: ngimel

Differential Revision: D23020655

Pulled By: mruberry

fbshipit-source-id: f1d8e751de9ac5f21a4f95d241b193730f07b5dc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42383

Test Plan - Updated existing tests to run for complex dtypes as well.

Also added tests for `torch.addmm` and `torch.baddbmm`.
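Complex inputs need no special formula. The documented semantics are `out = beta * input + alpha * (mat1 @ mat2)` for `addmm`, applied per batch for `baddbmm`. The nested-list reference below is a sketch that is handy for checking complex results by hand. One caveat: per the PyTorch docs, `input` is ignored entirely when `beta` is 0, and this sketch does not special-case that.

```python
def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def addmm(inp, mat1, mat2, beta=1, alpha=1):
    """beta * inp + alpha * (mat1 @ mat2); works for complex entries too."""
    p = matmul(mat1, mat2)
    return [[beta * inp[i][j] + alpha * p[i][j] for j in range(len(p[0]))]
            for i in range(len(p))]


def baddbmm(inp, batch1, batch2, beta=1, alpha=1):
    """addmm applied independently to each batch element."""
    return [addmm(inp[b], batch1[b], batch2[b], beta, alpha) for b in range(len(batch1))]


# 3 + 1j * ([1, 1j] @ [[1j], [1]]) = 3 + 1j * 2j = 1
print(addmm([[3]], [[1, 1j]], [[1j], [1]], alpha=1j))
```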

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D22960339

Pulled By: anjali411

fbshipit-source-id: 0805f21caaa40f6e671cefb65cef83a980328b7d

Summary:

For CUDA >= 10.2, the `CUBLAS_WORKSPACE_CONFIG` environment variable must be set to either `:4096:8` or `:16:8` to ensure deterministic CUDA stream usage. This PR adds some logic inside `torch.set_deterministic()` to raise an error if this environment variable is not set properly and CUDA >= 10.2.
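The rule can be sketched as a pure function. The names `cublas_workspace_config_ok` and `check_deterministic_cublas` are hypothetical, and so is the error message. The real check lives inside `torch.set_deterministic()`, and its wording may differ.

```python
import os

# The two values listed above for CUDA >= 10.2.
VALID_CUBLAS_WORKSPACE_CONFIGS = (":4096:8", ":16:8")


def cublas_workspace_config_ok(cuda_version, environ):
    """Hypothetical check; cuda_version is a (major, minor) tuple, or None without CUDA."""
    if cuda_version is None or cuda_version < (10, 2):
        return True  # the requirement only applies to CUDA >= 10.2
    return environ.get("CUBLAS_WORKSPACE_CONFIG") in VALID_CUBLAS_WORKSPACE_CONFIGS


def check_deterministic_cublas(cuda_version, environ=os.environ):
    """Raise when the environment variable is missing or has an unsupported value."""
    if not cublas_workspace_config_ok(cuda_version, environ):
        raise RuntimeError(
            "Deterministic behavior with CUDA >= 10.2 requires "
            "CUBLAS_WORKSPACE_CONFIG=:4096:8 or CUBLAS_WORKSPACE_CONFIG=:16:8"
        )
```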

Issue https://github.com/pytorch/pytorch/issues/15359

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41377

Reviewed By: malfet

Differential Revision: D22758459

Pulled By: ezyang

fbshipit-source-id: 4b96f1e9abf85d94ba79140fd927bbd0c05c4522

Summary:

Fixes https://github.com/pytorch/pytorch/issues/42418.

The problem was that the non-contiguous batched matrices were passed to `gemmStridedBatched`.

The following code fails on master and works with the proposed patch:

```python

import torch

x = torch.tensor([[1., 2, 3], [4., 5, 6]], device='cuda:0')

c = torch.as_strided(x, size=[2, 2, 2], stride=[3, 1, 1])

torch.einsum('...ab,...bc->...ac', c, c)

```
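To see what this `as_strided` view contains, here is a stdlib-only sketch that materializes it by hand and computes a reference batched product. Both inner strides are 1, so consecutive rows of each matrix overlap in memory. A BLAS-style leading dimension must be at least the row length, and a row stride of 1 with two columns does not meet that. This is offered as an explanatory assumption; the PR text itself only says the matrices were non-contiguous.

```python
def as_strided(storage, size, stride, offset=0):
    """Materialize a 3-D view: element [i][j][k] reads storage[offset + i*s0 + j*s1 + k*s2]."""
    s0, s1, s2 = stride
    return [[[storage[offset + i * s0 + j * s1 + k * s2] for k in range(size[2])]
             for j in range(size[1])]
            for i in range(size[0])]


def bmm(a, b):
    """Reference batched matmul on nested lists: out[n] = a[n] @ b[n]."""
    return [[[sum(x[r][t] * y[t][c] for t in range(len(y))) for c in range(len(y[0]))]
             for r in range(len(x))]
            for x, y in zip(a, b)]


storage = [1., 2., 3., 4., 5., 6.]  # the flat storage of x from the example above
c = as_strided(storage, [2, 2, 2], [3, 1, 1])
# c == [[[1, 2], [2, 3]], [[4, 5], [5, 6]]]: adjacent rows share an element.
expected = bmm(c, c)  # what torch.einsum('...ab,...bc->...ac', c, c) should equal
print(expected)
```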

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42425

Reviewed By: glaringlee

Differential Revision: D22925266

Pulled By: ngimel

fbshipit-source-id: a72d56d26c7381b7793a047d76bcc5bd45a9602c

Summary:

A segfault happens when one tries to deallocate an uninitialized generator.

Make `THPGenerator_dealloc` UBSAN-safe by replacing the implicit cast in the struct definition with a `reinterpret_cast`.

Add `TestTorch.test_invalid_generator_raises` that validates that Generator created on invalid device is handled correctly

Fixes https://github.com/pytorch/pytorch/issues/42281

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42510

Reviewed By: pbelevich

Differential Revision: D22917469

Pulled By: malfet

fbshipit-source-id: 5eaa68eef10d899ee3e210cb0e1e92f73be75712

Summary:

A segfault happens when one tries to deallocate an uninitialized generator.

Add `TestTorch.test_invalid_generator_raises` that validates that Generator created on invalid device is handled correctly

Fixes https://github.com/pytorch/pytorch/issues/42281

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42490

Reviewed By: seemethere

Differential Revision: D22908795

Pulled By: malfet

fbshipit-source-id: c5b6a35db381738c0fc984aa54e5cab5ef2cbb76

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38697

Benchmark (gcc 8.3, Debian Buster, turbo off, Release build, Intel(R) Xeon(R) E-2136, parallelization using OpenMP):

```python
import timeit

for dtype in ('torch.double', 'torch.float', 'torch.uint8', 'torch.int8', 'torch.int16', 'torch.int32', 'torch.int64'):
    for n, t in [(40_000, 50000), (400_000, 5000)]:
        print(f'torch.arange(0, {n}, dtype={dtype}) for {t} times')
        print(timeit.timeit(f'torch.arange(0, {n}, dtype={dtype})', setup='import torch', number=t))
```

Timings in seconds (total for the stated number of calls), before and after this change:

| Call | Calls | Before (s) | After (s) |
|---|---|---|---|
| `torch.arange(0, 40000, dtype=torch.double)` | 50000 | 1.587841397995362 | 0.6895066159922862 |
| `torch.arange(0, 400000, dtype=torch.double)` | 5000 | 0.47885190199303906 | 0.16820953000569716 |
| `torch.arange(0, 40000, dtype=torch.float)` | 50000 | 1.5519152240012772 | 1.3640095089940587 |
| `torch.arange(0, 400000, dtype=torch.float)` | 5000 | 0.4733216500026174 | 0.39255041000433266 |
| `torch.arange(0, 40000, dtype=torch.uint8)` | 50000 | 1.426058754004771 | 0.3422072059911443 |
| `torch.arange(0, 400000, dtype=torch.uint8)` | 5000 | 0.43596178699226584 | 0.0605111670010956 |
| `torch.arange(0, 40000, dtype=torch.int8)` | 50000 | 1.4289699140063021 | 0.3449254590086639 |
| `torch.arange(0, 400000, dtype=torch.int8)` | 5000 | 0.43451592899509706 | 0.06115841199061833 |
| `torch.arange(0, 40000, dtype=torch.int16)` | 50000 | 0.5714442400058033 | 0.7745441729930462 |
| `torch.arange(0, 400000, dtype=torch.int16)` | 5000 | 0.14837959500437137 | 0.22106765500211623 |
| `torch.arange(0, 40000, dtype=torch.int32)` | 50000 | 0.5964003179979045 | 0.720475220005028 |
| `torch.arange(0, 400000, dtype=torch.int32)` | 5000 | 0.15676555599202402 | 0.20230313099455088 |
| `torch.arange(0, 40000, dtype=torch.int64)` | 50000 | 0.8390555799996946 | 0.8144655400101328 |
| `torch.arange(0, 400000, dtype=torch.int64)` | 5000 | 0.23184613398916554 | 0.23762561299372464 |

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D22291236

Pulled By: VitalyFedyunin

fbshipit-source-id: 134dd08b77b11e631d914b5500ee4285b5d0591e

Summary:

`abs` doesn't have an unsigned overload across all compilers, so applying `abs` to a `uint8_t` can be ambiguous: https://en.cppreference.com/w/cpp/numeric/math/abs

This may cause unexpected issues when the input is uint8 and greater than 128. For example, on MSVC, applying `std::abs` to an `unsigned char` variable

```c++
#include <cmath>

unsigned char a(unsigned char x) {
  return std::abs(x);
}
```

gives the following warning:

warning C4244: 'return': conversion from 'int' to 'unsigned char', possible loss of data

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42254

Reviewed By: VitalyFedyunin

Differential Revision: D22860505

Pulled By: mruberry

fbshipit-source-id: 0076d327bb6141b2ee94917a1a21c22bd2b7f23a

Summary:

Fixes https://github.com/pytorch/pytorch/issues/40986.

TensorIterator's test for a CUDA kernel getting too many CPU scalar inputs was too permissive. This update limits the check to not consider outputs and to only be performed if the kernel can support CPU scalars.

A test is added to verify the appropriate error message is thrown in a case where the old error message was thrown previously.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42360

Reviewed By: ngimel

Differential Revision: D22868536

Pulled By: mruberry

fbshipit-source-id: 2bc8227978f8f6c0a197444ff0c607aeb51b0671

Summary:

**BC-Breaking Note:**

BC breaking changes in the case where keepdim=True. Before this change, when calling `torch.norm` with keepdim=True and p='fro' or p=number, leaving all other optional arguments as their default values, the keepdim argument would be ignored. Also, any time `torch.norm` was called with p='nuc', the result would have one fewer dimension than the input, and the dimensions could be out of order depending on which dimensions were being reduced. After the change, for each of these cases, the result has the same number and order of dimensions as the input.
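The shape contract described in this note can be sketched independently of any norm computation. With `keepdim=True`, every reduced dimension stays in place with size 1, including both dimensions reduced by a matrix norm such as `p='nuc'`. With `keepdim=False`, the reduced dimensions are dropped. `reduced_shape` is a hypothetical helper.

```python
def reduced_shape(shape, dims, keepdim):
    """Hypothetical helper: output shape of a reduction over `dims`."""
    ndim = len(shape)
    dims = {d % ndim for d in dims}  # normalize negative dims
    if keepdim:
        # Same number and order of dimensions as the input; reduced ones become 1.
        return tuple(1 if d in dims else s for d, s in enumerate(shape))
    return tuple(s for d, s in enumerate(shape) if d not in dims)


# A matrix norm over dims (0, 2) of a (2, 3, 4) input:
print(reduced_shape((2, 3, 4), (0, 2), keepdim=True))   # (1, 3, 1)
print(reduced_shape((2, 3, 4), (0, 2), keepdim=False))  # (3,)
```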

**PR Summary:**

* Fix keepdim behavior

* Throw descriptive errors for unsupported sparse norm args

* Increase unit test coverage for these cases and for complex inputs

These changes were taken from part of PR https://github.com/pytorch/pytorch/issues/40924. That PR is not going to be merged because it overrides `torch.norm`'s interface, which we want to avoid. But these improvements are still useful.

Issue https://github.com/pytorch/pytorch/issues/24802

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41956

Reviewed By: albanD

Differential Revision: D22837455

Pulled By: mruberry

fbshipit-source-id: 509ecabfa63b93737996f48a58c7188b005b7217

Summary:

See https://github.com/pytorch/pytorch/issues/41027.

This adds a helper to resize output to ATen/native/Resize.* and updates TensorIterator to use it. The helper throws a warning if a tensor with one or more elements needs to be resized. This warning indicates that these resizes will become an error in a future PyTorch release.

There are many functions in PyTorch that will resize their outputs and don't use TensorIterator. For example,

985fd970aa/aten/src/ATen/native/cuda/NaiveConvolutionTranspose2d.cu (L243)

And these functions will need to be updated to use this helper, too. This PR avoids their inclusion since the work is separable, and this should let us focus on the function and its behavior in review. A TODO appears in the code to reflect this.
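The helper's described behavior can be sketched on shapes alone: resizing an empty output is silent, and resizing an output with one or more elements emits a warning. `resize_output` here is a hypothetical Python stand-in for the ATen helper, and the warning text is illustrative. `resize_and_count_warnings` is a small usage harness.

```python
import math
import warnings


def resize_output(shape, new_shape):
    """Hypothetical sketch: return new_shape, warning if a non-empty output is resized."""
    shape, new_shape = tuple(shape), tuple(new_shape)
    if shape != new_shape and math.prod(shape) > 0:
        warnings.warn(
            f"An output with one or more elements was resized from {shape} to {new_shape}; "
            "this will become an error in a future release.",
            UserWarning,
            stacklevel=2,
        )
    return new_shape


def resize_and_count_warnings(shape, new_shape):
    """Usage harness: return (resulting shape, number of warnings emitted)."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")
        result = resize_output(shape, new_shape)
    return result, len(caught)


print(resize_and_count_warnings((0,), (3, 4)))  # empty output: no warning
print(resize_and_count_warnings((2,), (5,)))    # non-empty output: one warning
```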

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42079

Reviewed By: VitalyFedyunin

Differential Revision: D22846851

Pulled By: mruberry

fbshipit-source-id: d1a413efb97e30853923bce828513ba76e5a495d

Summary:

After being deprecated in 1.5 and throwing a runtime error in 1.6, we can now enable torch.full inferring its dtype when given bool and integer fill values. This PR enables that inference and updates the tests and docs to reflect this.
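The inference rule can be sketched as follows. `infer_full_dtype` is hypothetical and returns dtype names as strings. The float case assumes the default dtype is `torch.float32`, which is what `torch.get_default_dtype()` returns unless it has been changed. Complex fill values are not modeled. The order of the checks matters because `bool` is a subclass of `int` in Python.

```python
def infer_full_dtype(fill_value, default_dtype="torch.float32"):
    """Hypothetical sketch of dtype inference for torch.full's fill value."""
    # Check bool before int: isinstance(True, int) is True in Python.
    if isinstance(fill_value, bool):
        return "torch.bool"
    if isinstance(fill_value, int):
        return "torch.int64"
    if isinstance(fill_value, float):
        return default_dtype
    raise TypeError(f"not modeled in this sketch: {type(fill_value).__name__}")


print(infer_full_dtype(True), infer_full_dtype(7), infer_full_dtype(1.5))
```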

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41912

Reviewed By: albanD

Differential Revision: D22836802

Pulled By: mruberry

fbshipit-source-id: 33dfbe4d4067800c418b314b1f60fab8adcab4e7

Summary:

In preparation for creating the new torch.fft namespace and NumPy-like fft functions, as well as supporting our goal of refactoring and reducing the size of test_torch.py, this PR creates a test suite for our spectral ops.

The existing spectral op tests from test_torch.py and test_cuda.py are moved to test_spectral_ops.py and updated to run under the device generic test framework.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42157

Reviewed By: albanD

Differential Revision: D22811096

Pulled By: mruberry

fbshipit-source-id: e5c50f0016ea6bb8b093cd6df2dbcef6db9bb6b6

Summary:

After being deprecated in 1.5 and throwing a runtime error in 1.6, we can now enable torch.full inferring its dtype when given bool and integer fill values. This PR enables that inference and updates the tests and docs to reflect this.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41912

Reviewed By: pbelevich

Differential Revision: D22790718

Pulled By: mruberry

fbshipit-source-id: 8d1eb01574b1977f00bc0696974ac38ffdd40d9e

Summary:

This uses cub for cum* operations, because, unlike thrust, cub is non-synchronizing.

Cub does not support tensors with more than `2**31` elements out of the box (in fact, due to cub bugs, the cutoff point is even smaller), so to support them I split the tensor into `2**30`-element chunks and modify the first value of the second and subsequent chunks to contain the cumsum result of the previous chunks. Since the modification is done in place on the source tensor, if something goes wrong and we error out before the source tensor is reverted to its original state, the source tensor will be corrupted, but in most cases such errors will invalidate the full CUDA context anyway.
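Here is a sequential, stdlib-only sketch of the chunking trick. `accumulate` stands in for a single cub scan call, and `chunk_size` stands in for `2**30`. Unlike the description above, the sketch restores the source element in a `finally` block, so an error inside a chunk cannot leave the input modified.

```python
from itertools import accumulate


def chunked_cumsum(src, chunk_size):
    """Cumulative sum computed chunk by chunk, carrying the running total."""
    out = [0] * len(src)
    carry = 0
    for start in range(0, len(src), chunk_size):
        end = min(start + chunk_size, len(src))
        original_first = src[start]
        if start > 0:
            src[start] += carry  # fold the previous chunks' total into this chunk
        try:
            out[start:end] = accumulate(src[start:end])  # one "scan call" per chunk
        finally:
            src[start] = original_first  # revert the in-place modification
        carry = out[end - 1]
    return out


print(chunked_cumsum([1, 2, 3, 4, 5, 6, 7], 3))  # [1, 3, 6, 10, 15, 21, 28]
```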

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42036

Reviewed By: ajtulloch

Differential Revision: D22749945

Pulled By: ngimel

fbshipit-source-id: 9fc9b54d466df9c8885e79c4f4f8af81e3f224ef

Summary:

**BC-Breaking Note**

This PR changes the behavior of the torch.tensor, torch.as_tensor, and sparse constructors. When given a tensor as input and a device is not explicitly specified, these constructors now always infer their device from the tensor. Historically, if the optional dtype kwarg was provided then these constructors would not infer their device from tensor inputs. Additionally, for the sparse ctor a runtime error is now thrown if the indices and values tensors are on different devices and the device kwarg is not specified.

**PR Summary**

This PR's functional change is a single line:

```

auto device = device_opt.has_value() ? *device_opt : (type_inference ? var.device() : at::Device(computeDeviceType(dispatch_key)));

```

=>

```

auto device = device_opt.has_value() ? *device_opt : var.device();

```

in `internal_new_from_data`. This line entangled whether the function was performing type inference with whether it inferred its device from an input tensor, and in practice meant that

```

t = torch.tensor((1, 2, 3), device='cuda')

torch.tensor(t, dtype=torch.float64)

```

would return a tensor on the CPU, not the default CUDA device, while

```

t = torch.tensor((1, 2, 3), device='cuda')

torch.tensor(t)

```

would return a tensor on the device of `t`!

This behavior is niche and odd, but came up while aocsa was fixing https://github.com/pytorch/pytorch/issues/40648.

An additional side effect of this change is that the indices and values tensors given to a sparse constructor must be on the same device, or the sparse ctor must specify the device kwarg. The tests in test_sparse.py have been updated to reflect this behavior.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41984

Reviewed By: ngimel

Differential Revision: D22721426

Pulled By: mruberry

fbshipit-source-id: 909645124837fcdf3d339d7db539367209eccd48

Summary:

so that testing _min_max on the different devices is easier, and min/max operations have better CUDA test coverage.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41908

Reviewed By: mruberry

Differential Revision: D22697032

Pulled By: ngimel

fbshipit-source-id: a796638fdbed8cda90a23f7ff4ee167f45530914

Summary:

This pull request enables the following tests from test_torch, previously skipped on ROCm:

test_pow_-2_cuda_float32/float64

test_sum_noncontig_cuda_float64

test_conv_transposed_large

The first two tests experienced precision issues on earlier ROCm versions, whereas the conv_transposed test was hitting a bug in MIOpen that is fixed in the version shipping with ROCm 3.5.

ezyang jeffdaily

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41611

Reviewed By: xw285cornell

Differential Revision: D22672690

Pulled By: ezyang

fbshipit-source-id: 5585387c048f301a483c4c0566eb9665555ef874

Summary:

Reland PR https://github.com/pytorch/pytorch/issues/40056

A new overload of upsample_linear1d_backward_cuda was added in a recent commit, so I had to add the nondeterministic alert to it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41538

Reviewed By: zou3519

Differential Revision: D22608376

Pulled By: ezyang

fbshipit-source-id: 54a2aa127e069197471f1feede6ad8f8dc6a2f82

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41828

This reverts commit fe66bdb498.

This also makes a sense to THTensorEvenMoreMath because sumall was removed, see THTensor_wrap.

Test Plan: Imported from OSS

Reviewed By: orionr

Differential Revision: D22657473

Pulled By: malfet

fbshipit-source-id: 95a806cedf1a3f4df91e6a21de1678252b117489

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41570

For min/max-based quantization observers, calculating the min and max of a tensor takes most of the runtime. Since min and max are computed on the same tensor, we can speed this up by reading the tensor only once and reducing with two outputs.
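The single-read idea can be sketched as a one-pass reduction with two accumulators. It works even on an iterator that can only be consumed once. `min_max` is a hypothetical name, and NaN handling is not modeled.

```python
def min_max(values):
    """Return (min, max) of a non-empty iterable, reading each element once."""
    it = iter(values)
    try:
        lo = hi = next(it)
    except StopIteration:
        raise ValueError("min_max() of an empty sequence") from None
    for v in it:
        if v < lo:
            lo = v
        elif v > hi:  # v < lo and v > hi cannot both hold, since lo <= hi
            hi = v
    return lo, hi


print(min_max(x for x in [5.0390, -5.4485, 0.3]))  # (-5.4485, 5.039)
```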

One question I had is whether we should put this into the quantization namespace, since the use case is pretty specific.

This PR implements the easier CPU path to get an initial validation.

Some additional work is needed in future PRs, which durumu will take a look at:

* CUDA kernel and tests

* making this work per channel

* benchmarking on observer

* benchmarking impact on QAT overhead

Test Plan:

```

python test/test_torch.py TestTorch.test_min_and_max

```

quick bench (not representative of real world use case):

https://gist.github.com/vkuzo/7fce61c3456dbc488d432430cafd6eca

```

(pytorch) [vasiliy@devgpu108.ash6 ~/local/pytorch] OMP_NUM_THREADS=1 python ~/nfs/pytorch_scripts/observer_bench.py

tensor(5.0390) tensor(-5.4485) tensor([-5.4485, 5.0390])

min and max separate 11.90243935585022

min and max combined 6.353186368942261

% decrease 0.466228209277153

(pytorch) [vasiliy@devgpu108.ash6 ~/local/pytorch] OMP_NUM_THREADS=4 python ~/nfs/pytorch_scripts/observer_bench.py

tensor(5.5586) tensor(-5.3983) tensor([-5.3983, 5.5586])

min and max separate 3.468616485595703

min and max combined 1.8227086067199707

% decrease 0.4745142294372342

(pytorch) [vasiliy@devgpu108.ash6 ~/local/pytorch] OMP_NUM_THREADS=8 python ~/nfs/pytorch_scripts/observer_bench.py

tensor(5.2146) tensor(-5.2858) tensor([-5.2858, 5.2146])

min and max separate 1.5707778930664062

min and max combined 0.8645427227020264

% decrease 0.4496085496757899

```

Imported from OSS

Reviewed By: supriyar

Differential Revision: D22589349

fbshipit-source-id: c2e3f1b8b5c75a23372eb6e4c885f842904528ed

Summary:

The test loops over `upper` but does not use it, effectively running the same test twice, which increases test time for no gain.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41583

Reviewed By: soumith, seemethere, izdeby

Differential Revision: D22598475

Pulled By: zou3519

fbshipit-source-id: d100f20143293a116ff3ba08b0f4eaf0cc5a8099

Summary:

https://github.com/pytorch/pytorch/issues/38349

mruberry

Not entirely sure if all the changes are necessary in how functions are added to PyTorch.

Should it throw an error when called with a non-complex tensor? NumPy allows non-complex arrays in its imag() function, which is used in its isreal() function, but PyTorch's imag() throws an error for non-complex tensors.

Where does assertONNX() get its expected output to compare to?
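For reference, NumPy's `isreal` treats every real input as real and a complex input as real only when its imaginary part is zero. A scalar sketch of that rule follows. It is a hypothetical Python helper, not the PyTorch implementation.

```python
def isreal(x):
    """NumPy-style isreal for Python scalars."""
    if isinstance(x, complex):
        return x.imag == 0
    return True  # real (non-complex) inputs are always considered real


print([isreal(v) for v in (1 + 0j, 1 + 2j, 3.5, 2)])  # [True, False, True, True]
```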

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41298

Reviewed By: ngimel

Differential Revision: D22610500

Pulled By: mruberry

fbshipit-source-id: 817d61f8b1c3670788b81690636bd41335788439

Summary:

lcm was missing an abs. This adds it plus extends the test for NumPy compliance. Also includes a few doc fixes.
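Without the abs, a negative operand yields a negative least common multiple. NumPy's `lcm` is defined on the absolute values of its inputs. A scalar sketch of the NumPy-compatible rule:

```python
import math


def lcm(a: int, b: int) -> int:
    """Least common multiple of |a| and |b|; 0 if either input is 0."""
    if a == 0 or b == 0:
        return 0
    # math.gcd is non-negative; without abs(), lcm(-4, 6) would give -12.
    return abs(a // math.gcd(a, b) * b)


print(lcm(-4, 6))  # 12
```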

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41552

Reviewed By: ngimel

Differential Revision: D22580997

Pulled By: mruberry

fbshipit-source-id: 5ce1db56f88df4355427e1b682fcf8877458ff4e

Summary:

Previously, the inverse used for division by a scalar was calculated in the precision of the non-scalar operand, which can lead to underflow:

```

>>> x = torch.tensor([3388.]).half().to(0)

>>> scale = 524288.0

>>> x.div(scale)

tensor([0.], device='cuda:0', dtype=torch.float16)

>>> x.mul(1. / scale)

tensor([0.0065], device='cuda:0', dtype=torch.float16)

```

This PR makes the results of division and of multiplication by the inverse the same.
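The example can be emulated without a GPU by rounding through IEEE float16 with Python's `struct` `'e'` format. The sketch assumes that the old path converted the scalar itself to float16 before taking its reciprocal. 524288 is above float16's largest finite value of 65504, so under that assumption the scalar becomes inf and its reciprocal becomes 0. In the sketch, float64 stands in for the higher-precision type used after the fix.

```python
import math
import struct


def to_half(x: float) -> float:
    """Round a Python float to float16, mapping out-of-range values to +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, x)


x = to_half(3388.0)
scale = 524288.0  # 2**19

# Assumed old behavior: the scalar is rounded to float16 before inversion.
old = to_half(x * to_half(1.0 / to_half(scale)))
# Behavior after the fix: the reciprocal keeps higher precision.
new = to_half(x * (1.0 / scale))
print(old, new)
```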

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41446

Reviewed By: ezyang

Differential Revision: D22542872

Pulled By: ngimel

fbshipit-source-id: b60e3244809573299c2c3030a006487a117606e9

Summary:

Implementing the quantile operator similar to [numpy.quantile](https://numpy.org/devdocs/reference/generated/numpy.quantile.html).

For this implementation I'm reducing it to existing torch operators to get a CUDA implementation for free. It would be more efficient to implement a multiple-quickselect algorithm instead of sorting, but this can be addressed in a future PR.
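The sort-based reduction can be sketched for a single quantile with NumPy's default linear interpolation. This is a stdlib-only illustration rather than the torch operator composition used in the PR. The `sorted` call is the step that a quickselect could replace.

```python
def quantile(values, q):
    """Sort-based quantile with linear interpolation between closest ranks."""
    if not values:
        raise ValueError("quantile() of an empty sequence")
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be in [0, 1]")
    s = sorted(values)              # a quickselect could avoid the full sort
    pos = q * (len(s) - 1)          # fractional rank into the sorted data
    lo = int(pos)                   # floor, since pos >= 0
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


print(quantile([4, 1, 3, 2], 0.5))  # 2.5
```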

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39417

Reviewed By: mruberry

Differential Revision: D22525217

Pulled By: heitorschueroff

fbshipit-source-id: 27a8bb23feee24fab7f8c228119d19edbb6cea33

Summary:

The test was always running on the CPU. This actually caused it to throw an error on non-MKL builds, since the CUDA test (which ran on the CPU) tried to execute but the test requires MKL (a requirement only checked for the CPU variant of the test).

Fixes https://github.com/pytorch/pytorch/issues/41402.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41523

Reviewed By: ngimel

Differential Revision: D22569344

Pulled By: mruberry

fbshipit-source-id: e9908c0ed4b5e7b18cc7608879c6213fbf787da2

Summary:

This test function is confusing since our `assertEqual` behavior allows for tolerance to be specified, and this is a redundant mechanism.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41514

Reviewed By: ngimel

Differential Revision: D22569348

Pulled By: mruberry

fbshipit-source-id: 2b2ff8aaa9625a51207941dfee8e07786181fe9f

Summary:

The contiguity preprocessing was mistakenly removed in cd48fb5030. It causes erroneous output when the output tensor is not contiguous. Here we restore this preprocessing.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41286

Reviewed By: zou3519

Differential Revision: D22550822

Pulled By: ezyang

fbshipit-source-id: ebad4e2ba83d2d808e3f958d4adc9a5513a95bec

Summary:

Fixes https://github.com/pytorch/pytorch/issues/36403

Copy-paste of the issue description:

* Escape hatch: Introduce unsafe_* version of the three functions above that have the current behavior (outputs not tracked as views). The documentation will explain in detail why they are unsafe and when it is safe to use them. (basically, only the outputs OR the input can be modified inplace but not both. Otherwise, you will get wrong gradients).

* Deprecation: Use the CreationMeta on views to track views created by these three ops and throw a warning when any of the views is modified inplace, saying that this is deprecated and will raise an error soon. Users who really need to modify these views inplace should look at the doc of the unsafe_* version to make sure their use case is valid:

  * If it is not, then PyTorch is computing wrong gradients for their use case and they should not do inplace anymore.

  * If it is, then they can use the unsafe_* version to keep the current behavior.

* Removal: Use the CreationMeta on view to prevent any inplace on these views (like we do for all other views coming from multi-output Nodes). The users will still be able to use the unsafe_ versions if they really need to do this.

Note about BC-breaking:

- This PR changes the behavior of the regular function by making them return proper views now. This is a modification that the user will be able to see.

- We skip all the view logic for these views and so the code should behave the same as before (except the change in the `._is_view()` value).

- Even though the view logic is not performed, we do raise deprecation warnings for the cases where doing these ops would throw an error.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39299

Differential Revision: D22432885

Pulled By: albanD

fbshipit-source-id: 324aef091b32ce69dd067fe9b13a3f17d85d0f12

Summary:

Resubmit #40927

Closes https://github.com/pytorch/pytorch/issues/24679, closes https://github.com/pytorch/pytorch/issues/24678

`addbmm` depends on `addmm` so needed to be ported at the same time. I also removed `THTensor_(baddbmm)` which I noticed had already been ported so was just dead code.
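For reference, `addbmm` reduces over the batch dimension: `out = beta * input + alpha * sum_i(batch1[i] @ batch2[i])`. That is why it builds on the `addmm`-style accumulation. The nested-list sketch below is only a semantic reference, not the ported kernel.

```python
def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def addbmm(inp, batch1, batch2, beta=1, alpha=1):
    """beta * inp + alpha * sum over the batch of batch1[i] @ batch2[i]."""
    rows, cols = len(inp), len(inp[0])
    acc = [[0] * cols for _ in range(rows)]
    for m1, m2 in zip(batch1, batch2):
        p = matmul(m1, m2)
        for r in range(rows):
            for c in range(cols):
                acc[r][c] += p[r][c]  # reduce the per-batch products
    return [[beta * inp[r][c] + alpha * acc[r][c] for c in range(cols)] for r in range(rows)]


print(addbmm([[1]], [[[2]], [[3]]], [[[4]], [[5]]]))  # [[24]] = 1 + 2*4 + 3*5
```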

After having already written this code, I had to fix merge conflicts with https://github.com/pytorch/pytorch/issues/40354 which revealed there was already an established place for cpu blas routines in ATen. However, the version there doesn't make use of ATen's AVX dispatching so thought I'd wait for comment before migrating this into that style.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40927

Reviewed By: ezyang

Differential Revision: D22468490

Pulled By: ngimel

fbshipit-source-id: f8a22be3216f67629420939455e31a88af20201d

Summary:

Per title. `lgamma` produces a different result for `-inf` compared to SciPy, so that comparison is skipped.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41225

Differential Revision: D22473346

Pulled By: ngimel

fbshipit-source-id: e4ebda1b10e2a061bd4cef38d1d7b5bf0f581790

Summary:

When we return to Python from C++ in PyTorch and have warnings and and error, we have the problem of what to do when the warnings throw because we can only throw one error.

Previously, if we had an error, we punted all warnings to the C++ warning handler which would write them to stderr (i.e. system fid 2) or pass them on to glog.

This has drawbacks if an error happened:

- Warnings are not handled through Python even if they don't raise,

- warnings are always printed with no way to suppress this,

- the printing bypasses sys.stderr, so Python modules wanting to modify this don't work (with the prominent example being Jupyter).

This patch does the following instead:

- Set the warning using standard Python extension mechanisms,

- if Python decides that this warning is an error and we have a PyTorch error, we print the warning through Python and clear the error state (from the warning).

This resolves the three drawbacks discussed above; in particular, it fixes https://github.com/pytorch/pytorch/issues/37240 .
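The decision logic described above can be modeled at the Python level. This is a hypothetical sketch: the real change lives in PyTorch's C++/Python glue and uses the Python C API, and `flush_warnings`, `pending` and `error` are illustrative names. Each buffered warning is issued through `warnings.warn`, so filters and `sys.stderr` redirections apply; if the filters escalate a warning to an exception while a PyTorch error is already pending, the warning is printed through Python and dropped, and the original error is raised:

```python
import sys
import warnings


def flush_warnings(pending, error=None):
    """Hypothetical model: emit buffered C++ warnings, then raise any pending error."""
    for message in pending:
        try:
            # Standard Python mechanism: honors warning filters and sys.stderr.
            warnings.warn(message, UserWarning, stacklevel=2)
        except Warning as escalated:
            if error is None:
                # No competing error, so the escalated warning may propagate.
                raise
            # Only one exception can be raised: print the escalated warning via
            # sys.stderr (looked up at call time, so redirections still work)
            # and discard it, keeping the original PyTorch error.
            print(f"{type(escalated).__name__}: {escalated}", file=sys.stderr)
    if error is not None:
        raise error
```

With `warnings.simplefilter("error")` active, calling `flush_warnings(["msg"], ValueError("boom"))` raises the `ValueError` and prints `UserWarning: msg` to whatever `sys.stderr` currently is.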

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41116

Differential Revision: D22456393

Pulled By: albanD

fbshipit-source-id: c3376735723b092efe67319321a8a993402985c7

Summary:

Closes https://github.com/pytorch/pytorch/issues/24679, closes https://github.com/pytorch/pytorch/issues/24678

`addbmm` depends on `addmm`, so it needed to be ported at the same time. I also removed `THTensor_(baddbmm)`, which I noticed had already been ported and so was just dead code.

After having already written this code, I had to fix merge conflicts with https://github.com/pytorch/pytorch/issues/40354, which revealed there was already an established place for CPU BLAS routines in ATen. However, the version there doesn't make use of ATen's AVX dispatching, so I thought I'd wait for comments before migrating this into that style.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40927

Differential Revision: D22418756

Pulled By: ezyang

fbshipit-source-id: 44e7bb5964263d73ae8cc6adc5f6d4e966476ae6

Summary:

The most time-consuming tests in test_nn (taking about half the total time) were gradgradchecks on Conv3d. This PR reduces their sizes and, most importantly, runs gradgradcheck single-threaded, because that cuts the time of the Conv3d tests by an order of magnitude while barely affecting other tests.

These changes bring test_nn time down from 1200 s to ~550 s on my machine.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40999

Differential Revision: D22396896

Pulled By: ngimel

fbshipit-source-id: 3b247caceb65d64be54499de1a55de377fdf9506

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40513

This PR makes the following changes:

1. Complex printing now uses print formatting for its real and imaginary values, and they are joined at the end.

2. Change 1 naturally fixes the printing of complex tensors with sci_mode=True.

```
>>> torch.tensor(float('inf')+float('inf')*1j)
tensor(nan+infj)
>>> torch.randn(2000, dtype=torch.cfloat)
tensor([ 0.3015-0.2502j, -1.1102+1.2218j, -0.6324+0.0640j, ...,
       -1.0200-0.2302j, 0.6511-0.1889j, -0.1069+0.1702j])
>>> torch.tensor([1e-3, 3+4j, 1e-5j, 1e-2+3j, 5+1e-6j])
tensor([1.0000e-03+0.0000e+00j, 3.0000e+00+4.0000e+00j, 0.0000e+00+1.0000e-05j,
       1.0000e-02+3.0000e+00j, 5.0000e+00+1.0000e-06j])
>>> torch.randn(3, dtype=torch.cfloat)
tensor([ 1.0992-0.4459j, 1.1073+0.1202j, -0.2177-0.6342j])
>>> x = torch.tensor([1e2, 1e-2])
>>> torch.set_printoptions(sci_mode=False)
>>> x
tensor([ 100.0000, 0.0100])
>>> x = torch.tensor([1e2, 1e-2j])
>>> x
tensor([100.+0.0000j, 0.+0.0100j])
```
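The approach in point 1 (format the real and imaginary parts separately with the same spec, then join them) can be sketched in plain Python. `format_complex` is a hypothetical helper, not PyTorch's internal formatter, and it ignores the column-width alignment the real printer applies:

```python
def format_complex(z, spec):
    """Format the real and imaginary parts with one spec, then join them."""
    real = format(z.real, spec)
    # Force an explicit sign on the imaginary part so the pieces join as a+bj.
    imag = format(z.imag, "+" + spec)
    return f"{real}{imag}j"


print(format_complex(3 + 4j, ".4f"))                               # 3.0000+4.0000j
print(format_complex(1e-3 + 0j, ".4e"))                            # 1.0000e-03+0.0000e+00j
print(format_complex(complex(float("nan"), float("inf")), ".4f"))  # nan+infj
```

Because each part goes through ordinary float formatting, scientific notation and special values such as `nan` and `inf` come out right without complex-specific cases.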

Test Plan: Imported from OSS

Differential Revision: D22309294

Pulled By: anjali411

fbshipit-source-id: 20edf9e28063725aeff39f3a246a2d7f348ff1e8

Summary:

This PR implements gh-33389.

As a result of this PR, users can now specify various reduction modes for scatter operations. Currently, `add`, `subtract`, `multiply` and `divide` have been implemented, and adding new ones is not hard.
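For a 2-D `self`, `scatter_` with `dim=0` writes `self[index[i][j]][j]` and with `dim=1` writes `self[i][index[i][j]]`; a reduction mode combines the existing value with `src[i][j]` instead of overwriting it. The pure-Python reference below is a hypothetical model of those semantics for the four modes named above (the function name and the operator table are illustrative, not the ATen kernel):

```python
import operator

# Illustrative table mapping this PR's reduction names to binary operators.
REDUCE_OPS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}


def scatter_reduce_2d(self, dim, index, src, reduce):
    """In-place model of self.scatter_(dim, index, src, reduce=...) on nested lists."""
    if dim not in (0, 1):
        raise ValueError("this sketch only handles 2-D inputs (dim 0 or 1)")
    op = REDUCE_OPS[reduce]
    for i, row in enumerate(index):
        for j, target in enumerate(row):
            if dim == 0:
                # index picks the row; the column comes from the position j.
                self[target][j] = op(self[target][j], src[i][j])
            else:
                # index picks the column; the row comes from the position i.
                self[i][target] = op(self[i][target], src[i][j])
    return self
```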

While we now allow dynamic runtime selection of reduction modes, the performance is the same as was the case for the `scatter_add_` method in the master branch. Proof can be seen in the graph below, which compares `scatter_add_` in the master branch (blue) and `scatter_(reduce="add")` from this PR (orange).

The script used for benchmarking is as follows:

``` python
import os
import sys
import torch
import time
import numpy
from IPython import get_ipython

Ms=256
Ns=512
dim = 0
top_power = 2
ipython = get_ipython()
plot_name = os.path.basename(__file__)
branch = sys.argv[1]
fname = open(plot_name + ".csv", "a+")

for pM in range(top_power):
    M = Ms * (2 ** pM)
    for pN in range(top_power):
        N = Ns * (2 ** pN)
        input_one = torch.rand(M, N)
        index = torch.tensor(numpy.random.randint(0, M, (M, N)))
        res = torch.randn(M, N)
        test_case = f"{M}x{N}"
        print(test_case)
        tobj = ipython.magic("timeit -o res.scatter_(dim, index, input_one, reduce=\"add\")")
        fname.write(f"{test_case},{branch},{tobj.average},{tobj.stdev}\n")

fname.close()
```

Additionally, the `add`, `subtract`, and `multiply` reduction modes take almost the same time to execute, while `divide` takes roughly twice as long:

```
op: add
70.6 µs ± 27.3 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
26.1 µs ± 26.5 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
op: subtract
71 µs ± 20.5 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
26.4 µs ± 34.4 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
op: multiply
70.9 µs ± 31.5 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
27.4 µs ± 29.3 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
op: divide
164 µs ± 48.8 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
52.3 µs ± 132 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)
```

Script:

``` python
import torch
import time
import numpy
from IPython import get_ipython

ipython = get_ipython()
nrows = 3000
ncols = 10000
dims = [nrows, ncols]
res = torch.randint(5, 10, dims)
idx1 = torch.randint(dims[0], (1, dims[1])).long()
src1 = torch.randint(5, 10, (1, dims[1]))
idx2 = torch.randint(dims[1], (dims[0], 1)).long()
src2 = torch.randint(5, 10, (dims[0], 1))

for op in ["add", "subtract", "multiply", "divide"]:
    print(f"op: {op}")
    ipython.magic("timeit res.scatter_(0, idx1, src1, reduce=op)")
    ipython.magic("timeit res.scatter_(1, idx2, src2, reduce=op)")
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/36447

Differential Revision: D22272631

Pulled By: ngimel

fbshipit-source-id: 3cdb46510f9bb0e135a5c03d6d4aa5de9402ee90

Summary:

BC-breaking NOTE:

In PyTorch 1.6, bool and integral fill values given to torch.full must be accompanied by the dtype or out keyword argument. In prior versions of PyTorch these fill values returned float tensors by default, but in PyTorch 1.7 they will return a bool or long tensor, respectively. The documentation for torch.full has been updated to reflect this.
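The dtype rule described in this note can be summarized with a small pure-Python model. `full_dtype` is hypothetical: it returns dtype names as strings, assumes the default floating-point dtype is `torch.float32`, does not model the `out=` argument, and ignores complex fill values. Since `bool` is a subclass of `int` in Python, bools must be distinguished before ints:

```python
def full_dtype(fill_value, dtype=None, version="1.7"):
    """Hypothetical model of how torch.full chooses its result dtype."""
    if dtype is not None:
        return dtype  # An explicit dtype is always used as-is.
    if isinstance(fill_value, (bool, int)):
        if version == "1.6":
            # Per this note, 1.6 rejects bool/int fills without dtype (or out=,
            # which this sketch does not model). The message is hypothetical.
            raise RuntimeError("hypothetical message: bool/int fill values need dtype=")
        # Check bool before int: isinstance(True, int) is True in Python.
        return "torch.bool" if isinstance(fill_value, bool) else "torch.int64"
    return "torch.float32"  # Assumed default floating-point dtype.
```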

PR NOTE:

This PR causes torch.full to throw a runtime error when it would otherwise have inferred a float dtype from a boolean or integer fill value. A versioned symbol for torch.full is added to preserve the behavior of already serialized TorchScript programs. Existing tests for this deprecated behavior have been updated to reflect that it is now unsupported, and a couple of new tests have been added to validate the versioned symbol behavior. The documentation of torch.full has also been updated to reflect this change.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40364

Differential Revision: D22176640

Pulled By: mruberry

fbshipit-source-id: b20158ebbcb4f6bf269d05a688bcf4f6c853a965

Summary:

Updates the concat kernel for contiguous inputs to also support channels_last-contiguous tensors.

This was tested with the SqueezeNet model on a Pixel 2 device, where it improves model performance by about 25%.
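To see why channels_last inputs need their own path, consider concatenating along the channel dimension `C`. In NHWC (channels_last) memory all channels of a pixel are adjacent, so the output interleaves one slice of each input per pixel instead of appending whole blocks. The sketch below models this on flat Python lists; `cat_channels_last` is hypothetical, assumes concatenation along `C`, and is not the actual kernel:

```python
def cat_channels_last(inputs, channels, num_pixels):
    """Concatenate NHWC-flattened buffers along C.

    inputs[k] is a flat list of length num_pixels * channels[k], laid out
    pixel-major (all channels of pixel 0, then pixel 1, ...), where
    num_pixels = N * H * W.
    """
    out = []
    for p in range(num_pixels):
        for buf, c in zip(inputs, channels):
            # Append this input's channel vector for pixel p.
            out.extend(buf[p * c:(p + 1) * c])
    return out
```

For example, with two pixels, a 1-channel input `[1, 2]` and a 2-channel input `[10, 11, 20, 21]`, the result is `[1, 10, 11, 2, 20, 21]`.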

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39448

Test Plan: test_cat_in_channels_last

Differential Revision: D22160526

Pulled By: kimishpatel

fbshipit-source-id: 6eee6e74b8a5c66167828283d16a52022a16997f

Summary:

Many of them have already been migrated to ATen

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39102

Differential Revision: D22162193

Pulled By: VitalyFedyunin

fbshipit-source-id: 80db9914fbd792cd610c4e8ab643ab97845fac9f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38490

A meta tensor is a tensor that is a lot like a normal tensor, except it doesn't actually have any data associated with it. You can use meta tensors to carry out shape/dtype computations without actually running the kernel code; for example, this could be used to do shape inference in a JIT analysis pass.

Check out the description in DispatchKey.h for more information.
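As an illustration of the idea (not PyTorch's implementation), a meta tensor can be modeled as an object that carries only a shape and a dtype, so a binary operator's "kernel" reduces to a shape computation. `MetaTensor`, `broadcast_shapes` and `meta_binary_op` below are hypothetical; the sketch applies standard broadcasting to the shapes and, for simplicity, requires matching dtypes rather than modeling type promotion or memory layout:

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass(frozen=True)
class MetaTensor:
    """Hypothetical stand-in for a meta tensor: shape and dtype, no data."""
    shape: tuple
    dtype: str


def broadcast_shapes(a, b):
    """Right-align the shapes; each dimension pair must be equal or contain a 1."""
    result = []
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x != y and 1 not in (x, y):
            raise ValueError(f"shapes {a} and {b} are not broadcastable")
        # A size-1 dimension stretches to the other size (including 0).
        result.append(y if x == 1 else x)
    return tuple(reversed(result))


def meta_binary_op(a, b):
    """Compute an elementwise binary op's output metadata without any data."""
    if a.dtype != b.dtype:
        raise TypeError("this sketch does not model type promotion")
    return MetaTensor(broadcast_shapes(a.shape, b.shape), a.dtype)
```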

Meta tensors are part of a larger project to rationalize how we write kernels so that we don't have to duplicate shape logic in the CPU kernel, CUDA kernel, and meta kernel (this PR makes the duplication problem worse!). However, that infrastructure can be built on top of this proof of concept, which just shows how you can start writing meta kernels today even without this infrastructure.

There are a lot of things that don't work:

- I special-cased printing for dense tensors only; if you try to allocate a meta sparse / quantized tensor, things aren't going to work.

- The printing formula implies that torch.tensor() can take an ellipsis, but I didn't add this.

- I wrote an example formula for binary operators, but it isn't even right! (It doesn't do type promotion or memory layout correctly.) The most future-proof way to do it right is to factor the relevant computation out of TensorIterator, as it is quite involved.