Adds:

```Python

chebyshev_polynomial_t(input, n, *, out=None) -> Tensor

```

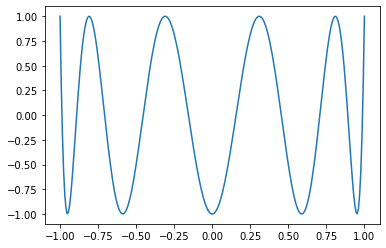

Chebyshev polynomial of the first kind $T_{n}(\text{input})$.

If $n = 0$, $1$ is returned. If $n = 1$, $\text{input}$ is returned. If $n < 6$ or $|\text{input}| > 1$ the recursion:

$$T_{n + 1}(\text{input}) = 2 \times \text{input} \times T_{n}(\text{input}) - T_{n - 1}(\text{input})$$

is evaluated. Otherwise, the explicit trigonometric formula:

$$T_{n}(\text{input}) = \text{cos}(n \times \text{arccos}(\text{input}))$$

is evaluated.
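The branching above can be sketched for a single scalar as follows. This is an illustration only: `chebyshev_t` is a hypothetical helper name and works on Python floats, whereas the operator added by this PR works on tensors.

```python
import math


def chebyshev_t(x: float, n: int) -> float:
    """Evaluate T_n(x) using the same case split as described above."""
    if n < 0:
        raise ValueError("n must be non-negative in this sketch")
    if n == 0:
        return 1.0
    if n == 1:
        return x
    if n < 6 or abs(x) > 1.0:
        # Three-term recurrence: T_{k+1}(x) = 2 x T_k(x) - T_{k-1}(x)
        t_prev, t_curr = 1.0, x
        for _ in range(n - 1):
            t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
        return t_curr
    # Trigonometric form, only valid for |x| <= 1
    return math.cos(n * math.acos(x))
```

For instance, `chebyshev_t(2.0, 3)` takes the recurrence branch and gives `26.0`, which agrees with $T_{3}(x) = 4x^{3} - 3x$.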

## Derivatives

Recommended $k$-derivative formula with respect to $\text{input}$:

$$2^{-1 + k} \times n \times \Gamma(k) \times C_{-k + n}^{k}(\text{input})$$

where $C$ is the Gegenbauer polynomial.

Recommended $k$-derivative formula with respect to $\text{n}$:

$$\text{arccos}(\text{input})^{k} \times \text{cos}(\frac{k \times \pi}{2} + n \times \text{arccos}(\text{input})).$$
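As a sanity check on the first formula (a worked instance, not part of the PR), take $k = 1$. Since $\Gamma(1) = 1$ and the Gegenbauer polynomial with parameter $1$ is the Chebyshev polynomial of the second kind $U$, the formula reduces to the familiar identity:

$$\frac{d}{d\,\text{input}} T_{n}(\text{input}) = 2^{0} \times n \times \Gamma(1) \times C_{n - 1}^{1}(\text{input}) = n \times U_{n - 1}(\text{input})$$

Similarly, for $n = 2$ and $k = 2$, using $C_{0}^{2}(\text{input}) = 1$:

$$2^{1} \times 2 \times \Gamma(2) \times C_{0}^{2}(\text{input}) = 4$$

which matches the second derivative of $T_{2}(\text{input}) = 2 \times \text{input}^{2} - 1$.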

## Example

```Python

import matplotlib.pyplot
import torch

x = torch.linspace(-1, 1, 256)

matplotlib.pyplot.plot(x, torch.special.chebyshev_polynomial_t(x, 10))

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78196

Approved by: https://github.com/mruberry

Euler beta function:

```Python

torch.special.beta(input, other, *, out=None) -> Tensor

```

`reentrant_gamma` and `reentrant_ln_gamma` implementations (using Stirling’s approximation) are provided. I started working on this before I realized we were missing a gamma implementation (despite providing incomplete gamma implementations). Uses the coefficients computed by Steve Moshier to replicate SciPy’s implementation. Likewise, it mimics SciPy’s behavior (instead of the behavior in Cephes).
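For reference, the beta function can be written in terms of the log-gamma function as $B(a, b) = \exp(\ln\Gamma(a) + \ln\Gamma(b) - \ln\Gamma(a + b))$. The scalar sketch below uses Python's `math.lgamma` in place of the `reentrant_ln_gamma` helper from this PR and only handles positive arguments. It is meant to illustrate the identity, not to reproduce the PR's kernel or SciPy's special-case handling.

```python
import math


def beta(a: float, b: float) -> float:
    """B(a, b) = Gamma(a) * Gamma(b) / Gamma(a + b), for a, b > 0."""
    if a <= 0 or b <= 0:
        raise ValueError("this sketch only handles positive arguments")
    # Work in log space so that large arguments do not overflow Gamma.
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))
```

For example, `beta(2, 3)` is approximately `1 / 12`, since $B(2, 3) = 1! \times 2! / 4!$.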

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78031

Approved by: https://github.com/mruberry

We don't have any coverage for meta tensor correctness for backwards

because torch function mode can only allow us to interpose on

Python torch API calls, but backwards invocations happen from C++.

To make this possible, I add torch_dispatch_meta test which runs the

tests with __torch_dispatch__

While doing this, I needed to generate fresh expected failure / skip

lists for the new test suite, and I discovered that my original

scaffolding for this purpose was woefully insufficient. So I rewrote

how the test framework worked, and at the same time rewrote the

__torch_function__ code to also use the new logic. Here's what's

new:

- Expected failure / skip is now done on a per function call basis,

rather than the entire test. This means that separate OpInfo

samples for a function don't affect each other.

- There are now only two lists: the expected failure list (where the test

consistently fails on all runs) and the skip list (where the test

sometimes passes and sometimes fails).

- We explicitly notate the dtype that failed. I considered detecting

when something failed on all dtypes, but this was complicated and

listing everything out seemed to be nice and simple. To keep the

dtypes short, I introduce a shorthand notation for dtypes.

- Conversion to meta tensors is factored into its own class

MetaConverter

- To regenerate the expected failure / skip lists, just run with

PYTORCH_COLLECT_EXPECT and filter on a specific test type

(test_meta or test_dispatch_meta) for whichever you want to update.

Other misc fixes:

- Fix max_pool1d to work with BFloat16 in all circumstances, by making

it dispatch and then fixing a minor compile error (constexpr doesn't

work with BFloat16)

- Add resolve_name for turning random torch API functions into string

names

- Add push classmethod to the Mode classes, so that you can more easily

push a mode onto the mode stack

- Add some more skips for missing LAPACK

- Added an API to let you query if there's already a registration for

a function, added a test to check that we register_meta for all

decompositions (except detach, that decomp is wrong lol), and then

update all the necessary sites to make the test pass.

Signed-off-by: Edward Z. Yang <ezyangfb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77477

Approved by: https://github.com/zou3519

This PR adds `linalg.vander`, the linalg version of `torch.vander`.

We add autograd support and support for batched inputs.

We also take this chance to improve the docs (TODO: Check that they

render correctly!) and add an OpInfo.

**Discussion**: The current default for the `increasing` kwarg is extremely

odd as it is the opposite of the classical definition (see

[wiki](https://en.wikipedia.org/wiki/Vandermonde_matrix)). This is

reflected in the docs, where I make explicit both the odd defaults that we

use and the classical definition. See also [this stackoverflow

post](https://stackoverflow.com/a/71758047/5280578), which shows how

people are confused by these defaults.

My take on this would be to correct the default to be `increasing=True`

and document the divergence with NumPy (as we do for other `linalg`

functions) as:

- It is what people expect

- It gives the correct determinant called "the Vandermonde determinant" rather than (-1)^{n(n-1)/2} times the Vandermonde det (ugh).

- [Minor] It is more efficient (no `flip` needed)

- Since it's under `linalg.vander`, it's strictly not a drop-in replacement for `np.vander`.
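To make the two conventions concrete, here is a small pure-Python sketch (not the implementation in this PR; `vander` and `det2` are hypothetical helpers) that builds a 2x2 Vandermonde matrix both ways and compares the determinants:

```python
def vander(xs, increasing):
    """Build a square Vandermonde matrix as a list of rows."""
    n = len(xs)
    powers = range(n) if increasing else range(n - 1, -1, -1)
    return [[x ** p for p in powers] for x in xs]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


xs = [2, 5]
classical = vander(xs, increasing=True)     # [[1, 2], [1, 5]]
numpy_style = vander(xs, increasing=False)  # [[2, 1], [5, 1]]

print(det2(classical))    # 3, i.e. x_1 - x_0
print(det2(numpy_style))  # -3
```

With increasing powers the determinant is the classical product of differences, while the decreasing layout flips its sign in this 2x2 case.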

We will deprecate `torch.vander` in a PR after this one in this stack

(once we settle on what's the correct default).

Thoughts? mruberry

cc kgryte rgommers as they might have some context for the defaults of

NumPy.

Fixes https://github.com/pytorch/pytorch/issues/60197

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76303

Approved by: https://github.com/albanD, https://github.com/mruberry

This PR adds `linalg.lu_solve`. While doing so, I found a bug in MAGMA

when calling the batched MAGMA backend with trans=True. We work around

that by solving the system as two triangular systems.
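The idea behind the workaround can be sketched in pure Python. Assuming a factorization $A = LU$ without pivoting (a simplification made here; the real function also handles pivots and batches), the transposed system $A^{T} x = b$ becomes $U^{T} L^{T} x = b$, which is one forward substitution followed by one back substitution. `solve_transposed` is a hypothetical name.

```python
def solve_transposed(L, U, b):
    """Solve A^T x = b given A = L U (L unit lower, U upper, no pivoting)."""
    n = len(b)
    # Step 1: U^T y = b. U^T is lower triangular, so substitute forwards.
    y = [0.0] * n
    for i in range(n):
        s = sum(U[j][i] * y[j] for j in range(i))
        y[i] = (b[i] - s) / U[i][i]
    # Step 2: L^T x = y. L^T is unit upper triangular, so substitute backwards.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(L[j][i] * x[j] for j in range(i + 1, n))
        x[i] = y[i] - s
    return x


L = [[1.0, 0.0], [2.0, 1.0]]
U = [[3.0, 1.0], [0.0, 4.0]]
# A = L U = [[3, 1], [6, 6]], so A^T = [[3, 6], [1, 6]] and A^T [1, 2] = [15, 13].
print(solve_transposed(L, U, [15.0, 13.0]))  # [1.0, 2.0]
```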

We also update the heuristics for this function, as they were fairly

outdated. We found that cuSolver is king, so luckily we do not need to

rely on the buggy backend from MAGMA for this function.

We added tests testing this function left and right. We also added tests

for the different backends. We also activated the tests for AMD, as

those should work as well.

Fixes https://github.com/pytorch/pytorch/issues/61657

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72935

Approved by: https://github.com/IvanYashchuk, https://github.com/mruberry

This PR modifies `lu_unpack` by:

- Using less memory when unpacking `L` and `U`

- Fusing the subtraction by `-1` with `unpack_pivots_stub`

- Defining tensors of the correct types to avoid copies

- Porting `lu_unpack` to be a structured kernel so that its `_out` version

does not incur extra copies

Then we implement `linalg.lu` as a structured kernel, as we want to

compute its derivative manually. We do so because composing the

derivatives of `torch.lu_factor` and `torch.lu_unpack` would be less efficient.

This new function and `lu_unpack` come with everything they can come with:

forward and backward AD, decent docs, correctness tests, OpInfo, complex support,

support for metatensors and support for vmap and vmap over the gradients.

I really hope we don't continue adding more features.

This PR also avoids saving some of the tensors that were previously

saved unnecessarily for the backward in `lu_factor_ex_backward` and

`lu_backward` and does some other general improvements here and there

to the forward and backward AD formulae of other related functions.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67833

Approved by: https://github.com/IvanYashchuk, https://github.com/nikitaved, https://github.com/mruberry

This PR adds `linalg.vander`, the linalg version of `torch.vander`.

We add autograd support and support for batched inputs.

We also take this chance to improve the docs (TODO: Check that they

render correctly!) and add an OpInfo.

**Discussion**: The current default for the `increasing` kwarg is extremely

odd as it is the opposite of the classical definition (see

[wiki](https://en.wikipedia.org/wiki/Vandermonde_matrix)). This is

reflected in the docs, where I make explicit both the odd defaults that we

use and the classical definition. See also [this stackoverflow

post](https://stackoverflow.com/a/71758047/5280578), which shows how

people are confused by these defaults.

My take on this would be to correct the default to be `increasing=True`

and document the divergence with NumPy (as we do for other `linalg`

functions) as:

- It is what people expect

- It gives the correct determinant called "the Vandermonde determinant" rather than (-1)^{n(n-1)/2} times the Vandermonde det (ugh).

- [Minor] It is more efficient (no `flip` needed)

- Since it's under `linalg.vander`, it's strictly not a drop-in replacement for `np.vander`.

We will deprecate `torch.vander` in a PR after this one in this stack

(once we settle on what's the correct default).

Thoughts? mruberry

cc kgryte rgommers as they might have some context for the defaults of

NumPy.

Fixes https://github.com/pytorch/pytorch/issues/60197

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76303

Approved by: https://github.com/albanD

This PR adds a function for computing the LDL decomposition and a function that can solve systems of linear equations using this decomposition. The result of `torch.linalg.ldl_factor_ex` is in a compact form and must only be used through `torch.linalg.ldl_solve`. In the future, we could provide an `ldl_unpack` function that transforms the compact representation into explicit matrices.
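As background on what the two functions compute, the following pure-Python sketch factors a symmetric matrix as $A = L D L^{T}$ and then solves $A x = b$ with it. It deliberately differs from the PR in two ways that are assumptions of this sketch: it uses no pivoting, and it keeps `L` and `D` as explicit objects rather than in the compact form. The names `ldl_factor` and `ldl_solve` here are plain Python helpers, not the `torch.linalg` functions.

```python
def ldl_factor(A):
    """Return (L, D) with A = L diag(D) L^T, L unit lower triangular."""
    n = len(A)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    D = [0.0] * n
    for j in range(n):
        D[j] = A[j][j] - sum(L[j][k] ** 2 * D[k] for k in range(j))
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] * D[k] for k in range(j))
            L[i][j] = (A[i][j] - s) / D[j]
    return L, D


def ldl_solve(L, D, b):
    """Solve (L diag(D) L^T) x = b."""
    n = len(b)
    # Forward substitution: L z = b
    z = [0.0] * n
    for i in range(n):
        z[i] = b[i] - sum(L[i][k] * z[k] for k in range(i))
    # Diagonal scaling: D w = z
    w = [z[i] / D[i] for i in range(n)]
    # Back substitution: L^T x = w
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = w[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))
    return x


A = [[4.0, 2.0], [2.0, -3.0]]  # symmetric but indefinite
L, D = ldl_factor(A)           # L = [[1, 0], [0.5, 1]], D = [4, -4]
print(ldl_solve(L, D, [8.0, -4.0]))  # [1.0, 2.0]
```

Unlike a Cholesky factorization, this works for the indefinite matrix above because `D` is allowed to contain negative entries.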

Fixes https://github.com/pytorch/pytorch/issues/54847.

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69828

Approved by: https://github.com/Lezcano, https://github.com/mruberry, https://github.com/albanD

crossref is a new strategy for performing tests when you want

to run a normal PyTorch API call, separately run some variation of

the API call (e.g., same thing but all the arguments are meta tensors)

and then cross-reference the results to see that they are consistent.

Any logic you add to CrossRefMode will get run on *every* PyTorch API

call that is called in the course of PyTorch's test suite. This can

be a good choice for correctness testing if OpInfo testing is not

exhaustive enough.

For now, the crossref test doesn't do anything except verify that

we can validly push a mode onto the torch function mode stack for all

functions.
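The cross-referencing idea can be illustrated without any PyTorch machinery. In the sketch below, `crossref` is a hypothetical wrapper (it is not `CrossRefMode`) that runs a function normally, runs a variant on the same arguments, and checks the two results with a caller-supplied comparison. The variant here only tracks the length of the result, loosely analogous to a meta tensor tracking shape but not data.

```python
def crossref(fn, variant, compare):
    """Wrap `fn` so each call is also run through `variant` and compared."""
    def wrapped(*args, **kwargs):
        expected = fn(*args, **kwargs)
        actual = variant(*args, **kwargs)
        if not compare(expected, actual):
            raise AssertionError(
                f"{fn.__name__}: reference {expected!r} != variant {actual!r}"
            )
        return expected
    return wrapped


# The variant predicts only the length of the output, not its contents.
checked_sorted = crossref(
    sorted,
    lambda xs: [None] * len(xs),
    lambda a, b: len(a) == len(b),
)
print(checked_sorted([3, 1, 2]))  # [1, 2, 3]
```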

Signed-off-by: Edward Z. Yang <ezyangfb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75988

Approved by: https://github.com/seemethere

I figured these out by unconditionally turning on a no-op torch function

mode on the test suite and then fixing errors as they showed up. Here's

what I found:

- _parse_to failed internal assert when __torch_function__'ed because it

claims its name is "to" to the argument parser; added a name override

so we know how to find the correct name

- Infix operator magic methods on Tensor did not uniformly handle

__torch_function__ and TypeError to NotImplemented. Now, we always

do the __torch_function__ handling in

_wrap_type_error_to_not_implemented and your implementation of

__torch_function__ gets its TypeErrors converted to NotImplemented

(for better or for worse; see

https://github.com/pytorch/pytorch/issues/75462 )

- A few cases where code was testing if a Tensor was

Tensor-like in the wrong way, now use is_tensor_like (in grad

and in distributions). Also update docs for has_torch_function to

push people to use is_tensor_like.

- is_grads_batched was dropped from grad in handle_torch_function, now

fixed

- Report that you have a torch function even if torch function is

disabled if a mode is enabled. This makes it possible for a mode

to return NotImplemented, pass to a subclass which does some

processing and then pass back to the mode even after the subclass

disables __torch_function__ (so the tensors are treated "as if"

they are regular Tensors). This brings the C++ handling behavior

in line with the Python behavior.

- Make the Python implementation of overloaded types computation match

the C++ version: when torch function is disabled, there are no

overloaded types (because they all report they are not overloaded).

Signed-off-by: Edward Z. Yang <ezyangfb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75484

Approved by: https://github.com/zou3519

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74226

Update signature of `scatter_reduce_` to match `scatter_/scatter_add_`

`Tensor.scatter_reduce_(int64 dim, Tensor index, Tensor src, str reduce)`

- Add new reduction options in ScatterGatherKernel.cpp and update `scatter_reduce` to call into the cpu kernel for `scatter.reduce`

- `scatter_reduce` now has the same shape constraints as `scatter_` and `scatter_add_`

- Migrate `test/test_torch.py:test_scatter_reduce` to `test/test_scatter_gather_ops.py`
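The semantics for the one-dimensional case can be sketched in pure Python as below. Two things are assumptions of this sketch rather than statements from the commit: the reduction names (`"sum"`, `"prod"`, `"amax"`, `"amin"`) follow the names used by current `torch.scatter_reduce`, and the existing values of the destination take part in the reduction. `scatter_reduce_1d` is a hypothetical helper.

```python
REDUCERS = {
    "sum": lambda a, b: a + b,
    "prod": lambda a, b: a * b,
    "amax": max,
    "amin": min,
}


def scatter_reduce_1d(dest, index, src, reduce):
    """Return a copy of `dest` with src[i] reduced into position index[i]."""
    # Same constraint as scatter_ / scatter_add_ along the scatter dimension.
    if len(index) > len(src):
        raise ValueError("index must not be longer than src")
    op = REDUCERS[reduce]
    out = list(dest)
    for i, target in enumerate(index):
        out[target] = op(out[target], src[i])
    return out


print(scatter_reduce_1d([0, 0, 0], [0, 1, 0, 1], [1, 2, 3, 4], "sum"))   # [4, 6, 0]
print(scatter_reduce_1d([0, 0, 0], [0, 1, 0, 1], [1, 2, 3, 4], "amax"))  # [3, 4, 0]
```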

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D35222842

Pulled By: mikaylagawarecki

fbshipit-source-id: 84930add2ad30baf872c495251373313cb7428bd

(cherry picked from commit 1b45139482e22eb0dc8b6aec2a7b25a4b58e31df)