Summary:

Original commit changeset: 96813f0fac68

Original Phabricator Diff: D50161780

This breaks the integration test on T166457344

Test Plan: Sandcastle.

Differential Revision: D50344243

Pull Request resolved: https://github.com/pytorch/pytorch/pull/111401

Approved by: https://github.com/izaitsevfb

Added tests for the lite interpreter. By default, `run_tests.sh` uses the lite interpreter, unless `BUILD_LITE_INTERPRETER=0` is set manually.

Also fixed the model generation script for the Android instrumentation test, and the README.

Verified that the tests pass with both full JIT and the lite interpreter. Also tested on an emulator and on a real device using different ABIs.

Lite interpreter

```

./scripts/build_pytorch_android.sh x86

./android/run_tests.sh

```

Full JIT

```

BUILD_LITE_INTERPRETER=0 ./scripts/build_pytorch_android.sh x86

BUILD_LITE_INTERPRETER=0 ./android/run_tests.sh

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72736

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62419

This diff adds support for a CPU-only Kineto profiler on mobile, thus enabling Chrome trace generation on mobile. This brings the C++ API for mobile profiling on par with TorchScript.

This is done via:

1. Utilizing debug handle annotations in `KinetoEvent`.

2. Adding post-processing capability, via callbacks, to `KinetoThreadLocalState`.

3. Creating a new RAII-style profiler, `KinetoEdgeCPUProfiler`, which can be used in the scope surrounding model execution. It writes the Chrome trace to the location specified in the profiler constructor.
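Item 3 describes a scope-bound (RAII) profiler. Profiling starts when the object is constructed, and the trace is written when the object goes out of scope. The Python sketch below mimics that pattern, with a context manager standing in for the C++ constructor/destructor pair. `EdgeProfilerSketch`, `record` and `run_model_sketch` are hypothetical names, not the `KinetoEdgeCPUProfiler` API. Events are recorded manually here rather than through Kineto callbacks. The output follows the Chrome trace event JSON format (complete `"X"` events with microsecond `ts`/`dur`), so the file can be opened in `chrome://tracing`.

```python
import json
import os
import tempfile
import threading
import time


class EdgeProfilerSketch:
    """Hypothetical scope-bound profiler: collects events while active and
    writes a Chrome trace (JSON) to `trace_path` when the scope exits."""

    def __init__(self, trace_path):
        self.trace_path = trace_path
        self.events = []
        self._t0 = None

    def __enter__(self):
        # "Constructor" side of RAII: start profiling.
        self._t0 = time.perf_counter()
        return self

    def record(self, name, start_s, end_s):
        # Store a complete ("X") event; Chrome trace timestamps are in microseconds.
        self.events.append({
            "name": name,
            "ph": "X",
            "ts": (start_s - self._t0) * 1e6,
            "dur": (end_s - start_s) * 1e6,
            "pid": os.getpid(),
            "tid": threading.get_ident(),
        })

    def __exit__(self, exc_type, exc, tb):
        # "Destructor" side of RAII: write the trace even if the model raised.
        with open(self.trace_path, "w") as f:
            json.dump({"traceEvents": self.events}, f)
        return False  # do not swallow exceptions


def run_model_sketch(profiler):
    # Stand-in for model execution (hypothetical); each "op" reports its own timing.
    for op in ("aten::conv2d", "aten::relu"):
        start = time.perf_counter()
        sum(range(1000))  # placeholder work
        profiler.record(op, start, time.perf_counter())


if __name__ == "__main__":
    path = os.path.join(tempfile.gettempdir(), "trace_sketch.json")
    with EdgeProfilerSketch(path) as prof:
        run_model_sketch(prof)
    print(f"wrote {path}")
```

The file is written in `__exit__` on purpose. That is the point of the pattern: the trace is flushed even when model execution raises.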

Test Plan:

MobileProfiler.ModuleHierarchy

Imported from OSS

Reviewed By: raziel

Differential Revision: D29993660

fbshipit-source-id: 0b44f52f9e9c5f5aff81ebbd9273c254c3c03299

Summary:

I build using [Bazel](https://bazel.build/).

When I use `pytorch_android` in an up-to-date Android app, I get the following error caused by its dependencies:

```

$ bazel build //app/src/main:app

WARNING: API level 30 specified by android_ndk_repository 'androidndk' is not available. Using latest known API level 29

INFO: Analyzed target //app/src/main:app (0 packages loaded, 0 targets configured).

INFO: Found 1 target...

ERROR: /home/H1Gdev/android-bazel-app/app/src/main/BUILD.bazel:3:15: Merging manifest for //app/src/main:app failed: (Exit 1): ResourceProcessorBusyBox failed: error executing command bazel-out/k8-opt-exec-2B5CBBC6/bin/external/bazel_tools/src/tools/android/java/com/google/devtools/build/android/ResourceProcessorBusyBox --tool MERGE_MANIFEST -- --manifest ... (remaining 11 argument(s) skipped)

Use --sandbox_debug to see verbose messages from the sandbox ResourceProcessorBusyBox failed: error executing command bazel-out/k8-opt-exec-2B5CBBC6/bin/external/bazel_tools/src/tools/android/java/com/google/devtools/build/android/ResourceProcessorBusyBox --tool MERGE_MANIFEST -- --manifest ... (remaining 11 argument(s) skipped)

Use --sandbox_debug to see verbose messages from the sandbox

Error: /home/H1Gdev/.cache/bazel/_bazel_H1Gdev/29e18157a4334967491de4cc9a879dc0/sandbox/linux-sandbox/914/execroot/__main__/app/src/main/AndroidManifest.xml:19:18-86 Error:

Attribute application@appComponentFactory value=(androidx.core.app.CoreComponentFactory) from [maven//:androidx_core_core] AndroidManifest.xml:19:18-86

is also present at [maven//:com_android_support_support_compat] AndroidManifest.xml:19:18-91 value=(android.support.v4.app.CoreComponentFactory).

Suggestion: add 'tools:replace="android:appComponentFactory"' to <application> element at AndroidManifest.xml:5:5-19:19 to override.

May 19, 2021 10:45:03 AM com.google.devtools.build.android.ManifestMergerAction main

SEVERE: Error during merging manifests

com.google.devtools.build.android.AndroidManifestProcessor$ManifestProcessingException: Manifest merger failed : Attribute application@appComponentFactory value=(androidx.core.app.CoreComponentFactory) from [maven//:androidx_core_core] AndroidManifest.xml:19:18-86

is also present at [maven//:com_android_support_support_compat] AndroidManifest.xml:19:18-91 value=(android.support.v4.app.CoreComponentFactory).

Suggestion: add 'tools:replace="android:appComponentFactory"' to <application> element at AndroidManifest.xml:5:5-19:19 to override.

at com.google.devtools.build.android.AndroidManifestProcessor.mergeManifest(AndroidManifestProcessor.java:186)

at com.google.devtools.build.android.ManifestMergerAction.main(ManifestMergerAction.java:217)

at com.google.devtools.build.android.ResourceProcessorBusyBox$Tool$5.call(ResourceProcessorBusyBox.java:93)

at com.google.devtools.build.android.ResourceProcessorBusyBox.processRequest(ResourceProcessorBusyBox.java:233)

at com.google.devtools.build.android.ResourceProcessorBusyBox.main(ResourceProcessorBusyBox.java:177)

Warning:

See http://g.co/androidstudio/manifest-merger for more information about the manifest merger.

Target //app/src/main:app failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 2.221s, Critical Path: 1.79s

INFO: 2 processes: 2 internal.

FAILED: Build did NOT complete successfully

```

This is due to a conflict between `AndroidX` and the `Support Library`, on which `pytorch_android_torch` depends.

(In the case of `Gradle`, it is avoided by `android.useAndroidX`.)

I created [Android application](https://github.com/H1Gdev/android-bazel-app) for comparison.

At first, I updated `AppCompat` from the `Support Library` to `AndroidX`, but `pytorch_android` and `pytorch_android_torchvision` didn't seem to need any of these dependencies, so I removed them.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58527

Reviewed By: xta0

Differential Revision: D28585234

Pulled By: IvanKobzarev

fbshipit-source-id: 78aa6b1525543594ae951a6234dd88a3fdbfc062

Summary:

Build the lite interpreter as the default for Android. This should wait until https://github.com/pytorch/pytorch/pull/56002 lands.

There are two main changes:

1. Use the lite interpreter as the default for Android.

2. Switch the lite interpreter build test to a full JIT build test.

Test Plan: Imported from OSS

Differential Revision: D27695530

Reviewed By: IvanKobzarev

Pulled By: cccclai

fbshipit-source-id: e1b2c70fee6590accc22c7404b9dd52c7d7c36e2

Summary:

Switching PyTorch Android to use fbjni from prefab dependencies.

Bumping the fbjni version to 0.2.2 and the soloader version to 0.10.1.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55066

Reviewed By: dreiss

Differential Revision: D27469727

Pulled By: IvanKobzarev

fbshipit-source-id: 2ab22879e81c9f2acf56807c6a133b0ca20bb40a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53567

Updating Gradle to version 6.8.3.

The proper zip was uploaded to AWS.

Successful CI check: https://github.com/pytorch/pytorch/pull/53619

Test Plan: Imported from OSS

Reviewed By: dreiss

Differential Revision: D26928885

Pulled By: IvanKobzarev

fbshipit-source-id: b1081052967d9080cd6934fd48c4dbe933630e49

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51419

## Summary

1. Add an option `BUILD_LITE_INTERPRETER` to `caffe2/CMakeLists.txt`, set to `OFF` by default.

2. Update `build_android.sh` with an argument to switch `BUILD_LITE_INTERPRETER`, `OFF` by default.

3. Add a mini demo app, `lite_interpreter_demo`, linked with the `libtorch` library, which can be used for quick tests.

## Test Plan

Built the lite interpreter version of libtorch and tested it with the Image Segmentation demo app ([android version](https://github.com/pytorch/android-demo-app/tree/master/ImageSegmentation)/[ios version](https://github.com/pytorch/ios-demo-app/tree/master/ImageSegmentation))

### Android

1. **Prepare model**: Prepare the lite interpreter version of the model by running the script below, which generates the scripted models `deeplabv3_scripted.pt` and `deeplabv3_scripted.ptl`:

```
import torch

model = torch.hub.load('pytorch/vision:v0.7.0', 'deeplabv3_resnet50', pretrained=True)
model.eval()

scripted_module = torch.jit.script(model)

# Export the full JIT version of the model (not compatible with the lite interpreter); kept for comparison
scripted_module.save("deeplabv3_scripted.pt")

# Export the lite interpreter version of the model (compatible with the lite interpreter)
scripted_module._save_for_lite_interpreter("deeplabv3_scripted.ptl")
```

2. **Build libtorch lite for Android**: Build libtorch for all 4 Android ABIs (armeabi-v7a, arm64-v8a, x86, x86_64) with `BUILD_LITE_INTERPRETER=1 ./scripts/build_pytorch_android.sh`. This PR was tested on a Pixel 4 emulator with x86, so use `BUILD_LITE_INTERPRETER=1 ./scripts/build_pytorch_android.sh x86` to build only that ABI and save build time. When the build finishes, it shows the library paths:

```

...

BUILD SUCCESSFUL in 55s

134 actionable tasks: 22 executed, 112 up-to-date

+ find /Users/chenlai/pytorch/android -type f -name '*aar'

+ xargs ls -lah

-rw-r--r-- 1 chenlai staff 13M Feb 11 11:48 /Users/chenlai/pytorch/android/pytorch_android/build/outputs/aar/pytorch_android-release.aar

-rw-r--r-- 1 chenlai staff 36K Feb 9 16:45 /Users/chenlai/pytorch/android/pytorch_android_torchvision/build/outputs/aar/pytorch_android_torchvision-release.aar

```

3. **Use the PyTorch Android libraries built from source in the ImageSegmentation app**: Create a folder `libs`; relative to the repository root, its path is `ImageSegmentation/app/libs`. Copy `pytorch_android-release` to `ImageSegmentation/app/libs/pytorch_android-release.aar`. Copy `pytorch_android_torchvision` (downloaded from [here](https://oss.sonatype.org/#nexus-search;quick~torchvision_android)) to `ImageSegmentation/app/libs/pytorch_android_torchvision.aar`. Update the `dependencies` part of `ImageSegmentation/app/build.gradle` to:

```
dependencies {
    implementation 'androidx.appcompat:appcompat:1.2.0'
    implementation 'androidx.constraintlayout:constraintlayout:2.0.2'
    testImplementation 'junit:junit:4.12'
    androidTestImplementation 'androidx.test.ext:junit:1.1.2'
    androidTestImplementation 'androidx.test.espresso:espresso-core:3.3.0'
    implementation(name:'pytorch_android-release', ext:'aar')
    implementation(name:'pytorch_android_torchvision', ext:'aar')
    implementation 'com.android.support:appcompat-v7:28.0.0'
    implementation 'com.facebook.fbjni:fbjni-java-only:0.0.3'
}
```

Update the `allprojects` part of `ImageSegmentation/build.gradle` to:

```
allprojects {
    repositories {
        google()
        jcenter()
        flatDir {
            dirs 'libs'
        }
    }
}
```

4. **Update the model loader API**: Update `ImageSegmentation/app/src/main/java/org/pytorch/imagesegmentation/MainActivity.java` as follows:

4.1 Add a new import: `import org.pytorch.LiteModuleLoader;`

4.2 Replace the model loading call so that it loads the lite model:

```

// mModule = Module.load(MainActivity.assetFilePath(getApplicationContext(), "deeplabv3_scripted.pt"));

mModule = LiteModuleLoader.load(MainActivity.assetFilePath(getApplicationContext(), "deeplabv3_scripted.ptl"));

```

5. **Test the app**: Build and run the ImageSegmentation app in Android Studio.

### iOS

1. **Prepare model**: Same as Android.

2. **Build libtorch lite for iOS**: `BUILD_PYTORCH_MOBILE=1 IOS_PLATFORM=SIMULATOR BUILD_LITE_INTERPRETER=1 ./scripts/build_ios.sh`

3. **Remove Cocoapods from the project**: run `pod deintegrate`

4. **Link ImageSegmentation demo app with the custom built library**:

Open your project in Xcode, go to your project Target’s **Build Phases - Link Binaries With Libraries**, click the **+** sign and add all the library files located in `build_ios/install/lib`. Navigate to the project **Build Settings** and set **Header Search Paths** to `build_ios/install/include` and **Library Search Paths** to `build_ios/install/lib`.

In the build settings, search for **Other Linker Flags** and add the custom linker flag below:

```

-all_load

```

Finally, disable bitcode for your target by selecting Build Settings, searching for Enable Bitcode, and setting the value to No.

5. **Update library and API**

5.1 Update `TorchModule.mm`

To use the custom-built libraries in the project, replace `#import <LibTorch/LibTorch.h>` in `TorchModule.mm` (which is needed when using LibTorch via CocoaPods) with the code below:

```

//#import <LibTorch/LibTorch.h>

#include "ATen/ATen.h"

#include "caffe2/core/timer.h"

#include "caffe2/utils/string_utils.h"

#include "torch/csrc/autograd/grad_mode.h"

#include "torch/script.h"

#include <torch/csrc/jit/mobile/function.h>

#include <torch/csrc/jit/mobile/import.h>

#include <torch/csrc/jit/mobile/interpreter.h>

#include <torch/csrc/jit/mobile/module.h>

#include <torch/csrc/jit/mobile/observer.h>

```

5.2 Update `ViewController.swift`

```
// if let filePath = Bundle.main.path(forResource:
//     "deeplabv3_scripted", ofType: "pt"),
//     let module = TorchModule(fileAtPath: filePath) {
//     return module
// } else {
//     fatalError("Can't find the model file!")
// }
if let filePath = Bundle.main.path(forResource:
    "deeplabv3_scripted", ofType: "ptl"),
    let module = TorchModule(fileAtPath: filePath) {
    return module
} else {
    fatalError("Can't find the model file!")
}
```

### Unit test

Add `test/cpp/lite_interpreter`, with one unit test `test_cores.cpp` and a light model `sequence.ptl` to test `_load_for_mobile()`, `bc.find_method()` and `bc.forward()` functions.

### Size:

**With the change:**

Android:

x86: `pytorch_android-release.aar` (**13.8 MB**)

iOS:

`pytorch/build_ios/install/lib` (lib: **66 MB**):

```

(base) chenlai@chenlai-mp lib % ls -lh

total 135016

-rw-r--r-- 1 chenlai staff 3.3M Feb 15 20:45 libXNNPACK.a

-rw-r--r-- 1 chenlai staff 965K Feb 15 20:45 libc10.a

-rw-r--r-- 1 chenlai staff 4.6K Feb 15 20:45 libclog.a

-rw-r--r-- 1 chenlai staff 42K Feb 15 20:45 libcpuinfo.a

-rw-r--r-- 1 chenlai staff 39K Feb 15 20:45 libcpuinfo_internals.a

-rw-r--r-- 1 chenlai staff 1.5M Feb 15 20:45 libeigen_blas.a

-rw-r--r-- 1 chenlai staff 148K Feb 15 20:45 libfmt.a

-rw-r--r-- 1 chenlai staff 44K Feb 15 20:45 libpthreadpool.a

-rw-r--r-- 1 chenlai staff 166K Feb 15 20:45 libpytorch_qnnpack.a

-rw-r--r-- 1 chenlai staff 384B Feb 15 21:19 libtorch.a

-rw-r--r-- 1 chenlai staff 60M Feb 15 20:47 libtorch_cpu.a

```

`pytorch/build_ios/install`:

```

(base) chenlai@chenlai-mp install % du -sh *

14M include

66M lib

2.8M share

```

**Master (baseline):**

Android:

x86: `pytorch_android-release.aar` (**16.2 MB**)

iOS:

`pytorch/build_ios/install/lib` (lib: **84 MB**):

```

(base) chenlai@chenlai-mp lib % ls -lh

total 172032

-rw-r--r-- 1 chenlai staff 3.3M Feb 17 22:18 libXNNPACK.a

-rw-r--r-- 1 chenlai staff 969K Feb 17 22:18 libc10.a

-rw-r--r-- 1 chenlai staff 4.6K Feb 17 22:18 libclog.a

-rw-r--r-- 1 chenlai staff 42K Feb 17 22:18 libcpuinfo.a

-rw-r--r-- 1 chenlai staff 1.5M Feb 17 22:18 libeigen_blas.a

-rw-r--r-- 1 chenlai staff 44K Feb 17 22:18 libpthreadpool.a

-rw-r--r-- 1 chenlai staff 166K Feb 17 22:18 libpytorch_qnnpack.a

-rw-r--r-- 1 chenlai staff 384B Feb 17 22:19 libtorch.a

-rw-r--r-- 1 chenlai staff 78M Feb 17 22:19 libtorch_cpu.a

```

`pytorch/build_ios/install`:

```

(base) chenlai@chenlai-mp install % du -sh *

14M include

84M lib

2.8M share

```
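The listings above can be condensed into size reductions relative to master. The sketch below only does arithmetic on the numbers reported in this section. The values are taken as printed, and `ls -lh`/`du -sh` round and use 1024-based units, so the percentages are approximate. The dictionary labels are illustrative.

```python
# Sizes reported above, in MB: (master baseline, with lite interpreter).
SIZES_MB = {
    "Android x86 pytorch_android-release.aar": (16.2, 13.8),
    "iOS build_ios/install/lib": (84.0, 66.0),
    "iOS libtorch_cpu.a": (78.0, 60.0),
}


def reduction(baseline, new):
    """Return (absolute saving in MB, relative saving in percent)."""
    saved = baseline - new
    return saved, 100.0 * saved / baseline


for name, (baseline, new) in SIZES_MB.items():
    saved, pct = reduction(baseline, new)
    print(f"{name}: {baseline:.1f} -> {new:.1f} MB, saves {saved:.1f} MB ({pct:.1f}%)")
```

Running it reports savings of about 2.4 MB (14.8%) for the x86 aar, 18.0 MB (21.4%) for the iOS `lib` directory, and 18.0 MB (23.1%) for `libtorch_cpu.a`.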

Test Plan: Imported from OSS

Reviewed By: iseeyuan

Differential Revision: D26518778

Pulled By: cccclai

fbshipit-source-id: 4503ffa1f150ecc309ed39fb0549e8bd046a3f9c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40442

Problem:

Nightly builds do not include the libtorch headers, while local builds do.

The reason is that the path on the Docker images differs from the local path used when building with `scripts/build_pytorch_android.sh`.

Solution:

Introducing a Gradle property to specify this path, and setting it in the Gradle build job and the snapshot publishing job, which run on the same Docker image.

Test:

ci-all jobs check: https://github.com/pytorch/pytorch/pull/40443

Checked that the Gradle build produces an aar with the headers inside.

Test Plan: Imported from OSS

Differential Revision: D22190955

Pulled By: IvanKobzarev

fbshipit-source-id: 9379458d8ab024ee991ca205a573c21d649e5f8a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39588

Before this diff we used c++_static linking.

Users dynamically link against libpytorch_jni.so and have at least one shared library of their own, which probably also uses an STL.

An app must not have more than one STL (https://developer.android.com/ndk/guides/cpp-support#one_stl_per_app).

To have only one STL per app, this diff changes ANDROID_STL to c++_shared, which adds libc++_shared.so to the packaging.

Test Plan: Imported from OSS

Differential Revision: D22118031

Pulled By: IvanKobzarev

fbshipit-source-id: ea1e5085ae207a2f42d1fa9f6ab8ed0a21768e96

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39507

Adding a Gradle task, run after `assemble`, that adds a `headers` folder to the aar.

Headers are taken from the first specified ABI; they should be the same for all ABIs.

Adding the headers works by temporarily unpacking the aar into the Gradle `$buildDir`, copying the headers into it, and zipping the aar back up with the headers.
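The unpack, copy, re-zip sequence can be sketched in Python with `zipfile`, because an aar is a plain zip archive. `add_headers_to_aar` is a hypothetical stand-alone helper, not the Gradle task added in this diff. The caller is assumed to pass the include directory of the first specified ABI, as described above.

```python
import os
import shutil
import tempfile
import zipfile


def add_headers_to_aar(aar_path, headers_dir, build_dir):
    """Hypothetical helper: unpack `aar_path` into a temp dir under `build_dir`,
    copy `headers_dir` into it as `headers/`, and re-zip the aar in place."""
    work_dir = tempfile.mkdtemp(dir=build_dir)
    try:
        # 1. Unpack the aar (a plain zip archive) into the temporary directory.
        with zipfile.ZipFile(aar_path) as aar:
            aar.extractall(work_dir)
        # 2. Copy the headers tree next to classes.jar, jni/, etc.
        shutil.copytree(headers_dir, os.path.join(work_dir, "headers"), dirs_exist_ok=True)
        # 3. Zip everything back, overwriting the original aar.
        with zipfile.ZipFile(aar_path, "w", zipfile.ZIP_DEFLATED) as out:
            for root, _dirs, files in os.walk(work_dir):
                for name in files:
                    full = os.path.join(root, name)
                    out.write(full, os.path.relpath(full, work_dir))
    finally:
        # Remove the temporary unpack directory whether or not zipping succeeded.
        shutil.rmtree(work_dir)
```

The temporary directory lives under the build directory, mirroring the use of `$buildDir`, and is always cleaned up. `dirs_exist_ok=True` (Python 3.8+) covers an aar that already contains a `headers/` folder.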

Test Plan: Imported from OSS

Differential Revision: D22118009

Pulled By: IvanKobzarev

fbshipit-source-id: 52e5b1e779eb42d977c67dba79e278f1922b8483

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30175

fbjni was open-sourced and its Java part is published as 'com.facebook.fbjni:fbjni-java-only:0.0.3'; this diff switches to it.

We still need the fbjni submodule inside the repo (which already points to https://github.com/facebookincubator/fbjni) for linking the .so.

**Packaging changes**:

Before this change, `libfbjni.so` came from the pytorch_android_fbjni dependency. Because we also linked fbjni in `pytorch_android/CMakeLists.txt`, it was also built in pytorch_android, but excluded for publishing. As we had 2 copies of libfbjni.so, there was a hack to exclude it for publishing and resolve the duplication locally:

```
if (rootProject.isPublishing()) {
    exclude '**/libfbjni.so'
} else {
    pickFirst '**/libfbjni.so'
}
```

After this change, fbjni.so is packaged inside the pytorch_android.aar artifact, and we no longer need this Gradle logic.

I will update the README in a separate PR, after the previous README PR (https://github.com/pytorch/pytorch/pull/30128) lands, to avoid conflicts.

Test Plan: Imported from OSS

Differential Revision: D18982235

Pulled By: IvanKobzarev

fbshipit-source-id: 5097df2557858e623fa480625819a24a7e8ad840

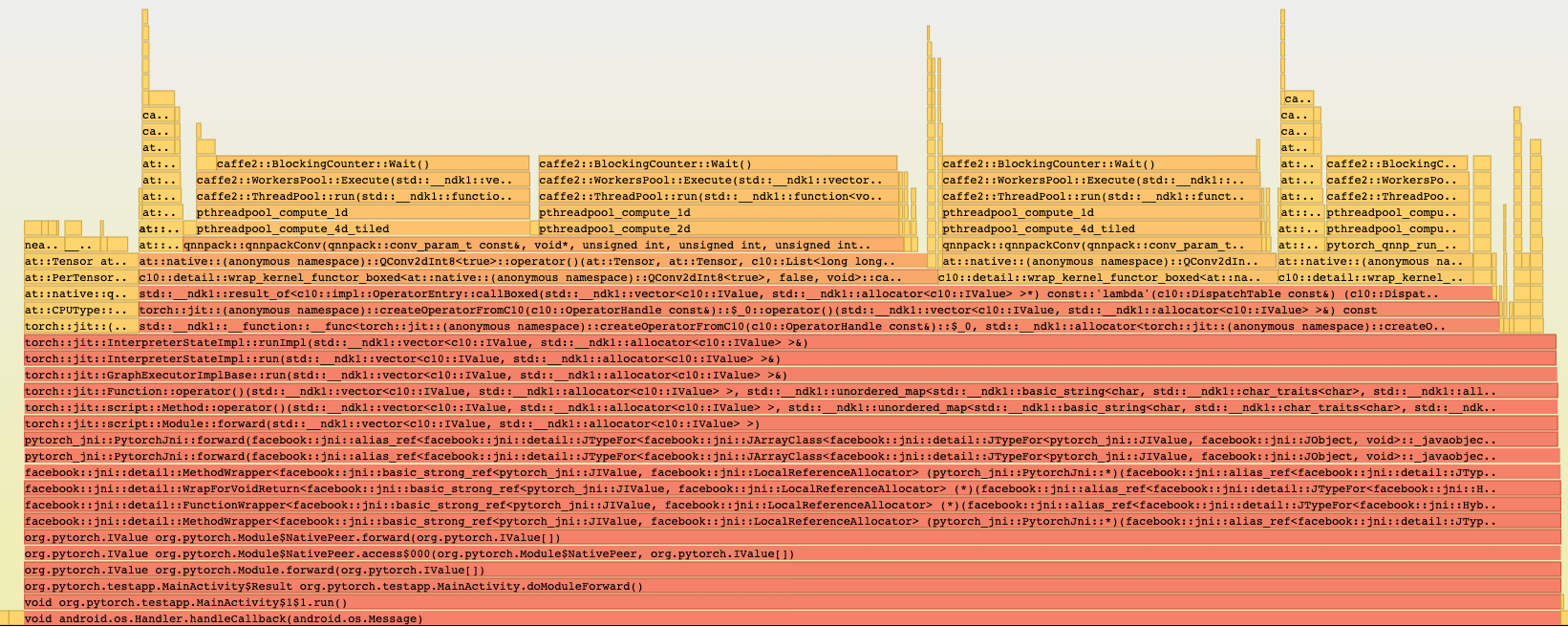

Summary:

Reason:

To have a one-step build of a test Android application, based on the current code state, that is ready for profiling with simpleperf, systrace, etc. to measure performance inside the application.

## Parameters to control debug symbols stripping

Introducing the /CMakeLists parameter `ANDROID_DEBUG_SYMBOLS`, checked in `scripts/build_android.sh`, which makes it possible to keep symbols in pytorch (i.e. not add the linker flag `-s`).

On the Gradle side, stripping happens by default; to prevent it we have to specify:

```
android {
    packagingOptions {
        doNotStrip "**/*.so"
    }
}
```

This is now controlled by the new Gradle property `nativeLibsDoNotStrip`.

## Test_App

`android/test_app` - an Android app with one MainActivity that runs inference in a loop

`android/build_test_app.sh` - a script that builds libtorch with debug symbols for the specified Android ABIs and adds `NDK_DEBUG=1` and `-PnativeLibsDoNotStrip=true` to keep all debug symbols for profiling.

The script assembles all debug flavors:

```

└─ $ find . -type f -name *apk

./test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

./test_app/app/build/outputs/apk/resnet/debug/test_app-resnet-debug.apk

```

## Different build configurations

The module used for inference can be set in `android/test_app/app/build.gradle` as a BuildConfig parameter:

```
productFlavors {
    mobilenetQuant {
        dimension "model"
        applicationIdSuffix ".mobilenetQuant"
        buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_MOBILENET_QUANT'))
        addManifestPlaceholders([APP_NAME: "PyMobileNetQuant"])
        buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-mobilenet\"")
    }
    resnet {
        dimension "model"
        applicationIdSuffix ".resnet"
        buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_RESNET18'))
        addManifestPlaceholders([APP_NAME: "PyResnet"])
        buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-resnet\"")
    }
}
```

This way we can set up several apps on the same device for comparison. The packages are separated by `applicationIdSuffix` (e.g. 'org.pytorch.testapp.mobilenetQuant'). The application names and logcat tags differ via `manifestPlaceholder` and another BuildConfig parameter:

```

─ $ adb shell pm list packages | grep pytorch

package:org.pytorch.testapp.mobilenetQuant

package:org.pytorch.testapp.resnet

```

In the future we can add more BuildConfig parameters, e.g. single/multi-threaded execution and other configurations for profiling.

At the moment there are 2 flavors, for resnet18 and for mobilenetQuantized, which can be installed on a connected device:

```

cd android

```

```

gradle test_app:installMobilenetQuantDebug

```

```

gradle test_app:installResnetDebug

```

## Testing:

```

cd android

sh build_test_app.sh

adb install -r test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

```

```

cd $ANDROID_NDK

python simpleperf/run_simpleperf_on_device.py record --app org.pytorch.testapp.mobilenetQuant -g --duration 10 -o /data/local/tmp/perf.data

adb pull /data/local/tmp/perf.data

python simpleperf/report_html.py

```

The simpleperf report has all symbols.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28406

Differential Revision: D18386622

Pulled By: IvanKobzarev

fbshipit-source-id: 3a751192bbc4bc3c6d7f126b0b55086b4d586e7a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26995

Fix the current setup: with fbjni excluded, we cannot use pytorch_android:package independently, for example for testing with `gradle pytorch_android:cAT`.

For publishing, however, excluding it works, as pytorch_android depends on fbjni, which will also be published.

In other cases we have 2 copies of fbjni.so: one from the native build (CMakeLists.txt does add_subdirectory(fbjni_dir)) and one from the dependency ':fbjni'.

We need both of them, as ':fbjni' also contains Java classes.

As a fix: keep excluding it for publishing tasks (bintrayUpload, uploadArchives), and otherwise use pickFirst (as we have 2 sources of fbjni.so).
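The exclude-versus-pickFirst decision above can be modeled as a small function. This is a Python sketch of the rule, not the actual Gradle script. `PUBLISHING_TASKS`, `is_publishing` and `fbjni_packaging_rule` are hypothetical names. The sketch also assumes that "publishing" means one of the requested task names, possibly project-qualified, is a publishing task.

```python
# Hypothetical model of the packagingOptions choice for libfbjni.so.
PUBLISHING_TASKS = {"bintrayUpload", "uploadArchives"}


def is_publishing(requested_tasks):
    """True if any requested Gradle task (possibly project-qualified,
    e.g. ':pytorch_android:bintrayUpload') is a publishing task."""
    return any(task.split(":")[-1] in PUBLISHING_TASKS for task in requested_tasks)


def fbjni_packaging_rule(requested_tasks):
    # Publishing: drop the native-build copy; consumers get libfbjni.so via ':fbjni'.
    # Otherwise: two copies exist (native build + ':fbjni'), so keep the first one.
    return "exclude" if is_publishing(requested_tasks) else "pickFirst"


print(fbjni_packaging_rule(["pytorch_android:cAT"]))             # pickFirst
print(fbjni_packaging_rule([":pytorch_android:bintrayUpload"]))  # exclude
```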

# Testing

gradle cAT works, fbjni.so included

gradle bintrayUpload (dryRun==true) - no fbjni.so

Test Plan: Imported from OSS

Differential Revision: D17637775

Pulled By: IvanKobzarev

fbshipit-source-id: edda56ba555678272249fe7018c1f3a8e179947c

Summary:

fbjni is used when linking `libpytorch.so` and is specified in `pytorch_android/CMakeLists.txt`. As a result, it is built as a separate `libfbjni.so` and included in `pytorch_android.aar`.

We also have the Java part of fbjni, connected to pytorch_android as a Gradle dependency, which also contains `libfbjni.so`.

As a result, when we specify the Gradle dependency `'org.pytorch:pytorch_android'` (which has `libfbjni.so`), its transitive dependency `'org.pytorch:pytorch_android_fbjni'` also has `libfbjni.so`, and Gradle reports an ambiguity error.

Fix: exclude libfbjni.so from the pytorch_android.aar packaging and use the `libfbjni.so` from the Gradle dependency `'org.pytorch:pytorch_android_fbjni'`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26382

Differential Revision: D17468723

Pulled By: IvanKobzarev

fbshipit-source-id: fcad648cce283b0ee7e8b2bab0041a2e079002c6

Summary:

Gradle tasks for publishing to Bintray, JCenter and Maven Central; snapshot builds go to oss.sonatype.org.

These Gradle changes add the tasks:

bintrayUpload - publishing on Bintray, in the 'facebook' org

uploadArchives - uploading to Maven repos

The Gradle tasks are copied from Facebook open-source libraries like https://github.com/facebook/litho and https://github.com/facebookincubator/spectrum

To do the publishing, the following properties need to be provided somehow (e.g. in ~/.gradle/gradle.properties):

```

signing.keyId=

signing.password=

signing.secretKeyRingFile=

bintrayUsername=

bintrayApiKey=

bintrayGpgPassword=

SONATYPE_NEXUS_USERNAME=

SONATYPE_NEXUS_PASSWORD=

```

android/libs/fbjni is a submodule. To be able to add publishing tasks for it (it needs to be published as a separate Maven dependency), I created `android/libs/fbjni_local`, which has only a `build.gradle` with the release tasks.

The pytorch_android dependency on ':fbjni' changed from `implementation` to `api`. `implementation` is treated as a 'private' dependency and is translated to scope=runtime in the Maven POM file, while `api` works as scope=compile.

Testing:

It is already published on Bintray with version 0.0.4 and can be used in Gradle files as:

```
repositories {
    maven {
        url "https://dl.bintray.com/facebook/maven"
    }
}

dependencies {
    implementation 'com.facebook:pytorch_android:0.0.4'
    implementation 'com.facebook:pytorch_android_torchvision:0.0.4'
}
```

It was published in the com.facebook group.

I requested a sync to JCenter from Bintray, which usually takes 2-3 days.

Versioning added version suffixes to the aar output files, and the CircleCI Android jobs started failing, as they expected just pytorch_android.aar and pytorch_android_torchvision.aar without any version.

To avoid this, I changed the CircleCI Android jobs to zip the *.aar files and publish them as a single artifact named artifacts.zip. I will add kostmo to check this part; if the CircleCI jobs finish OK, everything works :)
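Bundling the versioned aar files into one fixed-name artifact means downstream steps no longer depend on the version suffix. Below is a minimal Python sketch of that packaging step. It assumes the aar files can be found recursively under one search root. `zip_aars` is a hypothetical name, and the actual CircleCI job may well use a shell `zip` command instead.

```python
import glob
import os
import zipfile


def zip_aars(search_root, out_path="artifacts.zip"):
    """Collect every *.aar under `search_root` (whatever its version suffix)
    into a single zip with a fixed name; return the archived file names."""
    aars = sorted(glob.glob(os.path.join(search_root, "**", "*.aar"), recursive=True))
    with zipfile.ZipFile(out_path, "w", zipfile.ZIP_DEFLATED) as out:
        for path in aars:
            # Store by base name so the artifact layout is flat.
            out.write(path, os.path.basename(path))
    return [os.path.basename(p) for p in aars]
```

Storing entries by base name keeps the artifact flat. As a trade-off, two aars with the same file name in different modules would collide.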

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25351

Reviewed By: kostmo

Differential Revision: D17135886

Pulled By: IvanKobzarev

fbshipit-source-id: 64eebac670bbccaaafa1b04eeab15760dd5ecdf9

Summary:

Introducing CircleCI jobs for pytorch_android Gradle builds. The ultimate goal at the moment is to run:

```
gradle -p ~/workspace/android/pytorch_android assembleRelease
```

To assemble the Android Gradle build (aar), we need the libtorch-android shared library build results, with headers, for 4 Android ABIs, so pytorch_android_gradle_build requires 4 jobs:

```
- pytorch_android_gradle_build:
    requires:
      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_32_build
      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_64_build
      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_arm_v7a_build
      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_arm_v8a_build
```

All jobs use the same base docker_image. They are differentiated by committing Docker images with different android_abi suffixes, as is done now for xla and namedtensor. This is in `&pytorch_linux_build_defaults`:

```
if [[ ${BUILD_ENVIRONMENT} == *"namedtensor"* ]]; then
  export COMMIT_DOCKER_IMAGE=$output_image-namedtensor
elif [[ ${BUILD_ENVIRONMENT} == *"xla"* ]]; then
  export COMMIT_DOCKER_IMAGE=$output_image-xla
elif [[ ${BUILD_ENVIRONMENT} == *"-x86_64"* ]]; then
  # Checked before "-x86", which is a substring of "-x86_64"
  export COMMIT_DOCKER_IMAGE=$output_image-android-x86_64
elif [[ ${BUILD_ENVIRONMENT} == *"-x86"* ]]; then
  export COMMIT_DOCKER_IMAGE=$output_image-android-x86
elif [[ ${BUILD_ENVIRONMENT} == *"-arm-v7a"* ]]; then
  export COMMIT_DOCKER_IMAGE=$output_image-android-arm-v7a
elif [[ ${BUILD_ENVIRONMENT} == *"-arm-v8a"* ]]; then
  export COMMIT_DOCKER_IMAGE=$output_image-android-arm-v8a
else
  export COMMIT_DOCKER_IMAGE=$output_image
fi
```
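The `if`/`elif` chain is a first-match lookup, so order matters. `*"-x86"*` also matches any `BUILD_ENVIRONMENT` containing `-x86_64`, so the more specific pattern has to be tested first. Below is a Python sketch of the same lookup with that ordering. It uses substring tests in place of bash glob matching. The rule table mirrors the shell snippet, and `commit_docker_image` is a hypothetical name.

```python
# Ordered (substring, suffix) rules; first match wins, like the if/elif chain.
# "-x86_64" must come before "-x86" because "-x86" is a substring of "-x86_64".
SUFFIX_RULES = [
    ("namedtensor", "-namedtensor"),
    ("xla", "-xla"),
    ("-x86_64", "-android-x86_64"),
    ("-x86", "-android-x86"),
    ("-arm-v7a", "-android-arm-v7a"),
    ("-arm-v8a", "-android-arm-v8a"),
]


def commit_docker_image(build_environment, output_image):
    """Return the image name to commit for this build environment."""
    for needle, suffix in SUFFIX_RULES:
        if needle in build_environment:
            return output_image + suffix
    return output_image  # no special suffix (the `else` branch)
```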

The pytorch_android_gradle_build job copies the headers and the libtorch.so and libc10.so results from the libtorch Android Docker images. It copies them first to the workspace and then to the android_abi=x86 Docker image, where it runs the final Gradle build by calling `.circleci/scripts/build_android_gradle.sh`.

For PR jobs we have only the `pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_32_build` libtorch Android build, so there is a separate Gradle build, `pytorch_android_gradle_build-x86_32`, that does no Docker copying. It calls the same `.circleci/scripts/build_android_gradle.sh`, which has x86_32-only logic conditioned on BUILD_ENVIRONMENT:

`[[ "${BUILD_ENVIRONMENT}" == *-gradle-build-only-x86_32* ]]`

It also has a filter so that it runs only for PRs, as other runs will have the full build. The filter checks `-z "${CIRCLE_PULL_REQUEST:-}"`:

```
- run:
    name: filter_run_only_on_pr
    no_output_timeout: "5m"
    command: |
      echo "CIRCLE_PULL_REQUEST: ${CIRCLE_PULL_REQUEST:-}"
      if [ -z "${CIRCLE_PULL_REQUEST:-}" ]; then
        circleci step halt
      fi
```
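The two conditions above decide which Gradle build variant a job runs and whether the x86_32-only variant runs at all. They can be modeled as one decision function. This Python sketch assumes the environment is passed in as a dict. `plan_gradle_build` and `ALL_ABIS` are hypothetical. `BUILD_ENVIRONMENT`, `CIRCLE_PULL_REQUEST` and the `-gradle-build-only-x86_32` marker come from the description. The ABI names follow the Android names used elsewhere in this log.

```python
# Android ABI names for the full build (illustrative list).
ALL_ABIS = ["x86", "x86_64", "armeabi-v7a", "arm64-v8a"]


def plan_gradle_build(env):
    """Return the list of ABIs to build, or None if the job should halt."""
    build_env = env.get("BUILD_ENVIRONMENT", "")
    if "-gradle-build-only-x86_32" in build_env:
        # Mirrors `[ -z "${CIRCLE_PULL_REQUEST:-}" ]` -> `circleci step halt`:
        # an unset or empty variable means this is not a PR run.
        if not env.get("CIRCLE_PULL_REQUEST"):
            return None
        return ["x86"]
    # Non-PR pipelines run the full build over all ABIs.
    return ALL_ABIS
```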

Updating the Docker images to the version with Gradle, android_sdk and OpenJDK; Jenkins job with them: https://ci.pytorch.org/jenkins/job/pytorch-docker-master/339/

pytorch_android_gradle_build successful run: https://circleci.com/gh/pytorch/pytorch/2604797#artifacts/containers/0

pytorch_android_gradle_build-x86_32 successful run: https://circleci.com/gh/pytorch/pytorch/2608945#artifacts/containers/0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25286

Reviewed By: kostmo

Differential Revision: D17115861

Pulled By: IvanKobzarev

fbshipit-source-id: bc88fd38b38ed0d0170d719fffa375772bdea142

Summary:

TL;DR: initial commit of an Android Java/JNI wrapper of the TorchScript C++ API.

The main idea is to provide a Java interface for Android developers to use TorchScript modules.

The Java API tries to mirror the semantics of the C++ and Python TorchScript APIs.

org.pytorch.Module (wrapper of torch::jit::script::Module)

- static Module load(String path)

- IValue forward(IValue... inputs)

- IValue runMethod(String methodName, IValue... inputs)

org.pytorch.Tensor (semantic of at::Tensor)

- newFloatTensor(long[] dims, float[] data)

- newFloatTensor(long[] dims, FloatBuffer data)

- newIntTensor(long[] dims, int[] data)

- newIntTensor(long[] dims, IntBuffer data)

- newByteTensor(long[] dims, byte[] data)

- newByteTensor(long[] dims, ByteBuffer data)

org.pytorch.IValue (semantic of at::IValue)

- static factory methods to create TorchScript-supported types

Examples of API usage can be found in PytorchInstrumentedTests.java:

```
Module module = Module.load(path);
IValue input = IValue.tensor(Tensor.newByteTensor(new long[]{1}, Tensor.allocateByteBuffer(1)));
IValue output = module.forward(input);
Tensor outputTensor = output.getTensor();
```

Thread safety:

The API is not thread-safe; all synchronization must be done on the caller side.

Mutability:

The org.pytorch.Tensor buffer is a DirectBuffer with native byte order; tensors can be created with the static factory methods that take a DirectBuffer.

At the moment org.pytorch.Tensor does not hold an at::Tensor on the JNI side; it has: long[] dimensions, type, DirectByteBuffer blobData.

Input tensors are mutable: they can be modified and reused for the next inference. The values in the buffer at the moment of the Module#forward or Module#runMethod call are the ones used.

The buffers of input tensors are used directly by the input at::Tensor.

The output is copied from the output at::Tensor and is immutable.
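The buffer semantics above (inputs shared, outputs copied) can be illustrated with a toy model. This is a Python analogue built on `bytearray`/`memoryview`, not the pytorch_android API. `ToyTensor` and `toy_forward` are hypothetical, and the "model" simply doubles each byte modulo 256.

```python
class ToyTensor:
    """Hypothetical stand-in for org.pytorch.Tensor: a shape plus a data buffer."""

    def __init__(self, shape, buffer):
        self.shape = tuple(shape)
        self.data = buffer


def toy_forward(inp):
    # Input: the "native" side reads the caller's buffer directly (no copy),
    # so whatever the buffer holds at call time is what gets used.
    view = memoryview(inp.data)
    result = bytearray((b * 2) % 256 for b in view)
    # Output: copied into a fresh, immutable bytes object.
    return ToyTensor(inp.shape, bytes(result))


buf = bytearray([1, 2, 3])
x = ToyTensor([3], buf)
y1 = toy_forward(x)
buf[0] = 10  # mutate the input buffer and reuse the same tensor
y2 = toy_forward(x)
print(list(y1.data), list(y2.data))  # [2, 4, 6] [20, 4, 6]
```

Reusing `x` after mutating `buf` changes the second result only. The first output is an independent immutable copy.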

Dependencies:

The JNI level is implemented using the fbjni library, which was developed at Facebook and has already been used in, and open-sourced as part of, several open-source projects.

It is added to the repo as a submodule from a personal account, so that the submodule can be switched once fbjni is open-sourced separately.

ghstack-source-id: b39c848359a70d717f2830a15265e4aa122279c0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25084

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25105

Reviewed By: dreiss

Differential Revision: D16988107

Pulled By: IvanKobzarev

fbshipit-source-id: 41ca7c9869f8370b8504c2ef8a96047cc16516d4