Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63197

This removes the non-determinism caused by using hash values in sort methods.
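The sketch below is a rough Python analogue of the problem, not the NNC code itself. It contrasts two ways of ordering objects. Sorting by a hash-like key such as `id()` depends on memory layout and can change between runs. Sorting by a stable key gives the same order every run; here that key is a hypothetical creation counter, `uid`.

```python
import itertools
from dataclasses import dataclass, field

# Hypothetical global counter giving every node a stable, creation-order id.
_next_id = itertools.count()


@dataclass
class Node:
    name: str
    # Assigned once at construction; unlike id() or hash(), it does not
    # depend on memory addresses or per-process hash seeds.
    uid: int = field(default_factory=lambda: next(_next_id))


def sort_nondeterministic(nodes):
    # id() reflects the object's address, so this order can differ between runs.
    return sorted(nodes, key=id)


def sort_deterministic(nodes):
    # Creation order is reproducible, so this order is stable across runs.
    return sorted(nodes, key=lambda n: n.uid)


nodes = [Node("a"), Node("b"), Node("c")]
print([n.name for n in sort_deterministic(reversed(nodes))])  # ['a', 'b', 'c']
```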

Changes in tests are mostly mechanical.

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D30292776

Pulled By: ZolotukhinM

fbshipit-source-id: 74f57b53c3afc9d4be45715fd74781271373e055

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63195

This helps us later switch from using KernelArena with raw pointers to shared pointers without having to change all our source files at once.

The changes are mechanical and should not affect any functionality.

With this PR, we're changing the following:

* `Add*` --> `AddPtr`

* `new Add(...)` --> `alloc<Add>(...)`

* `dynamic_cast<Add*>` --> `to<Add>`

* `static_cast<Add*>` --> `static_to<Add>`

Due to some complications with args forwarding, some places became more verbose, e.g.:

* `new Block({})` --> `new Block(std::vector<ExprPtr>())`
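The renames route every allocation and every cast through a single helper, so the pointer type can later be swapped in one place. Below is a Python analogue of that indirection. `alloc`, `to` and `static_to` here are illustrative stand-ins modeled on the names above. They are not the actual C++ templates.

```python
class Expr:
    pass


class Add(Expr):
    def __init__(self, lhs, rhs):
        self.lhs, self.rhs = lhs, rhs


class IntImm(Expr):
    def __init__(self, value):
        self.value = value


def alloc(cls, *args, **kwargs):
    # Single allocation point: changing how nodes are created or owned
    # (e.g. arena vs. reference counting) only requires editing this function.
    return cls(*args, **kwargs)


def to(cls, node):
    # Checked downcast, analogous to dynamic_cast: returns None on mismatch.
    return node if isinstance(node, cls) else None


def static_to(cls, node):
    # Unchecked cast, analogous to static_cast: the caller guarantees the type.
    # Python has no unchecked cast, so this simply returns the node.
    return node


e = alloc(Add, alloc(IntImm, 1), alloc(IntImm, 2))
assert to(Add, e) is e
assert to(IntImm, e) is None
```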

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D30292779

Pulled By: ZolotukhinM

fbshipit-source-id: 150301c7d2df56b608b035827b6a9a87f5e2d9e9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63194

This test implements functionality that is used nowhere, and the author no longer works on it. This PR also adds test_approx to CMakeLists, where it had been missing before.

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D30292777

Pulled By: ZolotukhinM

fbshipit-source-id: ab6d98e729320a16f1b02ea0c69734f5e7fb2554

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63348

This change addresses singlaiiit's comment on D30241792 (61b49c8e41), making the JIT interpreter's behavior consistent regardless of whether `future` is set.

Test Plan: Enhanced `EnableRethrowCaughtExceptionTest.EnableRethrowCaughtExceptionTestRethrowsCaughtException` to cover the modified code path.

Reviewed By: singlaiiit

Differential Revision: D30347782

fbshipit-source-id: 79ce57283154ca4372e5341217d942398db21ac8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62419

This diff adds support for a CPU-only Kineto profiler on mobile, thus enabling Chrome trace generation on mobile. This brings the C++ API for mobile profiling on par with TorchScript.

This is done via:

1. Utilizing debug handle annotations in KinetoEvent.

2. Adding post-processing capability, via callbacks, to KinetoThreadLocalState.

3. Creating a new RAII-style profiler, KinetoEdgeCPUProfiler, which can be used in the surrounding scope of model execution. It writes a Chrome trace to the location specified in the profiler constructor.
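The RAII pattern in point 3 can be sketched with a Python context manager. Events are collected while the scope is active, and a Chrome trace is written when the scope exits. `EdgeProfilerSketch` and `record` are hypothetical names. Only the JSON layout follows the Chrome Trace Event format: a `traceEvents` list of complete `"ph": "X"` events with microsecond `ts`/`dur`.

```python
import json
import os
import tempfile
import time


class EdgeProfilerSketch:
    """Hypothetical RAII-style profiler: writes a Chrome trace on scope exit."""

    def __init__(self, trace_path):
        self.trace_path = trace_path
        self.events = []

    def record(self, name, start_us, dur_us):
        # Complete event ("ph": "X") in the Chrome Trace Event format.
        self.events.append({"name": name, "ph": "X", "ts": start_us,
                            "dur": dur_us, "pid": os.getpid(), "tid": 0})

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        # Like a C++ destructor, this runs even if the body raised.
        with open(self.trace_path, "w") as f:
            json.dump({"traceEvents": self.events}, f)
        return False  # do not swallow exceptions


path = os.path.join(tempfile.mkdtemp(), "trace.json")
with EdgeProfilerSketch(path) as prof:
    t0 = time.perf_counter_ns() // 1000
    # ... run the model here ...
    prof.record("aten::conv2d", t0, 42)
```

The resulting file can be opened in `chrome://tracing`.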

Test Plan:

MobileProfiler.ModuleHierarchy

Imported from OSS

Reviewed By: raziel

Differential Revision: D29993660

fbshipit-source-id: 0b44f52f9e9c5f5aff81ebbd9273c254c3c03299

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62417

This diff adds an option to make enableProfiler enable callbacks only for certain RecordScopes.

Why?

Profiling has some overhead when we repeatedly execute callbacks for all scopes. On the mobile side, where we often have small quantized models, this overhead can be large. We observed that we can limit this overhead by profiling only the top-level op and skipping the other aten ops called within it. For example, instead of profiling at::conv2d -> at::convolution -> at::_convolution, plus any further ops such as transpose, we profile only at::conv2d. Of course this limits visibility, but at least this way we get a choice.
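Below is a minimal sketch of scope-based filtering. It assumes a simplified `Scope` enum with only two members (the real `RecordScope` has more) and a hypothetical `Profiler` class. The lite interpreter would record its top-level ops under its own scope. Enabling callbacks only for that scope then skips the nested aten calls.

```python
from contextlib import contextmanager
from enum import Enum, auto


class Scope(Enum):
    # Simplified stand-in for RecordScope.
    FUNCTION = auto()          # ops called from inside other ops
    LITE_INTERPRETER = auto()  # top-level ops dispatched by the interpreter


class Profiler:
    def __init__(self, enabled_scopes):
        self.enabled_scopes = set(enabled_scopes)
        self.recorded = []

    @contextmanager
    def record_function(self, name, scope):
        # The callback only runs for enabled scopes; other scopes cost just
        # this membership check.
        if scope in self.enabled_scopes:
            self.recorded.append(name)
        yield


prof = Profiler(enabled_scopes={Scope.LITE_INTERPRETER})
with prof.record_function("aten::conv2d", Scope.LITE_INTERPRETER):
    with prof.record_function("aten::convolution", Scope.FUNCTION):
        with prof.record_function("aten::_convolution", Scope.FUNCTION):
            pass
print(prof.recorded)  # ['aten::conv2d']
```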

Test Plan: Imported from OSS

Reviewed By: ilia-cher

Differential Revision: D29993659

fbshipit-source-id: 852d3ae7822f0d94dc6e507bd4019b60d488ef69

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62228

This diff adds debug handles to events and provides a way to use RECORD_FUNCTIONs that pass debug_handles down to the profiler, which records them in the events.

Why add debug_handles?

For PyTorch mobile with the lite interpreter, we generate debug handles that can be used to lazily symbolicate exception traces into model-level stack traces, similar to the model-level stack trace you get in TorchScript models. The debug_handles also enable getting the module hierarchy for lite interpreter models, support for which was added to KinetoProfiler in previous diffs.

Followup plan:

1. Enable scope callbacks so that the lite interpreter can use them to profile only top-level ops.

2. Enable post-processing callbacks that take KinetoEvents and populate the module hierarchy using debug handles.

This will let us use KinetoProfiler for lite interpreter use cases on mobile. The aim is to use an RAII guard to similarly generate Chrome traces for mobile use cases as well, although only for top-level ops.
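The two follow-up steps can be pictured with a small sketch. Each event carries an integer debug handle. After profiling, a post-processing callback fills in the module hierarchy by looking the handle up in a table. The `Event` fields, the handle table, the `-1` sentinel and `populate_module_hierarchy` are all hypothetical. In practice the mapping would come from the model's debug information.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Event:
    name: str
    debug_handle: int
    module_hierarchy: Optional[str] = None


def populate_module_hierarchy(
    handle_to_hierarchy: Dict[int, str],
) -> Callable[[List[Event]], None]:
    # Returns a post-processing callback that annotates events in place.
    def callback(events: List[Event]) -> None:
        for ev in events:
            # Unknown handles (here a hypothetical -1 sentinel) stay None.
            ev.module_hierarchy = handle_to_hierarchy.get(ev.debug_handle)
    return callback


events = [Event("aten::conv2d", 7), Event("aten::relu", 8), Event("aten::add", -1)]
post_process = populate_module_hierarchy({
    7: "TOP(Net)::forward.conv1(Conv2d)::forward",
    8: "TOP(Net)::forward.relu(ReLU)::forward",
})
post_process(events)
```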

Test Plan:

test_misc : RecordDebugHandles.Basic

Imported from OSS

Reviewed By: ilia-cher

Differential Revision: D29935899

fbshipit-source-id: 4f06dc411b6b5fe0ffaebdd26d3274c96f8f389b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61792

KinetoEvent

This PR adds module hierarchy information to events.

What is module hierarchy information attached to events?

While profiling a TorchScript module, when events are added, we ask JIT what module hierarchy is associated with the node being executed. At the time that node executes, there might be multiple frames in the interpreter's stack. For each frame, we find the corresponding node and query its module hierarchy.

The module hierarchy corresponding to a node is associated with the node's InlinedCallStack. A node's InlinedCallStack tracks the path via which the node was inlined. Thus, during the inlining process, we annotate module information corresponding to the CallMethod nodes being inlined.

With this PR, the Chrome trace will contain additional metadata: "Module Hierarchy". It can look like this:

TOP(ResNet)::forward.SELF(ResNet)::_forward_impl.layer1(Sequential)::forward.0(BasicBlock)::forward.conv1(Conv2d)::forward.SELF(Conv2d)::_conv_forward

It contains the module instance name, type name, and method name of each frame in the call stack.
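Each entry has the shape `instance(Type)::method`, and entries are joined with `.`, so the string can be split back into its parts. Below is a minimal parsing sketch. It assumes instance, type and method names are plain identifiers. Fully qualified type names containing `.` would need a different approach.

```python
import re

# One call-stack entry: instance name, type name in parentheses, then ::method.
ENTRY = re.compile(r"(\w+)\((\w+)\)::(\w+)")


def parse_module_hierarchy(s):
    """Split a "Module Hierarchy" string into (instance, type, method) tuples."""
    return ENTRY.findall(s)


h = ("TOP(ResNet)::forward.SELF(ResNet)::_forward_impl."
     "layer1(Sequential)::forward.0(BasicBlock)::forward."
     "conv1(Conv2d)::forward.SELF(Conv2d)::_conv_forward")
for instance, type_name, method in parse_module_hierarchy(h):
    print(f"{instance:>6}  {type_name:<10} {method}")
```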

Test Plan:

test_profiler

Imported from OSS

Reviewed By: raziel, ilia-cher

Differential Revision: D29745442

fbshipit-source-id: dc8dfaf7c5b8ab256ff0b2ef1e5ec265ca366528

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63073

Printing verbose stack traces in exception messages turned out to be less than ideal in high-QPS services with a non-negligible failure rate (see the related task). Long stack traces get truncated, which loses the original exception message thrown from native code. In such a use case, it is actually desirable to retain only the message of the original exception thrown directly from native code.

This change adds a new flag, `torch_jit_disable_exception_stacktrace`, to the PyTorch JIT interpreter to suppress stack traces in the messages of exceptions thrown from the interpreter.

Reviewed By: Krovatkin

Differential Revision: D30241792

fbshipit-source-id: c340225c69286663cbd857bd31ba6f1736b1ac4c

Summary:

Add `-Wno-writable-strings` (clang's flavor of `-Wwrite-strings`) to the list of warnings ignored while compiling torch_python.

Avoid unnecessary copies in range loops

Fix a number of signed-unsigned comparisons

Found while building locally on M1

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62930

Reviewed By: albanD

Differential Revision: D30171981

Pulled By: malfet

fbshipit-source-id: 25bd43dab5675f927ca707e32737ed178b04651e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61895

* Add FLOP count for addmm, which should be `2*m*n*k`.

Share the same code path for `addmm` and `mm`.
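Below is a sketch of the shared formula. Let `mat1` have shape `(m, k)` and `mat2` have shape `(k, n)`. Each of the `m*n` outputs takes `k` multiply-accumulates, and counting one multiply and one add per multiply-accumulate gives `2*m*n*k`. The function names are illustrative, as is the convention of not counting `addmm`'s bias add. They follow the description above, not the actual profiler code.

```python
def matmul_flops(mat1_shape, mat2_shape):
    """FLOPs for mm/addmm: 2*m*n*k (one multiply and one add per MAC)."""
    m, k = mat1_shape
    k2, n = mat2_shape
    if k != k2:
        raise ValueError(f"inner dimensions differ: {k} vs {k2}")
    return 2 * m * n * k


def addmm_flops(bias_shape, mat1_shape, mat2_shape):
    # Same code path as mm; bias_shape is accepted but the bias add is not counted.
    return matmul_flops(mat1_shape, mat2_shape)


print(addmm_flops((2, 4), (2, 3), (3, 4)))  # 2*2*4*3 = 48
```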

Test Plan:

Imported from OSS

`python test/test_profiler.py`

Run a sample profile and check that FLOPS for `aten::addmm` is correct.

`[chowar@devbig053.frc2 ~/local/pytorch/build] ninja bin/test_jit`

`[chowar@devbig053.frc2 ~/local/pytorch/build] ./bin/test_jit --gtest_filter='ComputeFlopsTest*'`

Reviewed By: dskhudia

Differential Revision: D29785671

fbshipit-source-id: d1512036202d7234a981bda897af1f75808ccbfe

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63005

Realized I forgot to move the Runtime half of these functions into the struct.

Test Plan: ci

Reviewed By: pavithranrao

Differential Revision: D30205521

fbshipit-source-id: ccd87d7d78450dd0dd23ba493bbb9d87be4640a5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62796

Fixes #62380

* update test functions to use the wheel install folder {sitepackages}/torch instead of the build/ folder

* add symbolic links for the shared libraries used by the tests (this is a bit hacky; it should instead be fixed by setting the rpath before compiling, similar to https://github.com/pytorch/pytorch/blob/master/.jenkins/pytorch/test.sh#L204-L208).

### Test plan

Check that all CI workflows pass.

Test Plan: Imported from OSS

Reviewed By: driazati

Differential Revision: D30193142

Pulled By: tktrungna

fbshipit-source-id: 1247f9eda1c11c763c31c7383c77545b1ead1a60

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62985

Remove the process_group_agent and faulty_process_group_agent code now that PROCESS_GROUP backend has been deprecated for RPC (https://github.com/pytorch/pytorch/issues/55615). Discussed with xush6528 that it was okay to remove ProcessGroupAgentTest and ProcessGroupAgentBench which depended on process_group_agent.

Test Plan: CI tests

Reviewed By: pritamdamania87

Differential Revision: D30195576

fbshipit-source-id: 8b4381cffadb868b19d481198015d0a67b205811

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62521

This diff did the following few things to enable the tests:

1. Exposed IMethod as TORCH_API.

2. Linked torch_deploy to test_api if USE_DEPLOY == 1.

Test Plan:

./build/bin/test_api --gtest_filter=IMethodTest.*

Note that one needs to run `python torch/csrc/deploy/example/generate_examples.py` before the above command.

Reviewed By: ezyang

Differential Revision: D30055372

Pulled By: alanwaketan

fbshipit-source-id: 50eb3689cf84ed0f48be58cd109afcf61ecca508

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62336

This PR was generated by removing `const` for all types of nodes in NNC IR, and fixing compilation errors that were the result of this change.

This is the first step in making all NNC mutations in-place.

Test Plan: Imported from OSS

Reviewed By: iramazanli

Differential Revision: D30049829

Pulled By: navahgar

fbshipit-source-id: ed14e2d2ca0559ffc0b92ac371f405579c85dd63

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61477

It would be nice if the compatibility API were just plug-and-play, with no need to care about the internals of the API at all. That's what this diff aims to provide.

The general usage would be something like

< On the Client >

RuntimeCompatibilityInfo runtime_info = get_runtime_compatibility_info();

.

.

.

< On the Server >

ModelCompatibilityInfo model_info = get_model_compatibility_info(<model_path>);

bool compatible = is_compatible(runtime_info, model_info);

Currently RuntimeCompatibilityInfo and ModelCompatibilityInfo are exactly the same, but it seemed plausible to me that they may diverge as more information is added to the API (such as a minimum supported bytecode version exposed by the runtime).
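Below is a Python sketch of what such a plug-and-play check might look like. The fields (`bytecode_version`, `operators`) are illustrative assumptions, not the actual contents of the C++ structs. So is the rule: the model's bytecode must be no newer than the runtime's, and every operator the model uses must be available in the runtime.

```python
from dataclasses import dataclass, field
from typing import FrozenSet


@dataclass(frozen=True)
class RuntimeCompatibilityInfo:
    bytecode_version: int  # newest bytecode version the runtime can read
    operators: FrozenSet[str] = field(default_factory=frozenset)


@dataclass(frozen=True)
class ModelCompatibilityInfo:
    bytecode_version: int  # bytecode version of the model file
    operators: FrozenSet[str] = field(default_factory=frozenset)


def is_compatible(runtime: RuntimeCompatibilityInfo,
                  model: ModelCompatibilityInfo) -> bool:
    # The caller never needs to know which individual checks are performed.
    if model.bytecode_version > runtime.bytecode_version:
        return False
    return model.operators <= runtime.operators  # subset check


runtime_info = RuntimeCompatibilityInfo(6, frozenset({"aten::add", "aten::conv2d"}))
model_info = ModelCompatibilityInfo(5, frozenset({"aten::conv2d"}))
print(is_compatible(runtime_info, model_info))  # True
```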

Test Plan: unit test and ci

Reviewed By: dhruvbird, raziel

Differential Revision: D29624080

fbshipit-source-id: 43c1ce15531f6f1a92f357f9cde4e6634e561700

Summary:

Enable Gelu bf16/fp32 in the CPU path using the Mkldnn implementation. Users don't need to call to_mkldnn() explicitly. The new fp32 Gelu performs better than the original one.

Add Gelu backward for https://github.com/pytorch/pytorch/pull/53615.
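For reference, here is the exact (erf-based) GELU and its derivative, which the backward pass computes. GELU(x) = x * Phi(x) and GELU'(x) = Phi(x) + x * phi(x), where Phi and phi are the standard normal CDF and PDF. The scalar Python sketch below illustrates only the math and checks the derivative numerically. It says nothing about how the Mkldnn kernels are implemented.

```python
import math


def gelu(x):
    # Exact GELU: x * Phi(x), with Phi the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_backward(grad_out, x):
    # d/dx [x * Phi(x)] = Phi(x) + x * phi(x), with phi the standard normal PDF.
    cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return grad_out * (cdf + x * pdf)


# Central finite-difference check of the analytic gradient.
for x in (-2.0, -0.5, 0.0, 0.7, 3.0):
    h = 1e-6
    numeric = (gelu(x + h) - gelu(x - h)) / (2 * h)
    assert abs(numeric - gelu_backward(1.0, x)) < 1e-6
```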

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58525

Reviewed By: ejguan

Differential Revision: D29940369

Pulled By: ezyang

fbshipit-source-id: df9598262ec50e5d7f6e96490562aa1b116948bf

Summary:

Fixes https://github.com/pytorch/pytorch/issues/60747

Enhances the C++ versions of `Transformer`, `TransformerEncoderLayer`, and `TransformerDecoderLayer` to support callables as their activation functions. The old way of specifying the activation function still works as well.
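The "name or callable" pattern can be sketched as follows. This is only a Python analogue for illustration. `resolve_activation` and the name table are hypothetical, and the C++ options have their own types for this.

```python
import math
from typing import Callable, Union

Activation = Union[str, Callable[[float], float]]

# Hypothetical table of built-in activations addressable by name.
_BUILTIN = {
    "relu": lambda x: max(0.0, x),
    "gelu": lambda x: 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0))),
}


def resolve_activation(act: Activation) -> Callable[[float], float]:
    # New style: any callable is used as-is.
    if callable(act):
        return act
    # Old style: a name selecting one of the built-in activations.
    try:
        return _BUILTIN[act]
    except KeyError:
        raise ValueError(f"unknown activation: {act!r}") from None


print(resolve_activation("relu")(-1.0))          # 0.0
print(resolve_activation(lambda x: 2 * x)(3.0))  # 6.0
```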

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62342

Reviewed By: malfet

Differential Revision: D30022592

Pulled By: jbschlosser

fbshipit-source-id: d3c62410b84b1bd8c5ed3a1b3a3cce55608390c4

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62442

For PythonMethodWrapper::setArgumentNames, make sure to use the correct method specified by method_name_ rather than the parent model_ object. That object _is_ callable itself, but its call signature is not the one we need to extract.

For Python vs. Script, unify the behavior to omit the 'self' parameter, so we list only the names of the unbound arguments, which is what we need in practice.
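In Python, looking up a method by name on an object yields a bound method, and `inspect.signature` of a bound method already omits `self`. Below is a minimal sketch of that behavior. The `Model` class and the `argument_names` helper are illustrative, not the torch::deploy code.

```python
import inspect


class Model:
    def forward(self, x, y=1):
        return x + y

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)


def argument_names(obj, method_name):
    # Look up the specific method rather than using the object itself: the
    # object is callable too, but its __call__ signature is (*args, **kwargs).
    method = getattr(obj, method_name)
    return list(inspect.signature(method).parameters)


m = Model()
print(argument_names(m, "forward"))           # ['x', 'y']
print(list(inspect.signature(m).parameters))  # ['args', 'kwargs']
```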

Test Plan: Updated the unit test; it passes.

Reviewed By: alanwaketan

Differential Revision: D29965283

fbshipit-source-id: a4e6a1d0f393f2a41c3afac32285548832da3fb4

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62306

Test to see if caching of operators works as expected. When caching operators during model load we look up using the operator name. This test ensures that even if there are multiple operators with the same name (in the same model), the caching distinguishes between the ones that have a different number of arguments specified during the call in the serialized bytecode.

In this specific test, there's a model with 3 methods, 2 of which return a `float32` tensor and one of which returns an `int64` tensor. Please see the comments in the diff for details.
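The property under test can be illustrated with a cache keyed on both the operator name and the number of arguments specified at the call site. Two uses of the same operator with different numbers of specified arguments then get separate entries. `OperatorCache` and the resolver are hypothetical stand-ins for the lite interpreter's loading code.

```python
class OperatorCache:
    def __init__(self, resolve):
        # resolve(name, num_specified_args) builds the callable for an op.
        self._resolve = resolve
        self._cache = {}

    def get(self, name, num_specified_args):
        # Keying on the name alone would return the first variant for every call.
        key = (name, num_specified_args)
        if key not in self._cache:
            self._cache[key] = self._resolve(name, num_specified_args)
        return self._cache[key]


calls = []


def resolve(name, n):
    calls.append((name, n))
    return f"{name}/{n}"


cache = OperatorCache(resolve)
a = cache.get("aten::ones", 1)
b = cache.get("aten::ones", 2)
c = cache.get("aten::ones", 1)
print(a, b, calls)  # aten::ones/1 aten::ones/2 [('aten::ones', 1), ('aten::ones', 2)]
```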

ghstack-source-id: 134634613

Test Plan:

Test command:

```
cd fbsource/fbcode/
buck test mode/dev //caffe2/test/cpp/jit:jit -- --exact 'caffe2/test/cpp/jit:jit - LiteInterpreterTest.OperatorCacheDifferentiatesDefaultArgs'
```

```
cd fbsource/
buck test xplat/caffe2:test_lite_interpreter
```

Reviewed By: raziel

Differential Revision: D29929116

fbshipit-source-id: 1d42bd3e6d33128631e970c477344564b0337325

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62305

Currently, it's super time consuming to run a lite interpreter test from fbcode since it takes > 10 minutes to build. Recently, I haven't been able to do that either due to low disk space.

Having this test available in fbsource/xplat/ is a great win for productivity since I can re-run it in ~2 minutes even after significant changes!

I've had to disarm some tests that can only run in OSS or fbcode builds (since they need functionality that we don't include in on-device FB builds). They are disarmed using the macro `FB_XPLAT_BUILD`.

ghstack-source-id: 134634611

Test Plan: New test!

Reviewed By: raziel, JacobSzwejbka, cccclai

Differential Revision: D29954943

fbshipit-source-id: e55eab14309472ef6bc9b0afe0af126c561dbdb1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61983

Trial #2. The previous PR (https://github.com/pytorch/pytorch/pull/61498) was reverted because this caused a failure in `pytorch_linux_backward_compatibility_check_test`. Fixed that now by adding to the exception list in `check_backward_compatibility.py`.

Test Plan: Imported from OSS

Reviewed By: eellison

Differential Revision: D29828830

Pulled By: navahgar

fbshipit-source-id: 947a7b1622ff6e3e575c051b8f34a789e105bcee

Summary:

Background:

The gloo communication implementation is as follows:

1. Construct communication workers and push them into a queue.

2. Initialize a thread pool in which each thread runs a loop that takes a worker from the queue and executes it.

Issue:

The recorded profiling time span starts at worker construction and ends when the work finishes. It therefore includes the time the worker spends waiting in the queue. As a result, multiple gloo communication time spans overlap each other on the same thread in the timeline.

This is because while the next work is waiting in the queue, the previous work has not yet finished.

Solution:

This PR delays the profiling start time of gloo communication from worker construction to when the worker is actually executed. The profiling span therefore no longer includes the time spent waiting in the queue. The implementation is as follows:

1. First, disable the original record function by passing `nullptr` as the `profilingTitle` argument of ProcessGroup::Work.

2. Construct `recordFunctionBeforeCallback_` and `recordFunctionEndCallback_` and save them as members of the worker.

3. When the worker is executed, invoke `recordFunctionBeforeCallback_`.

4. `recordFunctionEndCallback_` is invoked at finish, as before.

After this modification, the gloo profiling spans in the timeline no longer overlap with each other.
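The difference between the two timings can be simulated in a few lines. This is a Python model with a fake clock, not the gloo code. Each work item waits in a queue and is then run by a single worker thread. Recording the start in `__init__` produces overlapping spans. Recording it when `run()` begins does not.

```python
class FakeClock:
    def __init__(self):
        self.now = 0

    def advance(self, dt):
        self.now += dt


class Work:
    def __init__(self, clock, duration, start_at_construction):
        self.clock = clock
        self.duration = duration
        self.start_at_construction = start_at_construction
        # Old behavior: the span starts when the work object is created.
        self.start = clock.now if start_at_construction else None
        self.end = None

    def run(self):
        if not self.start_at_construction:
            # New behavior: the "before" callback fires only when execution begins.
            self.start = self.clock.now
        self.clock.advance(self.duration)
        self.end = self.clock.now  # "end" callback at finish, as before


def simulate(start_at_construction):
    clock = FakeClock()
    # Three works are queued at t=0, then executed one after another by one thread.
    queue = [Work(clock, 10, start_at_construction) for _ in range(3)]
    for work in queue:
        work.run()
    return [(w.start, w.end) for w in queue]


def overlaps(spans):
    return any(a_end > b_start for (_, a_end), (b_start, _) in zip(spans, spans[1:]))


print(simulate(True))   # [(0, 10), (0, 20), (0, 30)]  -> overlapping
print(simulate(False))  # [(0, 10), (10, 20), (20, 30)] -> disjoint
```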

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61342

Reviewed By: albanD

Differential Revision: D29811656

Pulled By: gdankel

fbshipit-source-id: ff07e8906d90f21a072049998400b4a48791e441

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61933

### Issue:

Submodules with the same name are not serialized correctly in bytecode format when using `_save_for_mobile`. If they have the same name, these submodules are not distinguished as different modules, even though they have different forward, setstate, etc.

### Fix:

The mangler creates unique names so that modules and submodules with the same name can be uniquely identified while saving the module. iseeyuan rightly pointed out the underlying issue. The mangler is not used when saving bytecode, so unique references for the submodules are not created. Please refer to the notebook to repro the issue: N777224

### Diff:

The fix described above is implemented: the mangled names are used in bytecode, so the files in the `code/` directory now have the correct references to `bytecode.pkl`.
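The idea behind the fix can be sketched with a toy mangler. The first type registered under a qualified name keeps that name. Later, different types with the same name get a unique suffix, so references in the serialized code resolve to distinct entries. The suffix format here is invented for illustration and does not reproduce TorchScript's actual mangling scheme.

```python
class ToyMangler:
    def __init__(self):
        self._names = {}   # type object -> unique name
        self._counts = {}  # base name -> number of distinct types seen

    def unique_name(self, qualified_name, type_obj):
        # The same type always maps to the same name.
        if type_obj in self._names:
            return self._names[type_obj]
        n = self._counts.get(qualified_name, 0)
        self._counts[qualified_name] = n + 1
        name = qualified_name if n == 0 else f"{qualified_name}__mangle_{n}"
        self._names[type_obj] = name
        return name


# Two different submodule classes that happen to share the name "Sub".
SubA = type("Sub", (), {"forward": lambda self: "A"})
SubB = type("Sub", (), {"forward": lambda self: "B"})

m = ToyMangler()
print(m.unique_name("__torch__.Sub", SubA))  # __torch__.Sub
print(m.unique_name("__torch__.Sub", SubB))  # __torch__.Sub__mangle_1
print(m.unique_name("__torch__.Sub", SubA))  # __torch__.Sub
```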

Will this have backward compatibility?

iseeyuan please feel free to correct or update this.

Yes. This fix only affects modules with same-name submodules, which were not serialized correctly before. Existing modules should have correct references, and `_load_for_mobile` should not see any change. To confirm this, the existing test cases need to pass for the diff to be approved and shipped.

ghstack-source-id: 134242696

Test Plan:

```
~/fbsource/fbcode > buck test caffe2/test/cpp/jit:jit -- BackendTest.TestCompositeWithSetStates
Downloaded 0/5 artifacts, 0.00 bytes, 100.0% cache miss (for updated rules)
Building: finished in 19.2 sec (100%) 17619/17619 jobs, 3/17619 updated
Total time: 19.5 sec
More details at https://www.internalfb.com/intern/buck/build/91542d50-25f2-434d-9e1a-b93117f4efe1
Tpx test run coordinator for Facebook. See https://fburl.com/tpx for details.
Running with tpx session id: de9e27cf-4c6c-4980-8bc5-b830b7c9c534
Trace available for this run at /tmp/tpx-20210719-161607.659665/trace.log
Started reporting to test run: https://www.internalfb.com/intern/testinfra/testrun/844425127206388
✓ ListingSuccess: caffe2/test/cpp/jit:jit - main (8.140)
✓ Pass: caffe2/test/cpp/jit:jit - BackendTest.TestCompositeWithSetStates (0.528)
Summary
Pass: 1
ListingSuccess: 1
If you need help understanding your runs, please follow the wiki: https://fburl.com/posting_in_tpx_users
Finished test run: https://www.internalfb.com/intern/testinfra/testrun/844425127206388
```

```
~/fbsource/fbcode > buck test caffe2/test/cpp/jit:jit -- BackendTest.TestConsistencyOfCompositeWithSetStates
Building: finished in 4.7 sec (100%) 6787/6787 jobs, 0/6787 updated
Total time: 5.0 sec
More details at https://www.internalfb.com/intern/buck/build/63d6d871-1dd9-4c72-a63b-ed91900c4dc9
Tpx test run coordinator for Facebook. See https://fburl.com/tpx for details.
Running with tpx session id: 81023cd2-c1a2-498b-81b8-86383d73d23b
Trace available for this run at /tmp/tpx-20210722-160818.436635/trace.log
Started reporting to test run: https://www.internalfb.com/intern/testinfra/testrun/8725724325952153
✓ ListingSuccess: caffe2/test/cpp/jit:jit - main (7.867)
✓ Pass: caffe2/test/cpp/jit:jit - BackendTest.TestConsistencyOfCompositeWithSetStates (0.607)
Summary
Pass: 1
ListingSuccess: 1
If you need help understanding your runs, please follow the wiki: https://fburl.com/posting_in_tpx_users
Finished test run: https://www.internalfb.com/intern/testinfra/testrun/8725724325952153
```

To inspect `bytecode.pkl` using the module inspector, see:

N1007089

Reviewed By: iseeyuan

Differential Revision: D29669831

fbshipit-source-id: 504dfcb5f7446be5e1c9bd31f0bd9c986ce1a647

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61791

methods from forward

During inlining, we attach an InlinedCallStack to the nodes being inlined. In the process, we attach module information as well, so that when a CallMethod is inlined we know which class instance and class type the method belongs to. However, a CallMethod can call a method of the same object to which the graph belongs, e.g.:

```python
def forward(self, input):
    x = input + 10
    return self.forward_impl_(x, input)
```

Here `forward_impl_` is a method defined on the same class in which `forward` is defined. The existing module hierarchy annotation will mislabel this as an unknown instance, since the method is not associated with the output of a GetAttr node (it would be if we had called `self.conv.forward_impl_`, for example).

This PR reconciles this by creating a placeholder name "SELF" for the module instance. The placeholder indicates that you can traverse the InlinedCallStack backwards to find the first node with name != SELF, which is the name of the object.

e.g.:

TOP(ResNet)::forward.SELF(ResNet)::_forward_impl.layer1(Sequential)::forward.0(BasicBlock)::forward.conv1(Conv2d)::forward.SELF(Conv2d)::_conv_forward
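The backward traversal described above can be sketched over an already-parsed list of `(instance, type, method)` entries. Every `SELF` is replaced by the nearest preceding instance name that is not `SELF`. The list below is the example hierarchy split by hand, and the function name is illustrative.

```python
def resolve_self(entries):
    """Replace each SELF instance name with the closest earlier non-SELF name."""
    resolved = []
    last_named = None
    for instance, type_name, method in entries:
        if instance == "SELF":
            # Method called on the same object as the enclosing frame.
            instance = last_named
        else:
            last_named = instance
        resolved.append((instance, type_name, method))
    return resolved


entries = [
    ("TOP", "ResNet", "forward"),
    ("SELF", "ResNet", "_forward_impl"),
    ("layer1", "Sequential", "forward"),
    ("0", "BasicBlock", "forward"),
    ("conv1", "Conv2d", "forward"),
    ("SELF", "Conv2d", "_conv_forward"),
]
print([inst for inst, _, _ in resolve_self(entries)])
# ['TOP', 'TOP', 'layer1', '0', 'conv1', 'conv1']
```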

Test Plan:

Add test

Imported from OSS

Reviewed By: larryliu0820

Differential Revision: D29745443

fbshipit-source-id: 1525e41df53913341c4c36a56772454782a0ba93

Summary:

The GoogleTest `TEST` macro is non-compliant with `cppcoreguidelines-avoid-non-const-global-variables`, as is `DEFINE_DISPATCH`.

All changes except the ones to `.clang-tidy` were generated using the following script:

```

for i in `find . -type f -iname "*.c*" -or -iname "*.h"|xargs grep cppcoreguidelines-avoid-non-const-global-variables|cut -f1 -d:|sort|uniq`; do sed -i "/\/\/ NOLINTNEXTLINE(cppcoreguidelines-avoid-non-const-global-variables)/d" $i; done

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62008

Reviewed By: driazati, r-barnes

Differential Revision: D29838584

Pulled By: malfet

fbshipit-source-id: 1b2f8602c945bd4ce50a9bfdd204755556e31d13

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, only with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels: default, AVX2, and AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2 instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using the AVX512 kernel right now, as it has poorer accuracy for Float16 than AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped:

[`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098) fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. `reduce_range` should be set to `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

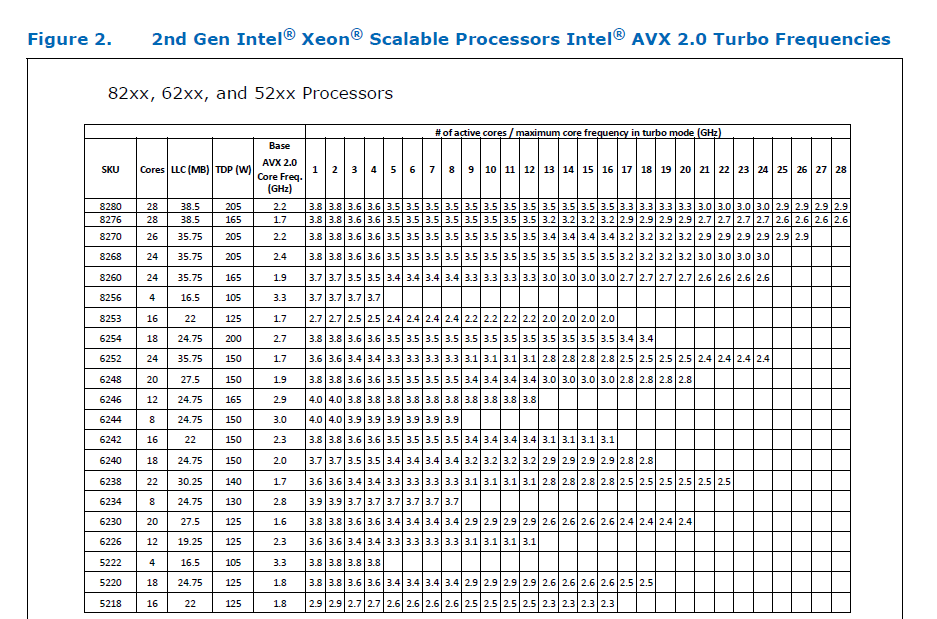

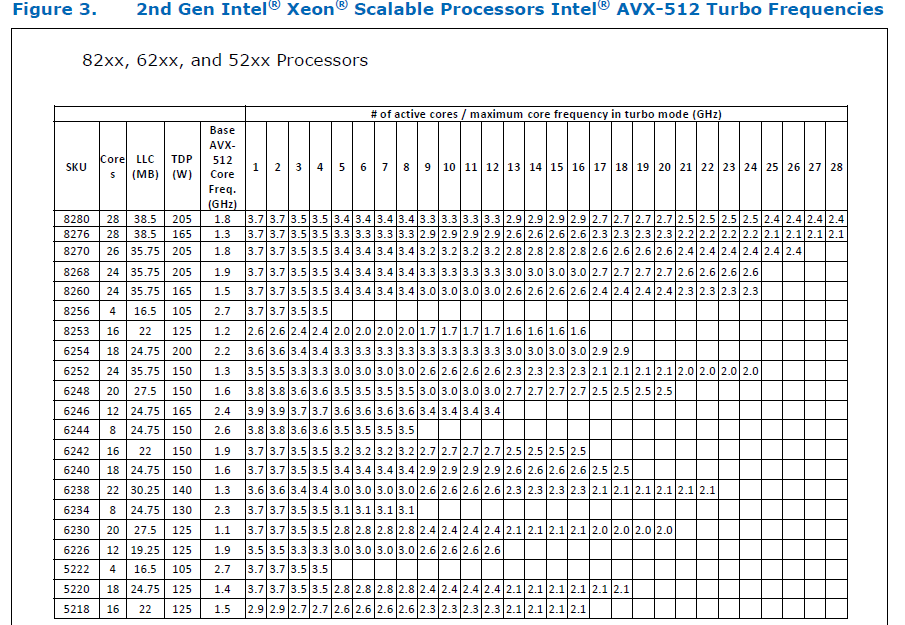

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the doubled vector size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). If it does, `ATEN_AVX512_256=TRUE` can be used when building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches, or in kernels that are more compute-heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors, can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61594

### Summary:

Added a unit test for the Nnapi delegate's preprocess() function. The function was previously tested locally, but now a basic test is added for OSS.

See https://github.com/pytorch/pytorch/pull/61499 for the preprocess implementation. See D29647123 for local testing.

**TODO:**

Add more comprehensive tests.

Add tests for model execution after the Nnapi delegate's initialization and execution are implemented (T91991928).

**CMakeLists.txt:**

Added a library for the Nnapi delegate

- Explicit linking of torch_python is necessary for the Nnapi delegate's use of pybind

**test_backends.py:**

Added a test for lowering to Nnapi

- Based on https://github.com/pytorch/pytorch/blob/master/test/test_nnapi.py

- Only differences are the loading of the nnapi backend library and the need to change dtype from float64 to float32

### Test Plan:

Running `python test/test_jit.py TestBackendsWithCompiler -v` succeeds. Also saved and examined the model file locally.

Test Plan: Imported from OSS

Reviewed By: iseeyuan

Differential Revision: D29687143

fbshipit-source-id: 9ba9e57f7f856e5ac15e13527f6178d613b32802

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61725

Alloc/free inside a loop isn't really an optimization, and furthermore it breaks an attempted optimization in the LLVM backend. We use alloca for small allocations, which is efficient since alloca is on the stack, but there's no corresponding free, so we leak tons of stack. I hit this while building an rfactor buffer inside a very deeply nested loop.
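A toy model of a stack frame shows why an `alloca` inside a loop leaks. Space from `alloca` is released only when the function returns. A per-iteration `alloca` therefore grows the frame by one buffer per iteration. A buffer allocated once outside the loop occupies a fixed amount. This is a Python simulation with made-up sizes, not LLVM code.

```python
class Frame:
    """Toy stack frame: alloca bumps the frame size; nothing is freed until return."""

    def __init__(self):
        self.bytes_used = 0

    def alloca(self, nbytes):
        self.bytes_used += nbytes


def alloc_inside_loop(iterations, buf_bytes):
    frame = Frame()
    for _ in range(iterations):
        # Each iteration allocates a fresh buffer; the matching "free" at the
        # end of the loop body does not release alloca'd stack space.
        frame.alloca(buf_bytes)
    return frame.bytes_used


def alloc_hoisted(iterations, buf_bytes):
    frame = Frame()
    # One buffer allocated before the loop and reused by all `iterations`.
    frame.alloca(buf_bytes)
    return frame.bytes_used


# A 3-deep loop nest of 50 iterations each, with a 64-byte temp buffer.
print(alloc_inside_loop(50 ** 3, 64))  # 8000000
print(alloc_hoisted(50 ** 3, 64))      # 64
```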

ghstack-source-id: 133627310

Test Plan:

Unit test that simulates the use of a temp buffer in a deeply nested loop.

Reviewed By: navahgar

Differential Revision: D29533364

fbshipit-source-id: c321f4cb05304cfb9146afe32edc4567b623412e