mirror of

https://github.com/zebrajr/pytorch.git

synced 2025-12-06 12:20:52 +01:00

5bcbbf5373

187 Commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

dfc7fa03e5 |

lu_backward: more numerically stable and with complex support. (#53994)

Summary: As per title. Numerical stability increased by replacing inverses with solutions to systems of linear triangular equations.

Unblocks computing `torch.det` for FULL-rank inputs of complex dtypes via the LU decomposition once https://github.com/pytorch/pytorch/pull/48125/files is merged:

```
LU, pivots = input.lu()
P, L, U = torch.lu_unpack(LU, pivots)
det_input = P.det() * torch.prod(U.diagonal(0, -1, -2), dim=-1)  # P is not differentiable, so we are fine even if it is complex.
```

Unfortunately, since `lu_backward` is implemented as `autograd.Function`, we cannot support both autograd and scripting at the moment. The solution would be to move all the lu-related methods to ATen, see https://github.com/pytorch/pytorch/issues/53364.

Resolves https://github.com/pytorch/pytorch/issues/52891

TODOs:

* extend lu_backward for tall/wide matrices of full rank.
* move lu-related functionality to ATen and make it differentiable.
* handle rank-deficient inputs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53994 Reviewed By: pbelevich Differential Revision: D27188529 Pulled By: anjali411 fbshipit-source-id: 8e053b240413dbf074904dce01cd564583d1f064 |
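The determinant identity used in the snippet above (determinant of the permutation times the product of the diagonal of U) can be checked without PyTorch. The following is a minimal pure-Python sketch, assuming a small square matrix given as nested lists; the helper name `det_via_lu` is hypothetical, and this is an illustration of the identity, not how `torch.lu` or `lu_backward` is implemented.

```python
def det_via_lu(a):
    """Determinant of a square matrix via LU with partial pivoting.

    Hypothetical helper for illustration only; not PyTorch's implementation.
    """
    n = len(a)
    # Work on a copy; converting to complex shows that complex entries are fine.
    u = [[complex(x) for x in row] for row in a]
    sign = 1  # determinant of the permutation matrix: +1 or -1
    for k in range(n):
        # Partial pivoting: pick the row with the largest magnitude in column k.
        p = max(range(k, n), key=lambda i: abs(u[i][k]))
        if u[p][k] == 0:
            return 0j  # rank-deficient input: the determinant is zero
        if p != k:
            u[k], u[p] = u[p], u[k]
            sign = -sign  # each row swap flips the sign of det(P)
        # Eliminate the entries below the pivot.
        for i in range(k + 1, n):
            factor = u[i][k] / u[k][k]
            for j in range(k, n):
                u[i][j] -= factor * u[k][j]
    det = complex(sign)
    for k in range(n):
        det *= u[k][k]  # product of the diagonal of U
    return det
```

Because only the sign of the permutation and the diagonal of U enter the result, the same formula applies unchanged to complex inputs.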

||

|

|

f48a9712b7 |

Rewrite functional.tensordot to be TorchScript-able (#53672)

Summary: Fixes https://github.com/pytorch/pytorch/issues/53487 Pull Request resolved: https://github.com/pytorch/pytorch/pull/53672 Reviewed By: VitalyFedyunin Differential Revision: D26934392 Pulled By: gmagogsfm fbshipit-source-id: f842af340e4be723bf90b903793b0221af158ca7 |

||

|

|

b0afe945a7 |

Fix pylint error torch.tensor is not callable (#53424)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/53424

Fixes https://github.com/pytorch/pytorch/issues/24807 and supersedes the stale https://github.com/pytorch/pytorch/issues/25093 (Cc Microsheep). If you now run the reproduction

```python
import torch

if __name__ == "__main__":
    t = torch.tensor([1, 2, 3], dtype=torch.float64)
```

with `pylint==2.6.0`, you get the following output

```
test_pylint.py:1:0: C0114: Missing module docstring (missing-module-docstring)
test_pylint.py:4:8: E1101: Module 'torch' has no 'tensor' member; maybe 'Tensor'? (no-member)
test_pylint.py:4:38: E1101: Module 'torch' has no 'float64' member (no-member)
```

Now `pylint` doesn't recognize `torch.tensor` at all, but it is promoted in the stub. Given that it also doesn't recognize `torch.float64`, I think fixing this is out of scope of this PR.

---

## TL;DR

This is BC-breaking only for users that rely on unintended behavior. Since `torch/__init__.py` loaded `torch/tensor.py` it was populated in `sys.modules`. `torch/__init__.py` then overwrote `torch.tensor` with the actual function. With this `import torch.tensor as tensor` does not fail, but returns the function rather than the module. Users that rely on this import need to change it to `from torch import tensor`.

Reviewed By: zou3519 Differential Revision: D26223815 Pulled By: bdhirsh fbshipit-source-id: 125b9ff3d276e84a645cd7521e8d6160b1ca1c21 |
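The shadowing described in the TL;DR can be reproduced without PyTorch. The sketch below assumes a throwaway package named `demo_pkg` (a hypothetical name, built in memory with `types.ModuleType`) whose submodule `demo_pkg.tensor` is registered in `sys.modules` and then overwritten on the package by a function of the same name, mimicking what the message says `torch/__init__.py` did.

```python
import sys
import types

# Build a throwaway package "demo_pkg" with a submodule "demo_pkg.tensor".
# Both names are hypothetical stand-ins for "torch" and "torch.tensor".
pkg = types.ModuleType("demo_pkg")
pkg.__path__ = []  # mark the module object as a package
submodule = types.ModuleType("demo_pkg.tensor")
sys.modules["demo_pkg"] = pkg
sys.modules["demo_pkg.tensor"] = submodule


def tensor(data):
    """Stand-in for the real factory function."""
    return list(data)


# The package overwrites the attribute that would normally hold the submodule.
pkg.tensor = tensor

# The import succeeds because "demo_pkg.tensor" is present in sys.modules,
# but the bound name is looked up as an attribute of the package.
import demo_pkg.tensor as imported

print(imported is tensor)     # bound to the function ...
print(imported is submodule)  # ... rather than to the module
```

The import statement itself never fails, which is why code relying on it kept working by accident.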

||

|

|

3403babd94 |

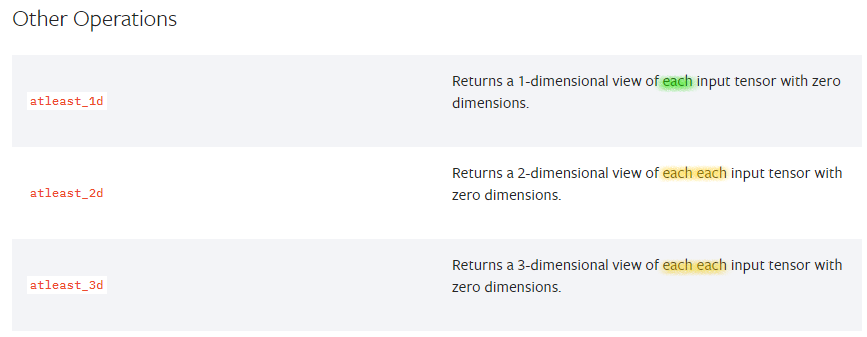

[doc] Fix documentations of torch functions (#52982)

Summary: This PR includes multiple small fixes of docstrings.

* Fix documentation for [`torch.atleast_2d`](https://pytorch.org/docs/master/generated/torch.atleast_2d.html) and [`torch.atleast_3d`](https://pytorch.org/docs/master/generated/torch.atleast_3d.html) by adding a new line before `Args::`.
* Fix indentation for [`torch.isfinite`](https://pytorch.org/docs/master/generated/torch.isfinite.html) and [`torch.isinf`](https://pytorch.org/docs/master/generated/torch.isinf.html). The "Arguments", "Parameters" and "Examples" sections need to be at the same level as the first description.
* Insert a new line after `Example::` where it is missing. This makes a difference in how the documentation is rendered: see [this](https://pytorch.org/docs/master/generated/torch.gt.html) (with a new line) and [this](https://pytorch.org/docs/master/generated/torch.triu_indices.html) (without). As the majority of the docs seem to follow the former style, this PR amends the latter cases.
* Fix the "Returns" section of [`torch.block_diag`](https://pytorch.org/docs/master/generated/torch.block_diag.html) and [`torch.cartesian_prod`](https://pytorch.org/docs/master/generated/torch.cartesian_prod.html). The second and subsequent lines shouldn't be indented, as can be seen in the docstring of [`torch.vander`](https://pytorch.org/docs/master/generated/torch.vander.html).
* Fix variable names in the example of `torch.fft.(i)fftn`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52982 Reviewed By: mruberry Differential Revision: D26724408 Pulled By: H-Huang fbshipit-source-id: c65aa0621f7858b05fd16f497caacf6ea8eb33c9 |

||

|

|

47557b95ef |

Removed typographical error from tech docs (#51286)

Summary: Duplications removed from tech docs. Pull Request resolved: https://github.com/pytorch/pytorch/pull/51286 Reviewed By: albanD Differential Revision: D26227627 Pulled By: ailzhang fbshipit-source-id: efa0cd90face458673b8530388378d5a7eb0f1cf |

||

|

|

c147aa306c |

Use doctest directly to get docstring examples (#50596)

Summary: This PR addresses [a two-year-old TODO in `test/test_type_hints.py`]( |

||

|

|

6a3fc0c21c |

Treat has_torch_function and object_has_torch_function as static False when scripting (#48966)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/48966

This PR lets us skip the `if not torch.jit.is_scripting():` guards on `functional` and `nn.functional` by directly registering `has_torch_function` and `object_has_torch_function` to the JIT as statically False.

**Benchmarks**

The benchmark script is kind of long. The reason is that it's testing all four PRs in the stack, plus threading and subprocessing so that the benchmark can utilize multiple cores while still collecting good numbers. Both wall times and instruction counts were collected. This stack changes dozens of operators / functions, but very mechanically such that there are only a handful of codepath changes. Each row is a slightly different code path (e.g. testing in Python, testing in the arg parser, different input types, etc.)

<details>

<summary> Test script </summary>

```
import argparse
import multiprocessing
import multiprocessing.dummy
import os
import pickle
import queue
import random
import sys
import subprocess
import tempfile
import time

import torch
from torch.utils.benchmark import Timer, Compare, Measurement


NUM_CORES = multiprocessing.cpu_count()
ENVS = {
    "ref": "HEAD (current)",
    "torch_fn_overhead_stack_0": "#48963",
    "torch_fn_overhead_stack_1": "#48964",
    "torch_fn_overhead_stack_2": "#48965",
    "torch_fn_overhead_stack_3": "#48966",
}

CALLGRIND_ENVS = tuple(ENVS.keys())


MIN_RUN_TIME = 3
REPLICATES = {
    "longer": 1_000,
    "long": 300,
    "short": 50,
}

CALLGRIND_NUMBER = {
    "overnight": 500_000,
    "long": 250_000,
    "short": 10_000,
}

CALLGRIND_TIMEOUT = {
    "overnight": 800,
    "long": 400,
    "short": 100,
}

SETUP = """
x = torch.ones((1, 1))
y = torch.ones((1, 1))
w_tensor = torch.ones((1, 1), requires_grad=True)
linear = torch.nn.Linear(1, 1, bias=False)
linear_w = linear.weight
"""

TASKS = {
    "C++: unary `.t()`": "w_tensor.t()",
    "C++: unary (Parameter) `.t()`": "linear_w.t()",
    "C++: binary (Parameter) `mul` ": "x + linear_w",
    "tensor.py: _wrap_type_error_to_not_implemented `__floordiv__`": "x // y",
    "tensor.py: method `__hash__`": "hash(x)",
    "Python scalar `__rsub__`": "1 - x",
    "functional.py: (unary) `unique`": "torch.functional.unique(x)",
    "functional.py: (args) `atleast_1d`": "torch.functional.atleast_1d((x, y))",
    "nn/functional.py: (unary) `relu`": "torch.nn.functional.relu(x)",
    "nn/functional.py: (args) `linear`": "torch.nn.functional.linear(x, w_tensor)",
    "nn/functional.py: (args) `linear (Parameter)`": "torch.nn.functional.linear(x, linear_w)",
    "Linear(..., bias=False)": "linear(x)",
}


def _worker_main(argv, fn):
    parser = argparse.ArgumentParser()
    parser.add_argument("--output_file", type=str)
    parser.add_argument("--single_task", type=int, default=None)
    parser.add_argument("--length", type=str)
    args = parser.parse_args(argv)
    single_task = args.single_task

    conda_prefix = os.getenv("CONDA_PREFIX")
    assert torch.__file__.startswith(conda_prefix)

    env = os.path.split(conda_prefix)[1]
    assert env in ENVS

    results = []
    for i, (k, stmt) in enumerate(TASKS.items()):
        if single_task is not None and single_task != i:
            continue

        timer = Timer(
            stmt=stmt,
            setup=SETUP,
            sub_label=k,
            description=ENVS[env],
        )
        results.append(fn(timer, args.length))

    with open(args.output_file, "wb") as f:
        pickle.dump(results, f)


def worker_main(argv):
    _worker_main(
        argv,
        lambda timer, _: timer.blocked_autorange(min_run_time=MIN_RUN_TIME)
    )


def callgrind_worker_main(argv):
    _worker_main(
        argv,
        lambda timer, length: timer.collect_callgrind(number=CALLGRIND_NUMBER[length], collect_baseline=False))


def main(argv):
    parser = argparse.ArgumentParser()
    parser.add_argument("--long", action="store_true")
    parser.add_argument("--longer", action="store_true")
    args = parser.parse_args(argv)

    if args.longer:
        length = "longer"
    elif args.long:
        length = "long"
    else:
        length = "short"

    replicates = REPLICATES[length]
    num_workers = int(NUM_CORES // 2)
    tasks = list(ENVS.keys()) * replicates
    random.shuffle(tasks)
    task_queue = queue.Queue()
    for _ in range(replicates):
        envs = list(ENVS.keys())
        random.shuffle(envs)
        for e in envs:
            task_queue.put((e, None))

    callgrind_task_queue = queue.Queue()
    for e in CALLGRIND_ENVS:
        for i, _ in enumerate(TASKS):
            callgrind_task_queue.put((e, i))

    results = []
    callgrind_results = []

    def map_fn(worker_id):
        # Adjacent cores often share cache and maxing out a machine can distort
        # timings so we space them out.
        callgrind_cores = f"{worker_id * 2}-{worker_id * 2 + 1}"
        time_cores = str(worker_id * 2)
        _, output_file = tempfile.mkstemp(suffix=".pkl")
        try:
            loop_tasks = (
                # Callgrind is long running, and then the workers can help with
                # timing after they finish collecting counts.
                (callgrind_task_queue, callgrind_results, "callgrind_worker", callgrind_cores, CALLGRIND_TIMEOUT[length]),
                (task_queue, results, "worker", time_cores, None))

            for queue_i, results_i, mode_i, cores, timeout in loop_tasks:
                while True:
                    try:
                        env, task_i = queue_i.get_nowait()
                    except queue.Empty:
                        break

                    remaining_attempts = 3
                    while True:
                        try:
                            subprocess.run(
                                " ".join([
                                    "source", "activate", env, "&&",
                                    "taskset", "--cpu-list", cores,
                                    "python", os.path.abspath(__file__),
                                    "--mode", mode_i,
                                    "--length", length,
                                    "--output_file", output_file
                                ] + ([] if task_i is None else ["--single_task", str(task_i)])),
                                shell=True,
                                check=True,
                                timeout=timeout,
                            )
                            break

                        except subprocess.TimeoutExpired:
                            # Sometimes Valgrind will hang if there are too many
                            # concurrent runs.
                            remaining_attempts -= 1
                            if not remaining_attempts:
                                print("Too many failed attempts.")
                                raise
                            print(f"Timeout after {timeout} sec. Retrying.")

                    # We don't need a lock, as the GIL is enough.
                    with open(output_file, "rb") as f:
                        results_i.extend(pickle.load(f))

        finally:
            os.remove(output_file)

    with multiprocessing.dummy.Pool(num_workers) as pool:
        st, st_estimate, eta, n_total = time.time(), None, "", len(tasks) * len(TASKS)
        map_job = pool.map_async(map_fn, range(num_workers))
        while not map_job.ready():
            n_complete = len(results)
            if n_complete and len(callgrind_results):
                if st_estimate is None:
                    st_estimate = time.time()
                else:
                    sec_per_element = (time.time() - st_estimate) / n_complete
                    n_remaining = n_total - n_complete
                    eta = f"ETA: {n_remaining * sec_per_element:.0f} sec"

            print(
                f"\r{n_complete} / {n_total} "
                f"({len(callgrind_results)} / {len(CALLGRIND_ENVS) * len(TASKS)}) "
                f"{eta}".ljust(40), end="")
            sys.stdout.flush()
            time.sleep(2)
    total_time = int(time.time() - st)
    print(f"\nTotal time: {int(total_time // 60)} min, {total_time % 60} sec")

    desc_to_ind = {k: i for i, k in enumerate(ENVS.values())}
    results.sort(key=lambda r: desc_to_ind[r.description])

    # TODO: Compare should be richer and more modular.
    compare = Compare(results)
    compare.trim_significant_figures()
    compare.colorize(rowwise=True)

    # Manually add master vs. overall relative delta t.
    merged_results = {
        (r.description, r.sub_label): r
        for r in Measurement.merge(results)
    }

    cmp_lines = str(compare).splitlines(False)
    print(cmp_lines[0][:-1] + "-" * 15 + "]")
    print(f"{cmp_lines[1]} |{'':>10}\u0394t")
    print(cmp_lines[2] + "-" * 15)
    for l, t in zip(cmp_lines[3:3 + len(TASKS)], TASKS.keys()):
        assert l.strip().startswith(t)
        t0 = merged_results[(ENVS["ref"], t)].median
        t1 = merged_results[(ENVS["torch_fn_overhead_stack_3"], t)].median
        print(f"{l} |{'':>5}{(t1 / t0 - 1) * 100:>6.1f}%")
    print("\n".join(cmp_lines[3 + len(TASKS):]))

    counts_dict = {
        (r.task_spec.description, r.task_spec.sub_label): r.counts(denoise=True)
        for r in callgrind_results
    }

    def rel_diff(x, x0):
        return f"{(x / x0 - 1) * 100:>6.1f}%"

    task_pad = max(len(t) for t in TASKS)
    print(f"\n\nInstruction % change (relative to `{CALLGRIND_ENVS[0]}`)")
    print(" " * (task_pad + 8) + (" " * 7).join([ENVS[env] for env in CALLGRIND_ENVS[1:]]))
    for t in TASKS:
        values = [counts_dict[(ENVS[env], t)] for env in CALLGRIND_ENVS]

        print(t.ljust(task_pad + 3) + " ".join([
            rel_diff(v, values[0]).rjust(len(ENVS[env]) + 5)
            for v, env in zip(values[1:], CALLGRIND_ENVS[1:])]))

        print("\033[4m" + " Instructions per invocation".ljust(task_pad + 3) + " ".join([
            f"{v // CALLGRIND_NUMBER[length]:.0f}".rjust(len(ENVS[env]) + 5)
            for v, env in zip(values[1:], CALLGRIND_ENVS[1:])]) + "\033[0m")
        print()

    import pdb
    pdb.set_trace()


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--mode", type=str, choices=("main", "worker", "callgrind_worker"), default="main")
    args, remaining = parser.parse_known_args()

    if args.mode == "main":
        main(remaining)

    elif args.mode == "callgrind_worker":
        callgrind_worker_main(remaining)

    else:
        worker_main(remaining)
```

</details>

**Wall time**

<img width="1178" alt="Screen Shot 2020-12-12 at 12 28 13 PM" src="https://user-images.githubusercontent.com/13089297/101994419-284f6a00-3c77-11eb-8dc8-4f69a890302e.png">

<details>

<summary> Longer run (`python test.py --long`) is basically identical. </summary>

<img width="1184" alt="Screen Shot 2020-12-12 at 5 02 47 PM" src="https://user-images.githubusercontent.com/13089297/102000425-2350e180-3c9c-11eb-999e-a95b37e9ef54.png">

</details>

**Callgrind**

<img width="936" alt="Screen Shot 2020-12-12 at 12 28 54 PM" src="https://user-images.githubusercontent.com/13089297/101994421-2e454b00-3c77-11eb-9cd3-8cde550f536e.png">

Test Plan: existing unit tests. Reviewed By: ezyang Differential Revision: D25590731 Pulled By: robieta fbshipit-source-id: fe05305ff22b0e34ced44b60f2e9f07907a099dd |

||

|

|

d31a760be4 |

move has_torch_function to C++, and make a special case object_has_torch_function (#48965)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/48965 This PR pulls `__torch_function__` checking entirely into C++, and adds a special `object_has_torch_function` method for ops which only have one arg as this lets us skip tuple construction and unpacking. We can now also do away with the Python side fast bailout for `Tensor` (e.g. `if any(type(t) is not Tensor for t in tensors) and has_torch_function(tensors)`) because they're actually slower than checking with the Python C API. Test Plan: Existing unit tests. Benchmarks are in #48966 Reviewed By: ezyang Differential Revision: D25590732 Pulled By: robieta fbshipit-source-id: 6bd74788f06cdd673f3a2db898143d18c577eb42 |

||

|

|

e6779d4357 |

[*.py] Rename "Arguments:" to "Args:" (#49736)

Summary:

I've written custom parsers and emitters for everything from docstrings to classes and functions. However, I recently came across an issue when I was parsing/generating from the TensorFlow codebase: inconsistent use of `Args:` and `Arguments:` in its docstrings.

```sh
(pytorch#c348fae)$ for name in 'Args:' 'Arguments:'; do
    printf '%-10s %04d\n' "$name" "$(rg -IFtpy --count-matches "$name" | paste -s -d+ -- | bc)"; done
Args:      1095
Arguments: 0336
```

It is easy enough to extend my parsers to support both variants, however it looks like `Arguments:` is wrong anyway, as per:

- https://google.github.io/styleguide/pyguide.html#doc-function-args @ [`ddccc0f`](https://github.com/google/styleguide/blob/ddccc0f/pyguide.md)

- https://chromium.googlesource.com/chromiumos/docs/+/master/styleguide/python.md#describing-arguments-in-docstrings @ [`9fc0fc0`](https://chromium.googlesource.com/chromiumos/docs/+/9fc0fc0/styleguide/python.md)

- https://sphinxcontrib-napoleon.readthedocs.io/en/latest/example_google.html @ [`c0ae8e3`](https://github.com/sphinx-contrib/napoleon/blob/c0ae8e3/docs/source/example_google.rst)

Therefore, only `Args:` is valid. This PR replaces them throughout the codebase.

PS: For related PRs, see tensorflow/tensorflow/pull/45420

PPS: The trackbacks automatically appearing below are sending the same changes to other repositories in the [PyTorch](https://github.com/pytorch) organisation.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49736

Reviewed By: albanD

Differential Revision: D25710534

Pulled By: soumith

fbshipit-source-id: 61e8ff01abb433e9f78185c2d1d0cbd7c22c1619

|

||

|

|

5c25f8faf3 |

stft: Change require_complex warning to an error (#49022)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/49022 **BC-breaking note**: Previously torch.stft took an optional `return_complex` parameter that indicated whether the output would be a floating point tensor or a complex tensor. By default `return_complex` was False to be consistent with the previous behavior of torch.stft. This PR changes this behavior so `return_complex` is a required argument. **PR Summary**: * **#49022 stft: Change require_complex warning to an error** Test Plan: Imported from OSS Reviewed By: ngimel Differential Revision: D25658906 Pulled By: mruberry fbshipit-source-id: 11932d1102e93f8c7bd3d2d0b2a607fd5036ec5e |

||

|

|

47c65f8223 |

Revert D25569586: stft: Change require_complex warning to an error

Test Plan: revert-hammer

Differential Revision:

D25569586 (

|

||

|

|

5874925b46 |

stft: Change require_complex warning to an error (#49022)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/49022 Test Plan: Imported from OSS Reviewed By: ngimel Differential Revision: D25569586 Pulled By: mruberry fbshipit-source-id: 09608088f540c2c3fc70465f6a23f2aec5f24f85 |

||

|

|

45b33c83f1 |

Revert "Revert D24923679: Fixed einsum compatibility/performance issues (#46398)" (#49189)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49189

This reverts commit

|

||

|

|

524adfbffd |

Use new FFT operators in stft (#47601)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/47601 Fixes https://github.com/pytorch/pytorch/issues/42175#issuecomment-719933913 Test Plan: Imported from OSS Reviewed By: ngimel Differential Revision: D25457217 Pulled By: mruberry fbshipit-source-id: 455d216edd0b962eb7967ecb47cccc8d6865975b |

||

|

|

54f0556ee4 |

Add missing complex support for torch.norm and torch.linalg.norm (#48284)

Summary: **BC-breaking note:** Previously, when given a complex input, `torch.linalg.norm` and `torch.norm` would return a complex output. `torch.linalg.cond` would sometimes return a complex output and sometimes return a real output when given a complex input, depending on its `p` argument. This PR changes this behavior to match `numpy.linalg.norm` and `numpy.linalg.cond`, so that a complex input will result in the downgraded real number type, consistent with NumPy. **PR Summary:** The following cases were previously unsupported for complex inputs, and this commit adds support: - Frobenius norm - Norm order 2 (vector and matrix) - CUDA vector norm Part of https://github.com/pytorch/pytorch/issues/47833 Pull Request resolved: https://github.com/pytorch/pytorch/pull/48284 Reviewed By: H-Huang Differential Revision: D25420880 Pulled By: mruberry fbshipit-source-id: 11f6a2f3cad57d66476d30921c3f6ab8f3cd4017 |
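The reason a norm of a complex input is naturally real can be seen in a few lines of plain Python. This is a minimal sketch of the vector 2-norm only, with a hypothetical helper name `vector_2norm`; it does not use or reproduce `torch.linalg.norm`.

```python
import math


def vector_2norm(values):
    """2-norm of a sequence of real or complex numbers.

    Hypothetical helper for illustration. Each term is |v|**2, which is a
    real number even when v is complex, so the result is a real float.
    """
    return math.sqrt(sum(abs(v) ** 2 for v in values))


z = [3 + 4j, 0j]
print(vector_2norm(z))        # 5.0
print(type(vector_2norm(z)))  # <class 'float'>
```

Returning a complex value here would only ever carry a zero imaginary part, which is consistent with the change to return the corresponding real dtype.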

||

|

|

d307601365 |

Revert D24923679: Fixed einsum compatibility/performance issues (#46398)

Test Plan: revert-hammer

Differential Revision:

D24923679 (

|

||

|

|

ea2a568cca |

Fixed einsum compatibility/performance issues (#46398) (#47860)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/47860

This PR makes torch.einsum compatible with numpy.einsum except for the sublist input option as requested here https://github.com/pytorch/pytorch/issues/21412. It also fixed 2 performance issues linked below and adds a check for reducing to torch.dot instead of torch.bmm which is faster in some cases.

fixes #45854, #37628, #30194, #15671

fixes #41467 with benchmark below

```python
import torch
from torch.utils.benchmark import Timer

a = torch.randn(10000, 100, 101, device='cuda')
b = torch.randn(10000, 101, 3, device='cuda')

c = torch.randn(10000, 100, 1, device='cuda')
d = torch.randn(10000, 100, 1, 3, device='cuda')

print(Timer(
    stmt='torch.einsum("bij,bjf->bif", a, b)',
    globals={'a': a, 'b': b}
).blocked_autorange())

print()

print(Timer(
    stmt='torch.einsum("bic,bicf->bif", c, d)',
    globals={'c': c, 'd': d}
).blocked_autorange())
```

```
<torch.utils.benchmark.utils.common.Measurement object at 0x7fa37c413850>
torch.einsum("bij,bjf->bif", a, b)
  Median: 4.53 ms
  IQR: 0.00 ms (4.53 to 4.53)
  45 measurements, 1 runs per measurement, 1 thread

<torch.utils.benchmark.utils.common.Measurement object at 0x7fa37c413700>
torch.einsum("bic,bicf->bif", c, d)
  Median: 63.86 us
  IQR: 1.52 us (63.22 to 64.73)
  4 measurements, 1000 runs per measurement, 1 thread
```

fixes #32591 with benchmark below

```python
import torch
from torch.utils.benchmark import Timer

a = torch.rand(1, 1, 16, 2, 16, 2, 16, 2, 2, 2, 2, device="cuda")
b = torch.rand(729, 1, 1, 2, 1, 2, 1, 2, 2, 2, 2, device="cuda")

print(Timer(
    stmt='(a * b).sum(dim = (-3, -2, -1))',
    globals={'a': a, 'b': b}
).blocked_autorange())

print()

print(Timer(
    stmt='torch.einsum("...ijk, ...ijk -> ...", a, b)',
    globals={'a': a, 'b': b}
).blocked_autorange())
```

```
<torch.utils.benchmark.utils.common.Measurement object at 0x7efe0de28850>
(a * b).sum(dim = (-3, -2, -1))
  Median: 17.86 ms
  2 measurements, 10 runs per measurement, 1 thread

<torch.utils.benchmark.utils.common.Measurement object at 0x7efe0de286a0>
torch.einsum("...ijk, ...ijk -> ...", a, b)
  Median: 296.11 us
  IQR: 1.38 us (295.42 to 296.81)
  662 measurements, 1 runs per measurement, 1 thread
```

TODO

- [x] add support for ellipsis broadcasting
- [x] fix corner case issues with sumproduct_pair
- [x] update docs and add more comments
- [x] add tests for error cases

Test Plan: Imported from OSS Reviewed By: mruberry Differential Revision: D24923679 Pulled By: heitorschueroff fbshipit-source-id: 47e48822cd67bbcdadbdfc5ffa25ee8ba4c9620a |

||

|

|

313e77fc06 |

Add broadcast_shapes() function and use it in MultivariateNormal (#43935)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/43837

This adds a `torch.broadcast_shapes()` function similar to Pyro's [broadcast_shape()](

|
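For readers unfamiliar with shape broadcasting, the rule such a function computes can be sketched in pure Python. This is an illustration of the usual NumPy-style broadcasting rule under the assumption that shapes are tuples of non-negative integers; the name `broadcast_shapes_sketch` is hypothetical and this is not the code added by the PR.

```python
def broadcast_shapes_sketch(*shapes):
    """Return the shape that all given shapes broadcast to.

    Illustrative sketch of NumPy-style broadcasting; not PyTorch's code.
    """
    ndim = max((len(shape) for shape in shapes), default=0)
    result = [1] * ndim
    for shape in shapes:
        # Shapes are aligned on their trailing dimensions.
        for offset, dim in enumerate(reversed(shape)):
            pos = ndim - 1 - offset
            if dim == 1 or dim == result[pos]:
                continue  # size 1 stretches; equal sizes already agree
            if result[pos] == 1:
                result[pos] = dim  # first non-trivial size seen at this position
            else:
                raise ValueError(
                    f"shapes {shapes} are not broadcastable at dimension {pos}"
                )
    return tuple(result)


print(broadcast_shapes_sketch((2,), (3, 1), (1, 1, 1)))  # (1, 3, 2)
```

Working on shapes alone avoids allocating any tensors just to learn the output shape.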

||

|

|

84fafbe49c |

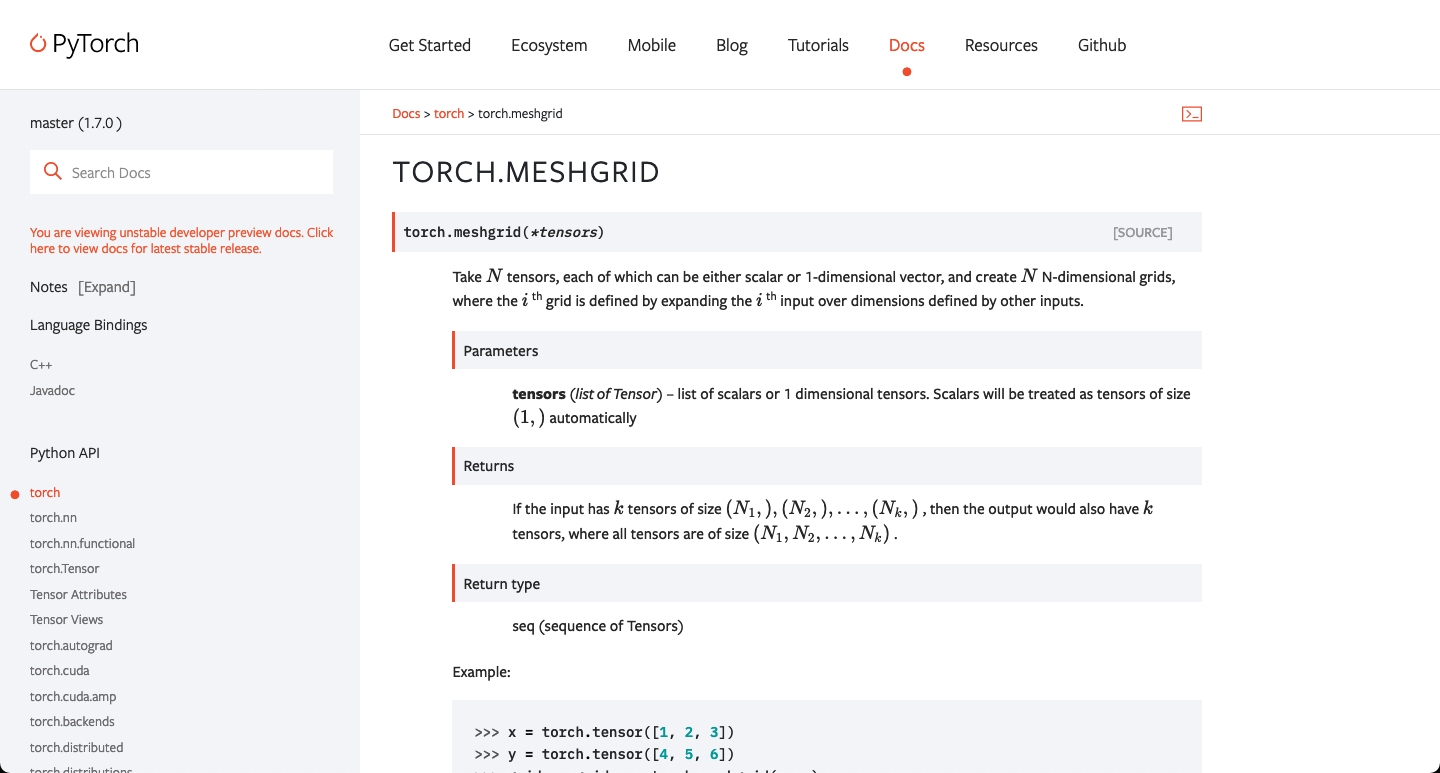

[docs] docstring for no type checked meshgrid (#48471)

Summary: Fixes https://github.com/pytorch/pytorch/issues/48395 I am not sure this is the correct way to fix it, though. cc mruberry Locally built preview: Pull Request resolved: https://github.com/pytorch/pytorch/pull/48471 Reviewed By: mrshenli Differential Revision: D25191033 Pulled By: mruberry fbshipit-source-id: e5d9cb2748f7cb81923a1d4f204ffb330f6da1ee |

||

|

|

b6cb2caa68 |

Revert "Fixed einsum compatibility/performance issues (#46398)" (#47821)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47821

This reverts commit

|

||

|

|

a5c65b86ce |

Fixed einsum compatibility/performance issues (#46398)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/46398

This PR makes torch.einsum compatible with numpy.einsum except for the sublist input option as requested here https://github.com/pytorch/pytorch/issues/21412. It also fixed 2 performance issues linked below and adds a check for reducing to torch.dot instead of torch.bmm which is faster in some cases.

fixes #45854, #37628, #30194, #15671

fixes #41467 with benchmark below

```python
import torch
from torch.utils.benchmark import Timer

a = torch.randn(10000, 100, 101, device='cuda')
b = torch.randn(10000, 101, 3, device='cuda')

c = torch.randn(10000, 100, 1, device='cuda')
d = torch.randn(10000, 100, 1, 3, device='cuda')

print(Timer(
    stmt='torch.einsum("bij,bjf->bif", a, b)',
    globals={'a': a, 'b': b}
).blocked_autorange())

print()

print(Timer(
    stmt='torch.einsum("bic,bicf->bif", c, d)',
    globals={'c': c, 'd': d}
).blocked_autorange())
```

```
<torch.utils.benchmark.utils.common.Measurement object at 0x7fa37c413850>
torch.einsum("bij,bjf->bif", a, b)
  Median: 4.53 ms
  IQR: 0.00 ms (4.53 to 4.53)
  45 measurements, 1 runs per measurement, 1 thread

<torch.utils.benchmark.utils.common.Measurement object at 0x7fa37c413700>
torch.einsum("bic,bicf->bif", c, d)
  Median: 63.86 us
  IQR: 1.52 us (63.22 to 64.73)
  4 measurements, 1000 runs per measurement, 1 thread
```

fixes #32591 with benchmark below

```python
import torch
from torch.utils.benchmark import Timer

a = torch.rand(1, 1, 16, 2, 16, 2, 16, 2, 2, 2, 2, device="cuda")
b = torch.rand(729, 1, 1, 2, 1, 2, 1, 2, 2, 2, 2, device="cuda")

print(Timer(
    stmt='(a * b).sum(dim = (-3, -2, -1))',
    globals={'a': a, 'b': b}
).blocked_autorange())

print()

print(Timer(
    stmt='torch.einsum("...ijk, ...ijk -> ...", a, b)',
    globals={'a': a, 'b': b}
).blocked_autorange())
```

```
<torch.utils.benchmark.utils.common.Measurement object at 0x7efe0de28850>
(a * b).sum(dim = (-3, -2, -1))
  Median: 17.86 ms
  2 measurements, 10 runs per measurement, 1 thread

<torch.utils.benchmark.utils.common.Measurement object at 0x7efe0de286a0>
torch.einsum("...ijk, ...ijk -> ...", a, b)
  Median: 296.11 us
  IQR: 1.38 us (295.42 to 296.81)
  662 measurements, 1 runs per measurement, 1 thread
```

TODO

- [x] add support for ellipsis broadcasting
- [x] fix corner case issues with sumproduct_pair
- [x] update docs and add more comments
- [x] add tests for error cases

Test Plan: Imported from OSS Reviewed By: malfet Differential Revision: D24860367 Pulled By: heitorschueroff fbshipit-source-id: 31110ee598fd598a43acccf07929b67daee160f9 |

||

|

|

f3ad7b2919 |

[JIT][Reland] add list() support (#42382)

Summary: Fixes https://github.com/pytorch/pytorch/issues/40869 Resubmit of https://github.com/pytorch/pytorch/pull/33818. Adds support for `list()` by desugaring it to a list comprehension. Last time I landed this it made one of the tests slow, and got unlanded. I think that's because the previous PR changed the emission of `list()` on a list input or a str input to a list comprehension, which is the more general way of emitting `list()`, but also a little bit slower. I updated this version to emit to the builtin operators for these two cases. Hopefully it can land without being reverted this time... Pull Request resolved: https://github.com/pytorch/pytorch/pull/42382 Reviewed By: navahgar Differential Revision: D24767674 Pulled By: eellison fbshipit-source-id: a1aa3d104499226b28f47c3698386d365809c23c |
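The desugaring mentioned above relies on a source-level equivalence that holds in plain Python. The sketch below shows that equivalence only; the function name `list_via_comprehension` is hypothetical, and nothing here reflects how the TorchScript frontend actually emits code.

```python
def list_via_comprehension(iterable):
    """Build a list the way a desugared `list(iterable)` would.

    Hypothetical helper: for any iterable, `list(x)` and
    `[item for item in x]` produce equal lists.
    """
    return [item for item in iterable]


# A few input kinds, including the list and str inputs the message singles out.
for source in ([1, 2, 3], "abc", (4, 5), range(3), {"k": 1}):
    assert list_via_comprehension(source) == list(source)

print(list_via_comprehension("abc"))  # ['a', 'b', 'c']
```

The comprehension form is the general case; special-casing list and str inputs, as the message describes, is an optimization over it.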

||

|

|

a8ef4d3f0b |

Provide 'out' parameter for 'tensordot' (#47278)

Summary: Fixes https://github.com/pytorch/pytorch/issues/42102 Added an optional out parameter to the tensordot operation to allow using buffers. Pull Request resolved: https://github.com/pytorch/pytorch/pull/47278 Test Plan: pytest test/test_torch.py -k tensordot -v Reviewed By: agolynski Differential Revision: D24706258 Pulled By: H-Huang fbshipit-source-id: eb4bcd114795f67de3a670291034107d2826ea69 |

||

|

|

cd26d027b3 |

[doc] Fix info on the shape of pivots in torch.lu + more info on what and how they encode permutations. (#46844)

Summary: As per title. Pull Request resolved: https://github.com/pytorch/pytorch/pull/46844 Reviewed By: VitalyFedyunin Differential Revision: D24595538 Pulled By: ezyang fbshipit-source-id: 1bb9c0310170124c3b6e33bd26ce38c22b36e926 |

||

|

|

c31ced4246 |

make torch.lu differentiable. (#46284)

Summary: As per title. Limitations: only for batches of square full-rank matrices. CC albanD Pull Request resolved: https://github.com/pytorch/pytorch/pull/46284 Reviewed By: zou3519 Differential Revision: D24448266 Pulled By: albanD fbshipit-source-id: d98215166268553a648af6bdec5a32ad601b7814 |

||

|

|

146721f1df |

Fix typing errors in the torch.distributions module (#45689)

Summary: Fixes https://github.com/pytorch/pytorch/issues/42979. Pull Request resolved: https://github.com/pytorch/pytorch/pull/45689 Reviewed By: agolynski Differential Revision: D24229870 Pulled By: xuzhao9 fbshipit-source-id: 5fc87cc428170139962ab65b71cacba494d46130 |

||

|

|

a0a8bc8870 |

Fix mistakes and increase clarity of norm documentation (#42696)

Summary:

* Removes the incorrect statement that "the vector norm will be applied to the last dimension".
* Describes each combination of `p`, `ord`, and input size more clearly.
* Moves norm tests from `test/test_torch.py` to `test/test_linalg.py`.
* Adds a test ensuring that `p='fro'` and `p=2` give the same results for mutually valid inputs.

Fixes https://github.com/pytorch/pytorch/issues/41388 Pull Request resolved: https://github.com/pytorch/pytorch/pull/42696 Reviewed By: bwasti Differential Revision: D23876862 Pulled By: mruberry fbshipit-source-id: 36f33ccb6706d5fe13f6acf3de8ae14d7fbdff85 |

||

|

|

78f055272c |

[docs] Add 3D reduction example to tensordot docs (#45697)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/45697

**Summary** This commit adds an example of a reduction over three dimensions with `torch.tensordot`. It is unclear from existing docs whether `dims` should be a list of pairs or a pair of lists.

**Test Plan** Built the docs locally.

*Before*

<img width="864" alt="Captura de Pantalla 2020-10-01 a la(s) 1 35 46 p m" src="https://user-images.githubusercontent.com/4392003/94866838-f0b17f80-03f4-11eb-8692-8f50fe3b9863.png">

*After*

<img width="831" alt="Captura de Pantalla 2020-10-05 a la(s) 12 06 28 p m" src="https://user-images.githubusercontent.com/4392003/95121092-670af600-0703-11eb-959f-73c7797a76ee.png">

**Fixes** This commit closes #22748.

Test Plan: Imported from OSS Reviewed By: ansley Differential Revision: D24118186 Pulled By: SplitInfinity fbshipit-source-id: c19b0b7e001f8cd099dc4c2e0e8ec39310510b46 |

||

|

|

bb19a55429 |

Improves fft doc consistency and makes deprecation warnings more prominent (#45409)

Summary: This PR makes the deprecation warnings for existing fft functions more prominent and makes the torch.stft deprecation warning consistent with our current deprecation planning. Pull Request resolved: https://github.com/pytorch/pytorch/pull/45409 Reviewed By: ngimel Differential Revision: D23974975 Pulled By: mruberry fbshipit-source-id: b90d8276095122ac3542ab625cb49b991379c1f8 |

||

|

|

6417a70465 |

Updates linalg warning + docs (#45415)

Summary: Changes the deprecation of norm to a docs deprecation, since PyTorch components still rely on norm and some behavior, like automatically flattening tensors, may need to be ported to torch.linalg.norm. The documentation is also updated to clarify that torch.norm and torch.linalg.norm are distinct. Pull Request resolved: https://github.com/pytorch/pytorch/pull/45415 Reviewed By: ngimel Differential Revision: D23958252 Pulled By: mruberry fbshipit-source-id: fd54e807c59a2655453a6bcd9f4073cb2c12e8ac |

||

|

|

caea1adc35 |

Complex support for stft and istft (#43886)

Summary: Ref https://github.com/pytorch/pytorch/issues/42175, fixes https://github.com/pytorch/pytorch/issues/34797

This adds complex support to `torch.stft` and `torch.istft`. Note that there are really two issues with complex here: complex signals, and returning complex tensors.

## Complex signals and windows

`stft` currently assumes all signals are real and uses `rfft` with `onesided=True` by default. Similarly, `istft` always takes a complex Fourier series and uses `irfft` to return real signals.

For `stft`, I now allow complex inputs and windows by calling the full `fft` if either are complex. If the user gives `onesided=True` and the signal is complex, then this doesn't work and raises an error instead. For `istft`, there's no way to automatically know what to do when `onesided=False` because that could either be a redundant representation of a real signal or a complex signal. So there, the user needs to pass the argument `return_complex=True` in order to use `ifft` and get a complex result back.

## stft returning complex tensors

The other issue is that `stft` returns a complex result, represented as a `(... X 2)` real tensor. I think ideally we want this to return proper complex tensors but to preserve BC I've had to add a `return_complex` argument to manage this transition. `return_complex` defaults to false for real inputs to preserve BC but defaults to True for complex inputs where there is no BC to consider.

In order to `return_complex` by default everywhere without a sudden BC-breaking change, a simple transition plan could be:

1. introduce `return_complex`, defaulted to false when BC is an issue but giving a warning. (this PR)
2. raise an error in cases where `return_complex` defaults to false, making it a required argument.
3. change `return_complex` default to true in all cases.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43886 Reviewed By: glaringlee Differential Revision: D23760174 Pulled By: mruberry fbshipit-source-id: 2fec4404f5d980ddd6bdd941a63852a555eb9147 |

||
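
The three-step `return_complex` transition plan described in the commit above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not PyTorch's implementation: the helper `resolve_return_complex` and its `stage` parameter are hypothetical names, and a plain bool stands in for the check of whether the input tensor is complex.

```python
import warnings
from typing import Optional


def resolve_return_complex(input_is_complex: bool,
                           return_complex: Optional[bool] = None,
                           stage: int = 1) -> bool:
    """Hypothetical helper: pick the effective value of `return_complex`.

    `stage` is an assumption of this sketch and selects which step of the
    transition plan is in force (1, 2 or 3).
    """
    if stage not in (1, 2, 3):
        raise ValueError("stage must be 1, 2 or 3")
    # An explicit choice by the caller is used as given in every stage.
    if return_complex is not None:
        return return_complex
    # Complex inputs have no backward-compatibility concern, and in
    # stage 3 the default is true for everyone.
    if input_is_complex or stage == 3:
        return True
    if stage == 1:
        # Step 1: keep the old default for real inputs, but warn.
        warnings.warn(
            "return_complex was not given; defaulting to False for a real input",
            UserWarning,
            stacklevel=2,
        )
        return False
    # Step 2: the argument is required for real inputs.
    raise RuntimeError("return_complex must be passed explicitly for real inputs")
```

Calling `resolve_return_complex(False)` in stage 1 returns `False` with a warning, while the same call with `stage=2` raises and with `stage=3` returns `True`, which mirrors the gradual change of default the plan describes.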

|

|

28a23fce4c |

Deprecate torch.norm and torch.functional.norm (#44321)

Summary: Part of https://github.com/pytorch/pytorch/issues/24802 Pull Request resolved: https://github.com/pytorch/pytorch/pull/44321 Reviewed By: mrshenli Differential Revision: D23617273 Pulled By: mruberry fbshipit-source-id: 6f88b5cb097fd0acb9cf0e415172c5a86f94e9f2 |

||

|

|

0c01f136f3 |

[BE] Use f-string in various Python functions (#44161)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/44161 Reviewed By: seemethere Differential Revision: D23515874 Pulled By: malfet fbshipit-source-id: 868cf65aedd58fce943c08f8e079e84e0a36df1f |

||

|

|

573940f8d7 |

Fix type annotation errors in torch.functional (#43446)

Summary: Closes gh-42968 Pull Request resolved: https://github.com/pytorch/pytorch/pull/43446 Reviewed By: albanD Differential Revision: D23280962 Pulled By: malfet fbshipit-source-id: de5386a95a20ecc814c39cbec3e4252112340b3a |

||

|

|

b430347a60 |

Address JIT/Mypy issue with torch._VF (#43454)

Summary:

- `torch._VF` is a hack to work around the lack of support for `torch.functional` in the JIT
- that hack hides `torch._VF` functions from Mypy
- could be worked around by re-introducing a stub file for `torch.functional`, but that's undesirable
- so instead try to make both happy at the same time: the type ignore comments are needed for Mypy, and don't seem to affect the JIT after excluding them from the `get_type_line()` logic

Encountered this issue while trying to make `mypy` run on `torch/functional.py` in gh-43446. Pull Request resolved: https://github.com/pytorch/pytorch/pull/43454 Reviewed By: glaringlee Differential Revision: D23305579 Pulled By: malfet fbshipit-source-id: 50e490693c1e53054927b57fd9acc7dca57e88ca |

||

|

|

3d46e02ea1 |

Add __torch_function__ for methods (#37091)

Summary: According to pytorch/rfcs#3

From the goals in the RFC:

1. Support subclassing `torch.Tensor` in Python (done here)
2. Preserve `torch.Tensor` subclasses when calling `torch` functions on them (done here)
3. Use the PyTorch API with `torch.Tensor`-like objects that are _not_ `torch.Tensor` subclasses (done in https://github.com/pytorch/pytorch/issues/30730)
4. Preserve `torch.Tensor` subclasses when calling `torch.Tensor` methods. (done here)
5. Propagating subclass instances correctly also with operators, using views/slices/indexing/etc. (done here)
6. Preserve subclass attributes when using methods or views/slices/indexing. (done here)
7. A way to insert code that operates on both functions and methods uniformly (so we can write a single function that overrides all operators). (done here)
8. The ability to give external libraries a way to also define functions/methods that follow the `__torch_function__` protocol. (will be addressed in a separate PR)

This PR makes the following changes:

1. Adds the `self` argument to the arg parser.
2. Dispatches on `self` as well if `self` is not `nullptr`.
3. Adds a `torch._C.DisableTorchFunction` context manager to disable `__torch_function__`.
4. Adds a `torch::torch_function_enabled()` and `torch._C._torch_function_enabled()` to check the state of `__torch_function__`.
5. Dispatches all `torch._C.TensorBase` and `torch.Tensor` methods via `__torch_function__`.

TODO:

- [x] Sequence Methods
- [x] Docs
- [x] Tests

Closes https://github.com/pytorch/pytorch/issues/28361 Benchmarks in https://github.com/pytorch/pytorch/pull/37091#issuecomment-633657778 Pull Request resolved: https://github.com/pytorch/pytorch/pull/37091 Reviewed By: ngimel Differential Revision: D22765678 Pulled By: ezyang fbshipit-source-id: 53f8aa17ddb8b1108c0997f6a7aa13cb5be73de0 |

||
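
Goals 2, 4 and 7 of the RFC list above (preserving subclasses for both functions and methods through one override point) can be illustrated with a toy analog in plain Python. This sketch does not use PyTorch and is not how PyTorch implements the protocol: the hook name `__toy_function__`, the `overridable` decorator, and the `Plain` and `Logged` classes are all hypothetical names invented for the illustration.

```python
import functools
from typing import Any, Callable, List


def overridable(func: Callable) -> Callable:
    """Route calls through a `__toy_function__` hook if any argument has one."""
    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        for arg in args:
            hook = getattr(type(arg), "__toy_function__", None)
            if hook is not None:
                # The hook receives the undecorated function, so calling it
                # inside the hook does not dispatch again.
                return hook(func, args, kwargs)
        return func(*args, **kwargs)
    return wrapper


@overridable
def add(a: "Plain", b: "Plain") -> "Plain":
    return Plain(a.value + b.value)


class Plain:
    def __init__(self, value: int) -> None:
        self.value = value

    def add(self, other: "Plain") -> "Plain":
        # The method goes through the same dispatch as the free function.
        return add(self, other)


class Logged(Plain):
    calls: List[str] = []

    @classmethod
    def __toy_function__(cls, func, args, kwargs):
        cls.calls.append(func.__name__)     # one place to intercept everything
        result = func(*args, **kwargs)
        return cls(result.value)            # re-wrap so the subclass is preserved
```

With these definitions, both `add(Plain(1), Logged(2))` and `Logged(1).add(Plain(2))` return a `Logged` instance and record `"add"` in `Logged.calls`, while `add(Plain(1), Plain(2))` stays a `Plain`.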

|

|

206db5c127 |

Improve torch.norm functionality, errors, and tests (#41956)

Summary:

**BC-Breaking Note:** BC breaking changes in the case where keepdim=True. Before this change, when calling `torch.norm` with keepdim=True and p='fro' or p=number, leaving all other optional arguments as their default values, the keepdim argument would be ignored. Also, any time `torch.norm` was called with p='nuc', the result would have one fewer dimension than the input, and the dimensions could be out of order depending on which dimensions were being reduced. After the change, for each of these cases, the result has the same number and order of dimensions as the input.

**PR Summary:**

* Fix keepdim behavior
* Throw descriptive errors for unsupported sparse norm args
* Increase unit test coverage for these cases and for complex inputs

These changes were taken from part of PR https://github.com/pytorch/pytorch/issues/40924. That PR is not going to be merged because it overrides `torch.norm`'s interface, which we want to avoid. But these improvements are still useful. Issue https://github.com/pytorch/pytorch/issues/24802 Pull Request resolved: https://github.com/pytorch/pytorch/pull/41956 Reviewed By: albanD Differential Revision: D22837455 Pulled By: mruberry fbshipit-source-id: 509ecabfa63b93737996f48a58c7188b005b7217 |

||

|

|

8c653e05ff |

DOC: fail to build if there are warnings (#41335)

Summary: Merge after gh-41334 and gh-41321 (EDIT: both are merged). Closes gh-38011 This is the last in a series of PRs to build documentation without warnings. It adds `-WT --keep-going` to the sphinx build, which will [fail the build if there are warnings](https://www.sphinx-doc.org/en/master/man/sphinx-build.html#cmdoption-sphinx-build-W), print a [traceback on error](https://www.sphinx-doc.org/en/master/man/sphinx-build.html#cmdoption-sphinx-build-T) and [finish the build](https://www.sphinx-doc.org/en/master/man/sphinx-build.html#cmdoption-sphinx-build-keep-going) even when there are warnings. It should fail now, but pass once the PRs mentioned at the top are merged. Pull Request resolved: https://github.com/pytorch/pytorch/pull/41335 Reviewed By: pbelevich Differential Revision: D22794425 Pulled By: mruberry fbshipit-source-id: eb2903e50759d1d4f66346ee2ceebeecfac7b094 |

||

|

|

71fdf748e5 |

Add torch.atleast_{1d/2d/3d} (#41317)

Summary: https://github.com/pytorch/pytorch/issues/38349 TODO: * [x] Docs * [x] Tests Pull Request resolved: https://github.com/pytorch/pytorch/pull/41317 Reviewed By: ngimel Differential Revision: D22575456 Pulled By: mruberry fbshipit-source-id: cc79f4cd2ca4164108ed731c33cf140a4d1c9dd8 |

||

|

|

88fe05e106 |

[Docs] Update torch.(squeeze, split, set_printoptions, save) docs. (#39303)

Summary:

I added the following to the docs:

1. `torch.save`
   1. Added doc for `_use_new_zipfile_serialization` argument.
   2. Added a note that the file extension does not matter when saving.
   3. Added an example showing the use of the above argument along with `pickle_protocol=5`.
2. `torch.split`
   1. Added an example showing the use of the function.
3. `torch.squeeze`
   1. Added a warning for the batch_size=1 case.
4. `torch.set_printoptions`
   1. Changed the docs of the `sci_mode` argument from

      ```
      sci_mode: Enable (True) or disable (False) scientific notation. If
      None (default) is specified, the value is defined by `_Formatter`
      ```

      to

      ```
      sci_mode: Enable (True) or disable (False) scientific notation. If
      None (default=False) is specified, the value is defined by
      `torch._tensor_str._Formatter`.
      ```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39303

Differential Revision: D21904504

Pulled By: zou3519

fbshipit-source-id: 92a324257d09d6bcfa0b410d4578859782b94488

|

||

|

|

ebd4125e7e |

[JIT] Make torch.unique_consecutive compatible (#39339)

Summary: A `unique_consecutive` version of https://github.com/pytorch/pytorch/pull/38156 Pull Request resolved: https://github.com/pytorch/pytorch/pull/39339 Differential Revision: D21823997 Pulled By: eellison fbshipit-source-id: d14596a36ba36497e296da5a344e0376cef56f1b |

||

|

|

d363cf4639 |

Fix incorrect __torch_function__ handling in einsum (#38741)

Summary: Closes gh-38479 Pull Request resolved: https://github.com/pytorch/pytorch/pull/38741 Differential Revision: D21662512 Pulled By: ezyang fbshipit-source-id: 247e3b50b8f2dd842c03be8d6ebe71910b619bc6 |

||

|

|

eb3e9872c9 |

[JIT] make torch.unique compilable (#38156)

Summary: Fix for https://github.com/pytorch/pytorch/issues/37986 Follows the stack in https://github.com/pytorch/pytorch/pull/33783 to make functions in `torch/functional.py` resolve to their Python implementations. Because the return type of `torch.unique` depends on `return_inverse` and `return_counts`, I had to refactor the implementation to use our boolean_dispatch mechanism. Pull Request resolved: https://github.com/pytorch/pytorch/pull/38156 Differential Revision: D21504449 Pulled By: eellison fbshipit-source-id: 7efb1dff3b5c00655da10168403ac4817286ff59 |

||
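
The idea behind dispatching on a boolean flag, as mentioned in the commit above, can be sketched in plain Python. This is a simplified illustration and not PyTorch's helper: the `boolean_dispatch` function below, its reduced signature, and the list-based `unique` stand-ins are assumptions made for the example. The point is that each branch has one fixed return type, and a thin wrapper picks the branch from the flag.

```python
from typing import Any, Callable, List, Tuple


def boolean_dispatch(arg_name: str, arg_index: int, default: bool,
                     if_true: Callable, if_false: Callable) -> Callable:
    """Simplified sketch: choose an implementation from a boolean argument."""
    def fn(*args: Any, **kwargs: Any) -> Any:
        if arg_name in kwargs:
            flag = kwargs[arg_name]          # flag passed by keyword
        elif arg_index < len(args):
            flag = args[arg_index]           # flag passed positionally
        else:
            flag = default                   # flag omitted
        chosen = if_true if flag else if_false
        return chosen(*args, **kwargs)
    return fn


def _unique_with_counts(values: List[int],
                        return_counts: bool = False) -> Tuple[List[int], List[int]]:
    uniq = sorted(set(values))
    return uniq, [values.count(v) for v in uniq]


def _unique_only(values: List[int], return_counts: bool = False) -> List[int]:
    return sorted(set(values))


# Hypothetical list-based analog of a function whose return type depends on a flag.
unique = boolean_dispatch("return_counts", 1, False,
                          if_true=_unique_with_counts,
                          if_false=_unique_only)
```

Here `unique([3, 1, 3])` returns a list, while `unique([3, 1, 3], return_counts=True)` returns a pair of lists. Splitting the two cases into separately typed functions is what lets a static compiler assign each call a single return type.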

|

|

5a27ec09b8 |

Add Inverse Short Time Fourier Transform in ATen native (#35569)

Summary:

Ported `torchaudio`'s implementation (test, and documentation as well) to ATen.

Note

- Batch packing/unpacking is performed in Python. ATen implementation expects 4D input tensor.

- `hop_length` is initialized in the same way as in the `stft` implementation. [The Torchaudio's version tried to mimic the same behavior but slightly different](

|

||

|

|

3799d1d74a |

Fix many doc issues (#37099)

Summary: Fix https://github.com/pytorch/pytorch/issues/35643 https://github.com/pytorch/pytorch/issues/37063 https://github.com/pytorch/pytorch/issues/36307 https://github.com/pytorch/pytorch/issues/35861 https://github.com/pytorch/pytorch/issues/35299 https://github.com/pytorch/pytorch/issues/23108 https://github.com/pytorch/pytorch/issues/4661 Just a bunch of small updates on the doc. Pull Request resolved: https://github.com/pytorch/pytorch/pull/37099 Differential Revision: D21185713 Pulled By: albanD fbshipit-source-id: 4ac06d6709dc0da6109a6ad3daae75667ee5863e |

||

|

|

78d5707041 |

Fix type annotations and make MyPy run on torch/ (#36584)