Summary: Adding new documents to the PyTorch website to describe how PyTorch is governed, how to contribute to the project, and lists persons of interest.

Reviewed By: orionr

Differential Revision: D14394573

fbshipit-source-id: ad98b807850c51de0b741e3acbbc3c699e97b27f

Summary:

as title

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17476

Differential Revision: D14218312

Pulled By: suo

fbshipit-source-id: 64df096a3431a6f25cd2373f0959d415591fed15

Summary:

as title. These were already added to the tutorials, but I didn't add them to the cpp docs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17452

Differential Revision: D14206501

Pulled By: suo

fbshipit-source-id: 89b5c8aaac22d05381bc4a7ab60d0bb35e43f6f5

Summary:

Based on https://github.com/pytorch/pytorch/pull/12413, with the following additional changes:

- Inside `native_functions.yml` move those outplace operators right next to everyone's corresponding inplace operators for convenience of checking if they match when reviewing

- `matches_jit_signature: True` for them

- Add missing `scatter` with Scalar source

- Add missing `masked_fill` and `index_fill` with Tensor source.

- Add missing test for `scatter` with Scalar source

- Add missing test for `masked_fill` and `index_fill` with Tensor source by checking the gradient w.r.t source

- Add missing docs to `tensor.rst`

Differential Revision: D14069925

Pulled By: ezyang

fbshipit-source-id: bb3f0cb51cf6b756788dc4955667fead6e8796e5

Summary:

one_hot docs is missing [here](https://pytorch.org/docs/master/nn.html#one-hot).

I dug around and could not find a way to get this working properly.

Differential Revision: D14104414

Pulled By: zou3519

fbshipit-source-id: 3f45c8a0878409d218da167f13b253772f5cc963

Summary:

This prevent people (reviewer, PR author) from forgetting adding things to `torch.rst`.

When something new is added to `_torch_doc.py` or `functional.py` but intentionally not in `torch.rst`, people should manually whitelist it in `test_docs_coverage.py`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16039

Differential Revision: D14070903

Pulled By: ezyang

fbshipit-source-id: 60f2a42eb5efe81be073ed64e54525d143eb643e

Summary:

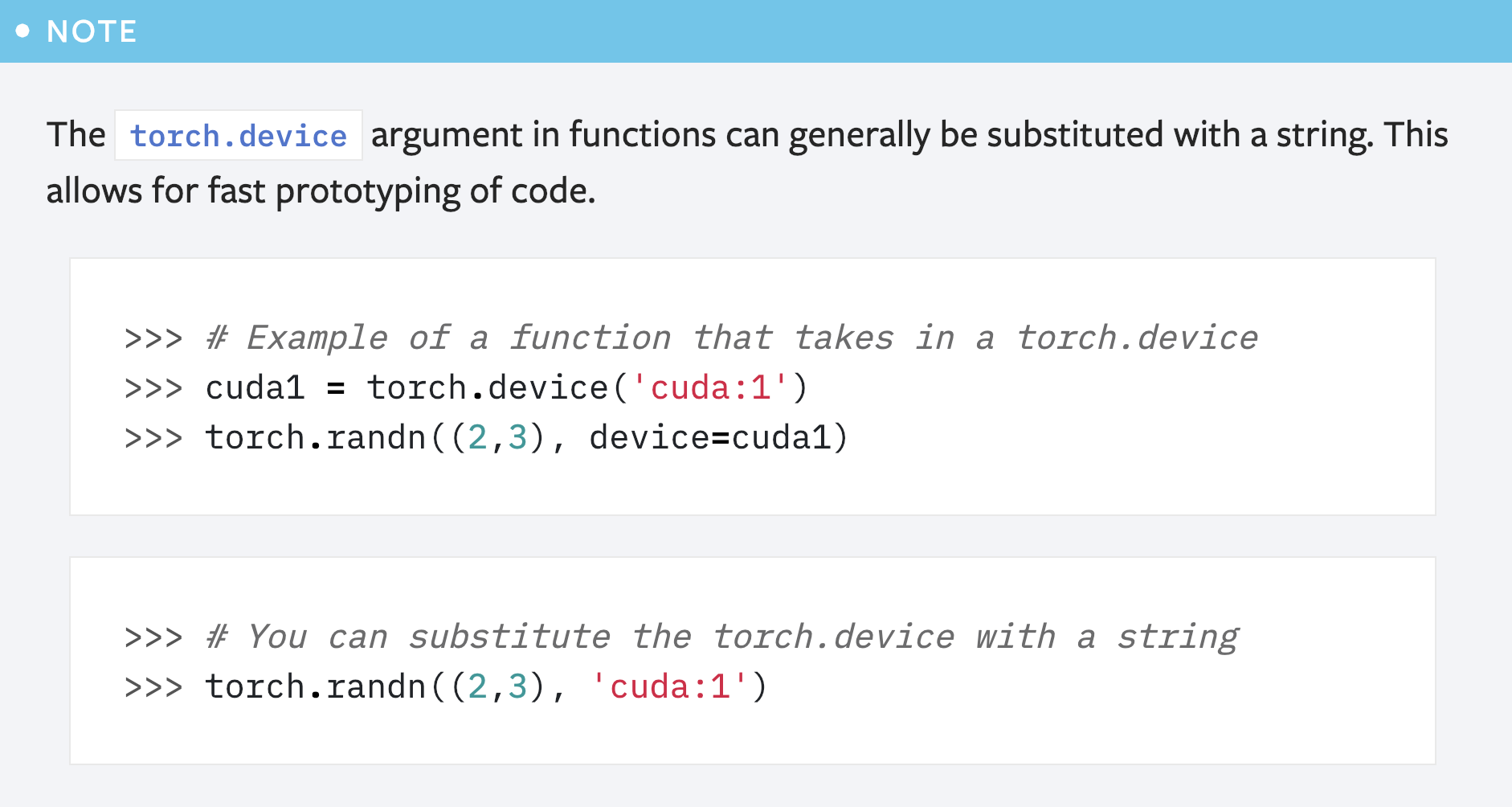

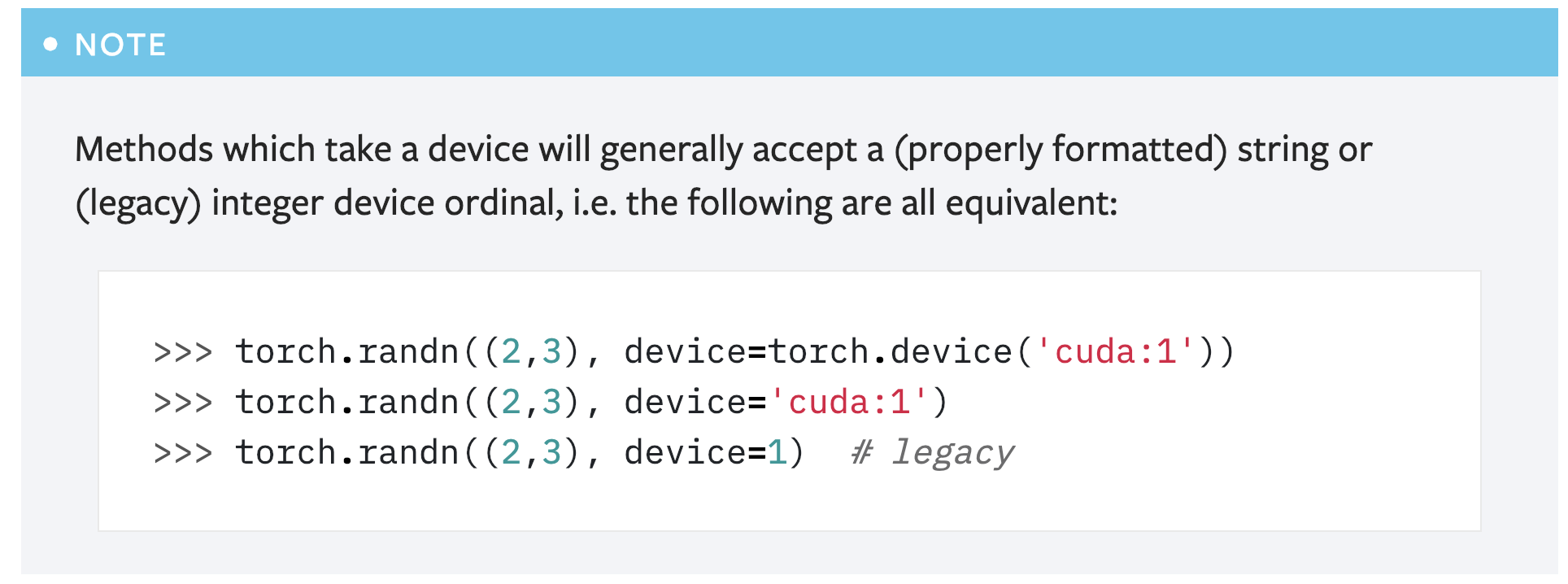

This PR is a simple fix for the mistake in the first note for `torch.device` in the "tensor attributes" doc.

```

>>> # You can substitute the torch.device with a string

>>> torch.randn((2,3), 'cuda:1')

```

Above code will cause error like below:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-53-abdfafb67ab1> in <module>()

----> 1 torch.randn((2,3), 'cuda:1')

TypeError: randn() received an invalid combination of arguments - got (tuple, str), but expected one of:

* (tuple of ints size, torch.Generator generator, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)

* (tuple of ints size, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)

```

Simply adding the argument name `device` solves the problem: `torch.randn((2,3), device='cuda:1')`.

However, another concern is that this note seems redundant as **there is already another note covering this usage**:

So maybe it's better to just remove this note?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16839

Reviewed By: ezyang

Differential Revision: D13989209

Pulled By: gchanan

fbshipit-source-id: ac255d52528da053ebfed18125ee6b857865ccaf

Summary:

Some batched updates:

1. bool is a type now

2. Early returns are allowed now

3. The beginning of an FAQ section with some guidance on the best way to do GPU training + CPU inference

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16866

Differential Revision: D13996729

Pulled By: suo

fbshipit-source-id: 3b884fd3a4c9632c9697d8f1a5a0e768fc918916

Summary:

Fixed a few C++ API callsites to work with v1.0.1.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16221

Differential Revision: D13759207

Pulled By: yf225

fbshipit-source-id: bd92c2b95a0c6ff3ba5d73cb249d0bc88cfdc340

Summary:

1. I fixed the importing process, which had some problems

- **I think `setup_helpers` should not be imported as the top level module. It can lead to many future errors. For example, what if `setup_helpers` imports another module from the upper level?** So we need to change it.

- The code is not consistent with other modules in `tools` package. For example, other

modules in the package imports `from tools.setuptools...` not `from setuptools...`.

- **It should be able to run with `python -m tools.build_libtorch` command** because this module is a part of the tools package. Currently, you cannot do that and I think it's simply wrong.

~~2. I Added platform specific warning messages.

- I constantly forgot that I needed to define some environment variables in advance specific to my platform to build libtorch, especially when I'm working at a non pytorch root directory. So I thought adding warnings for common options would be helpful .~~

~~3. Made the build output path configurable. And a few other changes.~~

orionr ebetica

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15471

Differential Revision: D13709607

Pulled By: ezyang

fbshipit-source-id: 950d5727aa09f857d973538c50b1ab169d88da38

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15316

This starts cleaning up the files in c10 according to the module structure we decided on.

Move to c10/util:

- Half.h, Half-inl.h, Half.cpp, bitcasts.h

Move to c10/core:

- Device.h, Device.cpp

- DeviceType.h, DeviceType.cpp

i-am-not-moving-c2-to-c10

Reviewed By: dzhulgakov

Differential Revision: D13498493

fbshipit-source-id: dfcf1c490474a12ab950c72ca686b8ad86428f63

Summary:

Fix submitted by huntzhan in https://github.com/pytorch/cppdocs/pull/4. The source is in this repo so the patch has to be applied here.

soumith ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15701

Differential Revision: D13591302

Pulled By: goldsborough

fbshipit-source-id: 796957696fd560a9c5fb42265d7b2d018abaebe3

Summary:

Now that `cuda.get/set_rng_state` accept `device` objects, the default value should be an device object, and doc should mention so.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14324

Reviewed By: ezyang

Differential Revision: D13528707

Pulled By: soumith

fbshipit-source-id: 32fdac467dfea6d5b96b7e2a42dc8cfd42ba11ee

Summary:

There was a typo in C++ docs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15527

Differential Revision: D13547858

Pulled By: soumith

fbshipit-source-id: 1f5250206ca6e13b1b1443869b1e1c837a756cb5

Summary:

Current documentation example doesn't compile. This fixes the doc so the example works.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15372

Differential Revision: D13522167

Pulled By: goldsborough

fbshipit-source-id: 5171a5f8e165eafabd9d1a28d23020bf2655f38b

Summary:

Changelog:

- Renames `potrs` to `cholesky_solve` to remain consistent with Tensorflow and Scipy (not really, they call their function chol_solve)

- Default argument for upper in cholesky_solve is False. This will allow a seamless interface between `cholesky` and `cholesky_solve`, since the `upper` argument in both function are the same.

- Rename all tests

- Create a tentative alias for `cholesky_solve` under the name `potrs`, and add deprecated warning to not promote usage.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15334

Differential Revision: D13507724

Pulled By: soumith

fbshipit-source-id: b826996541e49d2e2bcd061b72a38c39450c76d0

Summary:

Some of the codeblocks were showing up as normal text and the "unsupported modules" table was formatted incorrectly

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15227

Differential Revision: D13468847

Pulled By: driazati

fbshipit-source-id: eb7375710d4f6eca1d0f44dfc43c7c506300cb1e

Summary:

Documents what is supported in the script standard library.

* Adds `my_script_module._get_method('forward').schema()` method to get function schema from a `ScriptModule`

* Removes `torch.nn.functional` from the list of builtins. The only functions not supported are `nn.functional.fold` and `nn.functional.unfold`, but those currently just dispatch to their corresponding aten ops, so from a user's perspective it looks like they work.

* Allow printing of `IValue::Device` by getting its string representation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14912

Differential Revision: D13385928

Pulled By: driazati

fbshipit-source-id: e391691b2f87dba6e13be05d4aa3ed2f004e31da

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14248

This diff also introduces a horrifying hack to override CUDA's DeviceGuardImpl

with a HIPGuardImplMasqueradingAsCUDA, to accommodate PyTorch's current

behavior of pretending CUDA is HIP when you build with ROCm enabled.

Reviewed By: bddppq

Differential Revision: D13145293

fbshipit-source-id: ee0e207b6fd132f0d435512957424a002d588f02

Summary:

Tracing records variable names and we have new types and stuff in the IR, so this updates the graph printouts in the docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14914

Differential Revision: D13385101

Pulled By: jamesr66a

fbshipit-source-id: 6477e4861f1ac916329853763c83ea157be77f23

Summary:

Added a few examples and explains to how publish/load models.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14862

Differential Revision: D13384790

Pulled By: ailzhang

fbshipit-source-id: 008166e84e59dcb62c0be38a87982579524fb20e