Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59491

**Summary**

This commit adds a preamble to the `torch.package` documentation page

that explains briefly what `torch.package` is.

**Test Plan**

Continuous integration.

<img width="881" alt="Screenshot 2021-06-04 at 3 57 01 PM" src="https://user-images.githubusercontent.com/4392003/120872203-d535e000-c552-11eb-841d-b38df19bc992.png">

Test Plan: Imported from OSS

Reviewed By: Lilyjjo

Differential Revision: D29050630

Pulled By: SplitInfinity

fbshipit-source-id: 70a3fd43f076751c6ea83be3ead291686c641158

Summary:

Fixes https://github.com/pytorch/pytorch/issues/35379

- Adds `retains_grad` attribute backed by cpp as a native function. The python bindings for the function are skipped to be consistent with `is_leaf`.

- Tried writing it without native function, but the jit test `test_tensor_properties` seems to require that it be a native function (or alternatively maybe it could also work if we manually add a prim implementation?).

- Python API now uses `retain_grad` implementation from cpp

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59362

Reviewed By: jbschlosser

Differential Revision: D28969298

Pulled By: soulitzer

fbshipit-source-id: 335f2be50b9fb870cd35dc72f7dadd6c8666cc02

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58925

Cleans up documentation on natively supported backends. In particular:

* adds a section title

* deduplicates information about fbgemm/qnnpack

* clarifies what `torch.backends.quantized.engine` does

* adds code samples with default settings for `fbgemm` and `qnnpack`

Test Plan: Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D28681840

Pulled By: vkuzo

fbshipit-source-id: 51a6ab66934f657553351f6c84a638fd5f7b4e12

Summary:

Closes https://github.com/pytorch/pytorch/issues/51455

I think the current implementation is aggregating over the correct dimensions. The shape of `normalized_shape` is only used to determine the dimensions to aggregate over. The actual values of `normalized_shape` are used when `elementwise_affine=True` to initialize the weights and biases.

This PR updates the docstring to clarify how `normalized_shape` is used. Here is a short script comparing the implementations for tensorflow and pytorch:

```python

import numpy as np

import torch

import torch.nn as nn

import tensorflow as tf

from tensorflow.keras.layers import LayerNormalization

rng = np.random.RandomState()

x = rng.randn(10, 20, 64, 64).astype(np.float32)

# slightly non-trivial

x[:, :10, ...] = x[:, :10, ...] * 10 + 20

x[:, 10:, ...] = x[:, 10:, ...] * 30 - 100

# Tensorflow Layer norm

x_tf = tf.convert_to_tensor(x)

layer_norm_tf = LayerNormalization(axis=[-3, -2, -1], epsilon=1e-5)

output_tf = layer_norm_tf(x_tf)

output_tf_np = output_tf.numpy()

# PyTorch Layer norm

x_torch = torch.as_tensor(x)

layer_norm_torch = nn.LayerNorm([20, 64, 64], elementwise_affine=False)

output_torch = layer_norm_torch(x_torch)

output_torch_np = output_torch.detach().numpy()

# check tensorflow and pytorch

torch.testing.assert_allclose(output_tf_np, output_torch_np)

# manual computation

manual_output = ((x_torch - x_torch.mean(dim=(-3, -2, -1), keepdims=True)) /

(x_torch.var(dim=(-3, -2, -1), keepdims=True, unbiased=False) + 1e-5).sqrt())

torch.testing.assert_allclose(output_torch, manual_output)

```

To get to the layer normalization as shown here:

<img width="157" alt="Screen Shot 2021-05-29 at 2 13 52 PM" src="https://user-images.githubusercontent.com/5402633/120080691-1e37f100-c088-11eb-9060-4f263e4cd093.png">

One needs to pass in a `normalized_shape` of length `x.dim() - 1` that contains the sizes of the channel dimension and all spatial dimensions.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59178

Reviewed By: ejguan

Differential Revision: D28931877

Pulled By: jbschlosser

fbshipit-source-id: 193e05205b9085bb190c221428c96d2ca29f2a70

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54987

Based on the prototypes by ezyang (https://github.com/pytorch/pytorch/pull/44799) and bdhirsh (https://github.com/pytorch/pytorch/pull/43702).

Here's a summary of the changes in this PR:

This PR adds a new dispatch key called Conjugate. This enables us to make the conjugate operation a view and to leverage the specialized library functions that fast-path the Hermitian operation (conj + transpose).

1. Conjugate operation will now return a view with conj bit (1) for complex tensors and returns self for non-complex tensors as before. This also means `torch.view_as_real` will no longer be a view on conjugated complex tensors and is hence disabled. To fill the gap, we have added `torch.view_as_real_physical` which would return the real tensor agnostic of the conjugate bit on the input complex tensor. The information about conjugation on the old tensor can be obtained by calling `.is_conj()` on the new tensor.

2. NEW API:

a) `.conj()` -- now returning a view.

b) `.conj_physical()` -- does the physical conjugate operation. If the conj bit for input was set, you'd get `self.clone()`, else you'll get a new tensor with conjugated value in its memory.

c) `.conj_physical_()`, and `out=` variant

d) `.resolve_conj()` -- materializes the conjugation. returns self if the conj bit is unset, else returns a new tensor with conjugated values and conj bit set to 0.

e) `.resolve_conj_()` in-place version of (d)

f) `view_as_real_physical` -- as described in (1), it's functionally same as `view_as_real`, just that it doesn't error out on conjugated tensors.

g) `view_as_real` -- existing function, but now errors out on conjugated tensors.

3. Conjugate Fallback

a) The vast majority of PyTorch functions currently use this fallback when they are called on a conjugated tensor.

b) This fallback is well equipped to handle the following cases:

- functional operation e.g., `torch.sin(input)`

- Mutable inputs and in-place operations e.g., `tensor.add_(2)`

- out-of-place operation e.g., `torch.sin(input, out=out)`

- Tensorlist input args

- NOTE: Meta tensors don't work with conjugate fallback.

4. Autograd

a) `resolve_conj()` is an identity function w.r.t. autograd

b) Everything else works as expected.

5. Testing:

a) All method_tests run with conjugate view tensors.

b) OpInfo tests that run with conjugate views

- test_variant_consistency_eager/jit

- gradcheck, gradgradcheck

- test_conj_views (that only run for `torch.cfloat` dtype)

NOTE: functions like `empty_like`, `zeros_like`, `randn_like`, `clone` don't propagate the conjugate bit.
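The idea of a lazy conjugate bit can be sketched with a toy class. This is a simplified illustration under stated assumptions, not PyTorch's dispatcher-based implementation: `LazyConjTensor` and its attributes are hypothetical names, and only `conj()`, `is_conj()` and `resolve_conj()` are modeled.

```python
class LazyConjTensor:
    """Toy 1-D complex "tensor" with a lazy conjugate bit (illustrative only)."""

    def __init__(self, storage, conj_bit=False):
        self._storage = storage    # list of complex numbers, shared between views
        self._conj_bit = conj_bit  # True means "read values as conjugated"

    def is_conj(self):
        return self._conj_bit

    def conj(self):
        # O(1) view: shares the storage and only flips the bit.
        return LazyConjTensor(self._storage, not self._conj_bit)

    def resolve_conj(self):
        # Returns self if the bit is unset; otherwise materializes the
        # conjugated values into new storage with the bit cleared.
        if not self._conj_bit:
            return self
        return LazyConjTensor([v.conjugate() for v in self._storage], False)

    def tolist(self):
        # Logical values, taking the conjugate bit into account.
        if self._conj_bit:
            return [v.conjugate() for v in self._storage]
        return list(self._storage)


x = LazyConjTensor([1 + 2j, 3 - 4j])
y = x.conj()          # view with the conj bit set, no data copied
z = y.resolve_conj()  # new storage holding the conjugated values
```

Here `y` shares storage with `x`, while `z` owns conjugated data and reports `is_conj() == False`.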

Follow up work:

1. conjugate view RFC

2. Add neg bit to re-enable view operation on conjugated tensors

3. Update linalg functions to call into specialized functions that fast path with the hermitian operation.

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D28227315

Pulled By: anjali411

fbshipit-source-id: acab9402b9d6a970c6d512809b627a290c8def5f

Summary:

Adds `is_inference` as a native function w/ manual cpp bindings.

Also changes instances of `is_inference_tensor` to `is_inference` to be consistent with other properties such as `is_complex`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58729

Reviewed By: mruberry

Differential Revision: D28874507

Pulled By: soulitzer

fbshipit-source-id: 0fa6bcdc72a4ae444705e2e0f3c416c1b28dadc7

Summary:

The `UninitializedBuffer` class was previously left out of `nn.rst`, so it was not included in the generated documentation.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59021

Reviewed By: anjali411

Differential Revision: D28723044

Pulled By: jbschlosser

fbshipit-source-id: 71e15b0c7fabaf57e8fbdf7fbd09ef2adbdb36ad

Summary:

Adds a note explaining the difference between several often conflated mechanisms in the autograd note

Also adds a link to this note from the docs in `grad_mode` and `nn.module`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58513

Reviewed By: gchanan

Differential Revision: D28651129

Pulled By: soulitzer

fbshipit-source-id: af9eb1749b641fc1b632815634eea36bf7979156

Summary:

This PR introduces a helper function named `torch.nn.utils.skip_init()` that accepts a module class object + `args` / `kwargs` and instantiates the module while skipping initialization of parameter / buffer values. See discussion at https://github.com/pytorch/pytorch/issues/29523 for more context. Example usage:

```python

import torch

m = torch.nn.utils.skip_init(torch.nn.Linear, 5, 1)

print(m.weight)

m2 = torch.nn.utils.skip_init(torch.nn.Linear, 5, 1, device='cuda')

print(m2.weight)

m3 = torch.nn.utils.skip_init(torch.nn.Linear, in_features=5, out_features=1)

print(m3.weight)

```

```

Parameter containing:

tensor([[-3.3011e+28, 4.5915e-41, -3.3009e+28, 4.5915e-41, 0.0000e+00]],

requires_grad=True)

Parameter containing:

tensor([[-2.5339e+27, 4.5915e-41, -2.5367e+27, 4.5915e-41, 0.0000e+00]],

device='cuda:0', requires_grad=True)

Parameter containing:

tensor([[1.4013e-45, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00]],

requires_grad=True)

```

Bikeshedding on the name / namespace is welcome, as well as comments on the design itself - just wanted to get something out there for discussion.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57555

Reviewed By: zou3519

Differential Revision: D28640613

Pulled By: jbschlosser

fbshipit-source-id: 5654f2e5af5530425ab7a9e357b6ba0d807e967f

Summary:

Will not land before the release, but would be good to have this function documented in master for its use in distributed debuggability.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58322

Reviewed By: SciPioneer

Differential Revision: D28595405

Pulled By: rohan-varma

fbshipit-source-id: fb00fa22fbe97a38c396eae98a904d1c4fb636fa

Summary:

Temporary fix for https://github.com/pytorch/pytorch/issues/42218.

Numerically, grid_sampler should be fine in fp32 or fp16. So grid_sampler really belongs on the promote list. But performancewise, native grid_sampler backward kernels use gpuAtomicAdd, which is notoriously slow in fp16. So the simplest functionality fix is to put grid_sampler on the fp32 list.

In https://github.com/pytorch/pytorch/pull/58618 I implement the right long-term fix (refactoring kernels to use fp16-friendly fastAtomicAdd and moving grid_sampler to the promote list). But that's more invasive, and for 1.9 ngimel says this simple temporary fix is preferred.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58679

Reviewed By: soulitzer

Differential Revision: D28576559

Pulled By: ngimel

fbshipit-source-id: d653003f37eaedcbb3eaac8d7fec26c343acbc07

Summary:

* Fix lots of links.

* Minor improvements for consistency, clarity or grammar.

* Update jit_python_reference to note the limitations on __exit__.

(Related to https://github.com/pytorch/pytorch/issues/41420).

* Fix a comment in exit_transforms.cpp: removed the word "not" which

made the comment say the opposite of the truth.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57991

Reviewed By: malfet

Differential Revision: D28522247

Pulled By: SplitInfinity

fbshipit-source-id: fc63a59d19ea6c89f957c9f7d451be17d1c5fc91

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58056

This PR addresses an action item in #3428: disabling search engine

indexing of master documentation. This is desirable because we want to

direct users to our stable documentation (instead of master

documentation) because they are more likely to have a stable version of

PyTorch installed.

Test Plan:

1. run `make html`, check that the noindex tags are there

2. run `make html-stable`, check that the noindex tags aren't there

Reviewed By: bdhirsh

Differential Revision: D28490504

Pulled By: zou3519

fbshipit-source-id: 695c944c4962b2bd484dd7a5e298914a37abe787

Summary:

Added a simple section indicating that distributed profiling is expected to work similarly to other torch operators, and is supported for all communication backends out of the box.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58286

Reviewed By: bdhirsh

Differential Revision: D28436489

Pulled By: rohan-varma

fbshipit-source-id: ce1905a987c0ede8011e8086a2c30edc777b4a38

Summary:

Adds a new file under `torch/nn/utils/parametrizations.py` which should contain all the parametrization implementations

For spectral_norm we add the `SpectralNorm` module which can be registered using `torch.nn.utils.parametrize.register_parametrization` or using a wrapper: `spectral_norm`, the same API the old implementation provided.

Most of the logic is borrowed from the old implementation:

- Just like the old implementation, there should be cases when retrieving the weight should perform another power iteration (thus updating the weight) and cases where it shouldn't. For example, in eval mode (`self.training=False`), we do not perform power iteration.
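As a rough illustration of the train/eval distinction, the following pure-Python sketch runs power iteration only while `training` is true. `ToySpectralNorm` and its helpers are hypothetical names, the deterministic initial vector is an assumption made for reproducibility, and none of this is the code added by the PR.

```python
import math


def _normalize(vec, eps=1e-12):
    norm = math.sqrt(sum(c * c for c in vec))
    return [c / max(norm, eps) for c in vec]


def _matvec(mat, vec):
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]


def _transpose(mat):
    return [list(col) for col in zip(*mat)]


class ToySpectralNorm:
    """Divides a weight matrix by an estimate of its largest singular value."""

    def __init__(self, weight, n_power_iterations=1):
        self.training = True
        self.n_power_iterations = n_power_iterations
        # Assumption: deterministic start vectors instead of random ones.
        self.u = _normalize([1.0] * len(weight))
        self.v = _normalize(_matvec(_transpose(weight), self.u))

    def _power_iteration(self, weight):
        weight_t = _transpose(weight)
        for _ in range(self.n_power_iterations):
            self.v = _normalize(_matvec(weight_t, self.u))
            self.u = _normalize(_matvec(weight, self.v))

    def forward(self, weight):
        if self.training:  # in eval mode u and v are left untouched
            self._power_iteration(weight)
        sigma = sum(a * b for a, b in zip(self.u, _matvec(weight, self.v)))
        return [[w / sigma for w in row] for row in weight]


W = [[3.0, 0.0], [0.0, 1.0]]  # largest singular value is 3
sn = ToySpectralNorm(W)
for _ in range(30):
    normalized = sn.forward(W)  # training mode: each call refines u and v
```

After enough training-mode calls the estimate converges, so `normalized` approaches `W / 3`; switching `sn.training` to `False` freezes `u` and `v`.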

There are also some differences/difficulties with the new implementation:

- Using the new parametrization functionality as-is, there doesn't seem to be a good way to tell whether a 'forward' call was triggered because parametrizations were being unregistered (with leave_parametrizations=True) or because the injected property's getter was invoked. The issue is that we want to perform power iteration in the latter case but not the former, but we don't have this control as-is. So, in this PR I modified the parametrization functionality to change the module to eval mode before triggering their forward call.

- Updates the vectors based on weight on initialization to fix https://github.com/pytorch/pytorch/issues/51800 (this avoids silently updating weights in eval mode). This also means that we perform twice as many power iterations by the first forward call.

- right_inverse is just the identity for now, but maybe it should assert that the passed value already satisfies the constraints

- So far, all the old spectral_norm tests have been cloned, but maybe we don't need so much testing now that the core functionality is already well tested

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57784

Reviewed By: ejguan

Differential Revision: D28413201

Pulled By: soulitzer

fbshipit-source-id: e8f1140f7924ca43ae4244c98b152c3c554668f2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58039

The new function has the following signature

`inv_ex(Tensor input, *, bool check_errors=False) -> (Tensor inverse, Tensor info)`.

When `check_errors=True`, an error is thrown if the matrix is not invertible; when `check_errors=False`, the responsibility for checking the result is on the user.

`linalg_inv` is implemented using calls to `linalg_inv_ex` now.
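The `(result, info)` contract can be illustrated with a small pure-Python Gauss-Jordan inverse. `toy_inv_ex` is a hypothetical stand-in, not the PyTorch function; the convention that a positive `info` reports the 1-based column where no nonzero pivot was found is an assumption of this sketch.

```python
def toy_inv_ex(matrix, check_errors=False):
    """Return (inverse, info); info == 0 means the inversion succeeded."""
    n = len(matrix)
    # Augmented matrix [A | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    info = 0
    for col in range(n):
        # Partial pivoting: pick the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if aug[pivot][col] == 0.0:
            info = col + 1  # singular: stop and report where it failed
            break
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    if check_errors and info != 0:
        raise RuntimeError(f"matrix is not invertible (info={info})")
    # When info != 0 the returned values are meaningless; the caller must check.
    return [row[n:] for row in aug], info


inverse, info = toy_inv_ex([[4.0, 7.0], [2.0, 6.0]])
_, singular_info = toy_inv_ex([[1.0, 2.0], [2.0, 4.0]])  # no exception raised
```

With `check_errors=False` the singular case returns silently with a nonzero `info`, which is exactly why the caller has to inspect it.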

Resolves https://github.com/pytorch/pytorch/issues/25095

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D28405148

Pulled By: mruberry

fbshipit-source-id: b8563a6c59048cb81e206932eb2f6cf489fd8531

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56608

- Adds binding to the `c10::InferenceMode` RAII class in `torch._C._autograd.InferenceMode` through pybind. Also binds the `torch.is_inference_mode` function.

- Adds context manager `torch.inference_mode` to manage an instance of `c10::InferenceMode` (global). Implemented in `torch.autograd.grad_mode.py` to reuse the `_DecoratorContextManager` class.

- Adds some tests based on those linked in the issue + several more for just the context manager

Issues/todos (not necessarily for this PR):

- Improve short inference mode description

- Small example

- Improved testing since there is no direct way of checking TLS/dispatch keys

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58045

Reviewed By: agolynski

Differential Revision: D28390595

Pulled By: soulitzer

fbshipit-source-id: ae98fa036c6a2cf7f56e0fd4c352ff804904752c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58160

This PR updates the Torch Distributed Elastic documentation with references to the new `c10d` backend.

ghstack-source-id: 128783809

Test Plan: Visually verified the correct

Reviewed By: tierex

Differential Revision: D28384996

fbshipit-source-id: a40b0c37989ce67963322565368403e2be5d2592

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57341

Require that users be explicit about what they are going to be

interning. There are a lot of changes that are enabled by this. The new

overall scheme is:

PackageExporter maintains a dependency graph. Users can add to it,

either explicitly (by issuing a `save_*` call) or implicitly (through

dependency resolution). Users can also specify what action to take when

PackageExporter encounters a module (deny, intern, mock, extern).

Nothing (except pickles, though that can be changed with a small amount

of work) is written to the zip archive until we are finalizing the

package. At that point, we consult the dependency graph and write out

the package exactly as it tells us to.

This accomplishes two things:

1. We can gather up *all* packaging errors instead of showing them one at a time.

2. We require that users be explicit about what's going in packages, which is a common request.
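The scheme above can be sketched as a toy exporter that records dependencies and actions and only validates and writes at finalization. `ToyExporter`, `add_module`, `set_action` and `finalize` are hypothetical names chosen for illustration; they are not the `PackageExporter` API.

```python
class ToyExporter:
    """Collects a dependency graph and defers all writing to finalize()."""

    ACTIONS = {"intern", "extern", "mock", "deny"}

    def __init__(self):
        self.dependencies = {}  # module name -> set of modules it imports
        self.actions = {}       # module name -> action chosen by the user
        self.written = []       # stands in for the zip archive

    def add_module(self, name, imports=()):
        # Explicit addition; the imports become implicit graph nodes.
        self.dependencies.setdefault(name, set()).update(imports)
        for dep in imports:
            self.dependencies.setdefault(dep, set())

    def set_action(self, name, action):
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action}")
        self.actions[name] = action

    def finalize(self):
        # Gather *all* problems before reporting, instead of failing on the first.
        errors = []
        for name in sorted(self.dependencies):
            action = self.actions.get(name)
            if action is None:
                errors.append(f"{name}: no action specified")
            elif action == "deny":
                errors.append(f"{name}: module is denied")
        if errors:
            raise RuntimeError("packaging failed:\n" + "\n".join(errors))
        for name in sorted(self.dependencies):
            self.written.append((name, self.actions[name]))


exporter = ToyExporter()
exporter.add_module("my_model", imports=["numpy", "helpers"])
exporter.set_action("my_model", "intern")
```

Calling `exporter.finalize()` at this point raises a single error that lists both `helpers` and `numpy`, and nothing has been written; once every module has an action, finalization writes the whole package in one pass.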

Differential Revision: D28114185

Test Plan: Imported from OSS

Reviewed By: SplitInfinity

Pulled By: suo

fbshipit-source-id: fa1abf1c26be42b14c7e7cf3403ecf336ad4fc12

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58170

Comm hooks can now be supported on the MPI and GLOO backends in addition to NCCL, so these warnings and checks are no longer needed.

ghstack-source-id: 128799123

Test Plan: N/A

Reviewed By: agolynski

Differential Revision: D28388861

fbshipit-source-id: f56a7b9f42bfae1e904f58cdeccf7ceefcbb0850

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58023

Clearly state that some features of RPC aren't yet compatible with CUDA.

ghstack-source-id: 128688856

Test Plan: None

Reviewed By: agolynski

Differential Revision: D28347605

fbshipit-source-id: e8df9a4696c61a1a05f7d2147be84d41aeeb3b48

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50048

To reflect the many changes introduced recently.

In my mind, CUDAFuture should be considered a "private" subclass, which in practice should always be returned as a downcast pointer to an ivalue::Future. Hence, we should document the CUDA behavior in the superclass, even if it's CUDA-agnostic, since that's the interface the users will see also for CUDA-enabled futures.

ghstack-source-id: 128640983

Test Plan: Built locally and looked at them.

Reviewed By: mrshenli

Differential Revision: D25757474

fbshipit-source-id: c6f66ba88fa6c4fc33601f31136422d6cf147203

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57965

The bold effect does not work under quotes, so move it out.

ghstack-source-id: 128570357

Test Plan:

locally view

{F614715259}

Reviewed By: rohan-varma

Differential Revision: D28329694

fbshipit-source-id: 299b427f4c0701ba70c84148f65203a6e2d6ac61

Summary:

This PR is focused on the API for `linalg.matrix_norm` and delegates computations to `linalg.norm` for the moment.

The main difference between the norms is when `dim=None`. In this case

- `linalg.norm` will compute a vector norm on the flattened input if `ord=None`, otherwise it requires the input to be either 1D or 2D in order to disambiguate between vector and matrix norm

- `linalg.vector_norm` will flatten the input

- `linalg.matrix_norm` will compute the norm over the last two dimensions, treating the input as a batch of matrices
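The difference between flattening and batching can be shown with plain nested lists. This is only a sketch: `toy_vector_norm` and `toy_matrix_norm` are hypothetical helpers, and the choice of the 2-norm and the Frobenius norm as the respective defaults is an assumption of the example.

```python
import math


def _flatten(t):
    if not isinstance(t, list):
        return [t]
    return [v for sub in t for v in _flatten(sub)]


def toy_vector_norm(x):
    """2-norm of every element of x, ignoring its shape (input is flattened)."""
    return math.sqrt(sum(v * v for v in _flatten(x)))


def toy_matrix_norm(batch):
    """Frobenius norm of each matrix in a batch of shape (B, M, N)."""
    return [math.sqrt(sum(v * v for row in mat for v in row)) for mat in batch]


batch = [[[3.0, 4.0], [0.0, 0.0]],
         [[0.0, 0.0], [5.0, 12.0]]]
flat_norm = toy_vector_norm(batch)     # one scalar for the whole input
per_matrix = toy_matrix_norm(batch)    # one value per matrix in the batch
```

For this input the batched result is `[5.0, 13.0]`, whereas the flattened norm is the single value `sqrt(194)`.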

In future PRs, the computations will be moved to `torch.linalg.matrix_norm` and `torch.norm` and `torch.linalg.norm` will delegate computations to either `linalg.vector_norm` or `linalg.matrix_norm` based on the arguments provided.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57127

Reviewed By: mrshenli

Differential Revision: D28186736

Pulled By: mruberry

fbshipit-source-id: 99ce2da9d1c4df3d9dd82c0a312c9570da5caf25

Summary:

Redo of https://github.com/pytorch/pytorch/issues/56373 out of stack.

---

To reviewers: **please be nitpicky**. I've read this so often that I probably missed some typos and inconsistencies.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57247

Reviewed By: albanD

Differential Revision: D28247402

Pulled By: mruberry

fbshipit-source-id: 71142678ee5c82cc8c0ecc1dad6a0b2b9236d3e6

Summary:

Pull Request resolved: https://github.com/pytorch/elastic/pull/148

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56811

Moves the Sphinx `*.rst` docs files from the torchelastic repository to torch. Note: this only moves the rst files; the next step is to link them to the main pytorch `index.rst` and write a new `examples.rst`.

Reviewed By: H-Huang

Differential Revision: D27974751

fbshipit-source-id: 8ff9f242aa32e0326c37da3916ea0633aa068fc5

Summary:

Fixes https://github.com/pytorch/pytorch/issues/54555

It has been discussed in the issue https://github.com/pytorch/pytorch/issues/54555 that the {h,v,d}split methods unexpectedly match a single int[] argument when they are expected to match a single int argument. The same unexpected behavior can happen in other functions/methods that accept both int[] and int? as single-argument signatures.

In this PR we solve this problem by giving higher priority to int/int? arguments over int[] while sorting signatures.
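A toy overload resolver shows why the ordering matters. Everything here is hypothetical (the `accepts` rules, the `PRIORITY` table, the signature names); in particular, the assumption that an `int[]` parameter also accepts a bare int is part of the toy model and is what makes the unsorted order pick the wrong overload.

```python
# Lower number = tried earlier. Scalars are ranked ahead of lists.
PRIORITY = {"int": 0, "int?": 0, "int[]": 1}


def accepts(param_type, value):
    """Whether a single argument value can bind to the given parameter type."""
    if param_type == "int":
        return isinstance(value, int)
    if param_type == "int?":
        return value is None or isinstance(value, int)
    if param_type == "int[]":
        # Toy assumption: a bare int is accepted as a one-element list.
        if isinstance(value, int):
            return True
        return isinstance(value, (list, tuple)) and all(
            isinstance(v, int) for v in value)
    return False


def resolve(signatures, value, prioritize_scalars=True):
    """Return the name of the first signature that accepts the value."""
    ordered = list(signatures)
    if prioritize_scalars:
        ordered.sort(key=lambda sig: PRIORITY[sig[1]])  # stable sort
    for name, param_type in ordered:
        if accepts(param_type, value):
            return name
    raise TypeError(f"no signature matches {value!r}")


signatures = [("split_at_indices", "int[]"), ("split_into_sections", "int")]
unsorted_choice = resolve(signatures, 2, prioritize_scalars=False)
sorted_choice = resolve(signatures, 2)
list_choice = resolve(signatures, [1, 3])
```

Without the priority sort, the int argument `2` binds to the `int[]` overload; with it, `2` reaches the `int` overload while a real list still selects `int[]`.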

We also add the method variants of {h,v,d}split here, which helped us discover this unexpected behavior.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57346

Reviewed By: ezyang

Differential Revision: D28121234

Pulled By: iramazanli

fbshipit-source-id: 851cf40b370707be89298177b51ceb4527f4b2d6

Summary:

The new function has the following signature: `cholesky_ex(Tensor input, *, bool check_errors=False) -> (Tensor L, Tensor infos)`. When `check_errors=True`, an error is thrown if the decomposition fails; when `check_errors=False`, the responsibility for checking the decomposition is on the user.

When `check_errors=False`, we don't have host-device memory transfers for checking the values of the `info` tensor.

Rewrote the internal code for `torch.linalg.cholesky`. Added `cholesky_stub` dispatch. `linalg_cholesky` is implemented using calls to `linalg_cholesky_ex` now.
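The same "return a status instead of raising" pattern can be sketched for a Cholesky factorization in pure Python. `toy_cholesky_ex` is a hypothetical helper, not the PyTorch kernel; reporting the 1-based order of the first leading minor that is not positive definite is an assumption modeled on the usual LAPACK convention.

```python
import math


def toy_cholesky_ex(a, check_errors=False):
    """Return (L, info) with a == L @ L^T when info == 0."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    info = 0
    for j in range(n):
        diag = a[j][j] - sum(lower[j][k] ** 2 for k in range(j))
        if diag <= 0.0:
            info = j + 1  # leading minor of order j + 1 is not positive definite
            break
        lower[j][j] = math.sqrt(diag)
        for i in range(j + 1, n):
            off = a[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            lower[i][j] = off / lower[j][j]
    if check_errors and info != 0:
        raise RuntimeError(f"decomposition failed (info={info})")
    return lower, info


factor, info = toy_cholesky_ex([[4.0, 2.0], [2.0, 3.0]])
_, failed_info = toy_cholesky_ex([[1.0, 2.0], [2.0, 1.0]])  # not positive definite
```

Because the failure is encoded in `info`, a caller that does not need an exception can keep the status alongside the data and inspect it later.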

Resolves https://github.com/pytorch/pytorch/issues/57032.

Ref. https://github.com/pytorch/pytorch/issues/34272, https://github.com/pytorch/pytorch/issues/47608, https://github.com/pytorch/pytorch/issues/47953

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56724

Reviewed By: ngimel

Differential Revision: D27960176

Pulled By: mruberry

fbshipit-source-id: f05f3d5d9b4aa444e41c4eec48ad9a9b6fd5dfa5

Summary:

You can find the latest rendered version in the `python_doc_build` CI job below, in the artifact tab of that build on CircleCI.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55966

Reviewed By: H-Huang

Differential Revision: D28032446

Pulled By: albanD

fbshipit-source-id: 227ad37b03d39894d736c19cae3195b4d56fc62f

Summary:

This PR tries to make the docs of `torch.linalg` have/be:

- More uniform notation and structure for every function.

- More uniform use of back-quotes and the `:attr:` directive

- More readable for a non-specialised audience through explanations of the form that factorisations take and of when it would be beneficial to use which arguments in some solvers.

- More connected among the different functions through the use of the `.. seealso::` directive.

- More information on when gradients explode / when a function silently returns a wrong result / when things do not work in general

I tried to follow the structure of "one short description and then the rest" to be able to format the docs like those of `torch.` or `torch.nn`. I did not do that yet, as I am waiting for the green light on this idea:

https://github.com/pytorch/pytorch/issues/54878#issuecomment-816636171

What this PR does not do:

- Clean the documentation of other functions that are not in the `linalg` module (although I started doing this for `torch.svd`, but then I realised that this PR would touch way too many functions).

Fixes https://github.com/pytorch/pytorch/issues/54878

cc mruberry IvanYashchuk

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56265

Reviewed By: H-Huang

Differential Revision: D27993986

Pulled By: mruberry

fbshipit-source-id: adde7b7383387e1213cc0a6644331f0632b7392d

Summary:

No outstanding issue, can create it if needed.

Was looking for that resource and it was moved without fixing the documentation.

Cheers

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56776

Reviewed By: heitorschueroff

Differential Revision: D27967020

Pulled By: ezyang

fbshipit-source-id: a5cd7d554da43a9c9e44966ccd0b0ad9eef2948c

Summary:

In the optimizer documentation, many of the learning rate scheduler [examples](https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate) follow a generic template. In this PR we provide a simple, concrete use case showing how to use learning rate schedulers. Moreover, a follow-up example shows how to chain two schedulers one after the other.
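What chaining means can be simulated without any optimizer. The sketch below is a toy model, not the `torch.optim.lr_scheduler` API: `exponential_factor`, `multistep_factor` and `simulate` are hypothetical helpers, and it assumes each scheduler multiplies the learning rate left by the previous one.

```python
def exponential_factor(gamma):
    """Scheduler that multiplies the learning rate by gamma every epoch."""
    return lambda epoch: gamma


def multistep_factor(milestones, gamma):
    """Scheduler that multiplies by gamma only when a milestone epoch is reached."""
    return lambda epoch: gamma if epoch in milestones else 1.0


def simulate(base_lr, schedulers, num_epochs):
    """Apply every scheduler in order after each epoch and record the result."""
    lr = base_lr
    history = [lr]
    for epoch in range(1, num_epochs + 1):
        for factor in schedulers:
            lr *= factor(epoch)  # each scheduler acts on the previous one's output
        history.append(lr)
    return history


history = simulate(
    base_lr=0.1,
    schedulers=[exponential_factor(0.9), multistep_factor({3}, 0.1)],
    num_epochs=4,
)
```

In this run the rate decays by 0.9 per epoch and takes an additional 10x drop at epoch 3, so the two schedules compose multiplicatively.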

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56705

Reviewed By: ezyang

Differential Revision: D27966704

Pulled By: iramazanli

fbshipit-source-id: f32b2d70d5cad7132335a9b13a2afa3ac3315a13

Summary:

The pre-amble here is misformatted at least and is hard to make sense of: https://pytorch.org/docs/master/quantization.html#prototype-fx-graph-mode-quantization

This PR is trying to make things easier to understand.

As I'm new to this please verify that my modifications remain in line with what may have been meant originally.

Thanks.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52192

Reviewed By: ailzhang

Differential Revision: D27941730

Pulled By: vkuzo

fbshipit-source-id: 6c4bbf7c87d8fb87ab5d588b690a72045752e47a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56528

Tried to search across internal and external usage of DataLoader. People haven't started to use `generator` for `DataLoader`.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D27908487

Pulled By: ejguan

fbshipit-source-id: 14c83ed40d4ba4dc988b121968a78c2732d8eb93

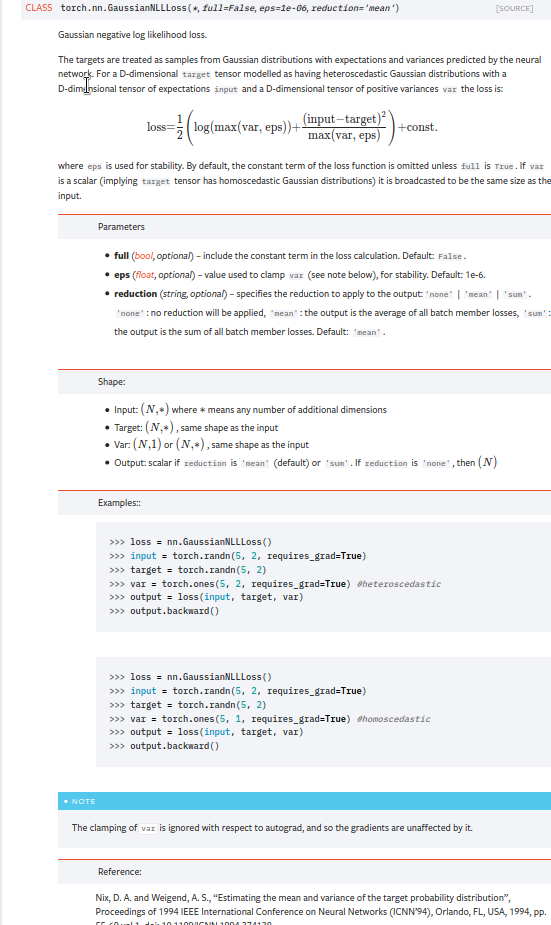

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>). This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>).`
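The numbers in this example can be reproduced by hand. The sketch below assumes the per-element loss is `0.5 * (log(var) + (input - target)**2 / var)` (no constant term, variance clamped by a small `eps`); `gaussian_nll_none` is a hypothetical pure-Python helper, not the library function.

```python
import math


def gaussian_nll_none(inputs, targets, variances, eps=1e-6):
    """Element-wise Gaussian negative log likelihood, i.e. reduction='none'."""
    return [
        [0.5 * (math.log(max(v, eps)) + (x - t) ** 2 / max(v, eps))
         for x, t, v in zip(row_x, row_t, row_v)]
        for row_x, row_t, row_v in zip(inputs, targets, variances)
    ]


inputs = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
targets = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
variances = [[2.0] * 3, [2.0] * 3]

loss = gaussian_nll_none(inputs, targets, variances)  # shape (2, 3)
old_style = [sum(row) for row in loss]                # shape (2,), the old output
```

The element-wise values round to `[[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]]`, and summing each row recovers `[3.7897, 6.5397]`.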

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56547

**Summary**

This commit tweaks the docstrings of `PackageExporter` so that they look

nicer on the docs website.

**Test Plan**

Continuous integration.

Test Plan: Imported from OSS

Reviewed By: ailzhang

Differential Revision: D27912965

Pulled By: SplitInfinity

fbshipit-source-id: 38c0a715365b8cfb9eecdd1b38ba525fa226a453

Summary:

This PR fixes the formatting issues in the new language reference

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56042

Reviewed By: gmagogsfm

Differential Revision: D27830179

Pulled By: nikithamalgifb

fbshipit-source-id: bce3397d4de3f1536a1a8f0a16f10a703e7d4406

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Add `i0e`

* Move some kernels from `UnaryOpsKernel.cu` to `UnarySpecialOpsKernel.cu` to decrease compilation time per file.

Time taken by i0e_vs_scipy tests: around 6.33 s

<details>

<summary>Test Run Log</summary>

```

(pytorch-cuda-dev) kshiteej@qgpu1:~/Pytorch/pytorch_module_special$ pytest test/test_unary_ufuncs.py -k _i0e_vs

======================================================================= test session starts ========================================================================

platform linux -- Python 3.8.6, pytest-6.1.2, py-1.9.0, pluggy-0.13.1

rootdir: /home/kshiteej/Pytorch/pytorch_module_special, configfile: pytest.ini

plugins: hypothesis-5.38.1

collected 8843 items / 8833 deselected / 10 selected

test/test_unary_ufuncs.py ...sss.... [100%]

========================================================================= warnings summary =========================================================================

../../.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73

test/test_unary_ufuncs.py::TestUnaryUfuncsCUDA::test_special_i0e_vs_scipy_cuda_bfloat16

/home/kshiteej/.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73: UserWarning: PyTorch was compiled without cuDNN/MIOpen support. To use cuDNN/MIOpen, rebuild PyTorch making sure the library is visible to the build system.

warnings.warn(

-- Docs: https://docs.pytest.org/en/stable/warnings.html

===================================================================== short test summary info ======================================================================

SKIPPED [3] test/test_unary_ufuncs.py:1182: not implemented: Could not run 'aten::_copy_from' with arguments from the 'Meta' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::_copy_from' is only available for these backends: [BackendSelect, Named, InplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, UNKNOWN_TENSOR_TYPE_ID, AutogradMLC, AutogradNestedTensor, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, Tracer, Autocast, Batched, VmapMode].

BackendSelect: fallthrough registered at ../aten/src/ATen/core/BackendSelectFallbackKernel.cpp:3 [backend fallback]

Named: registered at ../aten/src/ATen/core/NamedRegistrations.cpp:7 [backend fallback]

InplaceOrView: fallthrough registered at ../aten/src/ATen/core/VariableFallbackKernel.cpp:56 [backend fallback]

AutogradOther: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCPU: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCUDA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradXLA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

UNKNOWN_TENSOR_TYPE_ID: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradMLC: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradNestedTensor: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse1: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse2: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse3: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

Tracer: registered at ../torch/csrc/autograd/generated/TraceType_4.cpp:9348 [kernel]

Autocast: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:250 [backend fallback]

Batched: registered at ../aten/src/ATen/BatchingRegistrations.cpp:1016 [backend fallback]

VmapMode: fallthrough registered at ../aten/src/ATen/VmapModeRegistrations.cpp:33 [backend fallback]

==================================================== 7 passed, 3 skipped, 8833 deselected, 2 warnings in 6.33s =====================================================

```

</details>

TODO:

* [x] Check rendered docs (https://11743402-65600975-gh.circle-artifacts.com/0/docs/special.html)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54409

Reviewed By: jbschlosser

Differential Revision: D27760472

Pulled By: mruberry

fbshipit-source-id: bdfbcaa798b00c51dc9513c34626246c8fc10548

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Use autosummary instead of autofunction to create subpages for optim and cuda functions/classes.

Also fix some minor formatting issues in optim.LBFGS and cuda.stream docstrings.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55673

Reviewed By: jbschlosser

Differential Revision: D27747741

Pulled By: zou3519

fbshipit-source-id: 070681f840cdf4433a44af75be3483f16e5acf7d

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Use autosummary instead of autofunction to create subpages for autograd functions. I left the autoclass parts intact but manually laid out their members.

Also, the LaTeX formatting of the special page emitted a warning (solved by adding `\begin{align}...\end{align}`), and the alignment of equations was fixed (by using `&=` instead of `=`).

zou3519

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55672

Reviewed By: jbschlosser

Differential Revision: D27736855

Pulled By: zou3519

fbshipit-source-id: addb56f4f81c82d8537884e0ff243c1e34969a6e

Summary:

Fixes https://github.com/pytorch/pytorch/issues/35901

This change is designed to prevent fragmentation in the Caching Allocator. Permissive block splitting in the allocator allows very large blocks to be split into many pieces. Once a block is split too finely, it is unlikely that all pieces will be 'free' at the same time, so the original allocation can never be returned. Anecdotally, we've seen a model run out of memory failing to allocate a 50 MB block on a 32 GB card while the caching allocator was holding 13 GB of 'split free blocks'.

Approach:

- Large blocks above a certain size are designated "oversize". This limit is currently set one decade above "large", at 200 MB

- Oversize blocks cannot be split

- Oversize blocks must closely match the requested size (e.g. a 200 MB request will match an existing 205 MB block, but not a 300 MB block)

- In lieu of splitting oversize blocks there is a mechanism to quickly free a single oversize block (to the system allocator) to allow an appropriate size block to be allocated. This will be activated under memory pressure and will prevent _release_cached_blocks()_ from triggering
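The matching rules above can be sketched in plain Python. This is only an illustration of the policy as described, not the allocator's actual code: the function name `pick_block` and the 20 MB tolerance used for "closely match" are assumptions made for the example; only the 200 MB oversize limit comes from the description.

```python
OVERSIZE_MB = 200   # oversize limit named in the description above
TOLERANCE_MB = 20   # hypothetical slack for "closely match"; not from the source


def pick_block(free_blocks_mb, request_mb):
    """Return (block_size, leftover_after_split) or None if nothing fits.

    Blocks below the oversize limit may be split, so any block that is
    large enough is acceptable. Oversize blocks are never split: they are
    handed out whole, and only when they are close to the requested size.
    """
    for block in sorted(free_blocks_mb):
        if block < request_mb:
            continue
        if block < OVERSIZE_MB:
            return block, block - request_mb  # regular block: split allowed
        if block - request_mb <= TOLERANCE_MB:
            return block, 0  # oversize block: used whole, no split
    return None


print(pick_block([205, 300], 200))  # the 205 MB block is close enough
print(pick_block([300], 200))       # the 300 MB block is rejected
```

Under these assumptions a small request never carves up an oversize block, which is the fragmentation pattern the change is meant to avoid.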

Initial performance tests show this is similar or quicker than the original strategy. Additional tests are ongoing.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44742

Reviewed By: ngimel

Differential Revision: D23752058

Pulled By: ezyang

fbshipit-source-id: ccb7c13e3cf8ef2707706726ac9aaac3a5e3d5c8

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Use autosummary instead of autofunction to create subpages for `torch.fft` and `torch.linalg` functions.

zou3519

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55748

Reviewed By: jbschlosser

Differential Revision: D27739282

Pulled By: heitorschueroff

fbshipit-source-id: 37aa06cb8959721894ffadc15ae8c3b83481a319

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55817

**Summary**

This commit makes minor edits to the docstrings of `PackageExporter` so that they render properly in the `torch.package` API reference.

**Test Plan**

Continuous integration (especially the docs tests).

Test Plan: Imported from OSS

Reviewed By: gmagogsfm

Differential Revision: D27726817

Pulled By: SplitInfinity

fbshipit-source-id: b81276d7278f586fceded83d23cb4d0532f7c629

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55812

**Summary**

This commit creates a barebones API reference doc for `torch.package`. The content is sourced from the docstrings in the `torch.package` source.

**Test Plan**

Continuous integration (specifically the docs tests).

Test Plan: Imported from OSS

Reviewed By: gmagogsfm

Differential Revision: D27726816

Pulled By: SplitInfinity

fbshipit-source-id: 5e9194536f80507e337b81c5ec3b5635d7121818

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Alias for sigmoid and logit

* Adds out variant for C++ API

* Updates docs to link back to `special` documentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54759

Reviewed By: mrshenli

Differential Revision: D27615208

Pulled By: mruberry

fbshipit-source-id: 8bba908d1bea246e4aa9dbadb6951339af353556

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Splits torch.nn.functional into a table-of-contents page and many sub-pages, one for each function

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55038

Reviewed By: gchanan

Differential Revision: D27502677

Pulled By: zou3519

fbshipit-source-id: 38e450a0fee41c901eb56f94aee8a32f4eefc807

Summary:

This PR adds `torch.linalg.eig`, and `torch.linalg.eigvals` for NumPy compatibility.

MAGMA uses a hybrid CPU-GPU algorithm and doesn't have a GPU interface for the non-symmetric eigendecomposition. This forces us to transfer inputs living in GPU memory to the CPU before calling MAGMA, and then transfer results from MAGMA to CPU. That is rather slow for smaller matrices, and MAGMA is faster than the CPU path only for matrices larger than 3000x3000.

Unfortunately, there is no cuSOLVER function for this operation.

Autograd support for `torch.linalg.eig` will be added in a follow-up PR.

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52491

Reviewed By: anjali411

Differential Revision: D27563616

Pulled By: mruberry

fbshipit-source-id: b42bb98afcd2ed7625d30bdd71cfc74a7ea57bb5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55253

Previously, DDP communication hooks took a tensor list as input. Now they take only a single tensor, in preparation for retiring SPMD and providing only a single model replica to DDP communication hooks.

The next step is to limit Reducer to a single model replica.

ghstack-source-id: 125677637

Test Plan: waitforbuildbot

Reviewed By: zhaojuanmao

Differential Revision: D27533898

fbshipit-source-id: 5db92549c440f33662cf4edf8e0a0fd024101eae

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55031

It turns out that PowerSGD hooks work with PyTorch's native AMP package, but not with the Apex AMP package, which can somehow mutate gradients during the execution of communication hooks.

{F561544045}

ghstack-source-id: 125268206

Test Plan:

Used native amp backend for the same pytext model and worked:

f261564342

f261561664

Reviewed By: rohan-varma

Differential Revision: D27436484

fbshipit-source-id: 2b63eb683ce373f9da06d4d224ccc5f0a3016c88

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52859

This reverts commit 92a4ee1cf6.

Added support for bfloat16 for CUDA 11 and removed fast-path for empty input tensors that was affecting autograd graph.

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision: D27402390

Pulled By: heitorschueroff

fbshipit-source-id: 73c5ccf54f3da3d29eb63c9ed3601e2fe6951034

Summary:

*Context:* https://github.com/pytorch/pytorch/issues/53406 added a lint for trailing whitespace at the ends of lines. However, in order to pass FB-internal lints, that PR also had to normalize the trailing newlines in four of the files it touched. This PR adds an OSS lint to normalize trailing newlines.

The changes to the following files (made in 54847d0adb9be71be4979cead3d9d4c02160e4cd) are the only manually-written parts of this PR:

- `.github/workflows/lint.yml`

- `mypy-strict.ini`

- `tools/README.md`

- `tools/test/test_trailing_newlines.py`

- `tools/trailing_newlines.py`

I would have liked to make this just a shell one-liner like the other three similar lints, but nothing I could find quite fit the bill. Specifically, all the answers I tried from the following Stack Overflow questions were far too slow (at least a minute and a half to run on this entire repository):

- [How to detect file ends in newline?](https://stackoverflow.com/q/38746)

- [How do I find files that do not end with a newline/linefeed?](https://stackoverflow.com/q/4631068)

- [How to list all files in the Git index without newline at end of file](https://stackoverflow.com/q/27624800)

- [Linux - check if there is an empty line at the end of a file [duplicate]](https://stackoverflow.com/q/34943632)

- [git ensure newline at end of each file](https://stackoverflow.com/q/57770972)

To avoid giving false positives during the few days after this PR is merged, we should probably only merge it after https://github.com/pytorch/pytorch/issues/54967.
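As a rough illustration of what such a lint checks, here is a minimal Python sketch. It is not the contents of `tools/trailing_newlines.py`; the function name and the rule that empty files pass are assumptions made for the example.

```python
def has_correct_trailing_newline(data: bytes) -> bool:
    """Check that file contents end with exactly one newline.

    Assumption for this sketch: an empty file is considered fine.
    """
    if not data:
        return True
    # Must end with "\n", but not with a run of two or more newlines.
    return data.endswith(b"\n") and not data.endswith(b"\n\n")


for sample in (b"ok\n", b"missing", b"too many\n\n", b""):
    print(sample, has_correct_trailing_newline(sample))
```

Only the end of the file matters for this check, so a real tool would presumably read just the last couple of bytes rather than the whole file.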

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54737

Test Plan:

Running the shell script from the "Ensure correct trailing newlines" step in the `quick-checks` job of `.github/workflows/lint.yml` should print no output and exit in a fraction of a second with a status of 0. That was not the case prior to this PR, as shown by this failing GHA workflow run on an earlier draft of this PR:

- https://github.com/pytorch/pytorch/runs/2197446987?check_suite_focus=true

In contrast, this run (after correcting the trailing newlines in this PR) succeeded:

- https://github.com/pytorch/pytorch/pull/54737/checks?check_run_id=2197553241

To unit-test `tools/trailing_newlines.py` itself (this is run as part of our "Test tools" GitHub Actions workflow):

```

python tools/test/test_trailing_newlines.py

```

Reviewed By: malfet

Differential Revision: D27409736

Pulled By: samestep

fbshipit-source-id: 46f565227046b39f68349bbd5633105b2d2e9b19

Summary:

This is to prepare for the new language reference spec, which needs to describe `torch.jit.Attribute` and `torch.jit.annotate`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54485

Reviewed By: SplitInfinity, nikithamalgifb

Differential Revision: D27406843

Pulled By: gmagogsfm

fbshipit-source-id: 98983b9df0f974ed69965ba4fcc03c1a18d1f9f5

Summary:

Reference: https://github.com/pytorch/pytorch/issues/38349

Wrapper around the existing `torch.gather` with broadcasting logic.

TODO:

* [x] Add Doc entry (see if phrasing can be improved)

* [x] Add OpInfo

* [x] Add test against numpy

* [x] Handle broadcasting behaviour and when dim is not given.
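To make "gather with broadcasting" concrete, here is a small pure-Python sketch on nested lists. It only covers a 2-D input indexed along the last dimension, and both helper names are hypothetical; it is meant to show the idea, not the semantics of the new function in full.

```python
def gather_dim1(rows, index):
    """Plain gather along dim 1: index must have one row per input row."""
    return [[row[j] for j in idx] for row, idx in zip(rows, index)]


def take_along_dim1(rows, index):
    """Gather along dim 1 after broadcasting a single index row.

    Sketch only: the one broadcasting case handled here is an index of
    shape (1, k) being expanded to (len(rows), k).
    """
    if len(index) == 1 and len(rows) > 1:
        index = index * len(rows)  # repeat the single index row
    return gather_dim1(rows, index)


data = [[10, 20, 30], [40, 50, 60]]
print(take_along_dim1(data, [[2, 0]]))  # → [[30, 10], [60, 40]]
```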

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52833

Reviewed By: malfet

Differential Revision: D27319038

Pulled By: mruberry

fbshipit-source-id: 00f307825f92c679d96e264997aa5509172f5ed1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54645

Had to replace RRef[..] with just RRef in the return signature since Sphinx seemed to completely mess up rendering RRef[..]

ghstack-source-id: 125024783

Test Plan: View locally.

Reviewed By: SciPioneer

Differential Revision: D27314609

fbshipit-source-id: 2dd9901e79f31578ac7733f79dbeb376f686ed75

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54277

alltoall is already supported in the NCCL backend, so update the doc to reflect it.

Test Plan: Imported from OSS

Reviewed By: divchenko

Differential Revision: D27172904

Pulled By: wanchaol

fbshipit-source-id: 9afa89583d56b247b2017ea2350936053eb30827

Summary:

This PR adds autograd support for `torch.orgqr`.

Since `torch.orgqr` is one of few functions that expose LAPACK's naming and all other linear algebra routines were renamed a long time ago, I also added a new function with a new name and `torch.orgqr` now is an alias for it.

The new proposed name is `householder_product`. For a matrix `input` and a vector `tau`, LAPACK's orgqr operation takes the columns of `input` (called Householder vectors or elementary reflectors) and the scalars of `tau`, which together represent Householder matrices, and computes the product of these matrices. See https://www.netlib.org/lapack/lug/node128.html.

Other linear algebra libraries that I'm aware of do not expose this LAPACK function, so there is some freedom in naming it. It is usually used internally only for QR decomposition, but can be useful for deep learning tasks now that it supports differentiation.
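The operation can be illustrated with a short pure-Python sketch. It follows the LAPACK convention that the i-th Householder vector has an implicit 1 on the diagonal, zeros above it, and the entries of column i of `input` below it, and that each reflector is `H_i = I - tau_i * v_i * v_i^T`. The function name is hypothetical, and the sketch returns the full square product rather than only its leading columns.

```python
def householder_product_sketch(A, tau):
    """Multiply the Householder matrices H_0 H_1 ... H_{k-1} (real case).

    A is an m x n nested list whose sub-diagonal column entries store the
    Householder vectors; tau holds one scalar per reflector.
    """
    m = len(A)
    # Start from the identity and accumulate Q <- Q @ H_i.
    Q = [[1.0 if r == c else 0.0 for c in range(m)] for r in range(m)]
    for i, t in enumerate(tau):
        # Implicit unit diagonal, zeros above, stored entries below.
        v = [0.0] * i + [1.0] + [A[r][i] for r in range(i + 1, m)]
        # Q @ (I - t v v^T) = Q - t (Q v) v^T
        Qv = [sum(Q[r][c] * v[c] for c in range(m)) for r in range(m)]
        for r in range(m):
            for c in range(m):
                Q[r][c] -= t * Qv[r] * v[c]
    return Q


# One reflector with v = [1, 1] and tau = 2 / (v . v) = 1.
print(householder_product_sketch([[0.0], [1.0]], [1.0]))
```

With `tau = 0` every reflector is the identity, so the product is the identity matrix as well.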

Resolves https://github.com/pytorch/pytorch/issues/50104

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52637

Reviewed By: agolynski

Differential Revision: D27114246

Pulled By: mruberry

fbshipit-source-id: 9ab51efe52aec7c137aa018c7bd486297e4111ce

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54052

Introduce `fp16_compress_wrapper`, which can give some speedup on top of some gradient compression algorithms like PowerSGD.
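The wrapping idea can be sketched without any distributed machinery: round the gradients to half precision, run the inner hook on the smaller representation, then convert the result back. The sketch below works on plain Python lists; `fp16_compress_wrapper_sketch`, `to_fp16`, and `halve` are hypothetical names for illustration and do not reflect the real hook signature.

```python
import struct


def to_fp16(values):
    """Round each float to the nearest IEEE half-precision value."""
    return [struct.unpack("e", struct.pack("e", v))[0] for v in values]


def fp16_compress_wrapper_sketch(hook):
    """Wrap a hook so it sees fp16-rounded gradients (illustration only)."""
    def wrapped(grads):
        compressed = to_fp16(grads)        # cast down before the inner hook
        result = hook(compressed)          # e.g. a compression algorithm
        return [float(x) for x in result]  # cast back to full precision
    return wrapped


def halve(grads):
    # Hypothetical stand-in for an inner hook such as PowerSGD.
    return [g / 2 for g in grads]


hook = fp16_compress_wrapper_sketch(halve)
out = hook([1.0, 0.1])
print(out)
```

The second output differs slightly from 0.05 because 0.1 is not exactly representable in half precision, which is the accuracy cost paid for the smaller payload.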

ghstack-source-id: 124001805

Test Plan: {F509205173}

Reviewed By: iseessel

Differential Revision: D27076064

fbshipit-source-id: 4845a14854cafe2112c0caefc1e2532efe9d3ed8

Summary:

brianjo

- Add a javascript snippet to close the expandable left navbar sections 'Notes', 'Language Bindings', 'Libraries', 'Community'

- Fix two latex bugs that were causing output in the log that might have been misleading when looking for true doc build problems

- Change the way release versions interact with sphinx. I tested these via building docs twice: once with `export RELEASE=1` and once without.

- Remove perl scripting to turn the static version text into a link to the versions.html document. Instead, put this where it belongs in the layout.html template. This is the way the domain libraries (text, vision, audio) do it.

- There were two separate templates for master and release; the only difference between them was that master has an admonition "You are viewing unstable developer preview docs....". Instead, toggle that with the value of `release`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53851

Reviewed By: mruberry

Differential Revision: D27085875

Pulled By: ngimel

fbshipit-source-id: c2d674deb924162f17131d895cb53cef08a1f1cb

Summary:

Close https://github.com/pytorch/pytorch/issues/51108

Related https://github.com/pytorch/pytorch/issues/38349

This PR implements the `cpu_kernel_multiple_outputs` to support returning multiple values in a CPU kernel.

```c++
auto iter = at::TensorIteratorConfig()
  .add_output(out1)
  .add_output(out2)
  .add_input(in1)
  .add_input(in2)
  .build();

at::native::cpu_kernel_multiple_outputs(iter,
  [=](float a, float b) -> std::tuple<float, float> {
    float add = a + b;
    float mul = a * b;
    return std::tuple<float, float>(add, mul);
  }
);
```

`out1` will equal `torch.add(in1, in2)`, while `out2` will equal `torch.mul(in1, in2)`.

It helps developers implement new torch functions that return two tensors more conveniently, such as NumPy-like functions [divmod](https://numpy.org/doc/1.18/reference/generated/numpy.divmod.html?highlight=divmod#numpy.divmod) and [frexp](https://numpy.org/doc/stable/reference/generated/numpy.frexp.html#numpy.frexp).

This PR adds `torch.frexp` function to exercise the new functionality provided by `cpu_kernel_multiple_outputs`.
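For readers unfamiliar with `frexp`, the decomposition it performs can be shown with Python's standard `math.frexp`, which splits a float `x` into a mantissa `m` and an integer exponent `e` such that `x == m * 2**e`. The helper below is a hypothetical elementwise version over a list, returning two outputs in the same way a two-output kernel would.

```python
import math


def frexp_list(values):
    """Apply math.frexp elementwise and return two parallel lists."""
    pairs = [math.frexp(v) for v in values]
    mantissas = [m for m, _ in pairs]
    exponents = [e for _, e in pairs]
    return mantissas, exponents


mantissas, exponents = frexp_list([8.0, 0.5, 3.0])
print(mantissas)  # → [0.5, 0.5, 0.75]
print(exponents)  # → [4, 0, 2]
```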

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51097

Reviewed By: albanD

Differential Revision: D26982619

Pulled By: heitorschueroff

fbshipit-source-id: cb61c7f2c79873ab72ab5a61cbdb9203531ad469

Summary:

Fixes https://github.com/pytorch/pytorch/issues/44378 by providing a wider range of drivers similar to what SciPy is doing.

The supported CPU drivers are `gels, gelsy, gelsd, gelss`.

The CUDA interface implements only `gels`, and only for overdetermined systems.

The current state of this PR:

- [x] CPU interface

- [x] CUDA interface

- [x] CPU tests

- [x] CUDA tests

- [x] Memory-efficient batch-wise iteration with broadcasting which fixes https://github.com/pytorch/pytorch/issues/49252

- [x] docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49093

Reviewed By: albanD

Differential Revision: D26991788

Pulled By: mruberry

fbshipit-source-id: 8af9ada979240b255402f55210c0af1cba6a0a3c

Summary:

This PR proposes to improve the distributed doc:

* [x] putting the init functions together

* [x] moving post-init functions into their own sub-section as they are only available after init and moving that group to after all init sub-sections

If this is too much, could we at least put these 2 functions together:

```

.. autofunction:: init_process_group

.. autofunction:: is_initialized

```

as they are interconnected, and the other functions are not alphabetically sorted in the first place.

Thank you.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52976

Reviewed By: albanD

Differential Revision: D26993933

Pulled By: mrshenli

fbshipit-source-id: 7cacbe28172ebb5849135567b1d734870b49de77

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53855

Remove "noindex" here:

{F492926346}

ghstack-source-id: 123724419

Test Plan:

waitforbuildbot

The failure on doctest does not seem to be relevant.

Reviewed By: rohan-varma

Differential Revision: D26967086

fbshipit-source-id: adf9db1144fa1475573f617402fdbca8177b7c08

Summary:

Fixes https://github.com/pytorch/pytorch/issues/50002

The last commit adds tests for 3d conv with the `SubModelFusion` and `SubModelWithoutFusion` classes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50003

Reviewed By: mrshenli

Differential Revision: D26325953

Pulled By: jerryzh168

fbshipit-source-id: 7406dd2721c0c4df477044d1b54a6c5e128a9034

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53253

Since GradBucket class becomes public, mention this class in ddp_comm_hooks.rst.

Screenshot:

{F478201008}

ghstack-source-id: 123596842

Test Plan: viewed generated html file

Reviewed By: rohan-varma

Differential Revision: D26812210

fbshipit-source-id: 65b70a45096b39f7d41a195e65b365b722645000

Summary:

This is a more fundamental example, as we may support some amount of shape specialization in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53250

Reviewed By: navahgar

Differential Revision: D26841272

Pulled By: Chillee

fbshipit-source-id: 027c719afafc03828a657e40859cbfbf135e05c9

Summary:

Context: https://github.com/pytorch/pytorch/pull/53299#discussion_r587882857

These are the only hand-written parts of this diff:

- the addition to `.github/workflows/lint.yml`

- the file endings changed in these four files (to appease FB-internal land-blocking lints):

- `GLOSSARY.md`

- `aten/src/ATen/core/op_registration/README.md`

- `scripts/README.md`

- `torch/csrc/jit/codegen/fuser/README.md`

The rest was generated by running this command (on macOS):

```

git grep -I -l ' $' -- . ':(exclude)**/contrib/**' ':(exclude)third_party' | xargs gsed -i 's/ *$//'

```

I looked over the auto-generated changes and didn't see anything that looked problematic.
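For reference, the effect of the `sed` expression `s/ *$//` can be reproduced in Python with a multiline regular expression. This is only a sketch of the per-line transformation (the function name is made up for the example); like the original command, it strips trailing spaces but leaves tabs alone.

```python
import re


def strip_trailing_spaces(text: str) -> str:
    """Remove runs of spaces at the end of every line."""
    return re.sub(r" +$", "", text, flags=re.MULTILINE)


print(repr(strip_trailing_spaces("a  \nb\t \nc")))  # → 'a\nb\t\nc'
```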

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53406

Test Plan:

This run (after adding the lint but before removing existing trailing spaces) failed:

- https://github.com/pytorch/pytorch/runs/2043032377

This run (on the tip of this PR) succeeded:

- https://github.com/pytorch/pytorch/runs/2043296348

Reviewed By: walterddr, seemethere

Differential Revision: D26856620

Pulled By: samestep

fbshipit-source-id: 3f0de7f7c2e4b0f1c089eac9b5085a58dd7e0d97

Summary:

Provides the implementation for feature request issue https://github.com/pytorch/pytorch/issues/28937.

Adds the `Parametrization` functionality and implements `Pruning` on top of it.

It adds the `auto` mode, in which the parametrization is computed just once per forward pass. The previous implementation computed the pruning on every forward, which is not optimal when pruning RNNs, for example.

It implements a caching mechanism for parameters. This is implemented through the mechanism proposed at the end of the discussion https://github.com/pytorch/pytorch/issues/7313. In particular, it assumes that the user will not manually change the updated parameters between the call to `backward()` and the `optimizer.step()`. If they do so, they would need to manually call the `.invalidate()` function provided in the implementation. This could be made into a function that gets a model and invalidates all the parameters in it. It might be the case that this function has to be called in the `.cuda()` and `.to` and related functions.
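The caching behaviour described above can be sketched as follows. The class is a hypothetical stand-in written in plain Python, not the implementation from this PR: it computes the parametrized value once, reuses it until `invalidate()` is called, and counts evaluations so the effect is visible.

```python
class CachedParametrization:
    """Compute fn(raw) lazily and reuse it until invalidated (sketch)."""

    def __init__(self, raw, fn):
        self.raw = raw          # the unconstrained, stored parameter
        self.fn = fn            # the parametrization applied to it
        self._cache = None
        self.evaluations = 0    # how many times fn actually ran

    def value(self):
        if self._cache is None:
            self._cache = self.fn(self.raw)
            self.evaluations += 1
        return self._cache

    def invalidate(self):
        """Drop the cached value, e.g. after the raw parameter changed."""
        self._cache = None


p = CachedParametrization([1.0, -2.0], lambda w: [abs(x) for x in w])
p.value()
p.value()             # served from the cache, fn is not re-run
first_count = p.evaluations
p.raw = [-3.0, 4.0]   # manual change between steps
p.invalidate()        # required so the next read recomputes
second_value = p.value()
print(first_count, second_value, p.evaluations)
```

Without the `invalidate()` call, the second read would still return the value computed from the old raw parameter, which is the situation the description warns about.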

As described in https://github.com/pytorch/pytorch/issues/7313, this could be used to implement the `weight_norm` and `spectral_norm` functions in a cleaner way. It also allows, as described in https://github.com/pytorch/pytorch/issues/28937, for the implementation of constrained optimization on manifolds (e.g. orthogonal constraints, positive definite matrices, invertible matrices, weights on the sphere or the hyperbolic space...)

TODO (when implementation is validated):

- More thorough test

- Documentation

Resolves https://github.com/pytorch/pytorch/issues/28937

albanD

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33344

Reviewed By: zhangguanheng66

Differential Revision: D26816708

Pulled By: albanD

fbshipit-source-id: 07c8f0da661f74e919767eae31335a9c60d9e8fe

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53084

Adding RemoteModule to master RPC docs since it is a prototype feature.

ghstack-source-id: 122816689

Test Plan: waitforbuildbot

Reviewed By: rohan-varma

Differential Revision: D26743372

fbshipit-source-id: 00ce9526291dfb68494e07be3e67d7d9c2686f1b

Summary:

Fixes https://github.com/pytorch/pytorch/issues/44378 by providing a wider range of drivers similar to what SciPy is doing.

The supported CPU drivers are `gels, gelsy, gelsd, gelss`.

The CUDA interface implements only `gels`, and only for overdetermined systems.

The current state of this PR:

- [x] CPU interface

- [x] CUDA interface

- [x] CPU tests

- [x] CUDA tests

- [x] Memory-efficient batch-wise iteration with broadcasting which fixes https://github.com/pytorch/pytorch/issues/49252

- [x] docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49093

Reviewed By: H-Huang

Differential Revision: D26723384

Pulled By: mruberry

fbshipit-source-id: c9866a95f14091955cf42de22f4ac9e2da009713