beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improved its Dict[] type checking, it uncovered typing mistakes in some functions that had previously gone uncaught. This caused the exporter to fail with newer versions of beartype where it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it confuses users who have beartype in their environment when they use torch.onnx.

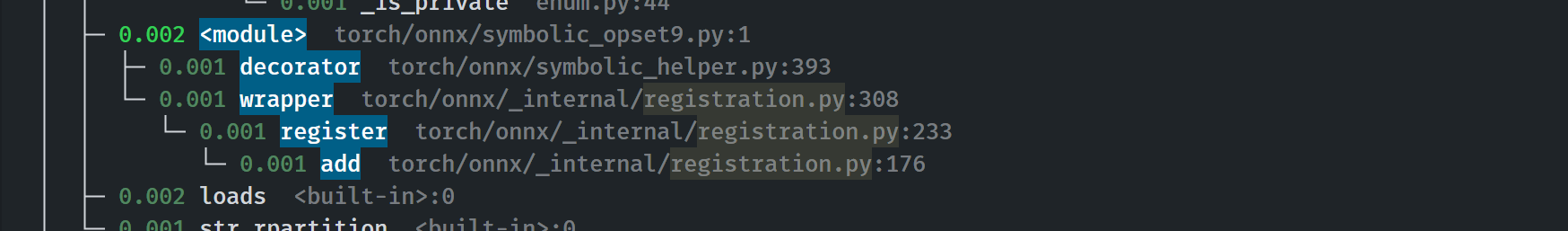

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntax like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch to take py310 as the lowest supported Python version before using the new typing syntax.
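To illustrate point 3: anything that evaluates annotations at runtime can only accept the `|` union syntax where the interpreter supports it. A minimal standard-library sketch, assuming `typing.get_type_hints` as a stand-in for whatever evaluation a runtime checker performs (the function `scale` is a hypothetical example, not exporter code):

```python
import sys
import typing


def scale(x: "int | None" = None) -> "int":
    """Hypothetical function annotated with the newer union syntax."""
    return 0 if x is None else x * 2


# A string annotation is stored as-is; nothing is evaluated at definition time.
raw_annotation = scale.__annotations__["x"]


def annotations_evaluate(func) -> bool:
    """Return True if this interpreter can evaluate func's annotations."""
    try:
        typing.get_type_hints(func)
    except TypeError:
        # Before Python 3.10, `int | None` fails because classes do not support `|`.
        return False
    return True


print(raw_annotation, annotations_evaluate(scale))
```

Without a runtime checker, the annotations can stay unevaluated strings, so the newer syntax is harmless on older interpreters.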

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

This commit improves the export of aten::slice() to ONNX in the following ways:

1. The step size can be an input tensor rather than a constant.

2. Fixes a bug where using a 1-D, 1-element torch tensor as an index created a broken ONNX model.

This commit also adds tests for the new functionality.

Fixes #104314

Pull Request resolved: https://github.com/pytorch/pytorch/pull/104385

Approved by: https://github.com/thiagocrepaldi

* Update the CI test environment to install onnx and onnx-script.

* Add symbolic functions for `bitwise_or`, `convert_element_type`, and `masked_fill_`.

* Update symbolic functions for `slice` and `arange`.

* Update .pyi signature for `_jit_pass_onnx_graph_shape_type_inference`.

Co-authored-by: Wei-Sheng Chin <wschin@outlook.com>

Co-authored-by: Ti-Tai Wang <titaiwang@microsoft.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94564

Approved by: https://github.com/abock

Fixes https://github.com/pytorch/pytorch/issues/84365 and more

This PR addresses not only the issue above, but the entire family of issues related to `torch._C.Value.type()` parsing when `scalarType()` or `dtype()` is not available.

This issue existed before `JitScalarType` was introduced, but the new implementation carried the bug over because the new APIs `from_name` and `from_dtype` require parsing `torch._C.Value.type()` to get proper inputs, which is exactly the root cause of this family of bugs.

Therefore `from_name` and `from_dtype` must only be called when the implementor knows the `name` and `dtype` without parsing a `torch._C.Value`. To handle the corner cases hidden within `torch._C.Value`, a new `from_value` API was introduced, and it should be used in favor of the former ones in most cases. The new API is safer and doesn't require the user to parse types, which is what could trigger JIT asserts in the core of PyTorch.
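The design idea can be sketched without torch. In the sketch below, `ScalarKind` and `FakeValue` are hypothetical stand-ins (not the real `JitScalarType` or `torch._C.Value`), and the `default` parameter is an assumption made for illustration: the point is only that one constructor absorbs the "type information is missing" cases so callers never parse the value themselves.

```python
import enum
from dataclasses import dataclass
from typing import Optional


class ScalarKind(enum.Enum):
    """Hypothetical stand-in for a scalar type enum."""

    FLOAT = "Float"
    INT64 = "Long"
    UNDEFINED = "Undefined"

    @classmethod
    def from_name(cls, name: str) -> "ScalarKind":
        # The caller must already know a valid name; anything else is an error.
        for kind in cls:
            if kind.value == name:
                return kind
        raise ValueError(f"Unknown scalar type name: {name!r}")

    @classmethod
    def from_value(
        cls,
        value: Optional["FakeValue"],
        default: Optional["ScalarKind"] = None,
    ) -> "ScalarKind":
        # All corner cases (no value, no type info) are handled in one place.
        if value is None or value.scalar_name is None:
            if default is not None:
                return default
            raise ValueError("Value has no scalar type and no default was given")
        return cls.from_name(value.scalar_name)


@dataclass
class FakeValue:
    """Hypothetical graph value whose type information may be missing."""

    scalar_name: Optional[str] = None
```

A caller that previously had to inspect the value and then call `from_name` would instead write `ScalarKind.from_value(value, ScalarKind.UNDEFINED)`.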

Although CI is passing for all tests, please carefully review all symbolics/helpers refactoring to make sure the meaning/intention of the old calls is not changed in the new calls.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87245

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

ONNX and PyTorch use different equations for pooling and different strategies for ceil_mode, which leads to discrepancies in corner cases (#71549).

Specifically, PyTorch average pooling does not follow [the equation in the documentation](https://pytorch.org/docs/stable/generated/torch.nn.AvgPool2d.html). Instead, it allows sliding windows to go off-bound if they start within the left padding or the input (see the NOTE section). More details can be found in #57178.

This PR changes avgpool in opsets 10 and 11 back to the opset 9 behavior, so it stops using ceil_mode and count_include_pad in onnx::AveragePool.
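A small worked example of the corner case, as a sketch only. It assumes the "start within the left padding or the input" rule means that the last window is dropped when its start index falls past the input plus left padding; both function names are hypothetical, and the code covers one dimension with no dilation.

```python
def formula_ceil_output(size: int, kernel: int, stride: int, pad: int) -> int:
    """Plain ceil-mode formula: ceil((size + 2*pad - kernel) / stride) + 1."""
    numerator = size + 2 * pad - kernel
    return -(-numerator // stride) + 1  # integer ceiling division


def start_inside_output(size: int, kernel: int, stride: int, pad: int) -> int:
    """Same formula, but drop the last window if it starts past input + left pad."""
    out = formula_ceil_output(size, kernel, stride, pad)
    if (out - 1) * stride >= size + pad:
        out -= 1
    return out


# size=5, kernel=2, stride=3, pad=1: the two rules disagree on the output length.
print(formula_ceil_output(5, 2, 3, 1), start_inside_output(5, 2, 3, 1))  # prints: 3 2
```

For most shapes the two functions agree (for example size=4, kernel=2, stride=2, pad=1 gives 3 in both), which is why the mismatch only shows up in corner cases.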

A comprehensive test for all combinations of parameters can be found in the next PR, #87893.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87892

Approved by: https://github.com/BowenBao

This PR creates the `GraphContext` class, relays all graph methods to `_C.Graph`, and implements the `g.op` method. The GraphContext object is passed into the symbolic functions in place of `_C.Graph` for compatibility with existing symbolic functions.

This way, (1) we can type annotate all `g` args because the method is defined, (2) we can use additional context information in symbolic functions, and (3) there is no more monkey patching on `_C.Graph`.

Also

- Fix return type of `_jit_pass_fixup_onnx_controlflow_node`

- Create `torchscript.py` to house torch.Graph related functions

- Change `GraphContext.op` to create nodes in the Block instead of the Graph

- Create `add_op_with_blocks` to handle scenarios where we need to directly manipulate sub-blocks. Update loop and if symbolic functions to use this function.
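The relay pattern described above can be sketched in plain Python. `FakeGraph` and `Context` are hypothetical stand-ins for `_C.Graph` and `GraphContext`, and the `opset` and `env` fields are illustrative context attributes, not the real ones:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


class FakeGraph:
    """Hypothetical stand-in for the underlying graph object."""

    def __init__(self) -> None:
        self.nodes: List[str] = []

    def create(self, kind: str) -> str:
        self.nodes.append(kind)
        return kind

    def node_count(self) -> int:
        return len(self.nodes)


@dataclass
class Context:
    """Hypothetical wrapper: holds extra context and forwards everything else."""

    graph: FakeGraph
    opset: int
    env: Dict[str, Any] = field(default_factory=dict)

    def op(self, name: str) -> str:
        # Defined on the wrapper itself, so it can be annotated and read context.
        return self.graph.create(f"onnx::{name}@{self.opset}")

    def __getattr__(self, name: str) -> Any:
        # Only reached when normal lookup fails: relay to the wrapped graph.
        return getattr(self.graph, name)


ctx = Context(FakeGraph(), opset=17)
ctx.op("Add")
print(ctx.node_count())  # node_count() is not defined on Context; it is relayed
```

Because unknown attributes fall through to the wrapped graph, code written against the graph keeps working when handed the wrapper, and nothing has to be patched onto the graph class.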

## Discussion

Should we put all the context inside `SymbolicContext` and make it an attribute in the `GraphContext` class? This way we only define two attributes `GraphContext.graph` and `GraphContext.context`. Currently all context attributes are directly defined in the class.

### Decision

Keep GraphContext flat and note that it will change in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84728

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

This is the 4th PR in the series of #83787. It enables the use of `@onnx_symbolic` across `torch.onnx`.

- **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `@onnx_symbolic` for registering the same function on multiple aten names.

- Decorate all symbolic functions with `@onnx_symbolic`

- Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%.

- Remove the outdated unit test `test_symbolic_opset9.py`

- Symbolic function registration moved from the first call to `_run_symbolic_function` to init time.

- Registration is fast.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84448

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

Enable runtime type checking for all torch.onnx public APIs, symbolic functions, and most helpers (minus two that do not have a checkable type: `_.JitType` does not exist) by adding the beartype decorator. Fix type annotations to make unit tests green.

Profile:

export `torchvision.models.alexnet(pretrained=True)`

```
with runtime type checking: 21.314 / 10 passes
without runtime type checking: 20.797 / 10 passes
+ 2.48%
```
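For readers unfamiliar with decorator-based runtime checking, here is a toy stand-in written with the standard library only. It is not beartype and does not reflect how beartype is implemented; `check_types` and `make_name` are hypothetical, and only plain class annotations are handled.

```python
import functools
import inspect


def check_types(func):
    """Toy runtime checker: validates arguments annotated with plain classes."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        bound = signature.bind(*args, **kwargs)
        for name, value in bound.arguments.items():
            param = signature.parameters[name]
            if param.kind in (param.VAR_POSITIONAL, param.VAR_KEYWORD):
                continue  # *args / **kwargs are out of scope for this sketch
            expected = param.annotation
            if expected is param.empty or not isinstance(expected, type):
                continue  # unannotated or non-class annotations are skipped
            if not isinstance(value, expected):
                raise TypeError(
                    f"{func.__name__}() argument {name!r} expected "
                    f"{expected.__name__}, got {type(value).__name__}"
                )
        return func(*args, **kwargs)

    return wrapper


@check_types
def make_name(prefix: str, index: int) -> str:
    """Illustrative helper guarded by the toy checker."""
    return f"{prefix}_{index}"
```

Calling `make_name("x", "1")` raises `TypeError` before the body runs. In this toy version, every checked call passes through `wrapper`, which is where the per-call overhead comes from.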

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84091

Approved by: https://github.com/BowenBao, https://github.com/thiagocrepaldi

Replace runtime errors in torch.onnx with `errors.SymbolicValueError` for more context around jit values.

- Extend `_unimplemented`, `_onnx_unsupported`, `_onnx_opset_unsupported`, `_onnx_opset_unsupported_detailed` errors to include JIT value information

- Replace plain RuntimeError with `errors.SymbolicValueError`

- Clean up: Use `_is_bool` to replace string comparison on jit types

- Clean up: Remove the todo `Remove type ignore after #81112`

#77316
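A minimal sketch of an error type that carries the offending value, to show why this gives more context than a plain `RuntimeError`. `ValueContextError` and `require_bool` are hypothetical names, and subclassing `RuntimeError` is a choice made in this sketch so that existing `except RuntimeError` handlers would keep working:

```python
class ValueContextError(RuntimeError):
    """Hypothetical error that carries the offending graph value with it."""

    def __init__(self, msg: str, value: object) -> None:
        # Append a description of the value so the message explains itself.
        super().__init__(f"{msg} [caused by value {value!r}]")
        self.value = value


def require_bool(value: object) -> bool:
    """Hypothetical helper that reports the bad value, not just a bare message."""
    if not isinstance(value, bool):
        raise ValueContextError("Expected a bool value", value)
    return value
```

A handler can then inspect `err.value` programmatically instead of parsing the message string.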

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83332

Approved by: https://github.com/AllenTiTaiWang, https://github.com/thiagocrepaldi, https://github.com/BowenBao

Re-land #81953

Add `_type_utils` for handling data type conversion among JIT, torch and ONNX.

- Replace dictionary / list indexing with methods in ScalarType

- Breaking: **Remove ScalarType from `symbolic_helper`** and move it to `_type_utils`

- Deprecated: "cast_pytorch_to_onnx", "pytorch_name_to_type", "scalar_name_to_pytorch", "scalar_type_to_onnx", "scalar_type_to_pytorch_type" in `symbolic_helper`

- Deprecate the type mappings and lists. Remove all internal references

- Move _cast_func_template to opset 9 and remove its reference elsewhere (clean up). Added documentation for easy discovery

Why: List / dictionary indexing and lookup are error-prone and convoluted.
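A sketch of the "methods instead of indexing" idea. The `Scalar` enum, its members, and the table contents are illustrative only and are not the real `ScalarType` definitions:

```python
import enum


class Scalar(enum.IntEnum):
    """Hypothetical scalar type enum; members and names are illustrative."""

    UINT8 = 0
    INT8 = 1
    FLOAT = 2

    def scalar_name(self) -> str:
        return _SCALAR_NAMES[self]

    def onnx_name(self) -> str:
        return _ONNX_NAMES[self]


# The lookup tables still exist, but only the enum's methods touch them.
_SCALAR_NAMES = {Scalar.UINT8: "Byte", Scalar.INT8: "Char", Scalar.FLOAT: "Float"}
_ONNX_NAMES = {Scalar.UINT8: "UINT8", Scalar.INT8: "INT8", Scalar.FLOAT: "FLOAT"}

print(Scalar.FLOAT.scalar_name(), Scalar(1).onnx_name())  # prints: Float INT8
```

Call sites then read `kind.onnx_name()` rather than indexing a list by position or chaining two dictionary lookups, so a wrong index or a missing key can only happen in one place.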

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82995

Approved by: https://github.com/kit1980

Add `_type_utils` for handling data type conversion among JIT, torch and ONNX.

- Replace dictionary / list indexing with methods in ScalarType

- Breaking: **Remove ScalarType from `symbolic_helper`** and move it to `_type_utils`

- Breaking: **Remove "cast_pytorch_to_onnx", "pytorch_name_to_type", "scalar_name_to_pytorch", "scalar_type_to_onnx", "scalar_type_to_pytorch_type"** from `symbolic_helper`

- Deprecate the type mappings and lists. Remove all internal references

- Move _cast_func_template to opset 9 and remove its reference elsewhere (clean up). Added documentation for easy discovery

Why: List / dictionary indexing and lookup are error-prone and convoluted.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/81953

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao