Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54987

Based on the prototypes by ezyang (https://github.com/pytorch/pytorch/pull/44799) and bdhirsh (https://github.com/pytorch/pytorch/pull/43702).

Here's a summary of the changes in this PR:

This PR adds a new dispatch key called Conjugate. This lets us make the conjugate operation a view and leverage specialized library functions that have a fast path for the Hermitian operation (conj + transpose).

1. Conjugate operation will now return a view with conj bit (1) for complex tensors and returns self for non-complex tensors as before. This also means `torch.view_as_real` will no longer be a view on conjugated complex tensors and is hence disabled. To fill the gap, we have added `torch.view_as_real_physical` which would return the real tensor agnostic of the conjugate bit on the input complex tensor. The information about conjugation on the old tensor can be obtained by calling `.is_conj()` on the new tensor.

2. NEW API:

a) `.conj()` -- now returning a view.

b) `.conj_physical()` -- does the physical conjugate operation. If the conj bit for input was set, you'd get `self.clone()`, else you'll get a new tensor with conjugated value in its memory.

c) `.conj_physical_()`, and `out=` variant

d) `.resolve_conj()` -- materializes the conjugation. returns self if the conj bit is unset, else returns a new tensor with conjugated values and conj bit set to 0.

e) `.resolve_conj_()` in-place version of (d)

f) `view_as_real_physical` -- as described in (1), it's functionally same as `view_as_real`, just that it doesn't error out on conjugated tensors.

g) `view_as_real` -- existing function, but now errors out on conjugated tensors.
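
The view semantics described in (1) and (2) can be illustrated with a small pure-Python model of a lazy conjugate bit. This is only a sketch: `LazyConj` and its list-backed storage are hypothetical names made up for illustration and are not how PyTorch implements the Conjugate dispatch key.

```python
class LazyConj:
    """Toy model of a complex tensor with a conj bit (hypothetical, not PyTorch code)."""

    def __init__(self, storage, conj_bit=False):
        self.storage = storage    # list of complex numbers, possibly shared
        self.conj_bit = conj_bit  # True means "read the values conjugated"

    def is_conj(self):
        return self.conj_bit

    def conj(self):
        # View: shares storage and only flips the bit; no data is touched.
        return LazyConj(self.storage, not self.conj_bit)

    def values(self):
        # Logical values as seen by the user.
        return [v.conjugate() if self.conj_bit else v for v in self.storage]

    def conj_physical(self):
        # New memory holding the conjugate of the logical values, bit unset.
        return LazyConj([v.conjugate() for v in self.values()])

    def resolve_conj(self):
        # Returns self if the bit is unset, else materializes into new memory.
        if not self.conj_bit:
            return self
        return LazyConj(self.values())


x = LazyConj([1 + 2j, 3 - 4j])
y = x.conj()          # view: same storage, conj bit set
z = y.resolve_conj()  # new storage, conj bit cleared
```

In this model `y.conj_physical()` ends up with a copy of `x`'s memory, which mirrors the `self.clone()` remark in (b).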

3. Conjugate Fallback

a) Vast majority of PyTorch functions would currently use this fallback when they are called on a conjugated tensor.

b) This fallback is well equipped to handle the following cases:

- functional operation e.g., `torch.sin(input)`

- Mutable inputs and in-place operations e.g., `tensor.add_(2)`

- out-of-place operation e.g., `torch.sin(input, out=out)`

- Tensorlist input args

- NOTE: Meta tensors don't work with conjugate fallback.

4. Autograd

a) `resolve_conj()` is an identity function w.r.t. autograd

b) Everything else works as expected.

5. Testing:

a) All method_tests run with conjugate view tensors.

b) OpInfo tests that run with conjugate views

- test_variant_consistency_eager/jit

- gradcheck, gradgradcheck

- test_conj_views (that only run for `torch.cfloat` dtype)

NOTE: functions like `empty_like`, `zeros_like`, `randn_like`, `clone` don't propagate the conjugate bit.

Follow up work:

1. conjugate view RFC

2. Add neg bit to re-enable view operation on conjugated tensors

3. Update linalg functions to call into specialized functions that fast path with the hermitian operation.

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D28227315

Pulled By: anjali411

fbshipit-source-id: acab9402b9d6a970c6d512809b627a290c8def5f

Summary:

Adds `is_inference` as a native function w/ manual cpp bindings.

Also changes instances of `is_inference_tensor` to `is_inference` to be consistent with other properties such as `is_complex`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58729

Reviewed By: mruberry

Differential Revision: D28874507

Pulled By: soulitzer

fbshipit-source-id: 0fa6bcdc72a4ae444705e2e0f3c416c1b28dadc7

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56017

Fixes #55686

This patch is seemingly straightforward but some of the changes are very subtle. For the general algorithmic approach, please first read the quoted issue. Based on the algorithm, there are some fairly straightforward changes:

- New boolean on TensorImpl tracking if we own the pyobj or not

- PythonHooks virtual interface for requesting deallocation of pyobj when TensorImpl is being released and we own its pyobj, and implementation of the hooks in python_tensor.cpp

- Modification of THPVariable to MaybeOwned its C++ tensor, directly using swolchok's nice new class

And then, there is python_variable.cpp. Some of the changes follow the general algorithmic approach:

- THPVariable_NewWithVar is simply adjusted to handle MaybeOwned and initializes as owned (like before)

- THPVariable_Wrap adds the logic for reverting ownership back to PyObject when we take out an owning reference to the Python object

- THPVariable_dealloc attempts to resurrect the Python object if the C++ tensor is live, and otherwise does the same old implementation as before

- THPVariable_tryResurrect implements the resurrection logic. It is modeled after CPython code so read the cited logic and see if it is faithfully replicated

- THPVariable_clear is slightly updated for MaybeOwned and also to preserve the invariant that if owns_pyobj, then pyobj_ is not null. This change is slightly dodgy: the previous implementation has a comment mentioning that the pyobj nulling is required to ensure we don't try to reuse the dead pyobj. I don't think, in this new world, this is possible, because the invariant says that the pyobj only dies if the C++ object is dead too. But I still unset the field for safety.

And then... there is THPVariableMetaType. colesbury explained in the issue why this is necessary: when destructing an object in Python, you start off by running the tp_dealloc of the subclass before moving up to the parent class (much in the same way C++ destructors work). The deallocation process for a vanilla Python-defined class does irreparable harm to the PyObject instance (e.g., the finalizers get run) making it no longer valid to attempt to resurrect later in the tp_dealloc chain. (BTW, the fact that objects can resurrect but in an invalid state is one of the reasons why it's so frickin' hard to write correct __del__ implementations). So we need to make sure that we actually override the tp_dealloc of the bottom most *subclass* of Tensor to make sure we attempt a resurrection before we start finalizing. To do this, we need to define a metaclass for Tensor that can override tp_dealloc whenever we create a new subclass of Tensor. By the way, it was totally not documented how to create metaclasses in the C++ API, and it took a good bit of trial and error to figure it out (and the answer is now immortalized in https://stackoverflow.com/q/67077317/23845 -- the things that I got wrong in earlier versions of the PR included setting tp_basicsize incorrectly, incorrectly setting Py_TPFLAGS_HAVE_GC on the metaclass--you want to leave it unset so that it inherits, and determining that tp_init is what actually gets called when you construct a class, not tp_call as another not-to-be-named StackOverflow question suggests).
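
The remark about objects resurrecting after their finalizer has already run can be reproduced in plain Python. The sketch below is only an illustration on CPython (it relies on reference counting), and `Phoenix` and `graveyard` are made-up names; it is not the logic in THPVariable_tryResurrect.

```python
graveyard = []  # a global that outlives the object


class Phoenix:
    def __init__(self):
        self.finalizer_runs = 0

    def __del__(self):
        # By the time anyone sees the object again, its finalizer has
        # already run: the object survives, but in a "used" state.
        self.finalizer_runs += 1
        graveyard.append(self)  # new strong reference: the object is resurrected


p = Phoenix()
del p  # refcount hits zero, __del__ runs, the object lives on in graveyard
survivor = graveyard[0]
print(survivor.finalizer_runs)
```

This is why the resurrection check has to happen before any finalization starts, rather than further up the tp_dealloc chain.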

Aside: Ordinarily, adding a metaclass to a class is a user visible change, as it means that it is no longer valid to mixin another class with a different metaclass. However, because _C._TensorBase is a C extension object, it will typically conflict with most other metaclasses, so this is not BC breaking.
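
For readers less familiar with metaclasses, here is a pure-Python sketch of the two behaviours discussed above: a metaclass hook that runs whenever a subclass is created, and the conflict raised when mixing in a class with an unrelated metaclass. All class names are hypothetical and unrelated to the actual THPVariableMetaType.

```python
created = []


class TensorMeta(type):
    def __init__(cls, name, bases, namespace):
        super().__init__(name, bases, namespace)
        # Runs for the base class and for every subclass; a C-level
        # metaclass could use the same hook to patch tp_dealloc.
        created.append(name)


class OtherMeta(type):
    pass


class TensorBase(metaclass=TensorMeta):
    pass


class MyTensor(TensorBase):
    pass


class Unrelated(metaclass=OtherMeta):
    pass


try:
    class Mixed(MyTensor, Unrelated):  # two unrelated metaclasses
        pass
except TypeError as exc:
    conflict = str(exc)
```

The `TypeError` is the user-visible change the aside refers to.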

The desired new behavior of a subclass tp_dealloc is to first test if we should resurrect, and otherwise do the same old behavior. In an initial implementation of this patch, I implemented this by saving the original tp_dealloc (which references subtype_dealloc, the "standard" dealloc for all Python defined classes) and invoking it. However, this results in an infinite loop, as it attempts to call the dealloc function of the base type, but incorrectly chooses subclass type (because it is not a subtype_dealloc, as we have overridden it; see b38601d496/Objects/typeobject.c (L1261) )

So, with great reluctance, I must duplicate the behavior of subtype_dealloc in our implementation. Note that this is not entirely unheard of in Python binding code; for example, Cython c25c3ccc4b/Cython/Compiler/ModuleNode.py (L1560) also does similar things. This logic makes up the bulk of THPVariable_subclass_dealloc

To review this, you should pull up the CPython copy of subtype_dealloc b38601d496/Objects/typeobject.c (L1230) and verify that I have specialized the implementation for our case appropriately. Among the simplifications I made:

- I assume PyType_IS_GC, because I assume that Tensor subclasses are only ever done in Python and those classes are always subject to GC. (BTW, yes! This means I have broken anyone who has extended PyTorch tensor from C API directly. I'm going to guess no one has actually done this.)

- I don't bother walking up the type bases to find the parent dealloc; I know it is always THPVariable_dealloc. Similarly, I can get rid of some parent type tests based on knowledge of how THPVariable_dealloc is defined

- The CPython version calls some private APIs which I can't call, so I use the public PyObject_GC_UnTrack APIs.

- I don't allow the finalizer of a Tensor to change its type (but more on this shortly)

One alternative I discussed with colesbury was instead of copy pasting the subtype_dealloc, we could transmute the type of the object that was dying to turn it into a different object whose tp_dealloc is subtype_dealloc, so the stock subtype_dealloc would then be applicable. We decided this would be kind of weird and didn't do it that way.

TODO:

- More code comments

- Figure out how not to increase the size of TensorImpl with the new bool field

- Add some torture tests for the THPVariable_subclass_dealloc, e.g., involving subclasses of Tensors that do strange things with finalizers

- Benchmark the impact of taking the GIL to release C++ side tensors (e.g., from autograd)

- Benchmark the impact of adding a new metaclass to Tensor (probably will be done by separating out the metaclass change into its own change)

- Benchmark the impact of changing THPVariable to conditionally own Tensor (as opposed to unconditionally owning it, as before)

- Add tests that this actually indeed preserves the Python object

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D27765125

Pulled By: ezyang

fbshipit-source-id: 857f14bdcca2900727412aff4c2e2d7f0af1415a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57386

Here is the PR for what's discussed in the RFC https://github.com/pytorch/pytorch/issues/55374 to enable autocast for the CPU device. Currently, this PR only enables BF16 as the lower precision datatype.

Changes:

1. Enable new API `torch.cpu.amp.autocast` for autocast on CPU device: include the python API, C++ API, new Dispatchkey etc.

2. Consolidate the implementation for each cast policy sharing between CPU and GPU devices.

3. Add the operation lists to corresponding cast policy for cpu autocast.

Test Plan: Imported from OSS

Reviewed By: soulitzer

Differential Revision: D28572219

Pulled By: ezyang

fbshipit-source-id: db3db509973b16a5728ee510b5e1ee716b03a152

Summary:

This adds the methods `Tensor.cfloat()` and `Tensor.cdouble()`.

I was not able to find the tests for `.float()` functions. I'd be happy to add similar tests for these functions once someone points me to them.

Fixes https://github.com/pytorch/pytorch/issues/56014

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58137

Reviewed By: ejguan

Differential Revision: D28412288

Pulled By: anjali411

fbshipit-source-id: ff3653cb3516bcb3d26a97b9ec3d314f1f42f83d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58039

The new function has the following signature

`inv_ex(Tensor input, *, bool check_errors=False) -> (Tensor inverse, Tensor info)`.

When `check_errors=True`, an error is thrown if the matrix is not invertible; with `check_errors=False`, checking the result is the user's responsibility.

`linalg_inv` is implemented using calls to `linalg_inv_ex` now.

Resolves https://github.com/pytorch/pytorch/issues/25095

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D28405148

Pulled By: mruberry

fbshipit-source-id: b8563a6c59048cb81e206932eb2f6cf489fd8531

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56608

- Adds binding to the `c10::InferenceMode` RAII class in `torch._C._autograd.InferenceMode` through pybind. Also binds the `torch.is_inference_mode` function.

- Adds context manager `torch.inference_mode` to manage an instance of `c10::InferenceMode` (global). Implemented in `torch.autograd.grad_mode.py` to reuse the `_DecoratorContextManager` class.

- Adds some tests based on those linked in the issue + several more for just the context manager
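
As a rough picture of how a context manager can wrap a thread-local guard and also serve as a decorator, here is a pure-Python sketch. It is a stand-in only: the names below are hypothetical, the flag lives in `threading.local()` rather than in `c10::InferenceMode`, and `contextlib.ContextDecorator` is used in place of `_DecoratorContextManager`.

```python
import contextlib
import threading

_tls = threading.local()  # stand-in for the thread-local state of the C++ guard


def is_inference_mode_enabled():
    return getattr(_tls, "enabled", False)


class inference_mode(contextlib.ContextDecorator):
    def __init__(self, mode=True):
        self.mode = mode
        self._saved = []  # stack, so nested or recursive use restores correctly

    def __enter__(self):
        self._saved.append(is_inference_mode_enabled())
        _tls.enabled = self.mode
        return self

    def __exit__(self, exc_type, exc, tb):
        _tls.enabled = self._saved.pop()  # restore the previous state
        return False  # never swallow exceptions


@inference_mode()
def probe():
    # The flag is set for the duration of the decorated call.
    return is_inference_mode_enabled()
```

Restoring the previous value on exit, rather than unconditionally clearing the flag, is what makes nesting such as `with inference_mode(): with inference_mode(False): ...` behave as expected.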

Issues/todos (not necessarily for this PR):

- Improve short inference mode description

- Small example

- Improved testing since there is no direct way of checking TLS/dispatch keys


Pull Request resolved: https://github.com/pytorch/pytorch/pull/58045

Reviewed By: agolynski

Differential Revision: D28390595

Pulled By: soulitzer

fbshipit-source-id: ae98fa036c6a2cf7f56e0fd4c352ff804904752c

Summary:

Backward methods for `torch.lu` and `torch.lu_solve` require the `torch.lu_unpack` method.

However, while `torch.lu` is a Python wrapper over a native function, so its gradient is implemented via `autograd.Function`,

`torch.lu_solve` is a native function, so it cannot access `torch.lu_unpack` as it is implemented in Python.

Hence this PR presents a native (ATen) `lu_unpack` version. It is also possible to update the gradients for `torch.lu` so that backward+JIT is supported (no JIT for `autograd.Function`) with this function.

~~The interface for this method is different from the original `torch.lu_unpack`, so it is decided to keep it hidden.~~

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46913

Reviewed By: albanD

Differential Revision: D28355725

Pulled By: mruberry

fbshipit-source-id: 281260f3b6e93c15b08b2ba66d5a221314b00e78

Summary:

Backward methods for `torch.lu` and `torch.lu_solve` require the `torch.lu_unpack` method.

However, while `torch.lu` is a Python wrapper over a native function, so its gradient is implemented via `autograd.Function`,

`torch.lu_solve` is a native function, so it cannot access `torch.lu_unpack` as it is implemented in Python.

Hence this PR presents a native (ATen) `lu_unpack` version. It is also possible to update the gradients for `torch.lu` so that backward+JIT is supported (no JIT for `autograd.Function`) with this function.

~~The interface for this method is different from the original `torch.lu_unpack`, so it is decided to keep it hidden.~~

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46913

Reviewed By: astaff

Differential Revision: D28117714

Pulled By: mruberry

fbshipit-source-id: befd33db12ecc147afacac792418b6f4948fa4a4

Summary:

This PR is focused on the API for `linalg.matrix_norm` and delegates computations to `linalg.norm` for the moment.

The main difference between the norms is when `dim=None`. In this case

- `linalg.norm` will compute a vector norm on the flattened input if `ord=None`, otherwise it requires the input to be either 1D or 2D in order to disambiguate between vector and matrix norm

- `linalg.vector_norm` will flatten the input

- `linalg.matrix_norm` will compute the norm over the last two dimensions, treating the input as batch of matrices
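
The difference between flattening and reducing over the last two dimensions can be checked by hand on a small batch. The sketch below uses plain nested lists and assumes the 2-norm for the vector case and the Frobenius norm for the matrix case; the helper names are made up for illustration.

```python
import math

# A batch of two 2x2 matrices, i.e. shape (2, 2, 2).
batch = [
    [[3.0, 0.0], [0.0, 4.0]],
    [[1.0, 2.0], [2.0, 4.0]],
]


def flatten(nested):
    # Yield every scalar of an arbitrarily nested list.
    for item in nested:
        if isinstance(item, list):
            yield from flatten(item)
        else:
            yield item


def vector_2norm_flat(t):
    # "vector_norm"-style: flatten everything, return one number.
    return math.sqrt(sum(v * v for v in flatten(t)))


def frobenius_per_matrix(t):
    # "matrix_norm"-style: one norm per matrix in the batch.
    return [math.sqrt(sum(v * v for v in flatten(m))) for m in t]


flat = vector_2norm_flat(batch)
per_matrix = frobenius_per_matrix(batch)
```

Here `flat` is a single scalar (the square root of 50), while `per_matrix` has one entry per batch element (5.0 for each matrix).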

In future PRs, the computations will be moved to `torch.linalg.matrix_norm`, and `torch.norm` and `torch.linalg.norm` will delegate computations to either `linalg.vector_norm` or `linalg.matrix_norm` based on the arguments provided.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57127

Reviewed By: mrshenli

Differential Revision: D28186736

Pulled By: mruberry

fbshipit-source-id: 99ce2da9d1c4df3d9dd82c0a312c9570da5caf25

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57180

We now have a separate function for computing only the singular values. The `compute_uv` argument is not needed, and it was decided in an offline discussion to remove it. This is a BC-breaking change, but our linalg module is beta, therefore we can do it without a deprecation notice.

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D28142163

Pulled By: mruberry

fbshipit-source-id: 3fac1fcae414307ad5748c9d5ff50e0aa4e1b853

Summary:

As per discussion here https://github.com/pytorch/pytorch/pull/57127#discussion_r624948215

Note that we cannot remove the optional type from the `dim` parameter because the default is to flatten the input tensor which cannot be easily captured by a value other than `None`

### BC Breaking Note

This PR changes the `ord` parameter of `torch.linalg.vector_norm` so that it no longer accepts `None` arguments. The default behavior of `2` is equivalent to the previous default of `None`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57662

Reviewed By: albanD, mruberry

Differential Revision: D28228870

Pulled By: heitorschueroff

fbshipit-source-id: 040fd8055bbe013f64d3c8409bbb4b2c87c99d13

Summary:

The new function has the following signature `cholesky_ex(Tensor input, *, bool check_errors=False) -> (Tensor L, Tensor infos)`. When `check_errors=True`, an error is thrown if the decomposition fails; with `check_errors=False`, checking the decomposition is the user's responsibility.

When `check_errors=False`, we don't have host-device memory transfers for checking the values of the `info` tensor.
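
The `(L, info)` return convention can be sketched in pure Python. This is an illustration only, not the PyTorch kernel: it works on nested lists, and the meaning of a nonzero `info` (the order of the first leading minor that is not positive-definite) is an assumption borrowed from LAPACK's `potrf`.

```python
import math


def cholesky_ex(a, check_errors=False):
    """Return (L, info) for a symmetric matrix given as nested lists.

    info == 0 means success; info == k > 0 means the leading minor of
    order k is not positive-definite (assumed LAPACK-style convention).
    """
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    info = 0
    for j in range(n):
        d = a[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if d <= 0.0:
            info = j + 1  # record the failure instead of raising
            break
        L[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = (a[i][j] - s) / L[j][j]
    if check_errors and info != 0:
        raise ValueError(f"leading minor of order {info} is not positive-definite")
    return L, info


L_ok, info_ok = cholesky_ex([[4.0, 2.0], [2.0, 3.0]])
L_bad, info_bad = cholesky_ex([[1.0, 2.0], [2.0, 1.0]])  # not positive-definite
```

With `check_errors=False` the caller inspects `info` when convenient, which is what allows the real implementation to skip the synchronous check.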

Rewrote the internal code for `torch.linalg.cholesky`. Added `cholesky_stub` dispatch. `linalg_cholesky` is implemented using calls to `linalg_cholesky_ex` now.

Resolves https://github.com/pytorch/pytorch/issues/57032.

Ref. https://github.com/pytorch/pytorch/issues/34272, https://github.com/pytorch/pytorch/issues/47608, https://github.com/pytorch/pytorch/issues/47953

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56724

Reviewed By: ngimel

Differential Revision: D27960176

Pulled By: mruberry

fbshipit-source-id: f05f3d5d9b4aa444e41c4eec48ad9a9b6fd5dfa5

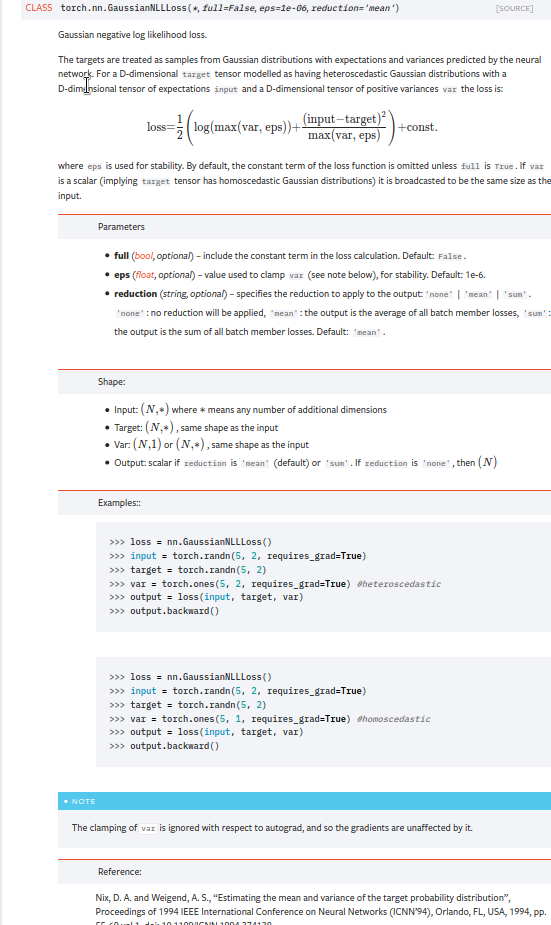

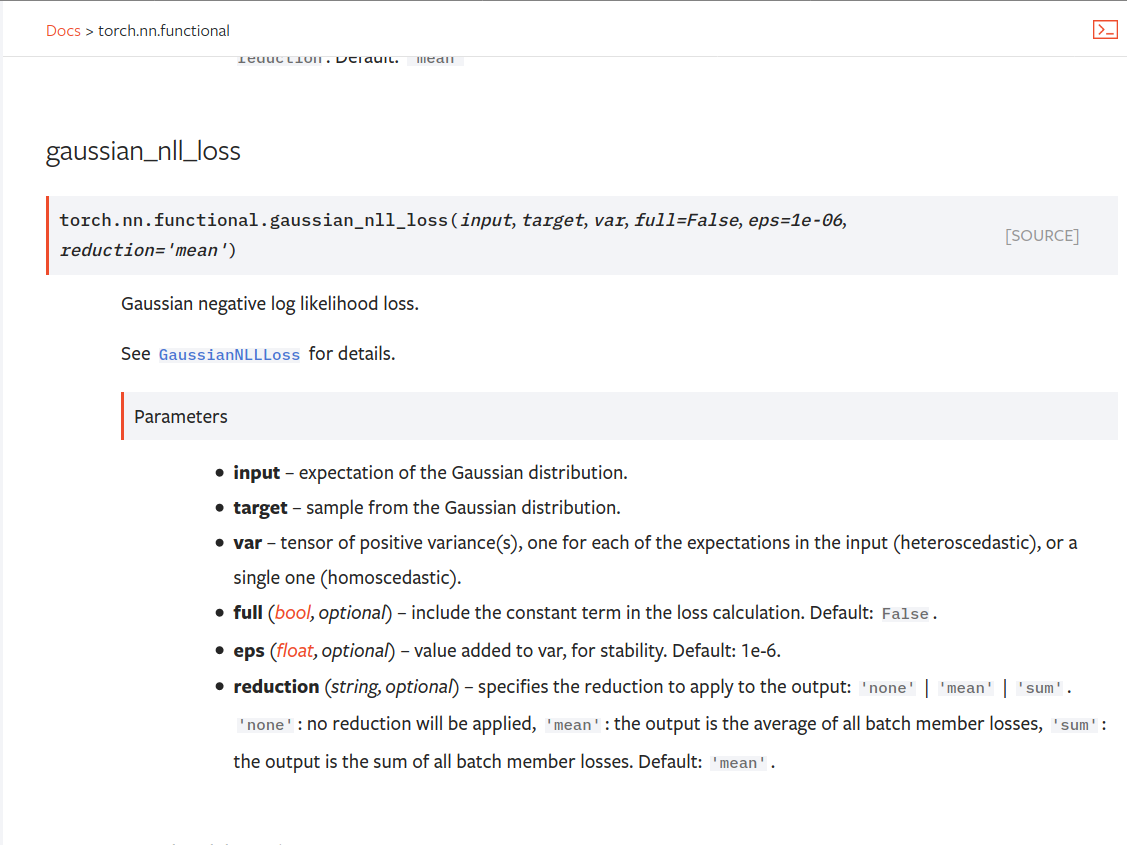

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>). This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>).`
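
The numbers above can be reproduced without PyTorch. The sketch below assumes the elementwise form `0.5 * (log(var) + (input - target)^2 / var)` with no constant term and no clamping of the variance; it is a hand check on nested lists, not the code in functional.py.

```python
import math


def gaussian_nll_none(inp, target, var):
    # Elementwise loss with no reduction; the output has the input's shape.
    return [
        [0.5 * (math.log(v) + (x - t) ** 2 / v) for x, t, v in zip(ri, rt, rv)]
        for ri, rt, rv in zip(inp, target, var)
    ]


inp = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
target = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
var = [[2.0] * 3 for _ in range(2)]

loss = gaussian_nll_none(inp, target, var)   # shape (2, 3), new behaviour
old_style = [sum(row) for row in loss]       # shape (2), old behaviour
```

Rounded to four decimals, `loss` matches the (2, 3) tensor shown above and `old_style` matches the old (2)-sized result.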

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53238

There is a tension for the Vitals design: (1) we want a macro based logging API for C++ and (2) we want a clean python API. Furthermore, we want this to work with "print on destruction" semantics.

The unfortunate resolution is that there are two ways to define vitals:

(1) Use the macros for local use only within C++ - this keeps the semantics people enjoy

(2) For vitals to be used through either C++ or Python, we use a global VitalsAPI object.

Both these go to the same place for the user: printing to stdout as the globals are destructed.

The long history on this diff shows many different ways to try to avoid having 2 different paths... we tried weak pointers & shared pointers, verbose switch cases, etc. Ultimately each ran into an ugly trade-off, and this splits the difference better than the alternatives.

Test Plan:

buck test mode/dev caffe2/test:torch -- --regex vital

buck test //caffe2/aten:vitals

Reviewed By: orionr

Differential Revision: D26736443

fbshipit-source-id: ccab464224913edd07c1e8532093f673cdcb789f

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Add `i0e`

* Move some kernels from `UnaryOpsKernel.cu` to `UnarySpecialOpsKernel.cu` to decrease compilation time per file.

Time taken by i0e_vs_scipy tests: around 6.33 s

<details>

<summary>Test Run Log</summary>

```

(pytorch-cuda-dev) kshiteej@qgpu1:~/Pytorch/pytorch_module_special$ pytest test/test_unary_ufuncs.py -k _i0e_vs

======================================================================= test session starts ========================================================================

platform linux -- Python 3.8.6, pytest-6.1.2, py-1.9.0, pluggy-0.13.1

rootdir: /home/kshiteej/Pytorch/pytorch_module_special, configfile: pytest.ini

plugins: hypothesis-5.38.1

collected 8843 items / 8833 deselected / 10 selected

test/test_unary_ufuncs.py ...sss.... [100%]

========================================================================= warnings summary =========================================================================

../../.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73

test/test_unary_ufuncs.py::TestUnaryUfuncsCUDA::test_special_i0e_vs_scipy_cuda_bfloat16

/home/kshiteej/.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73: UserWarning: PyTorch was compiled without cuDNN/MIOpen support. To use cuDNN/MIOpen, rebuild PyTorch making sure the library is visible to the build system.

warnings.warn(

-- Docs: https://docs.pytest.org/en/stable/warnings.html

===================================================================== short test summary info ======================================================================

SKIPPED [3] test/test_unary_ufuncs.py:1182: not implemented: Could not run 'aten::_copy_from' with arguments from the 'Meta' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::_copy_from' is only available for these backends: [BackendSelect, Named, InplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, UNKNOWN_TENSOR_TYPE_ID, AutogradMLC, AutogradNestedTensor, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, Tracer, Autocast, Batched, VmapMode].

BackendSelect: fallthrough registered at ../aten/src/ATen/core/BackendSelectFallbackKernel.cpp:3 [backend fallback]

Named: registered at ../aten/src/ATen/core/NamedRegistrations.cpp:7 [backend fallback]

InplaceOrView: fallthrough registered at ../aten/src/ATen/core/VariableFallbackKernel.cpp:56 [backend fallback]

AutogradOther: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCPU: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCUDA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradXLA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

UNKNOWN_TENSOR_TYPE_ID: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradMLC: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradNestedTensor: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse1: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse2: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse3: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

Tracer: registered at ../torch/csrc/autograd/generated/TraceType_4.cpp:9348 [kernel]

Autocast: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:250 [backend fallback]

Batched: registered at ../aten/src/ATen/BatchingRegistrations.cpp:1016 [backend fallback]

VmapMode: fallthrough registered at ../aten/src/ATen/VmapModeRegistrations.cpp:33 [backend fallback]

==================================================== 7 passed, 3 skipped, 8833 deselected, 2 warnings in 6.33s =====================================================

```

</details>

TODO:

* [x] Check rendered docs (https://11743402-65600975-gh.circle-artifacts.com/0/docs/special.html)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54409

Reviewed By: jbschlosser

Differential Revision: D27760472

Pulled By: mruberry

fbshipit-source-id: bdfbcaa798b00c51dc9513c34626246c8fc10548

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.
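
A minimal pure-Python sketch of what "not included in the reduction" means, assuming a `sum` reduction and flat `indices` with `offsets` marking where each bag starts. The function below is hypothetical and works on nested lists, not tensors.

```python
def embedding_bag_sum(weight, indices, offsets, padding_idx=None):
    # Each bag covers indices[offsets[b]:offsets[b + 1]]; the last bag
    # runs to the end of indices.
    bounds = list(offsets) + [len(indices)]
    bags = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        acc = [0.0] * len(weight[0])
        for idx in indices[start:end]:
            if idx == padding_idx:
                continue  # padding entries contribute nothing to the bag
            acc = [a + w for a, w in zip(acc, weight[idx])]
        bags.append(acc)
    return bags


weight = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
indices = [0, 2, 1, 2]
offsets = [0, 2]  # two bags: [0, 2] and [1, 2]

with_padding = embedding_bag_sum(weight, indices, offsets, padding_idx=2)
without_padding = embedding_bag_sum(weight, indices, offsets)
```

With `padding_idx=2`, row 2 of `weight` is skipped in both bags, so each bag reduces to the single remaining row.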

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Alias for sigmoid and logit

* Adds out variant for C++ API

* Updates docs to link back to `special` documentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54759

Reviewed By: mrshenli

Differential Revision: D27615208

Pulled By: mruberry

fbshipit-source-id: 8bba908d1bea246e4aa9dbadb6951339af353556

Summary:

This PR adds `torch.linalg.eig`, and `torch.linalg.eigvals` for NumPy compatibility.

MAGMA uses a hybrid CPU-GPU algorithm and doesn't have a GPU interface for the non-symmetric eigendecomposition. This forces us to transfer inputs living in GPU memory to the CPU before calling MAGMA, and then transfer the results from MAGMA back to the GPU. That is rather slow for smaller matrices, and MAGMA is faster than the CPU path only for matrices larger than 3000x3000.

Unfortunately, there is no cuSOLVER function for this operation.

Autograd support for `torch.linalg.eig` will be added in a follow-up PR.

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52491

Reviewed By: anjali411

Differential Revision: D27563616

Pulled By: mruberry

fbshipit-source-id: b42bb98afcd2ed7625d30bdd71cfc74a7ea57bb5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52859

This reverts commit 92a4ee1cf6.

Added support for bfloat16 for CUDA 11 and removed fast-path for empty input tensors that was affecting autograd graph.

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision: D27402390

Pulled By: heitorschueroff

fbshipit-source-id: 73c5ccf54f3da3d29eb63c9ed3601e2fe6951034

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54702

This fixes subclassing for __iter__ so that it returns an iterator over subclasses properly instead of Tensor.
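
The idea can be shown with a toy container in plain Python: iteration wraps each element in `type(self)` rather than in a hard-coded base class. `Grid` and `LabeledGrid` are hypothetical names, and this is not the actual Tensor implementation.

```python
class Grid:
    def __init__(self, data):
        self.data = data

    def __iter__(self):
        # Wrap each row in type(self) so that subclasses are preserved.
        for row in self.data:
            yield type(self)(row)


class LabeledGrid(Grid):
    pass


rows = list(LabeledGrid([[1, 2], [3, 4]]))
```

Every element of `rows` is a `LabeledGrid`; with a hard-coded `Grid(row)` the subclass would be lost on iteration.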

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision: D27352563

Pulled By: ezyang

fbshipit-source-id: 4c195a86c8f2931a6276dc07b1e74ee72002107c

Summary:

Reference: https://github.com/pytorch/pytorch/issues/38349

Wrapper around the existing `torch.gather` with broadcasting logic.
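
To illustrate what "gather with broadcasting logic" can look like, here is a pure-Python sketch restricted to 2-D nested lists and the last dimension. The helper names are hypothetical, and the broadcasting rule shown (a single row is repeated to match the other operand) is a simplification of general broadcasting.

```python
def gather_dim1(data, index):
    # out[i][j] = data[i][index[i][j]]; row counts must already match.
    return [[row[j] for j in idx_row] for row, idx_row in zip(data, index)]


def gather_broadcast_dim1(data, index):
    # Repeat a single-row operand so both have the same number of rows,
    # then defer to the plain gather.
    if len(index) == 1 and len(data) > 1:
        index = index * len(data)
    elif len(data) == 1 and len(index) > 1:
        data = data * len(index)
    return gather_dim1(data, index)


data = [[10, 30, 20], [60, 40, 50]]

# One index row, broadcast across both data rows.
picked = gather_broadcast_dim1(data, [[0, 2, 1]])

# Per-row argsort indices, used to sort each row.
order = [sorted(range(len(row)), key=row.__getitem__) for row in data]
sorted_rows = gather_broadcast_dim1(data, order)
```

The second call shows a common use of such an operation: applying the result of an argsort back to the data.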

TODO:

* [x] Add Doc entry (see if phrasing can be improved)

* [x] Add OpInfo

* [x] Add test against numpy

* [x] Handle broadcasting behaviour and when dim is not given.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52833

Reviewed By: malfet

Differential Revision: D27319038

Pulled By: mruberry

fbshipit-source-id: 00f307825f92c679d96e264997aa5509172f5ed1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53727

This is the first diff to add native support for segment reduction in PyTorch. It provides functionality similar to torch.scatter or "numpy.ufunc.reduceat".

This diff mainly focuses on the API layer to make sure future improvements will not cause backward compatibility issues. Once the API is settled, here are the next steps I am planning:
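
As a rough picture of segment reduction on a 1-D input, here is a pure-Python sketch. It assumes segments are given as consecutive `lengths` and that the reduction is `max`; the function name and signature are illustrative and not the operator's actual API.

```python
def segment_reduce(data, lengths, reduce=max):
    # Split data into consecutive segments of the given lengths and
    # reduce each one independently. Empty segments are not handled here.
    out, start = [], 0
    for n in lengths:
        out.append(reduce(data[start:start + n]))
        start += n
    return out


data = [1, 5, 2, 8, 3, 4]
lengths = [2, 3, 1]  # segments: [1, 5], [2, 8, 3], [4]

seg_max = segment_reduce(data, lengths)
seg_sum = segment_reduce(data, lengths, reduce=sum)
```

Passing a different callable, such as `sum` or `min`, gives the other reduction types with the same segmentation.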

- Add support for other major reduction types (e.g. min, sum) for 1D tensor

- Add Cuda support

- Backward support

- Documentation for the op

- Perf optimizations and benchmark util

- Support for multi dimensional tensors (on data and lengths) (not high priority)

- Support for 'indices' (not high priority)

Test Plan: Added unit test

Reviewed By: ngimel

Differential Revision: D26952075

fbshipit-source-id: 8040ec96def3013e7240cf675d499ee424437560

Summary:

This PR adds autograd support for `torch.orgqr`.

Since `torch.orgqr` is one of the few functions that expose LAPACK's naming and all other linear algebra routines were renamed a long time ago, I also added a new function with a new name and `torch.orgqr` now is an alias for it.

The new proposed name is `householder_product`. For a matrix `input` and a vector `tau`, LAPACK's orgqr operation takes columns of `input` (called Householder vectors or elementary reflectors) and scalars of `tau` that together represent Householder matrices, and then the product of these matrices is computed. See https://www.netlib.org/lapack/lug/node128.html.

Other linear algebra libraries that I'm aware of do not expose this LAPACK function, so there is some freedom in naming it. It is usually used internally only for QR decomposition, but can be useful for deep learning tasks now that it supports differentiation.
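
The product of Householder matrices can be written out in a few lines of pure Python. The sketch below assumes LAPACK's storage convention (each reflector is `H_i = I - tau_i * v_i * v_i^T`, where `v_i` has zeros above row `i`, an implicit 1 in row `i`, and the entries of column `i` of `input` below it) and returns the first `n` columns of the product. It is an unoptimized illustration, not the ATen implementation.

```python
def householder_product(a, tau):
    m, n = len(a), len(a[0])
    # Start from the m x m identity and multiply reflectors on the right.
    q = [[1.0 if r == c else 0.0 for c in range(m)] for r in range(m)]
    for i, t in enumerate(tau):
        # Reflector vector: zeros, implicit 1, then the stored sub-column.
        v = [0.0] * i + [1.0] + [a[r][i] for r in range(i + 1, m)]
        # q @ (I - t v v^T) = q - t (q v) v^T
        qv = [sum(q[r][c] * v[c] for c in range(m)) for r in range(m)]
        q = [[q[r][c] - t * qv[r] * v[c] for c in range(m)] for r in range(m)]
    return [row[:n] for row in q]


# One reflector with v = [1, 1]; tau = 2 / (v . v) = 1 makes H orthogonal.
a = [[0.0], [1.0]]  # the entry on the diagonal is ignored (implicit 1)
tau = [1.0]
q_cols = householder_product(a, tau)
```

For this input, `H = I - v v^T = [[0, -1], [-1, 0]]`, so the returned first column is `[0, -1]`, which has unit length.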

Resolves https://github.com/pytorch/pytorch/issues/50104

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52637

Reviewed By: agolynski

Differential Revision: D27114246

Pulled By: mruberry

fbshipit-source-id: 9ab51efe52aec7c137aa018c7bd486297e4111ce

Summary:

Close https://github.com/pytorch/pytorch/issues/51108

Related https://github.com/pytorch/pytorch/issues/38349

This PR implements the `cpu_kernel_multiple_outputs` to support returning multiple values in a CPU kernel.

```c++
auto iter = at::TensorIteratorConfig()
  .add_output(out1)
  .add_output(out2)
  .add_input(in1)
  .add_input(in2)
  .build();

at::native::cpu_kernel_multiple_outputs(iter,
  [=](float a, float b) -> std::tuple<float, float> {
    float add = a + b;
    float mul = a * b;
    return std::tuple<float, float>(add, mul);
  }
);
```

`out1` will equal `torch.add(in1, in2)`, while `out2` will equal `torch.mul(in1, in2)`.

It helps developers implement new torch functions that return two tensors more conveniently, such as NumPy-like functions [divmod](https://numpy.org/doc/1.18/reference/generated/numpy.divmod.html?highlight=divmod#numpy.divmod) and [frexp](https://numpy.org/doc/stable/reference/generated/numpy.frexp.html#numpy.frexp).

This PR adds `torch.frexp` function to exercise the new functionality provided by `cpu_kernel_multiple_outputs`.
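
The same pattern (one elementwise function returning a tuple, results written to several outputs) can be mimicked in plain Python. The helper below is a hypothetical analogue operating on lists, not the TensorIterator machinery; `math.frexp` stands in for the new function.

```python
import math


def kernel_multiple_outputs(func, *inputs):
    # Apply func elementwise, then split the per-element tuples into
    # one output list per returned value.
    results = [func(*args) for args in zip(*inputs)]
    return tuple(list(column) for column in zip(*results))


add_out, mul_out = kernel_multiple_outputs(
    lambda a, b: (a + b, a * b), [1.0, 2.0], [3.0, 4.0]
)

# frexp decomposes x into (mantissa, exponent) with x == mantissa * 2**exponent.
mantissa, exponent = kernel_multiple_outputs(math.frexp, [8.0, 0.75])
```

Here `8.0` decomposes as `0.5 * 2**4` and `0.75` as `0.75 * 2**0`.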

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51097

Reviewed By: albanD

Differential Revision: D26982619

Pulled By: heitorschueroff

fbshipit-source-id: cb61c7f2c79873ab72ab5a61cbdb9203531ad469

Summary:

Fixes https://github.com/pytorch/pytorch/issues/44378 by providing a wider range of drivers similar to what SciPy is doing.

The supported CPU drivers are `gels, gelsy, gelsd, gelss`.

The CUDA interface implements only `gels`, and only for overdetermined systems.

The current state of this PR:

- [x] CPU interface

- [x] CUDA interface

- [x] CPU tests

- [x] CUDA tests

- [x] Memory-efficient batch-wise iteration with broadcasting which fixes https://github.com/pytorch/pytorch/issues/49252

- [x] docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49093

Reviewed By: albanD

Differential Revision: D26991788

Pulled By: mruberry

fbshipit-source-id: 8af9ada979240b255402f55210c0af1cba6a0a3c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53276

- One of the tests had a syntax error (but the test wasn't fine grained enough to catch this; any error was a pass)

- Doesn't work on ROCm

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D26820048

Test Plan: Imported from OSS

Reviewed By: mruberry

Pulled By: ezyang

fbshipit-source-id: b02c4252d10191c3b1b78f141d008084dc860c45