Kurt Mohler

23bdb570cf

Reland: Enable dim=None for torch.sum ( #79881 )

...

Part of #29137

Reland of #75845

Pull Request resolved: https://github.com/pytorch/pytorch/pull/79881

Approved by: https://github.com/albanD , https://github.com/kulinseth

2022-07-09 00:54:42 +00:00

PyTorch MergeBot

39f659c3ba

Revert "[Array API] Add linalg.vecdot ( #70542 )"

...

This reverts commit 74208a9c68. Reverted https://github.com/pytorch/pytorch/pull/70542 on behalf of https://github.com/malfet due to Broke CUDA-10.2 for vecdot_bfloat16, see 74208a9c68

2022-07-08 22:56:51 +00:00

Peter Bell

cc3126083e

Remove split functional wrapper ( #74727 )

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74727

Approved by: https://github.com/albanD

2022-07-08 19:21:22 +00:00

lezcano

74208a9c68

[Array API] Add linalg.vecdot ( #70542 )

...

This PR adds the function `linalg.vecdot` specified by the [Array API](https://data-apis.org/array-api/latest/API_specification/linear_algebra_functions.html#function-vecdot)

For the complex case, it chooses to implement \sum x_i y_i. See the discussion in https://github.com/data-apis/array-api/issues/356
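To make the complex-case choice concrete, here is a minimal pure-Python sketch of the formula stated above, \sum x_i y_i with no conjugation. The helper names `vecdot_no_conj` and `vecdot_conj` are hypothetical and are not part of PyTorch; the conjugating variant is included only for contrast and is an assumption about the alternative convention, not a description of what the library does.

```python
def vecdot_no_conj(x, y):
    """Sum of elementwise products, sum_i x_i * y_i, with no conjugation."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(x, y))


def vecdot_conj(x, y):
    """Contrast case (assumption): conjugate the first argument before multiplying."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(complex(a).conjugate() * b for a, b in zip(x, y))


x = [1 + 2j, 3 - 1j]
y = [2 - 1j, 1j]

plain = vecdot_no_conj(x, y)  # the formula quoted in the commit message
conj = vecdot_conj(x, y)      # differs whenever x has a nonzero imaginary part
print(plain, conj)
```

For real inputs the two helpers agree, so the choice only matters for complex vectors.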

Edit: When it comes to testing, this function is not quite a binop, nor a reduction op. As such, we're this close to being able to get the extra testing, but we don't quite make it. Now, it's such a simple op that I think we'll make it without this.

Resolves https://github.com/pytorch/pytorch/issues/18027 .

cc @mruberry @rgommers @pmeier @asmeurer @leofang @AnirudhDagar @asi1024 @emcastillo @kmaehashi

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70542

Approved by: https://github.com/IvanYashchuk , https://github.com/mruberry

2022-07-08 15:37:58 +00:00

lezcano

37a5819665

Make slogdet, linalg.slogdet and logdet support metatensors ( #79742 )

...

This PR also makes all these functions support `out=` and be composite, depending on a single function. We also extend the support of `logdet` to complex numbers and improve the docs of all these functions.

We also use `linalg_lu_factor_ex` in these functions, so we remove the synchronisation present before.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/79742

Approved by: https://github.com/IvanYashchuk , https://github.com/albanD

2022-07-01 16:09:21 +00:00

Rohit Goswami

04407431ff

MAINT: Harmonize argsort params with array_api ( #75162 )

...

Closes [#70922](https://github.com/pytorch/pytorch/issues/70922).

- Does what it says on the tin.

- No non-standard implementation details.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75162

Approved by: https://github.com/rgommers , https://github.com/nikitaved , https://github.com/mruberry

2022-06-09 12:32:01 +00:00

lezcano

c6215b343c

Deprecate torch.lu_solve

...

**BC-breaking note**:

This PR deprecates `torch.lu_solve` in favor of `torch.linalg.lu_solve`.

An upgrade guide is added to the documentation for `torch.lu_solve`.

Note this PR DOES NOT remove `torch.lu_solve`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77637

Approved by: https://github.com/malfet

2022-06-07 22:50:14 +00:00

lezcano

f7b9a46880

Deprecate torch.lu

...

**BC-breaking note**:

This PR deprecates `torch.lu` in favor of `torch.linalg.lu_factor`.

An upgrade guide is added to the documentation for `torch.lu`.

Note this PR DOES NOT remove `torch.lu`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77636

Approved by: https://github.com/malfet

2022-06-07 22:50:14 +00:00

lezcano

f091b3fb4b

Update torch.lu_unpack docs

...

As per title

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77635

Approved by: https://github.com/malfet

2022-06-07 22:50:13 +00:00

Andrij David

bd08d085b0

Update argmin docs to reflect the code behavior ( #78888 )

...

Fixes #78791

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78888

Approved by: https://github.com/ezyang

2022-06-07 00:44:39 +00:00

Kshiteej K

c461d8a977

[primTorch] refs: hsplit, vsplit ( #78418 )

...

As per title

TODO:

* [x] Add error inputs (already exist)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78418

Approved by: https://github.com/mruberry

2022-06-06 19:54:05 +00:00

kshitij12345

57c117d556

update signbit docs and add -0. to reference testing for unary and binary functions. ( #78349 )

...

Fixes https://github.com/pytorch/pytorch/issues/53963

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78349

Approved by: https://github.com/mruberry

2022-06-06 13:48:08 +00:00

John Clow

416f581eb1

Updating torch.log example

...

Fixes issue #78301

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78776

Approved by: https://github.com/ngimel

2022-06-03 00:57:35 +00:00

Gao, Xiang

388d44314d

Fix docs for torch.real ( #78644 )

...

Non-complex types are supported

```python
>>> import torch
>>> z = torch.zeros(5)
>>> torch.real(z.float())
tensor([0., 0., 0., 0., 0.])
>>> torch.real(z.int())
tensor([0, 0, 0, 0, 0], dtype=torch.int32)
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78644

Approved by: https://github.com/mruberry , https://github.com/anjali411

2022-06-02 04:17:03 +00:00

Thomas J. Fan

3524428fad

DOC Corrects default value for storage_offset in as_strided ( #78202 )

...

Fixes #77730

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78202

Approved by: https://github.com/mruberry

2022-05-31 19:28:36 +00:00

Yukio Siraichi

3f334f0dfd

Fix asarray documentation formatting ( #78485 )

...

Fixes #78290

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78485

Approved by: https://github.com/ngimel

2022-05-30 19:28:10 +00:00

Mike Ruberry

089203f8bc

Updates floor_divide to perform floor division ( #78411 )

...

Fixes https://github.com/pytorch/pytorch/issues/43874

This PR changes floor_divide to perform floor division instead of truncation division.

This is a BC-breaking change, but it's a "bug fix," and we've already warned users for several releases this behavior would change.
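The difference between the two rounding modes only shows up when the quotient is negative. The following is a minimal pure-Python sketch of that distinction; `trunc_div` and `floor_div` are hypothetical helper names used for illustration and are not PyTorch functions.

```python
import math


def trunc_div(a, b):
    """Truncation division: round the quotient toward zero."""
    return math.trunc(a / b)


def floor_div(a, b):
    """Floor division: round the quotient toward negative infinity."""
    return math.floor(a / b)


pairs = [(7, 2), (-7, 2), (7, -2), (-7, -2)]
results = [(a, b, trunc_div(a, b), floor_div(a, b)) for a, b in pairs]

for a, b, t, f in results:
    # The two columns differ only when a / b is negative and not an integer.
    print(f"{a:>3} / {b:>2}: trunc={t:>3} floor={f:>3}")
```

Code that relied on the old rounding toward zero for negative quotients is the code affected by this kind of change.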

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78411

Approved by: https://github.com/ngimel

2022-05-29 21:28:45 +00:00

Ryan Spring

2df1da09e1

Add Elementwise unary ops 4 references ( #78216 )

...

Add reference implementations for `nan_to_num, positive, sigmoid, signbit, tanhshrink`

Add prims for `minimum_value(dtype)` and `maximum_value(dtype)`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78216

Approved by: https://github.com/mruberry

2022-05-27 21:55:34 +00:00

Brian Hirsh

07e4533403

reland of as_strided support for functionalization; introduce as_strided_scatter

...

This reverts commit a95f1edd85.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78199

Approved by: https://github.com/ezyang

2022-05-24 22:40:44 +00:00

PyTorch MergeBot

a95f1edd85

Revert "as_strided support for functionalization; introduce as_strided_scatter"

...

This reverts commit 3a921f2d26. Reverted https://github.com/pytorch/pytorch/pull/77128 on behalf of https://github.com/suo due to This broke rocm tests on master 3a921f2d26

2022-05-24 20:19:12 +00:00

Brian Hirsh

3a921f2d26

as_strided support for functionalization; introduce as_strided_scatter

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77128

Approved by: https://github.com/ezyang

2022-05-24 18:20:31 +00:00

Jeff Daily

de86146c61

rocblas alt impl during backward pass only ( #71881 )

...

In preparation of adopting future rocblas library options, it is necessary to track when the backward pass of training is executing. The scope-based helper class `BackwardPassGuard` is provided to toggle state.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/71881

Approved by: https://github.com/albanD

2022-05-18 19:42:58 +00:00

Xiang Gao

0975174652

Fix doc about type promotion of lshift and rshift ( #77613 )

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77613

Approved by: https://github.com/ngimel

2022-05-17 00:28:48 +00:00

Mikayla Gawarecki

841c65f499

Unprivate _index_reduce and add documentation

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76997

Approved by: https://github.com/cpuhrsch

2022-05-13 19:48:38 +00:00

Xiang Gao

cc9d0f309e

lshift and rshift stop support floating types ( #77146 )

...

Fixes #74358

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77146

Approved by: https://github.com/ngimel

2022-05-11 22:29:30 +00:00

Ivan Yashchuk

890bdf13e1

Remove deprecated torch.solve ( #70986 )

...

The time has come to remove deprecated linear algebra related functions. This PR removes `torch.solve`.

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70986

Approved by: https://github.com/Lezcano , https://github.com/albanD

2022-05-10 13:44:07 +00:00

PyTorch MergeBot

4ceac49425

Revert "Update torch.lu_unpack docs"

...

This reverts commit 9dc8f2562f. Reverted https://github.com/pytorch/pytorch/pull/73803 on behalf of https://github.com/malfet

2022-05-09 19:09:43 +00:00

PyTorch MergeBot

1467e0dd5d

Revert "Deprecate torch.lu"

...

This reverts commit a5bbfd94fb. Reverted https://github.com/pytorch/pytorch/pull/73804 on behalf of https://github.com/malfet

2022-05-09 19:06:44 +00:00

PyTorch MergeBot

b042cc7f4d

Revert "Deprecate torch.lu_solve"

...

This reverts commit f84d4d9cf5. Reverted https://github.com/pytorch/pytorch/pull/73806 on behalf of https://github.com/malfet

2022-05-09 19:03:26 +00:00

lezcano

f84d4d9cf5

Deprecate torch.lu_solve

...

**BC-breaking note**:

This PR deprecates `torch.lu_solve` in favor of `torch.linalg.lu_solve`.

An upgrade guide is added to the documentation for `torch.lu_solve`.

Note this PR DOES NOT remove `torch.lu_solve`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73806

Approved by: https://github.com/IvanYashchuk , https://github.com/nikitaved , https://github.com/mruberry

2022-05-05 19:19:19 +00:00

lezcano

a5bbfd94fb

Deprecate torch.lu

...

**BC-breaking note**:

This PR deprecates `torch.lu` in favor of `torch.linalg.lu_factor`.

An upgrade guide is added to the documentation for `torch.lu`.

Note this PR DOES NOT remove `torch.lu`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73804

Approved by: https://github.com/IvanYashchuk , https://github.com/mruberry

2022-05-05 19:17:11 +00:00

lezcano

9dc8f2562f

Update torch.lu_unpack docs

...

As per title

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73803

Approved by: https://github.com/IvanYashchuk , https://github.com/nikitaved , https://github.com/mruberry

2022-05-05 19:12:23 +00:00

lezcano

7cb7cd5802

Add linalg.lu

...

This PR modifies `lu_unpack` by:

- Using less memory when unpacking `L` and `U`

- Fuse the subtraction by `-1` with `unpack_pivots_stub`

- Define tensors of the correct types to avoid copies

- Port `lu_unpack` to be a structured kernel so that its `_out` version does not incur extra copies

Then we implement `linalg.lu` as a structured kernel, as we want to

compute its derivative manually. We do so because composing the

derivatives of `torch.lu_factor` and `torch.lu_unpack` would be less efficient.

This new function and `lu_unpack` come with everything they can: forward and backward AD, decent docs, correctness tests, OpInfo, complex support, support for metatensors and support for vmap and vmap over the gradients.

I really hope we don't continue adding more features.

This PR also avoids saving some of the tensors that were previously

saved unnecessarily for the backward in `lu_factor_ex_backward` and

`lu_backward` and does some other general improvements here and there

to the forward and backward AD formulae of other related functions.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67833

Approved by: https://github.com/IvanYashchuk , https://github.com/nikitaved , https://github.com/mruberry

2022-05-05 09:17:05 +00:00

Pearu Peterson

5adf97d492

Add docstrings to sparse compressed tensor factory functions

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76651

Approved by: https://github.com/cpuhrsch

2022-05-04 03:36:14 +00:00

Natalia Gimelshein

ce76244200

fix where type promotion

...

Fixes #73298

I don't know whether `where` kernel actually supports type promotion, nor am I in the mood to find out, so it's manual type promotion.

Edit: nah, i can't tell TI to "promote to common dtype" because of bool condition, so manual type promotion is our only option.

I'll see what tests start failing and fix.

Uses some parts from #62084

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76691

Approved by: https://github.com/mruberry

2022-05-03 04:40:04 +00:00

Peter Bell

39717d3034

Remove histogramdd functional wrapper

...

Merge once the forward compatibility period is expired for the histogramdd operator.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74201

Approved by: https://github.com/ezyang , https://github.com/albanD

2022-04-14 20:56:24 +00:00

PyTorch MergeBot

715e07b97f

Revert "Remove histogramdd functional wrapper"

...

This reverts commit 8cc338e5c2. Reverted https://github.com/pytorch/pytorch/pull/74201 on behalf of https://github.com/suo

2022-04-14 03:56:48 +00:00

Peter Bell

8cc338e5c2

Remove histogramdd functional wrapper

...

Merge once the forward compatibility period is expired for the histogramdd operator.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74201

Approved by: https://github.com/ezyang

2022-04-14 02:47:39 +00:00

PyTorch MergeBot

3471b0eb3d

Revert "Remove histogramdd functional wrapper"

...

This reverts commit 7c9017127f. Reverted https://github.com/pytorch/pytorch/pull/74201 on behalf of https://github.com/malfet

2022-04-13 12:54:24 +00:00

Peter Bell

7c9017127f

Remove histogramdd functional wrapper

...

Merge once the forward compatibility period is expired for the histogramdd operator.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74201

Approved by: https://github.com/ezyang

2022-04-13 03:02:59 +00:00

Brian Hirsh

23b8414391

code-generate non-aliasing {view}_copy kernels ( #73442 )

...

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/73442

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D35016025

Pulled By: bdhirsh

fbshipit-source-id: 2a7f303ec76f5913b744c7822a531d55a57589c9

(cherry picked from commit 3abe13c2a787bcbe9c41b0a335c96e5a3d3642fb)

2022-04-11 19:48:55 +00:00

Mikayla Gawarecki

11f1fef981

Update documentation for scatter_reduce

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74608

Approved by: https://github.com/cpuhrsch

2022-04-07 15:41:23 +00:00

Mikayla Gawarecki

e9a8e6f74a

Add include_self flag to scatter_reduce

...

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74607

Approved by: https://github.com/cpuhrsch

2022-04-05 16:31:39 +00:00

Mikayla Gawarecki

2bfa018462

[BC-breaking] Use ScatterGatherKernel for scatter_reduce (CPU-only) ( #74226 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74226

Update signature of `scatter_reduce_` to match `scatter_/scatter_add_`

`Tensor.scatter_reduce_(int64 dim, Tensor index, Tensor src, str reduce)`

- Add new reduction options in ScatterGatherKernel.cpp and update `scatter_reduce` to call into the cpu kernel for `scatter.reduce`

- `scatter_reduce` now has the same shape constraints as `scatter_` and `scatter_add_`

- Migrate `test/test_torch.py:test_scatter_reduce` to `test/test_scatter_gather_ops.py`
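As a rough illustration of what a scatter-style reduction with the signature above computes, here is a pure-Python sketch restricted to 1-D lists. `scatter_reduce_1d` is a hypothetical helper, not the PyTorch kernel; the set of reduction names and the meaning given to `include_self` (the flag named in the later commit listed above) are assumptions made for this example.

```python
REDUCERS = {
    "sum": lambda a, b: a + b,
    "prod": lambda a, b: a * b,
    "amax": max,
    "amin": min,
}


def scatter_reduce_1d(dest, index, src, reduce, include_self=True):
    """Reduce src[i] into slot index[i] of a copy of dest (1-D only)."""
    if reduce not in REDUCERS:
        raise ValueError(f"unsupported reduce: {reduce!r}")
    if len(index) > len(src):
        raise ValueError("index must not be longer than src")
    op = REDUCERS[reduce]
    out = list(dest)
    seen = set()
    for i, slot in enumerate(index):
        if not include_self and slot not in seen:
            # First write to this slot replaces the original value.
            out[slot] = src[i]
        else:
            out[slot] = op(out[slot], src[i])
        seen.add(slot)
    return out


dest = [1, 2, 3, 4]
index = [0, 1, 0, 1, 2, 1]
src = [1, 2, 3, 4, 5, 6]

with_self = scatter_reduce_1d(dest, index, src, "sum")
without_self = scatter_reduce_1d(dest, index, src, "sum", include_self=False)
largest = scatter_reduce_1d(dest, index, src, "amax")
print(with_self, without_self, largest)
```

Slots that no index points at (the last one here) keep their original value in every mode.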

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D35222842

Pulled By: mikaylagawarecki

fbshipit-source-id: 84930add2ad30baf872c495251373313cb7428bd

(cherry picked from commit 1b45139482e22eb0dc8b6aec2a7b25a4b58e31df)

2022-04-01 05:57:45 +00:00

Mehdi Amini

f17ad06caa

Fix docstring for torch.roll

...

The doc was indicating "If a dimension is not specified, the tensor will be flattened", whereas the actual behavior is that the input tensor is flattened only if the `dims` argument is not provided at all.
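The corrected wording can be illustrated with a small pure-Python sketch on a non-empty, rectangular list of lists. `roll_2d` and `_rotate` are hypothetical helpers written for this example (they are not `torch.roll`), and restoring the original shape after the flattened roll is an assumption of the sketch.

```python
def _rotate(seq, shift):
    """Rotate a list to the right by `shift` positions (negative shifts go left)."""
    if not seq:
        return []
    k = shift % len(seq)
    return seq[-k:] + seq[:-k] if k else list(seq)


def roll_2d(rows, shift, dims=None):
    """Roll a 2-D list; flatten first only when `dims` is omitted."""
    n_cols = len(rows[0])
    if dims is None:
        # No dims given: roll the flattened data, then restore the shape.
        flat = _rotate([v for row in rows for v in row], shift)
        return [flat[i:i + n_cols] for i in range(0, len(flat), n_cols)]
    if dims == 0:
        return [list(row) for row in _rotate(list(rows), shift)]
    if dims == 1:
        return [_rotate(list(row), shift) for row in rows]
    raise ValueError("dims must be None, 0, or 1 in this sketch")


x = [[1, 2], [3, 4], [5, 6], [7, 8]]

flattened_roll = roll_2d(x, 1)       # dims omitted: elements cross row boundaries
row_roll = roll_2d(x, 1, dims=0)     # whole rows move
col_roll = roll_2d(x, 1, dims=1)     # elements move within each row
print(flattened_roll, row_roll, col_roll)
```

Only the call without `dims` lets an element move from the end of one row to the start of the next.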

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74880

Approved by: https://github.com/albanD

2022-03-29 17:03:13 +00:00

Yukio Siraichi

116d879b83

Fix asarray docs + add test case.

...

Follow up: #71757

- Added a range object as a test case example

- Remove `torch.as_tensor` entry from the `see also` section

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73736

Approved by: https://github.com/mruberry

2022-03-28 13:58:49 +00:00

Nikita Shulga

cfb6c942fe

scatter_reduce documentation (#73125 )

...

Summary:

Reland of https://github.com/pytorch/pytorch/issues/68580 (which was milestoned for 1.11) plus partial revert of https://github.com/pytorch/pytorch/pull/72543

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73125

Reviewed By: bdhirsh

Differential Revision: D34355217

Pulled By: malfet

fbshipit-source-id: 325ecdeaf53183d653b44ee5e6e8839ceefd9200

(cherry picked from commit 71db31748a)

2022-02-22 19:33:46 +00:00

Nikita Shulga

cb00d9601c

Revert D33800694: [pytorch][PR] scatter_reduce documentation

...

Test Plan: revert-hammer

Differential Revision: D33800694 (12a1df27c7)

2022-02-15 20:10:26 +00:00

rusty1s

12a1df27c7

scatter_reduce documentation (#68580 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63780 (part 2)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68580

Reviewed By: atalman

Differential Revision: D33800694

Pulled By: malfet

fbshipit-source-id: 2e09492a29cef115a7cca7c8209d1dcb6ae24eb9

(cherry picked from commit 696ff75940)

2022-02-15 19:43:54 +00:00

Kurt Mohler

47c6993355

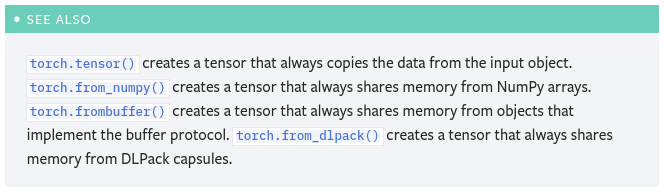

Update from_dlpack tests and documentation ( #70543 )

...

Summary:

Part of https://github.com/pytorch/pytorch/issues/58742

Pull Request resolved: https://github.com/pytorch/pytorch/pull/70543

Reviewed By: soulitzer

Differential Revision: D34172475

Pulled By: mruberry

fbshipit-source-id: d498764b8651a8b7a19181b3421aeebf28a5db2b

(cherry picked from commit 05332f164c)

2022-02-14 03:35:17 +00:00