Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63568

This PR adds the first solver with structure to `linalg`. This solver

has an API compatible with that of `linalg.solve` preparing these for a

possible future merge of the APIs. The new API:

- Just returns the solution, rather than the solution and a copy of `A`

- Removes the confusing `transpose` argument and replaces it by a

correct handling of conj and strides within the call

- Adds a `left=True` kwarg. This can be achieved via transposes of the

inputs and the result, but it's exposed for convenience.
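The claim that `left=False` can be obtained through transposes can be checked with a small dependency-free sketch. It is only an illustration in plain Python: the helper names (`solve_triangular_left`, `solve_triangular_right`, `transpose`, `matmul`) are made up here and are not the PR's implementation. Solving `X A = B` is rewritten as `A^T X^T = B^T`, which also flips `upper`.

```python
def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(p, q):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*q)] for row in p]


def solve_triangular_left(a, b, upper):
    """Solve a @ x = b by substitution; a is n x n triangular, b is n x k."""
    n, k = len(a), len(b[0])
    x = [[0.0] * k for _ in range(n)]
    rows = range(n - 1, -1, -1) if upper else range(n)
    for i in rows:
        # Rows of x that earlier steps have already solved for.
        done = range(i + 1, n) if upper else range(i)
        for j in range(k):
            acc = sum(a[i][p] * x[p][j] for p in done)
            x[i][j] = (b[i][j] - acc) / a[i][i]
    return x


def solve_triangular_right(a, b, upper):
    """Solve x @ a = b via transposes: a^T x^T = b^T, with upper/lower swapped."""
    return transpose(solve_triangular_left(transpose(a), transpose(b), not upper))


A = [[2.0, 0.0], [1.0, 4.0]]  # lower triangular
B = [[2.0, 8.0], [6.0, 4.0]]
X_left = solve_triangular_left(A, B, upper=False)    # A @ X = B
X_right = solve_triangular_right(A, B, upper=False)  # X @ A = B
print(X_left, X_right)
```

Exposing `left` directly saves the caller from writing the two transposes and from remembering to flip `upper`.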

This PR also implements a dataflow that minimises the number of copies

needed before calling LAPACK / MAGMA / cuBLAS and takes advantage of the

conjugate and neg bits.

This algorithm is implemented for `solve_triangular` (which, for this, is

the most complex of all the solvers due to the `upper` parameters).

Once more solvers are added, we will factor out this calling algorithm,

so that all of them can take advantage of it.

Given the complexity of this algorithm, we implement some thorough

testing. We also added tests for all the backends, which was not done

before.

We also add forward AD support for `linalg.solve_triangular` and improve the

docs of `linalg.solve_triangular`. We also fix a few issues with those of

`torch.triangular_solve`.

Resolves https://github.com/pytorch/pytorch/issues/54258

Resolves https://github.com/pytorch/pytorch/issues/56327

Resolves https://github.com/pytorch/pytorch/issues/45734

cc jianyuh nikitaved pearu mruberry walterddr IvanYashchuk xwang233 Lezcano

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D32588230

Pulled By: mruberry

fbshipit-source-id: 69e484849deb9ad7bb992cc97905df29c8915910

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63568

This PR adds the first solver with structure to `linalg`. This solver

has an API compatible with that of `linalg.solve` preparing these for a

possible future merge of the APIs. The new API:

- Just returns the solution, rather than the solution and a copy of `A`

- Removes the confusing `transpose` argument and replaces it by a

correct handling of conj and strides within the call

- Adds a `left=True` kwarg. This can be achieved via transposes of the

inputs and the result, but it's exposed for convenience.

This PR also implements a dataflow that minimises the number of copies

needed before calling LAPACK / MAGMA / cuBLAS and takes advantage of the

conjugate and neg bits.

This algorithm is implemented for `solve_triangular` (which, for this, is

the most complex of all the solvers due to the `upper` parameters).

Once more solvers are added, we will factor out this calling algorithm,

so that all of them can take advantage of it.

Given the complexity of this algorithm, we implement some thorough

testing. We also added tests for all the backends, which was not done

before.

We also add forward AD support for `linalg.solve_triangular` and improve the

docs of `linalg.solve_triangular`. We also fix a few issues with those of

`torch.triangular_solve`.

Resolves https://github.com/pytorch/pytorch/issues/54258

Resolves https://github.com/pytorch/pytorch/issues/56327

Resolves https://github.com/pytorch/pytorch/issues/45734

cc jianyuh nikitaved pearu mruberry walterddr IvanYashchuk xwang233 Lezcano

Test Plan: Imported from OSS

Reviewed By: zou3519, JacobSzwejbka

Differential Revision: D32283178

Pulled By: mruberry

fbshipit-source-id: deb672e6e52f58b76536ab4158073927a35e43a8

Summary:

This PR simply updates the documentation following up on https://github.com/pytorch/pytorch/pull/64234, by adding `Union` as a supported type.

Any feedback is welcome!

cc ansley albanD gmagogsfm

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68435

Reviewed By: davidberard98

Differential Revision: D32494271

Pulled By: ansley

fbshipit-source-id: c3e4806d8632e1513257f0295568a20f92dea297

Summary:

The `torch.histogramdd` operator is documented in `torch/functional.py` but does not appear in the generated docs because it is missing from `docs/source/torch.rst`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68273

Reviewed By: cpuhrsch

Differential Revision: D32470522

Pulled By: saketh-are

fbshipit-source-id: a23e73ba336415457a30bae568bda80afa4ae3ed

Summary:

### Create `linalg.cross`

Fixes https://github.com/pytorch/pytorch/issues/62810

As discussed in the corresponding issue, this PR adds `cross` to the `linalg` namespace (**Note**: There is no method variant) which is slightly different in behaviour compared to `torch.cross`.

**Note**: this is NOT an alias, as suggested in mruberry's [comment on issue 62810](https://github.com/pytorch/pytorch/issues/62810#issuecomment-897504372) below:

> linalg.cross being consistent with the Python Array API (over NumPy) makes sense because NumPy has no linalg.cross. I also think we can implement linalg.cross without immediately deprecating torch.cross, although we should definitely refer users to linalg.cross. Deprecating torch.cross will require additional review. While it's not used often it is used, and it's unclear if users are relying on its unique behavior or not.

The current default implementation of `torch.cross` is extremely weird and confusing. This has also been reported multiple times previously. (See https://github.com/pytorch/pytorch/issues/17229, https://github.com/pytorch/pytorch/issues/39310, https://github.com/pytorch/pytorch/issues/41850, https://github.com/pytorch/pytorch/issues/50273)

- [x] Add `torch.linalg.cross` with default `dim=-1`

- [x] Add OpInfo and other tests for `torch.linalg.cross`

- [x] Add broadcasting support to `torch.cross` and `torch.linalg.cross`

- [x] Remove out skip from `torch.cross` OpInfo

- [x] Add docs for `torch.linalg.cross`. Improve docs for `torch.cross` mentioning `linalg.cross` and the difference between the two. Also adds a warning to `torch.cross`, that it may change in the future (we might want to deprecate it later)

---

### Additional Fixes to `torch.cross`

- [x] Fix Doc for Tensor.cross

- [x] Fix torch.cross in `torch/overrides.py`

While working on `linalg.cross` I noticed these small issues with `torch.cross` itself.

[Tensor.cross docs](https://pytorch.org/docs/stable/generated/torch.Tensor.cross.html) still mention a `dim=-1` default, which is actually wrong. It should be `dim=None` after the behaviour was updated in PR https://github.com/pytorch/pytorch/issues/17582, but the documentation for the `method` and `function` variants wasn’t updated. Later, PR https://github.com/pytorch/pytorch/issues/41850 updated the documentation for the `function` variant, i.e. `torch.cross`, and also added the following warning about the weird behaviour.

> If `dim` is not given, it defaults to the first dimension found with the size 3. Note that this might be unexpected.

But still, the `Tensor.cross` docs were missed and remained outdated. I’m finally fixing that here. Also fixing `torch/overrides.py` for `torch.cross` as well now, with `dim=None`.

According to the docs, the default of `dim=-1` should make the first call below raise. To verify that it does not, you can try the following.

```python
import torch

a = torch.randn(3, 4)
b = torch.randn(3, 4)

# This works because the implementation finds size 3 in the first dimension,
# so the default behaviour shown in the documentation is not what happens.
b.cross(a)
# tensor([[ 0.7171, -1.1059,  0.4162,  1.3026],
#         [ 0.4320, -2.1591, -1.1423,  1.2314],
#         [-0.6034, -1.6592, -0.8016,  1.6467]])

# This raises as expected, since the last dimension does not have size 3.
b.cross(a, dim=-1)
# RuntimeError: dimension -1 does not have size 3
```
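The two ways of choosing the dimension can be contrasted in a plain-Python sketch that works on shapes only. The function names are hypothetical and the logic is a simplified model of the behaviour described in this PR, not the actual PyTorch code.

```python
def legacy_cross_dim(shape):
    """Model of the `dim=None` default: use the first dimension of size 3."""
    for dim, size in enumerate(shape):
        if size == 3:
            return dim
    raise RuntimeError("no dimension of size 3 in input")


def linalg_cross_dim(shape, dim=-1):
    """Model of an explicit `dim` (default -1): that dimension must have size 3."""
    if shape[dim] != 3:
        raise RuntimeError(f"dimension {dim} does not have size 3")
    return dim % len(shape)


print(legacy_cross_dim((3, 4)))     # 0
print(legacy_cross_dim((4, 3, 3)))  # 1
print(linalg_cross_dim((4, 3)))     # 1
```

With a shape such as `(3, 4)` the first rule silently picks dimension 0, while the second rule raises, which is the difference the warning in the docs is about.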

Please take a closer look (particularly the autograd part, this is the first time I'm dealing with `derivatives.yaml`). If there is something missing, wrong or needs more explanation, please let me know. Looking forward to the feedback.

cc mruberry Lezcano IvanYashchuk rgommers

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63285

Reviewed By: gchanan

Differential Revision: D32313346

Pulled By: mruberry

fbshipit-source-id: e68c2687c57367274e8ddb7ef28ee92dcd4c9f2c

Summary:

https://github.com/pytorch/pytorch/issues/67578 disabled reduced precision reductions for FP16 GEMMs. After benchmarking, we've found that this has substantial performance impacts for common GEMM shapes (e.g., those found in popular instantiations of multiheaded-attention) on architectures such as Volta. As these performance regressions may come as a surprise to current users, this PR adds a toggle to disable reduced precision reductions

`torch.backends.cuda.matmul.allow_fp16_reduced_precision_reduction = `

rather than making it the default behavior.

CC ngimel ptrblck

stas00 Note that the behavior after the previous PR can be replicated with

`torch.backends.cuda.matmul.allow_fp16_reduced_precision_reduction = False`
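For intuition on why the precision of a reduction matters, here is a plain-Python sketch that sums many small terms with an accumulator rounded to half precision after every addition, next to one kept in higher precision. It uses the standard `struct` module's `"e"` (IEEE half) format; it does not model what cuBLAS does internally, and the term value and count are arbitrary choices for the illustration.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


term = to_fp16(0.01)
n_terms = 4096

fp16_total = 0.0
wide_total = 0.0
for _ in range(n_terms):
    fp16_total = to_fp16(fp16_total + term)  # accumulator rounded to fp16 each step
    wide_total += term                       # accumulator kept in higher precision

print("fp16 accumulator:", fp16_total)
print("wide accumulator:", wide_total)
```

Once the half-precision accumulator grows large enough, each new term is smaller than half the spacing between representable values and is rounded away, so the running sum stops increasing.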

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67946

Reviewed By: zou3519

Differential Revision: D32289896

Pulled By: ngimel

fbshipit-source-id: a1ea2918b77e27a7d9b391e030417802a0174abe

Summary:

When I ran this code from the documentation with PyTorch 1.10.0, the output differed from what the documentation shows:

```python
import torch
import torch.fx as fx

class M(torch.nn.Module):
    def forward(self, x, y):
        return x + y

# Create an instance of `M`
m = M()
traced = fx.symbolic_trace(m)
print(traced)
print(traced.graph)
traced.graph.print_tabular()
```

I get the result:

```shell
def forward(self, x, y):
    add = x + y;  x = y = None
    return add

graph():
    %x : [#users=1] = placeholder[target=x]
    %y : [#users=1] = placeholder[target=y]
    %add : [#users=1] = call_function[target=operator.add](args = (%x, %y), kwargs = {})
    return add
opcode         name    target                   args    kwargs
-------------  ------  -----------------------  ------  --------
placeholder    x       x                        ()      {}
placeholder    y       y                        ()      {}
call_function  add     <built-in function add>  (x, y)  {}
output         output  output                   (add,)  {}
```

This PR updates the documentation accordingly.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68043

Reviewed By: driazati

Differential Revision: D32287178

Pulled By: jamesr66a

fbshipit-source-id: 48ebd0e6c09940be9950cd57ba0c03274a849be5

Summary:

**Summary:** This commit adds the `torch.nn.qat.dynamic.modules.Linear`

module, the dynamic counterpart to `torch.nn.qat.modules.Linear`.

Functionally these are very similar, except the dynamic version

expects a memoryless observer and is converted into a dynamically

quantized module before inference.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67325

Test Plan:

`python3 test/test_quantization.py TestQuantizationAwareTraining.test_dynamic_qat_linear`

**Reviewers:** Charles David Hernandez, Jerry Zhang

**Subscribers:** Charles David Hernandez, Supriya Rao, Yining Lu

**Tasks:** 99696812

**Tags:** pytorch

Reviewed By: malfet, jerryzh168

Differential Revision: D32178739

Pulled By: andrewor14

fbshipit-source-id: 5051bdd7e06071a011e4e7d9cc7769db8d38fd73

Summary:

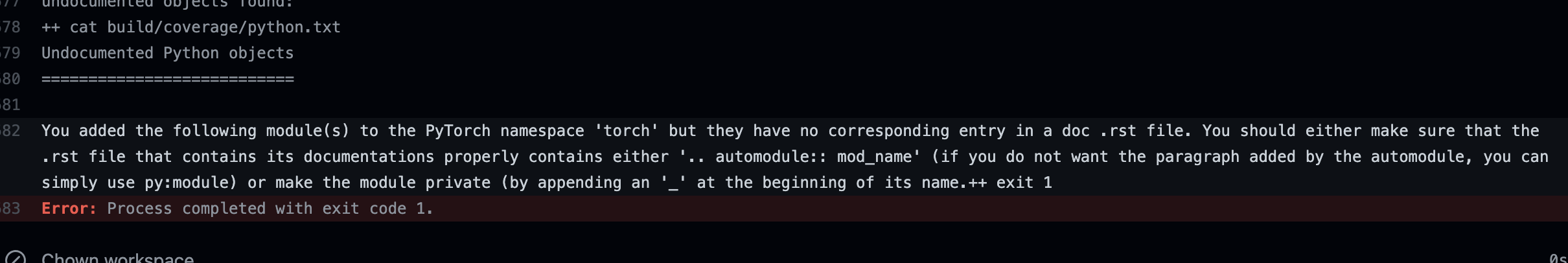

Add check to make sure we do not add new submodules without documenting them in an rst file.

This is especially important because our doc coverage only runs for modules that are properly listed.

temporarily removed "torch" from the list to make sure the failure in CI looks as expected. EDIT: fixed now

This is what a CI failure looks like for the top level torch module as an example:
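The kind of check described in this summary can be sketched with the standard library alone. This is a hypothetical illustration, not the check added by the PR: it inspects the stdlib `json` package instead of `torch`, only looks one level deep, and uses a hand-written `documented` set in place of the entries read from an rst file.

```python
import json
import pkgutil


def undocumented_submodules(package, documented):
    """Return the submodules of `package` whose names are missing from `documented`."""
    found = {
        info.name
        for info in pkgutil.iter_modules(package.__path__, prefix=package.__name__ + ".")
    }
    return sorted(found - set(documented))


# Hypothetical stand-in for the module names listed in the docs sources.
documented = {"json.decoder", "json.encoder"}

missing = undocumented_submodules(json, documented)
if missing:
    print("Undocumented submodules:", ", ".join(missing))
```

A real check would fail the build when `missing` is non-empty, which is what makes a newly added but undocumented submodule visible in CI.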

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67440

Reviewed By: jbschlosser

Differential Revision: D32005310

Pulled By: albanD

fbshipit-source-id: 05cb2abc2472ea4f71f7dc5c55d021db32146928

Summary:

Adds `torch.argwhere` as an alias to `torch.nonzero`

Currently, `torch.nonzero` actually provides functionality equivalent to `np.argwhere`.
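The difference in output layout can be made concrete with a plain-Python sketch on nested lists. `torch.nonzero` by default returns one row of indices per non-zero element, which is the `argwhere` layout, whereas NumPy's `nonzero` returns one sequence of indices per dimension. The helper names below are made up for the illustration.

```python
def nonzero_numpy_style(matrix):
    """NumPy-style layout: one tuple of indices per dimension."""
    hits = [(i, j) for i, row in enumerate(matrix) for j, v in enumerate(row) if v]
    rows = tuple(i for i, _ in hits)
    cols = tuple(j for _, j in hits)
    return rows, cols


def argwhere(matrix):
    """argwhere layout: one [row, col] pair per non-zero element."""
    return [list(pair) for pair in zip(*nonzero_numpy_style(matrix))]


m = [[0, 5, 0],
     [7, 0, 9]]
print(nonzero_numpy_style(m))  # ((0, 1, 1), (1, 0, 2))
print(argwhere(m))             # [[0, 1], [1, 0], [1, 2]]
```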

From NumPy docs,

> np.argwhere(a) is almost the same as np.transpose(np.nonzero(a)), but produces a result of the correct shape for a 0D array.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64257

Reviewed By: qihqi

Differential Revision: D32049884

Pulled By: saketh-are

fbshipit-source-id: 016e49884698daa53b83e384435c3f8f6b5bf6bb

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67449

Adds a description of what the current custom module API does

and API examples for Eager mode and FX graph mode to the main

PyTorch quantization documentation page.

Test Plan:

```

cd docs

make html

python -m http.server

// check the docs page, it renders correctly

```

Reviewed By: jbschlosser

Differential Revision: D31994641

Pulled By: vkuzo

fbshipit-source-id: d35a62947dd06e71276eb6a0e37950d3cc5abfc1

Summary:

This reduces the chance of newly added functions being ignored by mistake.

The only test that this impacts is the coverage test that runs as part of the python doc build. So if that one works, it means that the update to the list here is correct.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67395

Reviewed By: jbschlosser

Differential Revision: D31991936

Pulled By: albanD

fbshipit-source-id: 5b4ce7764336720827501641311cc36f52d2e516

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66143

Delete test_list_remove. There's no point in testing conversion of

this model since TorchScript doesn't support it.

Add a link to an issue tracking test_embedding_bag_dynamic_input.

[ONNX] fix docs (#65379)

Mainly fix the sphinx build by inserting empty lines before

bulleted lists.

Also some minor improvements:

Remove superfluous descriptions of deprecated and ignored args.

The user doesn't need to know anything other than that they are

deprecated and ignored.

Fix custom_opsets description.

Make indentation of Raises section consistent with Args section.

[ONNX] publicize func for discovering unconvertible ops (#65285)

* [ONNX] Provide public function to discover all unconvertible ATen ops

This can be more productive than finding and fixing a single issue at a

time.

* [ONNX] Reorganize test_utility_funs

Move common functionality into a base class that doesn't define any

tests.

Add a new test for opset-independent tests. This lets us avoid running

the tests repeatedly for each opset.

Use simple inheritance rather than the `type()` built-in. It's more

readable.

* [ONNX] Use TestCase assertions rather than `assert`

This provides better error messages.

* [ONNX] Use double quotes consistently.

[ONNX] Fix code block formatting in doc (#65421)

Test Plan: Imported from OSS

Reviewed By: jansel

Differential Revision: D31424093

fbshipit-source-id: 4ced841cc546db8548dede60b54b07df9bb4e36e

Summary:

Adds `torch.argwhere` as an alias to `torch.nonzero`

Currently, `torch.nonzero` actually provides functionality equivalent to `np.argwhere`.

From NumPy docs,

> np.argwhere(a) is almost the same as np.transpose(np.nonzero(a)), but produces a result of the correct shape for a 0D array.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64257

Reviewed By: dagitses

Differential Revision: D31474901

Pulled By: saketh-are

fbshipit-source-id: 335327a4986fa327da74e1fb8624cc1e56959c70

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66577

There was a rebase artifact erroneously landed to quantization docs,

this PR removes it.

Test Plan:

CI

Imported from OSS

Reviewed By: soulitzer

Differential Revision: D31651350

fbshipit-source-id: bc254cbb20724e49e1a0ec6eb6d89b28491f9f78

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66380

Description:

1. creates doc pages for Eager and FX numeric suites

2. adds a link from main quantization doc to (1)

3. formats docblocks in Eager NS to render well

4. adds example code and docblocks to FX numeric suite

Test Plan:

```

cd docs

make html

python -m http.server

// renders well

```

Reviewed By: jerryzh168

Differential Revision: D31543173

Pulled By: vkuzo

fbshipit-source-id: feb291bcbe92747495f45165f738631fa5cbffbd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66379

Description:

Creates a quantization API reference and fixes all the docblock errors.

This is #66122 to #66210 squashed together

Test Plan:

```

cd docs

make html

python -m http.server

// open webpage, inspect it, looks good

```

Reviewed By: ejguan

Differential Revision: D31543172

Pulled By: vkuzo

fbshipit-source-id: 9131363d6528337e9f100759654d3f34f02142a9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66222

Description:

1. creates doc pages for Eager and FX numeric suites

2. adds a link from main quantization doc to (1)

3. formats docblocks in Eager NS to render well

4. adds example code and docblocks to FX numeric suite

Test Plan:

```

cd docs

make html

python -m http.server

// renders well

```

Reviewed By: jerryzh168

Differential Revision: D31447610

Pulled By: vkuzo

fbshipit-source-id: 441170c4a6c3ddea1e7c7c5cc2f1e1cd5aa65f2f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66210

Description:

Moves the backend section of the quantization page further down,

to ensure that the API description and reference sections are closer

to the top.

Test Plan:

```

cd docs

make html

python -m http.server

// renders well

```

Reviewed By: jerryzh168

Differential Revision: D31447611

Pulled By: vkuzo

fbshipit-source-id: 537b146559bce484588b3c78e6b0cdb4c274e8dd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66201

Description:

This PR switches the quantization API reference to use `autosummary`

for each section. We define the sections and manually write a list

of modules/functions/methods to include, and sphinx does the rest.

The result is a single page where we have every quantization function

and module with a quick autogenerated blurb, and users can click

through to each of them for a full documentation page.

This mimics how the `torch.nn` and `torch.nn.functional` doc

pages are set up.

In detail, for each section before this PR:

* creates a new section using `autosummary`

* adds all modules/functions/methods which were previously in the manual section

* adds any additional modules/functions/methods which are public facing but not previously documented

* deletes the old manual summary and all links to it

Test Plan:

```

cd docs

make html

python -m http.server

// renders well, links work

```

Reviewed By: jerryzh168

Differential Revision: D31447615

Pulled By: vkuzo

fbshipit-source-id: 09874ad9629f9c00eeab79c406579c6abd974901

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66198

Consolidates all API reference material for quantization on a single

page, to reduce duplication of information.

Future PRs will improve the API reference page itself.

Test Plan:

```

cd docs

make html

python -m http.server

// renders well

```

Reviewed By: jerryzh168

Differential Revision: D31447616

Pulled By: vkuzo

fbshipit-source-id: 2f9c4dac2b2fb377568332aef79531d1f784444a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66129

Adds a documentation page for `torch.ao.quantization.QConfig`. It is useful

for this to have a separate page since it is shared between Eager and FX graph

mode quantization.

Also, ensures that all important functions and module attributes in this

module have docstrings, so users can discover these without reading the

source code.

Test Plan:

```

cd docs

make html

python -m http.server

// open webpage, inspect it, renders correctly

```

Reviewed By: jerryzh168

Differential Revision: D31447614

Pulled By: vkuzo

fbshipit-source-id: 5d9dd2a4e8647fa17b96cefbaae5299adede619c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66125

Before this PR, the documentation for observers and fake_quants was inlined in the

Eager mode quantization page. This was hard to discover, especially

since that page is really long, and we now have FX graph mode quantization reusing

all of this code.

This PR moves observers and fake_quants into their own documentation pages. It also

adds docstrings to all user facing module attributes such as the default observers

and fake_quants, so people can discover them from documentation without having

to inspect the source code.

For now, enables autoformatting (which means all public classes, functions, members

with docstrings will get docs). If we need to exclude something in these files from

docs in the future, we can go back to manual docs.

Test Plan:

```

cd docs

make html

python -m http.server

// inspect docs on localhost, renders correctly

```

Reviewed By: dagitses

Differential Revision: D31447613

Pulled By: vkuzo

fbshipit-source-id: 63b4cf518badfb29ede583a5c2ca823f572c8599

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66122

Description:

Adds a documentation page for FX graph mode quantization APIs which

reads from the docstrings in `quantize_fx`, and links it from the main

quantization documentation page.

Also, updates the docstrings in `quantize_fx` to render well with reStructuredText.

Test Plan:

```

cd docs

make html

python -m http.server

// open webpage, inspect it, looks good

```

Reviewed By: dagitses

Differential Revision: D31447612

Pulled By: vkuzo

fbshipit-source-id: 07d0a6137f1537af82dce0a729f9617efaa714a0

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65838

closes https://github.com/pytorch/pytorch/pull/65675

The default `--max_restarts` for `torch.distributed.run` was changed to `0` from `3` to make things backwards compatible with `torch.distributed.launch`. Since the default `--max_restarts` used to be greater than `0` we never documented passing `--max_restarts` explicitly in any of our example code.

Test Plan: N/A doc change only

Reviewed By: d4l3k

Differential Revision: D31279544

fbshipit-source-id: 98b31e6a158371bc56907552c5c13958446716f9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64373

* Fix some bad formatting and clarify things in onnx.rst.

* In `export_to_pretty_string`:

* Add documentation for previously undocumented args.

* Document that `f` arg is ignored and mark it deprecated.

* Update tests to stop setting `f`.

* Warn if `_retain_param_name` is set.

* Use double quotes for string literals in test_operators.py.

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D30905271

Pulled By: malfet

fbshipit-source-id: 3627eeabf40b9516c4a83cfab424ce537b36e4b3

Summary:

Related to https://github.com/pytorch/pytorch/issues/30987. Fix the following task:

- [ ] Remove the use of `.data` in all our internal code:

- [ ] ...

- [x] `docs/source/scripts/build_activation_images.py` and `docs/source/notes/extending.rst`

In `docs/source/scripts/build_activation_images.py`, I used `nn.init` because the snippet already assumes `nn` is available (the class inherits from `nn.Module`).

cc albanD

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65358

Reviewed By: malfet

Differential Revision: D31061790

Pulled By: albanD

fbshipit-source-id: be936c2035f0bdd49986351026fe3e932a5b4032

Summary:

Powers have decided this API should be listed as beta.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65247

Reviewed By: malfet

Differential Revision: D31057940

Pulled By: ngimel

fbshipit-source-id: 137b63cbd2c7409fecdc161a22135619bfc96bfa

Summary:

Puts memory sharing intro under Sharing memory... header, where it should have been all along.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64996

Reviewed By: mruberry

Differential Revision: D30948619

Pulled By: ngimel

fbshipit-source-id: 5d9dd267b34e9d3fc499d4738377b58a22da1dc2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62671

Very crude first implementation of `torch.nanmean`. The current reduction kernels do not have good support for implementing nan* variants. Rather than implementing new kernels for each nan* operator, I will work on new reduction kernels with support for a `nan_policy` flag and then I will port `nanmean` to use that.
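Without a dedicated kernel, a NaN-ignoring mean can be expressed as a NaN-ignoring sum divided by the number of non-NaN entries. The plain-Python sketch below shows that idea on a flat list; whether it matches the PR's implementation line for line is an assumption.

```python
import math


def nanmean(values):
    """Mean over the non-NaN entries: NaN-ignoring sum divided by non-NaN count."""
    kept = [v for v in values if not math.isnan(v)]
    if not kept:
        return math.nan  # mirrors 0 / 0 when every entry is NaN
    return sum(kept) / len(kept)


print(nanmean([1.0, math.nan, 3.0]))  # 2.0
```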

**TODO**

- [x] Fix autograd issue

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision: D30515181

Pulled By: heitorschueroff

fbshipit-source-id: 303004ebd7ac9cf963dc4f8e2553eaded5f013f0

Summary:

Partially resolves https://github.com/pytorch/vision/issues/4281

This PR proposes a new scheduler, `SequentialLR`, which allows a list of different schedulers to be called in different periods of the training process.

The main motivation for this scheduler is the recent popularity of a warm-up phase in training. It has been shown that taking small steps in the initial stages of training can help convergence.

With `SequentialLR`, a small constant (or linearly increasing) learning rate can be followed by the actual target learning rate scheduler.

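```python
from torch.optim.lr_scheduler import ConstantLR, ExponentialLR, SequentialLR

# `optimizer`, `train` and `validate` are assumed to be defined elsewhere.
scheduler1 = ConstantLR(optimizer, factor=0.1, total_iters=2)
scheduler2 = ExponentialLR(optimizer, gamma=0.9)
scheduler = SequentialLR(optimizer, schedulers=[scheduler1, scheduler2], milestones=[5])

for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()
```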

This code snippet will call `ConstantLR` in the first 5 epochs and then follow up with `ExponentialLR` in the remaining epochs.

This scheduler can be used to chain any group of schedulers one after another. The main point to keep in mind is that every time we switch to a new scheduler, we assume that the new scheduler starts from the beginning, i.e. its zeroth epoch.
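The switching rule can be sketched without PyTorch by treating each scheduler as a closed-form function of its own epoch counter. This is a simplified model under stated assumptions: `constant_lr` and `exponential_lr` are hypothetical stand-ins, and the real schedulers update the optimizer step by step rather than evaluating a formula.

```python
from bisect import bisect_right


def constant_lr(base_lr, epoch, factor=0.1, total_iters=2):
    """Constant warm-up: scaled learning rate for `total_iters` epochs, then base."""
    return base_lr * factor if epoch < total_iters else base_lr


def exponential_lr(base_lr, epoch, gamma=0.9):
    """Exponential decay of the base learning rate."""
    return base_lr * gamma ** epoch


def sequential_lr(base_lr, epoch, schedules, milestones):
    """Pick the schedule active at `epoch` and restart its epoch count at zero."""
    idx = bisect_right(milestones, epoch)
    start = milestones[idx - 1] if idx > 0 else 0
    return schedules[idx](base_lr, epoch - start)


lrs = [sequential_lr(1.0, e, [constant_lr, exponential_lr], [5]) for e in range(8)]
print(lrs)
```

In this model the first schedule covers epochs 0 to 4, and the second one sees epoch 5 as its own epoch 0, which is the restart-from-zero assumption described above.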

We also add Chained Scheduler to `optim.rst` and `lr_scheduler.pyi` files here.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64037

Reviewed By: albanD

Differential Revision: D30841099

Pulled By: iramazanli

fbshipit-source-id: 94f7d352066ee108eef8cda5f0dcb07f4d371751

Summary:

Fixes https://github.com/pytorch/pytorch/issues/62811

Add `torch.linalg.matmul` alias to `torch.matmul`. Note that the `linalg.matmul` doesn't have a `method` variant.

Also cleaning up `torch/_torch_docs.py` when formatting is not needed.

cc IvanYashchuk Lezcano mruberry rgommers

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63227

Reviewed By: mrshenli

Differential Revision: D30770235

Pulled By: mruberry

fbshipit-source-id: bfba77dfcbb61fcd44f22ba41bd8d84c21132403

Summary:

Partially unblocks https://github.com/pytorch/vision/issues/4281

Previously we added WarmUp schedulers to PyTorch core in https://github.com/pytorch/pytorch/pull/60836, which had two modes of execution, linear and constant, depending on the warm-up function.

In this PR we change this interface to a more direct form by splitting the linear and constant modes into separate schedulers. In particular

```Python

scheduler1 = WarmUpLR(optimizer, warmup_factor=0.1, warmup_iters=5, warmup_method="constant")

scheduler2 = WarmUpLR(optimizer, warmup_factor=0.1, warmup_iters=5, warmup_method="linear")

```

will look like

```Python

scheduler1 = ConstantLR(optimizer, warmup_factor=0.1, warmup_iters=5)

scheduler2 = LinearLR(optimizer, warmup_factor=0.1, warmup_iters=5)

```

correspondingly.
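To illustrate what the two modes compute, here is a plain-Python sketch of the multiplicative factor applied to the base learning rate. The formulas are the usual definitions of constant and linear warm-up and are an assumption here; the exact expressions used by the schedulers are not shown in this summary.

```python
def constant_warmup(step, warmup_factor=0.1, warmup_iters=5):
    """Constant mode: hold the factor during warm-up, then switch to 1."""
    return warmup_factor if step < warmup_iters else 1.0


def linear_warmup(step, warmup_factor=0.1, warmup_iters=5):
    """Linear mode: interpolate from `warmup_factor` up to 1 over the warm-up."""
    if step >= warmup_iters:
        return 1.0
    alpha = step / warmup_iters
    return warmup_factor * (1 - alpha) + alpha


for step in range(7):
    print(step, constant_warmup(step), round(linear_warmup(step), 3))
```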

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64395

Reviewed By: datumbox

Differential Revision: D30753688

Pulled By: iramazanli

fbshipit-source-id: e47f86d12033f80982ddf1faf5b46873adb4f324

Summary:

Fixes https://github.com/pytorch/pytorch/issues/61767

## Changes

- [x] Add `torch.concat` alias to `torch.cat`

- [x] Add OpInfo for `cat`/`concat`

- [x] Fix `test_out` skips (Use `at::native::resize_output` or `at::native::resize_output_check`)

- [x] `cat`/`concat`

- [x] `stack`

- [x] `hstack`

- [x] `dstack`

- [x] `vstack`/`row_stack`

- [x] Remove redundant tests for `cat`/`stack`

~I've not added `cat`/`concat` to OpInfo `op_db` yet, since cat is a little more tricky than other OpInfos (should have a lot of tests) and currently there are no OpInfos for that. I can try to add that in a subsequent PR or maybe here itself, whatever is suggested.~

**Edit**: cat/concat OpInfo has been added.

**Note**: I've added the named tensor support for `concat` alias as well, maybe that's out of spec in `array-api` but it is still useful for consistency in PyTorch.

Thanks to krshrimali for guidance on my first PR :))

cc mruberry rgommers pmeier asmeurer leofang AnirudhDagar asi1024 emcastillo kmaehashi heitorschueroff krshrimali

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62560

Reviewed By: saketh-are

Differential Revision: D30762069

Pulled By: mruberry

fbshipit-source-id: 6985159d1d9756238890488a0ab3ae7699d94337

Summary:

This PR replaces the https://github.com/pytorch/pytorch/pull/53180 PR stack, which has all the review discussions. A replacement was needed because of a messy Sandcastle issue.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64234

Reviewed By: gmagogsfm

Differential Revision: D30656444

Pulled By: ansley

fbshipit-source-id: 77536c8bcc88162e2c72636026ca3c16891d669a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63582

Current quantization docs do not define qconfig and qengine. Added text to define these concepts before they are used.

ghstack-source-id: 137051719

Test Plan: Imported from OSS

Reviewed By: HDCharles

Differential Revision: D30658656

fbshipit-source-id: a45a0fcdf685ca1c3f5c3506337246a430f8f506

Summary:

Implements an orthogonal / unitary parametrisation.

It passes the tests and I have trained a couple of models with this implementation, so I believe it should be somewhat correct. That said, the implementation is very subtle. I'm tagging nikitaved and IvanYashchuk as reviewers in case they have comments / they see some room for optimisation of the code, in particular of the `forward` function.
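As background on what an orthogonal parametrisation does, the sketch below maps an unconstrained number to a 2x2 orthogonal matrix through the Cayley transform `(I - A)(I + A)^-1` of a skew-symmetric `A`. The Cayley map is one standard choice and is used here only as an illustration; this summary does not say which maps the PR implements, and the function names are hypothetical.

```python
def cayley_2x2(a):
    """Map the skew-symmetric matrix [[0, a], [-a, 0]] to (I - A)(I + A)^-1."""
    d = 1.0 + a * a
    return [[(1.0 - a * a) / d, -2.0 * a / d],
            [2.0 * a / d, (1.0 - a * a) / d]]


def gram(q):
    """Return Q^T Q, which is the identity when Q is orthogonal."""
    cols = list(zip(*q))
    return [[sum(x * y for x, y in zip(ci, cj)) for cj in cols] for ci in cols]


Q = cayley_2x2(0.75)
print(Q)
print(gram(Q))
```

Any real value of `a` yields an orthogonal `Q`, so an optimizer can update `a` freely while the constraint holds by construction.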

Fixes https://github.com/pytorch/pytorch/issues/42243

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62089

Reviewed By: ezyang

Differential Revision: D30639063

Pulled By: albanD

fbshipit-source-id: 988664f333ac7a75ce71ba44c8d77b986dff2fe6

Summary:

This PR expands the [note on modules](https://pytorch.org/docs/stable/notes/modules.html) with additional info for 1.10.

It adds the following:

* Examples of using hooks

* Examples of using apply()

* Examples for ParameterList / ParameterDict

* register_parameter() / register_buffer() usage

* Discussion of train() / eval() modes

* Distributed training overview / links

* TorchScript overview / links

* Quantization overview / links

* FX overview / links

* Parametrization overview / link to tutorial

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63963

Reviewed By: albanD

Differential Revision: D30606604

Pulled By: jbschlosser

fbshipit-source-id: c1030b19162bcb5fe7364bcdc981a2eb6d6e89b4

Summary:

This PR introduces a new `torchrun` entrypoint that simply "points" to `python -m torch.distributed.run`. It is shorter and less error-prone to type and gives a nicer syntax than a rather cryptic `python -m ...` command line. Along with the new entrypoint the documentation is also updated and places where `torch.distributed.run` are mentioned are replaced with `torchrun`.
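A command that "points" to a module is typically registered as a setuptools console script. The fragment below sketches what such a registration looks like in a `setup.py`; the surrounding `setup(...)` call is omitted, and the `main` function name is an assumption about `torch.distributed.run`.

```python
# Excerpt in the style of a setuptools `setup.py`.
entry_points = {
    "console_scripts": [
        # Typing `torchrun` on the command line calls `main()` in `torch.distributed.run`.
        "torchrun = torch.distributed.run:main",
    ],
}

name, target = entry_points["console_scripts"][0].split(" = ")
print(f"{name} -> {target}")
```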

cc pietern mrshenli pritamdamania87 zhaojuanmao satgera rohan-varma gqchen aazzolini osalpekar jiayisuse agolynski SciPioneer H-Huang mrzzd cbalioglu gcramer23

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64049

Reviewed By: cbalioglu

Differential Revision: D30584041

Pulled By: kiukchung

fbshipit-source-id: d99db3b5d12e7bf9676bab70e680d4b88031ae2d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63910

Addresses the current issue that `init_method=tcp://` is not compatible with `torch.distributed.run` and `torch.distributed.launch`. When running with a training script that initializes the process group with `init_method=tcp://localhost:$port` as such:

```

$ python -u -m torch.distributed.run --max_restarts 0 --nproc_per_node 1 --nnodes 1 --master_addr $(hostname) --master_port 6000 ~/tmp/test.py

```

An `Address in use` error is raised since the training script tries to create a TCPStore on port 6000, which is already taken since the elastic agent is already running a TCPStore on that port.

For details see: https://github.com/pytorch/pytorch/issues/63874.

This change does a couple of things:

1. Adds `is_torchelastic_launched()` check function that users can use in the training scripts to see whether the script is launched via torchelastic.

1. Updates the `torch.distributed` docs page to include the new `is_torchelastic_launched()` function.

1. Makes `init_method=tcp://` torchelastic compatible by modifying `_tcp_rendezvous_handler` in `torch.distributed.rendezvous` (this is NOT the elastic rendezvous, it is the old rendezvous module which is slotted for deprecation in future releases) to check `is_torchelastic_launched()` AND `torchelastic_use_agent_store()` and if so, only create TCPStore clients (no daemons, not even for rank 0).

1. Adds a bunch of unittests to cover the different code paths
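The decision described in the list above can be sketched in plain Python. The environment variable names `TORCHELASTIC_RUN_ID` and `TORCHELASTIC_USE_AGENT_STORE` and the helper `should_start_store_daemon` are assumptions made for this illustration; the sketch takes the environment as a parameter so it can be exercised without launching anything.

```python
import os


def is_torchelastic_launched(env=os.environ):
    """Assumed check: the elastic agent exports TORCHELASTIC_RUN_ID to its workers."""
    return env.get("TORCHELASTIC_RUN_ID") is not None


def use_agent_store(env=os.environ):
    """Assumed check: the agent signals that its own store should be reused."""
    return env.get("TORCHELASTIC_USE_AGENT_STORE") == "True"


def should_start_store_daemon(rank, env=os.environ):
    """Decide whether this process hosts the TCPStore server or is only a client."""
    if is_torchelastic_launched(env) and use_agent_store(env):
        return False  # the agent already hosts the store, even for rank 0
    return rank == 0  # otherwise rank 0 hosts the store as usual


elastic_env = {"TORCHELASTIC_RUN_ID": "job-1", "TORCHELASTIC_USE_AGENT_STORE": "True"}
print(should_start_store_daemon(0, elastic_env))  # False
print(should_start_store_daemon(0, {}))           # True
```

Keeping rank 0 a client under torchelastic avoids the second bind on the agent's port, which is the source of the `Address in use` error mentioned above.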

NOTE: the issue mentions that we should fail-fast with an assertion on `init_method!=env://` when `is_torchelastic_launched()` is `True`. There are three registered init_methods in pytorch: env://, tcp://, file://. Since this diff makes tcp:// compatible with torchelastic, and I've validated that file:// is compatible with torchelastic, there is no need to add assertions. I did update the docs to point out that env:// is the RECOMMENDED init_method. We should probably deprecate the other init_methods in the future but this is out of scope for this issue.

Test Plan: Unittests.

Reviewed By: cbalioglu

Differential Revision: D30529984

fbshipit-source-id: 267aea6d4dad73eb14a2680ac921f210ff547cc5

Summary:

CUDA_VERSION and HIP_VERSION follow very unrelated versioning schemes, so it does not make sense to use CUDA_VERSION to determine the ROCm path. This note explicitly addresses it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62850

Reviewed By: mruberry

Differential Revision: D30547562

Pulled By: malfet

fbshipit-source-id: 02990fa66a88466c2330ab85f446b25b78545150

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63241

This is a common source of confusion, but it matches the NumPy

behavior.

Fixes #44010

Fixes #59526

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision: D30307646

Pulled By: dagitses

fbshipit-source-id: d848140ba267560387d83f3e7acba8c3cdc53d82