Print unexpected successes as XPASS. I will submit a PR to test-infra so that the log classifier can find these.

Ex: https://github.com/pytorch/pytorch/actions/runs/3466368885/jobs/5790424173

```
test_import_hipify (__main__.TestHipify) ... ok (0.000s)
test_check_onnx_broadcast (__main__.TestONNXUtils) ... ok (0.000s)
test_prepare_onnx_paddings (__main__.TestONNXUtils) ... ok (0.000s)
test_load_standalone (__main__.TestStandaloneCPPJIT) ... ok (16.512s)

======================================================================
XPASS [4.072s]: test_smoke (__main__.TestCollectEnv)
----------------------------------------------------------------------
----------------------------------------------------------------------
Ran 31 tests in 24.594s

FAILED (skipped=7, unexpected successes=1)
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/89020

Approved by: https://github.com/huydhn, https://github.com/seemethere

Rerun all disabled tests to gather their latest results so that we can close disabled tickets automatically. When running in this mode (RERUN_DISABLED_TESTS=true), only disabled tests are run, while the rest are skipped with `<skipped message="Test is enabled but --rerun-disabled-tests verification mode is set, so only disabled tests are run" type="skip"/>`.

The logic is roughly as follows; each disabled test runs multiple times (n=50):

* If the disabled test passes but is flaky, do nothing because it's still flaky. In the test report, we'll see the test pass with the following skipped message:

```
<testcase classname="TestMultiprocessing" file="test_multiprocessing.py" line="357" name="test_fs" time="0.000" timestamp="0001-01-01T00:00:00">
  <skipped message="{"flaky": True, "num_red": 4, "num_green": 0, "max_num_retries": 3, "rerun_disabled_test": true}" type="skip"/>
</testcase>
```

* If the disabled test passes every single time and is no longer flaky, mark it so that it can be closed later. We will see the test run and pass, e.g.:

```
<testcase classname="TestCommonCUDA" name="test_out_warning_linalg_lu_factor_cuda" time="0.170" file="test_ops.py" />
```

* If the disabled test fails after all retries, this is also expected. So we only report it but don't fail the job (because we don't care about red signals here). We'll see the test as skipped (without the `flaky` field), e.g.:

```
<testcase classname="TestMultiprocessing" file="test_multiprocessing.py" line="357" name="test_fs" time="0.000" timestamp="0001-01-01T00:00:00">
  <skipped message="{"num_red": 4, "num_green": 0, "max_num_retries": 3, "rerun_disabled_test": true}" type="skip"/>
</testcase>
```
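The three outcomes can be summarized as a small decision function. This is a sketch under stated assumptions: `rerun_skip_message` is a hypothetical helper rather than the PyTorch implementation. The field names are copied from the skip messages above, but the rule (all green means pass, mixed means flaky, all red means plain skip) is inferred from the three bullets. Note that `json.dumps` writes `true` in lowercase.

```python
import json
from typing import List, Optional


def rerun_skip_message(results: List[bool], max_num_retries: int = 3) -> Optional[str]:
    """Hypothetical sketch: given pass/fail results of repeated runs of a disabled
    test, return None if it should be reported as a normal pass, else a skip message."""
    num_green = sum(results)
    num_red = len(results) - num_green
    if num_red == 0:
        # Green every single time: report a plain pass so the issue can be closed.
        return None
    info = {
        "num_red": num_red,
        "num_green": num_green,
        "max_num_retries": max_num_retries,
        "rerun_disabled_test": True,
    }
    if num_green > 0:
        # Mixed results: still flaky, so mark it and keep the issue open.
        info = {"flaky": True, **info}
    # Flaky or all red, the test is reported as skipped rather than failed,
    # so red signals never fail the job in this mode.
    return json.dumps(info)
```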

This runs on the same schedule as `mem_leak_check` (daily). Changes to update test stats and (potentially) group results on HUD will come in separate PRs.

### Testing

* pull https://github.com/pytorch/pytorch/actions/runs/3447434434

* trunk https://github.com/pytorch/pytorch/actions/runs/3447434928

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88646

Approved by: https://github.com/clee2000

Hybrid sparse CSR tensors currently cannot be compared to strided ones, since `.to_dense()` does not support them:

```py
import torch
from torch.testing._internal.common_utils import TestCase

assertEqual = TestCase().assertEqual

actual = torch.sparse_csr_tensor([0, 2, 4], [0, 1, 0, 1], [[1, 11], [2, 12] ,[3, 13] ,[4, 14]])
expected = torch.stack([actual[0].to_dense(), actual[1].to_dense()])

assertEqual(actual, expected)
```

```
main.py:4: UserWarning: Sparse CSR tensor support is in beta state. If you miss a functionality in the sparse tensor support, please submit a feature request to https://github.com/pytorch/pytorch/issues. (Triggered internally at ../aten/src/ATen/SparseCsrTensorImpl.cpp:54.)
  actual = torch.sparse_csr_tensor([0, 2, 4], [0, 1, 0, 1], [[1, 11], [2, 12] ,[3, 13] ,[4, 14]])
Traceback (most recent call last):
  File "/home/philip/git/pytorch/torch/torch/testing/_comparison.py", line 1098, in assert_equal
    pair.compare()
  File "/home/philip/git/pytorch/torch/torch/testing/_comparison.py", line 619, in compare
    actual, expected = self._equalize_attributes(actual, expected)
  File "/home/philip/git/pytorch/torch/torch/testing/_comparison.py", line 706, in _equalize_attributes
    actual = actual.to_dense() if actual.layout != torch.strided else actual
RuntimeError: sparse_compressed_to_dense: Hybrid tensors are not supported

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "main.py", line 10, in <module>
    assertEqual(actual, expected)
  File "/home/philip/git/pytorch/torch/torch/testing/_internal/common_utils.py", line 2503, in assertEqual
    msg=(lambda generated_msg: f"{generated_msg}\n{msg}") if isinstance(msg, str) and self.longMessage else msg,
  File "/home/philip/git/pytorch/torch/torch/testing/_comparison.py", line 1112, in assert_equal
    ) from error
RuntimeError: Comparing

TensorOrArrayPair(
    id=(),
    actual=tensor(crow_indices=tensor([0, 2, 4]),
                  col_indices=tensor([0, 1, 0, 1]),
                  values=tensor([[ 1, 11],
                                 [ 2, 12],
                                 [ 3, 13],
                                 [ 4, 14]]), size=(2, 2, 2), nnz=4,
                  layout=torch.sparse_csr),
    expected=tensor([[[ 1, 11],
                      [ 2, 12]],

                     [[ 3, 13],
                      [ 4, 14]]]),
    rtol=0.0,
    atol=0.0,
    equal_nan=True,
    check_device=False,
    check_dtype=True,
    check_layout=False,
    check_stride=False,
    check_is_coalesced=False,
)

resulted in the unexpected exception above. If you are a user and see this message during normal operation please file an issue at https://github.com/pytorch/pytorch/issues. If you are a developer and working on the comparison functions, please except the previous error and raise an expressive `ErrorMeta` instead.
```

This adds a temporary hack to `TestCase.assertEqual` to enable this. Basically, we iterate over the individual CSR subtensors, call `.to_dense()` on each of them, and stack everything back together. I opted not to do this in the common comparison machinery so that users are not affected by this (undocumented) hack.
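To make "hybrid" concrete, here is a pure-Python sketch of what densifying a hybrid CSR layout means. It assumes no batch dimensions. The helper `hybrid_csr_to_dense` is hypothetical and uses nested lists instead of torch. Each stored value is itself a dense block that is placed at its (row, col) position. The sketch densifies row by row and is not the subtensor-stacking hack described above. Applied to the data from the repro, it reproduces the `expected` tensor.

```python
import copy
from typing import Any, List, Sequence


def zeros(shape: Sequence[int]) -> Any:
    """Nested lists of zeros with the given shape (a scalar 0 for an empty shape)."""
    if not shape:
        return 0
    return [zeros(shape[1:]) for _ in range(shape[0])]


def hybrid_csr_to_dense(crow_indices: Sequence[int], col_indices: Sequence[int],
                        values: Sequence[Any], size: Sequence[int]) -> List[Any]:
    """Densify a 2-D CSR layout whose stored values are dense blocks of shape size[2:]."""
    nrows, ncols = size[0], size[1]
    block_shape = tuple(size[2:])
    out = [[zeros(block_shape) for _ in range(ncols)] for _ in range(nrows)]
    for row in range(nrows):
        # Stored entries of this row live at positions crow_indices[row] .. crow_indices[row + 1] - 1.
        for k in range(crow_indices[row], crow_indices[row + 1]):
            out[row][col_indices[k]] = copy.deepcopy(values[k])
    return out


print(hybrid_csr_to_dense([0, 2, 4], [0, 1, 0, 1],
                          [[1, 11], [2, 12], [3, 13], [4, 14]], (2, 2, 2)))
# [[[1, 11], [2, 12]], [[3, 13], [4, 14]]]
```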

I also added an xfailed test that will trigger as soon as the behavior is supported natively so we don't forget to remove the hack when it is no longer needed.
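The "xfailed reminder" pattern can be sketched with plain `unittest`. `hybrid_to_dense_natively` is a hypothetical stand-in that raises the same error as the traceback above; in the real test it would be the native `.to_dense()` call. While the feature is missing, the test is an expected failure. Once support lands, it becomes an unexpected success and the run stops being successful, which signals that the hack can be removed.

```python
import unittest


def hybrid_to_dense_natively() -> None:
    # Hypothetical stand-in for calling .to_dense() on a hybrid CSR tensor,
    # which currently raises.
    raise RuntimeError("sparse_compressed_to_dense: Hybrid tensors are not supported")


class TestHybridCSRToDenseHack(unittest.TestCase):
    @unittest.expectedFailure
    def test_native_to_dense_still_unsupported(self):
        # When this stops raising, the test turns into an unexpected success,
        # which makes the run unsuccessful: time to delete the workaround.
        hybrid_to_dense_natively()
```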

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88749

Approved by: https://github.com/mruberry, https://github.com/pearu

tbh at this point it might be easier to make a new workflow and copy the relevant jobs...

Changes:

* Disable the CUDA memory leak check except on scheduled workflows

* Make pull and trunk run on a schedule which will run the memory leak check

* Periodic will always run the memory leak check -> periodic does not have parallelization anymore

* Concurrency check changed to be slightly more generous

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88373

Approved by: https://github.com/ZainRizvi, https://github.com/huydhn

Fixes: https://github.com/pytorch/pytorch/issues/88010

This PR does a couple things to stop slow gradcheck from timing out:

- Splits out test_ops_fwd_gradients from test_ops_gradients, and factors out TestFwdGradients and TestBwdGradients which both inherit from TestGradients, now situated in common_utils (maybe there is a better place?)

- Skips CompositeCompliance (and several other test files) for slow gradcheck CI since they do not use gradcheck

- Because test times for test_ops_fwd_gradients and test_ops_gradients are either unknown or wrong, we hardcode them for now to prevent the two files from being put together. We can undo the hack once we see that the actual test times have been updated. ("def calculate_shards" randomly divides tests with unknown test times in a round-robin fashion.)

- Updates references to test_ops_gradients and TestGradients

- Test files that are skipped for slow gradcheck CI are now centrally located in run_test.py. This reduces how fine-grained we can be with the skips, so for some skips (one so far, for test_mps) we still use the old skipping mechanism.
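A sketch of why hardcoded times keep the two big files apart. It assumes a longest-first greedy placement for tests with known times and round-robin placement for the rest. `calculate_shards_sketch` is hypothetical and deals unknown tests in list order rather than randomly. It is not the real `calculate_shards`.

```python
from typing import Dict, List, Tuple


def calculate_shards_sketch(num_shards: int, tests: List[str],
                            test_times: Dict[str, float]) -> List[Tuple[float, List[str]]]:
    """Hypothetical sketch: put known-time tests (longest first) on the currently
    lightest shard, then deal unknown-time tests round-robin across shards."""
    totals = [0.0] * num_shards
    buckets: List[List[str]] = [[] for _ in range(num_shards)]
    known = sorted((t for t in tests if t in test_times),
                   key=lambda t: test_times[t], reverse=True)
    unknown = [t for t in tests if t not in test_times]
    for test in known:
        lightest = min(range(num_shards), key=lambda s: totals[s])
        totals[lightest] += test_times[test]
        buckets[lightest].append(test)
    for i, test in enumerate(unknown):
        # No timing data, so these do not affect the totals used for balancing.
        buckets[i % num_shards].append(test)
    return list(zip(totals, buckets))
```

With known (for example, hardcoded) times, the greedy step puts the second big file on the other shard instead of leaving placement to the round-robin pass.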

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88216

Approved by: https://github.com/albanD

Meta tensor does a lot of work to make sure tensors "look" similar to the original parts; e.g., if the original was a non-leaf, the meta converter ensures the meta tensor is a non-leaf too. Fake tensor destroyed some of these properties when it wrapped the tensor in a FakeTensor.

This patch pushes the FakeTensor constructor into the meta converter itself, so that we first create a fake tensor, and then we do various convertibility bits to it to make it look right.

The two tricky bits:

- We need to have no_dispatch enabled when we allocate the initial meta tensor, or fake tensor gets mad at us for making a meta fake tensor. This necessitates the double-callback structure of the callback arguments: the meta construction happens *inside* the function so it is covered by no_dispatch.

- I can't store tensors for the storages anymore, as that will result in a leak. But we have untyped storage now, so I just store untyped storages instead.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

cc @jansel @mlazos @soumith @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @chunyuan-w @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87943

Approved by: https://github.com/eellison, https://github.com/albanD

Dynamo tests call a helper function in torch/_dynamo/test_case.py, which then calls run_tests in common_utils.py, so the test report path looked something like /opt/conda/lib/python3.10/site-packages/torch/_dynamo/test_case

* Instead of using the calling frame, use argv[0], which should be the invoking file

* Got rid of the functorch test name sanitization because those tests have been moved into the test folder

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87378

Approved by: https://github.com/huydhn

I noticed that a lot of bugs are being suppressed by torchdynamo's default error suppression, and worse yet, there's no way to unsuppress them. After discussion with voz and soumith, we decided that we will unify error suppression into a single option (suppress_errors) and default suppression to False.

If your model used to work and no longer works, try TORCHDYNAMO_SUPPRESS_ERRORS=1 to bring back the old suppression behavior.
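A sketch of what a single suppression switch means in practice. `env_flag` and `compile_with_fallback` are hypothetical helpers, and only the variable name `TORCHDYNAMO_SUPPRESS_ERRORS` comes from this PR. When suppression is on, a compiler error silently falls back to the original function. When it is off (the new default), the error propagates, so the bug is visible.

```python
import os
from typing import Callable


def env_flag(name: str, default: bool = False) -> bool:
    """Parse an on/off environment variable such as TORCHDYNAMO_SUPPRESS_ERRORS."""
    value = os.environ.get(name)
    if value is None:
        return default
    return value.strip().lower() in ("1", "true", "yes", "on")


def compile_with_fallback(fn: Callable, compile_fn: Callable[[Callable], Callable],
                          suppress_errors: bool) -> Callable:
    """Hypothetical sketch: try to compile fn; on error either re-raise or fall back."""
    try:
        return compile_fn(fn)
    except Exception:
        if not suppress_errors:
            raise  # default: surface the compiler bug
        return fn  # opt-in: silently keep running the original function
```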

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

cc @jansel @lezcano @fdrocha @mlazos @soumith @voznesenskym @yanboliang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87440

Approved by: https://github.com/voznesenskym, https://github.com/albanD

If an invalid platform is specified when disabling a test with the flaky test bot, CI crashes, skipping all tests that come after it.

This PR turns the crash into a console message instead. We don't error out here since that would affect random PRs. The actual error message should come from the bot that parses the original issue, so that it can respond on that issue directly.
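A sketch of "report, don't crash" for unrecognized platforms. `is_disabled_here` and its parameters are hypothetical. Two rules are assumptions: an empty platform list means disabled everywhere, and a list containing only invalid names disables nothing.

```python
import sys
from typing import Iterable


def is_disabled_here(test_name: str, platforms: Iterable[str],
                     current: Iterable[str], known: Iterable[str]) -> bool:
    """Hypothetical sketch: should a disabled test be skipped on this job?
    Unknown platform names are printed and ignored instead of raising."""
    platforms = list(platforms)
    known_set, current_set = set(known), set(current)
    invalid = [p for p in platforms if p not in known_set]
    if invalid:
        # Console message only; one malformed issue must not crash the test run.
        print(f"Ignoring unrecognized platform(s) {invalid} for disabled test {test_name}",
              file=sys.stderr)
    if not platforms:
        return True  # assumption: no platforms listed means disabled everywhere
    return any(p in current_set for p in platforms if p in known_set)
```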

Pull Request resolved: https://github.com/pytorch/pytorch/pull/86632

Approved by: https://github.com/huydhn

This achieves the same things as https://github.com/pytorch/pytorch/pull/85908 but using backends instead of kwargs (which unfortunately break TorchScript). This does mean we let go of numpy compatibility, BUT the win here is that users can control how opt_einsum is used!

The backend allows for... well, you should just read the docs:

```
.. attribute:: torch.backends.opteinsum.enabled

    A :class:`bool` that controls whether opt_einsum is enabled (on by default). If so,
    torch.einsum will use opt_einsum (https://optimized-einsum.readthedocs.io/en/stable/path_finding.html)
    to calculate an optimal path of contraction for faster performance.

.. attribute:: torch.backends.opteinsum.strategy

    A :class:`str` that specifies which strategies to try when `torch.backends.opteinsum.enabled` is True.
    By default, torch.einsum will try the "auto" strategy, but the "greedy" and "optimal" strategies are
    also supported. Note that the "optimal" strategy is factorial on the number of inputs as it tries all
    possible paths. See more details in opt_einsum's docs
    (https://optimized-einsum.readthedocs.io/en/stable/path_finding.html).
```
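Why the contraction path matters can be shown with the simplest einsum case, a matrix chain such as `ij,jk,kl->il`. The sketch below is illustrative only: `best_chain_order` is a hypothetical helper that uses the classic matrix-chain dynamic program. That is not how opt_einsum searches paths; its "optimal" strategy explores general contraction orders. Cost is counted as scalar multiplications, where an (m x n) @ (n x p) product costs m * n * p.

```python
from functools import lru_cache
from typing import List, Optional, Tuple


def best_chain_order(dims: List[int]) -> Tuple[int, str]:
    """Cheapest parenthesization of a matrix chain where matrix i is dims[i] x dims[i+1]."""

    @lru_cache(maxsize=None)
    def solve(i: int, j: int) -> Tuple[int, str]:
        if i == j:
            return 0, f"M{i}"
        best: Optional[Tuple[int, str]] = None
        for split in range(i, j):
            left_cost, left_expr = solve(i, split)
            right_cost, right_expr = solve(split + 1, j)
            # Multiply the (dims[i] x dims[split+1]) result by the (dims[split+1] x dims[j+1]) result.
            cost = left_cost + right_cost + dims[i] * dims[split + 1] * dims[j + 1]
            if best is None or cost < best[0]:
                best = (cost, f"({left_expr} {right_expr})")
        return best

    return solve(0, len(dims) - 2)


print(best_chain_order([50, 5, 100, 10]))  # (7500, '(M0 (M1 M2))')
```

For shapes 50x5, 5x100, and 100x10, plain left-to-right evaluation costs 25000 + 50000 = 75000 multiplications, ten times the best order.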

In trying (and failing) to land 85908, I discovered that jit script does NOT actually pull from Python's version of einsum (because it cannot support variadic args or kwargs). Thus, jitted einsum does not subscribe to the new opt_einsum path calculation. Overall, this is fine since jit script is getting deprecated, but where is the best place to document this?

## Test plan:

- added tests to CI

- locally tested that trying to set the strategy to something invalid will error properly

- locally tested that tests will pass even if you don't have opt-einsum

- locally tested that setting the strategy when opt-einsum is not there will also error properly

Pull Request resolved: https://github.com/pytorch/pytorch/pull/86219

Approved by: https://github.com/soulitzer, https://github.com/malfet

Fixes #85615

Currently, internal test discovery instantiates an `ArgumentParser` and adds numerous arguments to the internal parser:

f0570354dd/torch/testing/_internal/common_utils.py (L491-L500)

...

In this context, `argparse` will load [system args](b494f5935c/Lib/argparse.py (L1826-L1829)) from any external scripts invoking PyTorch testing (e.g. `vscode`).

The default behavior of `argparse` is to [allow abbreviations](b494f5935c/Lib/argparse.py (L2243-L2251)) of arguments, but when an `ArgumentParser` instance has many arguments and may be invoked in the context of potentially conflicting system args, the `ArgumentParser` should reduce the potential for conflicts by being instantiated with `allow_abbrev` set to `False`.

With the current default configuration, some abbreviations of the `ArgumentParser` long options conflict with system args used by `vscode` to invoke PyTorch test execution:

```bash
python ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/get_output_via_markers.py \
  ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/visualstudio_py_testlauncher.py \
  --us=./test --up=test_cuda.py --uvInt=2 -ttest_cuda.TestCuda.test_memory_allocation \
  --testFile=./test/test_cuda.py
>>>PYTHON-EXEC-OUTPUT
...
visualstudio_py_testlauncher.py: error: argument --use-pytest: ignored explicit argument './test'
```

The full relevant stack:

```
pytorch/test/jit/test_cuda.py, line 11, in <module>
    from torch.testing._internal.jit_utils import JitTestCase
pytorch/torch/testing/_internal/jit_utils.py, line 18, in <module>
    from torch.testing._internal.common_utils import IS_WINDOWS, \
pytorch/torch/testing/_internal/common_utils.py, line 518, in <module>
    args, remaining = parser.parse_known_args()
argparse.py, line 1853, in parse_known_args
    namespace, args = self._parse_known_args(args, namespace)
argparse.py, line 2062, in _parse_known_args
    start_index = consume_optional(start_index)
argparse.py, line 1983, in consume_optional
    msg = _('ignored explicit argument %r')
```

The `argparse` [condition](b494f5935c/Lib/argparse.py (L2250)) that generates the error in this case:

```python
>>> print(option_string)
--use-pytest
>>> print(option_prefix)
--us
>>> option_string.startswith(option_prefix)
True
```

It'd be nice if `vscode` didn't use two-letter options 🤦 but PyTorch testing shouldn't depend on such good behavior by invoking wrappers IMHO.

I haven't seen any current dependency on the abbreviated internal PyTorch `ArgumentParser` options so this change should only extend the usability of the (always improving!) PyTorch testing modules.

This simple PR avoids these conflicting options by instantiating the `ArgumentParser` with `allow_abbrev=False`.
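A self-contained reproduction of the conflict with the standard `argparse` module. `build_parser` is a hypothetical helper that defines a single `--use-pytest` flag, standing in for the many options of the real test parser. The foreign arguments are taken from the vscode command above.

```python
import argparse
from typing import List


def build_parser(allow_abbrev: bool) -> argparse.ArgumentParser:
    # One long option standing in for the many options the test parser defines.
    parser = argparse.ArgumentParser(add_help=False, allow_abbrev=allow_abbrev)
    parser.add_argument("--use-pytest", action="store_true")
    return parser


# Options injected by an external launcher (from the vscode command above).
foreign_argv: List[str] = ["--us=./test", "--up=test_cuda.py"]

# allow_abbrev=False: "--us" is not expanded, so unknown flags pass through untouched.
args, remaining = build_parser(allow_abbrev=False).parse_known_args(foreign_argv)
print(args.use_pytest, remaining)  # False ['--us=./test', '--up=test_cuda.py']

# allow_abbrev=True (the default): "--us" is taken as an abbreviation of
# "--use-pytest", which accepts no value, so argparse reports an error and exits.
try:
    build_parser(allow_abbrev=True).parse_known_args(foreign_argv)
except SystemExit as exc:
    print("exited with", exc.code)  # exited with 2
```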

Thanks to everyone in the community for their continued contributions to this incredibly valuable framework.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85616

Approved by: https://github.com/clee2000

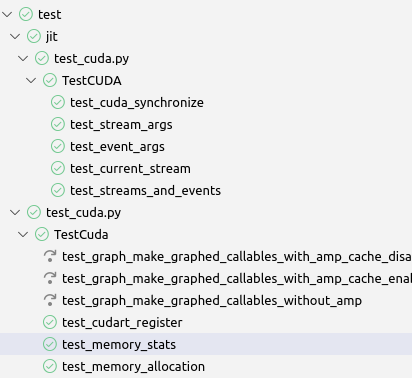

Fixes #85578

Currently, many test modules customize test loading and discovery via the [load_tests protocol](https://docs.python.org/3/library/unittest.html#load-tests-protocol). The salient custom behavior included (introduced with https://github.com/pytorch/pytorch/pull/13250) is to verify that the script discovering or executing the test is the same script in which the test is defined.

I believe this unnecessarily precludes the use of external tools to discover and execute tests (e.g. the vscode testing extension is widely used and IMHO quite convenient).

This simple PR retains the current restriction by default while offering users the option to disable the aforementioned check by setting an environment variable.

For example:

1. Set up a test env:

```bash
./tools/nightly.py checkout -b some_test_branch
conda activate pytorch-deps
conda install -c pytorch-nightly numpy expecttest mypy pytest hypothesis astunparse ninja pyyaml cmake cffi typing_extensions future six requests dataclasses -y
```

2. The default test collection behavior discovers 5 matching tests (only the tests within `test/jit/test_cuda.py`, because that file doesn't alter the default `load_tests` behavior):

```bash
python ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/get_output_via_markers.py \
  ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/testing_tools/unittest_discovery.py \
  ./test test_cuda.py | grep test_cuda | wc -l
5
```

3. Set the new env variable (in vscode, you would put it in the `.env` file):

```bash
export PYTORCH_DISABLE_RUNNING_SCRIPT_CHK=1
```

4. All of the desired tests are now discovered and can be executed successfully!

```bash
python ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/get_output_via_markers.py \
  ~/.vscode-server/extensions/ms-python.python-2022.14.0/pythonFiles/testing_tools/unittest_discovery.py \
  ./test test_cuda.py | grep test_cuda | wc -l
175
```
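A sketch of the opt-out, assuming the check compares each discovered test's defining file against the running script. `check_running_script`, `iter_cases`, and the `defining_file` callback are hypothetical; only the variable name `PYTORCH_DISABLE_RUNNING_SCRIPT_CHK` comes from this PR. The nested `TestSuite` is returned as-is rather than flattened.

```python
import os
import unittest
from typing import Callable, Iterator


def iter_cases(suite: unittest.TestSuite) -> Iterator[unittest.TestCase]:
    """Yield every TestCase in a (possibly nested) suite without flattening it."""
    for item in suite:
        if isinstance(item, unittest.TestSuite):
            yield from iter_cases(item)
        else:
            yield item


def check_running_script(tests: unittest.TestSuite, running_script: str,
                         defining_file: Callable[[unittest.TestCase], str]) -> unittest.TestSuite:
    """Hypothetical sketch: reject tests not defined in the running script, unless
    PYTORCH_DISABLE_RUNNING_SCRIPT_CHK=1. The suite structure is preserved."""
    if os.environ.get("PYTORCH_DISABLE_RUNNING_SCRIPT_CHK") != "1":
        for case in iter_cases(tests):
            if defining_file(case) != running_script:
                raise RuntimeError(f"{case.id()} is not defined in {running_script}")
    return tests
```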

A potentially relevant note: the previous behavior of the custom `load_tests` flattened all the `TestSuite`s in each test module:

4c01c51266/torch/testing/_internal/common_utils.py (L3260-L3262)

I haven't been able to find any code that depends upon this behavior but I think retaining the `TestSuite` structure is preferable from a user perspective and likely safe (`TestSuite`s [can be executed](https://docs.python.org/3/library/unittest.html#load-tests-protocol:~:text=test%20runner%20to-,allow%20it%20to%20be%20run,-as%20any%20other) just like `TestCase`s and this is the structure [recommended](https://docs.python.org/3/library/unittest.html#load-tests-protocol:~:text=provides%20a%20mechanism%20for%20this%3A%20the%20test%20suite) by the standard python documentation).

If necessary, I can change this PR to continue flattening each test module's `TestSuite`s. However, since I expect external tools that use the `unittest` `discover` API to usually assume that discovered `TestSuite`s retain their structure (e.g. [vscode](192c3eabd8/pythonFiles/visualstudio_py_testlauncher.py (L336-L349))), retaining the `TestSuite` flattening behavior would likely require customizing those external tools for PyTorch.

Thanks to everyone in the community for the continued contributions to this incredibly valuable framework!

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85584

Approved by: https://github.com/huydhn

run tests in parallel at the test file granularity

Runs 3 files in parallel using a multiprocessing pool; each file's output goes to a file, which is printed when that test file finishes. Some tests cannot be run in parallel (usually due to lack of memory), so we run those afterwards. Sharding is changed to try to put large files together with other large files on the same shard.

test_ops* gets a custom handler because it is simply too big (2hrs on Windows) and linalg_cholesky fails (I would really like a solution to this if possible, but until then we use the custom handler).

Reduces CUDA test time by a lot and total Windows test time by ~1hr.
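A sketch of file-level parallelism with buffered output. It swaps in `multiprocessing.pool.ThreadPool`, which has the same `Pool` API but uses threads; that is enough here because each worker only waits on a child interpreter. `run_test_file` and `run_all` are hypothetical names, not the `run_test.py` code.

```python
import subprocess
import sys
import tempfile
from multiprocessing.pool import ThreadPool
from typing import List, Tuple


def run_test_file(test_file: str) -> Tuple[str, int, str]:
    """Run one test file in its own interpreter, capturing its output in a temp file."""
    with tempfile.TemporaryFile(mode="w+") as log:
        proc = subprocess.run([sys.executable, test_file],
                              stdout=log, stderr=subprocess.STDOUT)
        log.seek(0)
        return test_file, proc.returncode, log.read()


def run_all(parallel_files: List[str], serial_files: List[str],
            procs: int = 3) -> List[Tuple[str, int]]:
    results: List[Tuple[str, int]] = []
    with ThreadPool(procs) as pool:
        # Each file's log is printed in one piece when that file finishes,
        # so output from files running at the same time never interleaves.
        for name, code, output in pool.imap_unordered(run_test_file, parallel_files):
            print(output, end="")
            results.append((name, code))
    # Files that cannot share the machine (e.g. memory-hungry ones) run afterwards, one at a time.
    for test_file in serial_files:
        name, code, output = run_test_file(test_file)
        print(output, end="")
        results.append((name, code))
    return results
```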

Ref. https://github.com/pytorch/pytorch/issues/82894

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84961

Approved by: https://github.com/huydhn

Follow up:

- ~Remove non-float dtypes from allow-list for gradients~

- ~Map dtypes to short-hand so there aren't so many lines, i.e. float16 should be f16.~

- ~There were a lot of linting issues that flake8 wouldn't format for me, so I reformatted with black. This makes the diff a little trickier to parse.~

Observations:

- there are entries in the allow-list that weren't there before

- some forward tests that were previously passing now fail with requires_grad=True

- Because the allow list does not know about variants, a special skip was added for that in the block list

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84242

Approved by: https://github.com/kulinseth, https://github.com/malfet

This reverts commit 1187dedd33.

Reverted https://github.com/pytorch/pytorch/pull/83436 on behalf of https://github.com/huydhn due to: the previous change breaks internal builds (D38714900) and other OSS tests. The bug has been fixed by this PR, but we decided that it is safer to revert both, merge them into one PR, and then reland the fix.

This is a new version of #15648 based on the latest master branch.

Unlike the previous PR where I fixed a lot of the doctests in addition to integrating xdoctest, I'm going to reduce the scope here. I'm simply going to integrate xdoctest, and then I'm going to mark all of the failing tests as "SKIP". This will let xdoctest run on the dashboards, provide some value, and still let the dashboards pass. I'll leave fixing the doctests themselves to another PR.

In my initial commit, I do the bare minimum to get something running with failing dashboards. The few tests that I marked as skip are causing segfaults. Running xdoctest results in 293 failed, 201 passed tests. The next commits will be to disable those tests. (unfortunately I don't have a tool that will insert the `#xdoctest: +SKIP` directive over every failing test, so I'm going to do this mostly manually.)

Fixes https://github.com/pytorch/pytorch/issues/71105

@ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82797

Approved by: https://github.com/ezyang