Summary:

Minor doc fix clarifying that the input data is rounded, not truncated.

CC zasdfgbnm ngimel

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49625

Reviewed By: mruberry

Differential Revision: D25668244

Pulled By: ngimel

fbshipit-source-id: ac97e41e0ca296276544f9e9f85b2cf1790d9985

Summary:

Related https://github.com/pytorch/pytorch/issues/38349

Implement NumPy-like function `torch.broadcast_to` to broadcast the input tensor to a new shape.
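As a rough illustration of the NumPy-style rule this function follows, here is a pure-Python sketch that only checks whether one shape can be broadcast to another. The helper name `can_broadcast_to` is hypothetical and is not part of PyTorch; it operates on plain shape tuples rather than tensors.

```python
def can_broadcast_to(shape, target):
    """Return True if `shape` can be broadcast to `target` (NumPy-style rules).

    Hypothetical helper for illustration only; works on shape tuples.
    """
    if len(shape) > len(target):
        return False  # broadcasting never removes dimensions
    # Align trailing dimensions; missing leading dims of `shape` count as size 1.
    padded = (1,) * (len(target) - len(shape)) + tuple(shape)
    # Each aligned dimension must either match the target or be 1.
    return all(s == t or s == 1 for s, t in zip(padded, target))


print(can_broadcast_to((3,), (2, 3)))    # trailing dims match
print(can_broadcast_to((2, 1), (2, 3)))  # size-1 dim can be stretched
print(can_broadcast_to((2,), (2, 3)))    # trailing dims 2 vs 3 conflict
```

The check is one-directional: only the input may be stretched, the target shape is fixed.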

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48997

Reviewed By: anjali411, ngimel

Differential Revision: D25663937

Pulled By: mruberry

fbshipit-source-id: 0415c03f92f02684983f412666d0a44515b99373

Summary:

This PR adds `torch.linalg.solve`.

`linalg_solve_out` uses in-place operations on the provided result tensor.

I modified `apply_solve` to accept a tensor of ints instead of a `std::vector`, so that we can write a function similar to `linalg_solve_out` but without the error checks and device memory synchronization.

In comparison to `torch.solve` this routine accepts 1-dimensional tensors and batches of 1-dim tensors for the right-hand-side term. `torch.solve` requires it to be at least 2-dimensional.

Ref. https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48456

Reviewed By: izdeby

Differential Revision: D25562222

Pulled By: mruberry

fbshipit-source-id: a9355c029e2442c2e448b6309511919631f9e43b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48280

Adding new API for the kineto profiler that supports an enable predicate function

Test Plan: unit test

Reviewed By: ngimel

Differential Revision: D25142220

Pulled By: ilia-cher

fbshipit-source-id: c57fa42855895075328733d7379eaf3dc1743d14

Summary:

BC-breaking note:

This PR changes the behavior of the any and all functions to always return a bool tensor. Previously these functions were only defined on bool and uint8 tensors, and when called on uint8 tensors they would also return a uint8 tensor. (When called on a bool tensor they would return a bool tensor.)

PR summary:

https://github.com/pytorch/pytorch/pull/44790#issuecomment-725596687

Fixes 2 and 3

Also Fixes https://github.com/pytorch/pytorch/issues/48352

Changes

* Output dtype is always `bool` (consistent with numpy) **BC Breaking** (previously it matched the input dtype)

* Uses vectorized version for all dtypes on CPU

* Enables test for complex

* Update doc for `torch.all` and `torch.any`

TODO

* [x] Update docs

* [x] Benchmark

* [x] Raise issue on XLA

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47878

Reviewed By: H-Huang

Differential Revision: D25421263

Pulled By: mruberry

fbshipit-source-id: c6c681ef94004d2bcc787be61a72aa059b333e69

Summary:

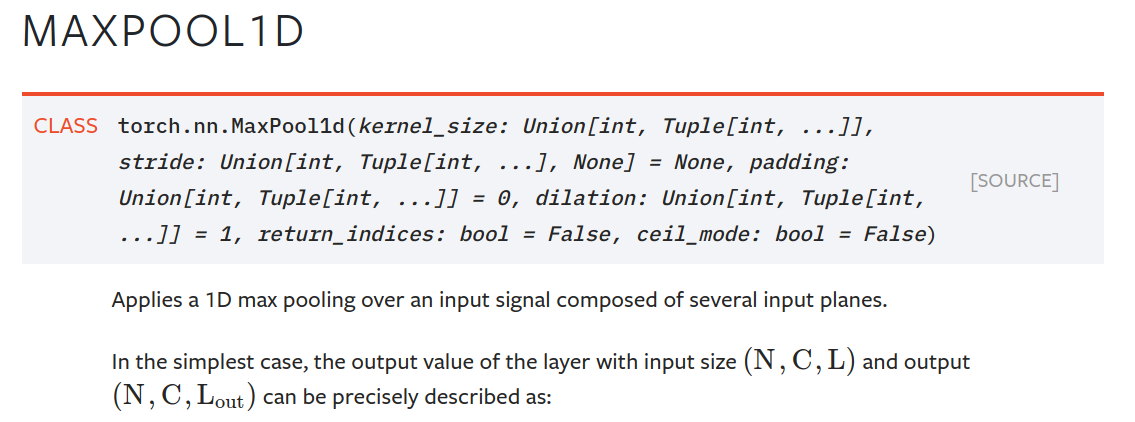

One unintended side effect of moving type annotations inline was that those annotations now show up in signatures in the html docs. This is more confusing and ugly than it is helpful. An example for `MaxPool1d`:

This makes the docs readable again. The parameter descriptions often already have type information, and there will be many cases where the type annotations will make little sense to the user (e.g., returning typevar T, long unions).

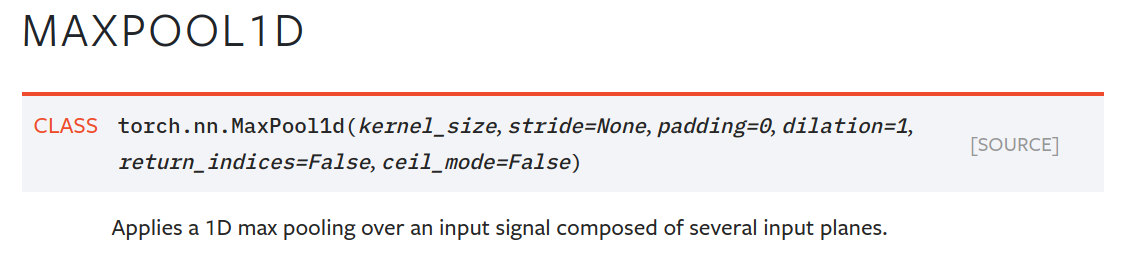

Change to `MaxPool1d` example:

Note that once we can build the docs with Sphinx 3 (which is far off right now), we have two options to make better use of the extra type info in the annotations (some of which is useful):

- `autodoc_type_aliases`, so we can leave things like large unions unevaluated to keep things readable

- `autodoc_typehints = 'description'`, which moves the annotations into the parameter descriptions.
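For reference, a hypothetical `conf.py` fragment showing what the two options above might look like once a suitable Sphinx 3 release is in use. The alias name and module path are placeholders and are not taken from the PyTorch docs configuration.

```python
# Hypothetical fragment of a Sphinx conf.py; assumes a Sphinx 3 release
# that supports both settings below.
extensions = ["sphinx.ext.autodoc"]

# Option 1: keep selected aliases unevaluated so that large unions are
# shown by name. "ExampleAlias" and its module path are placeholders.
autodoc_type_aliases = {
    "ExampleAlias": "example_package.typing.ExampleAlias",
}

# Option 2: move annotations out of the signature and into the
# parameter descriptions.
autodoc_typehints = "description"
```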

Another, more labour-intensive option, is what vadimkantorov suggested in gh-44964: show annotations on hover. Could also be done with some foldout, or other optional way to make things visible. Would be nice, but requires a Sphinx contribution or plugin first.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49294

Reviewed By: glaringlee

Differential Revision: D25535272

Pulled By: ezyang

fbshipit-source-id: 5017abfea941a7ae8c4595a0d2bdf8ae8965f0c4

Summary:

The args parameter of ONNX export is changed to better support optional arguments such that args is represented as:

args (tuple of arguments or torch.Tensor, a dictionary consisting of named arguments (optional)):

a dictionary to specify the input to the corresponding named parameter:

- KEY: str, named parameter

- VALUE: corresponding input
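A minimal sketch of how such an `args` value could be split into positional and named inputs. It assumes the dictionary, when present, is the last element of the tuple. The helper `split_export_args` is hypothetical and is not the exporter's actual code; strings stand in for tensors.

```python
def split_export_args(args):
    """Split `args` into (positional, named) inputs.

    Hypothetical helper: assumes a trailing dict, if any, holds the
    named inputs (KEY: parameter name, VALUE: corresponding input).
    """
    if not isinstance(args, tuple):
        args = (args,)  # a single input is treated as a one-element tuple
    if args and isinstance(args[-1], dict):
        return args[:-1], dict(args[-1])
    return args, {}


# Strings are placeholders for tensors in this sketch.
positional, named = split_export_args(("x_input", {"y": "y_input"}))
print(positional, named)
```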

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47367

Reviewed By: H-Huang

Differential Revision: D25432691

Pulled By: bzinodev

fbshipit-source-id: 9d4cba73cbf7bef256351f181f9ac5434b77eee8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48909

Adds these new APIs to the documentation

ghstack-source-id: 117965961

Test Plan: CI

Reviewed By: mrshenli

Differential Revision: D25363279

fbshipit-source-id: af6889d377f7b5f50a1a77a36ab2f700e5040150

Summary:

Ref https://github.com/pytorch/pytorch/issues/42175

This removes the 4 deprecated spectral functions: `torch.{fft,rfft,ifft,irfft}`. `torch.fft` is also now imported by default.

The actual `at::native` functions are still used in `torch.stft`, so they can't be fully removed yet, but they will be once https://github.com/pytorch/pytorch/issues/47601 has been merged.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48594

Reviewed By: heitorschueroff

Differential Revision: D25298929

Pulled By: mruberry

fbshipit-source-id: e36737fe8192fcd16f7e6310f8b49de478e63bf0

Summary:

Fixes https://github.com/pytorch/pytorch/issues/43837

This adds a `torch.broadcast_shapes()` function similar to Pyro's [broadcast_shape()](7c2c22c10d/pyro/distributions/util.py (L151)) and JAX's [lax.broadcast_shapes()](https://jax.readthedocs.io/en/test-docs/_modules/jax/lax/lax.html). This helper is useful e.g. in multivariate distributions that are parameterized by multiple tensors and we want to `torch.broadcast_tensors()` but the parameter tensors have different "event shape" (e.g. mean vectors and covariance matrices). This helper is already heavily used in Pyro's distribution codebase, and we would like to start using it in `torch.distributions`.

- [x] refactor `MultivariateNormal`'s expansion logic to use `torch.broadcast_shapes()`

- [x] add unit tests for `torch.broadcast_shapes()`

- [x] add docs
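To illustrate the kind of computation such a helper performs, here is a pure-Python sketch of NumPy-style shape broadcasting over plain tuples. The name `broadcast_shapes_sketch` is hypothetical and this is not the implementation added in this PR.

```python
def broadcast_shapes_sketch(*shapes):
    """Compute the broadcast of several shapes (NumPy-style rules).

    Illustrative only; operates on tuples of ints, not tensors.
    """
    ndim = max((len(s) for s in shapes), default=0)
    result = [1] * ndim
    for shape in shapes:
        # Compare dimensions from the trailing end, as broadcasting does.
        for i in range(1, len(shape) + 1):
            dim = shape[-i]
            if dim == 1 or dim == result[-i]:
                continue  # compatible, nothing to update
            if result[-i] != 1:
                raise ValueError(f"shapes {shapes} are not broadcastable")
            result[-i] = dim
    return tuple(result)


# Example in the spirit of the use case above: batch shapes of a mean
# vector of shape (5, 1, 3) and a covariance matrix of shape (4, 3, 3).
loc_batch = (5, 1, 3)[:-1]   # drop the event dimension of the mean
cov_batch = (4, 3, 3)[:-2]   # drop the two matrix dimensions
print(broadcast_shapes_sketch(loc_batch, cov_batch))
```

Working on shapes alone avoids materializing expanded tensors just to learn the resulting batch shape.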

cc neerajprad

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43935

Reviewed By: bdhirsh

Differential Revision: D25275213

Pulled By: neerajprad

fbshipit-source-id: 1011fdd597d0a7a4ef744ebc359bbb3c3be2aadc

Summary:

This PR adds `torch.linalg.matrix_rank`.

Changes compared to the original `torch.matrix_rank`:

- input with the complex dtype is supported

- batched input is supported

- "symmetric" kwarg renamed to "hermitian"

Should I update the documentation for `torch.matrix_rank`?

For input with no elements (for example a 0×0 matrix), the current implementation diverges from NumPy. NumPy fails because the max is undefined for such input; here I chose to return an appropriately sized tensor of zeros. I think that is the mathematically correct thing to do.

Ref https://github.com/pytorch/pytorch/issues/42666.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48206

Reviewed By: albanD

Differential Revision: D25211965

Pulled By: mruberry

fbshipit-source-id: ae87227150ab2cffa07f37b4a3ab228788701837

Summary:

The approach is to simply reuse `torch.repeat`, adding one more piece of functionality for tile: prepend 1's to the reps array if the tensor has more dimensions than the reps given as input. Thus, for a tensor of shape (64, 3, 24, 24), reps of (2, 2) will become (1, 1, 2, 2), which is what NumPy does.
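The reps handling described above can be sketched in pure Python on shape tuples. The helper names are hypothetical and this is not the code from the PR. The case where reps is longer than the shape is an assumption modeled on NumPy's `tile`.

```python
def normalize_reps(ndim, reps):
    """Prepend 1's to `reps` so it has at least `ndim` entries."""
    reps = tuple(reps)
    return (1,) * max(ndim - len(reps), 0) + reps


def tiled_shape(shape, reps):
    """Shape of the result of tiling a tensor of `shape` by `reps`."""
    reps = normalize_reps(len(shape), reps)
    # Assumption (as in NumPy's tile): if reps is longer than shape,
    # the shape is padded with leading 1's instead.
    shape = (1,) * (len(reps) - len(shape)) + tuple(shape)
    return tuple(s * r for s, r in zip(shape, reps))


print(normalize_reps(4, (2, 2)))
print(tiled_shape((64, 3, 24, 24), (2, 2)))
```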

I've encountered some instability with the test on my end, where I could get a random failure of the test (sometimes due to a random value of `self.dim()`, and sometimes due to segfaults). I'd appreciate any feedback on the test or an explanation for this instability so I can fix this.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47974

Reviewed By: ngimel

Differential Revision: D25148963

Pulled By: mruberry

fbshipit-source-id: bf63b72c6fe3d3998a682822e669666f7cc97c58

Summary:

This PR adds `torch.linalg.eigh`, and `torch.linalg.eigvalsh` for NumPy compatibility.

The current `torch.symeig` uses (on CPU) a different LAPACK routine than NumPy (`syev` vs `syevd`). Even though it shouldn't matter in practice, `torch.linalg.eigh` uses `syevd` (as NumPy does).

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45526

Reviewed By: gchanan

Differential Revision: D25022659

Pulled By: mruberry

fbshipit-source-id: 3676b77a121c4b5abdb712ad06702ac4944e900a

Summary:

Adds ldexp operator for https://github.com/pytorch/pytorch/issues/38349

I'm not entirely sure the changes to `NamedRegistrations.cpp` were needed but I saw other operators in there so I added it.

Normally the ldexp operator is used along with frexp to construct and deconstruct floating point values. This is useful for performing operations on either the mantissa or the exponent portion of floating point values.
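The relationship between the two operations can be shown with Python's standard `math` module, used here as a scalar stand-in for the tensor operator added in this PR.

```python
import math

x = 6.5

# frexp splits x into a mantissa and an integer exponent such that
# x == mantissa * 2**exponent, with 0.5 <= abs(mantissa) < 1.
mantissa, exponent = math.frexp(x)

# ldexp is the inverse: it rebuilds the value from the two parts.
rebuilt = math.ldexp(mantissa, exponent)

# Operating on the exponent alone: adding 1 doubles the value.
doubled = math.ldexp(mantissa, exponent + 1)

print(mantissa, exponent, rebuilt, doubled)
```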

Sleef, std math.h, and cuda support both ldexp and frexp but not for all data types. I wasn't able to figure out how to get the iterators to play nicely with a vectorized kernel so I have left this with just the normal CPU kernel for now.

This is the first operator I'm adding so please review with an eye for errors.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45370

Reviewed By: mruberry

Differential Revision: D24333516

Pulled By: ranman

fbshipit-source-id: 2df78088f00aa9789aae1124eda399771e120d3f

Summary:

xref gh-46927 to the 1.7 release branch

This backports a fix to the script to push docs to pytorch/pytorch.github.io. Specifically, it pushes to the correct directory when a tag is created here. This issue became apparent in the 1.7 release cycle and should be backported to here.

Along the way, fix the canonical link to the pytorch/audio documentation now that they use subdirectories for the versions, xref pytorch/audio#992. This saves a redirect.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47349

Reviewed By: zhangguanheng66

Differential Revision: D25073752

Pulled By: seemethere

fbshipit-source-id: c778c94a05f1c3e916217bb184f69107e7d2c098

Summary:

Reference https://github.com/pytorch/pytorch/issues/38349

Delegates to `torch.transpose` (not sure what is the best way to alias)

TODO:

* [x] Add test

* [x] Add documentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46041

Reviewed By: gchanan

Differential Revision: D25022816

Pulled By: mruberry

fbshipit-source-id: c80223d081cef84f523ef9b23fbedeb2f8c1efc5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48038

nn.ReLU works for both float and quantized input, so we don't want to define an nn.quantized.ReLU that does the same thing as nn.ReLU; similarly for nn.quantized.functional.relu.

This also removes the numerical inconsistency for models that quantize nn.ReLU independently in QAT mode.

Test Plan:

Imported from OSS

Reviewed By: vkuzo

Differential Revision: D25000462

fbshipit-source-id: e3609a3ae4a3476a42f61276619033054194a0d2