Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48620

In preparation for storing bare function pointer (8 bytes) instead of std::function (32 bytes).
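A minimal sketch of the size difference that motivates this change. The callback signature is hypothetical, and the exact sizes are implementation-defined (8 and 32 bytes are what a typical 64-bit libstdc++ build reports):

```cpp
#include <cstddef>
#include <functional>

// Hypothetical callback signature, used only for this size comparison.
using RawCallback = void (*)(int);
using WrappedCallback = std::function<void(int)>;

// Sizes are implementation-defined; they are exposed as constants so a
// build can inspect or assert on them.
constexpr std::size_t kRawCallbackSize = sizeof(RawCallback);
constexpr std::size_t kWrappedCallbackSize = sizeof(WrappedCallback);

static_assert(kRawCallbackSize <= kWrappedCallbackSize,
              "a bare function pointer should not be larger than std::function");
```

The trade-off is that a bare function pointer cannot carry captured state, so any context has to be passed explicitly.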

ghstack-source-id: 118568242

Test Plan: CI

Reviewed By: ezyang

Differential Revision: D25132183

fbshipit-source-id: 3790cfb5d98479a46cf665b14eb0041a872c13da

Summary:

### Java, CPP

Introducing an additional parameter `device` to LiteModuleLoader to specify the device on which `forward` will run.

On the Java side this is an enum that contains CPU and VULKAN; it is passed as a jint to the JNI side and stored as a member field at the same level as the module.

In pytorch_jni_lite.cpp, all input tensors are converted to Vulkan.

In pytorch_jni_common.cpp (which also goes to OSS), if the result Tensor is not a CPU tensor, `cpu()` is called on it. (At the moment the only non-CPU case is Vulkan.)

### BUCK

Introducing `pytorch_jni_lite_with_vulkan` target, that depends on `pytorch_jni_lite_with_vulkan` and adds `aten_vulkan`

In that case `pytorch_jni_lite_with_vulkan` can be used along with `pytorch_jni_lite_with_vulkan`.

Test Plan:

After the following diff with aidemo segmentation:

```
buck install -r aidemos-android
```


Reviewed By: dreiss

Differential Revision: D23198335

fbshipit-source-id: 95328924e398901d76718c4d828f96e112dfa1b0

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44202

In preparation for changing mobile run_method() to be variadic, this diff:

* Implements get_method() for mobile Module, which is similar to find_method but expects the method to exist.

* Replaces calls to the current nonvariadic implementation of run_method() by calling get_method() and then invoking the operator() overload on Method objects.
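The difference between the two lookups can be sketched as follows. `Module` and `Method` here are simplified, hypothetical stand-ins (methods take and return an `int`), not the real mobile classes:

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <optional>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical stand-in for a mobile Method: callable via operator().
struct Method {
  std::function<int(int)> fn;
  int operator()(int x) const { return fn(x); }
};

// Hypothetical stand-in for a mobile Module.
class Module {
 public:
  void define(const std::string& name, std::function<int(int)> fn) {
    methods_[name] = Method{std::move(fn)};
  }

  // Returns an empty optional when the method is missing.
  std::optional<Method> find_method(const std::string& name) const {
    auto it = methods_.find(name);
    if (it == methods_.end()) return std::nullopt;
    return it->second;
  }

  // Expects the method to exist and throws otherwise.
  Method get_method(const std::string& name) const {
    if (auto m = find_method(name)) return *m;
    throw std::runtime_error("Method '" + name + "' is not defined.");
  }

 private:
  std::map<std::string, Method> methods_;
};
```

With this shape, a call site that used to go through `run_method("forward", x)` becomes `module.get_method("forward")(x)`.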

ghstack-source-id: 111848222

Test Plan: CI, and all the unit tests which currently contain run_method that are being changed.

Reviewed By: iseeyuan

Differential Revision: D23436351

fbshipit-source-id: 4655ed7182d8b6f111645d69798465879b67a577

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40785

The main goal of this change is to support creating Tensors specifying blob in NHWC (ChannelsLast) format.

ChannelsLast is supported only for 4-dim tensors. This is enforced on the LibTorch side; I have not added asserts on the Java side, both to avoid double asserts and in case this limitation changes in the future.

Additional changes in `aten/src/ATen/templates/Functions.h`:

`from_blob` first creates an `at::empty({0}, options)` tensor and afterwards sets its Storage with sizes and strides.

But as ChannelsLast is only for 4-dim tensors, that creation fails, as dim==1.

I've added `zero_sizes()` function that returns `{0, 0, 0, 0}` for ChannelsLast and ChannelsLast3d.
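For reference, a small sketch of what the NHWC (ChannelsLast) layout means for strides. The helper name is hypothetical; sizes are given in logical NCHW order, while the blob is stored with channels innermost:

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Strides (in elements) for a 4-dim tensor whose sizes are given in logical
// NCHW order but whose memory is laid out as NHWC (channels last).
// Hypothetical helper, for illustration only.
constexpr std::array<std::int64_t, 4> channels_last_strides(
    const std::array<std::int64_t, 4>& sizes) {
  const std::int64_t C = sizes[1];
  const std::int64_t H = sizes[2];
  const std::int64_t W = sizes[3];
  // N steps over a whole image, C is innermost, H steps over a row of
  // pixels, W steps over one pixel (C values).
  return {H * W * C, 1, W * C, C};
}
```

For sizes `{2, 3, 4, 5}` this gives strides `{60, 1, 15, 3}`, compared with `{60, 20, 5, 1}` for the default contiguous layout.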

Test Plan: Imported from OSS

Reviewed By: dreiss

Differential Revision: D22396244

Pulled By: IvanKobzarev

fbshipit-source-id: 02582d748a554e0f859aefe71cd2c1e321fb8979

Summary:

These were added accidentally (probably by an IDE) during a refactor.

These files have always been Open Source.

Test Plan: CI

Reviewed By: xcheng16

Differential Revision: D23250761

fbshipit-source-id: 4974430c0e28dd3269424d38edb36f4f71508157

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40199

Mobile custom selective build has already been covered by `test/mobile/custom_build/build.sh`.

It builds a CLI binary with host-toolchain and runs on host machine to check correctness of the result.

But that custom build test doesn't cover the android/gradle build part.

And we cannot use it to measure and track the in-APK size of custom build library.

So this PR adds the selective build test coverage for android NDK build.

Also integrate with the CI to upload the custom build size to scuba.

TODO:

Ideally it should build android/test_app and measure the in-APK size. But the test_app hasn't been covered by any CI yet and is currently broken, so build & measure AAR instead (which can be inaccurate as we plan to pack C++ header files into AAR soon).

Sample result: https://fburl.com/scuba/pytorch_binary_size/skxwb1gh

```
+---------------------+-------------+-------------------+-----------+----------+
| build_mode          | arch        | lib               | Build Num | Size     |
+---------------------+-------------+-------------------+-----------+----------+
| custom-build-single | armeabi-v7a | libpytorch_jni.so |   5901579 | 3.68 MiB |
| prebuild            | armeabi-v7a | libpytorch_jni.so |   5901014 | 6.23 MiB |
| prebuild            | x86_64      | libpytorch_jni.so |   5901014 | 7.67 MiB |
+---------------------+-------------+-------------------+-----------+----------+
```

Test Plan: Imported from OSS

Differential Revision: D22111115

Pulled By: ljk53

fbshipit-source-id: 11d24efbc49a85f851ecd0e481d14123f405b3a9

Summary:

1. Modularize some bzl files to break circular buck load

2. Use query-based on instrumentation_tests

(Note: this ignores all push blocking failures!)

Test Plan: CI

Reviewed By: kwanmacher

Differential Revision: D22188728

fbshipit-source-id: affbabd333c51c8b1549af6602c6bb79fabb7236

Summary:

edit: apparently we hardcode a lot more versions than I would've anticipated.

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40519

Differential Revision: D22221280

Pulled By: seemethere

fbshipit-source-id: ba15a910a6755ec08c10f7783ed72b1e06e6b570

Summary:

This re-applies D21232894 (b9d3869df3) and D22162524, plus updates jni_deps in a few places to avoid breaking host JNI tests.

Test Plan: `buck test @//fbandroid/mode/server //fbandroid/instrumentation_tests/com/facebook/caffe2:host-test`

Reviewed By: xcheng16

Differential Revision: D22199952

fbshipit-source-id: df13eef39c01738637ae8cf7f581d6ccc88d37d5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40442

Problem:

Nightly builds do not include libtorch headers, unlike local builds.

The reason is that the path on docker images differs from the local path when building with `scripts/build_pytorch_android.sh`

Solution:

Introducing a gradle property to specify the path, and setting it in the gradle build job and the snapshots publishing job, which run on the same docker image.

Test:

ci-all jobs check https://github.com/pytorch/pytorch/pull/40443

checking that gradle build will result with headers inside aar

Test Plan: Imported from OSS

Differential Revision: D22190955

Pulled By: IvanKobzarev

fbshipit-source-id: 9379458d8ab024ee991ca205a573c21d649e5f8a

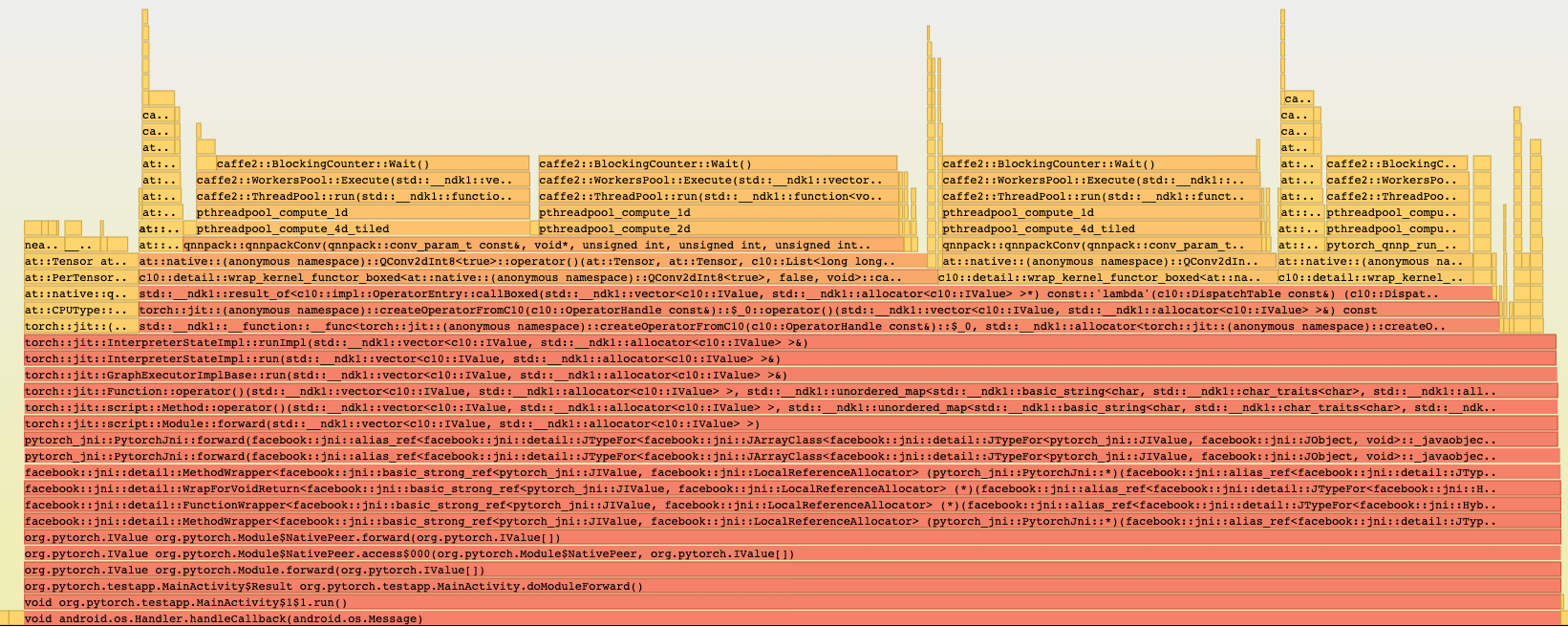

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37243

*** Why ***

As it stands, we have two thread pool solutions concurrently in use in PyTorch mobile: (1) the open source pthreadpool library under third_party, and (2) Caffe2's implementation of pthreadpool under caffe2/utils/threadpool. Since the primary use-case of the latter has been to act as a drop-in replacement for the third party version so as to enable integration and usage from within NNPACK and QNNPACK, Caffe2's implementation is intentionally written to the exact same interface as the third party version.

The original argument in favor of C2's implementation has been improved performance as a result of using spin locks, as opposed to relinquishing the thread's time slot and putting it to sleep - a less expensive operation up to a point. That seems to have given C2's implementation the upper hand in performance, hence justifying the added maintenance complexity, until the third party version improved in parallel surpassing the efficiency of C2's implementation as I have verified in benchmarks. With that advantage gone, there is no reason to continue using C2's implementation in PyTorch mobile either from the perspective of performance or code hygiene. As a matter of fact, there is considerable performance benefit to be had as a result of using the third party version as it currently stands.

This is a tricky change though, mainly because in order to avoid potential performance regressions, of which I have witnessed none but just in abundance of caution, we have decided to continue using the internal C2's implementation whenever building for Caffe2. Again, this is mainly to avoid potential performance regressions in production C2 use cases even if doing so results in reduced performance as far as I can tell.

So to summarize, today, and as it currently stands, we are using C2's implementation for (1) NNPACK, (2) PyTorch QNNPACK, and (3) ATen parallel_for on mobile builds, while using the third party version of pthreadpool for XNNPACK as XNNPACK does not provide any build options to link against an external implementation unlike NNPACK and QNNPACK do.

The goal of this PR then, is to unify all usage on mobile to the third party implementation both for improved performance and better code hygiene. This applies to PyTorch's use of NNPACK, QNNPACK, XNNPACK, and mobile's implementation of ATen parallel_for, all getting routed to the exact same third party implementation in this PR.

Considering that NNPACK, QNNPACK, and XNNPACK are not mobile specific, these benefits carry over to non-mobile builds of PyTorch (but not Caffe2) as well. The implementation of ATen parallel_for on non-mobile builds remains unchanged.

*** How ***

This is where things get tricky.

A good deal of the build system complexity in this PR arises from our desire to maintain C2's implementation intact for C2's use.

pthreadpool is a C library with no concept of namespaces, which means two copies of the library cannot exist in the same binary or symbol collision will occur violating ODR. This means that somehow, and based on some condition, we must decide on the choice of a pthreadpool implementation. In practice, this has become more complicated as a result of all the possible combinations that USE_NNPACK, USE_QNNPACK, USE_PYTORCH_QNNPACK, USE_XNNPACK, USE_SYSTEM_XNNPACK, USE_SYSTEM_PTHREADPOOL and other variables can result in. Having said that, I have done my best in this PR to surgically cut through this complexity in a way that minimizes the side effects, considering the significance of the performance we are leaving on the table, yet, as a result of this combinatorial explosion explained above I cannot guarantee that every single combination will work as expected on the first try. I am heavily relying on CI to find any issues as local testing can only go that far.

Having said that, this PR provides a simple non mobile-specific C++ thread pool implementation on top of pthreadpool, namely caffe2::PThreadPool that automatically routes to C2's implementation or the third party version depending on the build configuration. This simplifies the logic at the cost of pushing the complexity to the build scripts. From there on, this thread pool is used in aten parallel_for, and NNPACK and family, again, routing all usage of threading to C2 or third party pthreadpool depending on the build configuration.

When it is all said and done, the layering will look like this:

a) aten::parallel_for, uses

b) caffe2::PThreadPool, which uses

c) pthreadpool C API, which delegates to

c-1) third_party implementation of pthreadpool if that's what the build has requested, and the rabbit hole ends here.

c-2) C2's implementation of pthreadpool if that's what the build has requested, which itself delegates to

c-2-1) caffe2::ThreadPool, and the rabbit hole ends here.

NNPACK, and (PyTorch) QNNPACK directly hook into (c). They never go through (b).
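A rough sketch of the (a) -> (b) -> (c) layering described above. Every name below is hypothetical, the build flag is made up for illustration, and the "pool" runs serially so the example stays self-contained; a real build would link exactly one pthreadpool implementation behind the C-style entry point:

```cpp
#include <cassert>
#include <cstddef>
#include <functional>

// (c) C-style API in the spirit of pthreadpool (hypothetical signature).
using task_1d_fn = void (*)(void* context, std::size_t index);

#if defined(SKETCH_USE_C2_POOL)  // hypothetical build flag
// (c-2) placeholder for the Caffe2-backed implementation.
inline const char* sketch_pool_backend() { return "caffe2"; }
#else
// (c-1) placeholder for the third-party implementation.
inline const char* sketch_pool_backend() { return "third_party"; }
#endif

// Serial placeholder; a real pool would distribute indices across threads.
inline void sketch_pool_parallelize_1d(task_1d_fn task, void* context,
                                       std::size_t range) {
  for (std::size_t i = 0; i < range; ++i) task(context, i);
}

using IndexFn = std::function<void(std::size_t)>;

// (b) C++ wrapper over the C API; callers never see which backend is linked.
class SketchThreadPool {
 public:
  void run(const IndexFn& fn, std::size_t range) {
    sketch_pool_parallelize_1d(
        [](void* ctx, std::size_t i) { (*static_cast<const IndexFn*>(ctx))(i); },
        const_cast<IndexFn*>(&fn), range);
  }
};

// (a) parallel_for-style entry point built on the wrapper.
inline void sketch_parallel_for(std::size_t begin, std::size_t end,
                                const IndexFn& fn) {
  SketchThreadPool pool;
  pool.run([&](std::size_t i) { fn(begin + i); }, end - begin);
}
```

The point of the shape is that the choice between (c-1) and (c-2) is made once, at build time, below the C API, so everything above it stays unchanged.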

Differential Revision: D21232894

Test Plan: Imported from OSS

Reviewed By: dreiss

Pulled By: AshkanAliabadi

fbshipit-source-id: 8b3de86247fbc3a327e811983e082f9d40081354

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39587

Example of using direct linking to pytorch_jni library from aar and updating android/README.md with the tutorial how to do it.

Adding `nativeBuild` dimension to `test_app`, using direct aar dependencies, as headers packaging is not landed yet, excluding `nativeBuild` from building by default for CI.

Additional change to `scripts/build_pytorch_android.sh`:

Skipping clean task here as android gradle plugin 3.3.2 externalNativeBuild has problems with it when abiFilters are specified.

It will be restored in following diffs that upgrade the gradle and android gradle plugin versions.

Test Plan: Imported from OSS

Differential Revision: D22118945

Pulled By: IvanKobzarev

fbshipit-source-id: 31c54b49b1f262cbe5f540461d3406f74851db6c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39588

Before this diff we used c++_static linking.

Users will dynamically link to libpytorch_jni.so and will have at least one more shared library of their own that probably uses the STL.

We must have no more than one STL per app. ( https://developer.android.com/ndk/guides/cpp-support#one_stl_per_app )

To have only one STL per app, changing ANDROID_STL to c++_shared, which adds libc++_shared.so to the packaging.

Test Plan: Imported from OSS

Differential Revision: D22118031

Pulled By: IvanKobzarev

fbshipit-source-id: ea1e5085ae207a2f42d1fa9f6ab8ed0a21768e96

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39507

Adding gradle task that will be run after `assemble` to add `headers` folder to the aar.

Headers are chosen for the first specified abi; they should be the same for all abis.

Adding headers works through temporary unpacking into gradle `$buildDir`, copying headers to it, zipping aar with headers.

Test Plan: Imported from OSS

Differential Revision: D22118009

Pulled By: IvanKobzarev

fbshipit-source-id: 52e5b1e779eb42d977c67dba79e278f1922b8483

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39999

Cleaned up the android build scripts. Consolidated common functions into common.sh. Also made a few minor fixes:

- We should trust build_android.sh doing right about reusing existing `build_android_$abi` directory;

- We should clean up `pytorch_android/src/main/jniLibs/` to remove broken symbolic links in case custom abi list changes since last build;

Test Plan: Imported from OSS

Differential Revision: D22036926

Pulled By: ljk53

fbshipit-source-id: e93915ee4f195111b6171cdabc667fa0135d5195

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39691

After switching to the fbjni-java-only dependency, we do not need the gradle subproject fbjni.

Test Plan: Imported from OSS

Differential Revision: D22054575

Pulled By: IvanKobzarev

fbshipit-source-id: 331478a57dd0d0aa06a5ce96278b6c897cb0ac78

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39188

Extracting Vulkan_LIBS and Vulkan_INCLUDES setup from `cmake/Dependencies.cmake` to `cmake/VulkanDependencies.cmake` and reuse it in android/pytorch_android/CMakeLists.txt

Adding control to build with Vulkan by setting the env variable `USE_VULKAN` for `scripts/build_android.sh` and `scripts/build_pytorch_android.sh`.

We do not use the Vulkan backend in pytorch_android, but with this build option we can track the android aar size change with `USE_VULKAN` added.

Currently it is 88Kb.

Test Plan: Imported from OSS

Differential Revision: D21770892

Pulled By: IvanKobzarev

fbshipit-source-id: a39433505fdcf43d3b524e0fe08062d5ebe0d872

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/37548

Moving RecordFunction from torch::autograd::profiler into at namespace

Test Plan:

CI

Imported from OSS

Differential Revision: D21315852

fbshipit-source-id: 4a4dbabf116c162f9aef0da8606590ec3f3847aa

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34710

Extending RecordFunction API to support new recording scopes (such as TorchScript functions), as well as giving more flexibility to set sampling rate.

Test Plan: unit test (test_misc.cpp/testRecordFunction)

Reviewed By: gdankel, dzhulgakov

Differential Revision: D20158523

fbshipit-source-id: a9e0819d21cc06f4952d92d43246587c36137582

Summary:

Ignore mixed upper-case/lower-case style for now

Fix space between function and its arguments violation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/35574

Test Plan: CI

Differential Revision: D20712969

Pulled By: malfet

fbshipit-source-id: 0012d430aed916b4518599a0b535e82d15721f78

Summary:

Since we've done the branch cut for 1.5.0 we should bump nightlies to 1.6.0

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/35495

Differential Revision: D20697043

Pulled By: seemethere

fbshipit-source-id: 3646187a5e729994138bf2c68625f25f11430b3a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32313

`torch::autograd::profiler::pushCallback()`, `torch::jit::setPrintHandler` should be called only once, not before every loading

`JITCallGuard guard;` not needed before loading module and has no effect
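One way to get "only once" behaviour is a function-local static initializer. This is a sketch under assumptions: `install_global_hooks()` is a hypothetical stand-in for registering the profiler callback and print handler, and `load_module()` is a hypothetical loader, not the JNI code in this change:

```cpp
#include <cassert>
#include <string>

// Counts how many times the global hooks were installed (illustration only).
inline int& hook_install_count() {
  static int count = 0;
  return count;
}

// Hypothetical stand-in for installing the profiler callback and the
// print handler.
inline void install_global_hooks() { ++hook_install_count(); }

// Hypothetical loader: the hooks are installed on the first call only.
inline std::string load_module(const std::string& path) {
  // Function-local static: the initializer runs once, and since C++11 its
  // initialization is thread-safe.
  static const bool hooks_installed = [] {
    install_global_hooks();
    return true;
  }();
  (void)hooks_installed;
  return "module:" + path;
}
```

Loading several modules through `load_module()` leaves `hook_install_count()` at 1.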

Test Plan: Imported from OSS

Differential Revision: D20559676

Pulled By: IvanKobzarev

fbshipit-source-id: 70cce5d2dda20a00b378639725294cb3c440bad2

Summary:

There are three guards related to mobile build:

* AutoGradMode

* AutoNonVariableTypeMode

* GraphOptimizerEnabledGuard

Today we need to set some of these guards before calling libtorch APIs because we customized mobile build to only support inference (for both OSS and most FB use cases) to optimize binary size.

Several changes were made since 1.3 release so there are already inconsistent uses of these guards in the codebase. I did a sweep of all mobile related model loading & forward() call sites, trying to unify the use of these guards:

Full JIT: still set all three guards. More specifically:

* OSS: Fixed a bug of not setting the guard at model load time correctly in Android JNI.

* FB: Not covered by this diff (as we are using mobile interpreter for most internal builds).

Lite JIT (mobile interpreter): only needs AutoNonVariableTypeMode guard. AutoGradMode doesn't seem to be relevant (so removed from a few places) and GraphOptimizerEnabledGuard definitely not relevant (only full JIT has graph optimizer). More specifically:

* OSS: At this point we are not committed to support Lite-JIT. For Android it shares the same code with FB JNI callsites.

* FB:

** JNI callsites: Use the unified LiteJITCallGuard.

** For iOS/C++: manually set AutoNonVariableTypeMode for _load_for_mobile() & forward() callsites.

Ideally we should avoid having to set AutoNonVariableTypeMode for mobile interpreter. It's currently needed for dynamic dispatch + inference-only mobile build (where variable kernels are not registered) - without the guard it will try to run `variable_fallback_kernel` and crash (PR #34038). The proper fix will take some time so using this workaround to unblock selective BUCK build which depends on dynamic dispatch.

PS. The current status (of having to set AutoNonVariableTypeMode) should not block running FL model + mobile interpreter - if all necessary variable kernels are registered then it can call _load_for_mobile()/forward() against the FL model without setting the AutoNonVariableTypeMode guard. It's still inconvenient for JAVA callsites as it's set unconditionally inside JNI methods.
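For readers unfamiliar with the guard pattern, a minimal RAII sketch. The flag and class below are hypothetical stand-ins; they only illustrate how a guard sets thread-local state for the duration of a load or forward() call and restores it afterwards:

```cpp
#include <cassert>

// Hypothetical thread-local flag, standing in for the state a guard toggles.
inline bool& non_variable_mode() {
  thread_local bool enabled = false;
  return enabled;
}

// RAII guard: sets the flag on construction, restores the previous value on
// destruction, so the mode cannot leak past the enclosing scope.
class NonVariableModeGuard {
 public:
  explicit NonVariableModeGuard(bool enabled = true)
      : previous_(non_variable_mode()) {
    non_variable_mode() = enabled;
  }
  ~NonVariableModeGuard() { non_variable_mode() = previous_; }
  NonVariableModeGuard(const NonVariableModeGuard&) = delete;
  NonVariableModeGuard& operator=(const NonVariableModeGuard&) = delete;

 private:
  bool previous_;
};

// Hypothetical forward() call site: the guard is active only inside it.
inline bool forward_sees_guard() {
  NonVariableModeGuard guard;
  return non_variable_mode();
}
```

Because the guard is set unconditionally inside the call site, a caller cannot opt out of it, which is the inconvenience noted above for Java callers.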

Test Plan: - CI

Reviewed By: xta0

Differential Revision: D20498017

fbshipit-source-id: ba6740f66839a61790873df46e8e66e4e141c728

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34515

Once upon a time we thought this was necessary. In reality it is not, so removing it.

For backcompat, our public interface (defined in `api/`) still has typedefs to the old `script::` names.

There was only one collision: `Pass` as a `Stmt` and `Pass` as a graph transform. I renamed one of them.

Test Plan: Imported from OSS

Differential Revision: D20353503

Pulled By: suo

fbshipit-source-id: 48bb911ce75120a8c9e0c6fb65262ef775dfba93

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34556

According to https://github.com/pytorch/pytorch/pull/34012#discussion_r388581548, this `at::globalContext().setQEngine(at::QEngine::QNNPACK);` call isn't really necessary for mobile.

In Context.cpp it selects the last available QEngine if the engine isn't set explicitly. For OSS mobile prebuild it should only include QNNPACK engine so the default behavior should already be the desired behavior.

It makes a difference only when USE_FBGEMM is set - but it should be off for both OSS mobile build and internal mobile build.
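The default-selection rule can be sketched like this. `SketchContext` and the enum are simplified, hypothetical stand-ins for the real Context; only the "last available engine unless set explicitly" rule is taken from the description above:

```cpp
#include <cassert>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical, simplified engine list.
enum class QEngine { NoQEngine, FBGEMM, QNNPACK };

// Hypothetical stand-in for the global context.
class SketchContext {
 public:
  explicit SketchContext(std::vector<QEngine> supported)
      : supported_(std::move(supported)) {}

  void setQEngine(QEngine e) { explicit_ = e; }

  // An explicitly set engine wins; otherwise pick the last available one.
  QEngine qEngine() const {
    if (explicit_) return *explicit_;
    return supported_.empty() ? QEngine::NoQEngine : supported_.back();
  }

 private:
  std::vector<QEngine> supported_;
  std::optional<QEngine> explicit_;
};
```

With only QNNPACK available the default is already QNNPACK, so the explicit call changes nothing; it matters only when another engine is listed after it.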

Test Plan: Imported from OSS

Differential Revision: D20374522

Pulled By: ljk53

fbshipit-source-id: d4e437a03c6d4f939edccb5c84f02609633a0698

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34203

Currently cmake and mobile build scripts still build libcaffe2 by default. To build pytorch mobile users have to set environment variable BUILD_PYTORCH_MOBILE=1 or set cmake option BUILD_CAFFE2_MOBILE=OFF.

PyTorch mobile has been released for a while. It's about time to change CMake and build scripts to build libtorch by default.

Changed caffe2 CI job to build libcaffe2 by setting BUILD_CAFFE2_MOBILE=1 environment variable. Only found android CI for libcaffe2 - do we ever have iOS CI for libcaffe2?

Test Plan: Imported from OSS

Differential Revision: D20267274

Pulled By: ljk53

fbshipit-source-id: 9d997032a599c874d62fbcfc4f5d4fbf8323a12e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33722

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding native implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor out one-time operations out of the innermost forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Test Plan:

Build: CI

Functionality: Not exposed

Reviewed By: dreiss

Differential Revision: D20069796

Pulled By: AshkanAliabadi

fbshipit-source-id: d46c1c91d4bea91979ea5bd46971ced5417d309c

Summary:

In order to improve CPU performance on floating-point models on mobile, this PR introduces a new CPU backend for mobile that implements the most common mobile operators with NHWC memory layout support through integration with XNNPACK.

XNNPACK itself, and this codepath, are currently only included in the build, but the actual integration is gated with USE_XNNPACK preprocessor guards. This preprocessor symbol is intentionally not passed on to the compiler, so as to enable this rollout in multiple stages in follow up PRs. This changeset will build XNNPACK as part of the build if the identically named USE_XNNPACK CMAKE variable, defaulted to ON, is enabled, but will not actually expose or enable this code path in any other way.

Furthermore, it is worth pointing out that in order to efficiently map models to these operators, some front-end method of exposing this backend to the user is needed. The less efficient implementation would be to hook these operators into their corresponding **native** implementations, granted that a series of XNNPACK-specific conditions are met, much like how NNPACK is integrated with PyTorch today for instance.

Having said that, while the above implementation is still expected to outperform NNPACK based on the benchmarks I ran, the above integration would leave a considerable gap between the performance achieved and the maximum performance potential XNNPACK enables, as it does not provide a way to compute and factor out one-time operations out of the innermost forward() loop.

The more optimal solution, and one we will decide on soon, would involve either providing a JIT pass that maps nn operators onto these newly introduced operators, while allowing one-time calculations to be factored out, much like quantized mobile models. Alternatively, new eager-mode modules can also be introduced that would directly call into these implementations either through c10 or some other mechanism, also allowing for decoupling of op creation from op execution.

This PR does not include any of the front end changes mentioned above. Neither does it include the mobile threadpool unification present in the original https://github.com/pytorch/pytorch/issues/30644. Furthermore, this codepath seems to be faster than NNPACK in a good number of use cases, which can potentially allow us to remove NNPACK from aten to make the codebase a little simpler, granted that there is widespread support for such a move.

Regardless, these changes will be introduced gradually and in a more controlled way in subsequent PRs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32509

Reviewed By: dreiss

Differential Revision: D19521853

Pulled By: AshkanAliabadi

fbshipit-source-id: 99a1fab31d0ece64961df074003bb852c36acaaa

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32567

As a first change to support proguard.

Even if these methods are never called from Java, we register them at the JNI level, and this registration will fail if the methods are stripped.

Adding DoNotStrip to all native methods that are registered in OSS.

Once consumerProguardFiles that prevent proguard from stripping DoNotStrip-annotated members are integrated in fbjni, this will fix the errors seen with proguard on.

Test Plan: Imported from OSS

Differential Revision: D19624684

Pulled By: IvanKobzarev

fbshipit-source-id: cd7d9153e9f8faf31c99583cede4adbf06bab507

Summary:

Without this, dlopen won't look in the proper directory for dependencies (like libtorch and fbjni).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/32247

Test Plan:

Build libpytorch_jni.dylib on Mac, replaced the one from the libtorch nightly, and was able to run the Java demo.

Differential Revision: D19501498

Pulled By: dreiss

fbshipit-source-id: 13ffdff9622aa610f905d039f951ee9a3fdc6b23

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/31456

External request https://discuss.pytorch.org/t/jit-android-debugging-the-model/63950

By default torchscript print function goes to stdout. For android it is not seen in logcat by default.

This change propagates it to logcat.
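The mechanism can be sketched as a replaceable print handler. The names below are hypothetical stand-ins; on Android the installed handler would forward the string to the NDK logging call `__android_log_print` instead of capturing it:

```cpp
#include <cassert>
#include <functional>
#include <iostream>
#include <string>
#include <utility>

using PrintHandler = std::function<void(const std::string&)>;

// Global handler, defaulting to stdout (hypothetical stand-in).
inline PrintHandler& print_handler() {
  static PrintHandler handler = [](const std::string& s) { std::cout << s; };
  return handler;
}

// Replaces the handler, e.g. with one that forwards to the platform log.
inline void set_print_handler(PrintHandler h) { print_handler() = std::move(h); }

// What a script-level print would call.
inline void script_print(const std::string& s) { print_handler()(s); }
```

Installing the handler once at library load time is enough; every later print goes through it.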

Test Plan: Imported from OSS

Differential Revision: D19171405

Pulled By: IvanKobzarev

fbshipit-source-id: f9c88fa11d90bb386df9ed722ec9345fc6b25a34

Summary: I think this was wrong before?

Test Plan: Not sure.

Reviewed By: IvanKobzarev

Differential Revision: D19221358

fbshipit-source-id: 27e675cac15dde29e026305f4b4e6cc774e15767

Summary:

These were returning incorrect data before. Now we make a contiguous copy before converting to Java. Exposing raw data to the user might be faster in some cases, but it's not clear that it's worth the complexity and code size.
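To illustrate why a contiguous copy is needed, here is a small sketch with a hypothetical 2-D strided view. Reading the underlying storage linearly would return the elements in storage order, not in the view's logical order:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 2-D strided view over a float buffer (strides in elements).
struct StridedView2D {
  const float* data;
  std::size_t rows;
  std::size_t cols;
  std::size_t row_stride;
  std::size_t col_stride;
};

// Copies the view into a dense row-major buffer in logical order.
inline std::vector<float> contiguous_copy(const StridedView2D& v) {
  std::vector<float> out;
  out.reserve(v.rows * v.cols);
  for (std::size_t r = 0; r < v.rows; ++r) {
    for (std::size_t c = 0; c < v.cols; ++c) {
      out.push_back(v.data[r * v.row_stride + c * v.col_stride]);
    }
  }
  return out;
}
```

For a 2x3 row-major buffer `{1, 2, 3, 4, 5, 6}` viewed as its 3x2 transpose (row stride 1, column stride 3), the copy yields `{1, 4, 2, 5, 3, 6}`, whereas the raw storage would still read `{1, 2, 3, 4, 5, 6}`.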

Test Plan: New unit test.

Reviewed By: IvanKobzarev

Differential Revision: D19221361

fbshipit-source-id: 22ecdad252c8fd968f833a2be5897c5ae483700c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/31584

These were returning incorrect data before.

Test Plan: New unit test.

Reviewed By: IvanKobzarev

Differential Revision: D19221360

fbshipit-source-id: b3f01de086857027f8e952a1c739f60814a57acd

Summary: These are valid tensors.

Test Plan: New unit test.

Reviewed By: IvanKobzarev

Differential Revision: D19221362

fbshipit-source-id: fa9af2fc539eb7381627b3d473241a89859ef2ba

Summary:

Done with:

```
❯ sed -i 's/1\.4\.0/1.5.0/g' $(find -type f -not -path "./third_party/*")
```

This was previously done in separate commits, but it would be beneficial to bump all included projects within this repository at the same time.

Old bumps for reference:

* [iOS]Update Cocoapods to 1.4.0: https://github.com/pytorch/pytorch/pull/30326

* [android] Change nightly builds version to 1.4.0-SNAPSHOT: https://github.com/pytorch/pytorch/pull/27381

* Roll master to 1.4.0: https://github.com/pytorch/pytorch/pull/27374

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/31785

Differential Revision: D19277925

Pulled By: seemethere

fbshipit-source-id: f72ad082f0566004858c9374879f4b1bee169f9c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30195

1. Added flavorDimensions 'build' local/nightly to be able to test the latest nightlies

```
cls && gradle clean test_app:installMobNet2QuantNightlyDebug -PABI_FILTERS=x86 --refresh-dependencies && adb shell am start -n org.pytorch.testapp.mobNet2Quant/org.pytorch.testapp.MainActivity
```

2. To be able to change all new model setup editing only `test_app/build.gradle`

Inlined model asset file names to `build.gradle`

Extracted input tensor shape to `build.gradle` (BuildConfig)

Test Plan: Imported from OSS

Differential Revision: D18893394

Pulled By: IvanKobzarev

fbshipit-source-id: 1fae9989d6f4b02afb42f8e26d0f3261d7ca929b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30501

**Motivation**:

In the current state, the output of libtorch Module forward/runMethod is memory-copied to a java ByteBuffer, which is allocated, at least in some versions of android, on the java heap. That could lead to intensive garbage collection.

**Change**:

The output java tensor becomes the owner of the output at::Tensor and keeps it (as the `pytorch_jni::TensorHybrid::tensor_` field) alive until the java part is destroyed by GC. For that, org.pytorch.Tensor becomes a 'Hybrid' class in fbjni naming and starts holding the member field `HybridData mHybridData;`

If construction of it starts from java side - java constructors of subclasses (we need all the fields initialized, due to this `mHybridData` is not declared final, but works as final) call `this.mHybridData = super.initHybrid();` to initialize cpp part (`at::Tensor tensor_`).

If construction starts from cpp side - cpp side is initialized using provided at::Tensor with `makeCxxInstance(std::move(tensor))` and is passed to java method `org.pytorch.Tensor#nativeNewTensor` as parameter `HybridData hybridData`, which holds native pointer to cpp side.

In that case the `initHybrid()` method is not called; instead a parallel set of subclass ctors is used, which store `hybridData` in `mHybridData`.

Renaming:

`JTensor` -> `TensorHybrid`

Removed method:

`JTensor::newAtTensorFromJTensor(JTensor)` becomes trivial `TensorHybrid->cthis()->tensor()`

Test Plan: Imported from OSS

Differential Revision: D18893320

Pulled By: IvanKobzarev

fbshipit-source-id: df94775d2a010a1ad945b339101c89e2b79e0f83

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30175

fbjni was open-sourced and its Java part is published as 'com.facebook.fbjni:fbjni-java-only:0.0.3';

switching to it.

We still need the fbjni submodule inside the repo (which already points to https://github.com/facebookincubator/fbjni) to link the shared library (.so).

**Packaging changes**:

Before this change `libfbjni.so` came from the pytorch_android_fbjni dependency. Because we also linked fbjni in `pytorch_android/CMakeLists.txt`, it was built in pytorch_android as well, but excluded for publishing. As we had 2 copies of libfbjni.so, there was a hack to exclude it for publishing and resolve the duplication locally:

```

if (rootProject.isPublishing()) {

exclude '**/libfbjni.so'

} else {

pickFirst '**/libfbjni.so'

}

```

After this change fbjni.so will be packaged inside the pytorch_android.aar artefact and we do not need this gradle logic.

I will update the README in a separate PR after the previous README PR (https://github.com/pytorch/pytorch/pull/30128) lands, to avoid conflicts.

Test Plan: Imported from OSS

Differential Revision: D18982235

Pulled By: IvanKobzarev

fbshipit-source-id: 5097df2557858e623fa480625819a24a7e8ad840

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30315

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

This is a reland of https://github.com/pytorch/pytorch/pull/29731 but

I've extracted all of the prep work into separate PRs which can be

landed before this one.

Some things of note:

* torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

* The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

* In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This led to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/libprotobuf.a(arena.cc.o) is referenced by DSO"

* A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly

* I had to torch_cpu/torch_cuda caffe2_interface_library so that they get whole-archived linked into torch when you statically link. And I had to do this in an *exported* fashion because torch needs to depend on torch_cpu_library. In the end I exported everything and removed the redefinition in the Caffe2Config.cmake. However, I am not too sure why the old code did it in this way in the first place; however, it doesn't seem to have broken anything to switch it this way.

* There's some uses of `__HIP_PLATFORM_HCC__` still in `torch_cpu` code, so I had to apply it to that library too (UGH). This manifests as a failure when trying to run the CUDA fuser. This doesn't really matter substantively right now because we still in-place HIPify, but it would be good to fix eventually. This was a bit difficult to debug because of an unrelated HIP bug, see https://github.com/ROCm-Developer-Tools/HIP/issues/1706

Fixes #27215 (as our libraries are smaller), and executes on

part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18790941

Pulled By: ezyang

fbshipit-source-id: 01296f6089d3de5e8365251b490c51e694f2d6c7

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30428

Reported issue https://discuss.pytorch.org/t/incomprehensible-behaviour/61710

Steps to reproduce:

```

class WrapRPN(nn.Module):

def __init__(self):

super().__init__()

def forward(self, features):

# type: (Dict[str, Tensor]) -> int

return 0

```

```

#include <torch/script.h>

int main() {

torch::jit::script::Module module = torch::jit::load("dict_str_tensor.pt");

torch::Tensor tensor = torch::rand({2, 3});

at::IValue ivalue{tensor};

c10::impl::GenericDict dict{c10::StringType::get(),ivalue.type()};

dict.insert("key", ivalue);

module.forward({dict});

}

```

The ValueType of `c10::impl::GenericDict` is taken from the first specified element, as `ivalue.type()`.

It fails the type check `!value.type()->isSubtypeOf(argument.type())` in `function_schema_inl.h`,

as `DictType::isSubtypeOf` requires equal KeyType and ValueType, while the `TensorType`s are different.

Fix:

Use c10::unshapedType for creating Generic List/Dict
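The failure and the fix can be illustrated with a toy model of the type check. This is only a sketch: `TensorType`, `DictType`, `unshaped` and `is_subtype_of` below are hypothetical Python stand-ins, not the c10 classes, and the assumption is that the type recovered from a concrete tensor carries shape information while the schema argument expects the generic tensor type.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class TensorType:
    # shape=None models the generic (unshaped) tensor type from the schema.
    shape: Optional[Tuple[int, ...]] = None

    def unshaped(self) -> "TensorType":
        # Toy analogue of dropping shape information from a tensor type.
        return TensorType(None)

    def is_subtype_of(self, other: "TensorType") -> bool:
        # A shaped tensor type is accepted where the generic one is expected.
        return other.shape is None or other.shape == self.shape


@dataclass(frozen=True)
class DictType:
    key: str
    value: TensorType

    def is_subtype_of(self, other: "DictType") -> bool:
        # Dict types are compared by equality of key and value types,
        # not by subtyping of the value type.
        return self.key == other.key and self.value == other.value


schema_arg = DictType("str", TensorType())            # Dict[str, Tensor]
specialized = TensorType((2, 3))                      # type taken from a 2x3 tensor
before_fix = DictType("str", specialized)             # value type keeps the shape
after_fix = DictType("str", specialized.unshaped())   # value type is generic
```

In this model `before_fix.is_subtype_of(schema_arg)` is false even though the tensor type alone would be accepted, while `after_fix` passes the check.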

Test Plan: Imported from OSS

Differential Revision: D18717189

Pulled By: IvanKobzarev

fbshipit-source-id: 1e352a9c776a7f7e69fd5b9ece558f1d1849ea57

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30472

Add DoNotStrip to nativeNewTensor method.

ghstack-source-id: 94596624

Test Plan:

Triggered build on diff for automation_fbandroid_fallback_release.

buck install -r fb4a

Tested BI cloaking using pytext lite interpreter.

Observe that logs are sent to scuba table:

{F223408345}

Reviewed By: linbinyu

Differential Revision: D18709087

fbshipit-source-id: 74fa7a0665640c294811a50913a60ef8d6b9b672

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30390

Fix crashes caused by C++ not being able to find Java classes through JNI

ghstack-source-id: 94499644

Test Plan: buck install -r fb4a

Reviewed By: ljk53

Differential Revision: D18667992

fbshipit-source-id: aa1b19c6dae39d46440f4a3e691054f7f8b1d42e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30285

PR #30144 introduced custom build script to tailor build to specific

models. It requires a list of all potentially used ops at build time.

Some JIT optimization passes can transform the IR by replacing

operators, e.g. decompose pass can replace aten::addmm with aten::mm if

coefficients are 1s.

Disabling optimization pass can ensure that the list of ops we dump from

the model is the list of ops that are needed.

Test Plan: - rerun the test on PR #30144 to verify the raw list without aten::mm works.

Differential Revision: D18652777

Pulled By: ljk53

fbshipit-source-id: 084751cb9a9ee16d8df7e743e9e5782ffd8bc4e3

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30206

- --whole-archive isn't needed because we link libtorch as a dynamic

dependency, rather than static.

- --gc-sections isn't necessary because most (all?) of the code in our

JNI library is used (and we're not statically linking libtorch).

Removing this one is useful because it's not supported by lld.

Test Plan:

Built on Linux. Library size was unchanged.

Upcoming diff enables Mac JNI build.

Differential Revision: D18653500

Pulled By: dreiss

fbshipit-source-id: 49ce46fb86a775186f803ada50445b4b2acb54a8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29731

The new structure is that libtorch_cpu contains the bulk of our

code, and libtorch depends on libtorch_cpu and libtorch_cuda.

Some subtleties about the patch:

- There were a few functions that crossed CPU-CUDA boundary without API macros. I just added them, easy enough. An inverse situation was aten/src/THC/THCTensorRandom.cu where we weren't supposed to put API macros directly in a cpp file.

- DispatchStub wasn't getting all of its symbols related to static members on DispatchStub exported properly. I tried a few fixes but in the end I just moved everyone off using DispatchStub to dispatch CUDA/HIP (so they just use normal dispatch for those cases.) Additionally, there were some mistakes where people incorrectly were failing to actually import the declaration of the dispatch stub, so added includes for those cases.

- torch/csrc/cuda/nccl.cpp was added to the wrong list of SRCS, now fixed (this didn't matter before because previously they were all in the same library)

- The dummy file for libtorch was brought back from the dead; it was previously deleted in #20774

- In an initial version of the patch, I forgot to make torch_cuda explicitly depend on torch_cpu. This led to some very odd errors, most notably "bin/blob_test: hidden symbol `_ZNK6google8protobuf5Arena17OnArenaAllocationEPKSt9type_infom' in lib/libprotobuf.a(arena.cc.o) is referenced by DSO"

- A number of places in Android/iOS builds have to add torch_cuda explicitly as a library, as they do not have transitive dependency calculation working correctly. This situation also happens with custom C++ extensions.

- There's a ROCm compiler bug where extern "C" on functions is not respected. There's a little workaround to handle this.

- Because I was too lazy to check if HIPify was converting TORCH_CUDA_API into TORCH_HIP_API, I just made it so HIP build also triggers the TORCH_CUDA_API macro. Eventually, we should translate and keep the nature of TORCH_CUDA_API constant in all cases.

Fixes #27215 (as our libraries are smaller), and executes on

part of the plan in #29235.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Differential Revision: D18632773

Pulled By: ezyang

fbshipit-source-id: ea717c81e0d7554ede1dc404108603455a81da82

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/30180

Just applying `clang-format -i` to not mix it with other changes

Test Plan: Imported from OSS

Differential Revision: D18627473

Pulled By: IvanKobzarev

fbshipit-source-id: ed341e356fea31b8515de29d5ea2ede07e8b66a2

Summary:

- Add a "BUILD_JNI" option that enables building PyTorch JNI bindings and

fbjni. This is off by default because it adds a dependency on jni.h.

- Update to the latest fbjni so we can inhibit building its tests,

because they depend on gtest.

- Set JAVA_HOME and BUILD_JNI in Linux binary build configurations if we

can find jni.h in Docker.

Test Plan:

- Built on dev server.

- Verified that libpytorch_jni links after libtorch when both are built

in a parallel build.

Differential Revision: D18536828

fbshipit-source-id: 19cb3be8298d3619352d02bb9446ab802c27ec66

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29861

Following https://github.com/pytorch/pytorch/issues/6570 to run ./run_host_tests.sh for the Mac build, we saw the error below:

```

error: cannot initialize a parameter of type 'const facebook::jni::JPrimitiveArray<_jlongArray *>::T *' (aka 'const long *') with an rvalue of type

'std::__1::vector<long long, std::__1::allocator<long long> >::value_type *' (aka 'long long *')

jTensorShape->setRegion(0, tensorShapeVec.size(), tensorShapeVec.data());

```

ghstack-source-id: 93961091

Test Plan: Run ./run_host_tests.sh and verify the build succeeds.

Reviewed By: dreiss

Differential Revision: D18519087

fbshipit-source-id: 869be12c82e6e0f64c878911dc12459defebf40b

Summary:

The issue with the previous build was that, after Phabricator's lint error about double quotes, I changed:

`$GRADLE_PATH $GRADLE_PARAMS` -> `"$GRADLE_PATH" "$GRADLE_PARAMS"`

which ended in error:

```

Nov 13 17:16:38 + /opt/gradle/gradle-4.10.3/bin/gradle '-p android assembleRelease --debug --stacktrace --offline'

Nov 13 17:16:40 Starting a Gradle Daemon (subsequent builds will be faster)

Nov 13 17:16:41

Nov 13 17:16:41 FAILURE: Build failed with an exception.

Nov 13 17:16:41

Nov 13 17:16:41 * What went wrong:

Nov 13 17:16:41 The specified project directory '/var/lib/jenkins/workspace/ android assembleRelease --debug --stacktrace --offline' does not exist.

```
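The log above shows gradle receiving the whole parameter string as one argument. A minimal sketch of why, modelling how the shell builds the argument list in the two cases (`build_argv` is a hypothetical helper written for illustration, not part of the CI scripts):

```python
def build_argv(gradle_path, gradle_params, quoted):
    """Model the argv the shell produces for `$GRADLE_PATH $GRADLE_PARAMS`.

    quoted=True  models "$GRADLE_PARAMS": the value stays one single argument.
    quoted=False models $GRADLE_PARAMS: the value is split on whitespace.
    """
    if quoted:
        return [gradle_path, gradle_params]
    return [gradle_path, *gradle_params.split()]


gradle = "/opt/gradle/gradle-4.10.3/bin/gradle"
params = "-p android assembleRelease --debug --stacktrace --offline"

quoted_argv = build_argv(gradle, params, quoted=True)
unquoted_argv = build_argv(gradle, params, quoted=False)
```

With quoting, gradle sees a single argument that starts with `-p`, so the rest of the string is taken as the project directory. In bash, keeping the parameters in an array and expanding it as `"${GRADLE_PARAMS[@]}"` is a common way to satisfy the quoting lint while still passing separate arguments.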

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29738

Differential Revision: D18486605

Pulled By: IvanKobzarev

fbshipit-source-id: 2b06600feb9db35b49e097a6d44422f50e46bb20

Summary:

https://github.com/pytorch/pytorch/issues/29159

Introducing the GRADLE_OFFLINE environment variable to pass the '--offline' argument to gradle, which makes it use only the local gradle cache without network access.

As it is a cache and has some expiration logic, before every start of gradle we 'touch' the files to update their last access time.
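A rough sketch of the 'touch' step, assuming the cache is an ordinary directory tree. The helper name `touch_tree` and the choice to walk every file are assumptions for illustration; the CI scripts may do this differently.

```python
import os
import time
from pathlib import Path


def touch_tree(cache_dir):
    """Set access and modification time of every file under cache_dir to now.

    Returns the number of files touched.
    """
    now = time.time()
    touched = 0
    for path in Path(cache_dir).rglob("*"):
        if path.is_file():
            os.utime(path, (now, now))  # (atime, mtime)
            touched += 1
    return touched
```

Calling `touch_tree` on the gradle cache directory right before starting gradle makes every cached file look recently used, so time-based expiration does not evict it.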

Deploying new docker images that prefetch all android dependencies into the gradle cache; commit with the update of docker images: df07dd5681

Re-enable android gradle jobs on CI (revert of 54e6a7eede)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29262

Differential Revision: D18455666

Pulled By: IvanKobzarev

fbshipit-source-id: 8fb0b54fd94e13b3144af2e345c6b00b258dcc0f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29617

For the internal build we will use the mobile interpreter instead of full JIT, so we need to split the existing pytorch_jni.cpp into pytorch_jni_jit.cpp and pytorch_jni_common.cpp. pytorch_jni_common.cpp will be used both by pytorch_jni_jit.cpp (open source) and by the future pytorch_jni_lite.cpp (internal).

ghstack-source-id: 93691214

Test Plan: buck build xplat/caffe2/android:pytorch

Reviewed By: dreiss

Differential Revision: D18387579

fbshipit-source-id: 26ab845c58a0959bc0fdf1a2b9a99f6ad6f2fc9c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29412

Originally, this was going to be Android-only, so the name wasn't too

important. But now that we're planning to distribute it with libtorch,

we should give it a more distinctive name.

Test Plan:

Ran tests according to

https://github.com/pytorch/pytorch/issues/6570#issuecomment-548537834

Reviewed By: IvanKobzarev

Differential Revision: D18405207

fbshipit-source-id: 0e6651cb34fb576438f24b8a9369e10adf9fecf9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/29455

- Don't need to load native library.

- Shape is now private.

Test Plan: Ran test.

Reviewed By: IvanKobzarev

Differential Revision: D18405213

fbshipit-source-id: e1d1abcf2122332317693ce391e840904b69e135

Summary:

Reason:

To have one-step build for test android application based on the current code state that is ready for profiling with simpleperf, systrace etc. to profile performance inside the application.

## Parameters to control debug symbols stripping

Introducing /CMakeLists parameter `ANDROID_DEBUG_SYMBOLS` to be able not to strip symbols for pytorch (not add linker flag `-s`)

which is checked in `scripts/build_android.sh`

On gradle side stripping happens by default, and to prevent it we have to specify

```

android {

packagingOptions {

doNotStrip "**/*.so"

}

}

```

which is now controlled by the new gradle property `nativeLibsDoNotStrip`

## Test_App

`android/test_app` - android app with one MainActivity that does inference in a loop

`android/build_test_app.sh` - script that builds libtorch with debug symbols for the specified android abis and adds `NDK_DEBUG=1` and `-PnativeLibsDoNotStrip=true` to keep all debug symbols for profiling.

Script assembles all debug flavors:

```

└─ $ find . -type f -name *apk

./test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

./test_app/app/build/outputs/apk/resnet/debug/test_app-resnet-debug.apk

```

## Different build configurations

Module for inference can be set in `android/test_app/app/build.gradle` as a BuildConfig parameters:

```

productFlavors {

mobilenetQuant {

dimension "model"

applicationIdSuffix ".mobilenetQuant"

buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_MOBILENET_QUANT'))

addManifestPlaceholders([APP_NAME: "PyMobileNetQuant"])

buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-mobilenet\"")

}

resnet {

dimension "model"

applicationIdSuffix ".resnet"

buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_RESNET18'))

addManifestPlaceholders([APP_NAME: "PyResnet"])

buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-resnet\"")

}

```

This way we can set up several apps on the same device for comparison. Packages are separated by `applicationIdSuffix` (e.g. 'org.pytorch.testapp.mobilenetQuant'), and each flavor gets a different application name via `manifestPlaceholder` and a different logcat tag via another BuildConfig parameter:

```

─ $ adb shell pm list packages | grep pytorch

package:org.pytorch.testapp.mobilenetQuant

package:org.pytorch.testapp.resnet

```

In the future we can add other BuildConfig params, e.g. single/multi threads and other configuration for profiling.

At the moment there are 2 flavors, for resnet18 and for mobilenetQuantized,

which can be installed on a connected device:

```

cd android

```

```

gradle test_app:installMobilenetQuantDebug

```

```

gradle test_app:installResnetDebug

```

## Testing:

```

cd android

sh build_test_app.sh

adb install -r test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk

```

```

cd $ANDROID_NDK

python simpleperf/run_simpleperf_on_device.py record --app org.pytorch.testapp.mobilenetQuant -g --duration 10 -o /data/local/tmp/perf.data

adb pull /data/local/tmp/perf.data

python simpleperf/report_html.py

```

Simpleperf report has all symbols:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28406

Differential Revision: D18386622

Pulled By: IvanKobzarev

fbshipit-source-id: 3a751192bbc4bc3c6d7f126b0b55086b4d586e7a

Summary:

Copy of android.md from the site + information about Nightly builds

It's a bit of duplication with the separate repo pytorch.github.io, but I think more people will find it, and we can iterate on it faster and keep it in sync with the code.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28533

Reviewed By: dreiss

Differential Revision: D18153638

Pulled By: IvanKobzarev

fbshipit-source-id: 288ef3f153d8e239795a85e3b8992e99f072f3b7

Summary:

The central fbjni repository is now public, so point to it and

take the latest version, which includes support for host builds

and some condensed syntax.

Test Plan: CI

Differential Revision: D18217840

fbshipit-source-id: 454e3e081f7e3155704fed692506251c4018b2a1

Summary:

The Java and Python code were updated, but the test currently fails

because the model was not regenerated.

Test Plan: Ran test.

Reviewed By: xcheng16

Differential Revision: D18217841

fbshipit-source-id: 002eb2d3ed0eaa14b3d7b087b621a6970acf1378

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27664

When ANDROID_ABI is not set, find libtorch headers and libraries from

the LIBTORCH_HOME build variable (which must be set by hand), place

output under a "host" directory, and use dynamic linking instead of

static.

This doesn't actually work without some local changes to fbjni, but I

want to get the changes landed to avoid unnecessary merge conflicts.

Test Plan: Imported from OSS

Differential Revision: D18210315

Pulled By: dreiss

fbshipit-source-id: 685a62de3c2a0a52bec7fd6fb95113058456bac8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27663

CMake sets CMAKE_BINARY_DIR and creates it automatically. Using this

allows us to use the -B command-line flag to CMake to specify an

alternate output directory.

Test Plan: Imported from OSS

Differential Revision: D18210316

Pulled By: dreiss

fbshipit-source-id: ba2f6bd4b881ddd00de73fe9c33d82645ad5495d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27662

This adds a new gradle subproject at pytorch_android/host and tweaks

the top-level build.gradle to only run some Android bits on the other

projects.

Referencing Java sources from inside the host directory feels a bit

hacky, but getting host and Android Gradle builds to coexist in the same

directory hit several roadblocks. We can try a bigger refactor to

separate the Android-specific and non-Android-specific parts of the

code, but that seems overkill at this point for 4 Java files.

This doesn't actually run without some local changes to fbjni, but I

want to get the files landed to avoid unnecessary merge conflicts.

Test Plan: Imported from OSS

Differential Revision: D18210317

Pulled By: dreiss

fbshipit-source-id: dafb54dde06a5a9a48fc7b7065d9359c5c480795

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28399

This is also to address issue #26764

Turns out it's incorrect to wrap the entire forward() call with

NonVariableTypeMode guard as some JIT passes have an is_variable() check and

can be triggered within forward() call, e.g.:

jit/passes/constant_propagation.cpp

Since now we are toggling NonVariableTypeMode per method/op call, we can

remove the guard around forward() now.

Test Plan: - With stacked PRs, verified it can load and run previously failed models.

Differential Revision: D18055850

Pulled By: ljk53

fbshipit-source-id: 3074d0ed3c6e05dbfceef6959874e5916aea316c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26767

Now that we have tagged ivalues, we can accurately recover the type with

`ivalue.type()`. This removes the other half-implemented pathways that

were created because we didn't have tags.

Test Plan: Imported from OSS

Differential Revision: D17561191

Pulled By: zdevito

fbshipit-source-id: 26aaa134099e75659a230d8a5a34a86dc39a3c5c

Summary:

All of the test cases move into a base class that is extended by the

instrumentation test and a new "HostTests" class that can be run in

normal Java. (Some changes to the build script and dependencies are

required before the host test can actually run.)

ghstack-source-id: fe1165b513241b92c5f4a81447f5e184b3bfc75e

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27453

Test Plan: Imported from OSS

Reviewed By: IvanKobzarev

Differential Revision: D17800410

fbshipit-source-id: 1184f0caebdfa219f4ccd1464c67826ac0220181

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27359

Adding methods to TensorImageUtils:

```

bitmapToFloatBuffer(..., FloatBuffer outBuffer, int outBufferOffset)

imageYUV420CenterCropToFloat32Tensor(..., FloatBuffer outBuffer, int outBufferOffset)

```

To be able to

- reuse FloatBuffer for inference

- to create batch-Tensor (contains several images/bitmaps)

As we reuse the FloatBuffer in the example demo app (image classification),

the profiler shows fewer memory allocations (before that, for every run we created a new input tensor with a newly allocated FloatBuffer) and about 20 ms less on my Pixel XL
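The idea of writing into a caller-provided buffer at an offset can be sketched as follows. This is a language-neutral illustration in Python, not the TensorImageUtils code: `write_image` is a hypothetical helper, and a preallocated `array('f')` stands in for the reused FloatBuffer.

```python
from array import array


def write_image(pixels, out_buffer, out_offset):
    """Write one image's float values into out_buffer starting at out_offset.

    Returns the offset just past the written data, which is where the next
    image of a batch would start.
    """
    end = out_offset + len(pixels)
    if end > len(out_buffer):
        raise ValueError("output buffer too small")
    for i, value in enumerate(pixels):
        # Element-by-element write, no intermediate buffer is allocated.
        out_buffer[out_offset + i] = value
    return end


# One buffer allocated up front and reused; here it holds a batch of 2 images
# with 3 values each.
batch = array("f", [0.0] * 6)
next_offset = write_image([1.0, 2.0, 3.0], batch, 0)
next_offset = write_image([4.0, 5.0, 6.0], batch, next_offset)
```

The same buffer can be passed again on the next inference run, and the returned offset is what allows several images to be packed into one batch tensor.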

Known open question:

At the moment every tensor element is written separately by calling `outBuffer.put()`, which is a native call crossing language boundaries.

An alternative is to allocate a `float[]` on the Java side, fill it, and put it into `outBuffer` with one call, reducing native calls but increasing memory allocation on the Java side.

Tested locally by just eyeballing durations and did not notice a big difference, so decided to go with fewer memory allocations.

It would be good to merge this into 1.3.0; if not, the demo app can use snapshot dependencies with this change.

PR with integration to demo app:

https://github.com/pytorch/android-demo-app/pull/6

Test Plan: Imported from OSS

Differential Revision: D17758621

Pulled By: IvanKobzarev

fbshipit-source-id: b4f1a068789279002d7ecc0bc680111f781bf980

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27381

Changing android nightly builds from master to version 1.4.0-SNAPSHOT, as we also have 1.3.0-SNAPSHOT from the branch v1.3.0

Test Plan: Imported from OSS

Differential Revision: D17773620

Pulled By: IvanKobzarev

fbshipit-source-id: c39a1dbf5e06f79c25367c3bc602cc8ce42cd939

Summary:

1. scripts/build_android_libtorch_and_pytorch_android.sh

- Builds libtorch for android_abis (by default for all 4: x86, x86_64, armeabi-v7a, arm64-v8a); a custom list can be specified as the first parameter, e.g. "x86"

- Creates symbolic links inside android/pytorch_android to results of the previous builds:

`pytorch_android/src/main/jniLibs/${abi}` -> `build_android/install/lib`

`pytorch_android/src/main/cpp/libtorch_include/${abi}` -> `build_android/install/include`

- Runs gradle assembleRelease to build aar files

proxy can be specified inside (for devservers)

2. android/run_tests.sh

Runs pytorch_android tests; contains instructions on how to set up and run the android emulator in headless and noaudio mode to run it on a devserver

proxy can be specified inside (for devservers)

# Test plan

Scenario to build x86 libtorch and android aars with it and run tests:

```

cd pytorch

sh scripts/build_android_libtorch_and_pytorch_android.sh x86

sh android/run_tests.sh

```

Tested on my devserver - build works, tests passed

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26833

Differential Revision: D17673972

Pulled By: IvanKobzarev

fbshipit-source-id: 8cb7c3d131781854589de6428a7557c1ba7471e9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26995

Fix the current setup: with fbjni excluded we cannot use pytorch_android:package independently, for example for testing with `gradle pytorch_android:cAT`

For publishing it works, as pytorch_android has a dependency on fbjni that will also be published.

In other cases we have 2 fbjni.so: one from the native build (CMakeLists.txt does add_subdirectory(fbjni_dir)) and one from the dependency ':fbjni'.

We need both of them, as ':fbjni' also contains java classes.

As a fix: keep excluding it for publishing tasks (bintrayUpload, uploadArchives), and otherwise use pickFirst (as we have 2 sources of fbjni.so).

# Testing

gradle cAT works, fbjni.so included

gradle bintrayUpload (dryRun==true) - no fbjni.so

Test Plan: Imported from OSS

Differential Revision: D17637775

Pulled By: IvanKobzarev

fbshipit-source-id: edda56ba555678272249fe7018c1f3a8e179947c

Summary:

- Normalization mean and std are specified as parameters instead of being hardcoded

- imageYUV420CenterCropToFloat32Tensor before this change worked only with square tensors (width==height) - added generalization to support width != height with all rotations and scalings

- javadocs
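The first two items can be illustrated with a small sketch. It is not the TensorImageUtils implementation: the function names are hypothetical, the 8-bit values are assumed to be scaled to [0, 1] before normalization, and rotation is left out.

```python
def normalize_pixel(rgb, mean, std):
    """Scale 8-bit RGB to [0, 1], then apply per-channel (x - mean) / std."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, mean, std))


def center_crop_rect(src_w, src_h, out_w, out_h):
    """Largest centered rectangle in the source with the out_w:out_h aspect ratio.

    Returns (left, top, width, height) in source pixels. Works for
    non-square sources and non-square outputs.
    """
    if src_w * out_h > src_h * out_w:
        # Source is relatively wider than the output: keep full height.
        crop_h = src_h
        crop_w = src_h * out_w // out_h
    else:
        # Source is relatively taller (or same ratio): keep full width.
        crop_w = src_w
        crop_h = src_w * out_h // out_w
    return ((src_w - crop_w) // 2, (src_h - crop_h) // 2, crop_w, crop_h)
```

For a 640x480 frame and a square 224x224 tensor this selects the centered 480x480 region, which would then be scaled down to the output size; mean and std are passed in by the caller rather than fixed in the code.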

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26690

Differential Revision: D17556006

Pulled By: IvanKobzarev

fbshipit-source-id: 63f3321ea2e6b46ba5c34f9e92c48d116f7dc5ce

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26565

For OSS mobile build we should keep QNNPACK off and PYTORCH_QNNPACK on

as we don't include caffe2 ops that use third_party/QNNPACK.

Update android/iOS build script to include new libraries accordingly.

Test Plan: - CI build

Differential Revision: D17508918

Pulled By: ljk53

fbshipit-source-id: 0483d45646d4d503b4e5c1d483e4df72cffc6c68

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26525

Create a util function to avoid boilerplate code as we are adding more

libraries.

Test Plan: - build CI;

Differential Revision: D17495394

Pulled By: ljk53

fbshipit-source-id: 9e19f96ede4867bdff5157424fa68b71e6cff8bf

Summary:

USE_STATIC_DISPATCH needs to be exposed as we don't hide header files

containing it for iOS (yet). Otherwise it's error-prone to request all

external projects to set the macro correctly on their own.

Also remove redundant USE_STATIC_DISPATCH definition from other places.

Test Plan:

- build android gradle to confirm linker can still strip out dead code;

- integrate with demo app to confirm inference can run without problem;

Differential Revision: D17484260

Pulled By: ljk53

fbshipit-source-id: 653f597acb2583761b723eff8026d77518007533

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26477

- At inference time we need to turn off autograd mode and turn on no-variable

mode since we strip out these modules for inference-only mobile build.

- Both flags are stored in thread-local variables so we cannot simply

set them to false globally.

- Add "autograd/grad_mode.h" header to all-in-one header 'torch/script.h'

to reduce friction for iOS engs who might need to do this manually in their

project.

P.S. I tried to hide AutoNonVariableTypeMode in codegen but figured it's not

very trivial (e.g. there are manually written part not covered by codegen).

Might try it again later.

Test Plan: - Integrate with Android demo app to confirm inference runs correctly.

Differential Revision: D17484259

Pulled By: ljk53

fbshipit-source-id: 06887c8b527124aa0cc1530e8e14bb2361acef31

Summary:

At the moment it includes https://github.com/pytorch/pytorch/pull/26219 changes. That PR is landing at the moment, afterwards this PR will contain only javadocs.

Applied all dreiss comments from previous version.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26149

Differential Revision: D17490720

Pulled By: IvanKobzarev

fbshipit-source-id: f340dee660d5ffe40c96b43af9312c09f85a000b

Summary:

fbjni is used when linking `libpytorch.so` and is specified in `pytorch_android/CMakeLists.txt`; as a result it is built as a separate `libfbjni.so` and is included in `pytorch_android.aar`.

We also have the java part of fbjni, which is connected to pytorch_android as a gradle dependency that also contains `libfbjni.so`.

As a result, when we specify the gradle dep `'org.pytorch:pytorch_android'` (it has libfbjni.so), which has the transitive dep `'org.pytorch:pytorch_android_fbjni'` that also has `libfbjni.so`, we get a gradle ambiguity error.

Fix: exclude libfbjni.so from pytorch_android.aar packaging and use the `libfbjni.so` from the gradle dep `'org.pytorch:pytorch_android_fbjni'`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26382

Differential Revision: D17468723

Pulled By: IvanKobzarev

fbshipit-source-id: fcad648cce283b0ee7e8b2bab0041a2e079002c6

Summary:

After offline discussion with dzhulgakov :

- In the future we will introduce creation of signed-byte and unsigned-byte dtype tensors, but Java has only a signed byte, so we have to add some separation for it in method names (Java types and tensor types cannot be clearly mapped) => returning the type in method names

- fixes in error messages

- non-static method Tensor.numel()

- Change Tensor toString() to be more consistent with python

Update on Sep 16:

Type renaming on java side to uint8, int8, int32, float32, int64, float64

```

public abstract class Tensor {

public static final int DTYPE_UINT8 = 1;

public static final int DTYPE_INT8 = 2;

public static final int DTYPE_INT32 = 3;

public static final int DTYPE_FLOAT32 = 4;

public static final int DTYPE_INT64 = 5;

public static final int DTYPE_FLOAT64 = 6;

```

```

public static Tensor newUInt8Tensor(long[] shape, byte[] data)

public static Tensor newInt8Tensor(long[] shape, byte[] data)

public static Tensor newInt32Tensor(long[] shape, int[] data)

public static Tensor newFloat32Tensor(long[] shape, float[] data)

public static Tensor newInt64Tensor(long[] shape, long[] data)

public static Tensor newFloat64Tensor(long[] shape, double[] data)

```
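Both `newUInt8Tensor` and `newInt8Tensor` take a `byte[]`, so the same stored bits are read as different numbers depending on the dtype. A small sketch of that mapping, modelled in Python (the helper names are hypothetical; in Java the unsigned value is usually obtained with `b & 0xFF`):

```python
def java_byte_as_uint8(b):
    """Read a Java signed byte (-128..127) as an unsigned value (0..255)."""
    if not -128 <= b <= 127:
        raise ValueError("not a valid signed byte")
    return b & 0xFF


def uint8_as_java_byte(u):
    """Store an unsigned value (0..255) in a Java signed byte (-128..127)."""
    if not 0 <= u <= 255:
        raise ValueError("not a valid unsigned byte")
    return u - 256 if u > 127 else u
```

For example, the unsigned pixel value 200 has to be stored as the Java byte -56, which is why the dtype has to be visible in the method name rather than inferred from the Java array type.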

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26219

Differential Revision: D17406467

Pulled By: IvanKobzarev

fbshipit-source-id: a0d7d44dc8ce8a562da1a18bd873db762975b184

Summary:

Applying dzhulgakov review comments

org.pytorch.Tensor:

- dims renamed to shape

- typeCode to dtype

- numElements to numel

newFloatTensor, newIntTensor... to newTensor(...)

Add support of dtype=long, double

Resorted in code byte,int,float,long,double

For if conditions order float,int,byte,long,double as I expect that float and int branches will be used more often

Tensor.toString() does not have data, only numel (data buffer capacity)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26183

Differential Revision: D17374332

Pulled By: IvanKobzarev

fbshipit-source-id: ee93977d9c43c400b6c054b6286080321ccb81bc

Summary:

The main part is to switch at::Tensor creation from `torch::empty(torch::IntArrayRef(...))->ShareExternalPointer(...)` to `torch::from_blob(...)`

Removed the explicit setting of `device CPU`, as `at::TensorOptions` defaults to `device CPU`

Also renamed local variables, removing the `input` prefix to make them shorter

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25973

Differential Revision: D17356837

Pulled By: IvanKobzarev

fbshipit-source-id: 679e099b8aebd787dbf8ed422dae07a81243e18f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25984

Link static libtorch libraries into pytorch.so (API library for android)

with "-Wl,--gc-sections" flag to remove unused symbols in libtorch.

Test Plan:

- full gradle CI with stacked PR;

- will check final artifacts.tgz size change;

Differential Revision: D17312859

Pulled By: ljk53

fbshipit-source-id: 99584d15922867a7b3c3d661ba238a6f99f43db5

Summary:

Gradle tasks for publishing to bintray, jcenter and mavencentral; snapshot builds go to oss.sonatype.org

These gradle changes add the tasks:

bintrayUpload - publishing on bintray, in 'facebook' org

uploadArchives - uploading to maven repos

Gradle tasks are copied from facebook open sourced libraries like https://github.com/facebook/litho, https://github.com/facebookincubator/spectrum

To do the publishing we need to provide the following properties (e.g. in ~/.gradle/gradle.properties):

```

signing.keyId=

signing.password=

signing.secretKeyRingFile=

bintrayUsername=

bintrayApiKey=

bintrayGpgPassword=

SONATYPE_NEXUS_USERNAME=

SONATYPE_NEXUS_PASSWORD=

```

android/libs/fbjni is a submodule. To be able to add publishing tasks for it (it needs to be published as a separate maven dependency), I created `android/libs/fbjni_local`, which has only a `build.gradle` with release tasks.

The pytorch_android dependency on ':fbjni' changed from implementation to api: implementation is treated as a 'private' dependency and translates to scope=runtime in the maven pom file, while api works as 'compile'.

Testing:

It is already published on bintray with version 0.0.4 and can be used in gradle files as:

```

repositories {

maven {

url "https://dl.bintray.com/facebook/maven"

}

}

dependencies {

implementation 'com.facebook:pytorch_android:0.0.4'

implementation 'com.facebook:pytorch_android_torchvision:0.0.4'

}

```

It was published in the com.facebook group.

I requested a sync from bintray to jcenter, which usually takes 2-3 days.

Versioning added version suffixes to the aar output files, and the circleCI jobs for android started failing because they expected just pytorch_android.aar and pytorch_android_torchvision.aar, without any version.

To avoid this, I changed the circleCI android jobs to zip the *.aar files and publish them as a single artifact named artifacts.zip. I will add kostmo to check this part; if the circleCI jobs finish ok, everything works :)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25351

Reviewed By: kostmo

Differential Revision: D17135886

Pulled By: IvanKobzarev

fbshipit-source-id: 64eebac670bbccaaafa1b04eeab15760dd5ecdf9

Summary:

Introducing circleCI jobs for pytorch_android gradle builds. The ultimate goal at the moment is to run:

```

gradle assembleRelease -p ~/workspace/android/pytorch_android assembleRelease

```

To assemble the android gradle build (aar) we need the libtorch-android shared library and headers for 4 android abis, so pytorch_android_gradle_build requires 4 jobs:

```

- pytorch_android_gradle_build:

    requires:

      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_32_build

      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_64_build

      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_arm_v7a_build

      - pytorch_linux_xenial_py3_clang5_android_ndk_r19c_arm_v8a_build

```

All jobs use the same base docker_image; they are differentiated by committing docker images with different android_abi suffixes (as is done now for xla and namedtensor). This is in `&pytorch_linux_build_defaults`:

```

if [[ ${BUILD_ENVIRONMENT} == *"namedtensor"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-namedtensor

elif [[ ${BUILD_ENVIRONMENT} == *"xla"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-xla

elif [[ ${BUILD_ENVIRONMENT} == *"-x86"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-android-x86

elif [[ ${BUILD_ENVIRONMENT} == *"-arm-v7a"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-android-arm-v7a

elif [[ ${BUILD_ENVIRONMENT} == *"-arm-v8a"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-android-arm-v8a

elif [[ ${BUILD_ENVIRONMENT} == *"-x86_64"* ]]; then

  export COMMIT_DOCKER_IMAGE=$output_image-android-x86_64

else

  export COMMIT_DOCKER_IMAGE=$output_image

fi

```
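
The chain above is first-match-wins, so the order of its branches matters. The sketch below mirrors it in Java with substring checks, which is what `== *"..."*` does inside `[[ ]]`. The class `ImageSuffixSketch` is hypothetical, and the `BUILD_ENVIRONMENT` values in the usage note are assumed examples that are not taken from the CI config.

```java
/** Hypothetical sketch mirroring the if/elif chain quoted above, in the same order. */
final class ImageSuffixSketch {
    private ImageSuffixSketch() {}

    /** Returns the docker image suffix the quoted chain would select. */
    static String suffixFor(String buildEnvironment) {
        if (buildEnvironment.contains("namedtensor")) {
            return "-namedtensor";
        } else if (buildEnvironment.contains("xla")) {
            return "-xla";
        } else if (buildEnvironment.contains("-x86")) {
            // Also matches names containing "-x86_64", because "-x86" is a prefix of it.
            return "-android-x86";
        } else if (buildEnvironment.contains("-arm-v7a")) {
            return "-android-arm-v7a";
        } else if (buildEnvironment.contains("-arm-v8a")) {
            return "-android-arm-v8a";
        } else if (buildEnvironment.contains("-x86_64")) {
            // Not reachable in this order: the "-x86" branch above wins first.
            return "-android-x86_64";
        }
        return "";
    }
}
```

With an assumed value such as `pytorch-linux-xenial-py3-clang5-android-ndk-r19c-x86_64-build`, this sketch returns `-android-x86`; checking the more specific `-x86_64` pattern before `-x86` would be one way to keep the two images distinct.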

The pytorch_android_gradle_build job copies the headers and the libtorch.so and libc10.so results from the libtorch android docker images, first to the workspace and then to the android_abi=x86 docker image, where it runs the final gradle build by calling `.circleci/scripts/build_android_gradle.sh`.

For PR jobs we have only the `pytorch_linux_xenial_py3_clang5_android_ndk_r19c_x86_32_build` libtorch android build, so it gets a separate gradle build, `pytorch_android_gradle_build-x86_32`, that does not do the docker copying.

It calls the same `.circleci/scripts/build_android_gradle.sh`, which has x86_32-only logic conditioned on BUILD_ENVIRONMENT:

`[[ "${BUILD_ENVIRONMENT}" == *-gradle-build-only-x86_32* ]]`

It is also filtered to run only for PRs, since other runs have the full build. The filter checks `-z "${CIRCLE_PULL_REQUEST:-}"`:

```

- run:

    name: filter_run_only_on_pr

    no_output_timeout: "5m"

    command: |

      echo "CIRCLE_PULL_REQUEST: ${CIRCLE_PULL_REQUEST:-}"

      if [ -z "${CIRCLE_PULL_REQUEST:-}" ]; then

        circleci step halt

      fi

```

Updated docker images to the version with gradle, android_sdk and openjdk; the jenkins job that built them: https://ci.pytorch.org/jenkins/job/pytorch-docker-master/339/

pytorch_android_gradle_build successful run: https://circleci.com/gh/pytorch/pytorch/2604797#artifacts/containers/0

pytorch_android_gradle_build-x86_32 successful run: https://circleci.com/gh/pytorch/pytorch/2608945#artifacts/containers/0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25286

Reviewed By: kostmo

Differential Revision: D17115861

Pulled By: IvanKobzarev

fbshipit-source-id: bc88fd38b38ed0d0170d719fffa375772bdea142

Summary:

Initial commit of pytorch_android_torchvision, which has utility methods for converting:

- android.media.Image in YUV_420_888 format (camera output) -> Tensor(Float) in torchvision format, normalized by ImageNet mean and std

- Bitmap -> Tensor(Float) in torchvision format
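
To make the "torchvision format" conversion concrete, here is a minimal sketch of the Bitmap-style path, assuming the input is an array of packed ARGB pixels in row-major order (the layout `android.graphics.Bitmap#getPixels` produces). The class `ImageToTensorSketch` and its method are hypothetical and are not the utility added in this commit; the mean and std values are the commonly used ImageNet constants.

```java
/** Hypothetical sketch; independent of the Android SDK and of pytorch_android_torchvision. */
final class ImageToTensorSketch {
    static final float[] IMAGENET_MEAN = {0.485f, 0.456f, 0.406f};
    static final float[] IMAGENET_STD = {0.229f, 0.224f, 0.225f};

    private ImageToTensorSketch() {}

    /**
     * Converts packed ARGB pixels to a float array in CHW order
     * (all R values, then all G, then all B), scaled to [0, 1]
     * and normalized per channel as (value - mean) / std.
     */
    static float[] argbToNormalizedChw(
            int[] argbPixels, int width, int height, float[] mean, float[] std) {
        int plane = width * height;
        if (argbPixels.length != plane) {
            throw new IllegalArgumentException("Expected " + plane + " pixels");
        }
        float[] out = new float[3 * plane];
        for (int i = 0; i < plane; i++) {
            int color = argbPixels[i];
            float r = ((color >> 16) & 0xff) / 255.0f;
            float g = ((color >> 8) & 0xff) / 255.0f;
            float b = (color & 0xff) / 255.0f;
            out[i] = (r - mean[0]) / std[0];
            out[plane + i] = (g - mean[1]) / std[1];
            out[2 * plane + i] = (b - mean[2]) / std[2];
        }
        return out;
    }
}
```

The resulting array could back a float tensor of shape {1, 3, height, width}.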

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25185

Reviewed By: dreiss

Differential Revision: D17053008

Pulled By: IvanKobzarev

fbshipit-source-id: 6bf7a39615bf876999982b06925e7444700e284b

Summary:

Tensor has getDataAsFloatArray(), and we also support Int and Byte Tensors.

This adds symmetric methods for Int and Byte, which throw

IllegalStateException if called on a tensor of an inappropriate type.
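
A minimal sketch of the symmetric accessor pattern described here, assuming a tensor that remembers its element kind and rejects mismatched reads. The class `TypedTensorSketch`, its `Kind` enum and its factory methods are hypothetical; only the `getDataAs...Array()` naming and the IllegalStateException behavior come from the text.

```java
/** Hypothetical sketch; not the actual org.pytorch.Tensor implementation. */
final class TypedTensorSketch {
    enum Kind { FLOAT, INT, BYTE }

    private final Kind kind;
    private final Object data;

    private TypedTensorSketch(Kind kind, Object data) {
        this.kind = kind;
        this.data = data;
    }

    static TypedTensorSketch ofFloats(float[] values) {
        return new TypedTensorSketch(Kind.FLOAT, values.clone());
    }

    static TypedTensorSketch ofInts(int[] values) {
        return new TypedTensorSketch(Kind.INT, values.clone());
    }

    static TypedTensorSketch ofBytes(byte[] values) {
        return new TypedTensorSketch(Kind.BYTE, values.clone());
    }

    float[] getDataAsFloatArray() {
        requireKind(Kind.FLOAT, "getDataAsFloatArray");
        return ((float[]) data).clone();
    }

    int[] getDataAsIntArray() {
        requireKind(Kind.INT, "getDataAsIntArray");
        return ((int[]) data).clone();
    }

    byte[] getDataAsByteArray() {
        requireKind(Kind.BYTE, "getDataAsByteArray");
        return ((byte[]) data).clone();
    }

    /** Throws if the accessor does not match the stored element kind. */
    private void requireKind(Kind expected, String method) {
        if (kind != expected) {
            throw new IllegalStateException(
                "Tensor of kind " + kind + " cannot return data via " + method + "()");
        }
    }
}
```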

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25183

Reviewed By: dreiss

Differential Revision: D17052674

Pulled By: IvanKobzarev

fbshipit-source-id: 1d44944461ad008e202e382152cd0690c61124f4

Summary:

TL;DR: initial commit of an android java-jni wrapper of the pytorchscript c++ api

The main idea is to provide a java interface for android developers to use pytorchscript modules.

The java API tries to mirror the semantics of the c++ and python pytorchscript APIs

org.pytorch.Module (wrapper of torch::jit::script::Module)

- static Module load(String path)

- IValue forward(IValue... inputs)

- IValue runMethod(String methodName, IValue... inputs)

org.pytorch.Tensor (semantic of at::Tensor)

- newFloatTensor(long[] dims, float[] data)

- newFloatTensor(long[] dims, FloatBuffer data)

- newIntTensor(long[] dims, int[] data)

- newIntTensor(long[] dims, IntBuffer data)

- newByteTensor(long[] dims, byte[] data)

- newByteTensor(long[] dims, ByteBuffer data)

org.pytorch.IValue (semantic of at::IValue)

- static factory methods to create pytorchscript supported types

Examples of API usage can be found in PytorchInstrumentedTests.java:

Module module = Module.load(path);

IValue input = IValue.tensor(Tensor.newByteTensor(new long[]{1}, Tensor.allocateByteBuffer(1)));

IValue output = module.forward(input);

Tensor outputTensor = output.getTensor();

ThreadSafety:

The API is not thread safe; all synchronization must be done on the caller side.
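
One way a caller might do that synchronization is to funnel every call through a single lock. The sketch below wraps an arbitrary function to stay self-contained; the class `SerializedCaller` is hypothetical, and in an app the delegate would be something like a call into a loaded module.

```java
import java.util.function.Function;

/** Hypothetical caller-side wrapper that serializes calls to a non-thread-safe delegate. */
final class SerializedCaller<I, O> {
    private final Object lock = new Object();
    private final Function<I, O> delegate;

    SerializedCaller(Function<I, O> delegate) {
        this.delegate = delegate;
    }

    /** Runs the delegate while holding the lock, so only one call is in flight at a time. */
    O call(I input) {
        synchronized (lock) {
            return delegate.apply(input);
        }
    }
}
```

Sharing one such wrapper between threads keeps the locking policy in one place rather than spreading `synchronized` blocks across call sites.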

Mutability:

The org.pytorch.Tensor buffer is a DirectBuffer with native byte order; it can be created with the static factory methods that take a DirectBuffer.

At the moment org.pytorch.Tensor does not hold an at::Tensor on the jni side; it has: long[] dimensions, type, DirectByteBuffer blobData

Input tensors are mutable (they can be modified and reused for the next inference);

the values in the buffer at the moment of the Module#forward or Module#runMethod call are used.

The buffers of input tensors are used directly by the input at::Tensor

Output is copied from the output at::Tensor and is immutable.
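
The buffer semantics above can be illustrated with plain java.nio, without the PyTorch library. This is a sketch under the assumption that the input buffer is a direct, native-order buffer that the caller refills between inferences, while an output is a snapshot copy; the class `DirectBufferSketch` and its methods are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/** Hypothetical sketch of direct-buffer handling; not part of org.pytorch. */
final class DirectBufferSketch {
    private DirectBufferSketch() {}

    /** Allocates a direct float buffer with native byte order. */
    static FloatBuffer allocateFloatBuffer(int numElements) {
        return ByteBuffer.allocateDirect(numElements * Float.BYTES)
            .order(ByteOrder.nativeOrder())
            .asFloatBuffer();
    }

    /** Copies the whole buffer into a new array, leaving the buffer's position untouched. */
    static float[] copyOut(FloatBuffer buffer) {
        float[] out = new float[buffer.capacity()];
        FloatBuffer view = buffer.duplicate();
        view.rewind();
        view.get(out);
        return out;
    }
}
```

A caller could write new values into the same input buffer before each inference; a copy taken with `copyOut` is unaffected by later writes to the buffer.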

Dependencies:

The jni level is implemented using the fbjni library, which was developed at Facebook

and is already used in several open-source projects.

It is added to the repo as a submodule from a personal account, so that the submodule can be switched

once fbjni is open-sourced separately.

ghstack-source-id: b39c848359a70d717f2830a15265e4aa122279c0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25084

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25105

Reviewed By: dreiss

Differential Revision: D16988107

Pulled By: IvanKobzarev

fbshipit-source-id: 41ca7c9869f8370b8504c2ef8a96047cc16516d4