Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55253

Previously, DDP communication hooks took a tensor list as input. Now they take only a single tensor, in preparation for retiring SPMD and providing only a single model replica to DDP communication hooks.

The next step is to limit Reducer to a single model replica.

ghstack-source-id: 125677637

Test Plan: waitforbuildbot

Reviewed By: zhaojuanmao

Differential Revision: D27533898

fbshipit-source-id: 5db92549c440f33662cf4edf8e0a0fd024101eae

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55031

It turns out that PowerSGD hooks work with the PyTorch native AMP package, but not with the Apex AMP package, which can somehow mutate gradients during the execution of communication hooks.

{F561544045}

ghstack-source-id: 125268206

Test Plan:

Used the native AMP backend for the same pytext model, and it worked:

f261564342

f261561664

Reviewed By: rohan-varma

Differential Revision: D27436484

fbshipit-source-id: 2b63eb683ce373f9da06d4d224ccc5f0a3016c88

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52859

This reverts commit 92a4ee1cf6.

Added support for bfloat16 on CUDA 11 and removed the fast path for empty input tensors, which was affecting the autograd graph.

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision: D27402390

Pulled By: heitorschueroff

fbshipit-source-id: 73c5ccf54f3da3d29eb63c9ed3601e2fe6951034

Summary:

*Context:* https://github.com/pytorch/pytorch/issues/53406 added a lint for trailing whitespace at the ends of lines. However, in order to pass FB-internal lints, that PR also had to normalize the trailing newlines in four of the files it touched. This PR adds an OSS lint to normalize trailing newlines.

The changes to the following files (made in 54847d0adb9be71be4979cead3d9d4c02160e4cd) are the only manually-written parts of this PR:

- `.github/workflows/lint.yml`

- `mypy-strict.ini`

- `tools/README.md`

- `tools/test/test_trailing_newlines.py`

- `tools/trailing_newlines.py`

I would have liked to make this just a shell one-liner like the other three similar lints, but nothing I could find quite fit the bill. Specifically, all the answers I tried from the following Stack Overflow questions were far too slow (at least a minute and a half to run on this entire repository):

- [How to detect file ends in newline?](https://stackoverflow.com/q/38746)

- [How do I find files that do not end with a newline/linefeed?](https://stackoverflow.com/q/4631068)

- [How to list all files in the Git index without newline at end of file](https://stackoverflow.com/q/27624800)

- [Linux - check if there is an empty line at the end of a file [duplicate]](https://stackoverflow.com/q/34943632)

- [git ensure newline at end of each file](https://stackoverflow.com/q/57770972)
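As a rough illustration of what such a lint has to decide for each file, here is a minimal Python sketch. It is not the code in `tools/trailing_newlines.py`; the function name `has_single_trailing_newline` and the choice to accept empty files are assumptions made for this example.

```python
def has_single_trailing_newline(data: bytes) -> bool:
    """Return True if `data` is empty or ends with exactly one newline.

    Hypothetical helper for illustration only. Treating an empty file as
    acceptable is an assumption, not something stated in this PR.
    """
    if not data:
        return True
    # Must end with a newline, but not with two or more.
    return data.endswith(b"\n") and not data.endswith(b"\n\n")


if __name__ == "__main__":
    for sample in (b"", b"x\n", b"x", b"x\n\n"):
        print(sample, has_single_trailing_newline(sample))
```

Reading only the last two bytes of each file would be enough for this check, which is one plausible reason a dedicated script can be much faster than piping every file through shell tools.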

To avoid giving false positives during the few days after this PR is merged, we should probably only merge it after https://github.com/pytorch/pytorch/issues/54967.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54737

Test Plan:

Running the shell script from the "Ensure correct trailing newlines" step in the `quick-checks` job of `.github/workflows/lint.yml` should print no output and exit in a fraction of a second with a status of 0. That was not the case prior to this PR, as shown by this failing GHA workflow run on an earlier draft of this PR:

- https://github.com/pytorch/pytorch/runs/2197446987?check_suite_focus=true

In contrast, this run (after correcting the trailing newlines in this PR) succeeded:

- https://github.com/pytorch/pytorch/pull/54737/checks?check_run_id=2197553241

To unit-test `tools/trailing_newlines.py` itself (this is run as part of our "Test tools" GitHub Actions workflow):

```
python tools/test/test_trailing_newlines.py
```

Reviewed By: malfet

Differential Revision: D27409736

Pulled By: samestep

fbshipit-source-id: 46f565227046b39f68349bbd5633105b2d2e9b19

Summary:

This prepares for the new language reference spec, which needs to describe `torch.jit.Attribute` and `torch.jit.annotate`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54485

Reviewed By: SplitInfinity, nikithamalgifb

Differential Revision: D27406843

Pulled By: gmagogsfm

fbshipit-source-id: 98983b9df0f974ed69965ba4fcc03c1a18d1f9f5

Summary:

Reference: https://github.com/pytorch/pytorch/issues/38349

Wrapper around the existing `torch.gather` with broadcasting logic.

TODO:

* [x] Add Doc entry (see if phrasing can be improved)

* [x] Add OpInfo

* [x] Add test against numpy

* [x] Handle broadcasting behaviour and when dim is not given.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52833

Reviewed By: malfet

Differential Revision: D27319038

Pulled By: mruberry

fbshipit-source-id: 00f307825f92c679d96e264997aa5509172f5ed1

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54645

Had to replace RRef[..] with just RRef in the return signature, since Sphinx seemed to completely mess up rendering RRef[..].

ghstack-source-id: 125024783

Test Plan: View locally.

Reviewed By: SciPioneer

Differential Revision: D27314609

fbshipit-source-id: 2dd9901e79f31578ac7733f79dbeb376f686ed75

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54277

alltoall is already supported in the NCCL backend, so update the doc to reflect that.

Test Plan: Imported from OSS

Reviewed By: divchenko

Differential Revision: D27172904

Pulled By: wanchaol

fbshipit-source-id: 9afa89583d56b247b2017ea2350936053eb30827

Summary:

This PR adds autograd support for `torch.orgqr`.

Since `torch.orgqr` is one of the few functions that expose LAPACK's naming, and all other linear algebra routines were renamed a long time ago, I also added a new function with a new name; `torch.orgqr` is now an alias for it.

The new proposed name is `householder_product`. For a matrix `input` and a vector `tau`, LAPACK's orgqr operation takes the columns of `input` (called Householder vectors or elementary reflectors) and the scalars of `tau`, which together represent Householder matrices, and computes the product of these matrices. See https://www.netlib.org/lapack/lug/node128.html.
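To make the operation concrete, here is a minimal pure-Python sketch of the product of Householder matrices for real inputs. It is not the PR's implementation: it uses nested lists instead of tensors, handles only real entries (the complex case would use the conjugate transpose), and assumes LAPACK's storage convention in which each reflector has an implicit 1 on the diagonal and zeros above it.

```python
def householder_product(a, tau):
    """Multiply the Householder matrices H_i = I - tau_i * v_i * v_i^T.

    `a` is an m x n matrix given as a list of rows (real entries only, an
    assumption of this sketch) and `tau` holds k <= n scalars. Following
    LAPACK's convention, v_i is zero above row i, one at row i, and equal
    to column i of `a` below row i.
    Returns the first n columns of H_0 @ H_1 @ ... @ H_{k-1}.
    """
    m, n = len(a), len(a[0])
    # Start from the m x m identity matrix.
    q = [[1.0 if r == c else 0.0 for c in range(m)] for r in range(m)]
    for i, t in enumerate(tau):
        v = [0.0] * i + [1.0] + [a[r][i] for r in range(i + 1, m)]
        # q <- q @ (I - t * v v^T) = q - t * (q v) v^T
        qv = [sum(q[r][j] * v[j] for j in range(m)) for r in range(m)]
        for r in range(m):
            for c in range(m):
                q[r][c] -= t * qv[r] * v[c]
    return [row[:n] for row in q]


if __name__ == "__main__":
    # One reflector v = [1, 1] with tau = 2 / (v . v) = 1.
    print(householder_product([[1.0], [1.0]], [1.0]))
```

With `tau` set to all zeros every Householder matrix is the identity, so the result is just the leading columns of the identity matrix.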

Other linear algebra libraries that I'm aware of do not expose this LAPACK function, so there is some freedom in naming it. It is usually used only internally for QR decomposition, but it can be useful for deep learning tasks now that it supports differentiation.

Resolves https://github.com/pytorch/pytorch/issues/50104

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52637

Reviewed By: agolynski

Differential Revision: D27114246

Pulled By: mruberry

fbshipit-source-id: 9ab51efe52aec7c137aa018c7bd486297e4111ce

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54052

Introduce `fp16_compress_wrapper`, which can give some speedup on top of some gradient compression algorithms like PowerSGD.

ghstack-source-id: 124001805

Test Plan: {F509205173}

Reviewed By: iseessel

Differential Revision: D27076064

fbshipit-source-id: 4845a14854cafe2112c0caefc1e2532efe9d3ed8

Summary:

brianjo

- Add a javascript snippet to close the expandable left navbar sections 'Notes', 'Language Bindings', 'Libraries', 'Community'

- Fix two latex bugs that were causing output in the log that might have been misleading when looking for true doc build problems

- Change the way release versions interact with sphinx. I tested these via building docs twice: once with `export RELEASE=1` and once without.

- Remove perl scripting to turn the static version text into a link to the versions.html document. Instead, put this where it belongs in the layout.html template. This is the way the domain libraries (text, vision, audio) do it.

- There were two separate templates for master and release, the only difference being that the master template has an admonition "You are viewing unstable developer preview docs....". Instead, toggle that with the value of `release`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53851

Reviewed By: mruberry

Differential Revision: D27085875

Pulled By: ngimel

fbshipit-source-id: c2d674deb924162f17131d895cb53cef08a1f1cb

Summary:

Close https://github.com/pytorch/pytorch/issues/51108

Related https://github.com/pytorch/pytorch/issues/38349

This PR implements the `cpu_kernel_multiple_outputs` to support returning multiple values in a CPU kernel.

```c++
// Build an iterator with two outputs and two inputs.
auto iter = at::TensorIteratorConfig()
  .add_output(out1)
  .add_output(out2)
  .add_input(in1)
  .add_input(in2)
  .build();

// The lambda returns one value per output, packed in a tuple.
at::native::cpu_kernel_multiple_outputs(iter,
  [=](float a, float b) -> std::tuple<float, float> {
    float add = a + b;
    float mul = a * b;
    return std::tuple<float, float>(add, mul);
  }
);
```

`out1` will equal `torch.add(in1, in2)`, and `out2` will equal `torch.mul(in1, in2)`.

It helps developers implement new torch functions that return two tensors more conveniently, such as NumPy-like functions [divmod](https://numpy.org/doc/1.18/reference/generated/numpy.divmod.html?highlight=divmod#numpy.divmod) and [frexp](https://numpy.org/doc/stable/reference/generated/numpy.frexp.html#numpy.frexp).

This PR adds `torch.frexp` function to exercise the new functionality provided by `cpu_kernel_multiple_outputs`.
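For readers unfamiliar with `frexp`, the sketch below shows the decomposition using Python's standard `math.frexp`, producing two output lists from one input list much as a kernel with multiple outputs would. It assumes `torch.frexp` follows the same mantissa/exponent convention as the NumPy function linked above; the helper name `frexp_all` is made up for this example.

```python
import math


def frexp_all(values):
    """Split each x into (mantissa, exponent) with x == mantissa * 2**exponent.

    Hypothetical helper: returns two parallel lists, one per "output".
    """
    mantissas, exponents = [], []
    for x in values:
        m, e = math.frexp(x)
        mantissas.append(m)
        exponents.append(e)
    return mantissas, exponents


if __name__ == "__main__":
    mantissas, exponents = frexp_all([8.0, 0.75, -3.0])
    print(mantissas)  # [0.5, 0.75, -0.75]
    print(exponents)  # [4, 0, 2]
```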

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51097

Reviewed By: albanD

Differential Revision: D26982619

Pulled By: heitorschueroff

fbshipit-source-id: cb61c7f2c79873ab72ab5a61cbdb9203531ad469

Summary:

Fixes https://github.com/pytorch/pytorch/issues/44378 by providing a wider range of drivers similar to what SciPy is doing.

The supported CPU drivers are `gels, gelsy, gelsd, gelss`.

The CUDA interface implements only `gels`, and only for overdetermined systems.

The current state of this PR:

- [x] CPU interface

- [x] CUDA interface

- [x] CPU tests

- [x] CUDA tests

- [x] Memory-efficient batch-wise iteration with broadcasting which fixes https://github.com/pytorch/pytorch/issues/49252

- [x] docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49093

Reviewed By: albanD

Differential Revision: D26991788

Pulled By: mruberry

fbshipit-source-id: 8af9ada979240b255402f55210c0af1cba6a0a3c

Summary:

This PR proposes to improve the distributed doc:

* [x] putting the init functions together

* [x] moving post-init functions into their own sub-section as they are only available after init and moving that group to after all init sub-sections

If this is too much, could we at least put these 2 functions together:

```

.. autofunction:: init_process_group

.. autofunction:: is_initialized

```

as they are interconnected, and the other functions are not alphabetically sorted in the first place.

Thank you.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52976

Reviewed By: albanD

Differential Revision: D26993933

Pulled By: mrshenli

fbshipit-source-id: 7cacbe28172ebb5849135567b1d734870b49de77

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53855

Remove "noindex" here:

{F492926346}

ghstack-source-id: 123724419

Test Plan:

waitforbuildbot

The failure on doctest does not seem to be relevant.

Reviewed By: rohan-varma

Differential Revision: D26967086

fbshipit-source-id: adf9db1144fa1475573f617402fdbca8177b7c08

Summary:

Fixes https://github.com/pytorch/pytorch/issues/50002

The last commit adds tests for 3d conv with the `SubModelFusion` and `SubModelWithoutFusion` classes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50003

Reviewed By: mrshenli

Differential Revision: D26325953

Pulled By: jerryzh168

fbshipit-source-id: 7406dd2721c0c4df477044d1b54a6c5e128a9034

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53253

Since the GradBucket class is now public, mention it in ddp_comm_hooks.rst.

Screenshot:

{F478201008}

ghstack-source-id: 123596842

Test Plan: viewed generated html file

Reviewed By: rohan-varma

Differential Revision: D26812210

fbshipit-source-id: 65b70a45096b39f7d41a195e65b365b722645000

Summary:

This is a more fundamental example, as we may support some amount of shape specialization in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53250

Reviewed By: navahgar

Differential Revision: D26841272

Pulled By: Chillee

fbshipit-source-id: 027c719afafc03828a657e40859cbfbf135e05c9

Summary:

Context: https://github.com/pytorch/pytorch/pull/53299#discussion_r587882857

These are the only hand-written parts of this diff:

- the addition to `.github/workflows/lint.yml`

- the file endings changed in these four files (to appease FB-internal land-blocking lints):

- `GLOSSARY.md`

- `aten/src/ATen/core/op_registration/README.md`

- `scripts/README.md`

- `torch/csrc/jit/codegen/fuser/README.md`

The rest was generated by running this command (on macOS):

```
git grep -I -l ' $' -- . ':(exclude)**/contrib/**' ':(exclude)third_party' | xargs gsed -i 's/ *$//'
```

I looked over the auto-generated changes and didn't see anything that looked problematic.
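For anyone without GNU sed at hand, the substitution itself can be sketched in Python. This is only an illustration of what `s/ *$//` does to file contents, not part of the PR; the function name is hypothetical.

```python
import re


def strip_trailing_spaces(text: str) -> str:
    """Remove runs of spaces at the end of every line.

    Mirrors sed's 's/ *$//': only space characters are removed, so
    trailing tabs are left untouched.
    """
    return re.sub(r" +$", "", text, flags=re.MULTILINE)


if __name__ == "__main__":
    print(repr(strip_trailing_spaces("a  \nb \nc\n")))
```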

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53406

Test Plan:

This run (after adding the lint but before removing existing trailing spaces) failed:

- https://github.com/pytorch/pytorch/runs/2043032377

This run (on the tip of this PR) succeeded:

- https://github.com/pytorch/pytorch/runs/2043296348

Reviewed By: walterddr, seemethere

Differential Revision: D26856620

Pulled By: samestep

fbshipit-source-id: 3f0de7f7c2e4b0f1c089eac9b5085a58dd7e0d97

Summary:

Provides the implementation for feature request issue https://github.com/pytorch/pytorch/issues/28937.

Adds the `Parametrization` functionality and implements `Pruning` on top of it.

It adds the `auto` mode, in which the parametrization is computed just once per forward pass. The previous implementation computed the pruning on every forward call, which is not optimal when pruning RNNs, for example.

It implements a caching mechanism for parameters. This is implemented through the mechanism proposed at the end of the discussion in https://github.com/pytorch/pytorch/issues/7313. In particular, it assumes that the user will not manually change the updated parameters between the call to `backward()` and the call to `optimizer.step()`. If they do so, they need to manually call the `.invalidate()` function provided in the implementation. This could be made into a function that takes a model and invalidates all the parameters in it. This function might also have to be called in `.cuda()`, `.to()` and related functions.
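The caching idea can be sketched independently of PyTorch. The class below is a hypothetical stand-in, not the PR's implementation: `CachedParametrization`, `value`, `update` and the `evaluations` counter are names invented for this example, and `update` merely plays the role of `optimizer.step()`.

```python
class CachedParametrization:
    """Hypothetical sketch: cache a parametrized value until invalidated."""

    def __init__(self, raw, fn):
        self.raw = raw          # unconstrained underlying value
        self.fn = fn            # maps the raw value to the value the model uses
        self._cache = None
        self._valid = False
        self.evaluations = 0    # counts how often fn actually runs

    def value(self):
        # Recompute only when the cache has been invalidated.
        if not self._valid:
            self._cache = self.fn(self.raw)
            self._valid = True
            self.evaluations += 1
        return self._cache

    def invalidate(self):
        self._valid = False

    def update(self, new_raw):
        # Stand-in for optimizer.step(): changing raw invalidates the cache.
        self.raw = new_raw
        self.invalidate()


if __name__ == "__main__":
    p = CachedParametrization(-2.0, abs)
    p.value(); p.value()        # second call hits the cache
    p.update(3.0)
    print(p.value(), p.evaluations)
```

Writing to `raw` directly, without calling `invalidate()`, would leave a stale cached value, which is the situation the paragraph above warns about.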

As described in https://github.com/pytorch/pytorch/issues/7313, this could be used to implement the `weight_norm` and `spectral_norm` functions in a cleaner way. It also allows, as described in https://github.com/pytorch/pytorch/issues/28937, for the implementation of constrained optimization on manifolds (e.g. orthogonal constraints, positive definite matrices, invertible matrices, weights on the sphere or the hyperbolic space...).

TODO (when implementation is validated):

- More thorough test

- Documentation

Resolves https://github.com/pytorch/pytorch/issues/28937

albanD

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33344

Reviewed By: zhangguanheng66

Differential Revision: D26816708

Pulled By: albanD

fbshipit-source-id: 07c8f0da661f74e919767eae31335a9c60d9e8fe

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53084

Adding RemoteModule to master RPC docs since it is a prototype feature.

ghstack-source-id: 122816689

Test Plan: waitforbuildbot

Reviewed By: rohan-varma

Differential Revision: D26743372

fbshipit-source-id: 00ce9526291dfb68494e07be3e67d7d9c2686f1b

Summary:

Fixes https://github.com/pytorch/pytorch/issues/44378 by providing a wider range of drivers similar to what SciPy is doing.

The supported CPU drivers are `gels, gelsy, gelsd, gelss`.

The CUDA interface implements only `gels`, and only for overdetermined systems.

The current state of this PR:

- [x] CPU interface

- [x] CUDA interface

- [x] CPU tests

- [x] CUDA tests

- [x] Memory-efficient batch-wise iteration with broadcasting which fixes https://github.com/pytorch/pytorch/issues/49252

- [x] docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49093

Reviewed By: H-Huang

Differential Revision: D26723384

Pulled By: mruberry

fbshipit-source-id: c9866a95f14091955cf42de22f4ac9e2da009713

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52141

Remove BufferShuffleDataSet, as it's not being used anywhere within PyTorch (no usage on GitHub based on a search) and it's not included in the release of PyTorch 1.7.1.

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision: D26710940

Pulled By: ejguan

fbshipit-source-id: 90023b4bfb105d6aa392753082100f9181ecebd0

Summary:

Fixes https://github.com/pytorch/pytorch/issues/52724.

This fixes the following for the LKJCholesky distribution in master:

- `log_prob` does sample validation when `validate_args=True`.

- exposes documentation for the LKJCholesky distribution.

cc. fehiepsi, fritzo

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52763

Reviewed By: anjali411

Differential Revision: D26657216

Pulled By: neerajprad

fbshipit-source-id: 12e8f8384cf0c3df8a29564c1e1718d2d6a5833f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51807

Implemented torch.linalg.multi_dot similar to [numpy.linalg.multi_dot](https://numpy.org/doc/stable/reference/generated/numpy.linalg.multi_dot.html).

This function does not support broadcasting or batched inputs at the moment.

**NOTE**

numpy.linalg.multi_dot allows the first and last tensors to have more than 2 dimensions despite their docs stating these must be either 1D or 2D. This PR diverges from NumPy in that it enforces this restriction.
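The benefit of a `multi_dot`-style function comes from choosing a good multiplication order. The sketch below computes the minimum number of scalar multiplications for a chain using the classic matrix-chain dynamic program. Whether this PR uses exactly this recurrence is an assumption; the function name `min_chain_cost` is made up for the example.

```python
from functools import lru_cache


def min_chain_cost(dims):
    """Minimum scalar multiplications needed to multiply a matrix chain.

    Matrix i has shape dims[i] x dims[i + 1], so a chain of k matrices is
    described by k + 1 numbers.
    """
    @lru_cache(maxsize=None)
    def cost(i, j):
        # Cheapest way to multiply matrices i..j (inclusive).
        if i == j:
            return 0
        return min(
            cost(i, k) + cost(k + 1, j) + dims[i] * dims[k + 1] * dims[j + 1]
            for k in range(i, j)
        )

    return cost(0, len(dims) - 2)


if __name__ == "__main__":
    # A: 10x100, B: 100x5, C: 5x50
    # (AB)C costs 5000 + 2500 = 7500, A(BC) costs 25000 + 50000 = 75000.
    print(min_chain_cost([10, 100, 5, 50]))  # 7500
```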

**TODO**

- [ ] Benchmark against NumPy

- [x] Add OpInfo testing

- [x] Remove unnecessary copy for out= argument

Test Plan: Imported from OSS

Reviewed By: nikithamalgifb

Differential Revision: D26375734

Pulled By: heitorschueroff

fbshipit-source-id: 839642692424c4b1783606c76dd5b34455368f0b

Summary:

Toward fixing https://github.com/pytorch/pytorch/issues/47624

~Step 1: add `TORCH_WARN_MAYBE` which can either warn once or every time in c++, and add a c++ function to toggle the value.

Step 2 will be to expose this to python for tests. Should I continue in this PR or should we take a different approach: add the python level exposure without changing any c++ code and then over a series of PRs change each call site to use the new macro and change the tests to make sure it is being checked?~

Step 1: add a python and c++ toggle to convert TORCH_WARN_ONCE into TORCH_WARN so the warnings can be caught in tests

Step 2: add a python-level decorator to use this toggle in tests

Step 3: (in future PRs): use the decorator to catch the warnings instead of `maybeWarnsRegex`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48560

Reviewed By: ngimel

Differential Revision: D26171175

Pulled By: mruberry

fbshipit-source-id: d83c18f131d282474a24c50f70a6eee82687158f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51748

Adding docs for `fake_quantize_per_tensor_affine` and `fake_quantize_per_channel_affine` functions.

Note: not documenting `fake_quantize_per_tensor_affine_cachemask` and `fake_quantize_per_channel_affine_cachemask` since they are implementation details of `fake_quantize_per_tensor_affine` and `fake_quantize_per_channel_affine`, and do not need to be exposed to the user at the moment.

Test Plan: Build the docs locally on Mac OS, it looks good

Reviewed By: supriyar

Differential Revision: D26270514

Pulled By: vkuzo

fbshipit-source-id: 8e3c9815a12a3427572cb4d34a779e9f5e4facdd

Summary:

Add some much needed documentation on the Timer callgrind output format, and expand what is shown on the website.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51664

Reviewed By: tugsbayasgalan

Differential Revision: D26246675

Pulled By: robieta

fbshipit-source-id: 7a07ff35cae07bd2da111029242a5dc8de21403c

Summary:

Notes the module is in beta and that the policy for returning optionally computed tensors may change in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51620

Reviewed By: heitorschueroff

Differential Revision: D26220254

Pulled By: mruberry

fbshipit-source-id: edf78fe448d948b43240e138d6d21b780324e41e

Summary:

Fixed a description error in quantization.rst.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50187

Reviewed By: mrshenli

Differential Revision: D25895294

Pulled By: soumith

fbshipit-source-id: c0b2e7ba3fadfc0977ab2d4d4e9ed4f93694cedd

Summary:

Implements `np.diff` for single order differences only:

- method and function variants for `diff` and function variant for `diff_out`

- supports out variant, but not in-place since shape changes

- adds OpInfo entry, and test in `test_torch`

- automatic autograd because we are using the `Math` dispatch

_Update: we only support Tensors for prepend and append in this PR. See discussion below and comments for more details._
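As a reference for the semantics, here is a pure-Python sketch of a first-order difference over a 1-D sequence. It is not the PR's implementation: it works on plain lists, and `prepend` and `append` are taken as sequences, loosely mirroring the Tensor-only restriction noted above.

```python
def diff(values, prepend=None, append=None):
    """First-order differences of a 1-D sequence (pure-Python sketch).

    `prepend` and `append` are optional sequences that are concatenated
    before and after `values` prior to differencing.
    """
    seq = list(prepend or []) + list(values) + list(append or [])
    # Each output element is the difference of two neighbours, so the
    # result is one element shorter than the concatenated input.
    return [b - a for a, b in zip(seq, seq[1:])]


if __name__ == "__main__":
    print(diff([1, 3, 6, 10]))               # [2, 3, 4]
    print(diff([1, 3, 6, 10], prepend=[0]))  # [1, 2, 3, 4]
```

The output being shorter than the input is the reason an in-place variant is not offered.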

Currently there is a quirk in the c++ API based on how this is implemented: it is not possible to specify scalar prepend and appends without also specifying all 4 arguments.

That is because the goal is to match NumPy's diff signature of `diff(int n=1, int dim=-1, Union[Scalar, Tensor] prepend=None, Union[Scalar, Tensor] append=None)` where all arguments are optional, positional and in the correct order.

There are a couple blockers. One is c++ ambiguity. This prevents us from simply doing `diff(int n=1, int dim=-1, Scalar? prepend=None, Tensor? append=None)` etc for all combinations of {Tensor, Scalar} x {Tensor, Scalar}.

Why not have append, prepend not have default args and then write out the whole power set of {Tensor, Scalar, omitted} x {Tensor, Scalar, omitted} you might ask. Aside from having to write 18 overloads, this is actually illegal because arguments with defaults must come after arguments without defaults. This would mean having to write `diff(prepend, append, n, dim)` which is not desired. Finally writing out the entire power set of all arguments n, dim, prepend, append is out of the question because that would actually involve 2 * 2 * 3 * 3 = 36 combinations. And if we include the out variant, that would be 72 overloads!

With this in mind, the current way this is implemented is actually to still do `diff(int n=1, int dim=-1, Scalar? prepend=None, Tensor? append=None)`. But also make use of `cpp_no_default_args`. The idea is to only have one of the 4 {Tensor, Scalar} x {Tensor, Scalar} provide default arguments for the c++ api, and add `cpp_no_default_args` for the remaining 3 overloads. With this, Python api works as expected, but some calls such as `diff(prepend=1)` won't work on c++ api.

We can optionally add 18 more overloads that cover the {dim, n, no-args} x {scalar-tensor, tensor-scalar, scalar-scalar} x {out, non-out} cases for the c++ api. _[edit: counting is hard - just realized this number is still wrong. We should try to count the cases we do cover instead and subtract that from the total: (2 * 2 * 3 * 3) - (3 + 2^4) = 17. 3 comes from the 3 of 4 combinations of {tensor, scalar}^2 that we declare to be `cpp_no_default_args`, and the one remaining case that has default arguments covers 2^4 cases. So the actual count is 34 additional overloads to support all possible calls]_

_[edit: thanks to https://github.com/pytorch/pytorch/issues/50767 hacky_wrapper is no longer necessary; it is removed in the latest commit]_

hacky_wrapper was also necessary here because `Tensor?` will cause dispatch to look for the `const optional<Tensor>&` schema but also generate a `const Tensor&` declaration in Functions.h. hacky_wrapper allows us to define our function as `const Tensor&` but wraps it in optional for us, so this avoids both the errors while linking and loading.

_[edit: rewrote the above to improve clarity and correct the fact that we actually need 18 more overloads (26 total), not 18 in total to complete the c++ api]_

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50569

Reviewed By: H-Huang

Differential Revision: D26176105

Pulled By: soulitzer

fbshipit-source-id: cd8e77cc2de1117c876cd71c29b312887daca33f

Summary:

A tiny PR to update the links in the lefthand navbar under Libraries. The canonical links for vision and text are `https://pytorch.org/vision/stable` and `https://pytorch.org/text/stable` respectively. The link without `/stable` works via a redirect, but linking to the canonical URL directly is cleaner.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51103

Reviewed By: izdeby

Differential Revision: D26079760

Pulled By: heitorschueroff

fbshipit-source-id: df1fa64d7895831f4e6242445bae02c1faa5e4dc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50791

Add a dedicated pipeline parallelism doc page explaining the APIs and the overall value of the module.

ghstack-source-id: 120257168

Test Plan:

1) View locally

2) waitforbuildbot

Reviewed By: rohan-varma

Differential Revision: D25967981

fbshipit-source-id: b607b788703173a5fa4e3526471140506171632b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50649

**Summary**

Tutorials, documentation and comments are not consistent with the file extension they use for JIT archives. This commit modifies certain instances of `*.pth` in `torch.jit.save` calls with `*.pt`.

**Test Plan**

Continuous integration.

**Fixes**

This commit fixes #49660.

Test Plan: Imported from OSS

Reviewed By: ZolotukhinM

Differential Revision: D25961628

Pulled By: SplitInfinity

fbshipit-source-id: a40c97954adc7c255569fcec1f389aa78f026d47

Summary:

This PR adds `torch.linalg.slogdet`.

Changes compared to the original torch.slogdet:

- Complex input now works as in NumPy

- Added out= variant (allocates temporary and makes a copy for now)

- Updated `slogdet_backward` to work with complex input

Ref. https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49194

Reviewed By: VitalyFedyunin

Differential Revision: D25916959

Pulled By: mruberry

fbshipit-source-id: cf9be8c5c044870200dcce38be48cd0d10e61a48

Summary:

This PR adds `torch.linalg.pinv`.

Changes compared to the original `torch.pinverse`:

* New kwarg "hermitian": with `hermitian=True` eigendecomposition is used instead of singular value decomposition.

* `rcond` argument can now be a `Tensor` of appropriate shape to apply matrix-wise clipping of singular values.

* Added `out=` variant (allocates temporary and makes a copy for now)

Ref. https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48399

Reviewed By: zhangguanheng66

Differential Revision: D25869572

Pulled By: mruberry

fbshipit-source-id: 0f330a91d24ba4e4375f648a448b27594e00dead

Summary:

This PR adds `torch.linalg.inv` for NumPy compatibility.

`linalg_inv_out` uses in-place operations on provided `result` tensor.

I modified `apply_inverse` to accept a tensor of Int instead of std::vector; that way we can write a function similar to `linalg_inv_out` but without the error checks and device memory synchronization.

I fixed `lda` (leading dimension parameter which is max(1, n)) in many places to handle 0x0 matrices correctly.

Zero batch dimensions are also working and tested.

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48261

Reviewed By: gchanan

Differential Revision: D25849590

Pulled By: mruberry

fbshipit-source-id: cfee6f1daf7daccbe4612ec68f94db328f327651

Summary:

BC-breaking note:

This PR changes the behavior of the any and all functions to always return a bool tensor. Previously these functions were only defined on bool and uint8 tensors, and when called on uint8 tensors they would also return a uint8 tensor. (When called on a bool tensor they would return a bool tensor.)

PR summary:

https://github.com/pytorch/pytorch/pull/44790#issuecomment-725596687

Fixes 2 and 3

Also Fixes https://github.com/pytorch/pytorch/issues/48352

Changes

* Output dtype is always `bool` (consistent with numpy) **BC Breaking** (previously it matched the input dtype)

* Uses vectorized version for all dtypes on CPU

* Enables test for complex

* Update doc for `torch.all` and `torch.any`

TODO

* [x] Update docs

* [x] Benchmark

* [x] Raise issue on XLA

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47878

Reviewed By: albanD

Differential Revision: D25714324

Pulled By: mruberry

fbshipit-source-id: a87345f725297524242d69402dfe53060521ea5d

Summary:

This is related to https://github.com/pytorch/pytorch/issues/42666 .

I am opening this PR to have the opportunity to discuss things.

First, we need to consider the differences between `torch.svd` and `numpy.linalg.svd`:

1. `torch.svd` takes `some=True`, while `numpy.linalg.svd` takes `full_matrices=True`, which is effectively the opposite (and with the opposite default, too!)

2. `torch.svd` returns `(U, S, V)`, while `numpy.linalg.svd` returns `(U, S, VT)` (i.e., V transposed).

3. `torch.svd` always returns a 3-tuple; `numpy.linalg.svd` returns only `S` in case `compute_uv==False`

4. `numpy.linalg.svd` also takes an optional `hermitian=False` argument.

I think that the plan is to eventually deprecate `torch.svd` in favor of `torch.linalg.svd`, so this PR does the following:

1. Rename/adapt the old `svd` C++ functions into `linalg_svd`: in particular, now `linalg_svd` takes `full_matrices` and returns `VT`

2. Re-implement the old C++ interface on top of the new (by negating `full_matrices` and transposing `VT`).

3. The C++ version of `linalg_svd` *always* returns a 3-tuple (we can't do anything else). So, there is a python wrapper which manually calls `torch._C._linalg.linalg_svd` to tweak the return value in case `compute_uv==False`.

Currently, `linalg_svd_backward` is broken because it has not been adapted yet after the `V ==> VT` change, but before continuing and spending more time on it I wanted to make sure that the general approach is fine.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45562

Reviewed By: H-Huang

Differential Revision: D25803557

Pulled By: mruberry

fbshipit-source-id: 4966f314a0ba2ee391bab5cda4563e16275ce91f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49902

Adds a common errors section, and details the two errors we see often on the discuss forums, with recommended solutions.

Test Plan: build the docs on Mac OS, the new section renders correctly.

Reviewed By: supriyar

Differential Revision: D25718195

Pulled By: vkuzo

fbshipit-source-id: c5ef2b24831d18d57bbafdb82d26d8fbf3a90781

Summary:

I am opening this PR early to have a place to discuss design issues.

The biggest difference between `torch.qr` and `numpy.linalg.qr` is that the former `torch.qr` takes a boolean parameter `some=True`, while the latter takes a string parameter `mode='reduced'` which can be one of the following:

`reduced`

this is completely equivalent to `some=True`, and both are the default.

`complete`

this is completely equivalent to `some=False`.

`r`

this returns only `r` instead of a tuple `(q, r)`. We have already decided that we don't want different return types depending on the parameters, so I propose to return `(r, empty_tensor)` instead. I **think** that in this mode it will be impossible to implement the backward pass, so we should raise an appropriate error in that case.

`raw`

in this mode, it returns `(h, tau)` instead of `(q, r)`. Internally, `h` and `tau` are obtained by calling lapack's `dgeqrf` and are later used to compute the actual values of `(q, r)`. The numpy docs suggest that these might be useful to call other lapack functions, but at the moment none of them is exposed by numpy and I don't know how often it is used in the real world.

I suppose implementing the backward pass needs attention too: the most straightforward solution is to use `(h, tau)` to compute `(q, r)` and then use the normal logic for `qr_backward`, but there might be faster alternatives.

`full`, `f`

alias for `reduced`, deprecated since numpy 1.8.0

`economic`, `e`

similar to `raw` but it returns only `h` instead of `(h, tau)`. Deprecated since numpy 1.8.0

To summarize:

* `reduced`, `complete` and `r` are straightforward to implement.

* `raw` needs a bit of extra care, but I don't know how high a priority it is: since it is rarely used, we might want to not support it right now and maybe implement it in the future?

* I think we should just leave `full` and `economic` out, and possibly add a note to the docs explaining what you need to use instead
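
The mode semantics above can be sketched in plain Python. This is only an illustration: `some_to_mode` and `qr_output_shapes` are hypothetical helpers, not part of `torch` or `numpy`, and the shapes follow the NumPy convention with `k = min(m, n)`.

```python
def some_to_mode(some):
    """Map the boolean `some` flag of torch.qr to a NumPy-style mode string."""
    return "reduced" if some else "complete"


def qr_output_shapes(m, n, mode="reduced"):
    """Return (q_shape, r_shape) for an m x n input.

    A q_shape of None means that q is not computed in that mode.
    """
    k = min(m, n)
    if mode == "reduced":    # equivalent to some=True
        return (m, k), (k, n)
    if mode == "complete":   # equivalent to some=False
        return (m, m), (m, n)
    if mode == "r":          # only r is computed
        return None, (k, n)
    raise ValueError(f"unsupported mode: {mode!r}")


print(qr_output_shapes(5, 3, some_to_mode(True)))
print(qr_output_shapes(5, 3, some_to_mode(False)))
print(qr_output_shapes(5, 3, "r"))
```

The `raw` mode is left out of the sketch on purpose, matching the open question above about whether to support it.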

/cc mruberry

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47764

Reviewed By: ngimel

Differential Revision: D25708870

Pulled By: mruberry

fbshipit-source-id: c25c70a23a02ec4322430d636542041e766ebe1b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49803

Per "https://fb.workplace.com/groups/e/permalink/3320810064641820/" we can no longer use the terms "whitelist" and "blacklist", and editing any file containing them results in a critical error signal. Let's embrace the change.

This diff changes "blacklist" to "blocklist" in a number of non-interface contexts (interfaces would require more extensive testing and might interfere with reading stored data, so those are deferred until later).

Test Plan: Sandcastle

Reviewed By: vkuzo

Differential Revision: D25686924

fbshipit-source-id: 117de2ca43a0ea21b6e465cf5082e605e42adbf6

Summary:

This PR adds `torch.linalg.inv` for NumPy compatibility.

`linalg_inv_out` uses in-place operations on provided `result` tensor.

I modified `apply_inverse` to accept a tensor of Int instead of a `std::vector`; that way we can write a function similar to `linalg_inv_out` but without the error checks and device memory synchronization.

I fixed `lda` (leading dimension parameter which is max(1, n)) in many places to handle 0x0 matrices correctly.

Zero batch dimensions are also working and tested.

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48261

Reviewed By: ngimel

Differential Revision: D25690129

Pulled By: mruberry

fbshipit-source-id: edb2d03721f22168c42ded8458513cb23dfdc712

Summary:

Minor doc fix in clarifying that the input data is rounded not truncated.

CC zasdfgbnm ngimel

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49625

Reviewed By: mruberry

Differential Revision: D25668244

Pulled By: ngimel

fbshipit-source-id: ac97e41e0ca296276544f9e9f85b2cf1790d9985

Summary:

Related https://github.com/pytorch/pytorch/issues/38349

Implement NumPy-like function `torch.broadcast_to` to broadcast the input tensor to a new shape.
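
As a rough illustration of the shape rule behind broadcasting to a target shape, here is a minimal pure-Python sketch. `check_broadcast_to` is a hypothetical helper that works on shape tuples only; it is not the implementation in this PR.

```python
def check_broadcast_to(shape, target):
    """Return `target` if `shape` can be broadcast to it, else raise ValueError."""
    shape, target = tuple(shape), tuple(target)
    if len(target) < len(shape):
        raise ValueError("target shape has fewer dimensions than the input")
    # Align on trailing dimensions by left-padding the input shape with 1s.
    padded = (1,) * (len(target) - len(shape)) + shape
    for have, want in zip(padded, target):
        # A dimension is compatible if it already matches or has size 1.
        if have != want and have != 1:
            raise ValueError(f"cannot broadcast {shape} to {target}")
    return target


print(check_broadcast_to((3, 1), (2, 3, 4)))
```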

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48997

Reviewed By: anjali411, ngimel

Differential Revision: D25663937

Pulled By: mruberry

fbshipit-source-id: 0415c03f92f02684983f412666d0a44515b99373

Summary:

This PR adds `torch.linalg.solve`.

`linalg_solve_out` uses in-place operations on the provided result tensor.

I modified `apply_solve` to accept a tensor of Int instead of a `std::vector`; that way we can write a function similar to `linalg_solve_out` but without the error checks and device memory synchronization.

In comparison to `torch.solve` this routine accepts 1-dimensional tensors and batches of 1-dim tensors for the right-hand-side term. `torch.solve` requires it to be at least 2-dimensional.

Ref. https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48456

Reviewed By: izdeby

Differential Revision: D25562222

Pulled By: mruberry

fbshipit-source-id: a9355c029e2442c2e448b6309511919631f9e43b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48280

Adds a new API for the kineto profiler that supports an enable predicate function.

Test Plan: unit test

Reviewed By: ngimel

Differential Revision: D25142220

Pulled By: ilia-cher

fbshipit-source-id: c57fa42855895075328733d7379eaf3dc1743d14

Summary:

BC-breaking note:

This PR changes the behavior of the any and all functions to always return a bool tensor. Previously these functions were only defined on bool and uint8 tensors, and when called on uint8 tensors they would also return a uint8 tensor. (When called on a bool tensor they would return a bool tensor.)

PR summary:

https://github.com/pytorch/pytorch/pull/44790#issuecomment-725596687

Fixes 2 and 3

Also Fixes https://github.com/pytorch/pytorch/issues/48352

Changes

* Output dtype is always `bool` (consistent with numpy). **BC Breaking** (previously the output dtype matched the input dtype)

* Uses vectorized version for all dtypes on CPU

* Enables test for complex

* Update doc for `torch.all` and `torch.any`

TODO

* [x] Update docs

* [x] Benchmark

* [x] Raise issue on XLA

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47878

Reviewed By: H-Huang

Differential Revision: D25421263

Pulled By: mruberry

fbshipit-source-id: c6c681ef94004d2bcc787be61a72aa059b333e69

Summary:

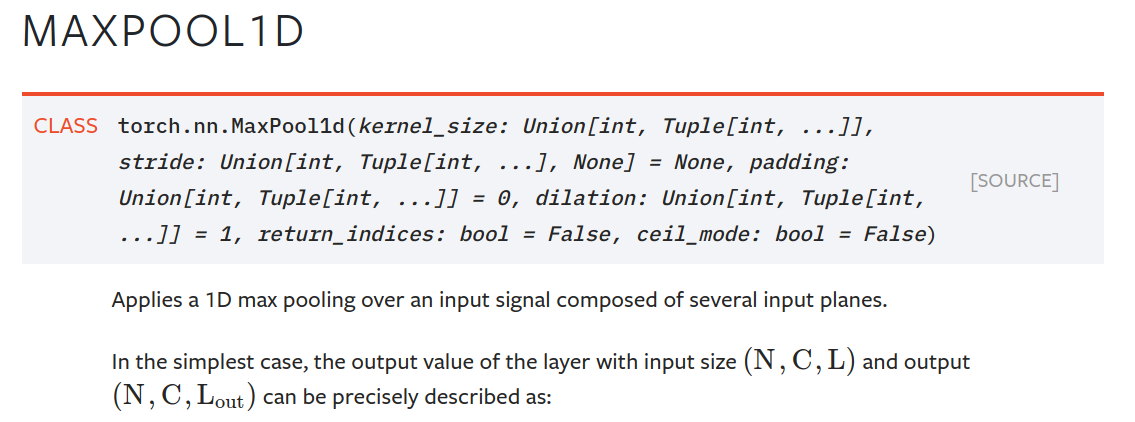

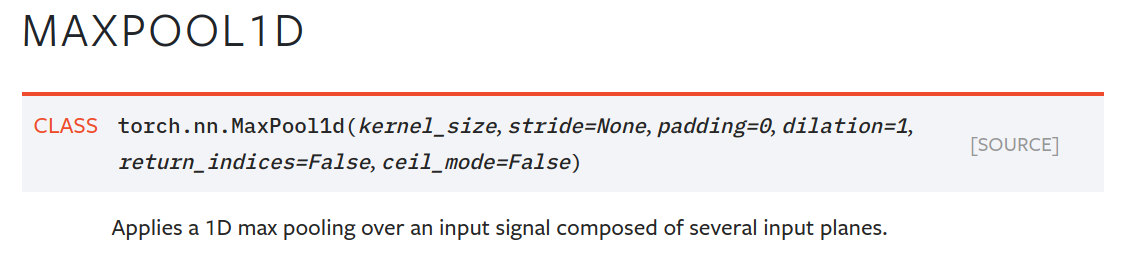

One unintended side effect of moving type annotations inline was that those annotations now show up in signatures in the html docs. This is more confusing and ugly than it is helpful. An example for `MaxPool1d`:

This makes the docs readable again. The parameter descriptions often already have type information, and there will be many cases where the type annotations will make little sense to the user (e.g., returning typevar T, long unions).

Change to `MaxPool1d` example:

Note that once we can build the docs with Sphinx 3 (which is far off right now), we have two options to make better use of the extra type info in the annotations (some of which is useful):

- `autodoc_type_aliases`, so we can leave things like large unions unevaluated to keep things readable

- `autodoc_typehints = 'description'`, which moves the annotations into the parameter descriptions.

Another, more labour-intensive option, is what vadimkantorov suggested in gh-44964: show annotations on hover. Could also be done with some foldout, or other optional way to make things visible. Would be nice, but requires a Sphinx contribution or plugin first.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49294

Reviewed By: glaringlee

Differential Revision: D25535272

Pulled By: ezyang

fbshipit-source-id: 5017abfea941a7ae8c4595a0d2bdf8ae8965f0c4

Summary:

The args parameter of ONNX export is changed to better support optional arguments such that args is represented as:

args (tuple of arguments or torch.Tensor, a dictionary consisting of named arguments (optional)):

a dictionary to specify the input to the corresponding named parameter:

- KEY: str, named parameter

- VALUE: corresponding input
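
One way to picture the new `args` format is as positional inputs optionally followed by a dictionary of named inputs. The sketch below assumes the dictionary is the last element of the tuple; `split_export_args` is a hypothetical helper for illustration, not a function of the ONNX exporter.

```python
def split_export_args(args):
    """Split `args` into (positional inputs, named inputs)."""
    # A single non-tuple input is treated as a one-element tuple.
    if not isinstance(args, tuple):
        args = (args,)
    # Assumption: a trailing dict holds the named arguments (str -> input).
    if args and isinstance(args[-1], dict):
        return args[:-1], dict(args[-1])
    return args, {}


positional, named = split_export_args(("x_input", {"y": "y_input"}))
print(positional, named)
```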

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47367

Reviewed By: H-Huang

Differential Revision: D25432691

Pulled By: bzinodev

fbshipit-source-id: 9d4cba73cbf7bef256351f181f9ac5434b77eee8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48909

Adds these new APIs to the documentation

ghstack-source-id: 117965961

Test Plan: CI

Reviewed By: mrshenli

Differential Revision: D25363279

fbshipit-source-id: af6889d377f7b5f50a1a77a36ab2f700e5040150

Summary:

Ref https://github.com/pytorch/pytorch/issues/42175

This removes the 4 deprecated spectral functions: `torch.{fft,rfft,ifft,irfft}`. `torch.fft` is also now imported by default.

The actual `at::native` functions are still used in `torch.stft` so they can't be fully removed yet, but they will be once https://github.com/pytorch/pytorch/issues/47601 has been merged.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48594

Reviewed By: heitorschueroff

Differential Revision: D25298929

Pulled By: mruberry

fbshipit-source-id: e36737fe8192fcd16f7e6310f8b49de478e63bf0

Summary:

Fixes https://github.com/pytorch/pytorch/issues/43837

This adds a `torch.broadcast_shapes()` function similar to Pyro's [broadcast_shape()](7c2c22c10d/pyro/distributions/util.py (L151)) and JAX's [lax.broadcast_shapes()](https://jax.readthedocs.io/en/test-docs/_modules/jax/lax/lax.html). This helper is useful e.g. in multivariate distributions that are parameterized by multiple tensors and we want to `torch.broadcast_tensors()` but the parameter tensors have different "event shape" (e.g. mean vectors and covariance matrices). This helper is already heavily used in Pyro's distribution codebase, and we would like to start using it in `torch.distributions`.

- [x] refactor `MultivariateNormal`'s expansion logic to use `torch.broadcast_shapes()`

- [x] add unit tests for `torch.broadcast_shapes()`

- [x] add docs
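
The broadcasting rule that such a helper applies can be sketched in pure Python. This is an illustrative re-implementation on shape tuples, named `broadcast_shapes_sketch` to make clear it is not the function added by this PR.

```python
def broadcast_shapes_sketch(*shapes):
    """Compute the shape that results from broadcasting the given shapes."""
    ndim = max((len(s) for s in shapes), default=0)
    result = [1] * ndim
    for shape in shapes:
        # Walk from the trailing dimension so shapes are right-aligned.
        for i, size in enumerate(reversed(tuple(shape))):
            j = ndim - 1 - i
            if size == 1 or size == result[j]:
                continue
            if result[j] != 1:
                raise ValueError(f"shapes {shapes} are not broadcastable")
            result[j] = size
    return tuple(result)


# Batch shapes of a mean vector and a covariance matrix, as an example.
print(broadcast_shapes_sketch((2,), (3, 1), (1, 1, 1)))
```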

cc neerajprad

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43935

Reviewed By: bdhirsh

Differential Revision: D25275213

Pulled By: neerajprad

fbshipit-source-id: 1011fdd597d0a7a4ef744ebc359bbb3c3be2aadc

Summary:

This PR adds `torch.linalg.matrix_rank`.

Changes compared to the original `torch.matrix_rank`:

- input with the complex dtype is supported

- batched input is supported

- "symmetric" kwarg renamed to "hermitian"

Should I update the documentation for `torch.matrix_rank`?

For input with no elements (for example a 0×0 matrix), the current implementation diverges from NumPy. NumPy fails on such input because the max of an empty array is not defined; here I chose to return an appropriately sized tensor of zeros. I think that's the mathematically correct thing to do.

Ref https://github.com/pytorch/pytorch/issues/42666.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48206

Reviewed By: albanD

Differential Revision: D25211965

Pulled By: mruberry

fbshipit-source-id: ae87227150ab2cffa07f37b4a3ab228788701837

Summary:

The approach is to simply reuse `torch.repeat` and add one more piece of functionality for tile: prepending 1's to the reps array if the tensor has more dimensions than the reps given as input. Thus, for a tensor of shape (64, 3, 24, 24), reps of (2, 2) will become (1, 1, 2, 2), which is what NumPy does.
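
The padding rule can be sketched on shape tuples alone. `tile_output_shape` is a hypothetical helper for illustration; it only computes the padded reps and the resulting shape, not the tiled data.

```python
def tile_output_shape(shape, reps):
    """Return (padded_reps, output_shape) following the NumPy tile convention."""
    shape, reps = tuple(shape), tuple(reps)
    ndim = max(len(shape), len(reps))
    # Prepend 1s so that both tuples have the same length.
    reps = (1,) * (ndim - len(reps)) + reps
    shape = (1,) * (ndim - len(shape)) + shape
    return reps, tuple(s * r for s, r in zip(shape, reps))


print(tile_output_shape((64, 3, 24, 24), (2, 2)))
```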

I've encountered some instability with the test on my end, where I could get a random failure of the test (due to, sometimes, a random value of `self.dim()`, and sometimes, segfaults). I'd appreciate any feedback on the test or an explanation for this instability so I can fix this.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47974

Reviewed By: ngimel

Differential Revision: D25148963

Pulled By: mruberry

fbshipit-source-id: bf63b72c6fe3d3998a682822e669666f7cc97c58

Summary:

This PR adds `torch.linalg.eigh`, and `torch.linalg.eigvalsh` for NumPy compatibility.

The current `torch.symeig` uses (on CPU) a different LAPACK routine than NumPy (`syev` vs `syevd`). Even though it shouldn't matter in practice, `torch.linalg.eigh` uses `syevd` (as NumPy does).

Ref https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45526

Reviewed By: gchanan

Differential Revision: D25022659

Pulled By: mruberry

fbshipit-source-id: 3676b77a121c4b5abdb712ad06702ac4944e900a

Summary:

Adds ldexp operator for https://github.com/pytorch/pytorch/issues/38349

I'm not entirely sure the changes to `NamedRegistrations.cpp` were needed but I saw other operators in there so I added it.

Normally the ldexp operator is used along with frexp to construct and deconstruct floating point values. This is useful for performing operations on either the mantissa or the exponent portion of floating point values.
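
The relationship between the two operations can be illustrated with Python's standard `math` module, which provides scalar `frexp` and `ldexp`. The helper `times_power_of_two` is a hypothetical example of operating on the exponent only.

```python
import math


def times_power_of_two(x, k):
    """Multiply x by 2**k by editing only the exponent part."""
    mantissa, exponent = math.frexp(x)   # x == mantissa * 2**exponent
    return math.ldexp(mantissa, exponent + k)


mantissa, exponent = math.frexp(8.0)
print(mantissa, exponent)                # 0.5 4
print(math.ldexp(mantissa, exponent))    # 8.0
print(times_power_of_two(3.0, 2))        # 12.0
```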

Sleef, std math.h, and cuda support both ldexp and frexp but not for all data types. I wasn't able to figure out how to get the iterators to play nicely with a vectorized kernel so I have left this with just the normal CPU kernel for now.

This is the first operator I'm adding so please review with an eye for errors.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45370

Reviewed By: mruberry

Differential Revision: D24333516

Pulled By: ranman

fbshipit-source-id: 2df78088f00aa9789aae1124eda399771e120d3f

Summary:

xref gh-46927 to the 1.7 release branch

This backports a fix to the script to push docs to pytorch/pytorch.github.io. Specifically, it pushes to the correct directory when a tag is created here. This issue became apparent in the 1.7 release cycle and should be backported to here.

Along the way, fix the canonical link to the pytorch/audio documentation now that they use subdirectories for the versions, xref pytorch/audio#992. This saves a redirect.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47349

Reviewed By: zhangguanheng66

Differential Revision: D25073752

Pulled By: seemethere

fbshipit-source-id: c778c94a05f1c3e916217bb184f69107e7d2c098

Summary:

Reference https://github.com/pytorch/pytorch/issues/38349

Delegates to `torch.transpose` (not sure what is the best way to alias)

TODO:

* [x] Add test

* [x] Add documentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46041

Reviewed By: gchanan

Differential Revision: D25022816

Pulled By: mruberry

fbshipit-source-id: c80223d081cef84f523ef9b23fbedeb2f8c1efc5

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48038

`nn.ReLU` works for both float and quantized input, so we don't want to define an `nn.quantized.ReLU` that does the same thing as `nn.ReLU`; similarly for `nn.quantized.functional.relu`. This also removes the numerical inconsistency for models that quantize `nn.ReLU` independently in QAT mode.

Test Plan:

Imported from OSS

Reviewed By: vkuzo

Differential Revision: D25000462

fbshipit-source-id: e3609a3ae4a3476a42f61276619033054194a0d2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47415

`nn.ReLU` works for both float and quantized input, so we don't want to define an `nn.quantized.ReLU` that does the same thing as `nn.ReLU`; similarly for `nn.quantized.functional.relu`. This also removes the numerical inconsistency for models that quantize `nn.ReLU` independently in QAT mode.

Test Plan: Imported from OSS

Reviewed By: z-a-f

Differential Revision: D24747035

fbshipit-source-id: b8fdf13e513a0d5f0c4c6c9835635bdf9fdc2769

Summary:

To trace successfully, the PyTorch model needs to be written in a torch-friendly way, so we add instructions with examples here.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46961

Reviewed By: ailzhang

Differential Revision: D24900040

Pulled By: bzinodev

fbshipit-source-id: b375b533396b11dbc9656fa61e84a3f92f352e4b

Summary:

I have been asked several times how to toggle this flag on libtorch. I think it would be good to mention it in the docs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47331

Reviewed By: glaringlee

Differential Revision: D24777576

Pulled By: mruberry

fbshipit-source-id: cc2a338c477bb57e0bb74b8960c47fde99665e41

Summary:

`freeze` was temporarily renamed to `_freeze` in a reorg, and then removed from the docs [here](https://github.com/pytorch/pytorch/pull/43473). This PR adds it back to the docs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47120

Reviewed By: suo

Differential Revision: D24650712

Pulled By: eellison

fbshipit-source-id: 399e31586b8093de66937ba1266007ee291f509e

Summary:

This PR adds a function for calculating the Kronecker product of tensors.

The implementation is based on `at::tensordot` with permutations and reshape.

Tests pass.
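
For reference, the Kronecker product of two matrices can be written directly from its definition. The sketch below uses nested Python lists and is not the `at::tensordot`-based implementation described above; `kron_2d` is a hypothetical name.

```python
def kron_2d(a, b):
    """Kronecker product of two 2-D matrices given as lists of rows."""
    cols_b = len(b[0])
    # Each row of `a` is expanded into len(b) output rows; each entry
    # a[i][j] scales a full copy of the matching row of `b`.
    return [
        [a_ij * row_b[l] for a_ij in row_a for l in range(cols_b)]
        for row_a in a
        for row_b in b
    ]


for row in kron_2d([[1, 2], [3, 4]], [[0, 1], [1, 0]]):
    print(row)
```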

TODO:

- [x] Add more test cases

- [x] Write documentation

- [x] Add entry to `common_methods_invocations.py`

Ref. https://github.com/pytorch/pytorch/issues/42666

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45358

Reviewed By: mrshenli

Differential Revision: D24680755

Pulled By: mruberry

fbshipit-source-id: b1f8694589349986c3abfda3dc1971584932b3fa

Summary:

CC: gchanan jspisak seemethere

I previewed the docs and they look reasonable. Let me know if I missed anything.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46880

Reviewed By: seemethere, izdeby

Differential Revision: D24551503

Pulled By: robieta

fbshipit-source-id: 627f73d3dd4d8f089777bca8653702735632b9fc

Summary:

Related https://github.com/pytorch/pytorch/issues/38349

This PR implements `column_stack` as a composite of `torch.reshape` and `torch.hstack`, and makes `row_stack` an alias of `torch.vstack`.
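
The composition can be sketched with nested Python lists: 1-D inputs are first reshaped into single-column matrices and the results are then stacked horizontally. All helper names here are hypothetical and only mirror the idea, not the actual implementation.

```python
def as_column(x):
    """Reshape a 1-D list into an (n, 1) matrix; leave 2-D input unchanged."""
    if x and isinstance(x[0], list):
        return x
    return [[v] for v in x]


def hstack_2d(mats):
    """Concatenate 2-D matrices (lists of rows) along the column axis."""
    n_rows = len(mats[0])
    if any(len(m) != n_rows for m in mats):
        raise ValueError("all inputs must have the same number of rows")
    return [sum((m[i] for m in mats), []) for i in range(n_rows)]


def column_stack_sketch(arrays):
    return hstack_2d([as_column(a) for a in arrays])


print(column_stack_sketch([[1, 2, 3], [4, 5, 6]]))
```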

Todo

- [x] docs

- [x] alias pattern for `row_stack`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46313

Reviewed By: ngimel

Differential Revision: D24585471

Pulled By: mruberry

fbshipit-source-id: 62fc0ffd43d051dc3ecf386a3e9c0b89086c1d1c

Summary:

Retake on https://github.com/pytorch/pytorch/issues/40493 after all the feedback from albanD

This PR implements the generic Lazy mechanism and a sample `LazyLinear` layer with the `UninitializedParameter`.

The main differences from the previous PR are two:

* Now `torch.nn.Module` remains untouched.

* We don't require an explicit initialization or a dummy forward pass before starting the training or inference of the actual module, which makes this much simpler to use from the user side.

As we discussed offline, there was the suggestion of not using a mixin, but changing the `__class__` attribute of `LazyLinear` to become `Linear` once it's completely initialized. While this can be useful, for the time being we need `LazyLinear` to be a `torch.nn.Module` subclass since there are many checks that rely on the modules being instances of `torch.nn.Module`.

This can cause problems when we create complex modules such as

```
import torch

class MyNetwork(torch.nn.Module):
    def __init__(self):
        super(MyNetwork, self).__init__()
        # Regular layer with fully specified shapes
        self.conv = torch.nn.Conv2d(20, 4, 2)
        # Lazy layer: only the output size is given up front
        self.linear = torch.nn.LazyLinear(10)

    def forward(self, x):
        y = self.conv(x).clamp(min=0)
        return self.linear(y)
```

Here, when the `__setattr__` function is called at the time `LazyLinear` is registered, it won't be added to the child modules of `MyNetwork`, so we have to do it manually later. Currently there is no way to do such a thing, as we can't access the parent module from `LazyLinear` once it becomes the `Linear` module. (We can add a workaround for this if needed.)

TODO:

* Add convolutions once the design is OK

* Fix docstrings

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44538

Reviewed By: ngimel

Differential Revision: D24162854

Pulled By: albanD

fbshipit-source-id: 6d58dfe5d43bfb05b6ee506e266db3cf4b885f0c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45164

This PR implements `fft2`, `ifft2`, `rfft2` and `irfft2`. These are the last functions required for `torch.fft` to match `numpy.fft`. If you look at either NumPy or SciPy you'll see that the 2-dimensional variants are identical to `*fftn` in every way, except for the default value of `axes`. In fact you can even use `fft2` to do general n-dimensional transforms.
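
To illustrate that a 2-dimensional transform is just 1-dimensional transforms applied over two axes, here is a naive pure-Python DFT sketch. It assumes the default axes are the last two, as in NumPy, and uses a slow O(n^2) DFT rather than an FFT; `dft` and `fft2_naive` are hypothetical names.

```python
import cmath


def dft(seq):
    """Naive 1-D discrete Fourier transform of a sequence."""
    n = len(seq)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(seq))
        for k in range(n)
    ]


def fft2_naive(matrix):
    """2-D DFT of a list of rows: transform the last axis, then the one before it."""
    rows = [dft(row) for row in matrix]
    cols = [dft(list(col)) for col in zip(*rows)]
    # Transpose back so the result has the same layout as the input.
    return [list(r) for r in zip(*cols)]


for row in fft2_naive([[1, 2], [3, 4]]):
    print([round(v.real, 6) for v in row])
```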

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D24363639

Pulled By: mruberry

fbshipit-source-id: 95191b51a0f0b8e8e301b2c20672ed4304d02a57

Summary:

The `i` variable in `Line 272` is ambiguous. I think it should be named `epoch`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45944

Reviewed By: agolynski

Differential Revision: D24219486

Pulled By: vincentqb

fbshipit-source-id: 2af0408594613e82a1a1b63971650cabde2b576e