Refactor torchscript based exporter logic to move them to a single (private) location for better code management. Original public module and method apis are preserved.

- Updated module paths in `torch/csrc/autograd/python_function.cpp` accordingly

- Removed `check_onnx_broadcast` from `torch/autograd/_functions/utils.py` because it is private&unused

@albanD / @soulitzer could you review changes in `torch/csrc/autograd/python_function.cpp` and

`torch/autograd/_functions/utils.py`? Thanks!

## BC Breaking

- **Deprecated members in `torch.onnx.verification` are removed**

Differential Revision: [D81236421](https://our.internmc.facebook.com/intern/diff/D81236421)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/161323

Approved by: https://github.com/titaiwangms, https://github.com/angelayi

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

This PR addresses issue [#81075](https://github.com/pytorch/pytorch/issues/81075), making `torch.stft` compatible with ONNX Opset 17's STFT operator.

The conversion works for _most_ of `torch.stft` functionality:

- Batched or unbatched inputs

- Normalization

- Pre-computed windows

- Rectangular windows

- One-sided returns

- Window centering (implicitly supported)

What is currently _not_ supported is **complex types**, due to the lack of conversion functionality between PyTorch and ONNX (https://github.com/pytorch/pytorch/issues/86746).

Regardless, this is easy to bypass by setting `return_complex=False` when using `torch.stft`.

Note that there is already a draft PR to address this (https://github.com/pytorch/pytorch/pull/83944), but it is currently closed and it only partially addresses the conversion (i.e., most of `torch.stft` functionality is lacking, and unit tests are missing).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/92087

Approved by: https://github.com/justinchuby

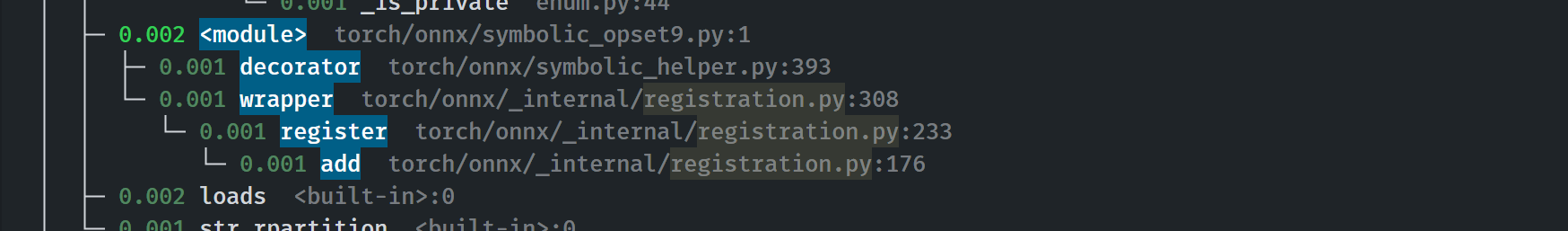

This is the 4th PR in the series of #83787. It enables the use of `@onnx_symbolic` across `torch.onnx`.

- **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `@onnx_symbolic` for registering the same function on multiple aten names.

- Decorate all symbolic functions with `@onnx_symbolic`

- Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%.

- Remove the outdated unit test `test_symbolic_opset9.py`

- Symbolic function registration moved from the first call to `_run_symbolic_function` to init time.

- Registration is fast:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84448

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

## Summary

The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`.

The new registry

- Has faster lookup by storing only functions in the opset version they are defined in

- Is easier to manage and interact with due to its class design

- Builds the foundation for the more flexible registration process detailed in #83787

Implementation changes

- **Breaking**: Remove `torch.onnx.symbolic_registry`

- `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility

- Update _onnx_supported_ops.py for doc generation to include quantized ops.

- Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp`

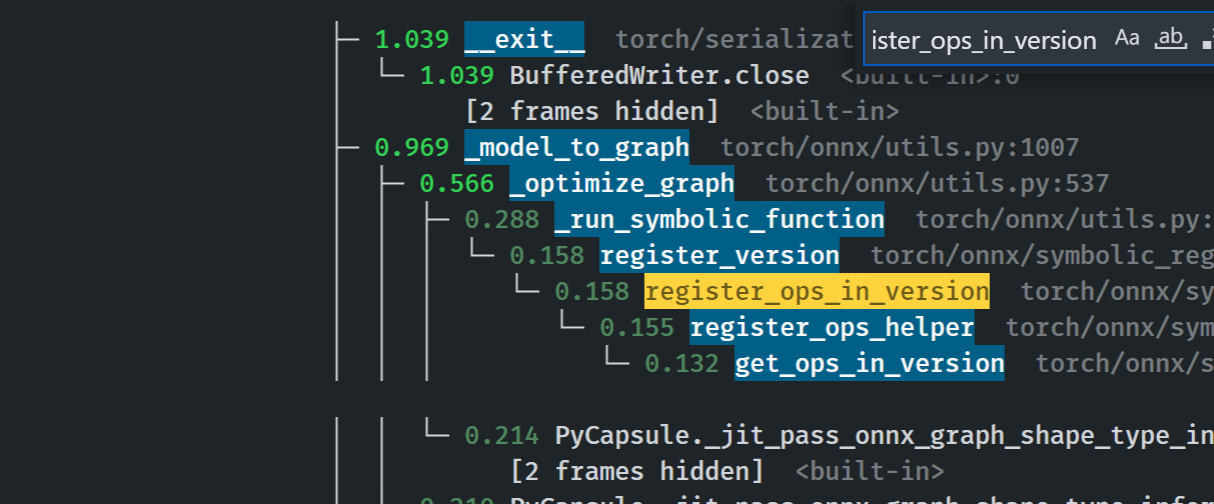

## Profiling results

-0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc.

### After

```

└─ 1.641 export <@beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1

└─ 1.641 export torch/onnx/utils.py:185

└─ 1.640 _export torch/onnx/utils.py:1331

├─ 0.889 _model_to_graph torch/onnx/utils.py:1005

│ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535

│ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0

│ │ │ [2 frames hidden] <built-in>

│ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670

│ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782

│ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18

│ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0

│ │ │ [2 frames hidden] <built-in>

│ │ └─ 0.033 [self]

```

### Before

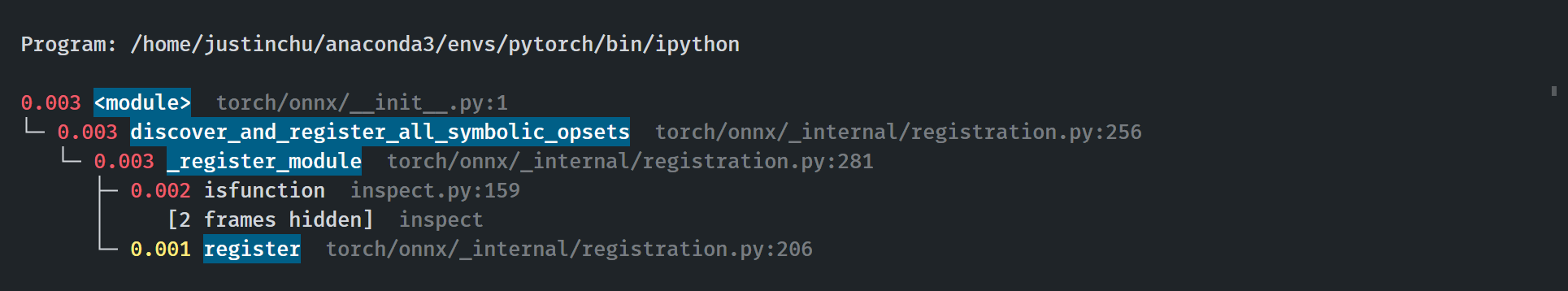

### Start up time

The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84382

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao