This PR is part of a series attempting to re-submit https://github.com/pytorch/pytorch/pull/134592 as smaller PRs.

In quantization tests:

- Add and use a common `raise_on_run_directly` method for test files that should not be run directly. It prints the file which the user should have run instead.

- Raise a RuntimeError on tests which have been disabled (not run)
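A minimal sketch of the guard described above, assuming the helper takes the path of the file the user should run instead. The signature, the message wording, and the path in the usage comment are assumptions for illustration, not necessarily the helper as merged.

```python
def raise_on_run_directly(file_to_call: str) -> None:
    """Fail loudly when a test file that must not be run on its own is executed.

    `file_to_call` is the file the user should have run instead.
    The message wording is an assumption for illustration.
    """
    raise RuntimeError(
        "This test file is not meant to be run directly, "
        f"use:\n\n\tpython {file_to_call}\n\ninstead."
    )


# Possible placement at the bottom of a test file that is collected elsewhere:
#
# if __name__ == "__main__":
#     raise_on_run_directly("test/test_quantization.py")
```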

Pull Request resolved: https://github.com/pytorch/pytorch/pull/154728

Approved by: https://github.com/ezyang

Context: To avoid cluttering the `torch.nn` namespace, the quantized modules namespace is moved to `torch.ao.nn`.

The list of the `nn.quantized` files that are being migrated:

- [ ] `torch.nn.quantized` → `torch.ao.nn.quantized`

- [X] `torch.nn.quantized.functional` → `torch.ao.nn.quantized.functional`

- [X] `torch.nn.quantized.modules` → `torch.ao.nn.quantized.modules`

- [X] [Current PR] `torch.nn.quantized.dynamic` → `torch.ao.nn.quantized.dynamic`

- [ ] `torch.nn.quantized._reference` → `torch.ao.nn.quantized._reference`

- [ ] `torch.nn.quantizable` → `torch.ao.nn.quantizable`

- [ ] `torch.nn.qat` → `torch.ao.nn.qat`

- [ ] `torch.nn.qat.modules` → `torch.ao.nn.qat.modules`

- [ ] `torch.nn.qat.dynamic` → `torch.ao.nn.qat.dynamic`

- [ ] `torch.nn.intrinsic` → `torch.ao.nn.intrinsic`

- [ ] `torch.nn.intrinsic.modules` → `torch.ao.nn.intrinsic.modules`

- [ ] `torch.nn.intrinsic.qat` → `torch.ao.nn.intrinsic.qat`

- [ ] `torch.nn.intrinsic.quantized` → `torch.ao.nn.intrinsic.quantized`

- [ ] `torch.nn.intrinsic.quantized.modules` → `torch.ao.nn.intrinsic.quantized.modules`

- [ ] `torch.nn.intrinsic.quantized.dynamic` → `torch.ao.nn.intrinsic.quantized.dynamic`

The majority of the files are simply moved to the new location.

However, some files need to be double-checked:

- [Documentation](docs/source/quantization-support.rst) @vkuzo

- [Public API test list](test/allowlist_for_publicAPI.json) @peterbell10

- [BC test](test/quantization/bc/test_backward_compatibility.py) @vkuzo

- [IR emitter](torch/csrc/jit/frontend/ir_emitter.cpp) @jamesr66a

- [JIT serialization](torch/csrc/jit/serialization/import_source.cpp) @IvanKobzarev @jamesr66a

Differential Revision: [D36860660](https://our.internmc.facebook.com/intern/diff/D36860660/)

**NOTE FOR REVIEWERS**: This PR has internal Facebook specific changes or comments, please review them on [Phabricator](https://our.internmc.facebook.com/intern/diff/D36860660/)!

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78714

Approved by: https://github.com/jerryzh168

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74204

Minor follow-up to https://github.com/pytorch/pytorch/pull/73863 that re-enables a serialization test.

Test Plan:

python test/test_quantization.py TestSerialization.test_linear_relu_package_quantization_transforms

Imported from OSS

Reviewed By: jerryzh168

Differential Revision: D34880378

fbshipit-source-id: f873f63e46cfcd936d7bdffb15c8f2d29e27b3c0

(cherry picked from commit 6a11a3b43ea130097a465304bf386e19992de03a)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73863

This PR fully aligns the convert function with the design (https://github.com/pytorch/rfcs/blob/master/RFC-0019-Extending-PyTorch-Quantization-to-Custom-Backends.md) and simplifies the implementation of the convert function by always producing a reference quantized model (with reference patterns) first, and then lowering it to a quantized model that is runnable with the PyTorch native backends (fbgemm/qnnpack).

This PR makes convert.py much easier to understand than the previous implementation, and allows the majority of the code in quantization_patterns.py to be removed as well (in follow-up PRs).
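As a rough illustration of the two stages, here is a toy sketch in plain Python, not the FX implementation. It assumes a reference pattern has the shape dequantize -> float op -> quantize, and that lowering replaces it with an op that works on the integer values directly. All function names here are hypothetical.

```python
def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a float to an unsigned 8-bit integer code."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Map an integer code back to a float."""
    return (q - zero_point) * scale


def reference_relu(q_in, scale, zero_point):
    """Reference pattern: dequantize -> float op -> quantize."""
    x = dequantize(q_in, scale, zero_point)
    y = max(x, 0.0)
    return quantize(y, scale, zero_point)


def lowered_relu(q_in, zero_point):
    """Lowered form: the same op computed directly on integer codes."""
    return max(q_in, zero_point)
```

With the same scale and zero point on input and output, both forms agree on every 8-bit code, which is the property that makes lowering a pure rewrite.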

Test Plan:

```

python test/test_quantization.py TestQuantizeFx

python test/test_quantization.py TestQuantizeFxOps

python test/test_quantization.py TestFXNumericSuiteCoreAPIs

python test/test_quantization.py TestFXNumericSuiteCoreAPIsModels

```

and other internal/oss regression tests

Imported from OSS

Reviewed By: andrewor14

Differential Revision: D34778506

fbshipit-source-id: 0678b66addf736039a8749b352f6f569caca962b

(cherry picked from commit 33ec9caf23f3ab373d827117efbd9db0668b2437)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65538

Adds a test which verifies that `prepare_fx` and `convert_fx` work on models created by `torch.package` in the past. In detail:

1. (one time) Create a model and save it with `torch.package`. Also save the input, the expected output, and the names of the quantization-related get_attrs added by our passes.

2. (every time) Load the model from (1), and verify that the expected output matches the current output and that the get_attr targets did not change.
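The two steps follow a golden-file pattern, which can be sketched without PyTorch. This is a stand-in only: JSON replaces `torch.package`, `transform` is a hypothetical placeholder for the passes under test, and the attribute names are made up.

```python
import json
import os
import tempfile


def run_model(params, xs):
    # Stand-in "model": a scalar affine map.
    return [params["weight"] * x + params["bias"] for x in xs]


def transform(params):
    """Hypothetical placeholder for the passes under test.

    Returns the transformed params and the attribute names the passes added.
    """
    return dict(params), ["scale_0", "zero_point_0"]


def save_artifacts(path, params, xs):
    """Step 1 (one time): save model, input, expected output, attribute names."""
    transformed, attr_names = transform(params)
    record = {
        "params": params,
        "input": xs,
        "expected_output": run_model(transformed, xs),
        "attr_names": attr_names,
    }
    with open(path, "w") as f:
        json.dump(record, f)


def check_artifacts(path):
    """Step 2 (every time): reload, re-run the passes, compare with the record."""
    with open(path) as f:
        saved = json.load(f)
    transformed, attr_names = transform(saved["params"])
    assert run_model(transformed, saved["input"]) == saved["expected_output"]
    assert attr_names == saved["attr_names"]
    return True


def demo():
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "golden.json")
        save_artifacts(path, {"weight": 2, "bias": 1}, [1, 2, 3])
        return check_artifacts(path)
```

In the real test the artifact from step 1 is checked in once, so step 2 fails whenever a later change alters the output or the attribute names.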

Test Plan:

```

python test/test_quantization.py TestSerialization.test_linear_relu_package_quantization_transforms

```

Imported from OSS

Reviewed By: supriyar

Differential Revision: D31512939

fbshipit-source-id: 718ad5fb66e09b6b31796ebe0dc698186e9a659f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63043

In version 1 we use the fused module/operator during QAT. This PR makes it the default for all QAT runs going forward.

Older models saved after prepare_qat_fx can still load their state_dict into a model prepared using version 1.

The state_dict will still have the same attributes for the observer/fake_quant modules.

There may be some numerical differences between the old observer code in observer.py and the new fused module, which was rewritten in C++/CUDA to perform observe + fake_quantize.

This PR also updates the test to check for the new module instead of the default FakeQuantize module.

Note: there are also some changes to make the operator work for multi-dim per-channel quantization, with the test updated accordingly.

Test Plan:

python test/test_quantization.py TestSerialization.test_default_qat_qconfig

Imported from OSS

Reviewed By: raghuramank100

Differential Revision: D30232222

fbshipit-source-id: f3553a1926ab7c663bbeed6d574e30a7e90dfb5b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62345

This PR updates the attribute names from min_vals/max_vals to min_val/max_val. The motivation is to keep the attribute names consistent with per-tensor observers, so that dependencies (like FusedMovingAvgObsFakeQuantize) don't need to differentiate between the two observer types to access the attributes.

It also adds some BC tests to make sure that observers saved earlier with min_vals/max_vals can be loaded depending on the state_dict version.
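A minimal sketch of version-gated loading, using plain dicts rather than the observer's real loading hook. The cut-over version (3) and the unprefixed key names are assumptions for illustration.

```python
def load_observer_state(state_dict, version):
    """Return a copy of `state_dict` that uses the current key names.

    Assumption for illustration: checkpoints with no version, or a version
    below 3, still use the old `min_vals`/`max_vals` keys.
    """
    renames = {"min_vals": "min_val", "max_vals": "max_val"}
    if version is None or version < 3:
        return {renames.get(key, key): value for key, value in state_dict.items()}
    return dict(state_dict)
```

An old checkpoint such as `{"min_vals": [0.0], "max_vals": [1.0]}` loaded with `version=2` comes back keyed by `min_val` and `max_val`, while a current checkpoint passes through unchanged.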

Note: Scriptability of the observers isn't fully supported yet, so we aren't testing for that in this PR.

Test Plan:

python test/test_quantization.py TestSerialization

Imported from OSS

Reviewed By: HDCharles

Differential Revision: D30003700

fbshipit-source-id: 20e673f1bb15e2b209551b6b9d5f8f3be3f85c0a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) and make graphs, including Roofline model plots (Intel Advisor can't produce them with libgomp, only with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels: default, AVX2, and AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using the AVX512 kernel right now, as it has poorer accuracy for Float16 than AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415). It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped: [`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098). It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. `reduce_range` should be set to `False` on such machines.
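For context on `reduce_range`: it narrows the quantized value range by one bit. The helper below is a hypothetical sketch of that arithmetic for 8-bit types, not a PyTorch API.

```python
def quant_range(bits=8, signed=False, reduce_range=False):
    """Return (quant_min, quant_max) for an integer type of the given width.

    With reduce_range=True one bit is dropped, halving the representable range.
    """
    if reduce_range:
        bits -= 1
    if signed:
        return -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return 0, (1 << bits) - 1
```

For unsigned 8-bit values this gives (0, 255) in the full range and (0, 127) with `reduce_range=True`. For signed values it gives (-128, 127) and (-64, 63).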

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the double vector-size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

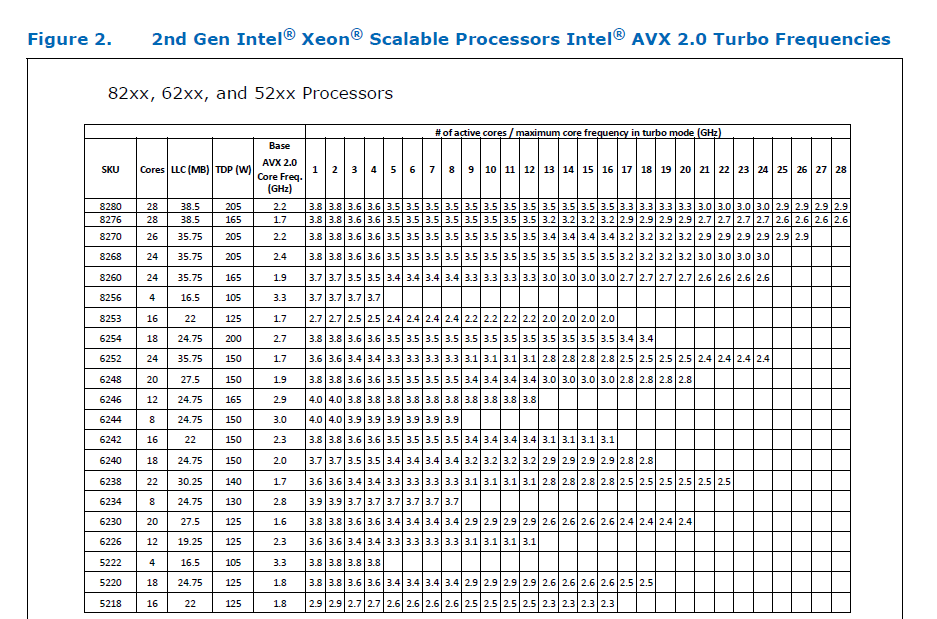

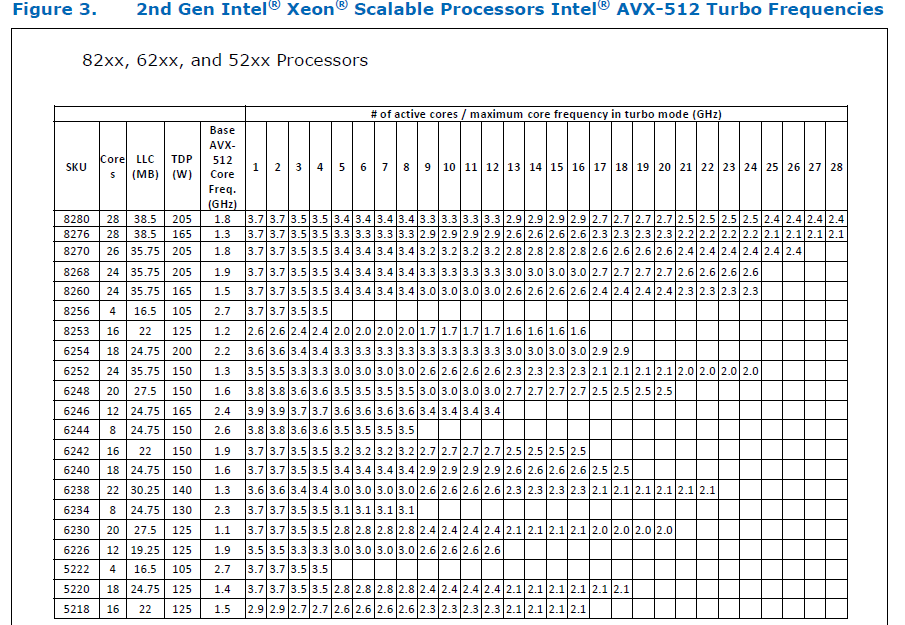

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches or in kernels that are more compute-heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors, can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60241

We're going to make a forward-incompatible change to this serialization

format soon, so I'm taking the opportunity to do a little cleanup.

- Use int for version. This was apparently not possible when V2 was introduced, but it works fine now as long as we use int64_t. (Note that the 64 bits are only used in memory. The serializer will use 1 byte for small non-negative ints.)

- Remove the "packed params" tensor and replace it with a list of ints.

- Replace the "transpose" field with "flags" to allow more binary flags to be packed in.

- Unify required and optional tensors. I just made them all optional and added an explicit assertion for the one we require.
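The "flags" idea can be sketched as a bit field stored in a single int. The bit positions and the second flag are assumptions for illustration, not the actual serialized layout.

```python
from enum import IntFlag


class ParamFlags(IntFlag):
    # Bit assignments are hypothetical.
    TRANSPOSE = 1 << 0
    EXAMPLE_FUTURE_FLAG = 1 << 1  # placeholder showing room for more flags


def pack_flags(transpose, future=False):
    """Pack boolean options into one integer suitable for a list of ints."""
    flags = ParamFlags(0)
    if transpose:
        flags |= ParamFlags.TRANSPOSE
    if future:
        flags |= ParamFlags.EXAMPLE_FUTURE_FLAG
    return int(flags)


def is_transposed(flags):
    """Read the transpose bit back out of a packed integer."""
    return bool(flags & ParamFlags.TRANSPOSE)
```

A reader that only knows about `TRANSPOSE` can still decode that bit when other bits are set, which is what makes a flags field easier to extend than a single boolean.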

A bit of a hack: I added an always-absent tensor to the front of the tensor list. Without this, when passing unpacked params from Python to the ONNX JIT pass, the type would be inferred to `List[Tensor]` if all tensors were present, making it impossible to cast to `std::vector<c10::optional<at::Tensor>>` without jumping through hoops.

The plan is to ship this, along with another diff that adds a flag to

indicate numerical requirements, wait a few weeks for an FC grace

period, then flip the serialization version.

Test Plan: CI. BC tests.

Reviewed By: vkuzo, dhruvbird

Differential Revision: D29349782

Pulled By: dreiss

fbshipit-source-id: cfef5d006e940ac1b8e09dc5b4c5ecf906de8716

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59007

Create folders for each test category and move the tests.

Will follow-up with a cleanup of test_quantization.py

Test Plan:

python test/test_quantization.py

Imported from OSS

Reviewed By: HDCharles

Differential Revision: D28718742

fbshipit-source-id: 4c2dbbf36db35d289df9708565b7e88e2381ff04